Abstract

We consider an LQR optimal control problem with partially unknown dynamics. We propose a new model-based online algorithm to obtain an approximation of the dynamics and the control at the same time during a single simulation. The iterative algorithm is based on a mixture of Reinforcement Learning and optimal control techniques. In particular, we use Gaussian distributions to represent model uncertainty and the probabilistic model is updated at each iteration using Bayesian regression formulas. On the other hand, the control is obtained in feedback form via a Riccati differential equation. We present some numerical tests showing that the algorithm can efficiently bring the system towards the origin.

This research has been partially supported by the INdAM Research group GNCS.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The Linear-Quadratic Regulator (LQR) optimal control problem [1, 3] is a classical problem in control theory with a wide range of applications. When the dynamics is known, the optimal control is obtained in feedback form solving a backward Riccati differential equation.

Some Reinforcement Learning (RL) problems can be seen as LQR problems where the dynamics is partially or completely unknown. Some RL algorithms try to learn a model for the dynamics and use this model to find the optimal policy. These are called model-based algorithms. Others recover the optimal control directly, without reconstructing the dynamics, and these are called model-free algorithms. For a broad overview on RL, we recommend [14].

The connection between RL and optimal control theory was already identified in the past [15]. Recently, Palladino and co-authors tried to clarify this relationship via some rigorous proofs, identifying some RL tasks as real optimal control problems with unknown dynamics [8, 10, 11]. In this context, we propose a new model-based algorithm for LQR problems where the dynamics is partially unknown, which takes contributions from both fields. In fact, our algorithm can be considered as a case of Bayesian RL, a class of model-based algorithms where the controller builds a stochastic model of the dynamics and updates it according to Bayesian statistics [6]. On the other hand, we borrow the LQR solution from optimal control theory, to get the synthesis of a suitable control [1].

In particular, our algorithm is similar to PILCO [2], from which it takes the use of Gaussian distributions and the whole process of Bayesian update. However, we propose here some novelties. The first is that in PILCO the optimal control is chosen through a gradient descent algorithm in a class of controls, whereas we solve a Riccati differential equation to identify the minimizer. The second is that PILCO needs several trials to reconstruct the dynamics and stabilise the system. Our algorithm is designed to approximate the dynamics and to find a suitable control in a single run but can be applied only to linear dynamical systems. Finally, let us mention that other works have already dealt with LQR problems with unknown dynamics (see i.e. [4, 5, 7, 9] and references therein), but they all need several trials to converge, whereas our method works with just one.

The paper is structured as follows. In Sect. 2 we recall the LQR problem. In Sect. 3 we present our algorithm and discuss some implementation details. Finally, in Sect. 4 we show and discuss some numerical tests.

2 The Classical LQR Problem

The Linear-Quadratic Regulator (LQR) problem [1, 3] is an optimal control problem with linear dynamics and quadratic cost. In the finite horizon case, the state of the system \(x(t) \in \mathbb {R}^n\) evolves according to the following controlled dynamics

We will denote by \(\mathbb {R}^{m \times n}\) the space of matrices with m rows and n columns; \(\mathbb {I}_n\) and \(\mathbf {0}_n\) will be respectively the identity and zero matrices in \(\mathbb {R}^{n \times n}\). Here \(\widehat{A} \in \mathbb {R}^{n \times n}\) and \(B\in \mathbb {R}^{n \times m}\). The control function \(u(t) \in \mathbb {R}^m\) must be chosen among the admissible controls \(\mathcal {U}:=\{u:[0,T] \rightarrow \mathbb {R}^m\; \mathrm {Lebesgue}\; \mathrm {measurable}\}\) to minimize the quadratic cost functional

The main assumptions on the cost matrices are the following:

-

\(Q, Q_f \in \mathbb {R}^{n \times n}\) are symmetric and positive semi-definite;

-

\(R \in \mathbb {R}^{m \times m}\) is symmetric and positive definite.

For any \(x_0 \in \mathbb {R}^n\), we will call \(\bar{u}\) an optimal control starting from \(x_0\) if and only if

When the dynamics is fully known, the optimal control can be obtained in feedback form [1]. Indeed, if P(t) is the unique symmetric solution of the Riccati differential equation

the optimal control is given by \(\bar{u}(t) = -R^{-1} B^T P(t) x(t)\).

We want to investigate: what happens if the dynamics is partially unknown? How can one find a suitable control? We considered the following framework:

Setting

We assume that the matrices \(B \in \mathbb {R}^{n \times m}\), \(Q, Q_f \in \mathbb {R}^{n \times n}\) and \(R \in \mathbb {R}^{m \times m}\) are given, whereas the state matrix \(\widehat{A} \in \mathbb {R}^{n \times n}\) is unknown.

3 An Online Algorithm for the LQR Problem

In this section, we describe our model-based algorithm able to solve the LQR problem without knowing the matrix \(\widehat{A}\). The algorithm is online, meaning that it doesn’t need any previous simulation or computation. Our goal is twofold: first, we look for a good estimate for the unknown dynamics matrix \(\widehat{A}\); and secondly, we want to choose a control that can steer the trajectory towards the origin. Furthermore, we assume that we can run the experiment just once, so the system must be controlled while the dynamics is still uncertain. To this end, we use a technique to get an estimate of the matrix \(\widehat{A}\) and to update the control at the same time.

We divide the interval [0, T] into equal time steps of length \(\varDelta t\), globally we will have N time steps and we group them into rounds of S steps each. The i-th round will be denoted by \([t_{i-1},t_i]\) and a superscript index will indicate a single time step:

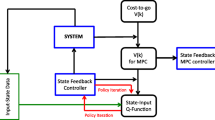

The current knowledge of the dynamics matrix is represented as a probability distribution over matrices. For each round, two major operations are carried out:

-

1.

At the beginning of each round, a probability \(\pi _{i-1}\) is given from the previous round. We use the mean \(\bar{A}_{i-1}\) of this distribution and compute a feedback control for the round solving a Riccati equation;

-

2.

At the end of each round, the current probability distribution is updated using Bayesian formulas, according to the trajectory observed during the round. The output is a new probability distribution \(\pi _i\).

The whole algorithm is summarised below as Algorithm 1. In the following, we will give more technical details.

Prior Distribution. The algorithm requires the choice of a prior distribution \(\pi _0\). We fix some \(m_0\in \mathbb {R}^n\) and \(\varSigma _0\in \mathbb {R}^{n \times n}\) and consider a random matrix A such that each of its rows \(r_k\) is distributed as an independent Gaussian vector with mean \(m_0\) and covariance matrix \(\varSigma _0\)

A typical choice for the prior distribution, when no information about the true matrix \(\widehat{A}\) is available, is \(m_0 = \left( 0, \dots , 0 \right) ^T\) and \(\varSigma _0 = n \, m \, \mathbb {I}_n\).

Feedback Control. At the beginning of the round \([t_{i-1},t_i]\) our knowledge of the matrix \(\widehat{A}\) is described by the distribution \(\pi _{i-1}\). In order to find the control to apply, we solve the evolutive Riccati equation associated with the matrix \(\bar{A}_{i-1}\), where \(\bar{A}_{i-1}\) is the mean of the distribution \(\pi _{i-1}\). The Riccati equation reads

If we denote by \(P_i(t)\) the solution of (5), our control will be the feedback control given by

where \(x_i^j=x(t_i^j)\) is the observed state of the system. Since the control must be defined for all instants \(t \in [t_{i-1}, t_i]\), we choose a piecewise constant control: \(u_i^*(t) = u_i^*(t_i^j)\) for \(t \in [t_i^j, t_i^{j+1}]\). Note that we need real-time observations of the system state to compute \(u^*_i\), since it depends on x.

Distribution Update. At the end of each round we can update the probability distribution using Bayesian regression formulas. More precisely, we know that during the time step \([t_i^j, t_i^{j+1}]\) the system evolves according to (1) where we plug-in the chosen piecewise constant control. We can easily get an approximation of the state derivative with a finite difference scheme using the state observations \(x_i^j=x(t_i^j)\). By a first-order scheme, rearranging the terms, we get

We interpret these data as observations of the dynamics with a Gaussian noise due to the error in the derivative approximation and the measurements. We treat each row \(\widehat{r}_k\) of \(\widehat{A}\) separately. Denoting by \(y^j\) the right hand side in (7), we can write its k-th component as

where \(\sigma \) is a parameter chosen according to the order of the derivative approximation.

For each row k we have a prior distribution \(r_k\sim \mathcal {N}(m_0,\varSigma _0)\) given by \(\pi _{i-1}\). We define X as the matrix whose columns are \(x_i^0,\ldots ,x_i^{S-1}\) and y as the column vector \(y^0_{(k)},\ldots ,y^{S-1}_{(k)}\). Therefore we obtain a posterior distribution \(r_k|_{y,X}\sim \mathcal {N}(m,\varSigma )\) where \(\varSigma ^{-1}=\frac{1}{\sigma ^2}XX^T+\varSigma _0^{-1}\) and \(m=\varSigma ( Xy/\sigma ^2 +\varSigma _0^{-1}m_0 )\). For more details about Bayesian Linear Regression see for instance the extensive monograph by Rasmussen and Williams [12].

Higher-Order Schemes. The derivative in (7) can be approximated with higher order finite difference schemes [13]. Note that trajectory regularity is required in the interval containing the nodes used in the approximation. While first-order approximation uses only two nodes, higher-order approximations use more nodes, thus the control cannot jump at each time step and we have to keep it constant for more steps.

Remark 1

(Heuristic argument for convergence). The algorithm cannot find the optimal control for the problem, since at the beginning the matrix \(\widehat{A}\) is unknown and it needs at least some steps to have a good estimate for \(\widehat{A}\). However, from Bayesian Regression theory (see [12]) we know that the more data we observe, the more precise our distribution \(\pi _i\) becomes, eventually converging to the Dirac delta \(\delta _{\widehat{A}}\).

Furthermore, let us note that since \(\pi _i\) is converging to \(\delta _{\widehat{A}}\), then also the mean matrix \(\bar{A}_i\) of \(\pi _i\) is converging to \(\widehat{A}\). Thus, after few rounds, the feedback control computed by the algorithm (which is using \(\bar{A}_i\)) in the interval \([t_i, T]\) should be close to the optimal control of a trajectory of the real dynamics which starts from the same point \(x_{i-1}^S\).

Finally, when \(\varDelta t \rightarrow 0\), the algorithm reaches earlier a good estimate of the dynamics matrix. This means that also the computed control is closer to the optimal one. All these heuristics are confirmed by the numerical simulations in the next section.

4 Some Numerical Tests

The following numerical tests for the algorithm described above were performed in Matlab and took few seconds to run.

Test 1. We first consider a dynamical system where the state lies in \(\mathbb {R}^2\) and the control is 1-dimensional, i.e. \(n=2\) and \(m=1\). The LQR problem is defined by the following matrices:

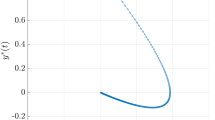

The time horizon is set to \(T=5\) and the starting point is \(x_0=(0, 1)^T\). We assume that the matrix \(\widehat{A}\) is unknown to the algorithm, though we use it to simulate the dynamics. We choose the prior distribution as recommended in Sect. 3, using \(m_0 = (0,0)^T\) and \(\varSigma _0 = 2 I_2\); for all the tests we set \(\sigma =\sqrt{10\varDelta t^p}\) and \(S=2p\), where p is the order of the scheme used in the derivative approximation. Figure 1 shows the piecewise constant controls chosen by the algorithm with \(p=1\) for different values of \(\varDelta t\) and the corresponding trajectories. Recall that the matrix \(\widehat{A}\) is completely unknown at the beginning, so the control we apply in the first steps depends only on the prior distribution we have chosen and clearly is not accurate. This causes the trajectory to deviate from the optimal solution. However, after few steps the matrix \(\widehat{A}\) is well approximated and the algorithm manages to steer the state towards the origin anyway. In Table 1a we have reported the cost of the solution found by the algorithm for different choices of \(\varDelta t\). When \(\varDelta t\) is smaller, the algorithm recovers the matrix \(\widehat{A}\) quickly and thus the deviation from the optimal solution is smaller. This confirms the heuristics of Remark 1. We can also observe a numerical order of convergence equal to 1.

Simulations of Test 1 for different values of \(\varDelta t\). The first row shows the control chosen by the algorithm as a function of time (in red) compared with the optimal control (in blue), computed knowing the matrix \(\widehat{A}\); the second row shows the trajectories in \(\mathbb {R}^2\). The red dot is the trajectory starting point. (Color figure online)

We tried different finite difference schemes for the approximation of the state derivatives (see “Higher-order schemes” in Sect. 3). Table 1b shows the error in the approximation of \(\widehat{A}\) at the end of the simulation, when using schemes of order \(p=1,2,4\) and for different values of \(\varDelta t\). As expected, when we consider more accurate approximations of the gradient, we get better estimations of the matrix \(\widehat{A}\). Unfortunately, the same does not hold for the solution costs, which are not significantly improved if compared with the ones found by the first-order approximation.

Test 2. For the second test we choose \(n=4\), \(m=3\) and \(T=10\). Our matrices are

\(Q=\frac{1}{4} \mathbb {I}_4\), \(R=\frac{1}{3} \mathbb {I}_3\) and \(Q_f=\mathbb {I}_4\), and the starting point is \(x_0 = (1,1,1,1)^T\). We set \(\varDelta t=0.025\), \(S=4\) and use a second order approximation for the derivatives. Fig. 2 shows the control found by the algorithm and Fig. 3 the corresponding trajectory. The behaviour observed in Test 1 is even more visible here: in the first steps the control is not accurate since we do not know \(\widehat{A}\), but after few steps the algorithm learns more on the matrix and manages to bring the state to the origin. The cost of the control found by the algorithm is 1.111, whereas the optimal cost computed using \(\widehat{A}\) is 1.056.

5 Conclusions

We proposed a new algorithm designed to deal with LQR problems when the dynamics is partially unknown. Numerical tests presented in Sect. 4 showed how it manages to approximate the dynamics and to find a suitable control that brings the system towards the origin in a single simulation. Future works include the convergence analysis of the algorithm and possible extensions to the nonlinear case.

References

Anderson, B.D., Moore, J.B.: Optimal Control: Linear Quadratic Methods. Courier Corporation (2007)

Deisenroth, M., Rasmussen, C.E.: PILCO: a model-based and data-efficient approach to policy search. In: Proceedings ICML 2011, pp. 465–472 (2011)

Fleming, W.H., Rishel, R.W.: Deterministic and Stochastic Optimal Control, vol. 1. Springer, New York (2012). https://doi.org/10.1007/978-1-4612-6380-7

Fong, J., Tan, Y., Crocher, V., Oetomo, D., Mareels, I.: Dual-loop iterative optimal control for the finite horizon LQR problem with unknown dynamics. Syst. Control Lett. 111, 49–57 (2018)

Frueh, J.A., Phan, M.Q.: Linear quadratic optimal learning control (LQL). Int. J. Control 73(10), 832–839 (2000)

Ghavamzadeh, M., Mannor, S., Pineau, J., Tamar, A., et al.: Bayesian reinforcement learning: a survey. Foundations Trends® Mach. Learn. 8(5–6), 359–483 (2015)

Li, N., Kolmanovsky, I., Girard, A.: LQ control of unknown discrete-time linear systems-a novel approach and a comparison study. Optimal Control Appl. Methods 40(2), 265–291 (2019)

Murray, R., Palladino, M.: A model for system uncertainty in reinforcement learning. Syst. Control Lett. 122, 24–31 (2018)

Pang, B., Bian, T., Jiang, Z.-P.: Adaptive dynamic programming for finite-horizon optimal control of linear time-varying discrete-time systems. Control Theory Technol. 17(1), 73–84 (2019). https://doi.org/10.1007/s11768-019-8168-8

Pesare, A., Palladino, M., Falcone, M.: Convergence results for an averaged LQR problem with applications to reinforcement learning. Sign. Syst. Math. Control (2021)

Pesare, A., Palladino, M., Falcone, M.: Convergence of the value function in optimal control problems with unknown dynamics. In: 2021 European Control Conference (ECC). IEEE (forthcoming)

Rasmussen, C., Williams, C.: Gaussian Processes for Machine Learning. Adaptive Computation and Machine Learning. MIT Press, Cambridge (2006)

Strikwerda, J.C.: Finite Difference Schemes and Partial Differential Equations. SIAM (2004)

Sutton, R.S., Barto, A.G.: Reinforcement Learning: An Introduction. MIT Press, Cambridge (2018)

Sutton, R.S., Barto, A.G., Williams, R.J.: Reinforcement learning is direct adaptive optimal control. IEEE Control. Syst. 12(2), 19–22 (1992)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Pacifico, A., Pesare, A., Falcone, M. (2022). A New Algorithm for the LQR Problem with Partially Unknown Dynamics. In: Lirkov, I., Margenov, S. (eds) Large-Scale Scientific Computing. LSSC 2021. Lecture Notes in Computer Science, vol 13127. Springer, Cham. https://doi.org/10.1007/978-3-030-97549-4_37

Download citation

DOI: https://doi.org/10.1007/978-3-030-97549-4_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-97548-7

Online ISBN: 978-3-030-97549-4

eBook Packages: Computer ScienceComputer Science (R0)