Abstract

Time series forecasting based on deep architectures has been gaining popularity in recent years owing to the ability of these architectures to model complex non-linear temporal dynamics. The recurrent neural network is one such model, capable of handling variable-length input and output. In this paper, we leverage recent advances in deep generative models and the concept of state space models to propose a stochastic adaptation of the recurrent neural network for multistep-ahead time series forecasting, which is trained with stochastic gradient variational Bayes. To capture the stochasticity in time series temporal dynamics, we incorporate a latent random variable into the recurrent neural network to make its transition function stochastic. Our model preserves the architectural workings of a recurrent neural network, for which all relevant information is encapsulated in its hidden states, and this flexibility allows our model to be easily integrated into any deep architecture for sequential modelling. We test our model on a wide range of datasets from finance to healthcare; results show that the stochastic recurrent neural network consistently outperforms its deterministic counterpart.

1 Introduction

Time series forecasting is an important task in industry and academia, with applications in fields such as retail demand forecasting [1], finance [2,3,4], and traffic flow prediction [5]. Traditionally, time series forecasting was dominated by linear models such as the autoregressive integrated moving average (ARIMA) model, which requires prior knowledge about time series structures such as seasonality and trend. With an increasing abundance of data and computational power, however, deep learning models have attracted much research interest due to their ability to learn complex temporal relationships with a purely data-driven approach, thus requiring minimal human intervention and subject-matter expertise. In this work, we combine deep learning with state space models (SSMs) for sequential modelling. Our work follows a recent trend of combining the powerful modelling capabilities of deep learning models with well-understood theoretical frameworks such as SSMs.

Recurrent neural networks (RNNs) are a popular class of neural networks for sequential modelling. There is an extensive literature on time series modelling with RNNs across different domains [6,7,8,9,10,11,12,13,14,15,16]. However, vanilla RNNs have deterministic transition functions, which may limit their expressive power in modelling sequences with high variability and complexity [18]. There is recent evidence that the performance of RNNs on complex sequential data such as speech, music, and videos can be improved when uncertainty is incorporated in the modelling process [19,20,21,22,23,24]. This approach makes an RNN more expressive: instead of outputting a single deterministic hidden state at every time step, it now considers many possible future paths before making a prediction. Inspired by this, we propose an RNN cell with stochastic hidden states for time series forecasting, which is achieved by inserting a latent random variable into the RNN update function. Our approach corresponds to a state space formulation of time series modelling where the RNN transition function defines the latent state equation, and another neural network defines the observation equation given the RNN hidden state. The main contributions of our paper are as follows:

1. We propose a novel deep stochastic recurrent architecture for multistep-ahead time series forecasting which leverages the ability of regular RNNs to model long-term dynamics and the stochastic framework of state space models.

2. We conduct experiments using publicly available datasets in the fields of finance, traffic flow prediction, air quality forecasting, and disease transmission. Results demonstrate that our stochastic RNN consistently outperforms its deterministic counterpart, and is capable of generating probabilistic forecasts.

2 Related Works

2.1 Recurrent Neural Networks

The recurrent neural network (RNN) is a deep architecture specifically designed to handle sequential data, and has delivered state-of-the-art performance in areas such as natural language processing [25]. The structure of the RNN is such that at each time step t, the hidden state of the network - which learns a representation of the raw inputs - is updated using the external input for time t as well as network outputs from the previous step \(t - 1\). The weights of the network are shared across all time steps and the model is trained using back-propagation. When used to model long sequences of data, the RNN is subject to the vanishing/exploding gradient problem [26]. Variants of the RNN such as the LSTM [27] and the GRU [28] were proposed to address this issue. These variants use gated mechanisms to regulate the flow of information. The GRU is a simplification of the LSTM without a memory cell, which is more computationally efficient to train and offers comparable performance to the LSTM [29].

2.2 Stochastic Gradient Variational Bayes

The authors in [21] proposed the idea of combining an RNN with a variational auto-encoder (VAE) to leverage the RNN’s ability to capture time dependencies and the VAE’s role as a generative model. The proposed structure consists of an encoder that learns a mapping from data to a distribution over latent variables, and a decoder that maps latent representations to data. The model can be trained efficiently with Stochastic Gradient Variational Bayes (SGVB) [30], which enables large-scale unsupervised variational learning on sequential data. Consider an input \(\boldsymbol{x}\) of arbitrary size; we wish to model the data distribution \(p(\boldsymbol{x})\) given some unobserved latent variable \(\boldsymbol{z}\) (again, of arbitrary dimension). The aim is to maximise the marginal likelihood function \(p(\boldsymbol{x}) = \int p(\boldsymbol{x}|\boldsymbol{z})p(\boldsymbol{z}) \,d\boldsymbol{z}\), which is often intractable when the likelihood \(p(\boldsymbol{x}|\boldsymbol{z})\) is expressed by a neural network with non-linear layers. Instead, we apply variational inference and maximise the evidence lower bound (ELBO):

\[\text{ELBO} = \mathbb {E}_{\boldsymbol{z} \sim q(\boldsymbol{z}|\boldsymbol{x})} [\log p(\boldsymbol{x}|\boldsymbol{z})] - KL(q(\boldsymbol{z}|\boldsymbol{x})||p(\boldsymbol{z})) \tag{1}\]

where \(q(\boldsymbol{z}|\boldsymbol{x})\) is the variational approximation to the true posterior distribution \(p(\boldsymbol{z}|\boldsymbol{x})\) and KL is the Kullback-Leibler divergence. For the rest of this paper we refer to \(p(\boldsymbol{x}|\boldsymbol{z})\) as the decoding distribution and \(q(\boldsymbol{z}|\boldsymbol{x})\) as the encoding distribution. The relationship between the marginal log-likelihood \(\log p(\boldsymbol{x})\) and the ELBO is given by

\[\log p(\boldsymbol{x}) = \mathbb {E}_{\boldsymbol{z} \sim q(\boldsymbol{z}|\boldsymbol{x})} [\log p(\boldsymbol{x}|\boldsymbol{z})] - KL(q(\boldsymbol{z}|\boldsymbol{x})||p(\boldsymbol{z})) + KL(q(\boldsymbol{z}|\boldsymbol{x})||p(\boldsymbol{z}|\boldsymbol{x})) \tag{2}\]

where the third term, the KL divergence between the approximate and true posteriors, specifies the tightness of the lower bound. The expectation \(\mathbb {E}_{\boldsymbol{z} \sim q(\boldsymbol{z}|\boldsymbol{x})} [\log p(\boldsymbol{x}|\boldsymbol{z})]\) can be interpreted as an expected negative reconstruction error, and \(KL(q(\boldsymbol{z}|\boldsymbol{x})||p(\boldsymbol{z}))\) serves as a regulariser.
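The decomposition of the marginal log-likelihood into the ELBO plus a KL gap can be checked numerically on a toy problem. The following is a minimal sketch, assuming a binary latent variable and made-up probabilities (none of these numbers come from the paper); it only illustrates that the ELBO is a lower bound whose gap is the KL divergence between the approximate and true posteriors.

```python
import numpy as np

# Toy model: binary latent z and one fixed observation x (all numbers are illustrative).
prior = np.array([0.6, 0.4])        # p(z)
likelihood = np.array([0.2, 0.7])   # p(x | z), evaluated at the observed x
q = np.array([0.5, 0.5])            # q(z | x), an arbitrary variational guess


def kl(a, b):
    """KL(a || b) for two discrete distributions on the same support."""
    return float(np.sum(a * (np.log(a) - np.log(b))))


evidence = float(np.sum(likelihood * prior))   # p(x) = sum_z p(x|z) p(z)
posterior = likelihood * prior / evidence      # p(z | x) by Bayes' rule

reconstruction = float(np.sum(q * np.log(likelihood)))  # E_q[log p(x|z)]
elbo = reconstruction - kl(q, prior)           # reconstruction term minus regulariser
gap = kl(q, posterior)                         # tightness of the bound

print("log p(x):  ", np.log(evidence))
print("ELBO + gap:", elbo + gap)
```

Setting `q` equal to `posterior` drives the gap to zero, which is the sense in which a better encoding distribution tightens the bound.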

2.3 State Space Models

State space models provide a unified framework for time series modelling; they are probabilistic graphical models that describe relationships between observations and the underlying latent variables [35]. Exact inference is feasible only for hidden Markov models (HMMs) and linear Gaussian state space models (LGSSs), and neither is suitable for long-term prediction [31]. SSMs can be viewed as a probabilistic extension of RNNs. Inside an RNN, the evolution of the hidden states \(\boldsymbol{h}\) is governed by a non-linear transition function f: \(\boldsymbol{h}_{t+1} = f(\boldsymbol{h}_t, \boldsymbol{x}_{t+1})\), where \(\boldsymbol{x}\) is the input vector. For an SSM, however, the hidden states are assumed to be random variables. It is therefore intuitive to combine the non-linear gated mechanisms of the RNN with the stochastic transitions of the SSM; this creates a sequential generative model that is more expressive than the RNN and better at modelling long-term dynamics than the SSM. Many recent works draw connections between SSMs and VAEs using an RNN. The authors in [18] and [19] propose a sequential VAE with non-linear state transitions in the latent space; in [32] the authors investigate various inference schemes for variational RNNs; in [22] the authors propose to stack a stochastic SSM layer on top of a deterministic RNN layer; in [23] the authors propose a latent transition scheme that is stochastic conditioned on some inferable parameters; the authors in [33] propose a deep Kalman filter with exogenous inputs; the authors in [34] propose a stochastic variant of the Bi-LSTM; and in [37] the authors use an RNN to parameterise an LGSS.

3 Stochastic Recurrent Neural Network

3.1 Problem Statement

For a multivariate dataset comprising \(N+1\) time series, we denote the covariates by \(\boldsymbol{x}_{1:T+\tau } = \{\boldsymbol{x}_1,\boldsymbol{x}_2,... \boldsymbol{x}_{T+\tau }\} \in \mathbb {R}^{N \times (T+\tau )}\) and the target variable by \(y_{1:T} \in \mathbb {R}^{1 \times T}\). We refer to the period \(\{T+1,T+2,... T+\tau \}\) as the prediction period, where \(\tau \in \mathbb {Z}^+\) is the number of prediction steps, and we wish to model the conditional distribution

\[p(y_{T+1:T+\tau } \mid y_{1:T}, \boldsymbol{x}_{1:T+\tau }) \tag{3}\]

3.2 Stochastic GRU Cell

Here we introduce the update equations of our stochastic GRU, which forms the backbone of our temporal model:

where \(\sigma \) is the sigmoid activation function, \(\boldsymbol{z}_t\) is a latent random variable which captures the stochasticity of the temporal process, \(\boldsymbol{u}_t\) and \(\boldsymbol{r}_t\) represent the update and reset gates, \(\boldsymbol{W}\), \(\boldsymbol{C}\) and \(\boldsymbol{M}\) are weight matrices, \(\boldsymbol{b}\) is the bias matrix, \(\boldsymbol{h}_t\) is the GRU hidden state and \(\odot \) is the element-wise Hadamard product. Our stochastic adaptation can be seen as a generalisation of the regular GRU, i.e. when \(\boldsymbol{C} = 0\), we have a regular GRU cell [28].
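To make the role of \(\boldsymbol{C}\) concrete, here is a minimal NumPy sketch of one update step of a GRU cell that also takes a latent input \(\boldsymbol{z}_t\). It is one reading of the description above, not a reproduction of (4)–(7): the parameter names, the placement of the reset gate, and the convention that the update gate weights the previous state are all assumptions made for illustration.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def init_params(n_x, n_z, n_h, rng):
    """Random weights for the update (u), reset (r) and candidate (c) blocks.

    W acts on the input x_t, C on the latent z_t, M on the previous hidden state.
    The names and the initialisation scale are hypothetical.
    """
    p = {}
    for g in ("u", "r", "c"):
        p["W_" + g] = rng.normal(scale=0.1, size=(n_h, n_x))
        p["C_" + g] = rng.normal(scale=0.1, size=(n_h, n_z))
        p["M_" + g] = rng.normal(scale=0.1, size=(n_h, n_h))
        p["b_" + g] = np.zeros(n_h)
    return p


def stochastic_gru_step(p, x_t, z_t, h_prev):
    """One GRU step with an extra latent input z_t (assumed gating convention)."""
    u = sigmoid(p["W_u"] @ x_t + p["C_u"] @ z_t + p["M_u"] @ h_prev + p["b_u"])
    r = sigmoid(p["W_r"] @ x_t + p["C_r"] @ z_t + p["M_r"] @ h_prev + p["b_r"])
    cand = np.tanh(p["W_c"] @ x_t + p["C_c"] @ z_t + p["M_c"] @ (r * h_prev) + p["b_c"])
    # Assumption: u close to 1 keeps the old state, u close to 0 takes the candidate.
    return u * h_prev + (1.0 - u) * cand


rng = np.random.default_rng(0)
params = init_params(n_x=4, n_z=2, n_h=3, rng=rng)
h = stochastic_gru_step(params, rng.normal(size=4), rng.normal(size=2), np.zeros(3))
print(h.shape)
```

With every `C_*` matrix set to zero the output no longer depends on `z_t`, which mirrors the remark that the regular GRU is recovered when \(\boldsymbol{C} = 0\).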

3.3 Generative Model

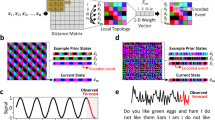

The role of the generative model is to establish probabilistic relationships between the target variable \(y_t\), the intermediate variables of interest (\(\boldsymbol{h}_t\),\(\boldsymbol{z}_t\)), and the input \(\boldsymbol{x}_t\). Our model uses neural networks to describe the non-linear transition and emission processes, and we preserve the architectural workings of an RNN - relevant information is encoded within the hidden states that evolve with time, and the hidden states contain all necessary information required to estimate the target variable at each time step. A graphical representation of the generative model is shown in Fig. 1a; the RNN transitions are now stochastic, facilitated by the random variable \(\boldsymbol{z}_t\). The joint probability distribution of the generative model can be factorised as follows:

where

where \(\textit{GRU}\) is the stochastic GRU update function given by (4)–(7). Equation (9) defines the prior distribution of \(\boldsymbol{z}_t\), which we assume to have an isotropic Gaussian prior (covariance matrix is diagonal) parameterised by a multi-layer perceptron (MLP). When conditioning on past time series for prediction, we use (9), (10) and the last available hidden state \(\boldsymbol{h}_{last}\) to calculate \(\boldsymbol{h}_1\) for the next sequence; otherwise we initialise them to \(\boldsymbol{0}\). We refer to the collection of parameters of the generative model as \(\theta \), i.e. \(\theta = \{\theta _1,\theta _2,\theta _3\}\). We refer to (11) as our generative distribution, which is parameterised by an MLP.
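As a sketch of how the description above could be written out, the LaTeX below gives one factorisation consistent with it: an emission term, a transition term that collapses to the GRU update, and a prior on \(\boldsymbol{z}_t\). The exact conditioning sets and the mean and standard deviation functions \(\boldsymbol{\mu}_{\theta _3}\), \(\boldsymbol{\sigma}_{\theta _3}\) are assumptions made for illustration rather than the numbered equations themselves.

```latex
\begin{align*}
p_\theta(y_{2:T}, \boldsymbol{z}_{2:T}, \boldsymbol{h}_{2:T} \mid \boldsymbol{x}_{2:T})
  &= \prod_{t=2}^{T} p_{\theta_1}(y_t \mid \boldsymbol{h}_t)\,
     p_{\theta_2}(\boldsymbol{h}_t \mid \boldsymbol{h}_{t-1}, \boldsymbol{z}_t, \boldsymbol{x}_t)\,
     p_{\theta_3}(\boldsymbol{z}_t \mid \boldsymbol{h}_{t-1}) \\
\boldsymbol{z}_t &\sim N\big(\boldsymbol{\mu}_{\theta_3}(\boldsymbol{h}_{t-1}),\,
     \mathrm{diag}(\boldsymbol{\sigma}^2_{\theta_3}(\boldsymbol{h}_{t-1}))\big) \\
\boldsymbol{h}_t &= \textit{GRU}(\boldsymbol{h}_{t-1}, \boldsymbol{z}_t, \boldsymbol{x}_t) \\
y_t &\sim p_{\theta_1}(y_t \mid \boldsymbol{h}_t)
\end{align*}
```

Under this reading, all randomness enters through \(\boldsymbol{z}_t\); given \(\boldsymbol{z}_t\), the hidden state is a deterministic function of its inputs.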

3.4 Inference Model

We wish to maximise the marginal log-likelihood function \(\log p_\theta (y_{2:T}|\boldsymbol{x}_{2:T})\); however, the random variable \(\boldsymbol{z}_{t}\) of the non-linear SSM cannot be integrated out analytically. We instead maximise the variational lower bound (ELBO) with respect to the generative model parameters \(\theta \) and the inference model parameters, which we call \(\phi \) [36]. The variational approximation of the true posterior \(p(\boldsymbol{z}_{2:T},\boldsymbol{h}_{2:T}|y_{1:T},\boldsymbol{x}_{1:T})\) can be factorised as follows:

and

where \(p_{\theta _2}\) is the same as in (8); this is because the GRU transition function is fully deterministic conditioned on \(\boldsymbol{z}_t\), and hence \(p_{\theta _2}\) is just a delta distribution centred at the GRU output value given by (4)–(7). The graphical model of the inference network is given in Fig. 1a. Since the purpose of the inference model is to infer the filtering distribution \(q_\phi (\boldsymbol{z}_t|y_{1:t})\), and since an RNN hidden state contains a representation of current and past inputs, we use a second GRU model with hidden states \(\boldsymbol{g}_t\) as our inference model, which takes the observed target values \(y_t\) and previous hidden state \(\boldsymbol{g}_{t-1}\) as inputs and maps \(\boldsymbol{g}_t\) to the inferred value of \(\boldsymbol{z}_t\):

3.5 Model Training

The objective function of our stochastic RNN is the ELBO \(\textit{L}(\theta ,\phi )\) given by:

where \(p_\theta \) and \(q_\phi \) are the generative and inference distributions given by (8) and (12) respectively. During training, we use the posterior network (15) to infer the latent variable \(\boldsymbol{z}_t\) used for reconstruction. During testing we use the prior network (9) to forecast 1-step-ahead \(\boldsymbol{z}_t\), which has been trained using the KL term in the ELBO function. We seek to optimise the ELBO with respect to decoder parameters \(\theta \) and encoder parameters \(\phi \) jointly, i.e. we wish to find:

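Since we cannot back-propagate through a sampling operation, we apply the reparameterisation trick [30] to write

\[\boldsymbol{z}_t = \boldsymbol{\mu}_t + \boldsymbol{\sigma}_t \odot \boldsymbol{\epsilon }\]

where \(\boldsymbol{\mu}_t\) and \(\boldsymbol{\sigma}_t\) denote the mean and standard deviation of the Gaussian distribution of \(\boldsymbol{z}_t\), \(\boldsymbol{\epsilon }\sim \boldsymbol{N}(0,\boldsymbol{\textit{I}})\), and we sample \(\boldsymbol{\epsilon }\) instead. The KL divergence term in (16) can be computed analytically since we assume the prior and posterior of \(\boldsymbol{z}_t\) to be normally distributed.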
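The two ingredients mentioned here, reparameterised sampling and a closed-form KL divergence between Gaussians, can be sketched in a few lines of NumPy. This is a minimal illustration assuming diagonal covariance matrices parameterised by log-variances; the function names and the log-variance parameterisation are choices made for the example, not details taken from the paper.

```python
import numpy as np


def reparameterise(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I), so z is a deterministic
    function of (mu, log_var) given the noise eps."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_diag_gaussians(mu_q, log_var_q, mu_p, log_var_p):
    """KL( N(mu_q, diag(exp(log_var_q))) || N(mu_p, diag(exp(log_var_p))) )."""
    var_q, var_p = np.exp(log_var_q), np.exp(log_var_p)
    terms = log_var_p - log_var_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0
    return 0.5 * float(np.sum(terms))


rng = np.random.default_rng(0)
mu_post, log_var_post = np.array([0.3, -0.1]), np.array([-1.0, -0.5])    # illustrative posterior
mu_prior, log_var_prior = np.zeros(2), np.zeros(2)                       # illustrative prior

z = reparameterise(mu_post, log_var_post, rng)
print("sample:", z)
print("KL(posterior || prior):", kl_diag_gaussians(mu_post, log_var_post, mu_prior, log_var_prior))
```

In an automatic-differentiation framework the same expression for `z` lets gradients flow to the mean and variance, because the only random quantity, `eps`, does not depend on the parameters.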

3.6 Model Prediction

Given the last available GRU hidden state \(\boldsymbol{h}_{last}\), prediction window \(\tau \) and covariates \(\boldsymbol{x}_{T+1:T+\tau }\), we generate predicted target values in an autoregressive manner, assuming that at every time step the hidden state of the GRU \(\boldsymbol{h}_t\) contains all relevant information up to time t. The prediction algorithm of our stochastic GRU is given by Algorithm 1.
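The prediction procedure described above can be sketched as a Monte-Carlo loop: starting from \(\boldsymbol{h}_{last}\), each simulated path samples \(\boldsymbol{z}_t\) from the prior, updates the hidden state with the future covariates, and draws a target value from the emission distribution. The code below is a hedged illustration rather than a reproduction of Algorithm 1; the callables `prior`, `transition` and `emission`, the Gaussian emission, and the toy stand-ins at the bottom are all hypothetical.

```python
import numpy as np


def forecast_paths(h_last, x_future, prior, transition, emission, n_paths, rng):
    """Simulate n_paths forecast trajectories of length tau = len(x_future)."""
    tau = len(x_future)
    paths = np.empty((n_paths, tau))
    for k in range(n_paths):
        h = h_last.copy()
        for t in range(tau):
            mu_z, sigma_z = prior(h)                              # prior on z_t given h_{t-1}
            z = mu_z + sigma_z * rng.standard_normal(mu_z.shape)  # sample the latent variable
            h = transition(h, z, x_future[t])                     # stochastic hidden-state update
            mu_y, sigma_y = emission(h)                           # assumed Gaussian emission
            paths[k, t] = mu_y + sigma_y * rng.standard_normal()
    return paths


# Toy stand-ins for the trained networks (purely illustrative).
def toy_prior(h):
    return 0.1 * h, np.full_like(h, 0.5)


def toy_transition(h, z, x):
    return np.tanh(0.8 * h + z + 0.1 * np.sum(x))


def toy_emission(h):
    return float(np.sum(h)), 0.1


rng = np.random.default_rng(0)
paths = forecast_paths(np.zeros(3), np.ones((5, 2)), toy_prior, toy_transition,
                       toy_emission, n_paths=500, rng=rng)
point_forecast = paths.mean(axis=0)                        # mean over simulated paths
lower, upper = np.percentile(paths, [2.5, 97.5], axis=0)   # empirical 95% band
print(point_forecast.shape, lower.shape, upper.shape)
```

Averaging over paths yields a point forecast, while the empirical percentiles give a simple probabilistic band around it.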

4 Experiments

We highlight the model performance on 6 publicly available datasets:

1. Equity options trading price time series available from the Chicago Board Options Exchange (CBOE) datashop. This dataset describes the minute-level traded prices of an option throughout the day. We study 3 options with Microsoft and Amazon stocks as underlyings where \(\boldsymbol{x}_t=\) underlying stock price and \(y_t=\) traded option price.

2. The Beijing PM2.5 multivariate dataset describes hourly PM2.5 (a type of air pollution) concentrations at the US Embassy in Beijing, and is freely available from the UCI Machine Learning Repository. The covariates we use are \(\boldsymbol{x}_t=\) temperature, pressure, cumulated wind speed, dew point, cumulated hours of rainfall and cumulated hours of snow, and \(y_t=\) PM2.5 concentration. We use data from 01/11/2014 onwards.

3. The Metro Interstate Traffic Volume dataset describes the hourly Interstate 94 Westbound traffic volume for MN DoT ATR station 301, roughly midway between Minneapolis and St. Paul, MN. This dataset is available on the UCI Machine Learning Repository. The covariates we use in this experiment are \(\boldsymbol{x}_t=\) temperature, mm of rainfall in the hour, mm of snow in the hour, and percentage of cloud cover, and \(y_t=\) hourly traffic volume. We use data from 02/10/2012 9AM onwards.

4. The Hungarian Chickenpox dataset describes weekly chickenpox cases (childhood disease) in different Hungarian counties. This dataset is also available on the UCI Machine Learning Repository. For this experiment, \(y_t=\) number of chickenpox cases in the Hungarian capital city Budapest, \(\boldsymbol{x}_t=\) number of chickenpox cases in Pest, Bacs, Komarom and Heves, which are 4 nearby counties. We use data from 03/01/2005 onwards.

We generate probabilistic forecasts using 500 Monte-Carlo simulations and we take the mean predictions as our point forecasts to compute the error metrics. We tested the number of simulations from 100 to 1000 and found that above 500, the differences in performance were small, and with fewer than 500 we could not obtain realistic confidence intervals for some time series. We provide graphical illustrations of the prediction results in Fig. 2a–2f. We compare our model performance against an AR(1) model assuming the prediction is the same as the last observed value (\(y_{T+\tau }=y_T\)), a standard LSTM model and a standard GRU model. For the performance metric, we normalise the root-mean-squared error (RMSE) to enable comparison between time series:

\[\text{nrmse} = \frac{\sqrt{\frac{1}{N}\sum _{i=1}^{N}(y_i - \hat{y}_i)^2}}{\bar{y}}\]

where \(\bar{y} = mean(y)\), \(\hat{y}_i\) is the mean predicted value of \(y_i\), and N is the prediction size. For replication purposes, in Table 1 we provide (in order): number of training, validation and conditioning steps, (non-overlapping) sequence lengths used for training, number of prediction steps, dimensions of \(\boldsymbol{z}_t\), \(\boldsymbol{h}_t\) and \(\boldsymbol{g}_t\), details about the MLPs corresponding to (9) (\(\boldsymbol{z}_t\) prior) and (15) (\(\boldsymbol{z}_t\) post) in the form of (n layers, n hidden units per layer), and lastly the size of the hidden states of the benchmark RNNs (LSTM and GRU). We use the ADAM optimiser with a learning rate of 0.001.

In Tables 2, 3, 4 and 5 we observe that the nrmse of the stochastic GRU is lower than that of its deterministic counterpart for all datasets investigated and across all prediction steps. This shows that our proposed method can better capture both long and short-term dynamics of the time series. With respect to multistep time series forecasting, it is often difficult to accurately model the long-term dynamics. Our approach provides an additional degree of freedom facilitated by the latent random variable, which needs to be inferred using the inference network; we believe this allows the stochastic GRU to better capture the stochasticity of the time series at every time step. In Fig. 2e for example, we observe that our model captures the long-term cyclicity of the traffic volume well, and in Fig. 2d, where the time series is much more erratic, our model can still accurately predict the general shape of the time series in the prediction period.
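The normalised error metric can be computed in a few lines. The sketch below assumes the RMSE over the prediction window is divided by the mean of the observed values in that window, which is our reading of the definitions of \(\bar{y}\), \(\hat{y}_i\) and N above; the toy numbers are invented, and the last-value forecast stands in for the benchmark with \(y_{T+\tau }=y_T\).

```python
import numpy as np


def nrmse(y_true, y_pred):
    """Root-mean-squared error divided by the mean of the observed values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_true - y_pred) ** 2))
    return float(rmse / np.mean(y_true))


# Illustrative data: the last observed value y_T and the next four true values.
y_last = 10.0
y_future = np.array([11.0, 12.0, 12.5, 13.0])

persistence = np.full_like(y_future, y_last)     # forecast repeats the last observation
model_mean = np.array([10.8, 11.9, 12.7, 12.8])  # hypothetical mean over simulated paths

print("persistence nrmse:", nrmse(y_future, persistence))
print("model nrmse:      ", nrmse(y_future, model_mean))
```

Dividing by the mean makes errors comparable across series with very different scales, such as option prices and hourly traffic counts.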

To investigate the effectiveness of our temporal model, we compare our prediction errors against a model without a temporal component, which is constructed using a 3-layer MLP with 5 hidden nodes and ReLU activation functions. Since we are using covariates in the prediction period (3), we would like to verify that our model can outperform a simple regression-type benchmark which approximates a function of the form \(y_t=f_\psi (\boldsymbol{x}_t)\); we use the MLP to parameterise the function \(f_\psi \). We observe in Table 6 that our proposed model outperforms the regression-type benchmark in all experiments, which shows the effectiveness of our temporal model. It is also worth noting that in our experiments we use the actual values of the future covariates. In a real forecasting setting, the future covariates themselves could be outputs of other mathematical models, or they could be estimated using expert judgement.

5 Conclusion

In this paper we have presented a stochastic adaptation of the Gated Recurrent Unit which is trained with stochastic gradient variational Bayes. Our model design preserves the architectural workings of an RNN, which encapsulates all relevant information into the hidden state. At the same time, our adaptation takes inspiration from the stochastic transition functions of state space models by injecting a latent random variable into the update functions of the GRU. This allows the GRU to be more expressive in modelling highly variable transition dynamics than a regular RNN with deterministic transition functions. We have tested the performance of our model on different publicly available datasets and results demonstrate the effectiveness of our design. Given that GRUs are now popular building blocks for much more complex deep architectures, we believe that our stochastic GRU could prove useful as an improved component which can be integrated into sophisticated deep learning models for sequential modelling.

References

1. Bandara, K., Shi, P., Bergmeir, C., Hewamalage, H., Tran, Q., Seaman, B.: Sales demand forecast in E-commerce using a long short-term memory neural network methodology. In: ICONIP, Sydney, NSW, Australia, pp. 462–474 (2019)

2. McNally, S., Roche, J., Caton, S.: Predicting the price of Bitcoin using machine learning. In: PDP, Cambridge, UK, pp. 339–343 (2018)

3. Hu, Z., Zhao, Y., Khushi, M.: A Survey of Forex and stock price prediction using deep learning. Appl. Syst. Innov. 4(9) (2021). https://doi.org/10.3390/asi4010009

4. Zhang, R., Yuan, Z., Shao, X.: A new combined CNN-RNN model for sector stock price analysis. In: COMPSAC, Tokyo, Japan (2018)

5. Lv, Y., Duan, Y., Kang, W.: Traffic flow prediction with big data: a deep learning approach. IEEE Trans. Intell. Transp. 16(2), 865–873 (2014)

6. Dolatabadi, A., Abdeltawab, H., Mohamed, Y.: Hybrid deep learning-based model for wind speed forecasting based on DWPT and bidirectional LSTM Network. IEEE Access 8, 229219–229232 (2020). https://doi.org/10.1109/ACCESS.2020.3047077

7. Alazab, M., Khan, S., Krishnan, S., Pham, Q., Reddy, M., Gadekallu, T.: A multidirectional LSTM model for predicting the stability of a smart grid. IEEE Access 8, 85454–85463 (2020). https://doi.org/10.1109/ACCESS.2020.2991067

8. Liu, T., Wu, T., Wang, M., Fu, M., Kang, J., Zhang, H.: Recurrent neural networks based on LSTM for predicting geomagnetic field. In: ICARES, Bali, Indonesia (2018)

9. Lai, G., Chang, W., Yang, Y., Liu, H.: Modelling long-and short-term temporal patterns with deep neural networks. In: SIGIR (2018)

10. Apaydin, H., Feizi, H., Sattari, M.T., Cloak, M.S., Shamshirband, S., Chau, K.W.: Comparative analysis of recurrent neural network architectures for reservoir inflow forecasting. Water, vol. 12 (2020). https://doi.org/10.3390/w12051500

11. Di Persio, L., Honchar, O.: Analysis of recurrent neural networks for short-term energy load forecasting. In: AIP (2017)

12. Meng, X., Wang, R., Zhang, X., Wang, M., Ma, H., Wang, Z.: Hybrid neural network based on GRU with uncertain factors for forecasting ultra-short term wind power. In: IAI (2020)

13. Khaldi, R., El Afia, A., Chiheb, R.: Impact of multistep forecasting strategies on recurrent neural networks performance for short and long horizons. In: BDIoT (2019)

14. Mattos, C.L.C., Barreto, G.A.: A stochastic variational framework for recurrent gaussian process models. Neural Netw. 112, 54–72 (2019). https://doi.org/10.1016/j.neunet.2019.01.005

15. Seleznev, A., Mukhin, D., Gavrilov, A., Loskutov, E., Feigin, A.: Bayesian framework for simulation of dynamical systems from multidimensional data using recurrent neural network. Chaos, vol. 29 (2019). https://doi.org/10.1063/1.5128372

16. Alhussein, M., Aurangzeb, K., Haider, S.: Hybrid CNN-LSTM model for short-term individual household load forecasting. IEEE Access 8, 180544–180557 (2020). https://doi.org/10.1109/ACCESS.2020.3028281

17. Sezer, O., Gudelek, M., Ozbayoglu, A.: Financial time series forecasting with deep learning: a systematic literature review: 2005–2019. Appl. Soft Comput. 90 (2020). https://doi.org/10.1016/j.asoc.2020.106181

18. Chung, J., Kastner, K., Dinh, L., Goel, K., Courville, A., Bengio, Y.: A recurrent latent variable model for sequential data. In: NIPS, Montreal, Canada (2015)

19. Bayer, J., Osendorfer, C.: Learning stochastic recurrent networks. arXiv preprint arXiv:1411.7610 (2014)

20. Goyal, A., Sordoni, A., Cote, M., Ke, N., Bengio, Y.: Z-forcing: training stochastic recurrent networks. In: NIPS, California, USA, pp. 6716–6726 (2017)

21. Fabius, O., Amersfoort, J.R.: Variational recurrent auto-encoders. arXiv preprint arXiv:1412.6581 (2017)

22. Fraccaro, M., Sønderby, S., Paquet, U., Winther, O.: Sequential neural models with stochastic layers. In: NIPS, Barcelona, Spain (2016)

23. Karl, M., Soelch, M., Bayer, J., Smagt, P.: Deep Variational Bayes Filters: Unsupervised learning of state space models from raw data. In: ICLR, Toulon, France (2017)

24. Franceschi, J., Delasalles, E., Chen, M., Lamprier, S., Gallinari, P.: Stochastic latent residual video prediction. In: ICML, pp. 3233–3246 (2020)

25. Young, T., Hazarika, D., Poria, S., Cambria, E.: Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 13(3), 55–75 (2018). https://doi.org/10.1109/MCI.2018.2840738

26. Pascanu, R., Mikolov, T., Bengio, Y.: On the difficulty of training recurrent neural networks. In: ICML, Atlanta, GA, USA, pp. 1310–1318 (2013)

27. Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

28. Cho, K., Merrienboer, B., Bahdanau, D., Bengio, Y.: On the properties of neural machine translation: encoder-decoder approaches. In: SSST, Doha, Qatar, pp. 103–111 (2014)

29. Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modelling. arXiv preprint arXiv:1412.3555 (2014)

30. Kingma, D., Welling, M.: Auto-encoding variational Bayes. In: ICLR, Banff, Canada (2014)

31. Liitiainen, E., Lendasse, A.: Long-term prediction of time series using state-space models. In: ICANN, Athens, Greece (2006)

32. Krishnan, R., Shalit, U., Sontag, D.: Structured inference networks for nonlinear state space models. In: AAAI, California, USA, pp. 2101–2109 (2017)

33. Krishnan, R., Shalit, U., Sontag, D.: Deep Kalman filters. arXiv preprint arXiv:1511.05121 (2015)

34. Shabanian, S., Arpit, D., Trischler, A., Bengio, Y.: Variational bi-LSTMs. arXiv preprint arXiv:1711.05717 (2017)

35. Durbin, J., Koopman, S.: Time Series Analysis by State Space Methods, vol. 38. Oxford University Press, Oxford (2012)

36. Jordan, M., Ghahramani, Z., Jaakkola, T., Saul, L.: An introduction to variational methods for graphical models. Mach. Learn. 37(2), 183–233 (1999)

37. Rangapuram, S., Seeger, M., Gasthaus, J., Stella, L., Yang, Y., Janushowski, T.: Deep state space models for time series forecasting. In: NIPS, Montreal, Canada (2018)

Acknowledgments

We would like to thank Dr Fabio Caccioli (Dpt of Computer Science, UCL) for proofreading this manuscript and for his questions and feedback.

© 2021 Springer Nature Switzerland AG

Cite this paper

Yin, Z., Barucca, P. (2021). Stochastic Recurrent Neural Network for Multistep Time Series Forecasting. In: Mantoro, T., Lee, M., Ayu, M.A., Wong, K.W., Hidayanto, A.N. (eds) Neural Information Processing. ICONIP 2021. Lecture Notes in Computer Science(), vol 13108. Springer, Cham. https://doi.org/10.1007/978-3-030-92185-9_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92184-2

Online ISBN: 978-3-030-92185-9
