Abstract

In this paper, we present a process of building a social listening system based on aspect-based sentiment analysis in Vietnamese, from creating a dataset to building a real application. Firstly, we create UIT-ViSFD, a Vietnamese Smartphone Feedback Dataset, as a new benchmark dataset built based on a strict annotation scheme for evaluating aspect-based sentiment analysis, consisting of 11,122 human-annotated comments for mobile e-commerce, which is freely available for research purposes. We also present a proposed approach based on the Bi-LSTM architecture with the fastText word embeddings for the Vietnamese aspect-based sentiment task. Our experiments show that our approach achieves the best performances (in F1-score) of 84.48% for the aspect task and 63.06% for the sentiment task, which performs several conventional machine learning and deep learning systems. Lastly, we build SA2SL, a social listening system based on the best performance model on our dataset, which will inspire more social listening systems in the future.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Sentiment Analysis (SA) is a significant task and widely applied in many fields such as education, commerce, and marketing. However, a regular SA system may not seem sufficient for business organizations and customers. Simple SA systems consisting of three classes: positive, negative, and neutral, have apparent weaknesses that make them difficult to apply in reality. While enterprises expect an accurate system, the SA systems cannot accurately predict if the sentence does not explicitly express a clear sentiment or an opinion. Aspect-Based Sentiment Analysis (ABSA), an extended research form of SA, has the ability to identify sentiments of specific aspects, features, or entities extracted from user comments or feedback.

According to Statista Research DepartmentFootnote 1, in 2017, the number of smartphone users in Vietnam was estimated to reach approximately 28.77 million. This indicates that around 31% of the population used a smartphone at this time, with this share predicted to rise to 40% by 2021. In Vietnam, smartphones are used for more than just making and receiving phone calls; they are also used for work, communication, entertainment, and shopping. The phone is chosen differently depending on the needs and purposes of the user. Seeing the potential of the ABSA task on the smartphone data domain in the Vietnamese, we build UIT-ViSFD, a Vietnamese Smartphone Feedback Dataset for evaluating ABSA. To ensure the data is plentiful and accurate, we collect feedback from a popular e-commerce website in Vietnam.

High-quality and large-scale datasets are essential in natural language processing for low-resource languages like Vietnamese. Hence, we aim to build a dataset and implement an approach using machine learning techniques for a social listening system. The task is described as follows: the input of the task is a textual comment related to smartphones that customers generate on social media, outputs are aspects of smartphones and their sentiments are mentioned in the textual comment. Several examples are presented in Table 1.

In this paper, we have three main contributions summarized below.

-

We present UIT-ViFSD, a new benchmark Vietnamese dataset for evaluating ABSA in mobile e-commerce, consisting of 11,122 human-annotated comments with two tasks: aspect detection and sentiment classification. Our dataset is freely available for research purposes.

-

We propose an approach using the Bi-LSTM for the Vietnamese ABSA, achieving the best F1-score performances: 84.48% for the aspect detection and 63.06% for the sentiment detection, which performs other systems based on conventional machine learning (Naive Bayes, SVM, and Random Forest) and other deep learning models (LSTM and CNN).

-

We propose SA2SL, a new social listening system based on ABSA for Vietnamese mobile e-commerce texts, which is the basis for making purchase decisions for users and the evidence for managers to improve their products and services.

2 Related Work

SA is a vibrant field to create many studies and their applications in various fields such as economics, politics, and education. In particular, there are a variety of datasets and methods built in different languages and domains. For English, datasets are available for electric fields [2]; books, equipment kitchen, and electronic products [20]. Besides, a range of competitions in SemEval 2014 Task 4 [19], SemEval 2015 Task 12 [18], and SemEval 2016 Task 5 [17] attracted significant attention.

Although Vietnam has nearly 100 million people, Vietnamese is a low-resource language. The research works in the field of SA in Vietnamese such as student feedback detection [27], hate speech detection [26], emotion analysis [10], constructive and toxic detection [15], and complaint classification [16]. However, these tasks are relatively not as complicated as the ABSA task. The first ABSA shared-task in Vietnamese was organized by the Vietnamese Language and Speech Processing (VLSP) community in 2018 [14]. Nguyen et al. [14] created datasets for studying two tasks: aspect detection and sentiment classification in the hotel and restaurant. Nguyen et al. [13] proposed the dataset on the same domains as restaurant and hotel.

We aim to create a high-quality dataset about smartphones to evaluate the ABSA task in Vietnamese. The smartphone is a top-rated commercial product that is still thriving today. The amount of feedback data from users about the smartphone is enormous and has great potential for exploitation. Therefore, we review several studies related to this domain. The competition SemEval 2016: Task 5Footnote 2 introduced a couple of datasets in Chinese and English. Singh et al. [25] presented a dataset with many aspects: camera, OS, battery, processor, screen, size, cost, storage aspect, and two sentiments labels: positive, negative for three types of phones: iPhone 6, Moto G3, and Blackberry. Yiran et al. [28] built a dataset with aspects such as display, battery, camera, and three sentiment labels: positive, negative, and neutral. In Vietnamese, Mai et al. [12] proposed a small dataset including 2,098 annotated comments about smartphones at the sentence level, not enough to evaluate current SOTA models. As a result, our dataset is more complex and extensive than the previous Vietnamese dataset [12]. Inspired from previous studies [7, 29], we also propose an approach using Bi-LSTM for Vietnamese aspect-based sentiment analysis. From the best performance of this approach and the study [6], we present a new system based on aspect-based sentiment analysis for business intelligence.

3 Dataset Creation

The creation process of our dataset comprises five different phases. First, we collect comments from a well-known e-commerce website for smartphones in Vietnam (see Sect. 3.1). Secondly, we build annotation guidelines for annotators to determine aspects and their sentiments and how to annotate data correctly (see Sect. 3.2). Annotators are trained with the guidelines and annotate data for two tasks in the two following steps: aspect detection and aspect polarity classification (see Sect. 3.3). The inter-annotator agreement (IAA) of annotators in the training process is ensured that it reaches over 80% before performing data annotation independently. Finally, we provide an in-depth analysis of the dataset that helps AI programmers or experts choose models and features suitable for this dataset (see Sect. 3.4).

3.1 Data Preparation

We crawl textual feedback from customers on a large e-commerce website in Vietnam. To ensure diverse and valued data, we collect feedback from the top ten popular smartphone brands used in Vietnam. There are various long comments, rambling reviews, and contradictory reviews, which are ambiguous to understand to determine the correct label of them. Therefore, the comments that are longer than 250 tokens (makes up a very small rate) are removed. We also delete comments that contain too many misspellings in them, which are not easy to understand and annotate correctly.

3.2 Annotation Guidelines

Data annotation is performed by five annotators who follow annotation guidelines and a strict annotating process to ensure data quality. Annotators determine aspects of each comment and then annotate their sentiment polarity labels: positive (Pos), neutral (Neu), and negative (Neg). Table 1 summarizes all aspects (10 aspects) and sentiment polarities (3 sentiment polarities) in the guidelines, including illustrative examples. For some comments that do not relate to any aspect or do not evaluate the product, we annotate an OTHERS label for these cases which do not express the sentiment.

3.3 Annotation Process

Annotators spend six training rounds to obtain a high inter-annotator agreement, and the strict guidelines are complete. In the first round, after building the first guidelines, our annotators annotate 200 comments together to understand the principles of data annotation. For the five remaining rounds, each round, we randomly take a set of 200 comments and individually annotate these 200 comments. For disagreement cases, we decide the final label by discussing and having a poll among annotators. These causes of disagreement are discussed and corrected in the guidelines; we also add cases that the guidelines have not covered after thorough discussion. The six training rounds resulted in a high inter-annotator agreement of the team and sufficiently completed full guidelines to achieve the dataset. The inter-annotator agreement is estimated by Cohen’s Kappa coefficient [2]. The formula is described as follows.

where k is the annotator agreement, Pr(a) is the relative observed agreement among raters, and Pr(e) the hypothetical probability of chance agreement. To ensure the dataset quality, we calculate inter-annotator agreements of pairs of team members that annotate both the aspect annotation task and the sentiment annotation task. Until the inter-annotator agreement of all labels reaching over 80% and completing the annotation guidelines, annotators have labeled the comments independently. During this annotating phase, in case of encountering difficult feedback, we discuss together the correct annotation and then revise the guidelines to obtains more high-quality guidelines. Figure 1 shows the inter-annotator agreements on two tasks during training phases.

3.4 Dataset Statistics

Our dataset consists of 11,122 comments, including five columns: index (row number), comment (comments), n_star (customer star ratings), date_time (comment time), and label (label of a comment). We randomly divide the dataset into three sets: the training (Train), development (Dev), and test (Test) sets in the 7:1:2 ratio.

Table 2 presents overview statistics of our dataset. The splitting ratio corresponds to the number of words and labels in the Train, Dev, and Test sets. Each comment has three aspect labels and is approximately 36 tokens on average.

Table 3 describes the distribution of aspects and their sentiment in the Train, Dev, and Test sets of our dataset. Through our analysis, the dataset has an uneven distribution on both the aspect and sentiment labels. While some aspect labels have many data points, another has a negligible number (the General aspect has 6,936 data points compared to the Storage with 132 annotated comments). In addition, we notice a significant difference between the three sentiment polarities. Positive accounts for the most significant number of 56.13% of the total number of labels followed the negative polarity with 31.70%, whereas the neutral polarity only accounts for 12.17%. Our dataset is imbalanced and includes diverse comments on different smartphone products on social media, which is challenging to evaluate ML algorithms on social media texts.

4 Our Approach

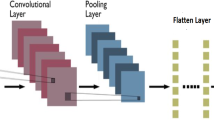

Inspired by Bi-LSTM for text classification [29], we propose an approach using the Bi-LSTM model for Vietnamese ABSA. The overview architecture is depicted in Fig. 2. This architecture consists of a tokenizer, embedding layer, SpatialDropout1D layer, Bi-LSTM layer, convolutional layer, two pooling layers, and a dense layer. First, the comment goes through a tokenizer, which converts each token in the comment to an integer value based on the vocabulary index. Then, they are processed through an embedding layer to convert into representative vectors. The architecture uses the fastText embeddings [3] as input token representations. FastText has good token representations, and it encodes for rare tokens that do not appear during training and is a good selection for Vietnamese social media texts [11]. To minimize overfitting, we utilize SpatialDropout1D to lower the parameters after each train. We employ a Bi-LSTM layer to extract abstract features, which is made up of two LSTMs with their outputs stacked together. The comment is read forward by one LSTM and backward by the other. We concatenate the hidden states of each LSTM after they have processed their respective final token. The Bi-LSTM uses two separate LSTM units, one for forward and one for backward. A convolutional layer is used to convert a multi-dimensional matrix from Bi-LSTM to a 1-dimensional matrix. The pooling layer consists of two parallel layers: the global average pool and the global max pool. The function of the pooling layer is conducted to reduce the spatial size of the representation. The idea is to choose the highest element and the average element of the feature map to extract the most salient features from the convolutional layer. Finally, the labels of the two sub-tasks for the ABSA task is obtained after normalizing in the dense layer.

5 Experiments

5.1 Baseline Systems

We compare the proposed approach with the following baselines. For traditional machine learning, we experiment with a system based on Naive Bayes [21], Support Vector Machine (SVM) [1], and Random Forest [4], which are popular methods for text classification. For deep neural networks, we implement systems based on Convolutional Neural Networks (CNN) [8] and Long Short-Term Memory (LSTM) [23], which achieved SOTA results in different NLP tasks.

5.2 Experimental Results

There are two phases to measure the ABSA systems: aspect detection and sentiment prediction. We use the precision, recall, and F1-score (macro average) to measure the performance of models. We do not detect sentiments of OTHERS aspect because they cannot show their sentiments, so we give it the NaN value.

Table 4 presents overall results on aspect-based and sentiment-based tasks on machine learning systems. According to our results, we can see that deep learning models have significantly better performance than traditional machine learning models. In particular, Bi-LSTM achieves the best F1-scores of 84.48% and 63.06% for the aspect detection and sentiment prediction, whereas SVM shows the lowest performances. Besides, the sentiment detection task makes it difficult for the first models when the F1-score are low (below 70%). There is a considerable discrepancy between deep learning and machine learning models. In particular, the deep learning model (Bi-LSTM) obtains the best F1-score of 84.48%, whereas the best machine learning model (Naive Bayes) only gains 64.65% in F1-score.

The results of the Bi-LSTM on the aspects and sentiment classification are shown in Table 5. As mentioned above, the sentiment detection performance of the Bi-LSTM is lower than that of the aspect detection results (F1-score of 84.48% for the aspect detection task and that of 63.06% for the sentiment detection task). In the aspect detection task, the Bi-LSTM system also achieves relatively high and positive results (F1-score for all aspects is 60% higher, and many aspects have F1-score below 80%). On the contrary, the system performance on the sentiment-aspect detection task is relatively low (F1-score for all aspects is below 75% and the aspect with the highest F1-score is Camera with 74.69%). In terms of aspects detection, the highest is the Battery with 95.00% F1-score. As for the sentiment detection, the Storage aspect polarity is only 30.10% F1-score. The Storage label result explains the lack of quantity uniformity in the labels (the Storage aspect only covers 1.19% of the dataset). These results are pretty interesting to explore further models on this dataset. In general, the Bi-LSTM system outperforms other algorithms when it comes to detecting aspects and their sentiments. However, their ability to extract sentiment features for each aspect is limited in all machine learning models, which will be exploited in future work.

6 SA2SL: Social Listening System Using ABSA

Inspired by the best performance results and the investigation [6], we propose SA2SL, a social listening system architecture based on Vietnamese ABSA for analyzing what customers discuss about products on social media. Figure 3 depicts a social listening system for smartphones that uses aspect-based sentiment analysis. This application assists customers and business companies in automatically categorizing comments and determining consumer perspectives. The application assists shoppers in selecting a phone that meets their specific requirements. Moreover, manufacturers can focus on the needs, expectations of the customers and propose suitable improvement options to improve product quality in future. Aspect sentiment analysis is crucial because it can assist businesses in automatically sorting and analyzing consumer data, automating procedures such as customer service activities, and gaining valuable insights.

Firstly, the user selects the name of the phone, and the application collects all comments on that phone. Secondly, we do the pre-processing of the comments, and then we feed them into word embedding, and they become vectors. Next, the vectors are then analyzed using two models: aspect detection and sentiment detection (aspect#sentiment). The input is a list of comments, and the output is aspects and their sentiments. The final analyses are visualized as follows: (1) Depending on which aspect they are interested in, the consumer or business company recognizes the analysis of user feedback regarding the sentiment polarity in the first chart. To clearly understand an aspect, the user of the system can select one of ten aspects, and then the system detail displays the distribution of its sentiment polarities. (2) The second chart describes the proportion (%) of the predicted aspects and summarizes their sentiments of all comments.

7 Conclusion and Future Work

We have three main contributions, which are (1) creating UIT-ViSFD, a benchmark dataset of smartphone feedback for the ABSA task, (2) proposing the approach for this task, and (3) building a social listening system based on the primary technology of ABSA. We experimented with two different types of models: traditional machine learning and deep learning on our dataset to compare with our approach. Our approach outperformed the others, achieving F1-scores of 84.48% and 63.06% for aspect detection and sentiment detection, respectively. Although the aspect detection results were positive, the sentiment detection results were relatively low, which is challenging for further machine learning-based systems. Finally, we presented a novel social listening system based on ABSA for the low-resource language like Vietnamese.

NLP experts can develop a new span detection dataset using our ABSA dataset. Besides, we conduct experiments based on powerful aspect-based systems using BERTology models [22], transfer learning approaches [24], and reinforcement learning [9]. We utilize the relationship between the rank of input data and the performance of a random weight neural network [5] to enhance the overall performance of the task. Lastly, we recommend that a social listening system should be integrated with different social media tasks [10, 15, 16] into the social listening system, which benefits social business intelligence.

References

Al-Smadi, M., Qawasmeh, O., Al-Ayyoub, M., Jararweh, Y., Gupta, B.: Deep recurrent neural network vs. support vector machine for aspect-based sentiment analysis of Arabic hotels’ reviews. J. Comput. Sci. 27, 386–393 (2018)

Bhowmick, P.K., Basu, A., Mitra, P.: An agreement measure for determining inter-annotator reliability of human judgements on affective text. In: COLING 2008 (2008)

Bojanowski, P., Grave, E., Joulin, A., Mikolov, T.: Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 5, 135–146 (2017)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Cao, W., Hu, L., Gao, J., Wang, X., Ming, Z.: A study on the relationship between the rank of input data and the performance of random weight neural network. Neural Comput. Appl. 32(16), 12685–12696 (2020). https://doi.org/10.1007/s00521-020-04719-8

Chaturvedi, S., Mishra, V., Mishra, N.: Sentiment analysis using machine learning for business intelligence. In: 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), pp. 2162–2166. IEEE (2017)

Do, H.T.T., Huynh, H.D., Van Nguyen, K., Nguyen, N.L.T., Nguyen, A.G.T.: Hate speech detection on vietnamese social media text using the bidirectional-LSTM model. arXiv preprint arXiv:1911.03648 (2019)

Dos Santos, C., Gatti de Bayser, M.: Deep convolutional neural networks for sentiment analysis of short texts (2014)

Gai, K., Qiu, M.: Reinforcement learning-based content-centric services in mobile sensing. IEEE Network 32(4), 34–39 (2018)

Ho, V.A., et al.: Emotion recognition for Vietnamese social media text. In: Nguyen, L.-M., Phan, X.-H., Hasida, K., Tojo, S. (eds.) PACLING 2019. CCIS, vol. 1215, pp. 319–333. Springer, Singapore (2020). https://doi.org/10.1007/978-981-15-6168-9_27

Huynh, H.D., Do, H.T.T., Van Nguyen, K., Nguyen, N.L.T.: A simple and efficient ensemble classifier combining multiple neural network models on social media datasets in vietnamese. arXiv preprint arXiv:2009.13060 (2020)

Mai, L., Le, B.: Aspect-based sentiment analysis of Vietnamese texts with deep learning. In: Nguyen, N.T., Hoang, D.H., Hong, T.-P., Pham, H., Trawiński, B. (eds.) ACIIDS 2018. LNCS (LNAI), vol. 10751, pp. 149–158. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75417-8_14

Nguyen, H., Nguyen, T., Dang, T., Nguyen, N.: A corpus for aspect-based sentiment analysis in Vietnamese, pp. 1–5 (2019)

Nguyen, H., et al.: VLSP shared task: sentiment analysis. J. Comput. Sci. Cybern. 34, 295–310 (2019)

Nguyen, L.T., Van Nguyen, K., Nguyen, N.L.T.: Constructive and toxic speech detection for open-domain social media comments in vietnamese. arXiv preprint arXiv:2103.10069 (2021)

Nguyen, N.T.H., Phan, P.H.D., Nguyen, L.T., Van Nguyen, K., Nguyen, N.L.T.: Vietnamese open-domain complaint detection in e-commerce websites. arXiv preprint arXiv:2104.11969 (2021)

Pontiki, M., et al.: Semeval-2016 task 5: aspect based sentiment analysis. In: International Workshop on Semantic Evaluation, pp. 19–30 (2016)

Pontiki, M., Galanis, D., Papageorgiou, H., Manandhar, S., Androutsopoulos, I.: Semeval-2015 task 12: aspect based sentiment analysis. Proc. SemEval 2015, 486–495 (2015)

Pontiki, M., et al.: SemEval-2014 task 4: Aspect based sentiment analysis. In: Proceedings of SemEval 2014, pp. 27–35. Association for Computational Linguistics, Dublin, Ireland (2014)

Popescu, A.M., Etzioni, O.: Extracting product features and opinions from reviews. In: Kao A., Poteet S.R. (eds.) Natural language processing and text mining, pp. 9–28. Springer, London (2007) https://doi.org/10.1007/978-1-84628-754-1_2

Rish, I.: An empirical study of the naïve bayes classifier. In: IJCAI 2001 Work Empirical Methods in Artificial Intelligence, vol. 3 (2001)

Rogers, A., Kovaleva, O., Rumshisky, A.: A primer in bertology: What we know about how bert works. Trans. Assoc. Comput. Linguis. 8, 842–866 (2020)

Ruder, S., Ghaffari, P., Breslin, J.: A hierarchical model of reviews for aspect-based sentiment analysis. In: EMNLP (2016)

Ruder, S., Peters, M.E., Swayamdipta, S., Wolf, T.: Transfer learning in natural language processing, pp. 15–18 (2019)

Singh, S.M., Mishra, N.: Aspect based opinion mining for mobile phones. In: 2016 2nd International Conference on Next Generation Computing Technologies (NGCT), pp. 540–546 (2016)

Van Huynh, T., Nguyen, V.D., Van Nguyen, K., Nguyen, N.L.T., Nguyen, A.G.T.: Hate speech detection on vietnamese social media text using the Bi-GRU-LSTM-CNN model. arXiv preprint arXiv:1911.03644 (2019)

Van Nguyen, K., Nguyen, V.D., Nguyen, P.X., Truong, T.T., Nguyen, N.L.T.: UIT-VSFC: Vietnamese students’ feedback corpus for sentiment analysis. In: KSE 2018, pp. 19–24. IEEE (2018)

Yiran, Y., Srivastava, S.: Aspect-based sentiment analysis on mobile phone reviews with lda. In: Proceedings of the 2019 4th International Conference on Machine Learning Technologies, pp. 101–105 (2019)

Zhou, P., Qi, Z., Zheng, S., Xu, J., Bao, H., Xu, B.: Text classification improved by integrating bidirectional lstm with two-dimensional max pooling. Proc. COLING 2016, 3485–3495 (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Luc Phan, L. et al. (2021). SA2SL: From Aspect-Based Sentiment Analysis to Social Listening System for Business Intelligence. In: Qiu, H., Zhang, C., Fei, Z., Qiu, M., Kung, SY. (eds) Knowledge Science, Engineering and Management . KSEM 2021. Lecture Notes in Computer Science(), vol 12816. Springer, Cham. https://doi.org/10.1007/978-3-030-82147-0_53

Download citation

DOI: https://doi.org/10.1007/978-3-030-82147-0_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-82146-3

Online ISBN: 978-3-030-82147-0

eBook Packages: Computer ScienceComputer Science (R0)