Abstract

Many educators in higher education are using blended learning as an important component of their academic programs. Of the many forms of blended learning, the flipped learning approach has attracted much attention from universities: students learn course materials, typically through online video lectures, before class so that they can do more active learning in the classroom. Its actual implementation, however, is often beset with challenges, with student disengagement in pre-class online activities being one major problem reported in many previous flipped learning studies. Students who fail to complete the pre-class tasks often have difficulty participating in the follow-up in-class discussions with the instructors and peers. This study, which is part of a larger research project on engaging students in flipped learning, explored the use of two types of chatbots in flipped learning online sessions: a Quiz Chatbot and a Self-Regulated Learning Chatbot. We described in detail the implementations of the two chatbots, and evaluated the chatbots in terms of their perceived usefulness and perceived ease of use. We also examined the extent of student behavioral engagement with the chatbots. Suggestions to improve the chatbots were discussed, along with recommendations for future research.

1 Introduction

1.1 Background

Flipped learning refers to a pedagogical approach that combines pre-class online learning with face-to-face (F2F) learning. Pre-class preparations may take various forms including watching video lectures, taking short quizzes, and having discussions with fellow students (Lo et al. 2017). During the face-to-face sessions, students are expected to utilize their time to apply the knowledge learned through problem-based discussions and presentations (Bishop and Verleger 2013).

Although the flipped learning approach is not a panacea for all education ills, it appears to promote significantly higher student achievement compared to the traditional instructor lecture approach (Strelan et al. 2020; van Alten et al. 2019). However, it is important to note that the positive learning outcome afforded by flipped learning is only possible if all students come prepared to the face-to-face class sessions. If students fail to complete the pre-class learning activities, the instructor will have no choice but to re-teach the materials in class. This would render the entire flipped learning approach no different than a traditional lecture.

Many previous flipped learning studies have found a lack of student engagement in the pre-class activities to be a persistent and widespread problem. Diwanji et al. (2018), for instance, reported that only 27.7% of students often prepared for a class. In other studies, 39% of students reported that they did not do any preparation at all for the face-to-face classes (Sahin et al. 2015), and more than 70% of students skipped the pre-class learning activities (Palmer 2015). Some of the main reasons for not preparing for classes included a lack of motivation for doing the pre-class activities (Lo et al. 2017), and failing to understand the pre-class course content because students have difficulty communicating with the instructor when watching videos at home (Scott et al. 2016).

To engage students in the pre-class activities, most studies linked the completion of pre-class tasks to a portion of students’ final course grade (Diwanji et al. 2018; Elliott and Rob 2014). Students who complete the pre-class tasks (e.g., watch videos, answer quizzes) are awarded some marks, while those who ignore the tasks receive none. Yet, using “marks-for-task-completion” may not necessarily promote in-depth student thinking. Students may simply “play the game” of assessment by arbitrarily clicking on the quiz answers, or letting the videos play to completion without anyone actually watching them.

The purpose of the present study is to explore the use of chatbots in flipped learning online sessions. More specifically, this study is part of a larger research project that examines whether chatbots can be used as an innovative strategy to promote student engagement in the online pre-class activities of flipped learning. In this article, we focus on providing a thick description of the development of two chatbots, a quiz bot and a goal-setting bot, using a visual development tool, Dialogflow. We then evaluated the chatbots in terms of their perceived usefulness and perceived ease of use from the students’ perspectives. We also examined the extent of student engagement with the chatbots.

1.2 Chatbot

A chatbot is a computer-programmed conversational tool that interacts with users on a certain topic using natural language (Diwanji et al. 2018). A chatbot can be either a text-based or audio-based dialog system that facilitates human-computer interaction by asking or answering questions (Fryer et al. 2017), and it can prompt students to complete their assignments (McNeal 2016).

Chatbots have the potential to reduce the transactional distance that frequently arises between learners and instructors in an online learning space. According to Moore’s (1997) theory of transactional distance, there is a psychological and communication gap between the instructor and the learner in an online learning space, which creates room for potential misunderstanding. If transactional distance is reduced, learners are more likely to feel satisfied with their learning environment. Chatbots can help reduce the transactional distance by providing a dialogue through which the learner interacts with the course content. There is preliminary evidence that chatbots can motivate students to learn and keep them engaged in the learning process (Diwanji et al. 2018), and decrease students’ sense of isolation during online learning (Huang et al. 2019).

Despite the growing interest in chatbots, extant research is still in its infancy. Previous research has focused mainly on the use of chatbots in second or foreign language learning (Smutny and Schreiberova 2020), such as using a chatbot to practice vocabulary knowledge with learners (Kim 2018). The use of chatbots outside the domain of second or foreign language learning is limited. We do not know much about the use of chatbots to support other forms of online course activities, such as providing hints to quiz questions or providing goal-setting recommendations to learners.

Chatbot-Building Tool

In this study, Dialogflow from Google was employed to develop our chatbot activities. We decided to use Dialogflow for the following reasons. First, it is free and enables users to add chatbots to webpages. The university in the present study used the Moodle learning management system, which allows users to embed Dialogflow code in online activities. Second, the visual development dashboard of Dialogflow requires no computer coding knowledge, which allows non-programming users to design chatbots easily.

The chatbots in our study were rule-based, rather than AI-based. Rule-based bots answer user questions based on a pre-determined set of rules that are embedded into them, while AI bots are self-learning bots that are programmed with Natural Language Processing and Machine Learning (Joshi 2020). We chose to use a rule-based bot (rather than an AI bot) because a rule-based bot is much simpler to build especially for people who do not have an IT background, and is much faster to train (Joshi 2020). Although AI bots can self-learn from data, it usually takes a long time to train them. More importantly, the self-learning ability of AI bots is not always perfect – for example, AI bots can learn something they are not supposed to, and start to post offensive messages (Joshi 2020).

A chatbot taking the role of a learning partner, named Learning Buddy, was integrated into university students’ flipped online learning activities in two case studies. We developed two types of chatbot online activities in Learning Buddy: the Quiz Chatbot and the Self-Regulated Learning (SRL) Chatbot. The Quiz Chatbot offered students short quizzes and reinforced their understanding of the learning contents. The SRL Chatbot was designed to assist students in setting their own personal goals when attending a course. Both chatbots provided information in the form of conversations with students.

2 Case I: Quiz Chatbot

2.1 The Development of the Quiz Chatbot

We integrated the Quiz Chatbot into Moodle where students received the online learning materials before they came to class. Students were required to interact with the Quiz Chatbot to assess the main learning contents before attending a tutorial session. The learning topic was social media. The training utterances, including questions, intents, entities, and appropriate responses, were designed based on the learning topic and manually added to the Dialogflow system. Intents refer to the goals or motive the user has in mind when conversing with a Chatbot (McGrath 2017). For example, if a user types “show me yesterday’s weather temperature”, the user’s goal or motive is to retrieve information concerning the previous day’s weather. An entity modifies an intent (McGrath 2017). For example, if a user types “show me yesterday’s weather temperature”, the entities would be “yesterday”, “weather”, and “temperature”. The Dialogflow system was able to learn from the training utterances and capture students’ inputs during the conversation.
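The distinction between intents and entities described above can be illustrated with a minimal keyword-matching sketch in Python. This is not Dialogflow's API or its matching algorithm, and it is not the configuration used in the study; the names `INTENT_KEYWORDS`, `ENTITY_TERMS`, and `parse_utterance` are hypothetical and exist only for illustration.

```python
import re

# Hypothetical rule tables for illustration only; these are not Dialogflow objects.
INTENT_KEYWORDS = {
    "get_weather": {"weather", "temperature", "forecast"},
    "greeting": {"hello", "hi"},
}
ENTITY_TERMS = {"yesterday", "today", "tomorrow", "weather", "temperature"}


def parse_utterance(text):
    """Return (intent, entities) for a user utterance using simple keyword rules."""
    # Lowercase the text and keep alphabetic tokens only.
    tokens = re.findall(r"[a-z]+", text.lower())

    # Pick the intent whose keywords appear most often; otherwise fall back.
    best_intent, best_hits = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = sum(1 for token in tokens if token in keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits

    # Entities are the tokens that qualify the intent (time, quantity, ...).
    entities = [token for token in tokens if token in ENTITY_TERMS]
    return best_intent, entities


intent, entities = parse_utterance("show me yesterday’s weather temperature")
```

For the weather example in the text, this sketch assigns the `get_weather` intent and extracts the entities “yesterday”, “weather”, and “temperature”. An utterance that matches no keyword is routed to a fallback intent.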

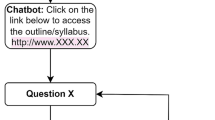

The Quiz Chatbot greeted students on the Moodle webpage via a live chat bubble (see Fig. 1). Next, the Quiz Chatbot presented three quizzes one after another. After students typed their answers, the Quiz Chatbot would evaluate students’ inputs and provide relevant feedback. We employed chit-chat between quizzes to create a friendly learning climate and encourage students to complete the quiz task. For example, the Quiz Chatbot asked students “is this quiz helpful in comprehending the definition of social media?” to show its concern for students’ learning process. If students indicated that the chatbot helped their understanding, the Quiz Chatbot would respond “Thanks, happy to know that!”; otherwise, the chatbot would explain “I’m still being developed, and your response can help me become better. Hope you can find the next quiz helpful”. Figure 2 shows the conversation flow between students and the Quiz Chatbot.

2.2 Data Collection and Analysis

Participants were students who enrolled in an undergraduate course at a public university in Hong Kong. We obtained ethical approval for this study from the university Institutional Review Board. The researcher introduced the chatbot activity and informed students of the study before the course. To evaluate students’ behavioral engagement, we collected conversation records between students and the Quiz Chatbot in the pre-class learning session. To investigate students’ perceived usefulness and ease of use of the Quiz Chatbot, a five-point scale questionnaire was used (adapted from Davis 1989, p. 340). Davis (1989) defined perceived ease of use as “the degree to which a person believes that using a particular system would be free of effort” (p. 320), whereas perceived usefulness refers to “the degree to which a person believes that using a particular system would enhance his or her job performance” (p. 320). The scale ranged from 1 (i.e., strongly disagree) to 5 (i.e., strongly agree). The questionnaire was administered to students during their pre-class learning. Students’ perceived usefulness was measured by five items, for example, “The Learning Buddy chatbot addressed my needs of the tutorial activity preparation”. Students’ perceived ease of use was measured by another five items, such as “I found it easy to use the chatbot to communicate” and “I found it easy to recover from errors encountered while using the chatbot”.

There were 56 students enrolled in this course, and 17 students voluntarily participated in our study and interacted with the Quiz Chatbot during their pre-class learning. Of the 17 students, 15 finished all quizzes through the chatbot. Therefore, we collected these 15 students’ chatbot conversation records and analyzed student-chatbot utterance turns, session length, and goal completion rate. The number of utterance turns, or conversation steps, refers to the number of back-and-forth exchanges between a chatbot and a user (Yao 2016). For example, if a chatbot says “hello” and the user replies “hello”, this is one utterance turn. Session length, also known as session duration or handle time, can be defined as the amount of time that elapses between the moment a user starts to converse with a chatbot and the moment they end the conversation (Mead 2019). Goal completion rate refers to how often the chatbot succeeds in achieving its purpose, which is to help learners complete the quizzes in this case. Table 1 shows the descriptive statistics of students’ behavioral engagement. The average number of utterance turns between students and the chatbot was 14.6, with a standard deviation of 3.48. Students spent an average of 4.73 min conversing with the Quiz Chatbot, with a standard deviation of 1.62 min. The goal completion rate was 88%.
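The three engagement metrics defined above can be computed from conversation logs along the following lines. This is a sketch under stated assumptions rather than the analysis procedure used in the study: the `Session` record and its fields are hypothetical, the sample sessions are invented for illustration, and the sample standard deviation is assumed because the text does not say which form was reported. Reading the goal completion rate as completers divided by participants is also an assumption, although 15 of 17 is consistent with the reported 88%.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Session:
    """One student's conversation with the chatbot (hypothetical record layout)."""
    turns: int        # number of back-and-forth exchanges
    minutes: float    # time between the first and the last message
    completed: bool   # whether the student finished all quizzes


def goal_completion_rate(completed, started):
    """Share of started conversations in which the chatbot achieved its purpose."""
    if started <= 0:
        raise ValueError("started must be positive")
    return completed / started


def summarize(sessions):
    """Descriptive statistics over completed sessions, plus the completion rate."""
    done = [s for s in sessions if s.completed]
    return {
        "mean_turns": mean(s.turns for s in done),
        "sd_turns": stdev(s.turns for s in done),      # sample SD (assumption)
        "mean_minutes": mean(s.minutes for s in done),
        "sd_minutes": stdev(s.minutes for s in done),  # sample SD (assumption)
        "goal_completion_rate": goal_completion_rate(len(done), len(sessions)),
    }


# Invented sessions for illustration; these are not the study's data.
example = [Session(14, 4.5, True), Session(16, 5.0, True), Session(3, 1.0, False)]
summary = summarize(example)

# Counts taken from the text: 15 of the 17 participants finished all quizzes.
rate_case_one = goal_completion_rate(15, 17)
```

Restricting the turn and duration statistics to completed sessions mirrors the text, which analyzed only the 15 students who finished all quizzes.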

A total of 7 students in this course completed the questionnaire. Table 2 shows the descriptive statistics of students’ perceived usefulness and ease of use of the Quiz Chatbot. Students interacting with the Quiz Chatbot reported relatively high perceived usefulness (Mean = 4, SD = .82), as indicated by item 5, “Overall, I found the chatbot was useful in my learning”. Students also reported high perceived ease of use of the Quiz Chatbot (Mean = 4, SD = .58), as indicated by the item “Overall, I found the chatbot easy to use”.

3 Case II: Self-regulated Learning Chatbot

3.1 The Development of Self-regulated Learning Chatbot

The Self-Regulated Learning (SRL) Chatbot helped students to set personal learning goals with regard to the course they attended. Students were required to interact with the SRL Chatbot on the Moodle webpage before coming to the first class. The SRL Chatbot engaged students with five goal-setting questions. For each question, potential options were offered to suggest directions for students’ self-regulated learning goals and expectations of the course. For example, before students attended the first lesson, the chatbot asked students “could you tell me what you want to gain most from this course?” followed by three options (Fig. 3). All students were given the opportunity to express their expectations. Based on the student’s answer to each question, the SRL Chatbot would provide relevant recommendations that suited each student’s preference. The options were intended to keep students with low self-regulation skills from feeling lost and overwhelmed when they were required to set a goal. Together with the recommendations after each choice, students could gain a better idea of how to achieve the particular goals they set for the course.

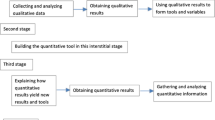

The instructor of this course participated in the SRL Chatbot design and development. Aligned with the course learning outcomes, the instructor worked out the goal-setting questions and relevant self-regulated learning recommendations. Then the chatbot developer categorized the data into intents, entities, and responses according to the Dialogflow system. The intents were pre-defined keywords of students’ inputs. The entities were the synonyms and misspellings of keywords for different intents. Recommendations for self-regulated learning strategies were fed into responses data. To minimise students’ off-topic replies, we labeled three potential options (A, B, or C) for each question posed. Figure 4 shows the flowchart for designing the SRL Chatbot. The SRL Chatbot was tested by the instructor and the developer before it was launched on Moodle.
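The mapping from labeled options to recommendations described above can be sketched as a small rule table in Python. This is an illustration only, not the study's Dialogflow configuration: the synonym sets, the recommendation texts, and the names `OPTION_SYNONYMS`, `RECOMMENDATIONS`, `FALLBACK`, and `respond` are all hypothetical, since the paper does not list the actual option wording.

```python
# Hypothetical rule tables; the study's real options and recommendations are not given.
OPTION_SYNONYMS = {
    "A": {"a", "option a", "knowledge", "knowlege"},   # includes a common misspelling
    "B": {"b", "option b", "skills", "skils"},
    "C": {"c", "option c", "grade", "grades"},
}
RECOMMENDATIONS = {
    "A": "Try summarizing each pre-class video in your own words.",
    "B": "Plan a short weekly practice task and review it after class.",
    "C": "Check the assessment rubric early and set a target for each task.",
}
FALLBACK = "Sorry, I did not catch that. Please reply with A, B, or C."


def respond(reply):
    """Map a student's reply to an option label and its recommendation."""
    normalized = reply.strip().lower()
    for option, variants in OPTION_SYNONYMS.items():
        if normalized in variants:
            return option, RECOMMENDATIONS[option]
    # Off-topic or unrecognized input: ask the student to pick a labeled option.
    return None, FALLBACK


option, message = respond(" B ")
```

Labeling the choices keeps the rule table small: each question needs only three entries plus their synonyms and misspellings, and anything else is handled by the fallback reply.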

3.2 Data Collection and Analysis

The participants were enrolled in another course at a public university in Hong Kong. The participants in Case II were different from the participants in Case I. Similar to Case I, we evaluated students’ behavioral engagement by measuring their conversation records with the SRL Chatbot (utterance turns, session length, goal completion rate). The goal completion rate in this case reflects how often the SRL Chatbot was successful in helping students set their personal goals. Students’ perceived usefulness and ease of use were examined by the same 5-point scale questionnaire used in Case I. The usefulness scale showed a high level of internal consistency, with a Cronbach’s alpha of 0.859. The Cronbach’s alpha for the perceived ease of use items was 0.742. We obtained ethical approval for this study from the university Institutional Review Board. An open-ended survey was conducted to obtain students’ opinions about the chatbot activity design. The open-ended survey mainly focused on (1) students’ perceived engagement factors, and (2) students’ suggestions to improve the chatbot.
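For readers unfamiliar with the internal consistency statistic reported above, the following Python sketch computes Cronbach’s alpha from a respondents-by-items table using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The paper does not state which software was used, and the ratings below are invented for illustration; they are not the study’s questionnaire data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item ratings."""
    k = len(item_scores[0])
    if k < 2 or len(item_scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*item_scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Invented ratings on a 5-point scale (4 respondents x 5 items), for illustration.
ratings = [
    [4, 4, 5, 4, 4],
    [3, 3, 4, 3, 3],
    [5, 4, 5, 5, 4],
    [2, 3, 3, 2, 3],
]
alpha = cronbach_alpha(ratings)
```

Alpha approaches 1 when respondents answer the items of a scale consistently, which is why higher values are read as stronger internal consistency.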

Students (n = 29) set their learning goals with the assistance of the SRL Chatbot before coming to the first class. The results of students’ behavioral engagement with the chatbot activity are shown in Table 3. Students interacted with the chatbot for an average of 7.97 turns, with a standard deviation of 1.36. The student-chatbot conversations lasted 4 min on average (SD = 2.65). The conversation records revealed that almost half of the students (n = 14) completed the goal-setting activity within 7 turns, followed by 8 students who talked to the chatbot within 8 utterance turns. Three students reached 10 turns (n = 2) or 13 turns (n = 1). After finishing the goal-setting task, students continued to talk with the chatbot to ask about other topics. For example, students asked the chatbot “Can you speak more words?” and “When is the assignment due?” The goal completion rate was 100%.

Sixteen students completed the questionnaire (Table 4). The mean of students’ perceived ease of use (M = 4.25, SD = .68) was slightly higher than that of the perceived usefulness of the SRL Chatbot (M = 3.94, SD = .68). Students reported that the SRL Chatbot was easy to communicate with. During the interaction, the SRL Chatbot performed as expected in helping students set personal goals.

We employed a grounded approach to analyze the open-ended survey, in which students’ responses were categorized into different themes inductively. Two researchers coded students’ responses independently. The inter-coder agreement was 90%. Disagreements were resolved through discussion between the two researchers.
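The text does not say which agreement statistic was used. Assuming simple percent agreement (the share of responses to which both coders assigned the same theme), the calculation can be sketched in Python as follows. The coded labels below are invented for illustration and only reuse the theme names reported in this paper.

```python
def percent_agreement(codes_a, codes_b):
    """Share of responses to which two coders assigned the same theme."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same non-empty set of responses")
    matches = sum(1 for a, b in zip(codes_a, codes_b) if a == b)
    return matches / len(codes_a)


# Invented codes for ten responses; the coders disagree on the last one only.
coder_1 = ["guidance", "interaction", "timely feedback", "engagement", "guidance",
           "personalization", "interaction", "guidance", "engagement", "interaction"]
coder_2 = ["guidance", "interaction", "timely feedback", "engagement", "guidance",
           "personalization", "interaction", "guidance", "engagement", "guidance"]
agreement = percent_agreement(coder_1, coder_2)
```

Percent agreement does not correct for agreement expected by chance; a chance-corrected statistic such as Cohen’s kappa would generally give a lower value for the same codes.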

The first open-ended question explored the various beneficial factors that students found related to the SRL Chatbot. The responses from 16 students indicated five factors: guidance, interaction, timely feedback, engagement, and personalization (see Fig. 5).

First, interaction was remarked on by 4 students, who were engaged by the SRL Chatbot through step-by-step conversation. Student B mentioned “I like chatbot because it directs me step by step in goal-setting”. Second, students found that the SRL Chatbot could help them understand how and when to set goals for a course. For example, Student H mentioned “I think the chatbot-assisted goal-setting activity can help me clarify my expectation for this course”. Third, conversing with the chatbot improved students’ motivation to set learning goals. Student N acknowledged “The chatbot is innovative”. Fourth, the SRL Chatbot provided prompt feedback during students’ goal-setting process, which “solved students’ problems in time” (Student D). Lastly, a few students believed the SRL Chatbot could “cater to diversity” (Student O) in individual goal setting, in that the chatbot provided personalized recommendations to students based on their answers.

The second open-ended question focused on students’ suggestions for future improvements of the current SRL Chatbot. We identified four directions for revising the chatbot activity: (a) richer recommendations, (b) more intelligence, (c) more interactive functions, and (d) long-term use (see Fig. 6). First, a total of 7 students suggested the chatbot could provide more “customized recommendation” (Student O). For each goal-setting question, we pre-defined three options (i.e., A, B, and C) to assist students in labeling their inputs. However, students expected “more options” (Student A and Student B) to be offered. Second, students mentioned the SRL Chatbot could be more intelligent by “answering faster” (Student J) and “chatting like Siri” (Student C). Third, more interactive functions could be added during the conversation. Student K mentioned using “emojis in the sentences” could help enhance the interaction between students and the chatbot. Lastly, 2 students expressed their willingness to continue using the SRL Chatbot for more sessions. Student E expected the chatbot “can help students setting a timetable and remind students about each assignment they should do in Moodle”.

4 Conclusion

This study, which is part of a larger research project on engaging students in flipped learning, explored the use of two types of chatbots in flipped learning online sessions: the Quiz Chatbot and the SRL Chatbot. We described in detail the implementations of the two chatbots, and evaluated the chatbots in terms of their perceived usefulness and perceived ease of use. We also examined the extent of student behavioral engagement with the chatbots.

Overall, we found positive user experiences with both the Quiz and SRL Chatbots with regard to the chatbots’ perceived usefulness and perceived ease of use. Perceived usefulness and perceived ease of use have a direct impact on people’s intentions to use an information tool or system (He et al. 2018). In other words, if a user feels that the chatbot enhances his or her learning, and that using the chatbot is free of effort, the user will be willing to use the tool. Analyses of the users’ conversation records with the Quiz and SRL Chatbots reveal that the average session length was approximately 4 min for both chatbots (4.73 min for the Quiz Chatbot and 4 min for the SRL Chatbot). Although the ideal average session length will differ based on the context of the conversation, the longer the session length, the better a chatbot is at creating an engaging conversation experience for the user (Phillips 2018). At this moment, we are unable to state what the optimal average session length should be. However, from the Case II instructor’s perspective, an average session length of 4 min is indicative of an engaging chatbot experience for the learners.

The average number of utterance turns was 14.6 for the Quiz Chatbot and 7.97 for the SRL Chatbot. The average for the Quiz Chatbot was higher than that for the SRL Chatbot, probably because learners tend to engage in more interactions when answering quiz questions (e.g., asking the chatbot questions and receiving its replies) than when setting their own personal goals with a chatbot. Both chatbots registered high goal completion rates (88% for the Quiz Chatbot, and 100% for the SRL Chatbot), which suggests that the two chatbots were successful in fulfilling the purposes they were created for.

For future research, we plan to implement the two chatbots with larger samples of participants involving other courses. Using larger sample sizes and other courses can help us generalize the findings to other contexts. We also plan to measure the performance of the chatbots using other metrics such as chatbot fallback rate. Chatbot fallback rate refers to the number of times a chatbot is not able to understand a user’s message and provide a relevant response (Phillips 2018). The lower the fallback rate, the higher will be the user satisfaction. In contrast, a high fallback rate means that more effort should be spent on training the chatbots.
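The fallback rate mentioned above could be computed from logged intent matches roughly as follows. This sketch expresses the metric as a proportion of user messages, which is an assumption, since the text defines it as a count. The `"fallback"` label and the example log are hypothetical and are not taken from Dialogflow or from the study.

```python
def fallback_rate(matched_intents, fallback_label="fallback"):
    """Proportion of user messages that the chatbot could not match to an intent."""
    if not matched_intents:
        raise ValueError("at least one user message is required")
    misses = sum(1 for intent in matched_intents if intent == fallback_label)
    return misses / len(matched_intents)


# Hypothetical log: the intent matched for each user message in one conversation.
log = ["quiz_answer", "fallback", "chitchat", "quiz_answer"]
rate = fallback_rate(log)
```

Tracking this value per question or per intent, rather than only per conversation, would show where additional training utterances are most needed.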

References

Lo, C.K., Hew, K.F., Chen, G.: Toward a set of design principles for mathematics flipped classrooms: a synthesis of research in mathematics education. Educ. Res. Rev. 22, 50–73 (2017)

Bishop, J., Verleger, M.A.: The flipped classroom: A survey of the research. Paper presented at 2013 ASEE Annual Conference & Exposition, Atlanta, Georgia (2013). https://peer.asee.org/22585

Strelan, P., Osborn, A., Palmer, E.: The flipped classroom: a meta-analysis of effects on student performance across disciplines and educational levels. Educ. Res. Rev. 30 (2020). https://doi.org/10.1016/j.edurev.2020.100314

van Alten, D.C.D., Phielix, C., Janssen, J., Kester, L.: Effects of flipping the classroom on learning outcomes and satisfaction: a meta-analysis. Educ. Res. Rev. 28 (2019). https://doi.org/10.1016/j.edurev.2019.05.003

Diwanji, P., Hinkelmann, K., Witschel, H.F.: Enhance classroom preparation for flipped classroom using AI and analytics. In: Proceedings of the 20th International Conference on Enterprise Information Systems, vol. 1, pp. 477–483 (2018)

Sahin, A., Cavlazoglu, B., Zeytuncu, Y.E.: Flipping a college calculus course: a case study. Educ. Technol. Soc. 18(3), 142–152 (2015)

Palmer, K.: Flipping a calculus class: one instructor’s experience. Primus 25(9–10), 886–891 (2015)

Scott, C.E., Green, L.E., Etheridge, D.L.: A comparison between flipped and lecture-based instruction in the calculus classroom. J. Appl. Res. Higher Educ. 8(2), 252–264 (2016)

Elliott, R.: Do students like the flipped classroom? An investigation of student reaction to a flipped undergraduate IT course. In: Proceedings of 2014 IEEE Frontiers in Education Conference (FIE), pp. 1–7 (2014)

Joshi, N.: Choosing between rule-based bots and AI bots. Forbes (2020). https://www.forbes.com/sites/cognitiveworld/2020/02/23/choosing-between-rule-based-bots-and-ai-bots/?sh=53a9ccb1353d

Fryer, L.K., Ainley, M., Thompson, A., Gibson, A., Sherlock, Z.: Stimulating and sustaining interest in a language course: an experimental comparison of chatbot and human task partners. Comput. Hum. Behav. 75, 461–468 (2017)

McNeal, M.: A Siri for higher ed aims to boost student engagement. EdSurge News (2016). https://www.edsurge.com/news/2016-12-07-a-siri-for-higher-ed-aims-to-boost-student-engagement

Moore, M.G.: Theory of transactional distance. In: Keegan, D. (ed.) Theoretical Principles of Distance Education, pp. 22–38. Routledge, New York (1997)

Huang, W., Hew, K.F., Gonda, D.E.: Designing and evaluating three chatbot-enhanced activities for a flipped graduate course. Int. J. Mech. Eng. Robot. Res. 8(5), 813–818 (2019)

Smutny, P., Schreiberova, P.: Chatbots for learning: a review of educational chatbots for the Facebook Messenger. Comput. Educ. 151 (2020). https://doi.org/10.1016/j.compedu.2020.103862

Kim, N.-Y.: A study on chatbots for developing Korean college students’ English listening and reading skills. J. Digit. Converg. 16(8), 19–26 (2018)

McGrath, C.: Chatbot vocabulary: 10 Chatbot terms you need to know. Chatbots Magazine (2017). https://chatbotsmagazine.com/chatbot-vocabulary-10-chatbot-terms-you-need-to-know-3911b1ef31b4

Davis, F.D.: Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13(3), 318–339 (1989)

Yao, M.: 5 metrics every chatbot developer needs to track. VentureBeat, The Machine (2016). https://venturebeat.com/2016/10/04/5-metrics-every-chatbot-developer-needs-to-track/

Mead, J.: What session length can tell you about your chatbot performance. Inf. Commun. (2019). https://inform-comms.com/session-length-chatbot-performance/

He, Y., Chen, Q., Kitkuakul, S.: Regulatory focus and technology acceptance: perceived ease of use and usefulness as efficacy. Cogent Bus. Manag. 5, 1 (2018). https://doi.org/10.1080/23311975.2018.1459006

Phillips, C.: Chatbot analytics 101: the essential metrics you need to track. Chatbots Magazine (2018). https://chatbotsmagazine.com/chatbot-analytics-101-e73ba7013f00

Acknowledgement

The research was supported by a Teaching Development Grant 2019 awarded to the first author at the University of Hong Kong.

© 2021 Springer Nature Switzerland AG

Cite this paper

Hew, K.F., Huang, W., Du, J., Jia, C. (2021). Using Chatbots in Flipped Learning Online Sessions: Perceived Usefulness and Ease of Use. In: Li, R., Cheung, S.K.S., Iwasaki, C., Kwok, LF., Kageto, M. (eds) Blended Learning: Re-thinking and Re-defining the Learning Process. ICBL 2021. Lecture Notes in Computer Science, vol 12830. Springer, Cham. https://doi.org/10.1007/978-3-030-80504-3_14

DOI: https://doi.org/10.1007/978-3-030-80504-3_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-80503-6

Online ISBN: 978-3-030-80504-3

eBook Packages: Computer Science, Computer Science (R0)