Abstract

Tsetlin Machines (TMs) capture patterns using conjunctive clauses in propositional logic, thus facilitating interpretation. However, recent TM-based approaches mainly rely on inspecting the full range of clauses individually. Such inspection does not necessarily scale to complex prediction problems that require a large number of clauses. In this paper, we propose closed-form expressions for understanding why a TM model makes a specific prediction (local interpretability). Additionally, the expressions capture the most important features of the model overall (global interpretability). We further introduce expressions for measuring the importance of feature value ranges for continuous features, making it possible to capture the role of features in real time as well as during the learning process as the model evolves. We compare our proposed approach against SHAP and state-of-the-art interpretable machine learning techniques.

1 Introduction

Motivations. Computational predictive modelling is becoming increasingly complicated in order to handle the deluge of high-dimensional data in machine learning and data science-driven industries. With rising complexity, not understanding why a model makes a particular prediction is becoming one of the most significant risks [7]. To overcome this risk and to give insights into how a model is making predictions given the underlying data, several efforts over the past few years have provided methodologies for explaining so-called black-box models (see Note 1). The purpose is to offer performance enhancements over more simplistic, but transparent and interpretable models, such as linear models, logistic regression, and decision trees. Prominent efforts include SHAP [4], LIME [9], and modifications or enhancements to neural networks, such as in Neural Additive Models [2]. Typical approaches either create post hoc approximations of how models make a decision or build local surrogate models. As such, they require additional computation time and are not intrinsic to the data modelling or learning itself.

Apart from increased trust, attaining interpretability in machine learning is essential for several reasons. For example, it can be useful when forging an analytical driver that provides insight into how a model may be improved, from both a feature standpoint and also a validation standpoint. It can also support understanding the model learning process and how the underlying data is supporting the prediction process. Additionally, interpretability can be used when reducing the dimensionality of the input features.

This paper introduces an alternative methodology for high-accuracy interpretable predictive modelling. Our goal is to combine competitive accuracy with closed-form expressions for both global and local interpretability, without requiring any additional local linear explanation layers or inference from any other surrogate models. That is, we intend to provide accessibility to feature strength insights that are intrinsic to the model, at any point during a learning phase. To this end, the methodology we propose enhances the intrinsic interpretability of the recently introduced Tsetlin Machines (TMs) [3], which have obtained competitive results in terms of accuracy, memory footprint, and learning speed, on diverse benchmarks.

Contributions. Interpretability in all of the above TM-based approaches relies on inspecting the full range of clauses individually. Such inspection does not necessarily scale to complex pattern recognition problems that require a large number of clauses, e.g., in the thousands. A principled interface for accessing different types of interpretability at various scales is thus currently missing. In this paper, we first introduce closed-form expressions for local and global TM interpretability. We formulate these expressions at both an overall feature importance level and a feature range level, namely, which ranges of the data yield the most influence in making predictions. Second, we evaluate performance on several industry-standard benchmark data sets, contrasting against other interpretable machine learning methodologies.

2 Tsetlin Machine Basics

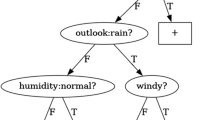

Classification. A TM takes a vector \(X=(x_1,\ldots ,x_o)\) of Boolean features as input (Fig. 1), to be classified into one of two classes, \(y=0\) or \(y=1\). Together with their negated counterparts, \(\bar{x}_k = \lnot x_k = 1-x_k\), the features form a literal set \(L = \{x_1,\ldots ,x_o,\bar{x}_1,\ldots ,\bar{x}_o\}\). In the following, we will use the notation \(I_j\) to refer to the indexes of the non-negated features in \(L_j\) and \(\bar{I}_j\) to refer to the indexes of the negated features.

A TM pattern is formulated as a conjunctive clause \(C_j\), formed by ANDing a subset \(L_j \subseteq L\) of the literal set:

\[C_j(X) = \bigwedge_{l_k \in L_j} l_k = \prod_{l_k \in L_j} l_k. \qquad (1)\]

E.g., the clause \(C_j(X) = x_1 \wedge x_2 = x_1 x_2\) consists of the literals \(L_j = \{x_1, x_2\}\) and outputs 1 iff \(x_1 = x_2 = 1\).
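As a minimal sketch of how such a clause can be evaluated, the snippet below ANDs the included literals of a single clause. The function name `clause_output`, the 0-based indexing, and the convention that an empty clause outputs 1 are assumptions made for illustration, not part of the original formulation.

```python
from typing import Sequence, Set


def clause_output(x: Sequence[int], included: Set[int], included_negated: Set[int]) -> int:
    """Evaluate one conjunctive clause on a Boolean input vector.

    x                -- Boolean features (0/1), indexed from 0 (assumption).
    included         -- indexes k whose literal x_k is part of the clause (I_j).
    included_negated -- indexes k whose negated literal is part of the clause (I-bar_j).
    """
    # Every non-negated literal must be 1 and every negated literal must see x_k = 0.
    plain_ok = all(x[k] == 1 for k in included)
    negated_ok = all(x[k] == 0 for k in included_negated)
    return int(plain_ok and negated_ok)


# The clause x_1 AND x_2 from the text, written with 0-based indexes {0, 1}.
both_on = clause_output([1, 1], {0, 1}, set())   # 1
one_off = clause_output([1, 0], {0, 1}, set())   # 0
```

Representing a clause by the two index sets mirrors the \(I_j\) and \(\bar{I}_j\) notation, which is also what the interpretability expressions later operate on.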

The number of clauses employed is a user-set parameter m. Half of the clauses are assigned positive polarity. The other half is assigned negative polarity. In this paper, we will indicate clauses with positive polarity with odd indices and negative polarity with even indices (see Note 2). The clause outputs, in turn, are combined into a classification decision through summation and thresholding using the unit step function \(u(v) = 1 ~\mathbf {if}~ v \ge 0 ~\mathbf {else}~ 0\):

\[\hat{y} = u\left( \sum_{j=1,3,\ldots }^{m-1} C_j(X) - \sum_{j=2,4,\ldots }^{m} C_j(X)\right). \qquad (2)\]

Namely, classification is performed based on a majority vote, with the positive clauses voting for \(y=1\) and the negative for \(y=0\). The classifier \(\hat{y} = u\left( x_1 \bar{x}_2 + \bar{x}_1 x_2 - x_1 x_2 - \bar{x}_1 \bar{x}_2\right) \), e.g., captures the XOR-relation.
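The XOR classifier above can be checked by brute force over all four inputs. The following sketch hard-codes the two positive and two negative clauses from the example; the function names are hypothetical.

```python
from itertools import product


def unit_step(v: int) -> int:
    """u(v) = 1 if v >= 0 else 0."""
    return 1 if v >= 0 else 0


def xor_classifier(x1: int, x2: int) -> int:
    """Majority vote of the four clauses given in the text."""
    n1, n2 = 1 - x1, 1 - x2                 # negated literals
    positive_votes = x1 * n2 + n1 * x2      # clauses voting for y = 1
    negative_votes = x1 * x2 + n1 * n2      # clauses voting for y = 0
    return unit_step(positive_votes - negative_votes)


# Truth table: the classifier output matches x1 XOR x2 for every input.
truth_table = {(x1, x2): xor_classifier(x1, x2) for x1, x2 in product((0, 1), repeat=2)}
```

Exactly one clause fires for each input, so the vote sum is either +1 or -1 and the threshold at zero separates the two classes.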

Learning. A clause \(C_j(X)\) is composed of a team of Tsetlin Automata [8], each Tsetlin Automaton deciding to Include or Exclude a specific literal \(l_k\) in the clause (see Fig. 1). Learning which literals to include is based on reinforcement: Type I feedback produces frequent patterns, while Type II feedback increases the discrimination power of the patterns.

A TM learns on-line, processing one training example (X, y) at a time.

Type I feedback is given stochastically to clauses with positive polarity when \(y=1\) and to clauses with negative polarity when \(y=0\). An affected clause, in turn, reinforces each of its Tsetlin Automata based on:

1. The clause output \(C_j(X)\);
2. The action of the targeted Tsetlin Automaton (Include or Exclude); and
3. The value of the literal \(l_k\) assigned to the automaton.

Two rules govern Type I feedback:

- Include is rewarded and Exclude is penalized with probability \(\frac{s-1}{s}~\mathbf {if}~C_j(X)=1~\mathbf {and}~l_k=1\). This reinforcement is strong (triggered with high probability) and makes the clause remember and refine the pattern it recognizes in X.
- Include is penalized and Exclude is rewarded with probability \(\frac{1}{s}~\mathbf {if}~C_j(X)=0~\mathbf {or}~l_k=0\). This reinforcement is weak (triggered with low probability) and coarsens infrequent patterns, making them frequent.

Above, s is a hyperparameter that controls the frequency of the patterns produced.

Type II feedback is given stochastically to clauses with positive polarity when \(y=0\) and to clauses with negative polarity when \(y=1\). It penalizes Exclude with probability 1 \(\mathbf {if}~C_j(X)=1~\mathbf {and}~l_k=0\). This feedback is strong and produces candidate literals for discriminating between \(y=0\) and \(y=1\).
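The feedback rules can be summarised as a small lookup, sketched below. This only tabulates which action is reinforced and with what probability; it does not model the automaton state itself. The function names and the dictionary layout are hypothetical, and treating cases not covered by the Type II rule as "no update" is an assumption of this sketch.

```python
from typing import Dict, Optional, Union

Feedback = Dict[str, Union[Optional[str], float]]


def type_i_feedback(clause_output: int, literal: int, s: float) -> Feedback:
    """Type I rules: which action is rewarded/penalized, and how often."""
    if clause_output == 1 and literal == 1:
        # Strong reinforcement: remember and refine the recognized pattern.
        return {"reward": "Include", "penalize": "Exclude", "probability": (s - 1) / s}
    # Weak reinforcement: C_j(X) = 0 or l_k = 0.
    return {"reward": "Exclude", "penalize": "Include", "probability": 1 / s}


def type_ii_feedback(clause_output: int, literal: int) -> Feedback:
    """Type II rule: penalize Exclude with probability 1 when C_j(X) = 1 and l_k = 0."""
    if clause_output == 1 and literal == 0:
        return {"reward": None, "penalize": "Exclude", "probability": 1.0}
    # Assumption: all other cases leave the automaton unchanged.
    return {"reward": None, "penalize": None, "probability": 0.0}


strong = type_i_feedback(1, 1, s=4.0)   # probability 0.75
weak = type_i_feedback(0, 1, s=4.0)     # probability 0.25
```

With \(s=4\), the strong rule triggers three times as often as the weak one, which illustrates how a larger s favours more specific (less frequent) patterns.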

Resource allocation dynamics ensure that clauses distribute themselves across the frequent patterns, rather than missing some and overconcentrating on others. That is, for any input X, the probability of reinforcing a clause gradually drops to zero as the clause output sum

\[v = \sum_{j=1,3,\ldots }^{m-1} C_j(X) - \sum_{j=2,4,\ldots }^{m} C_j(X) \qquad (3)\]

approaches a user-set target T for \(y=1\) (and \(-T\) for \(y=0\)). To exemplify, Fig. 1 plots the probability of reinforcing a clause when \(T=4\) for different clause output sums v, per class y. If a clause is not reinforced, it does not give feedback to its Tsetlin Automata, and these are thus left unchanged. In the extreme, when the voting sum v equals or exceeds the target T (the TM has successfully recognized the input X), no clauses are reinforced. Accordingly, they are free to learn new patterns, naturally balancing the pattern representation resources. See [3] for further details.
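The text only states the qualitative behaviour of the reinforcement probability. A minimal sketch, assuming the linear form commonly used in TM implementations (clip v to \([-T, T]\) and scale by \(2T\)), could look as follows; the function name is hypothetical and the exact form should be checked against [3].

```python
def clause_update_probability(v: int, y: int, T: int) -> float:
    """Probability that a clause receives feedback, given the vote sum v.

    Assumed linear form: the probability falls to zero as v reaches T for
    y = 1, and as v reaches -T for y = 0.
    """
    clipped = max(-T, min(T, v))        # keep the vote sum inside [-T, T]
    if y == 1:
        return (T - clipped) / (2 * T)
    return (T + clipped) / (2 * T)


# With T = 4 and y = 1: no feedback once v >= 4, certain feedback at v <= -4.
probabilities_y1 = [clause_update_probability(v, 1, 4) for v in range(-4, 5)]
```

The list `probabilities_y1` decreases linearly from 1.0 to 0.0, which corresponds to the kind of curve described for \(T=4\).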

3 Tsetlin Machine Interpretability

Because the TM represents patterns as self-contained conjunctive clauses in propositional logic, the method naturally leads to straightforward interpretability. However, as is the consensus in the machine learning literature, a model is fully interpretable only if it is possible to understand how the underlying data features impact the predictions of the model, both from a global perspective (entire model) and at the individual sample level. For example, a model that predicts housing prices in California should place much emphasis on specific latitude/longitude coordinates, as well as the age of the neighbouring houses (and perhaps other factors such as proximity to the beach). For individual sample data points, the model interpretation should show which features (such as house age or proximity to the beach) had the most positive (or negative) impact on the prediction. Before we present how closed-form expressions for both global and local interpretability can be derived intrinsically from any TM model, we first define some basic notation on the encoding of the features to a Boolean representation and feature inclusion sets, a critical first step in TM modeling.

3.1 Boolean Representation

We first assume that the input features to any TM can be booleanized into a bit-encoded representation. Each bit represents a Boolean variable that is either False or True (0 or 1). To map continuous values to a Boolean representation, we use the following encoding scheme. Let \(I_u = [a_u, b_u]\) be some interval on the real line representing a possible range of continuous values of feature \(f_u\). Consider \(l > 0\) unique values \(u_1, \ldots , u_l \in I_u\). We encode any \(v \in I_u\) into an l-bit representation in \(\{0,1\}^l\), where the ith bit is 1 if \(v \ge u_i\), and 0 otherwise. We denote the encoding of a value \(v \in I_u\) of a feature \(f_u\) as \(X_{f_u} := [x_1, \ldots , x_l]\), where \(x_i := (v \ge u_i)\). For example, suppose \(I_u =[0,10]\) with \(u_1 = 1, u_2 = 2, \ldots , u_{10} = 10\). Then \(v = 5.5\) is encoded as [1, 1, 1, 1, 1, 0, 0, 0, 0, 0], while any \(v < 1\) becomes [0, 0, 0, 0, 0, 0, 0, 0, 0, 0], and any \(v \ge 10\) becomes [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]. The number of thresholds \(l > 0\) and the values \(u_1, \ldots , u_l \in I_u\) should typically be chosen according to the properties of the empirical distribution of the underlying feature. If the values of feature \(f_u\) are relatively uniform across \(I_u\), then a uniform choice of \(u_1, \ldots , u_l \in I_u\) would seem appropriate. Otherwise, the grid should follow the underlying density of the data, with a finer grid near higher densities.
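The threshold encoding is short enough to write out directly. The sketch below reproduces the worked example for \(I_u = [0, 10]\) with thresholds \(1, \ldots , 10\); the function name `booleanize` is hypothetical.

```python
from typing import List, Sequence


def booleanize(v: float, thresholds: Sequence[float]) -> List[int]:
    """Encode a continuous value as one bit per threshold: bit i is 1 iff v >= u_i."""
    return [1 if v >= u else 0 for u in thresholds]


thresholds = list(range(1, 11))            # u_1 = 1, ..., u_10 = 10
encoded_mid = booleanize(5.5, thresholds)  # [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
encoded_low = booleanize(0.0, thresholds)  # all zeros
encoded_high = booleanize(10.0, thresholds)  # all ones
```

Because the thresholds are sorted, the encoding is a run of ones followed by a run of zeros, so a clause that includes \(x_i\) and the negation of \(x_{i'}\) with \(i < i'\) effectively selects the interval \(u_i \le v < u_{i'}\).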

3.2 Global Interpretability

Global interpretability is interested in understanding the most salient (important) features of the model, namely to what degree and strength a certain feature impacts predictions overall. We now introduce multiple output variables \(y^i \in \{y^1, y^2, \ldots , y^n\}\), with \(y^i \in \{0,1\}\), where the upper index i refers to a particular output variable. For simplicity in exposition, we assume that the corresponding literal index sets \(I^i_j, \bar{I}^i_j\), for each output variable, \(i = 1, \ldots , n\), and clause, \(j = 1, \ldots , m\), have been found under some performance criteria of the learning procedure, described in Sect. 2. With these sets fixed, we can now assemble the closed-form expressions.

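Global Feature Strength. For global feature strength we make direct use of the indexed inclusion sets \(I^i_j, \bar{I}^i_j\), which are governed by the actions of the Tsetlin Automata. Specifically, we compute positive (negative) feature strength for a given output variable \(y^i\) and kth bit of feature \(f_u\) as follows:

\[g[k] = \sum_{j \in \{1,3,\ldots ,m-1\}} \left( 1 ~\mathbf {if}~ k \in I^i_j ~\mathbf {else}~ 0\right) , \qquad \bar{g}[k] = \sum_{j \in \{1,3,\ldots ,m-1\}} \left( 1 ~\mathbf {if}~ k \in \bar{I}^i_j ~\mathbf {else}~ 0\right) . \qquad (4)\]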

In other words, for any given feature index \(k \in [1,\ldots ,o]\), the frequency of its inclusion across all clauses, restricted to positive polarity, governs its global importance score g[k]. This score thus reflects how often the feature is part of a pattern that is important to making a certain class prediction \(y^i\). Notice that we are only interested in indices pertaining to the positive polarity clauses \(C^i_j(X)\) since these are the clauses that contain references to features that are beneficial for predicting the ith class index.

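The g[k] and \(\bar{g}[k]\) scores give positive and negative importance at the bit level for each feature. To get the total strength for a feature \(f_u\) itself, we simply aggregate over each bit k based on Eq. 4, restricted to the given feature \(f_u\):

\[\phi (f_u) = \sum_{k \,:\, x_k \in X_{f_u}} g[k], \qquad \bar{\phi }(f_u) = \sum_{k \,:\, x_k \in X_{f_u}} \bar{g}[k]. \qquad (5)\]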

Above, \(X_{f_u}\) should be understood as the bit encoded features representing feature \(f_u\). The functions \(\phi (\cdot )\) accordingly measure the general importance of a feature when it comes to predicting a given class label \(y^i\). As we shall see empirically in the next section, this measure defines the most relevant features of a model.
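As a rough illustration of the counting described above, the sketch below tallies how often each bit index appears (plain or negated) in the positive-polarity clauses of one output, then sums those counts over the bits belonging to each feature. The clause representation as a pair of index sets, the 0-based indexing, and the toy feature names are all assumptions made for this example.

```python
from typing import Dict, List, Sequence, Set, Tuple

Clause = Tuple[Set[int], Set[int]]  # (I_j, I-bar_j) for one positive-polarity clause


def bit_strengths(positive_clauses: Sequence[Clause], n_bits: int) -> Tuple[List[int], List[int]]:
    """Count inclusions of each bit index as a plain (g) and negated (g_bar) literal."""
    g = [0] * n_bits
    g_bar = [0] * n_bits
    for included, included_negated in positive_clauses:
        for k in included:
            g[k] += 1
        for k in included_negated:
            g_bar[k] += 1
    return g, g_bar


def feature_strengths(scores: Sequence[int], feature_bits: Dict[str, Sequence[int]]) -> Dict[str, int]:
    """Aggregate bit-level scores over the bits that encode each feature."""
    return {name: sum(scores[k] for k in bits) for name, bits in feature_bits.items()}


# Toy model: 5 bits, where bits 0-2 encode "income" and bits 3-4 encode "age".
clauses = [({0, 1}, {3}), ({1}, {2, 3}), ({4}, set())]
layout = {"income": [0, 1, 2], "age": [3, 4]}
g, g_bar = bit_strengths(clauses, n_bits=5)   # g = [1, 2, 0, 0, 1], g_bar = [0, 0, 1, 2, 0]
phi = feature_strengths(g, layout)            # {"income": 3, "age": 1}
phi_bar = feature_strengths(g_bar, layout)    # {"income": 1, "age": 2}
```

Since the computation only reads the current inclusion sets, it can be rerun after any training update at negligible cost.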

Remark: Because the above expression can be trivially computed given any inclusion sets derived from the clauses, the feature importance can be observed in real time during the online training procedure of TMs, to see how the feature importance evolves with new, unseen training samples. Such a capability is not available in typical batch machine learning methodologies utilizing frameworks such as SHAP.

Global Continuous Feature Range Strength. One additional global feature importance representation that we can derive for continuous input feature ranges is measuring the importance of a feature in terms of ranges of values. To do this, we can make use of the inclusion of features and negated features to construct a mapping of the important ranges for a given class. Based on our Boolean representation of continuous features, we define the allowable range for \(f_u\) as follows:

Notice that according to the literal \(x_k\), \(\text{ max}_{k \in I^i_j} f_u(x_k)\), gives the largest \(u_k \in I_u\) such that \(v \ge u_k\) was an influential feature for an input v. Similarly, \(\text{ min}_{k \in \bar{I}^i_j} f_u(x_k)\) yields the smallest \(u_k \in I_u\) such that \(\lnot (v \ge u_k)\), or \(v < u_k\), was a relevant feature for \(y^i\).

We now know not only the most salient features in making a certain prediction, but also the range of influential values for every feature \(f_u\) given prediction class \(y^i\). Again, these can all be computed in real time during the learning of the model, giving full insight into the modeling procedure.
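The per-clause bounds described above (largest included threshold as lower bound, smallest negated threshold as upper bound) can be sketched as follows. This covers a single clause only; how the bounds are combined across clauses is not specified here and is deliberately left out. The function name, the fallback to the interval ends \(a_u, b_u\) when a clause has no matching literal, and the bit layout are assumptions.

```python
from typing import Sequence, Set, Tuple


def clause_range(
    included: Set[int],
    included_negated: Set[int],
    feature_bits: Sequence[int],
    thresholds: Sequence[float],
    lower: float,
    upper: float,
) -> Tuple[float, float]:
    """Interval (low, high) one clause implies for a feature: low <= v < high.

    feature_bits[i] is the global bit index whose threshold is thresholds[i].
    """
    # Included literals x_k assert v >= u_k; the largest such u_k is binding.
    lows = [u for k, u in zip(feature_bits, thresholds) if k in included]
    # Included negated literals assert v < u_k; the smallest such u_k is binding.
    highs = [u for k, u in zip(feature_bits, thresholds) if k in included_negated]
    # Assumption: fall back to the ends of I_u when no literal constrains a side.
    return (max(lows) if lows else lower, min(highs) if highs else upper)


bits = list(range(10))                          # bits 0..9 encode this feature
thresholds = [float(u) for u in range(1, 11)]   # u = 1.0, ..., 10.0
# Clause includes (v >= 3), (v >= 5) and NOT (v >= 8): implied range is 5 <= v < 8.
example_range = clause_range({2, 4}, {7}, bits, thresholds, 0.0, 10.0)
```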

3.3 Local Interpretability

Local interpretability is interested in understanding to what degree features positively (or negatively) impact individual predictions. Due to the transparent nature of the clause structures, it is relatively straightforward to extract the driving features of a given input sample. If we assume again the bit representation \(X = [x_1, x_2, \ldots , x_o ]\) is a concatenation of the bit representation of all features \(f_{u_j}\), and we suppose i is the predicted class index of X, then we define

which give positive importance at the bit level for each feature. To get the total predictive impact for each feature we again aggregate over the individual bits of the feature:

In other words, for a given input, all the combined clauses act as a lookup table of important features, from which the strength of each feature in a prediction can be determined.
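One plausible reading of the lookup-table idea is sketched below: only positive-polarity clauses of the predicted class that actually fire on the input contribute, and each contributes one count to every literal it includes. This is an illustrative assumption rather than a transcription of the local expressions; the function name and the clause representation are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Clause = Tuple[Set[int], Set[int]]  # (I_j, I-bar_j) for one positive-polarity clause


def local_bit_strengths(x: Sequence[int], positive_clauses: Sequence[Clause]) -> Tuple[List[int], List[int]]:
    """Count literal inclusions over the clauses that output 1 for input x."""
    plain = [0] * len(x)
    negated = [0] * len(x)
    for included, included_negated in positive_clauses:
        fires = all(x[k] == 1 for k in included) and all(x[k] == 0 for k in included_negated)
        if not fires:
            continue  # clauses that do not match x say nothing about this prediction
        for k in included:
            plain[k] += 1
        for k in included_negated:
            negated[k] += 1
    return plain, negated


x = [1, 1, 0, 0, 1]
clauses = [({0, 1}, {3}), ({1}, {2, 3}), ({2, 4}, set())]   # the last clause does not fire
plain, negated = local_bit_strengths(x, clauses)            # [1, 2, 0, 0, 0], [0, 0, 1, 2, 0]
```

Summing these bit-level counts over the bits of each feature then gives a per-feature score for the individual sample, in the same way as for the global strength.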

4 Empirical Evaluation and Applications

In order to evaluate the capability of our expressions for global and local TM interpretability, we will now consider two types of data sets: 1) Data sets which have an intuitive set of features which are already quite interpretable without model insight and 2) Data sets which will be more data driven, with features that are not necessarily understood by humans. We will further compare our results with other interpretable approaches to gain insight into the properties of our closed-form expressions. We further demonstrate that the TM can compete accuracy-wise with black-box methods, while simultaneously remaining relatively transparent. We will be using the Integer Weighted Tsetlin Machine (IWTM) proposed in [1], a recent extension of the classical TM summarized in Sect. 2 that has been shown to provide superior performance in both accuracy and training time by inducing weights on the clause structures. More details on motivation, formulation, and empirical studies are given in [1].

4.1 Comparison with SHAP

We first compare our feature strength scores, as defined in Eq. 5 and Eq. 6, with SHapley Additive exPlanations (SHAP) values, a popular methodology for explaining black-box models, considered as state-of-the-art. First published in 2017 by Lundberg and Lee [4], the approach attempts to “reverse-engineer” the output of any predictive algorithm by assigning each feature an importance value for a particular prediction. As it is first and foremost concerned with the local interpretability of a model, global interpretability of the SHAP approach can be viewed at the level of visualizing all the samples in the form of a SHAP value (impact on model output) by feature value (from low to high).

We use the Wisconsin Breast Cancer data set to compare the two approaches. This data set contains 30 different features extracted from an image of a fine needle aspirate (FNA) of a breast mass. These features represent different physical characteristics like the concavity, texture, or symmetry computed for each cell with various descriptive statistics such as the mean, standard error and “worst” (mean of the three largest values). Each sample is labelled according to the diagnosis, benign or malignant.

Before comparing the results on the most influential features, we compare the performance metrics of the IWTMs with XGBoost. Table 1 shows the comparison in terms of mean and variance on accuracy and error types where the models were constructed 10 times each, with a training set of 70% randomly chosen samples. We see that the IWTM clearly competes in performance with XGBoost, albeit with a much higher variance in performance.

In the SHAP summary plot shown in Fig. 2, we observe that the most important features for the XGBoost model are perimeter_worst, concave points_mean, concave points_worst, texture_worst and area_se among others. Taking into account the feature value and impact on model output, the most impactful features for making a prediction tend to be perimeter_worst, concave points_worst, area_se, and area_worst, whereas texture_worst and concave points_mean prove to be the most effective in their lower value range.

Figure 2 also shows the corresponding results for the TM, providing the strength of the features when predicting benign cells, calculated based on Eq. 5. We can see that concave points_mean, concave points_worst, texture_worst, area_se, and concavity_worst seem to be the strongest contributors. Apart from feature perimeter_worst being the most effective for SHAP, it can clearly be seen that both methods yield very similar global feature strengths. Nearly all of the top 10 features in both sets agree in relative strength.

Similarly, the scores of the most relevant features for the benign class are shown in Fig. 3. As seen, concave points_mean, concave points_worst, and area_worst have a non-trivial impact.

Finally, Fig. 3 also reports the global importance for the negated features, calculated using \(\bar{\phi }(f_u)\) in Eq. 5 for the top features \(f_u\). In essence, the strength of these features shows that, in general, the inclusion of their negated feature ranges had a positive impact on predicting the malignant class of cells.

4.2 Comparison with Neural Additive Models

Recently introduced in [2], Neural Additive Models offer a novel approach to general additive models. In all brevity, the goal is to learn a linear combination of simple neural networks that each model a single input feature. Being trained jointly, they learn arbitrarily complex relationships between input features and outputs. In this section, we compare IWTM models to NAMs both in terms of AUC and interpretability. For convenience, we also show the performance against several other popular black-box type machine learning approaches.

We first investigate two classification tasks, continuing with a regression task to contrast interpretability against NAMs. All of the performance results, except for those associated with the IWTMs, are adapted directly from [2].

Credit Fraud. In this task, we wish to predict whether a certain credit card transaction is fraudulent or not. To learn such fraudulent transactions, we use the data set found in [6] containing 284,807 transactions made by European credit cardholders. The data set is highly unbalanced, containing only 492 frauds (0.172% of all transactions).

The training and testing strategy is to sample 60% of the true positives of fraudulent transactions, and then randomly select an equal number of non-fraudulent transactions from the remaining 284k+ transactions. Testing is then done on the remaining fraudulent transactions.
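A minimal sketch of this sampling strategy, operating on label indexes only, is given below. The function name, the fixed seed, and the use of rounding for the 60% cut are assumptions; the sketch returns only the held-out fraudulent indexes as the test set, following the description above.

```python
import random
from typing import List, Sequence, Tuple


def balanced_split(labels: Sequence[int], train_fraction: float = 0.6, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_indexes, test_fraud_indexes) for a binary fraud label vector."""
    rng = random.Random(seed)
    fraud = [i for i, y in enumerate(labels) if y == 1]
    legit = [i for i, y in enumerate(labels) if y == 0]
    rng.shuffle(fraud)
    n_train = int(round(train_fraction * len(fraud)))
    train_fraud, test_fraud = fraud[:n_train], fraud[n_train:]
    # Undersample the majority class to match the number of training frauds.
    train_legit = rng.sample(legit, n_train)
    return sorted(train_fraud + train_legit), sorted(test_fraud)


# Toy label vector: 10 frauds among 110 transactions.
labels = [1] * 10 + [0] * 100
train_idx, test_idx = balanced_split(labels)
```

On the toy vector this yields 12 training indexes (6 fraudulent, 6 non-fraudulent) and 4 held-out fraudulent indexes.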

The globally important features for the fraudulent class of transactions are shown in Fig. 4. With the exception of “time from first transaction” and “transaction amount”, there is unfortunately no reference to other feature names due to confidentiality. Thus, we cannot interpret features meaningfully. However, we can analyze how the ranges of the features vary according to predicting fraudulent or non-fraudulent transactions.

Note that all plots concerning ranges have had the feature values normalized such that the minimal value for that feature is 0.0 and the maximal value is 1.0. We will use blue-colored bars to represent where the range with the most impact for the given class is located.

In applying the feature range expression from Eq. 6 to a batch of non-fraudulent samples, we see from Fig. 5 that the “time from first transaction” feature, while one of the most important, is important in its entire range for both classes of transactions. Namely, there is no range that sways the model to predict either fraudulent or non-fraudulent. This is in accordance with the NAM [2], where the corresponding graph depicts no sway of contribution into either class for the entire range of values.

The “transaction amount” feature can also be compared with NAMs, again considering feature value ranges. In Fig. 5, we see that the most relevant range is from around 0 to 50 for the non-fraudulent class, but for the fraudulent transaction class the most salient range shrinks to about 0–25. Indeed, for most of the other V-features, the value ranges tend to shrink, in particular for the higher value ranges. We can see that even though the visual representations of the model outputs in terms of feature and range importance are quite different, they can still be traced to yield similar conclusions.

In terms of performance compared with six other approaches, Table 2 shows IWTMs have yielded competitive results with those used in [2] (see Note 3).

For a more in-depth study on IWTMs comparing with other methods on benchmark classification data sets, please refer to [1].

California Housing Price Data Set. In our last example, we investigate performance and interpretability on a regression task, using the California Housing data set. This data set appears in [5] where it is used to explore important features from a regression on housing price averages, offering features that are intrinsically interpretable and easy to validate in how they contribute to predictions. The data comprises one row per census block group, where a block group is the smallest geographical unit for which the U.S. Census Bureau publishes sample data, with a population typically between 600 and 3,000 people. The data set suggests we can derive and understand the influence of community characteristics on housing prices by predicting the median price of houses (in million dollars) in each California district.

We include results on both global and local interpretability, while also including results on influential ranges conditional on three tiers of prediction of housing prices (low, medium, high).

The global feature importance diagram measures the importance of each feature by how often it appears as a positive contribution in the clauses, per Eq. 5. In summary, the diagram indicates that both median income and house location are the most influential features for predicting housing prices. This also conforms with the features derived by the NAM approach in [2].

We now investigate local interpretability using a specific housing price instance. For our example, we take a geographical region in the scenic hill area just east of Berkeley, known for its high real estate prices. Because the region lies in an affluent Bay Area zip code, we expect that, when a high housing price is predicted, both median income and location are the main drivers.

Applying the local interpretability expression from Eq. 8 to this specific sample, we see that the top features concur with the assumption on the particular real estate area (Fig. 6). The location is sparsely populated with smaller occupancy per home, but hugely driven by the large median income and the Bay Area zip code. We also see that latitude achieves a markedly higher influence than longitude for this example, in comparison with their global importance, which could be due to the fact that this particular latitude is aligned with northern California in the Bay Area and thus has more informative content to provide for a high housing cost prediction. Furthermore, housing age and number of bedrooms for this region in the Bay Area also seem to provide more information in making a prediction than longitude. This clearly exhibits how a local explanation can differ from a general global explanation of the model feature importance.

More insight can be gained by examining the feature value ranges that are important for certain levels of housing price predictions. Using the range importance expression Eq. 6, we look at some typical ranges of values that are attributed to lower predictions of the housing price. In Fig. 7, we note that median income is low for this class of housing prices, while latitude and longitude values take on nearly the entire range of values. On the other hand, average number of rooms does not seem to be a factor. We now apply the same approach to mid-tiered housing prices. Here we expect to see higher median income and maybe some changes in the location to accommodate more rural housing prices. In general, nearly all ranges of values for most features are important, with the exception of median income, which takes on the upper 70% of values. Further, the number of bedrooms plays a larger part in the prediction. Lower population seems to have a positive impact as well, accounting for those rural census blocks.

Considering regression error, we compare techniques by means of average RMSE, obtaining the one-standard deviation figures via 5-fold cross validation. Table 3 shows the results compared with those from [2], Sect. 3.2.1.
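For readers who want to reproduce this kind of figure, the sketch below computes the mean and standard deviation of RMSE over k folds. It is not the evaluation code used for Table 3: the interleaved fold assignment, the use of the sample standard deviation, and the trivial mean predictor standing in for a real model are all assumptions, and every name is hypothetical.

```python
import math
import statistics
from typing import Callable, List, Sequence, Tuple

Predictor = Callable[[float], float]


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error between two equally long sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def kfold_rmse(
    xs: Sequence[float],
    ys: Sequence[float],
    fit: Callable[[List[float], List[float]], Predictor],
    k: int = 5,
) -> Tuple[float, float]:
    """Mean and sample standard deviation of RMSE over k interleaved folds."""
    n = len(ys)
    scores = []
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        predict = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        preds = [predict(xs[i]) for i in test_idx]
        scores.append(rmse([ys[i] for i in test_idx], preds))
    return statistics.mean(scores), statistics.stdev(scores)


def fit_mean(train_x: List[float], train_y: List[float]) -> Predictor:
    """Stand-in model: always predicts the training mean."""
    mean_y = statistics.mean(train_y)
    return lambda x: mean_y


xs = [float(i) for i in range(20)]
ys = [2.0] * 20                       # constant target, so the mean predictor is exact
mean_rmse, std_rmse = kfold_rmse(xs, ys, fit_mean, k=5)
```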

We see that IWTMs perform similarly to the other techniques, but are outperformed by the DNNs. This can be explained by the low number of clauses employed, making it more difficult to exploit the large number of samples (20k), which gives the DNN (see Note 4) an advantage.

5 Conclusion

In this paper, we proposed closed-form expressions for understanding why a TM model makes a specific prediction and capturing the most important features of the model overall (global interpretability). We further introduced expressions for measuring the importance of feature value ranges for continuous features. The clause structures that we investigated revealed interpretable insights about high-dimensional data, without the use of additional post hoc methods or additive approaches. Upon comparing the interpretability results to a staple in machine learning interpretability such as SHAP and a more recent novel interpretable extension to neural networks in NAMs, we observed that the TM-based interpretability yielded similar insights. Furthermore, we validated the approach of range importance on California housing data which offer human interpretable features.

Current and future work focuses on developing closed-form expressions for unsupervised dimension reduction and clustering in high-dimensional data, along with further adapting the approach for learning on both time-series data and images.

Notes

1. We will understand black-box models as models which lack intrinsic interpretability features, such as ensemble approaches, neural networks, and random forests.

2. Any systematic division of clauses can be used as long as the cardinality of the positive and negative polarity sets are equal.

3. The choice of hyperparameters of the IWTM can be summarized as picking the number of clauses randomly three times, between 50 and 500 clauses, with a threshold of twice the number of clauses. The best model in terms of accuracy was chosen of the three configurations.

4. DNN with 10 hidden layers containing 100 units each with ReLU activation and Adam optimizer.

References

1. Abeyrathna, K.D., Granmo, O.C., Goodwin, M.: Extending the Tsetlin Machine with integer-weighted clauses for increased interpretability. IEEE Access 9, 8233–8248 (2020)

2. Agarwal, R., Frosst, N., Zhang, X., Caruana, R., Hinton, G.E.: Neural additive models: interpretable machine learning with neural nets (2020)

3. Granmo, O.C.: The Tsetlin Machine - a game theoretic bandit driven approach to optimal pattern recognition with propositional logic (2018). https://arxiv.org/abs/1804.01508

4. Lundberg, S., Lee, S.I.: A unified approach to interpreting model predictions (2017)

5. Pace, K., Barry, R.: Sparse spatial autoregressions. Stat. Probab. Lett. 33(3), 291–297 (1997)

6. Pozzolo, A.D., Bontempi, G.: Adaptive machine learning for credit card fraud detection (2015)

7. Rudin, C.: Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1(5), 206–215 (2019)

8. Tsetlin, M.L.: On behaviour of finite automata in random medium. Avtomat. i Telemekh 22(10), 1345–1354 (1961)

9. Tulio Ribeiro, M., Singh, S., Guestrin, C.: “Why should I trust you?”: explaining the predictions of any classifier. arXiv e-prints arXiv:1602.04938 (February 2016)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Blakely, C.D., Granmo, OC. (2021). Closed-Form Expressions for Global and Local Interpretation of Tsetlin Machines. In: Fujita, H., Selamat, A., Lin, J.CW., Ali, M. (eds) Advances and Trends in Artificial Intelligence. Artificial Intelligence Practices. IEA/AIE 2021. Lecture Notes in Computer Science, vol 12798. Springer, Cham. https://doi.org/10.1007/978-3-030-79457-6_14

DOI: https://doi.org/10.1007/978-3-030-79457-6_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-79456-9

Online ISBN: 978-3-030-79457-6

eBook Packages: Computer Science, Computer Science (R0)