Abstract

We present a model-agnostic post-processing scheme to improve the boundary quality for the segmentation result that is generated by any existing segmentation model. Motivated by the empirical observation that the label predictions of interior pixels are more reliable, we propose to replace the originally unreliable predictions of boundary pixels by the predictions of interior pixels. Our approach processes only the input image through two steps: (i) localize the boundary pixels and (ii) identify the corresponding interior pixel for each boundary pixel. We build the correspondence by learning a direction away from the boundary pixel to an interior pixel. Our method requires no prior information of the segmentation models and achieves nearly real-time speed. We empirically verify that our SegFix consistently reduces the boundary errors for segmentation results generated from various state-of-the-art models on Cityscapes, ADE20K and GTA5. Code is available at: https://github.com/openseg-group/openseg.pytorch.

Y. Yuan and J. Xie—Equal contribution.



1 Introduction

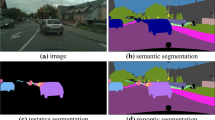

The task of semantic segmentation is formulated as predicting the semantic category of each pixel in an image. Building on the pioneering fully convolutional network [46], previous studies have achieved great success, as reflected by steadily increasing performance on various challenging semantic segmentation benchmarks [7, 16, 68].

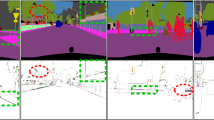

Fig. 1. Qualitative analysis of the segmentation error maps. The 1\(^\mathrm{st}\) column presents the ground-truth segmentation maps, and the \(2^\mathrm{nd}/3^\mathrm{rd}/4^\mathrm{th}\) columns present the error maps of DeepLabv3/HRNet/Gated-SCNN respectively. These examples are cropped from the Cityscapes val set. We can see that all three methods make many errors along the thin boundary.

Most existing works address semantic segmentation through (i) increasing the resolution of feature maps [12, 13, 54], (ii) constructing more reliable context information [23, 27, 28, 39, 62, 63, 64, 65] and (iii) exploiting boundary information [5, 9, 44, 55]. In this work, we follow the \(3^\mathrm{rd}\) line of work and focus on improving the segmentation result on the pixels located within the thin boundary (see Note 1) via an effective model-agnostic boundary refinement mechanism.

Our work is mainly motivated by the observation that most existing state-of-the-art segmentation models make many erroneous predictions along the boundary. We illustrate some examples of the segmentation error maps of DeepLabv3 [12], Gated-SCNN [55] and HRNet [54] in Fig. 1. More specifically, we show the statistics of the number of error pixels vs. the distance to the object boundaries in Fig. 2. We can observe that, for all three methods, the number of error pixels decreases significantly as the distance to the boundary grows. In other words, predictions of the interior pixels are more reliable.

We propose a novel model-agnostic post-processing mechanism to reduce boundary errors by replacing the labels of boundary pixels with the labels of corresponding interior pixels in a segmentation result. We estimate the pixel correspondences by processing the input image (without exploring the segmentation result) in two steps. The first step aims to localize the pixels along the object boundaries. We follow the contour detection methods [2, 4, 21] and simply use a convolutional network to predict a binary mask indicating the boundary pixels. In the second step, we learn a direction pointing away from the boundary pixel to an interior pixel and identify the corresponding interior pixel by moving from the boundary pixel along this direction by a certain distance. Notably, SegFix reaches nearly real-time speed with high-resolution inputs.

Fig. 2. Histogram statistics of errors: the number of error pixels vs. their (Euclidean) distances to the boundaries on Cityscapes val based on DeepLabv3/HRNet/Gated-SCNN. We can see that pixels at a larger distance are more likely to be classified correctly, and that many errors are distributed within a width of \(\sim 5\) pixels along the boundary.

Our SegFix is a general scheme that consistently improves the performance of various segmentation models across multiple benchmarks without any prior information. We evaluate the effectiveness of SegFix on multiple semantic segmentation benchmarks including Cityscapes, ADE20K and GTA5. We also extend SegFix to the instance segmentation task on Cityscapes. According to the Cityscapes leaderboard, “HRNet + OCR + SegFix” and “PolyTransform + SegFix” achieve \(84.5\%\) and \(41.2\%\), ranking \(1^\mathrm{st}\) and \(2^\mathrm{nd}\) on the semantic and instance segmentation tracks respectively as of the ECCV 2020 submission deadline.

2 Related Work

Distance/Direction Map for Segmentation: Some recent work [3, 25, 56] performed distance transform to compute distance maps for the instance segmentation task. For example, [3, 25] proposed to train the model to predict the truncated distance maps within each cropped instance. Other work [6, 10, 17, 51] proposed to regularize the semantic or instance segmentation predictions with a distance map or direction map in a multi-task mechanism. The key difference from the above work is that our approach does not perform any segmentation prediction; instead, it predicts the direction map from only the image, and then we refine the segmentation results of existing approaches.

Level Set for Segmentation: Many previous efforts [8, 31, 50] used the level set approach to address the semantic segmentation problem before the era of deep learning. The most popular formulation of the level set is the signed distance function, with all the zero values corresponding to predicted boundary positions. Recent work [1, 14, 33, 56] extended the conventional level-set scheme to deep networks for regularizing the boundaries of the predicted segmentation map. Instead of representing the boundary with a level set function directly, we implicitly encode the relative distance information of the boundary pixels with a boundary map and a direction map.

DenseCRF for Segmentation: Previous work [11, 41, 58, 67] improved their segmentation results with DenseCRF [36]. Our approach is also a general post-processing scheme, while being simpler and more efficient to use. We empirically show that our approach not only outperforms DenseCRF but is also complementary to it.

Refinement for Segmentation: Extensive studies [22, 24, 29, 37, 38] have proposed various mechanisms to refine the segmentation maps from coarse to fine. Different from most of the existing refinement approaches that depend on the segmentation models, to the best of our knowledge, our approach is the first model-agnostic segmentation refinement mechanism that can be applied to refine the segmentation results of any approach without any prior information.

Fig. 3. Illustrating the SegFix framework: In the training stage, we first send the input image into a backbone to predict a feature map. Then we apply a boundary branch to predict a binary boundary map and a direction branch to predict a direction map, which is masked with the binary boundary map. We apply the boundary loss and the direction loss on the predicted boundary map and direction map respectively. In the testing stage, we first convert the direction map to an offset map and then refine the segmentation results of any existing method according to the offset map.

Boundary for Segmentation: Some previous efforts [1, 45, 59, 60] focused on localizing semantic boundaries. Other studies [5, 18,19,20, 32, 43, 44, 55] also exploited the boundary information to improve the segmentation. For example, BNF [5] introduced a global energy model to consider the pairwise pixel affinities based on the boundary predictions. Gated-SCNN [55] exploited the duality between the segmentation predictions and the boundary predictions with a two-branch mechanism and a regularizer.

These methods [5, 18, 32, 55] are highly dependent on the segmentation models and require careful re-training or fine-tuning. In contrast, SegFix performs neither segmentation prediction nor feature propagation; instead, we refine the segmentation maps with an offset map directly. In other words, we only need to train a single unified SegFix model once, without any further fine-tuning for the different segmentation models (across multiple different datasets). We also empirically verify that our approach is complementary to the above methods, e.g., Gated-SCNN [55] and Boundary-Aware Feature Propagation [18].

Guided Up-Sampling Network: The recent work [47, 48] performed a segmentation guided offset scheme to address boundary errors caused by the bi-linear up-sampling. The main difference is that they do not apply any explicit supervision on their offset maps and require re-training for different models, while we apply explicit semantic-aware supervision on the offset maps and our offset maps can be applied to various approaches directly without any re-training. We also empirically verify the advantages of our approach.

3 Approach

3.1 Framework

The overall pipeline of SegFix is illustrated in Fig. 3. We first train a model to pick out boundary pixels (with the boundary maps) and estimate their corresponding interior pixels (with offsets derived from the direction maps) from only the image. We do not perform segmentation directly during training. We apply this model to generate offset maps from the images and use the offsets to find the corresponding pixels, which should mostly be the more confident interior pixels, and thereby refine the segmentation results of any segmentation model. We mainly describe the SegFix scheme for semantic segmentation and give the details for instance segmentation in Appendix E.

Training Stage. Given an input image \(\mathbf {I}\) of shape \(H\times W\times 3\), we first use a backbone network to extract a feature map \(\mathbf {X}\), and then send \(\mathbf {X}\) in parallel to (1) the boundary branch to predict a binary map \(\mathbf {B}\), with 1 for the boundary pixels and 0 for the interior pixels, and (2) the direction branch to predict a direction map \(\mathbf {D}\) with each element storing the direction pointing from the boundary pixel to the interior pixel. The direction map \(\mathbf {D}\) is then masked by the binary map \(\mathbf {B}\) to yield the input for the offset branch.

For model training, we use a binary cross-entropy loss as the boundary loss on \(\mathbf {B}\) and a categorical cross-entropy loss as the direction loss on \(\mathbf {D}\) respectively.

Testing Stage. Based on the predicted boundary map \(\mathbf {B}\) and direction map \(\mathbf {D}\), we apply the offset branch to generate an offset map \(\varDelta {\mathbf {Q}}\). A coarse label map \({\mathbf {L}}\) output by any semantic segmentation model will be refined as:

\[\widetilde{\mathbf {L}}_{\mathbf {p}_i} = \mathbf {L}_{\mathbf {p}_i+\varDelta {\mathbf {q}_i}},\]

where \(\widetilde{\mathbf {L}}\) is the refined label map, \(\mathbf {p}_i\) represents the coordinate of the boundary pixel i, and \(\varDelta {\mathbf {q}_i}\) is the generated offset vector pointing to an interior pixel, which is an element of \(\varDelta {\mathbf {Q}}\). \(\mathbf {p}_i+\varDelta {\mathbf {q}_i}\) is the position of the identified interior pixel.

Considering that there might be some “fake” interior pixels (see Note 2) when the boundary is thick, we propose two different schemes as follows: (i) re-scaling all the offsets by a factor, e.g., 2; (ii) iteratively applying the offsets (of the “fake” interior pixels) until an interior pixel is found. We choose (i) by default for simplicity, as their performance is close.
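The refinement step described above (each boundary pixel takes the label found at its offset position, optionally with re-scaled offsets as in scheme (i)) can be sketched in NumPy as follows. This is a minimal illustration, not the released implementation: the function name `refine_labels`, the `(dx, dy)` ordering of the offsets in array coordinates, and the clipping at the image border are assumptions made for this example.

```python
import numpy as np

def refine_labels(coarse, boundary, offsets, scale=1):
    """Replace the labels of boundary pixels by the labels at their offset positions.

    coarse:   (H, W) int label map from any segmentation model.
    boundary: (H, W) bool map, True where a pixel is predicted as boundary.
    offsets:  (H, W, 2) int offsets (dx, dy); x is the column axis (assumed).
    scale:    factor used to re-scale all offsets, as in scheme (i).
    """
    h, w = coarse.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Target position p_i + scale * dq_i, clipped to stay inside the image.
    tx = np.clip(xs + scale * offsets[..., 0], 0, w - 1)
    ty = np.clip(ys + scale * offsets[..., 1], 0, h - 1)
    shifted = coarse[ty, tx]
    # Interior pixels keep their original label.
    return np.where(boundary, shifted, coarse)

# One row: road (0) on the left, car (1) on the right; the pixel in column 2
# is wrongly predicted as road and is fixed by an offset pointing right.
coarse = np.array([[0, 0, 0, 1]])
boundary = np.array([[False, True, True, False]])
offsets = np.zeros((1, 4, 2), dtype=int)
offsets[0, 1] = (-1, 0)
offsets[0, 2] = (1, 0)
refined = refine_labels(coarse, boundary, offsets)
```

Because the offsets depend only on the image, the same `boundary` and `offsets` arrays could be reused for the output of a different segmentation model.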

During the testing stage, we only need to generate the offset maps on the test set once, and can apply the same offset maps to refine the segmentation results of any existing segmentation model without requiring any prior information. In general, our approach is agnostic to the segmentation model.

Fig. 4. Illustrating the refinement mechanism of our approach: we refine the coarse label map based on the offset map by replacing the labels of boundary pixels with the labels of (more) reliable interior pixels. We represent different offset vectors with different arrows. We mark the error positions in the coarse label map and the corresponding corrected positions in the refined label map. For example, the top-most error pixel (class road) in the coarse label map is associated with a direction \(\rightarrow \). We use the label (class car) of the updated position based on offset (1, 0) as the refined label. Only one-step shifting based on the offset map already refines several boundary errors.

3.2 Network Architecture

Backbone. We adopt the recently proposed high resolution network (HRNet) [54] as the backbone, due to its strength in maintaining high resolution feature maps and our need to apply full-resolution boundary maps and direction maps to refine full-resolution coarse label maps. Besides, we also modify HRNet by applying a \(4\times 4\) deconvolution with stride 2 on the final output feature map of HRNet to increase the resolution by \(2\times \), similar to [15]; we call this variant Higher-HRNet. We directly apply the boundary branch and the direction branch on the output feature map with the highest resolution. The resolution is \(\frac{H}{s}\times \frac{W}{s}\times D\), where \(s=4\) for HRNet and \(s=2\) for Higher-HRNet. We empirically verify in Sect. 4.2 that our approach consistently improves the coarse segmentation results for all variations of our backbone choices, e.g., HRNet-W18 and HRNet-W32.

Boundary Branch/Loss. We implement the boundary branch as \(1\times 1~{\text {Conv}} \rightarrow {\text {BN}} \rightarrow {\text {ReLU}}\) with 256 output channels. We then apply a linear classifier (\(1\times 1~{\text {Conv}}\)) and up-sample the prediction to generate the final boundary map \(\mathbf {B}\) of size \(H\times W\times 1\). Each element of \(\mathbf {B}\) records the probability of the pixel belonging to the boundary. We use binary cross-entropy loss as the boundary loss.

Direction Branch/Loss. Different from the previous approaches [1, 3] that perform regression on continuous directions in \([0^{\circ }, 360^{\circ })\) as the ground-truth, our approach directly predicts discrete directions by evenly dividing the entire direction range into m partitions (or categories) as our ground-truth (\(m=8\) by default). In fact, we empirically find that our discrete categorization scheme outperforms the regression scheme, e.g., mean squared loss in the angular domain [3], as measured by the final segmentation performance improvements. We give more details on the discrete direction map in Sect. 3.3.

Fig. 5. (a) We divide the entire direction value range \([0^{\circ }, 360^{\circ })\) into m partitions or categories (marked with different colors). For example, when \(m=4\), the ranges \([0^{\circ }, 90^{\circ })\), \([90^{\circ }, 180^{\circ })\), \([180^{\circ }, 270^{\circ })\) and \([270^{\circ }, 360^{\circ })\) correspond to 4 different categories respectively. These 4 direction categories correspond to offsets (1, 1), \((-1,1)\), \((-1,-1)\) and \((1,-1)\) respectively. The situation for \(m=8\) is similar. (b) Binary maps \(\rightarrow \) Distance maps \(\rightarrow \) Direction maps. The ground-truth binary maps are of category car, road and sidewalk. We first apply distance transform on each binary map to compute the ground-truth distance maps. Then we use the Sobel filter on the distance maps to compute the ground-truth direction maps. We choose different colors to represent different distance values or direction values. (Color figure online)

We implement the direction branch as \(1\times 1~{\text {Conv}} \rightarrow {\text {BN}} \rightarrow {\text {ReLU}}\) with 256 output channels. We further apply a linear classifier (\(1\times 1~{\text {Conv}}\)) and up-sample the classifier prediction to generate the final direction map \(\mathbf {D}\) of size \(H\times W\times m\). We mask the direction map \(\mathbf {D}\) by multiplying by the (binarized) boundary map \(\mathbf {B}\) to ensure that we only apply direction loss on the pixels identified as boundary by the boundary branch. We use the standard category-wise cross-entropy loss to supervise the discrete directions in this branch.
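To make the masking concrete, the following NumPy sketch computes a category-wise cross-entropy over the m direction classes and averages it over boundary pixels only. It is an illustration under assumptions rather than the training code: it masks the per-pixel loss instead of multiplying the direction map itself, and the name `masked_direction_loss` is hypothetical.

```python
import numpy as np

def masked_direction_loss(logits, target, boundary_mask):
    """Mean cross-entropy over direction classes, restricted to boundary pixels.

    logits:        (H, W, m) raw direction scores.
    target:        (H, W) int ground-truth direction category.
    boundary_mask: (H, W) bool, True for pixels treated as boundary.
    """
    # Numerically stable log-softmax over the last (class) axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    log_prob = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    # Negative log-likelihood of the ground-truth category at each pixel.
    nll = -np.take_along_axis(log_prob, target[..., None], axis=-1)[..., 0]
    n = boundary_mask.sum()
    # Pixels outside the mask contribute nothing to the loss.
    return float((nll * boundary_mask).sum() / n) if n > 0 else 0.0
```

With uniform logits and \(m=8\), every boundary pixel contributes \(\log 8\), and changing the logits of a non-boundary pixel leaves the loss unchanged.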

Offset Branch. The offset branch is used to convert the predicted direction map \(\mathbf {D}\) to the offset map \(\varDelta {\mathbf {Q}}\) of size \(H\times W\times 2\). We illustrate the mapping mechanism in Fig. 5 (a). For example, the “up-right” direction category (corresponding to values within the range \([0^{\circ }, 90^{\circ })\)) will be mapped to offset (1, 1) when \(m=4\).
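A minimal sketch of this conversion for \(m=4\) is given below, using the four offsets listed in Fig. 5 (a). The function name `directions_to_offsets`, the use of an argmax over the class axis, and setting the offsets of non-boundary pixels to zero are assumptions made for illustration.

```python
import numpy as np

# Offsets (dx, dy) for m = 4, in the order of the ranges
# [0, 90), [90, 180), [180, 270) and [270, 360) degrees given in the text.
OFFSETS_M4 = np.array([(1, 1), (-1, 1), (-1, -1), (1, -1)])

def directions_to_offsets(direction_map, boundary_mask, table=OFFSETS_M4):
    """Convert an (H, W, m) direction map into an (H, W, 2) offset map."""
    category = direction_map.argmax(axis=-1)  # (H, W) discrete direction
    offsets = table[category]                 # (H, W, 2) lookup, a new array
    offsets[~boundary_mask] = 0               # interior pixels are not moved
    return offsets
```

For \(m=8\) the same lookup applies with an 8-row table; the text only states that this case is similar, so the exact table is left open here.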

Last, we generate the refined label map through shifting the coarse label map with the grid-sample scheme [30]. The process is shown in Fig. 4.

3.3 Ground-Truth Generation and Analysis

There may exist many different mechanisms to generate ground-truth for the boundary maps and the direction maps. In this work, we mainly exploit the conventional distance transform [34] to generate ground-truth for both semantic segmentation task and the instance segmentation task.

We start from the ground-truth segmentation label to generate the ground-truth distance map, followed by boundary map and direction map. Figure 5 (b) illustrates the overall procedure.

Distance Map. For each pixel, our distance map records its minimum (Euclidean) distance to the pixels belonging to other object categories. We describe how to compute the distance map below.

First, we decompose the ground-truth label into K binary maps associated with different semantic categories, e.g., car, road, sidewalk. The \(k^{th}\) binary map records the pixels belonging to the \(k^{th}\) semantic category as 1 and all others as 0. Second, we perform distance transform [34] (see Note 3) on each binary map independently to compute the distance map. Each element of the \(k^{th}\) distance map encodes the distance from a pixel belonging to the \(k^{th}\) category to the nearest pixel belonging to other categories. Such a distance can be treated as the distance to the object boundary. We compute a fused distance map by aggregating all the K distance maps.

Note that the values in our distance map are always positive, unlike the conventional signed distances, which represent the interior/exterior pixels with positive/negative distances respectively.

Boundary Map. As the fused distance map represents the distances to the object boundary, we can construct the ground-truth boundary map by setting all the pixels with a distance value smaller than a threshold \(\gamma \) as boundary (see Note 4). We empirically choose a small \(\gamma \) value, e.g., \(\gamma =5\), as we mainly focus on thin boundary refinement.
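The fused distance map and the thresholded boundary map can be illustrated on a tiny label map. The sketch below is an assumption-laden stand-in: instead of a per-category distance transform, it computes the same quantity (distance to the nearest pixel of a different category) by brute force, which is only practical for very small inputs; a dedicated routine such as `scipy.ndimage.distance_transform_edt` would normally be used. The function names are hypothetical.

```python
import numpy as np

def fused_distance_map(labels):
    """Euclidean distance from each pixel to the nearest pixel of another category.

    Brute force over all pixel pairs, O((H*W)^2): for tiny examples only.
    """
    h, w = labels.shape
    ys, xs = np.mgrid[0:h, 0:w]
    coords = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    flat = labels.ravel()
    # Pairwise Euclidean distances between all pixel positions.
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # Pixels of the same category are ignored when searching for the minimum.
    dist[flat[:, None] == flat[None, :]] = np.inf
    return dist.min(axis=1).reshape(h, w)

def boundary_map(distance, gamma=5):
    """Pixels whose distance value is smaller than gamma are marked as boundary."""
    return distance < gamma

labels = np.array([[0, 0, 0, 1, 1, 1]])
distance = fused_distance_map(labels)
thin_boundary = boundary_map(distance, gamma=2)
```

In this example the distances grow from 1 next to the category change to 3 at the image border, so `gamma=2` keeps only the two pixels adjacent to the change.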

Direction Map. We apply the Sobel filter (with kernel size \(9\times 9\)) on the K distance maps independently to compute the corresponding K direction maps. The Sobel filter based direction is in the range \([0^{\circ }, 360^{\circ })\), and each direction points to the interior pixel (within the neighborhood) that is furthest away from the object boundary. We divide the entire direction range into m categories (or partitions) and then assign the direction of each pixel to the corresponding category. We illustrate two kinds of partitions in Fig. 5 (a) and we choose the \(m=8\) partition by default. We use the evenly divided direction map as our ground-truth for training. Besides, we also visualize some examples of direction maps in Fig. 5 (b).
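The discretization of a continuous direction into m evenly divided categories can be sketched as below. The gradient components `gx` and `gy` are assumed to be given (for instance, Sobel responses on a distance map), and the angle convention (counter-clockwise from the positive x axis, category 0 starting at \(0^{\circ }\)) is an assumption chosen to match the \(m=4\) ranges in Fig. 5 (a).

```python
import math

def direction_category(gx, gy, m=8):
    """Map a gradient vector (gx, gy) to one of m evenly divided direction categories."""
    # Angle in degrees, wrapped into [0, 360).
    angle = math.degrees(math.atan2(gy, gx)) % 360.0
    # Each category spans 360 / m degrees; the final modulo guards against
    # an angle that rounds up to exactly 360.0.
    return int(angle // (360.0 / m)) % m
```

For example, a vector at roughly \(63^{\circ }\) falls into category 0 for \(m=4\) and category 1 for \(m=8\).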

Empirical Analysis. We apply the generated ground-truth on the segmentation results of three state-of-the-art methods including DeepLabv3 [12], HRNet [54] and Gated-SCNN [55] to investigate the potential of our approach. Specifically, we first project the ground-truth direction map to offset map and then refine the segmentation results on Cityscapes val based on our generated ground-truth offset map. Table 1 summarizes the related results. We can see that our approach significantly improves both the overall mIoU and the boundary F-score. For example, our approach (\(m=8\)) improves the mIoU of Gated-SCNN by \(3.1\%\). We may achieve higher performance through re-scaling the offsets for different pixels adaptively, which is not the focus of this work.

Discussion. The key condition for ensuring the effectiveness of our approach is that segmentation predictions of the interior pixels are more reliable empirically. Given accurate boundary maps and direction maps, we could always improve the segmentation performance in expectation. In other words, the segmentation performance ceiling of our approach is also determined by the interior pixels’ prediction accuracy.

4 Experiments: Semantic Segmentation

4.1 Datasets and Implementation Details

Cityscapes [16] is a real-world dataset that consists of 2,975/500/1,525 images with resolution \(2048\times 1024\) for training/validation/testing respectively, and contains 19/8 semantic categories for the semantic/instance segmentation task.

ADE20K [68] is a very challenging benchmark consisting of around 20,000/2,000 images for training/validation respectively. The dataset contains 150 fine-grained semantic categories.

GTA5 [52] is a synthetic dataset that consists of 12,402/6,347/6,155 images with resolution \(1914\times 1052\) for training/validation/testing respectively. The dataset contains 19 semantic categories which are compatible with Cityscapes.

Implementation Details. We use the same training and testing settings on the Cityscapes and GTA5 benchmarks, as follows. We set the initial learning rate to 0.04, the weight decay to 0.0005, the crop size to \(512\times 512\) and the batch size to 16, and train for 80K iterations. For the ADE20K benchmark, we set the initial learning rate to 0.02 and keep all the other settings the same as on Cityscapes. We use the “poly” learning rate policy with power \(=0.9\). For data augmentation, we apply random horizontal flipping, random cropping and random brightness jittering within the range of \([-10, 10]\) in all cases. Besides, we apply syncBN [53] across multiple GPUs to stabilize the training. We simply set the loss weight to 1.0 for both the boundary loss and the direction loss without tuning.
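For reference, the “poly” policy is commonly defined as the base learning rate multiplied by \((1 - \text{iter}/\text{max\_iter})^{\text{power}}\). The sketch below assumes this common definition and plugs in the Cityscapes/GTA5 values quoted above as defaults; the function name `poly_lr` is hypothetical.

```python
def poly_lr(iteration, base_lr=0.04, max_iter=80_000, power=0.9):
    """Learning rate at a given iteration under the common "poly" schedule."""
    return base_lr * (1.0 - iteration / max_iter) ** power
```

Under this definition the learning rate starts at 0.04, decays monotonically, and reaches 0 at iteration 80K.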

Notably, our approach does not require extra training or fine-tuning any semantic segmentation models. We only need to predict the boundary mask and the direction map for all the test images in advance and refine the segmentation results of any existing approaches accordingly.

Evaluation Metrics. We use two different metrics, mask F-score and top-1 direction accuracy, to evaluate the performance of our approach during the training stage. The mask F-score is computed on the predicted binary boundary map and the direction accuracy is computed on the predicted direction map. In particular, we only measure the direction accuracy within the regions identified as boundary by the boundary branch.

To verify the effectiveness of our approach for semantic segmentation, we follow the recent Gated-SCNN [55] and use two quantitative measures: class-wise mIoU to measure the overall segmentation performance on regions, and boundary F-score to measure the boundary quality of the predicted mask with a small slack in distance. In our experiments, we measure the boundary F-score using thresholds 0.0003, 0.0006 and 0.0009, corresponding to 1, 2 and 3 pixels respectively. We mainly report the performance with threshold 0.0003 for most of our ablation experiments.
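The text does not state how a relative threshold is converted into a pixel slack. One convention that reproduces the stated 1/2/3-pixel correspondence at the Cityscapes resolution of \(2048\times 1024\) is to multiply the threshold by the image diagonal and round up. The sketch below assumes that convention purely for illustration; the function name `slack_in_pixels` is hypothetical.

```python
import math

def slack_in_pixels(threshold, height, width):
    """Convert a relative threshold into a pixel slack via the image diagonal (assumed)."""
    return math.ceil(threshold * math.hypot(height, width))

# Cityscapes images are 2048 x 1024, so the diagonal is about 2289.7 pixels.
slacks = [slack_in_pixels(t, 1024, 2048) for t in (0.0003, 0.0006, 0.0009)]
```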

4.2 Ablation Experiments

We conduct a group of ablations to analyze the influence of various factors within SegFix. We report the improvements over the segmentation baseline DeepLabv3 (mIoU/F-score is \(79.5\%\)/\(56.6\%\)) if not specified.

Backbone. We study the performance of our SegFix based on three different backbones with increasing complexities, i.e., HRNet-W18, HRNet-W32 and Higher-HRNet. We apply the same training/testing settings for all three backbones. According to the comparisons in Table 2, our SegFix consistently improves both the segmentation performance and the boundary quality with different backbone choices. We choose Higher-HRNet in the following experiments if not specified as it performs best. Besides, we also report their running time in Table 2.

Fig. 6. Qualitative results of our direction branch predictions. The \(1^\mathrm{st}\) and \(3^\mathrm{rd}\) columns show the ground-truth segmentation maps. The \(2^\mathrm{nd}\) and \(4^\mathrm{th}\) columns illustrate the predicted directions with the segmentation map of HRNet as the background. Directions that fix errors and directions that lead to extra errors are marked separately. Our predicted directions address boundary errors for various object categories such as bicycle, traffic light and traffic sign. (Best viewed zoomed in) (Color figure online)

Boundary Branch. We verify that SegFix is robust to the choice of hyper-parameter \(\gamma \) within the boundary branch and illustrate some qualitative results.

\(\Box \) Boundary Width: Table 3 shows the performance improvements based on boundaries with different widths. We choose different \(\gamma \) values to control the boundary width, where a smaller \(\gamma \) leads to thinner boundaries. We also report the performance with \(\gamma =\infty \), which means all pixels are identified as boundary. We find that the improvements are close and we choose \(\gamma =5\) by default.

\(\Box \) Qualitative Results: We show the qualitative results of our boundary branch in Appendix G. We find that the predicted boundaries are of high quality. Besides, we also compute the F-scores between the boundary computed from the segmentation map of the existing approaches, e.g., Gated-SCNN and HRNet, and the predicted boundary from our boundary branch. The F-scores are around \(70\%\), which (to some degree) means that their boundary maps are well aligned and ensures that more accurate direction predictions bring larger performance gains.

Direction Branch. We analyze the influence of the direction number m and then present some qualitative results of our predicted directions.

\(\Box \) Direction Number: We choose different direction numbers to perform different direction partitions and control the generated offset maps that are used to refine the coarse label map. We conduct the experiments with \(m=4\), \(m=8\) and \(m=16\). According to the results reported in the 3 right-most columns of Table 3, all direction numbers lead to significant improvements, and we choose \(m=8\) if not specified, as SegFix is not very sensitive to the choice of m.

\(\Box \) Qualitative Results: In Fig. 6, we show some examples to illustrate that our predicted boundary directions fix errors. Overall, the improved pixels (marked in the figure) are mainly distributed along the very thin boundary.

Comparison with GUM. We compare SegFix with the previous model-dependent guided up-sampling mechanism [47, 48] based on DeepLabv3 as the baseline. We report the related results in Table 4. It can be seen that our approach significantly outperforms GUM measured by both mIoU and F-score. We achieve higher performance through combining GUM with our approach, which achieves \(5.0\%\) improvements on F-score compared to the baseline.

Comparison with DenseCRF. We compare our approach with the conventional, well-verified DenseCRF [36] based on DeepLabv3 as our baseline. We fine-tune the hyper-parameters of DenseCRF and set them empirically following [11]. According to Table 5, our approach not only outperforms DenseCRF but is also complementary to it. A possible reason for the limited mIoU improvements of DenseCRF is that it introduces extra errors on the interior pixels.

Application to Gated-SCNN. Considering that Gated-SCNN [55] introduced multiple components to improve the performance, it is hard to compare our approach with Gated-SCNN fairly. To verify the effectiveness of our approach to some extent, we first take the open-sourced Gated-SCNN (multi-scale testing) segmentation results on the Cityscapes validation set as the coarse segmentation maps, and then apply the SegFix offset maps to refine the results. We report the results in Table 6: SegFix improves the boundary F-score by \(1.7\%\), suggesting that SegFix is complementary to a strong baseline that also focuses on improving the segmentation boundary quality. Besides, we also report the detailed category-wise improvements measured by both mIoU and boundary F-score in Table 2 and Table 3 of the Appendix.

4.3 Application to State-of-the-art

We generate the boundary maps and the direction maps in advance and apply them to the segmentation results of various state-of-the-art approaches without extra training or fine-tuning.

Cityscapes val: We first apply our approach to various state-of-the-art approaches (on Cityscapes val) including DeepLabv3, Gated-SCNN and HRNet. We report the category-wise mIoU improvements in Table 2 of the Appendix. It can be seen that our approach significantly improves the segmentation quality along the boundaries for all the evaluated approaches. We provide some qualitative examples of the improvements with our approach along the thin boundaries based on both DeepLabv3 and HRNet in Fig. 1 of the Appendix.

Cityscapes Test: We further apply our approach to several recent state-of-the-art methods on Cityscapes test including PSANet [66], DANet [23], BFP [18], HRNet [54], Gated-SCNN [55], VPLR [69] and HRNet + OCR [61]. We directly apply the same model, which is trained with only the 2,975 training images without any other tricks, e.g., training with the validation set or Mapillary Vistas [49], or online hard example mining.

Notably, the state-of-the-art methods have applied various advanced techniques, e.g., multi-scale testing, multi-grid, boundary supervision or extra training data such as Mapillary Vistas or Cityscapes video, to improve their results. In Table 8, our model-agnostic boundary refinement scheme consistently improves all the evaluated approaches. For example, with our SegFix, “HRNet + OCR” achieves \(84.5\%\) on Cityscapes test. The improvements of SegFix are significant considering that the baselines are already very strong and the performance gap between top-ranking methods is only around 0.1%–0.3%. We believe that many other advanced approaches might also benefit from our approach.

We also apply the SegFix scheme to two other challenging semantic segmentation benchmarks, ADE20K and GTA5. Table 7 reports the results: SegFix achieves significant performance improvements along the boundary on both benchmarks, e.g., the boundary F-score of DeepLabv3 gains \(2.9\%\)/\(11.5\%\) on ADE20K val/GTA5 test respectively. Besides, we propose a unified SegFix model and compare our SegFix to model ensemble in Appendix C and D.

5 Experiments: Instance Segmentation

In Table 9, we report the results of SegFix on the Cityscapes instance segmentation task. SegFix consistently improves the mean AP scores over Mask-RCNN [26], PANet [42], PointRend [35] and PolyTransform [40]. For example, with the SegFix scheme, PANet gains \(1.4\%\) on the Cityscapes test set. We also apply our SegFix to the very recent PointRend and PolyTransform. Our SegFix consistently improves the performance of PointRend and PolyTransform by \(1.5\%\) and \(1.1\%\) respectively, which further verifies the effectiveness of our method.

We use the publicly available checkpoints from Detectron2 (Footnote 5) and PANet (Footnote 6) to generate the predictions of Mask-RCNN, PointRend and PANet. For PolyTransform, we use its segmentation results directly. More training/testing details of SegFix on the Cityscapes instance segmentation task are given in Appendix E. We believe that SegFix can be used to improve various other state-of-the-art instance segmentation methods directly, without any prior requirements.

Notably, the improvements on the instance segmentation task (+1.1%–1.5%) are larger than the improvements on the semantic segmentation task (+0.3%–0.5%). We conjecture that the main reason is that the instance segmentation evaluation (on Cityscapes) considers only 8 object categories and excludes the stuff categories. The performance on stuff categories is less sensitive to boundary errors because their area is (typically) larger than that of object categories. In summary, SegFix achieves larger improvements on object categories than on stuff categories.
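
As a rough illustration of the area argument above (not an experiment from the paper), consider an idealized square region and count the fraction of its pixels that lie within a fixed distance of its border. The function name `boundary_fraction`, the square shape, the region sizes and the band width of 3 pixels are all hypothetical choices made only for this sketch.

```python
def boundary_fraction(side: int, width: int) -> float:
    """Fraction of pixels in a side x side square that lie within
    `width` pixels of the square's border (idealized illustration)."""
    if side <= 0 or width < 0:
        raise ValueError("side must be positive and width non-negative")
    # Side length of the inner square that is farther than `width` from the border.
    inner = max(side - 2 * width, 0)
    return 1.0 - (inner * inner) / (side * side)


if __name__ == "__main__":
    # Smaller regions (object-like) have a larger share of boundary pixels
    # than larger regions (stuff-like) for the same band width.
    for side in (32, 128, 512):
        print(f"side={side:4d}  boundary fraction={boundary_fraction(side, 3):.3f}")
```

Under these assumptions the boundary share shrinks quickly as the region grows, which is consistent with the observation that larger stuff regions are less sensitive to boundary errors than smaller object regions.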

6 Conclusion

In this paper, we have proposed a novel model-agnostic approach to refine the segmentation maps predicted by an unknown segmentation model. The insight is that the predictions of the interior pixels are more reliable. We propose to replace the predictions of the boundary pixels with the predictions of the corresponding interior pixels. The correspondence is learnt only from the input image. The main advantage of our method is that SegFix generalizes well to various strong segmentation models. Empirical results show the effectiveness of our approach on both semantic segmentation and instance segmentation tasks. We hope our SegFix scheme can become a strong baseline for more accurate segmentation results along the boundary.
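
The replacement step summarized above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the released implementation: the function name `refine_labels`, the integer `(dy, dx)` offset layout and the clipping at the image border are hypothetical, and the boundary map and offsets are supplied by hand here rather than predicted from the image.

```python
import numpy as np


def refine_labels(labels: np.ndarray, boundary: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Replace the label of every boundary pixel with the label found at
    (pixel + offset); interior pixels keep their own label.

    labels:   (H, W) integer label map from any segmentation model
    boundary: (H, W) boolean map, True for boundary pixels
    offsets:  (H, W, 2) integer offsets (dy, dx) pointing toward the interior
    """
    h, w = labels.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Interior pixels get a zero offset, so they read their own label.
    dy = np.where(boundary, offsets[..., 0], 0)
    dx = np.where(boundary, offsets[..., 1], 0)
    # Assumption of this sketch: offsets that leave the image are clipped.
    src_y = np.clip(ys + dy, 0, h - 1)
    src_x = np.clip(xs + dx, 0, w - 1)
    return labels[src_y, src_x]


# Toy example: one row whose third pixel is mislabeled near the boundary.
coarse = np.array([[0, 0, 1, 1, 1, 1]])
boundary = np.array([[False, False, True, True, False, False]])
offsets = np.zeros((1, 6, 2), dtype=int)
offsets[0, 2] = (0, -1)  # boundary pixel on the left side looks one step left
offsets[0, 3] = (0, 1)   # boundary pixel on the right side looks one step right
refined = refine_labels(coarse, boundary, offsets)
print(refined)
```

Because the step only re-indexes an existing label map, it does not need access to the model that produced the labels.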

Notes

- 1.

In this paper, we treat the pixels with neighboring pixels belonging to different categories as the boundary pixels. We use the distance transform to generate the ground-truth boundary map with any given width in our implementation.

- 2.

We use “fake” interior pixels to represent pixels (after offsets) that still lie on the boundary when the boundary is thick. Notably, we identify a pixel as an interior pixel/boundary pixel if its value in the predicted boundary map \(\mathbf {B}\) is 0/1, respectively.

- 3.

We use scipy.ndimage.morphology.distance_transform_edt in our implementation.

- 4.

We define the boundary pixels and interior pixels based on their distance values.

- 5.

Detectron2: https://github.com/facebookresearch/detectron2.

- 6.
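
The boundary-map construction described in Notes 1, 3 and 4 can be sketched as follows. This is a hedged illustration rather than the authors' code: the function name `boundary_map`, the per-category loop and the `distance <= width` threshold are assumptions of this sketch. Note 3 names scipy.ndimage.morphology.distance_transform_edt; the sketch imports the same function from scipy.ndimage, where recent SciPy releases expose it.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def boundary_map(labels: np.ndarray, width: float) -> np.ndarray:
    """Mark pixels whose Euclidean distance to the nearest pixel of a
    different category is at most `width` (assumed thresholding rule)."""
    categories = np.unique(labels)
    if categories.size < 2:
        # A single category has no boundary at all.
        return np.zeros(labels.shape, dtype=bool)
    distance = np.zeros(labels.shape, dtype=np.float64)
    for category in categories:
        mask = labels == category
        # For pixels inside `mask`, the transform gives the distance to the
        # nearest pixel outside it, i.e. to the nearest other category.
        distance[mask] = distance_transform_edt(mask)[mask]
    return distance <= width


# Toy example: two categories meeting in the middle of a single row.
toy_labels = np.array([[0, 0, 0, 1, 1, 1]])
thin = boundary_map(toy_labels, width=1)
thick = boundary_map(toy_labels, width=2)
print(thin.astype(int))
print(thick.astype(int))
```

Increasing `width` thickens the band, which matches the statement in Note 1 that a boundary map of any given width can be generated from the distance values.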

References

Acuna, D., Kar, A., Fidler, S.: Devil is in the edges: learning semantic boundaries from noisy annotations. In: CVPR (2019)

Arbelaez, P., Maire, M., Fowlkes, C., Malik, J.: Contour detection and hierarchical image segmentation. PAMI 33, 898–916 (2010)

Bai, M., Urtasun, R.: Deep watershed transform for instance segmentation. In: CVPR (2017)

Bertasius, G., Shi, J., Torresani, L.: High-for-low and low-for-high: efficient boundary detection from deep object features and its applications to high-level vision. In: ICCV (2015)

Bertasius, G., Shi, J., Torresani, L.: Semantic segmentation with boundary neural fields. In: CVPR (2016)

Bischke, B., Helber, P., Folz, J., Borth, D., Dengel, A.: Multi-task learning for segmentation of building footprints with deep neural networks. In: ICIP (2019)

Caesar, H., Uijlings, J., Ferrari, V.: Coco-stuff: thing and stuff classes in context. In: CVPR (2018)

Caselles, V., Kimmel, R., Sapiro, G.: Geodesic active contours. IJCV 22, 61–79 (1997). https://doi.org/10.1023/A:1007979827043

Chen, L.C., Barron, J.T., Papandreou, G., Murphy, K., Yuille, A.L.: Semantic image segmentation with task-specific edge detection using CNNs and a discriminatively trained domain transform. In: CVPR (2016)

Chen, L.C., Hermans, A., Papandreou, G., Schroff, F., Wang, P., Adam, H.: Masklab: instance segmentation by refining object detection with semantic and direction features. In: CVPR (2018)

Chen, L.C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: Deeplab: semantic image segmentation with deep convolutional Nets, Atrous convolution, and fully connected CRFs. PAMI 40, 834–848 (2017)

Chen, L.C., Papandreou, G., Schroff, F., Adam, H.: Rethinking Atrous convolution for semantic image segmentation. arXiv:1706.05587 (2017)

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with Atrous separable convolution for semantic image segmentation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 833–851. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_49

Chen, X., Williams, B.M., Vallabhaneni, S.R., Czanner, G., Williams, R., Zheng, Y.: Learning active contour models for medical image segmentation. In: CVPR (2019)

Cheng, B., Xiao, B., Wang, J., Shi, H., Huang, T.S., Zhang, L.: Bottom-up higher-resolution networks for multi-person pose estimation. arXiv preprint arXiv:1908.10357 (2019)

Cordts, M., et al.: The cityscapes dataset for semantic urban scene understanding. In: CVPR (2016)

Dangi, S., Yaniv, Z., Linte, C.: A distance map regularized CNN for cardiac cine MR image segmentation. arXiv:1901.01238 (2019)

Ding, H., Jiang, X., Liu, A.Q., Thalmann, N.M., Wang, G.: Boundary-aware feature propagation for scene segmentation. In: ICCV (2019)

Ding, H., Jiang, X., Shuai, B., Liu, A.Q., Wang, G.: Semantic correlation promoted shape-variant context for segmentation. In: CVPR (2019)

Ding, H., Jiang, X., Shuai, B., Qun Liu, A., Wang, G.: Context contrasted feature and gated multi-scale aggregation for scene segmentation. In: CVPR (2018)

Dollár, P., Zitnick, C.L.: Fast edge detection using structured forests. ArXiv (2014)

Fieraru, M., Khoreva, A., Pishchulin, L., Schiele, B.: Learning to refine human pose estimation. In: CVPRW (2018)

Fu, J., et al.: Dual attention network for scene segmentation. In: CVPR (2019)

Gidaris, S., Komodakis, N.: Detect, replace, refine: deep structured prediction for pixel wise labeling. In: CVPR (2017)

Hayder, Z., He, X., Salzmann, M.: Boundary-aware instance segmentation. In: CVPR (2017)

He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: ICCV (2017)

Huang, L., Yuan, Y., Guo, J., Zhang, C., Chen, X., Wang, J.: Interlaced sparse self-attention for semantic segmentation. arXiv preprint arXiv:1907.12273 (2019)

Huang, Z., Wang, X., Huang, L., Huang, C., Wei, Y., Liu, W.: CCNet: criss-cross attention for semantic segmentation. In: ICCV (2019)

Islam, M.A., Naha, S., Rochan, M., Bruce, N., Wang, Y.: Label refinement network for coarse-to-fine semantic segmentation. arXiv:1703.00551 (2017)

Jaderberg, M., Simonyan, K., Zisserman, A., et al.: Spatial transformer networks. In: NIPS (2015)

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. IJCV 1, 321–331 (1988). https://doi.org/10.1007/BF00133570

Ke, T.-W., Hwang, J.-J., Liu, Z., Yu, S.X.: Adaptive affinity fields for semantic segmentation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11205, pp. 605–621. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01246-5_36

Kim, Y., Kim, S., Kim, T., Kim, C.: CNN-based semantic segmentation using level set loss. In: WACV (2019)

Kimmel, R., Kiryati, N., Bruckstein, A.M.: Sub-pixel distance maps and weighted distance transforms. JMIV 6, 223–233 (1996)

Kirillov, A., Wu, Y., He, K., Girshick, R.: Pointrend: image segmentation as rendering. arXiv:1912.08193 (2019)

Krähenbühl, P., Koltun, V.: Efficient inference in fully connected CRFs with gaussian edge potentials. In: NIPS (2011)

Kuo, W., Angelova, A., Malik, J., Lin, T.Y.: Shapemask: learning to segment novel objects by refining shape priors. In: ICCV (2019)

Li, K., Hariharan, B., Malik, J.: Iterative instance segmentation. In: CVPR (2016)

Li, X., Zhong, Z., Wu, J., Yang, Y., Lin, Z., Liu, H.: Expectation-maximization attention networks for semantic segmentation. In: ICCV (2019)

Liang, J., Homayounfar, N., Ma, W.C., Xiong, Y., Hu, R., Urtasun, R.: Polytransform: Deep polygon transformer for instance segmentation. arXiv:1912.02801 (2019)

Lin, G., Milan, A., Shen, C., Reid, I.: Refinenet: multi-path refinement networks for high-resolution semantic segmentation. In: CVPR (2017)

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J.: Path aggregation network for instance segmentation. In: CVPR (2018)

Liu, S., De Mello, S., Gu, J., Zhong, G., Yang, M.H., Kautz, J.: Learning affinity via spatial propagation networks. In: NIPS (2017)

Liu, T., et al.: Devil in the details: towards accurate single and multiple human parsing. arXiv:1809.05996 (2018)

Liu, Y., Cheng, M.M., Hu, X., Wang, K., Bai, X.: Richer convolutional features for edge detection. In: CVPR (2017)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: CVPR (2015)

Mazzini, D.: Guided upsampling network for real-time semantic segmentation. arXiv preprint arXiv:1807.07466 (2018)

Mazzini, D., Schettini, R.: Spatial sampling network for fast scene understanding. In: CVPRW (2019)

Neuhold, G., Ollmann, T., Rota Bulo, S., Kontschieder, P.: The mapillary vistas dataset for semantic understanding of street scenes. In: ICCV (2017)

Osher, S., Sethian, J.A.: Fronts propagating with curvature-dependent speed: algorithms based on Hamilton-Jacobi formulations. J. Comput. Phys. 79, 12–49 (1988)

Papandreou, G., Zhu, T., Chen, L.-C., Gidaris, S., Tompson, J., Murphy, K.: PersonLab: person pose estimation and instance segmentation with a bottom-up, part-based, geometric embedding model. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018. LNCS, vol. 11218, pp. 282–299. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01264-9_17

Richter, S.R., Vineet, V., Roth, S., Koltun, V.: Playing for data: ground truth from computer games. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 102–118. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_7

Rota Bulò, S., Porzi, L., Kontschieder, P.: In-place activated batchnorm for memory-optimized training of DNNs. In: CVPR (2018)

Sun, K., et al.: High-resolution representations for labeling pixels and regions. arXiv:1904.04514 (2019)

Takikawa, T., Acuna, D., Jampani, V., Fidler, S.: Gated-SCNN: gated shape CNNs for semantic segmentation. In: ICCV (2019)

Wang, Z., Acuna, D., Ling, H., Kar, A., Fidler, S.: Object instance annotation with deep extreme level set evolution. In: CVPR (2019)

Wu, Y., Kirillov, A., Massa, F., Lo, W.Y., Girshick, R.: Detectron2. https://github.com/facebookresearch/detectron2 (2019)

Yu, F., Koltun, V.: Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122 (2015)

Yu, Z., Feng, C., Liu, M.Y., Ramalingam, S.: CASENet: deep category-aware semantic edge detection. In: CVPR (2017)

Yu, Z., et al.: Simultaneous edge alignment and learning. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11207, pp. 400–417. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01219-9_24

Yuan, Y., Chen, X., Wang, J.: Object-contextual representations for semantic segmentation. arXiv preprint arXiv:1909.11065 (2019)

Yuan, Y., Wang, J.: OCNet: object context network for scene parsing. arXiv:1809.00916 (2018)

Zhang, H., et al.: Context encoding for semantic segmentation. In: CVPR (2018)

Zhang, H., Zhang, H., Wang, C., Xie, J.: Co-occurrent features in semantic segmentation. In: CVPR (2019)

Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: CVPR (2017)

Zhao, H., et al.: PSANet: point-wise spatial attention network for scene parsing. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11213, pp. 270–286. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01240-3_17

Zheng, S., et al.: Conditional random fields as recurrent neural networks. In: ICCV (2015)

Zhou, B., Zhao, H., Puig, X., Fidler, S., Barriuso, A., Torralba, A.: Scene parsing through ade20k dataset. In: CVPR (2017)

Zhu, Y., et al.: Improving semantic segmentation via video propagation and label relaxation. In: CVPR (2019)

Acknowledgement

This work is partially supported by Natural Science Foundation of China under contract No. 61390511, and Frontier Science Key Research Project CAS No. QYZDJ-SSW-JSC009.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Yuan, Y., Xie, J., Chen, X., Wang, J. (2020). SegFix: Model-Agnostic Boundary Refinement for Segmentation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12357. Springer, Cham. https://doi.org/10.1007/978-3-030-58610-2_29

DOI: https://doi.org/10.1007/978-3-030-58610-2_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58609-6

Online ISBN: 978-3-030-58610-2

eBook Packages: Computer Science (R0)