Abstract

Point cloud shape completion is a challenging problem in 3D vision and robotics. Existing learning-based frameworks leverage encoder-decoder architectures to recover the complete shape from a highly encoded global feature vector. Though the global feature can approximately represent the overall shape of 3D objects, it would lead to the loss of shape details during the completion process. In this work, instead of using a global feature to recover the whole complete surface, we explore the functionality of multi-level features and aggregate different features to represent the known part and the missing part separately. We propose two different feature aggregation strategies, named global & local feature aggregation (GLFA) and residual feature aggregation (RFA), to express the two kinds of features and reconstruct coordinates from their combination. In addition, we also design a refinement component to prevent the generated point cloud from non-uniform distribution and outliers. Extensive experiments have been conducted on the ShapeNet and KITTI dataset. Qualitative and quantitative evaluations demonstrate that our proposed network outperforms current state-of-the art methods especially on detail preservation.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

As the low-cost sensors like depth camera and LIDAR are becoming increasingly available, 3D data has gained large attention in vision and robotics community. However, viewpoint occlusion and low sensor resolution in 3D scans always lead to incomplete shapes, which can not be directly used in practical applications. To this end, it is desired to recover a complete 3D model from a partial shape, which has significant values in variety of tasks such as 3D reconstruction [4, 7, 8, 45], robotics [38], scene understanding [3, 46] and autonomous driving [26].

Recent learning-based works succeed in performing shape completion on volumetric representation of 3D objects, such as occupied grids or TSDF volume, where convolution operations can be applied directly [4, 11, 19, 43, 47]. However, volumetric representation always leads to expensive memory cost and low shape fidelity. In contrast, point cloud is a more compact and finer representation of 3D data than voxel but is harder to incorporate in the neural network due to its irregular properties. Recently, several network architectures have been designed for direct point cloud shape completion, such as PCN [51], TopNet [36], RL-GAN-Net [31], demonstrating the advantage of point cloud shape completion through learning-based methods. These methods are all based on encoder-decoder networks, where the completed 3D model is recovered via a global feature vector obtained from the encoder. Though the encoded vector is able to represent the overall shape, merely considering it in shape completion will lead to the loss of geometric details existing in original point clouds.

To address this problem, rather than using only a global feature vector to generate the whole complete model, we extract multi-level features and aggregate different-level features to represent the known and missing parts separately, which contributes to both existing detail preservation and missing shape prediction, as illustrated in Fig. 1(a).

We first extract multi-level features for each point by a hierarchical feature learning architecture. These features can present the existing details well, but they do not involve enough missing part cues. If we directly use these features for shape completion, the generated results are likely tangled into the existing partial input. To this end, we consider aggregating diverse features for the known and missing parts separately. We propose two feature aggregation strategies, i.e., global & local feature aggregation (GLFA) and residual feature aggregation (RFA). For global & local feature aggregation, the main idea is that we leverage local features to represent the known part while aggregate global features for missing part. For residual feature aggregation, we aim to represent the missing part with residual features which indicates the difference between the holistic shape and the partial model. We then combine the known and missing part features with a proper ratio and reconstruct the complete model from the combined features. Finally, a successive refinement component is designed to converge the generated model to be uniformly distributed and reduce the outliers.

To summarize, our main contributions are:

-

We propose a novel learning based point cloud completion architecture which achieves better performance on both detail preservation and latent shape prediction by separated feature aggregation strategy.

-

We design a refinement component which can refine the reconstructed coordinates to be uniformly distributed and reduce the noises and outliers.

-

Extensive experiments demonstrate our proposed network outperforms state-of-the-art 3D point cloud completion methods both quantitatively and qualitatively.

2 Related Work

Deep Learning on Point Cloud. Qi et al. [28] first introduced a deep learning network PointNet which uses symmetric function to directly process point cloud. PointNet++ [29] captures point cloud local structure from neighborhoods at multiple scales. FoldingNet [49] uses a novel folding-based decoder which deforms a canonical 2D grid onto the underlying shape surface. A series of network architectures on point cloud have been proposed in succession for point cloud analysis [2, 13, 14, 18, 20, 22, 30, 35, 39, 40, 42, 44, 53, 54], and related applications such as object detection [5, 10, 17, 26, 27, 32, 41, 52] and reconstruction [12, 39, 48].

Non-learning Based Shape Completion. Shape completion has long been a popular problem on interest in the graphics and vision field. Some effective descriptors have been developed in the early years, such as [15, 24, 34], which leverages geometric cues to fill the missing parts in the surface. These methods are usually limited to fill only the small holes. Another way to complete the shape is to find the symmetric structure as priors to achieve the completion [23, 25, 37]. However, these methods work well only when the missing part can be inferred from the existing partial model. Some researchers proposed data-driven methods [16, 21, 33] which usually retrieve the most likely model based on the partial input from a large 3D shape database. Though convincing results can be obtained, these methods are time-consuming in matching process according to the database size.

Learning Based Shape Completion. Recently more researchers tend to solve 3D vision tasks using learning methods. Learning based methods on shape completion usually use deep neural network with a encoder-decoder architecture to directly map the partial input to a complete shape. Most pioneering works [4, 11, 19, 43, 47] rely on volumetric representations where convolution operations can be directly applied. Volumetric representations lead to large computation and memory costs, thus most works operate on low dimension voxel grids leading to details missing. To avoid these limitations, Yuan et al. proposed PCN [51] which directly generates complete shape with partial point cloud as input. PCN recovers the complete point cloud in a two-stage process which first generates a coarse result with low resolution and then recovers the final output using the coarse one. TopNet [36] explores hierarchical rooted tree structure as decoder to generate arbitrary grouping of points in completion task. RL-GAN-Net [31] presents a completion framework using reinforcement learning agent to control the GAN generator. All these approaches generate the complete point cloud by feeding a encoded global feature vector to a decoder network. Though the global feature can almost represent the underlying holistic surface, it discards many details existing in the partial input.

Overall network architecture. Given a partial point cloud of N points with XYZ coordinates, our network extracts multi-level features and interpolates each level feature to the same size \(N \times C_m\) (implemented with PointNet++ [29] layers). Multi-level features are then aggregated to represent known and missing parts separately with feature aggregation strategy. A high-resolution completion result \(Y_{rec}\) of size \(rN \times 3\) is reconstructed from the expanded features where r is the expansion factor. The reconstructed results are finally refined by a refinement component.

3 Network Architecture

The overall architecture of our network is shown in Fig. 2. First, the multi-level features are extracted via a hierarchical feature learning to efficiently represent both local and global properties. Then we aggregate features for known and missing parts of the point set separately to provide explicit cues for detail preservation and shape prediction. After that, we expand the features and reconstruct the coordinates from the expanded features to obtain a high-resolution result. Finally, we design a refinement component to make the complete point cloud uniformly distributed and smooth.

3.1 Multi-level Features Extraction

Both local and global structures can be efficiently explored by extracting features from different levels. We extract multi-level features via a hierarchical feature learning architecture proposed in PointNet++ [29], which is also used in PU-Net [50]. The feature extraction module consists of multiple layers of sampling and grouping operation to embed multi-level features and then interpolates each level feature to have the same point number and feature size of \(N \times C_m\), where N is the input point number and \(C_m\) refers to the interpolated multi-level feature dimension. We progressively capture features with gradually increasing grouping radius. The features at the i-th level is defined as \(f_{i}\). Different from the PU-Net [50], we extract a global feature \(f_{global}\) from the last-level feature. The global feature \(f_{global}\) have the same size \(1 \times C_m\) and we duplicate it to \(N \times C_m\).

3.2 Separated Feature Aggregation

If we directly concatenate the multi-level features for completion, a main problem is that these features can preserve the existing details, but it does not capture enough information to complete the missing shape. The generated points are easier to be tangled at the original partial input even if we add a repulsion loss on the generated results. We discuss this problem in experiments (Sect. 4.4).

To this end, we consider providing explicit cues for the network to balance detail preservation and shape prediction. We separately represent the known and missing parts with diverse features. For known part features, denoted as \(f_{known}\), we want to ensure the propagation of local structure of the input. The missing part features, denoted as \(f_{missing}\), can be regarded as the features predicted from global structure, or features indicating the difference between the complete shape and the existing partial structure.

Taking the advantages of multi-level features, we propose and compare two different types of feature aggregation strategies namely GLFA and RFA, which is illustrated in Fig. 3(a) (b).

Global & Local Feature Aggregation (GLFA). Intuitively, the known part should be represented with more local features to keep the original details, whereas the missing part should be expressed with a more global feature to predict the latent underlying surface for the completion task. Generally, lower-level features layers in a network correspond to local features in smaller scales, while higher level features are more related to the global features. To this end, we represent the \(f_{known}\) and \(f_{missing}\) as:

where n is the total level number. We aggregate the first m levels features \([f_1, \ldots , f_m]\) to \(f_{known}\) and last m level features \([f_{n-m+1}, \ldots , f_n]\) to \(f_{missing}\).

\(C_{origin}\) is the original points coordinates in the partial point cloud. \(C_{missing}\) indicates the possible coordinates of the missing part. At first, we just use the \(C_{origin}\) as \(C_{missing}\), but we find the network can converge faster if we set \(C_{missing}\) with more proper initial coordinates. To this end, we leverage a learnable transformation net \(\mathcal {T}(\cdot )\) to transform \(C_{origin}\) to proper coordinates as \(C_{missing}\). \(\mathcal {T}(\cdot )\) is similar to the T-Net proposed in Pointnet [28], containing a series of multi-layer perceptrons, a predicted transform matrix and coordinates bias. Our observation for using a transformation net is the symmetry properties of most of objects in the world, thus transforming the original coordinates properly can better fit the missing part coordinates.

Residual Feature Aggregation (RFA). A more direct way to consider this issue is to represent the missing part with features which can be seen as residual features between the global shape and the known part. We first compute the difference between the global feature vector and known part features in feature space. After that, a successive shared multi-layer perceptron is applied to generalize the difference to the residual features in the latent feature space. The \(f_{known}\) and \(f_{missing}\) are represented as:

where \(f_{res_i}\) indicates the residual features between \(f_{global}\) and the \(f_i\), and \(\mathbf {MLP}(\cdot )\) refers to a shared MLP of several fully connected layers.

3.3 Feature Expansion and Reconstruction

Feature Expansion with Combination. After feature aggregation, we combine both the known and missing features and expand the number of features to high-resolution representation. As shown in Fig. 3(c), we expand the feature number by duplicating \(f_{known}\) and \(f_{missing}\) for j and k times respectively, where \(j+k=r\). We then apply r separated MLPs to the r feature sets to generate diverse point sets. Every MLP shares weights in the feature set.

An important factor is how we define the combination ratio of j and k. In our experiments, we define \(j:k=1:1\), as the visible part is often about a half of the whole object. We discuss the effect of the ratio in the supplementary material.

Coordinates Reconstruction. We reconstruct a coarse model \(Y_{rec}\) from the expanded features via a sharded MLP and the output is point coordinates \(rN\times 3\). The corresponding reconstructed coordinates from the known part and missing part are denoted as \(Y_{known}\) and \(Y_{missing}\).

3.4 Refinement Component

In practice, we notice that the reconstructed points are easy to locate too close to each other due to the high correlation of the duplicated features, and suffer from noises and outliers. Thus, we design a refinement component to enhance the distribution uniformity and reduce useless points.

We first apply farthest point sampling (FPS) to get a relatively uniformly subsampled \(\frac{r}{2}N\) point set. However, FPS algorithm is random and the subsampling depends on which point is selected first, which will not get rid of the noises and outliers in the point set.

We apply a following attention module to reduce the incorrect points. The attention module comprises a shared MLP of 3 stacking fully connected layers with softplus activation function applied on the last layer to produce a score map. It selects the top tN features and points as shown in Fig. 2. The input to the attention module is the corresponding \(\frac{r}{2}N \times C\) features to the selected points, and the output is the indexes of the most significant tN points. The selected points are denoted as \(Y_{att}\).

Finally, we apply a local folding unit [49] to approximate a smooth surface of high resolution, which is proposed in PCN [51]. The input to the local folding unit is the \(tN \times (3+C)\) points and corresponding features. For each point, a patch of \(u^2\) points is generated in the local coordinates centered at \({x_i}\) via the folding operation, and transformed into the global coordinates by adding \({x_i}\) to the output. The final output is the refined point coordinates \(rN\times 3\).

3.5 Loss Function

To measure the differences between two point clouds \((S_{1},S_{2})\), Chamfer Distance (CD) and Earth Mover’s Distance (EMD) are introduced in recent work [6]. We choose the Chamfer distance like [31, 36], due to its efficiency over EMD.

Besides FPS algorithm, we also explicitly ensure the distribution uniformity of the attention module output in the loss function to reduce the incorrect points. We apply the repulsion loss proposed in PU-Net [50] which is defined as:

where \(\eta (\cdot )\) and \(w(\cdot )\) are two repulsion term to penalize \(x_{i}\) if \(x_{i}\) is too close to its neighboring point \(x_{i^{\prime }}\).

We jointly train the network by minimizing the following loss function:

where we set \(\alpha =0.5\) as we do not need \(Y_{rec}\) to be an very accurate result, and \(\beta = 0.2\) in our experiments. Note that we apply repulsion loss only on \(Y_{att}\), as local folding unit can approximate a smooth surface which represents the local geometry of a shape, so we just need to ensure \(Y_{att}\) to be uniformly distributed.

We do not explicitly constrain \(Y_{known}\) and \(Y_{missing}\) in the loss function to be close to the known part and missing points respectively. If we supervise \(Y_{known}\) with partial input, it will leave the whole completion task to the missing part features reconstruction. We expect the network itself to learn to reconstruct diverse coordinates according to the different feature representation for known and missing parts, which makes the completion can process in both \(Y_{known}\) and \(Y_{missing}\). Our experiments demonstrate this in Sect. 4.3.

4 Experiments

Training Set. We conduct our experiments on the dataset used by PCN [51] which is a subset of the Shapenet dataset. The ground truth contains 16384 points uniformly sampled from the mesh, and the partial inputs with 2048 points are generated by back-projecting 2.5D depth images into 3D. The training set contains 28974 different models from 8 categories. Each model contains a complete point cloud with about 7 partial point clouds taken from different viewpoint for data augmentation. The validation set contains 100 models.

Testing Set from ShapeNet. The testing set from ShapeNet [1] are divided into two sets: one contains 8 known object categories on which the models are trained; another contains 8 novel categories that are not in the training set. The novel categories are also divided into two groups: one that is visually similar to the known categories, and another that is visually dissimilar. There are 150 models in each category.

Testing Set from Kitti. We also test our methods on real-world scans from Kitti dataset [9]. The testing scans are cars which are extracted from each frame according to the ground truth object bounding boxes. The testing set contains 2483 partial point clouds labeled as cars.

Training Setup. We train our model for 30 epochs with a batch size of 8. The initial learning rate is set to be 0.0007 which is decayed by 0.7 for every 50,000 iterations. We set other parameters \(r=8\), \(t=2\), \(u=2\) in our implements.

4.1 Completion Results on ShapeNet

We qualitatively and quantitatively compare our network on both known categories and novel categories with several state-of-the-art point cloud completion methods: FC (Pointnet auto-encoder) [28], FoldingNet [49], PCN [51], and TopNet [36]. For FC, Folding, PCN, we use the pre-trained model and evaluation codes released in the public project of PCN on github. For TopNet, we use their public code and retrain their network using the training set in PCN. For the sake of clarity, we denote our network with separated feature aggregation of GLFA and RFA strategy respectively as NSFA-GLFA and NSFA-RFA. We choose our network of 5 levels during feature extraction as the baseline network. Table 1 and 2 show the quantitative comparison results on known and novel categories respectively. Both NSFA-GLFA and NSFA-RFA achieve lower values for the evaluation metrics in both known and novel categories.

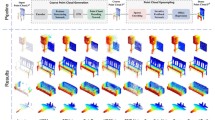

Besides quantitative results, as we claim that our methods can preserve the shape details, we select some qualitative examples of models with much details from the testing set. Results are shown in Fig. 4 where (a)–(d) are from known categories and (e)–(i) are from novel categories. We can observe that FC, Folding, PCN and TopNet can predict a overall shape but most details of the model are lost. In contrast, NSFA-GLFA and NSFA-RFA show their outperformance in both detail preservation and missing shape prediction.

4.2 Completion Results on Kitti

As there are no complete ground truth point clouds for Kitti, we use two metrics proposed in PCN to quantitatively evaluate the performance: 1) Fidelity error, which is the average distance from each point in the input to its nearest neighbour in the output. This measures how well the input is preserved; 2) Minimal Matching Distance (MMD), which is the Chamfer Distance between the output and the car point cloud from ShapeNet that is closest to the output point cloud in terms of CD. This measures how much the output resembles a typical car.

The quantitative and qualitative results are shown in Table 3 and Fig. 5 respectively. The fidelity error of each model is also attached in qualitative results. We use NSFA-RFA as our method for comparison as NSFA-RFA performs better than NSFA-GLFA on car category on ShapeNet.

From both quantitative and qualitative results, it can be observed that our method achieves lowest fidelity error, which meets our claim that our network can preserve the existing details better. In Fig. 5, we can see other methods also present reasonable results, but some details in the partial model seems to be lost. Besides, the performances of all the methods on MMD metric are very close, indicating the results from all methods can resemble typical cars.

4.3 Reconstructed Coordinates Visualization

As we consider the known and missing parts separately, we visualize the reconstructed coordinates \(Y_{known}\) and \(Y_{missing}\) in \(Y_{rec}\) from the known part and missing part features to validate our method. Figure 6 shows the visualization examples of both NSFA-GLFA and NSFA-RFA. In general, \(Y_{known}\) is closer to the partial input, and \(Y_{missing}\) is closer to the missing shape. Meanwhile, there are also completion effects for the \(Y_{known}\), and \(Y_{missing}\) also has some original points. This is reasonable as we aggregate global feature to both part features, so the completion can progress in both parts. Specifically, the completion effects on \(Y_{known}\) of NSFA-RFA is more significant than NSFA-GLFA. The reason may be that we concatenate all level features to \(f_{known}\) of NSFA-RFA but only low-level local features in NSFA-GLFA, thus the completion effects are more apparent on the \(Y_{known}\) of NSFA-RFA.

4.4 Feature Aggregation Strategy Evaluation

As we mentioned in Sect. 3.2, directly concatenating the multi-level features for completion will lead to imbalance between detail preservation and shape completion. We denote our network without feature aggregation strategies as NWoSFA. We evaluate the proposed feature aggregation strategies with qualitative examples shown in Fig. 7. It can be seen that the generated points of NWoSFA are more likely to be gathered at the original input. This effect is released in NSFA-GLFA and the generated points of NSFA-RFA seems can be well spread on the surface. The reason why NSFA-RFA performs better than NSFA-GLFA may due to its more significant completion effects on the known part as mentioned in Sect. 4.3. The completion effects on the known part can help fill the missing shape better. The quantitative results are shown in Table 5. It is notable that NSFA-RFA and NSFA-GLFA significantly boost the performance on the known categories. For novel categories, NSFA-GLFA achieves the lowest Chamfer Distance but NSFA-RFA seems not work well. This indicates that it is hard to generate missing part features using the residual feature aggregation for a totally unseen category. In contrast, NSFA-GLFA uses the global feature to represent the missing part, which is more suitable for novel category.

4.5 Effectiveness of Refinement Component

We also evaluate the effectiveness of the refinement component. Some intermediate results produced by NSFA-GLFA are shown in Fig. 8. The FPS algorithm enhances the point set distribution uniformity, but it can not get rid of the incorrect points. With the attention module, the generated points are more uniformly distributed with fewer outliers and noises. Finally, the local folding unit spread the points to a high-resolution result with smooth surface. The quantitative results are also shown in Table 4.

4.6 Symmetrical Characteristic During Completion

During the completion process, we find our network try to learn the symmetrical characteristic of the object to complete the model. As shown in Fig. 9, it can be seen that the \(Y_{missing}\) is close to the partial input after a proper transformation. This indicates that the details can be preserved not only in the partial input but also in the predicted symmetrical part taking advantages of the symmetrical characteristic.

5 Conclusion

In this work we have proposed a network for point cloud completion via with two separated feature aggregation namely GLFA and RFA, considers the existing known part and the missing part separately. RFA achieves overall better performance on known categories and GLFA shows its advantages on novel categories. Both these two strategies show significant improvements over previous methods on both detail preservation and shape prediction.

References

Chang, A.X., et al.: ShapeNet: an information-rich 3D model repository. arXiv preprint arXiv:1512.03012 (2015)

Chen, Y., Liu, S., Shen, X., Jia, J.: Fast point R-CNN. In: ICCV, pp. 9775–9784 (2019)

Dai, A., Ritchie, D., Bokeloh, M., Reed, S., Sturm, J., Nießner, M.: ScanComplete: large-scale scene completion and semantic segmentation for 3d scans. In: CVPR, pp. 4578–4587 (2018)

Dai, A., Ruizhongtai Qi, C., Nießner, M.: Shape completion using 3d-encoder-predictor CNNs and shape synthesis. In: CVPR, pp. 5868–5877 (2017)

Dinesh Reddy, N., Vo, M., Narasimhan, S.G.: CarFusion: combining point tracking and part detection for dynamic 3d reconstruction of vehicles. In: CVPR, pp. 1906–1915 (2018)

Fan, H., Su, H., Guibas, L.J.: A point set generation network for 3D object reconstruction from a single image. In: CVPR, pp. 605–613 (2017)

Fu, Y., Yan, Q., Liao, J., Xiao, C.: Joint texture and geometry optimization for RGB-D reconstruction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5950–5959 (2020)

Fu, Y., Yan, Q., Yang, L., Liao, J., Xiao, C.: Texture mapping for 3D reconstruction with RGB-D sensor. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4645–4653. IEEE (2018)

Geiger, A., Lenz, P., Stiller, C., Urtasun, R.: Vision meets robotics: the KITTI dataset. Int. J. Rob. Res. 32(11), 1231–1237 (2013)

Giancola, S., Zarzar, J., Ghanem, B.: Leveraging shape completion for 3D Siamese tracking. In: CVPR, pp. 1359–1368 (2019)

Han, X., Li, Z., Huang, H., Kalogerakis, E., Yu, Y.: High-resolution shape completion using deep neural networks for global structure and local geometry inference. In: ICCV, pp. 85–93 (2017)

Han, Z., Wang, X., Liu, Y.S., Zwicker, M.: Multi-angle point cloud-VAE: unsupervised feature learning for 3D point clouds from multiple angles by joint self-reconstruction and half-to-half prediction. arXiv preprint arXiv:1907.12704 (2019)

He, T., et al.: GeoNet: deep geodesic networks for point cloud analysis. In: CVPR, pp. 6888–6897 (2019)

Hua, B.S., Tran, M.K., Yeung, S.K.: Pointwise convolutional neural networks. In: CVPR, pp. 984–993 (2018)

Kazhdan, M., Hoppe, H.: Screened Poisson surface reconstruction. TOG 32(3), 29 (2013)

Kim, Y.M., Mitra, N.J., Yan, D.M., Guibas, L.: Acquiring 3D indoor environments with variability and repetition. TOG 31(6), 138 (2012)

Lang, A.H., Vora, S., Caesar, H., Zhou, L., Yang, J., Beijbom, O.: PointPillars: fast encoders for object detection from point clouds. In: CVPR, pp. 12697–12705 (2019)

Le, T., Duan, Y.: PointGrid: a deep network for 3d shape understanding. In: CVPR, pp. 9204–9214 (2018)

Li, D., Shao, T., Wu, H., Zhou, K.: Shape completion from a single RGBD image. TVCG 23(7), 1809–1822 (2016)

Li, J., Chen, B.M., Hee Lee, G.: So-Net: self-organizing network for point cloud analysis. In: CVPR, pp. 9397–9406 (2018)

Li, Y., Dai, A., Guibas, L., Nießner, M.: Database-assisted object retrieval for real-time 3D reconstruction. In: CGF, vol. 34, pp. 435–446. Wiley Online Library (2015)

Liu, Y., Fan, B., Xiang, S., Pan, C.: Relation-shape convolutional neural network for point cloud analysis. In: CVPR, pp. 8895–8904 (2019)

Mitra, N.J., Guibas, L.J., Pauly, M.: Partial and approximate symmetry detection for 3D geometry. TOG 25, 560–568 (2006)

Nealen, A., Igarashi, T., Sorkine, O., Alexa, M.: Laplacian mesh optimization. In: Proceedings of the 4th International Conference on Computer Graphics and Interactive Techniques in Australasia and Southeast Asia, pp. 381–389. ACM (2006)

Pauly, M., Mitra, N.J., Wallner, J., Pottmann, H., Guibas, L.J.: Discovering structural regularity in 3D geometry. TOG 27, 43 (2008)

Qi, C.R., Litany, O., He, K., Guibas, L.J.: Deep hough voting for 3D object detection in point clouds. In: ICCV, pp. 9277–9286 (2019)

Qi, C.R., Liu, W., Wu, C., Su, H., Guibas, L.J.: Frustum PointNets for 3D object detection from RGB-D data. In: CVPR, pp. 918–927 (2018)

Qi, C.R., Su, H., Mo, K., Guibas, L.J.: PointNet: deep learning on point sets for 3D classification and segmentation. In: CVPR, vol. 1, no. 2, p. 4 (2017)

Qi, C.R., Yi, L., Su, H., Guibas, L.J.: PointNet++: deep hierarchical feature learning on point sets in a metric space. In: NeurIPS, pp. 5099–5108 (2017)

Rethage, D., Wald, J., Sturm, J., Navab, N., Tombari, F.: Fully-convolutional point networks for large-scale point clouds. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11208, pp. 625–640. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01225-0_37

Sarmad, M., Lee, H.J., Kim, Y.M.: RL-GAN-Net: a reinforcement learning agent controlled GAN network for real-time point cloud shape completion. In: CVPR, pp. 5898–5907 (2019)

Shi, S., Wang, X., Li, H.: PointRCNN: 3D object proposal generation and detection from point cloud. In: CVPR, pp. 770–779 (2019)

Shi, Y., Long, P., Xu, K., Huang, H., Xiong, Y.: Data-driven contextual modeling for 3d scene understanding. Comput. Graph. 55, 55–67 (2016)

Sorkine, O., Cohen-Or, D.: Least-squares meshes. In: Proceedings Shape Modeling Applications, pp. 191–199. IEEE (2004)

Su, H., et al.: SPLATNet: sparse lattice networks for point cloud processing. In: CVPR, pp. 2530–2539 (2018)

Tchapmi, L.P., Kosaraju, V., Rezatofighi, H., Reid, I., Savarese, S.: TopNet: structural point cloud decoder. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 383–392 (2019)

Thrun, S., Wegbreit, B.: Shape from symmetry. In: ICCV, vol. 2, pp. 1824–1831. IEEE (2005)

Varley, J., DeChant, C., Richardson, A., Ruales, J., Allen, P.: Shape completion enabled robotic grasping. In: IROS, pp. 2442–2447. IEEE (2017)

Wang, C., Samari, B., Siddiqi, K.: Local spectral graph convolution for point set feature learning. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11208, pp. 56–71. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01225-0_4

Wang, S., Suo, S., Ma, W.C., Pokrovsky, A., Urtasun, R.: Deep parametric continuous convolutional neural networks. In: CVPR, pp. 2589–2597 (2018)

Wang, Y., Chao, W.L., Garg, D., Hariharan, B., Campbell, M., Weinberger, K.Q.: Pseudo-lidar from visual depth estimation: bridging the gap in 3D object detection for autonomous driving. In: CVPR, pp. 8445–8453 (2019)

Wu, W., Qi, Z., Fuxin, L.: PointConv: deep convolutional networks on 3D point clouds. In: CVPR, pp. 9621–9630 (2019)

Wu, Z., et al.: 3D ShapeNets: a deep representation for volumetric shapes. In: CVPR, pp. 1912–1920 (2015)

Xu, Y., Fan, T., Xu, M., Zeng, L., Qiao, Yu.: SpiderCNN: deep learning on point sets with parameterized convolutional filters. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11212, pp. 90–105. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01237-3_6

Yan, Q., Yang, L., Liang, C., Liu, H., Hu, R., Xiao, C.: Geometrically based linear iterative clustering for quantitative feature correspondence. Comput. Graph. Forum 35, 1–10 (2016)

Yan, Q., Yang, L., Zhang, L., Xiao, C.: Distinguishing the indistinguishable: exploring structural ambiguities via geodesic context. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3836–3844 (2017)

Yang, B., Wen, H., Wang, S., Clark, R., Markham, A., Trigoni, N.: 3D object reconstruction from a single depth view with adversarial learning. In: ICCV, pp. 679–688 (2017)

Yang, G., Huang, X., Hao, Z., Liu, M.Y., Belongie, S., Hariharan, B.: PointFlow: 3D point cloud generation with continuous normalizing flows. In: ICCV, pp. 4541–4550 (2019)

Yang, Y., Feng, C., Shen, Y., Tian, D.: FoldingNet: point cloud auto-encoder via deep grid deformation. In: CVPR, pp. 206–215 (2018)

Yu, L., Li, X., Fu, C.W., Cohen-Or, D., Heng, P.A.: PU-Net: point cloud upsampling network. In: CVPR, pp. 2790–2799 (2018)

Yuan, W., Khot, T., Held, D., Mertz, C., Hebert, M.: PCN: point completion network. In: 3DV, pp. 728–737. IEEE (2018)

Zhang, W., Xiao, C.: PCAN: 3D attention map learning using contextual information for point cloud based retrieval. In: CVPR, pp. 12436–12445 (2019)

Zhao, H., Jiang, L., Fu, C.W., Jia, J.: PointWeb: enhancing local neighborhood features for point cloud processing. In: CVPR, pp. 5565–5573 (2019)

Zhao, Y., Birdal, T., Deng, H., Tombari, F.: 3D point capsule networks. In: CVPR, pp. 1009–1018 (2019)

Acknowledgement

Funding was provided by the Key Technological Innovation Projects of Hubei Province (Grant No. 2018AAA062), NSFC (Grant Nos. 61972298, 61672390), National Key Research and Development Program of China (Grant No. 2017YFB 1002600), and Wuhan University - Huawei GeoInformatics Innovation Lab.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, W., Yan, Q., Xiao, C. (2020). Detail Preserved Point Cloud Completion via Separated Feature Aggregation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12370. Springer, Cham. https://doi.org/10.1007/978-3-030-58595-2_31

Download citation

DOI: https://doi.org/10.1007/978-3-030-58595-2_31

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58594-5

Online ISBN: 978-3-030-58595-2

eBook Packages: Computer ScienceComputer Science (R0)