Abstract

The main purpose of RGB-D salient object detection (SOD) is how to better integrate and utilize cross-modal fusion information. In this paper, we explore these issues from a new perspective. We integrate the features of different modalities through densely connected structures and use their mixed features to generate dynamic filters with receptive fields of different sizes. In the end, we implement a kind of more flexible and efficient multi-scale cross-modal feature processing, i.e. dynamic dilated pyramid module. In order to make the predictions have sharper edges and consistent saliency regions, we design a hybrid enhanced loss function to further optimize the results. This loss function is also validated to be effective in the single-modal RGB SOD task. In terms of six metrics, the proposed method outperforms the existing twelve methods on eight challenging benchmark datasets. A large number of experiments verify the effectiveness of the proposed module and loss function. Our code, model and results are available at https://github.com/lartpang/HDFNet.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Salient object detection (SOD) aims to model the mechanism of human visual attention and mine the most salient objects or regions in data such as images or videos. SOD has been widely applied in many computer vision tasks, such as scene classification [38], video segmentation [13], semantic segmentation [44], foreground map evaluation [10, 11] visual tracking [30], person re-identification [39], light field image segmentation [43] and so on.

With the advent of the fully convolutional network [29], deep learning-based SOD models [17, 27] have made great progress. Some methods [22, 33, 50, 51] have achieved very good performance on the existing benchmark datasets. However, these works are mainly based on RGB data. They still face severe challenges when handling the cluttered or low-contrast scenes. Recently, some works [2, 4, 6, 8, 14, 37, 53] introduce the depth data as an aid to further improve the detection performance. The depth information can more intuitively express spatial structures of the objects in a scene and provide a powerful supplement for the detection and recognition of salient objects. Using complementary modal cues, the scene can be further deeply and intelligently understood. However, limited by the way of using the depth information, RGB-D salient object detection is still great challenging.

It is well known that RGB images contain rich appearance and detail information while depth images contain more spatial structure information. They complement each other for many vision tasks. RGB-D SOD approaches aim to formulate cross-modal fusion in different manners. Most of them integrate depth and RGB features by element-wise addition [4, 36], concatenation [3, 12] and convolution operations [2, 42]. Some methods compute attention map [49] or saliency map [42] via a shallow or deep CNN network from pure depth images. Because of using the fixed parameters for different samples during the testing phase, the generalization capability of these models is weakened. Moreover, for dense prediction task, the loss in each spatial position is usually different. Thus, the actual optimization direction of gradients in different positions may be varying. The weight-sharing convolution operation across different positions, which is used in the existing methods, causes that the training process of each parameter relies on the global gradient. This forces the network to learn trade-off and sub-optimal parameters. To address these problems, we propose a dynamic dilated pyramid module (DDPM), which uses RGB-depth mixed features to adaptively adjust convolution kernels for different input samples and processing locations. These kernels can capture rich semantic cues at multiple scales with the help of the pyramid structure and the dilated convolution. This design is capable of making more efficient convolution operations for current RGB features and promotes the network to obtain more flexible and targeted features for saliency prediction.

Early deep learning-based SOD models [14], which use fully connected layers, destroy the spatial structure of the data. This issue is alleviated to some extent by using the fully convolutional network. But the intrinsic gridding operation and the repeated down-sampling lead to the loss of numerous details in the predicted results. Although many methods frequently combine shallower features to restore feature resolution, the improvement is still limited. While some approaches [9, 17] leverage CRF post-processing to refine subtle structures, which has a large computational cost. In this work, we design a new hybrid enhanced loss function (HEL). The HEL encourages the consistency between the area around edges and the interior of objects, thereby achieving sharper boundaries and a solid saliency area.

Our main contributions are summarized as follows:

-

We propose a simple yet effective hierarchical dynamic filtering network (HDFNet) for RGB-D SOD. Especially, we provide a new perspective to utilize depth information. The depth and RGB features are combined to generate region-aware dynamic filters to guide the decoding in RGB stream.

-

We propose a hybrid enhanced loss and verify its effectiveness in both RGB and RGB-D SOD tasks. It can effectively optimize the details of predictions and enhance the consistency of salient regions without additional parameters.

-

We compare the proposed method with twelve state-of-the-art methods on eight datasets. It achieves the best performance under six evaluation metrics. Meanwhile, we implement a forward reasoning speed of 52 FPS on an NVIDIA GTX 1080 Ti GPU. The size of our VGG16-based model is about 170 MB (Fig. 1).

2 Related Word

RGB-D Salient Object Detection. The early methods are mainly based on hand-crafted features, such as contrast [6] and shape [7]. Limited by the representation ability of the features, they can not cope with complex scenes. Please refer to [12] for more details about traditional methods. In recent years, FCN-based methods have shown great potential and some of them achieve very good performance in the RGB-D SOD task [12, 36, 49]. Chen and Li [2] progressively combine the current depth/RGB features and the preceding fused feature by a series of convolution and element-wise addition operations to build the cross fusion modules. Recently, they concatenate depth and RGB features and feed them into an additional CNN stream to achieve multi-level cross-modal fusion [3]. Wang and Gong [42] respectively build a saliency prediction stream for RGB and depth inputs and then fuse their predictions and their preceding features to obtain final prediction via several convolutional layers. Zhao et al [49] insert a lightweight net between adjacent encoding blocks to compute a contrast map from the depth input and use it to enhance the features from the RGB stream. Piao et al [36] combine multi-level paired complementary features from RGB and depth streams by convolution and nonlinear operations. Fan et al [12] design a depth depurator to remove the low-quality depth input, and for high-quality one they feed the concatenated 4-channel input into a convolutional neural network to achieve cross-modal fusion. Different from these methods, we use the RGB-depth mixed features to generate “adaptive” multi-scale convolution kernels to filter and enhance the decoding features from the RGB stream.

Dynamic Filters. The works closely related to ours are [20] and [15]. The conception of the dynamic filter is firstly proposed in video and stereo prediction task [20]. The filter is utilized to enhance the representation of its corresponding input in a self-learning manner. While we use multi-modal information to generate multi-scale filters to dynamically strengthen the cross-modal complementarity and suppress the inter-modality incompatibility. Besides, the kernel computation in [20] introduces a large number of parameters and is difficultly extended at multiple scales, which significantly increases parameters and causes optimization difficulties. To efficiently achieve hierarchical dynamic filters, we introduce the idea of depth-wise separable convolution [18] and dilated convolution [48]. In [15], the filters are computed by pooling the input feature. They share kernel parameters across different positions, which is only an image-specific filter generator. In contrast, we design position-specific and image-specific filters to provide cross-modal contextual guidance for the decoder. The parameter update of dynamic filters is determined by the gradients of local neighborhoods to achieve more targeted adjustments and guarantee the overall performance of optimization.

3 Proposed Method

In this section, we first introduce the overall structure of the proposed method and then detail two main components, including the dynamic dilated pyramid module (DDPM) and the hybrid enhanced loss (HEL).

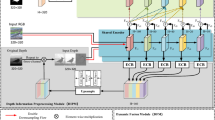

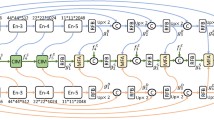

The structure of the dynamic dilated pyramid module. The DDPM contains two submodules: kernel generation units (KGUs) and kernel transformation units (KTUs). KGUs generate adaptive kernel tensors and KTUs transform these tensors to the regular form of convolution kernels with different dilation rates.

3.1 Two Stream Structure

We build a two-stream network, which structure is shown in Fig. 2. It has two inputs: one is an RGB image and the other is a depth image, which corresponds to the RGB and depth streams, respectively. Through convolution blocks \(\{E^i_{rgb}\}^{5}_{i=1}\) and \(\{E^i_{d}\}^{5}_{i=1}\) in two encoding networks, we can obtain the intermediate features with different resolutions, which are recorded as \(f^{1}\), \(f^{2}\), \(f^{3}\), \(f^{4}\), \(f^{5}\) from large to small. The third-level features still retain enough valid information. Besides, the shallower features contain more noise and also cause higher computational cost due to the larger resolution. To balance efficiency and effectiveness, we only utilize the features \(f^{3}_{d}\), \(f^{4}_{d}\), \(f^{5}_{d}\) from the deepest three blocks in the depth stream. These features are respectively combined with the features \(f^{3}_{rgb}\), \(f^{4}_{rgb}\), \(f^{5}_{rgb}\) from the RGB stream. Then, we use a dense block [19] to build the transport layer, which combines rich and various receptive fields and generates powerful mixed features \(f_{T_m}\) with both spatial structures and appearance details. These features are fed into the DDPM to produce multi-scale convolution kernels that are used to filter the features \(f_{D_{rgb}}\) from the decoder. The resulted features \(f_{M}\) are merged in the top-down pathway by element-wise addition. After recovering the resolution layer by layer, we obtain the final prediction P, which is supervised by the ground truth G.

3.2 Dynamic Dilated Pyramid Module

In order to make more reasonable and effective use of the mixed features \(f_{T_m}\) from the dense transport layer, we employ DDPMs to generate the adaptive kernel for decoding RGB features. The DDPMs contain two inputs: the mixed feature \(f_{T_m}\) and the feature \(f_{D_{rgb}}\) from the decoder. On one hand, for specific position in feature maps \(f_{D_{rgb}}\), we use kernel generation units (KGUs) to yield independent weight tensors, i.e. \(f_{g}\), that can cover a \(3 \times 3\), \(7 \times 7\) or \(11 \times 11\) square neighborhood. KGUs are also a kind of dense structure [19]. The module contains 4 densely connected layers and each layer is connected to all the others in a feed-forward fashion, which can further strengthen feature propagation and expression capabilities, encourage feature reuse and greatly improve parameter efficiency. Then, by recombining kernel tensors and inserting different numbers of zeros, kernel transformation units (KTUs) construct regular convolution kernels with different dilation rates. Please see “KTU” shown in Fig. 3 and introduced in Algorithm 1 for a more intuitive presentation. On the other hand, after preliminary dimension reduction, the other input \(f_{D_{rgb}}\) is re-weighted and integrated into three parallel branches to obtain the enhanced features \(\{f_{B^j}\}^3_{j=1}\). Note that this is actually a channel-wise adjustment and the operation of each channel is independent. Finally, after concating and merging \(\{f_{B^j}\}^3_{j=1}\) and the reduced \(f_{D_{rgb}}\), the resulted features \(\{f^{i}_{M}\}^{5}_{i=3}\) become more discriminative.

The entire process can be formulated as follows:

where \(f^i_{M}\) represents the feature from the DDPM\(^i\) related to the \(f^i_{D_{rgb}}\). \(\mathcal {DDPM}(\cdot )\), \(\mathcal {KGU}(\cdot )\) and \(\mathcal {KTU}(\cdot )\) denote the operation of the corresponding module. \(\mathcal {R}(\cdot )\) is a \(1 \times 1\) convolution operation, which is used to reduce the number of channels from 64 to 16. \(\otimes \) is an adaptive convolution operation as shown in Algorithm 1. \(\mathcal {C}(\cdot )\) is a concatenation operation and \(\mathcal {F}(\cdot )\) is a \(3 \times 3\) convolution to fuse the concatenated features from different branches. More details is as shown in Fig. 3.

3.3 Hybrid Enhanced Loss

No matter for RGB or RGB-D based SOD tasks, good prediction requires the salient area to be clearly and completely highlighted. This contains two aspects: one is the sharpness of boundaries and the other is the consistency of intra-class. We start with the loss function and design a new loss to constrain the edges and the fore-/background regions to separately achieve high-contrast predictions.

The common loss function in the SOD task is binary cross entropy (BCE). It is a pixel-level loss, which independently performs error calculation and supervision at different positions. The main form is as follows:

where \(P = \{ p | 0< p < 1 \} \in \mathbb {R}^{N \times 1 \times H \times W}\) and \(G = \{ g | 0< g < 1 \} \in \mathbb {R}^{N \times 1 \times H \times W}\) respectively represent the prediction and the corresponding ground truch. N, H and W are the batchsize, height and width of the input data, respectively. It calculates the error between the ground truth g and the prediction p at each position, and the loss \(L_{bce}\) accumulates and averages the errors of all positions.

In order to further enhance the strength of supervision at higher levels such as edges and regions, we specially constrain and optimize the regions near the edges. In particular, the loss is formulated as follows:

where \(L_{e}\) represents the edge enhanced loss (EEL), and \(\mathcal {P}(\cdot )\) denotes the average pooling operation with a \(5 \times 5\) slide window. In Eq. 3, we can obtain the local region near the contour of the ground truth by calculating e. In this region, the difference \(L_e\) between the prediction p and the ground truth g can be calculated. Through this loss, the optimization process can target the contours of salient objects.

In addition, we also design a region enhanced loss (REL) to constrain the prediction of intra-class. By respectively calculating the prediction errors within the foreground class and the background class, fore-/background predictions can be independently optimized. Specifically, the REL \(L_{r}\) is written as:

where \(L_{f}\) and \(L_{b}\) denote the fore-/background losses, respectively. The losses compute the normalized prediction errors in the intra-class regions. They depict the region-level supervision. Finally, we integrate these three losses (\(L_{bce}\), \(L_{e}\) and \(L_{r}\)) to obtain the hybrid enhanced loss (HEL), which can optimize the prediction at two different levels. The total loss is expressed as follows:

4 Experiments

4.1 Datasets

To fully verify the effectiveness of the proposed method, we evaluated the results on eight benchmark datasets. LFSD [24] is a small dataset that contains 100 images with depth information and human-labeled ground truths and is built for saliency detection on the light filed. NJUD [21] contains 1,985 groups of RGB, depth, and label images, which are collected from the Internet, 3D movies, and photographs taken by a Fuji W3 stereo camera. NLPR [34] is also called RGBD1000, which contains 1,000 natural RGBD images captured by Microsoft Kinect together with the human-marked ground truth. RGBD135 [6] is also named DES, which consists of 135 images about indoor scenes collected by Microsoft Kinect. SIP [12] includes 1,000 images with many challenging situations from various outdoor scenarios and these images emphasize salient persons in real-world scenes. SSD [52] contains 80 images picked up from three stereo movies. STEREO [32] is also called SSB, which contains 1,000 stereoscopic images downloaded from the Internet. DUTRGBD [36] is a new and large dataset and contains 800 indoor and 400 outdoor scenes paired with the depth maps and ground truths.

For comprehensively and fairly evaluating different methods, we follow the setting of [36]. On the DUTRGBD, we use 800 images for training and 400 images for testing. For the other seven datasets, we follow the data partition of [2, 4, 14, 36] to use 1,485 samples from the NJUD and 700 samples from the NLPR as the training set and the remaining samples in these datasets are used for testing.

. \(\natural \): Traditional methods. \(\dagger \): VGG-16

[40] as backbone. \(\ddagger \): VGG-19

[40] as backbone. \(\sharp \): ResNet-50

[16] as backbone. -: No data available.

. \(\natural \): Traditional methods. \(\dagger \): VGG-16

[40] as backbone. \(\ddagger \): VGG-19

[40] as backbone. \(\sharp \): ResNet-50

[16] as backbone. -: No data available.4.2 Evaluation Metrics

There are six widely used metrics for evaluating RGB and RGB-D SOD models: Precision-Recall (PR) curve, F-measure [1], weighted F-measure [31], MAE [35], S-measure [10] and E-measure [11]. PR Curve. We use a series of fixed thresholds from 0 to 255 to binarize the gray prediction map, and then calculate several groups of precision (Pre) and recall (Rec) with ground truth by \(Pre = \frac{TP}{TP+FP}\) and \(Rec = \frac{TP}{TP+FN}\). Based on them, we can plot a precision-recall curve to describe the performance of the model. F-measure [1]. It is a region-based similarity metric and is formulated as the weighted harmonic mean (the weight is set to 0.3) of Pre and Rec. In this paper, we employ the threshold changing from 0 to 255 to get \(F_{max}\), and use twice the mean value of the prediction P as the threshold to obtain \(F_{ada}\). In addition, since F-measure reflects the performance of the binary predictions under different thresholds, we evaluate the consistency and uniformity at the regional level according to F-measure threshold curves. weighted F-measure (\(F^{\omega }_{\beta }\)) [31]. It is proposed to improve the existing metric F-measure. It defines a weighted precision, which is a measure of exactness, and a weighted recall, which is a measure of completeness and follows the form of F-measure. MAE [35]. This metric estimates the approximation degree between the saliency map and ground-truth map, and it is normalized to [0, 1]. It focuses on pixel-level performance. S-measure (\(S_{m}\)) [10]. It calculates the object-/region-aware structure similarities \(S_{o}\)/\(S_{r}\) between prediction and ground truth by the equation: \(S_{m} = \alpha \cdot S_{o} + (1 - \alpha ) \cdot S_{r}, \, \alpha = 0.5\). E-measure (\(E_{m}\)) [11]. This measure utilizes the mean-removed predictions and ground truths to compute the similarity, which characterizes both image-level statistics and local pixel matching.

4.3 Implementation Details

Parameter Setting. Two encoders of the proposed model are based on the same model, such as VGG-16 [40], VGG-19 [40], and ResNet-50 [16]. In both encoders, only the convolutional layers in corresponding classification networks are retained, and the last pooling layer of VGG-16 and VGG-19 is removed at the same time. During the training phase, we use the weight parameters pre-trained on the ImageNet to initialize the encoders. Also, since the depth image is a single channel data, we change the channel number of its corresponding input layer from 3 to 1, and its parameters are initialized randomly by PyTorch. The parameters of the remaining structures are all initialized randomly.

Training Setting. During the training stage, we apply random horizontal flipping, random rotating as data augmentation for RGB images and depth images. In addition, we employ random color jittering and normalization for RGB images. We use the momentum SGD optimizer with a weight decay of 5e−4, an initial learning rate of 5e−3, and a momentum of 0.9. Besides, we apply a “poly” strategy [28] with a factor of 0.9. The input images are resized to \(320 \times 320\). We train the model for 30 epochs on an NVIDIA GTX 1080 Ti GPU with a batch size of 4 to obtain the final model.

Testing Details. During the testing stage, we resize RGB and depth images to \(320 \times 320\) and normalize RGB images. Besides, the final prediction is rescaled to the original size for evaluation.

4.4 Comparisons

In order to fully demonstrate the effectiveness of the proposed method, we compared it with the existing twelve RGB-D based SOD models, including DES [6], DCMC [8], CDCP [53], DF [37], CTMF [14], PCANet [2], MMCI [4], TANet [3], AFNet [42], CPFP [49], DMRA [36] and D3Net [12]. For fair comparisons, all saliency maps of these methods are directly provided by authors or computed by their released codes. Besides, the codes and results of AFNet [42] and D3Net [12] on the DUTRGBD [36] dataset are not publicly available. Therefore, their results on this dataset are not listed.

Quantitative Evaluation. In Table 1, we list the results of all competitors on eight datasets and six metrics. It can be seen that the proposed method performs best on most datasets and achieve significant performance improvement. On the DUTRGBD [36], our models based on VGG-16, VGG-19 and ResNet-50 have surpassed the second-best model DMRA [36] by 2.02%, 2.85% and 2.45% on \(F_{max}\), and 16.09%, 17.88% and 13.56% on MAE. At the same time, on the recent dataset SIP [12], they have increased by 3.83%, 4.65% and 5.23% on \(F_{ada}\), 5.22%, 6.37% and 6.84% on \(F^{\omega }_{\beta }\), and 20.94%, 24.65% and 24.91% on MAE, over the D3Net [12]. Because the existing RGB-D SOD datasets are relatively small, we propose a new calculation method to measure the performance of models. According to the proportion of each testing set in all testing datasets, the results on all datasets are weighted and summed to obtain an overall performance evaluation, which is listed in the row “AveMetric” in Table 1. It can be seen that our structure achieves similar and excellent results on different backbones, which shows that our structure has less dependence on the performance of the backbone. In addition, we show a scatter plot based on the average performance of each model on all datasets and the model size in Fig. 1. Our model has the smallest size while achieving the best result. We demonstrate the PR curves and the F-measure curves in Fig. 4 and Fig. 5. Our approach (red solid line) achieves very good results on these datasets. As shown in Fig. 5, our results are much flatter at most thresholds, which reflects that our prediction results are more uniform and consistent.

Qualitative Evaluation. In Fig. 6, we list some representative results. These examples include scenarios with varying complexity, as well as different types of objects, including cluttered background (Column 1 and 2), simple scene (Column 3 and 4), small objects (Column 5), complex objects (Column 6 and 7), large objects (Column 8), multiple objects (Column 9 and 10) and low contrast between foreground and background (Column 11 and 12). It can be seen that the proposed method can consistently produce more accurate and complete saliency maps with higher contrast.

4.5 Ablation Study

In this section, we perform ablation analysis over the main components of the HDFNet and further investigate their importance and contributions. Our baseline model, i.e. Model 1, uses the commonly used encoder-decoder structure, and all ablation experiments are based on the VGG-16 backbone. In the baseline model, the output features of the last three stages in the depth stream are added to the decoder after compressing the channel to 64 through an independent \(1 \times 1\) convolution. In order to evaluate the benefits of cross-modal fusion at the dense transport layer (i.e. Model 6), we feed single-modal features into this layer to build Model 2 (i.e. “+T\(_{d}\)”) and Model 4 (i.e. “+T\(_{rgb}\)”). Thus, the followed dynamic filters in the DDPM will be determined only by depth features or RGB features, respectively.

Dynamic Dilated Pyramid Module. Based on Model 2, Model 4, and Model 6, we add the dynamic dilated pyramid module to obtain Model 3, Model 5, and Model 7, respectively. In Table 2, we show the performance improvement contributed by different structures in terms of the weighted average metrics “AveMetric”. It can be seen that the DDPM significantly improves performance. Specifically, by comparing Model 3, 5 and 7 with Model 2, 4 and 6, we achieve a relative improvement of 1.47%, 3.11% and 2.11% in terms of \(F^{\omega }_{\beta }\) and 5.01%, 10.29% and 6.77% in terms of MAE, respectively. We can see that even without the HEL, the average performance of Model 7 already exceeds these existing models. More comparisons can be found in the supplementary material.

In addition, we compare the design of the dynamic filter in DCM [15] with ours. It can be seen that the proposed DDPM (Model 7) has obvious advantages over the DCM (Model 8), and it respectively increases by 3.91%, 5.60%, and 18.07% in terms of \(F_{ada}\), \(F^{\omega }_{\beta }\) and MAE. In Fig. 7, we can see that the noise in depth images interferes with the final predictions. By the cross-modal guidance from the DDPMs, the interference is effectively suppressed.

Hybrid Enhanced Loss. As shown in Table 2, the proposed hybrid enhanced loss brings huge performance improvements by comparing Model 7 with Model 12. We evaluate each component in the HEL (Model 9, 10, and 11) and all of them contribute to the final performance. In addition, the benefits of this loss are also clearly reflected in Fig. 5 where the curves of the proposed model are more straight, and Fig. 7 where the predictions of the model “B+R+D+M+L” have higher contrast than ones of the model “B+D+R+M”. Since the design goal of the HEL is to solve the general requirements of SOD tasks, we evaluate its effectiveness on several recent RGB SOD models [5, 9, 26, 45]. For a fair comparison, we retrain these models using the released code. Most of hyper-parameters are the same as the default values given by their corresponding code. The average performance “AveMetric” on five main RGB SOD datasets (DUTS [41], ECSSD [46], HKU-IS [23], PASCAL-S [25] and DUT-OMRON [47]) is shown in Table 2. More experimental details and results can be found in supplementary materials.

5 Conclusions

In this paper, we revisit the role that depth information should play in the RGB-D based SOD task. We consider the characteristics of spatial structures contained in depth information and combine it with RGB information with rich appearance details. After that, the model generates adaptive filters with different receptive field sizes through the dynamic dilated pyramid module. It can make full use of semantic cues from multi-modal mixed features to achieve multi-scale cross-modal guidance, thereby enhancing the representation capabilities of the decoder. At the same time, we can obtain clearer predictions with the aid of additional region-level supervision to the regions around the edges and fore-/background regions. Expensive experiments on eight datasets and six metrics demonstrate the effectiveness of the designed components. The proposed approach achieves state-of-the-art performance with small model size and high running speed.

References

Achanta, R., Hemami, S., Estrada, F., Süsstrunk, S.: Frequency-tuned salient region detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1597–1604 (2009)

Chen, H., Li, Y.: Progressively complementarity-aware fusion network for RGB-D salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3051–3060 (2018)

Chen, H., Li, Y.: Three-stream attention-aware network for RGB-D salient object detection. IEEE Trans. Image Process. 28(6), 2825–2835 (2019)

Chen, H., Li, Y., Su, D.: Multi-modal fusion network with multi-scale multi-path and cross-modal interactions for RGB-D salient object detection. Pattern Recogn. 86, 376–385 (2019)

Chen, Z., Xu, Q., Cong, R., Huang, Q.: Global context-aware progressive aggregation network for salient object detection. In: AAAI Conference on Artificial Intelligence (2020)

Cheng, Y., Fu, H., Wei, X., Xiao, J., Cao, X.: Depth enhanced saliency detection method. In: Proceedings of the International Conference on Internet Multimedia Computing and Service, pp. 23–27 (2014)

Ciptadi, A., Hermans, T., Rehg, J.M.: An in depth view of saliency (2013)

Cong, R., Lei, J., Zhang, C., Huang, Q., Cao, X., Hou, C.: Saliency detection for stereoscopic images based on depth confidence analysis and multiple cues fusion. IEEE Signal Process. Lett. 23(6), 819–823 (2016)

Deng, Z., et al.: R3Net: recurrent residual refinement network for saliency detection. In: International Joint Conference on Artificial Intelligence, pp. 684–690 (2018)

Fan, D.P., Cheng, M.M., Liu, Y., Li, T., Borji, A.: Structure-measure: a new way to evaluate foreground maps. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4548–4557 (2017)

Fan, D.P., Gong, C., Cao, Y., Ren, B., Cheng, M.M., Borji, A.: Enhanced-alignment measure for binary foreground map evaluation. In: International Joint Conference on Artificial Intelligence, pp. 698–704 (2018)

Fan, D.P., et al.: Rethinking RGB-D salient object detection: models, datasets, and large-scale benchmarks. arXiv preprint arXiv:1907.06781 (2019)

Fan, D.P., Wang, W., Cheng, M.M., Shen, J.: Shifting more attention to video salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 8554–8564 (2019)

Han, J., Chen, H., Liu, N., Yan, C., Li, X.: CNNs-based RGB-D saliency detection via cross-view transfer and multiview fusion. IEEE Trans. Cybern. 48(11), 3171–3183 (2017)

He, J., Deng, Z., Qiao, Y.: Dynamic multi-scale filters for semantic segmentation. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3562–3572 (2019)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hou, Q., Cheng, M.M., Hu, X., Borji, A., Tu, Z., Torr, P.H.: Deeply supervised salient object detection with short connections. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3203–3212 (2017)

Howard, A.G., et al.: MobileNets: efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 (2017)

Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 4700–4708 (2017)

Jia, X., De Brabandere, B., Tuytelaars, T., Gool, L.V.: Dynamic filter networks. In: Conference and Workshop on Neural Information Processing Systems, pp. 667–675 (2016)

Ju, R., Liu, Y., Ren, T., Ge, L., Wu, G.: Depth-aware salient object detection using anisotropic center-surround difference. Signal Process. Image Commun. 38, 115–126 (2015)

Wei, J., Wang, S., Huang, Q.: F3Net: fusion, feedback and focus for salient object detection. In: AAAI Conference on Artificial Intelligence (2020)

Li, G., Yu, Y.: Visual saliency based on multiscale deep features. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 5455–5463 (2015)

Li, N., et al.: Saliency detection on light field. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 2806–2813 (2014)

Li, Y., Hou, X., Koch, C., Rehg, J.M., Yuille, A.L.: The secrets of salient object segmentation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 280–287 (2014)

Liu, J.J., Hou, Q., Cheng, M.M., Feng, J., Jiang, J.: A simple pooling-based design for real-time salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (2019)

Liu, N., Han, J., Yang, M.H.: PicaNet: learning pixel-wise contextual attention for saliency detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3089–3098 (2018)

Liu, W., Rabinovich, A., Berg, A.C.: ParseNet: looking wider to see better. arXiv preprint arXiv:1506.04579 (2015)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

Mahadevan, V., Vasconcelos, N.: Saliency-based discriminant tracking. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (2009)

Margolin, R., Zelnik-Manor, L., Tal, A.: How to evaluate foreground maps? In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255 (2014)

Niu, Y., Geng, Y., Li, X., Liu, F.: Leveraging stereopsis for saliency analysis. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 454–461 (2012)

Pang, Y., Zhao, X., Zhang, L., Lu, H.: Multi-scale interactive network for salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (2020)

Peng, H., Li, B., Xiong, W., Hu, W., Ji, R.: RGBD salient object detection: a benchmark and algorithms. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8691, pp. 92–109. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10578-9_7

Perazzi, F., Krähenbühl, P., Pritch, Y., Hornung, A.: Saliency filters: contrast based filtering for salient region detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 733–740 (2012)

Piao, Y., Ji, W., Li, J., Zhang, M., Lu, H.: Depth-induced multi-scale recurrent attention network for saliency detection. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 7254–7263 (2019)

Qu, L., He, S., Zhang, J., Tian, J., Tang, Y., Yang, Q.: RGBD salient object detection via deep fusion. IEEE Trans. Image Process. 26(5), 2274–2285 (2017)

Ren, Z., Gao, S., Chia, L.T., Tsang, I.W.H.: Region-based saliency detection and its application in object recognition. IEEE Trans. Circuits Syst. Video Technol. 24(5), 769–779 (2013)

Rui, Z., Ouyang, W., Wang, X.: Unsupervised salience learning for person re-identification. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (2013)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Wang, L., et al.: Learning to detect salient objects with image-level supervision. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 136–145 (2017)

Wang, N., Gong, X.: Adaptive fusion for RGB-D salient object detection. IEEE Access 7, 55277–55284 (2019)

Wang, T., Piao, Y., Li, X., Zhang, L., Lu, H.: Deep learning for light field saliency detection. In: Proceedings of the IEEE International Conference on Computer Vision, October 2019

Wei, Y., et al.: STC: a simple to complex framework for weakly-supervised semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(11), 2314–2320 (2016)

Wu, Z., Su, L., Huang, Q.: Cascaded partial decoder for fast and accurate salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3907–3916 (2019)

Yan, Q., Xu, L., Shi, J., Jia, J.: Hierarchical saliency detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1155–1162 (2013)

Yang, C., Zhang, L., Lu, H., Ruan, X., Yang, M.H.: Saliency detection via graph-based manifold ranking. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3166–3173 (2013)

Yu, F., Koltun, V.: Multi-scale context aggregation by dilated convolutions. In: Bengio, Y., LeCun, Y. (eds.) International Conference on Learning Representations (2016). http://arxiv.org/abs/1511.07122

Zhao, J.X., Cao, Y., Fan, D.P., Cheng, M.M., Li, X.Y., Zhang, L.: Contrast prior and fluid pyramid integration for RGBD salient object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 3927–3936 (2019)

Zhao, J.X., Liu, J.J., Fan, D.P., Cao, Y., Yang, J., Cheng, M.M.: EGNet: edge guidance network for salient object detection. In: Proceedings of the IEEE International Conference on Computer Vision, October 2019

Zhao, X., Pang, Y., Zhang, L., Lu, H., Zhang, L.: Suppress and balance: A simple gated network for salient object detection. In: Proceedings of European Conference on Computer Vision (2020)

Zhu, C., Li, G.: A three-pathway psychobiological framework of salient object detection using stereoscopic technology. In: International Conference on Computer Vision Workshops, pp. 3008–3014 (2017)

Zhu, C., Li, G., Wang, W., Wang, R.: An innovative salient object detection using center-dark channel prior. In: International Conference on Computer Vision Workshops, pp. 1509–1515 (2017)

Acknowledgements

This work was supported in part by the National Key R&D Program of China #2018AAA0102003, National Natural Science Foundation of China #61876202, #61725202, #61751212 and #61829102, the Dalian Science and Technology Innovation Foundation #2019J12GX039, and the Fundamental Research Funds for the Central Universities #DUT20ZD212.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Pang, Y., Zhang, L., Zhao, X., Lu, H. (2020). Hierarchical Dynamic Filtering Network for RGB-D Salient Object Detection. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12370. Springer, Cham. https://doi.org/10.1007/978-3-030-58595-2_15

Download citation

DOI: https://doi.org/10.1007/978-3-030-58595-2_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58594-5

Online ISBN: 978-3-030-58595-2

eBook Packages: Computer ScienceComputer Science (R0)