Abstract

Deep neural networks with a massive number of layers have made a remarkable breakthrough on single image super-resolution (SR), but sacrifice computation complexity and memory storage. To address this problem, we focus on the lightweight models for fast and accurate image SR. Due to the frequent use of residual block (RB) in SR models, we pursue an economical structure to adaptively combine RBs. Drawing lessons from lattice filter bank, we design the lattice block (LB) in which two butterfly structures are applied to combine two RBs. LB has the potential of various linear combinations of two RBs. Each case of LB depends on the combination coefficients which are determined by the attention mechanism. LB favors the lightweight SR model with the reduction of about half amount of the parameters while keeping the similar SR performance. Moreover, we propose a lightweight SR model, LatticeNet, which uses series connection of LBs and the backward feature fusion. Extensive experiments demonstrate that our proposal can achieve superior accuracy on four available benchmark datasets against other state-of-the-art methods, while maintaining relatively low computation and memory requirements.

X. Luo and Y. Xie—Equal contribution.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Single image super-resolution (SISR) pursues to recover a high-resolution (HR) image from its degraded low-resolution (LR) counterpart. The arise of convolutional neural networks, accompanying with the residual learning [12], have paved the way for the development of SISR. With the massive number of stacked residual blocks (RBs), the existing deep SR models [21, 25, 35] achieve great breakthrough in accuracy. However, they cannot be easily utilized to real applications for the high computational complexity and memory storage.

To reduce model parameters, most existing works still focus on the architecture design, such as pyramid network [20], the recursive operation with weight sharing [2, 5], channel grouping [16, 17] and neural architecture search [6]. As we know, RB as a basic unit is often utilized in many SR methods. Therefore, we aim to explore how to make a lightweight model in the view of RBs.

Inspired by the lattice filter bank [22] which is the physical realization of Fast Fourier Transformation with the butterfly structure, we design the lattice block (LB) with two butterfly structures, each of which accompanies with a RB. LB is an economical structure with the potential of various linear combination patterns of two RBs, which can help to expand representation space for achieving a more powerful network. The series connection of two RBs is only a special case of LB. Moreover, in order to obtain the appropriate combination of RBs for SR, the connection weights of pair-wise RBs in LB, named combination coefficients, are learned with the attention mechanism for information reweighting rather than from scratch.

Based on LB, we build a lightweight SR network named LatticeNet. Compared with the recursive operation [5] and channel grouping [16], LB paves a new way to a better architectural design of RB combination. Besides, a feature fusion module is used to integrate multiple hierarchical features from different receptive fields in a backward concatenation strategy, which helps to mine feature correlations of intermediate layers. Unlike the latest work IMDN [16], which uses channel split similar to DenseNet for feature learning, LB is a more general unit and can be used in the previous SR models to replace RBs, which leads to a lightweight SR model. LatticeNet is superior to the state-of-the-art lightweight SR models as shown in Fig. 1. To summarize, the main contributions are:

-

The lattice block (LB) is elaborately designed based on the lattice filter bank with two butterfly structure. With the help of this structure, the network representation capability can be significantly expanded through diverse combinational patterns of residual blocks (RBs).

-

LB is adaptively combined by the learnable combination coefficients of the RBs with the attention mechanism, which upweights the important channels of feature maps to obtain the better SR performance.

-

LB favors lightweight model design. Based on the novel block, we build a lightweight SR network dubbed LatticeNet with the backward fusion strategy for extracting hierarchical contextual information.

-

LB leads to the reduction of parameters by about half in the baseline SR models while keeping the similar SR performance if RBs are replaced by LBs. LatticeNet achieves the superior performance on several SR benchmark datasets against the state-of-the-art methods while maintaining relatively lower model size and computational complexity.

2 Related Work

2.1 Deep SR Models

Numerous deep SR models have been proposed and achieved promising performance. SRCNN [8] is the preliminary work for applying a three-layer convolutional neural network to the SR task. VDSR [18] and MemNet [30] employ skip connections for learning the residual information. RCAN [35] proposes a deep residual network for SR with the residual-in-residual structure embedded with the channel-wise attention mechanism. SAN [7] proposes a second-order attention SR network for the purpose of powerful feature expression and correlation learning. SRFBN [24] proposes a feedback mechanism to address the feedback connections and generate effective high-level feature representations. CFSNet [31] adaptively learns the coupling coefficients from different layers and feature channels for finer controlling of the recovered image. EBRN [33] proposes an incremental recovering process for texture SR. Although these deep SR models can make significant performance quantitatively and qualitatively, they are highly cost in memory storage and computational complexity.

2.2 Lightweight SR Models

Lightweight models have attracted widespread attention for saving computing resources. They can be approximately divided into three classes: the architectural design related methods [17, 29], the knowledge distillation based methods [11], and the neural architecture search based methods [6]. The first kind of lightweight SR methods mainly focus on recursive operation and channel splitting. DRCN [19] first applies the recursive neural network to SR. DRRN [29] adopts a deeper network with the recursive operation. CARN [2] utilizes a recursive cascading mechanism for learning multi-level feature representations. BSRN [5] employs a block state-based recursive network and performs well in quality measures and speed. IDN [17] utilizes group convolution and combines the local long and short-path features effectively. IMDN [16] introduces information multi-distillation blocks to enlarge receptive field for extracting hierarchical features. Recently, knowledge distillation is used to learn a lightweight student SR network from a deep and powerful teacher network in [11]. Besides, FALSR [6] applies neural architecture search to SR and performs excellently. Although the researches of lightweight SR models have made great progress, it is still in the primary stage and more discussions are required.

2.3 Attention Mechanism

Nowadays, the attention mechanism emerges in numerous deep neural networks. SENet [14] firstly proposes a lightweight module to treat channel-wise features differently according to the respective weight responses. The attention module is introduced for low-level image restoration in [23, 35]. It has also been implemented on many other tasks. DANet [10] introduces two parallel attention modules to model the semantic interdependencies in spatial and channel dimensions respectively for scene segmentation. CBAM [32] also infers attention maps along two separate dimensions including channel and spatial. Due to the effectiveness of attention models, we also embed the attention mechanism into the lattice block to combine the RBs adaptively.

3 Proposed Method

In this section, we first present our lattice block based on the lattice filter. The lattice block includes two components: the topological structure and the connection weights. The former is a butterfly structure, and the latter is computed by using the attention mechanism. Then, we describe the overall network architecture. Next, the loss function is defined to optimize the model. Finally, we discuss the differences between the proposed method and its related works.

3.1 From Lattice Filter to Lattice Block

Suppose there are two signals x and y, the linear combination O between them has the following types: 1) O = ax + y; 2) O = x + by; 3) O = ax + by, where a, b denote different weights. Generally, the three formulations are equivalent. The first two types are similar to the form of the identity mapping residual learning [13]. Therefore, we consider to employ such combination.

Lattice Filter. The structure of lattice filter is a variant of the decimation-in-time butterfly operation of FFT, which decomposes the input signal to multi-order representations. Figure 2(a) shows the basic unit of the standard lattice structure for a 2-channel filter bank, and the relationship between the input and output is formalized in Eq. (1) and Eq. (2). Here, z represents the variable of the z-transformation. \(z^{-1}\) corresponds to the delay of one unit of the signal in the time domain. The high-order components \(P_{i}(z)\) and \(Q_{i}(z)\) can be synthesized from low-order inputs \(P_{i-1}(z)\) and \(Q_{i-1}(z)\) by a crossed way. \(a_{i}\) denotes the coefficient which defines the combination between two components. It can achieve high-speed parallel processing of FFT for the modular structure [22].

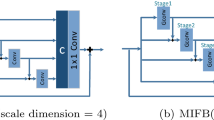

Lattice Block. Inspired by the lattice filter bank, we design the lattice block (LB) which contains two such butterfly structures with a RB per butterfly structure, as shown in Fig. 2(b). The butterfly structure favors multiple combination patterns of RBs. Thus, LB is an economical structure with the potential of various combinations of two RBs. In detail, a collection of features maps \(\mathcal {X}\) is fed into in the lower branch which contains three convolutional layers with a Leaky Rectified Linear Unit (LReLU) activation function per layer. The nonlinear function implicitly induced by all these operators is denoted as \(H(\cdot )\). Then, the first combination between \(\mathcal {X}\) and \(H(\mathcal {X})\) can be formulated as

where \(A_{i-1}\) and \(B_{i-1}\) are two vectors of combination coefficients, which are adaptively computed according to the responses of features (i.e., \(H(\mathcal {X})\), \(\mathcal {X}\)) for information reweighting. Considering a convolutional layer contains multiple channels, each channel can be viewed as a signal. Therefore, the combination coefficients \(A_{i-1}\) and \(B_{i-1}\) are vectors, whose length is equal to the number of feature maps.

Next, \(P_{i-1}(\mathcal {X})\) is fed into in the upper branch to go through the same convolutional structure as \(H(\cdot )\) defined by \(G(\cdot )\). Then, the second combination of \(P_{i}(\mathcal {X})\) and \(Q_{i}(\mathcal {X})\) can be formulated as

where \(A_{i}\) and \(B_{i}\) are also the combination coefficients corresponding to \(Q_{i-1}(\mathcal {X})\) and \(G(P_{i-1}(\mathcal {X}))\). After that, \(P_{i}(\mathcal {X})\) and \(Q_{i}(\mathcal {X})\) are concatenated in channels and followed by a \(1\times 1\) convolution for channel alignment.

Potential Combinations in LB. Here, we mainly analyse the multiple combinations of RBs in LB. Given input feature maps \(\mathcal {X}\), the output \(\mathcal {Y}\) before the \(1\times 1\) convolution of LB is denoted as

In a unrolled view, an LB contains the potential of multiple combination patterns corresponding to different combination coefficients. Note that 1 and 0 denote the vectors whose elements are 1 and 0, respectively. Assuming the following special cases:

-

(1)

\(\varvec{A_{i-1} = B_{i-1} = A_{i} = B_{i}}=\mathbf {1}.\) \(P_{i-1}=Q_{i-1}=\mathcal {X}+H(\mathcal {X})\), \(P_{i}=Q_{i}=P_{i-1}+G(P_{i-1})\). The two branches are identical, so that the LB can be simplified to series connection of two RBs as shown in Fig. 3(a).

-

(2)

\(\varvec{A_{i-1} \ne B_{i-1}, A_{i} = B_{i}}=\mathbf {1}.\) \(P_{i-1}=\mathcal {X}+A_{i-1}H(\mathcal {X})\), \(Q_{i-1}=H(\mathcal {X})+B_{i-1}\mathcal {X}\), and \(P_{i-1}\) is not equal to \(Q_{i-1}\). \(P_{i}=G(P_{i-1})+Q_{i-1}\), \(Q_{i}=Q_{i-1}+G(P_{i-1})\). Note that \(P_{i}\) is equal to \(Q_{i}\). As shown in Fig. 3(b), the LB is degraded to two parallel scaling RBs with weights sharing followed by a RB.

-

(3)

\(\varvec{A_{i-1} = B_{i-1}=\mathbf {1}, A_{i} \ne B_{i}}.\) \(P_{i-1}=Q_{i-1}=\mathcal {X}+H(\mathcal {X})\), \(P_{i}=G(P_{i-1})+A_{i}Q_{i-1}\), \(Q_{i}=Q_{i-1}+B_{i}G(P_{i-1})\). Note that \(P_{i}\) is not equal to \(Q_{i}\). As shown in Fig. 3(c), LB is degraded to a RB followed by two parallel scaling RBs with weights sharing.

-

(4)

\(\varvec{A_{i-1} \ne B_{i-1}, A_{i} \ne B_{i}}.\) \(P_{i-1} \ne Q_{i-1}\), \(P_{i} \ne Q_{i}\). As shown in Fig. 3(d), the LB is equivalent to two parallel scaling RBs with weights sharing followed by two parallel scaling RBs with weights sharing.

There still exist other combination patterns. For example, when all the coefficients are equal to \(\mathbf {0}\), the LB is equivalent to two parallel stacks of convolution layers. These special cases can be approximately achieved by normalized coefficients. Therefore, the proposed LB can be viewed as diverse combination patterns of RBs to expand the representation space with the learnable combination coefficients. The diverse structures of LB favor lightweight model design.

Combination Coefficient Learning. The vectors \(A_{i}\) and \(B_{i}\) of combination coefficients actually play the role of the connection weights in LB, as shown in Fig. 2(b). As mentioned above, the special vectors of combination coefficients are related to the special cases of LB in the unrolled view. Rather than brute-force searching all the potential structures in LB, we adopt the attention mechanism to compute the combination coefficients. Inspired by the success of SENet [14] and IMDN [16], we utilize two statistics to compute the combination coefficients: the mean and the standard deviation of a feature map.

As Fig. 4 shows, given a group of feature maps, in the upper branch, we get the mean value of each feature map by global average pooling, and in the lower branch, we compute the standard deviation of each feature map. After that, the statistic vector in each branch is passed to two \(1\times 1\) convolution layers, each of which is followed by the ReLU activation layer and Sigmoid activation layer, respectively. Finally, the output vectors of two branches are averaged as the combination coefficients.

3.2 Network Architecture

Based on the lattice block, we propose a lightweight SR network dubbed LatticeNet, which contains four components: the shallow feature extraction, multiple cascaded lattice blocks (LB), the backward fusion module (BFM), and the upsampling module as shown in Fig. 5. Here, the input LR images and the output SR images are denoted as X and Y, respectively.

Firstly, we obtain the shallow features \(F_{0}\) by applying two cascaded \(3\times 3\) convolutional layers without activation to the LR input X:

where \(R_{0}(\cdot )\) denotes the shallow convolution operation.

Several LBs are followed for deep feature interaction and mapping, which can be formulated as

where \(R_{k}(\cdot )\) indicates mapping function of the k-th LB, \(F_{k-1}\) represents the features from the previous adjacent LB, and M is the total number of LBs.

BFM is used to integrate features from all LBs for extracting hierarchical frequency information:

where \(R_{f}(\cdot )\), \(F_{f}\) are the backward feature fusion function and the fused features, respectively. Finally, the upsampling layer with sub-pixel convolution [27] is utilized for generating the SR image Y:

where \(R_{up}(\cdot )\) denotes the upsampling function.

3.3 Backward Fusion Module

The hierarchical information is very important for SR. Thus, we fuse features from multiple layers to obtain more contextual information. In this paper, we adopt a backward sequential concatenation strategy for feature fusion of different receptive fields like [28]. The core operation of our feature fusion module is the \(1\times 1\) convolution followed by the ReLU activation function (omitted in Fig. 5) for reducing feature dimension by half. Here, we denote the output of the i-th LB as \(F_{i}\). As shown in Fig. 5, there are four LBs. The features of each LB are firstly convolved by \(1\times 1\) kernel which results in dimension reduction by half. Let \(F_{i}\) \((i=1,2,3,4)\) denote the obtained features with the index increasing from the left to right in Fig. 5. The fusion operation is formulated as

where \(Concat(F_{i}, H_{i+1})\) denotes the operation that concatenates \(F_{i}\) and \(H_{i+1}\) and Conv() denotes \(1\times 1\) convolution. In detail, the last two adjacent groups of feature maps \(F_{i}\) and \(F_{i-1}\) are concatenated and then convolved with \(1\times 1\) kernel. After that, the obtained feature group \(H_{i}\) is concatenated with \(F_{i-1}\), which follows a \(1\times 1\) convolution, and so forth. The final fused features \(H_{1}\) adding the shallow features \(F_{0}\) are propagated to the upsampling layer to generate SR images. By such a backward sequential concatenation way, the feature fusion module can integrate the features from all the LBs, which helps to extract more hierarchical contextual information.

3.4 Loss Function

Mean Absolute Error (MAE) and Mean Squared Error (MSE) loss are the most frequently used loss functions for low-level image restoration tasks. Here, we only adopt MAE loss for measuring the differences between the SR images and the ground truth. Specifically, the loss function is

where \(x_{i}^{lr}\), \(x_{i}^{gt}\) denote the i-th LR patch and the corresponding ground truth. N is the total number of training samples. \(R(\cdot )\) represents the output of LatticeNet.

3.5 Discussions

In this section, we discuss the differences between the proposed model and the related works, e.g., SRResNet [21] and RDN [36].

LatticeNet vs. Residual Network. Residual blocks are popularly used in for image restoration. SRResNet [21] and RCAN are two typical residual models for SISR. The former adopts cascaded RBs as feature mapping and the later proposes a residual-in-residual structure with channel attention. In LatticeNet, the structure of two cascaded RBs is just a special case of LB. The proposed block can represent more diverse structure patterns and can be embedded in any SR networks with RBs as basic block.

LatticeNet vs. RDN. In RDN [36], the feature fusion adopts \(1\times 1\) convolution for concatenating previous features directly. In LatticeNet, BFM is used to combine those features by gradual concatenation approach.

The proposed lattice structure is not just another kind of residual and dense connection. The insight behind it is a kind of network architecture search for finding reasonable combinations of RBs to make a lightweight SR model. Rather than exhausted search, LB is a butterfly structure borrowed from signal processing and never investigated in SR before.

4 Experiments

4.1 Datasets

The model is trained with a high-quality dataset DIV2K [1], which is widely used for image SR task. It includes 800 training images and 100 validation images with rich textures. The LR images are obtained in the same way as [2, 35]. Besides, we evaluate our model on four public SR benchmark datasets: Set5 [4], Set14 [34], B100 [3], and Urban100 [15].

4.2 Implementation Details

During training, \(48\times 48\) RGB image patches are input to the network. The training data is augmented by random flipping and rotation. We use Adam optimizer with \(\beta _{1}=0.9\), \(\beta _{2}=0.999\) to train the model. The mini-batch size is 16. The learning rate is initialized as \(2e-4\) and reduced by half per 200 epochs for 1000 epochs totally. The kernel size of all convolutional layers is set to \(3\times 3\) except for \(1\times 1\) convolutional layer. The number of convolutional kernels for each non-linear function in the LB is set to 48, 48 and 64, respectively. We use PyTorch to implement our model with a GTX 1080 GPU. It takes about one day for training LatticeNet.

We use objective criteria, i.e., peak signal-to-noise ratio (PSNR), structural similarity index (SSIM) to evaluate our model performance. The two metrics are both calculated on the Y channel of the YCbCr space as adopted in the previous work [35]. Besides, we use Mult-Adds to evaluate the computational complexity of a model, which denotes the number of composite multiply-accumulate operations for a single image. Similar to [2], we also assume the size of a query image is \(1280\times 720\) to calculate Mult-Adds.

4.3 The Contribution of Lattice Block for Lightweight

As mentioned in Sect. 3.5, we embed LB in large models for validating its effectiveness. Every four consecutive RBs are replaced with an LB in SRResNet [21] and RCAN [35] (called SRResNet_LB and RCAN_LB). For a fair comparison, the combination coefficients are calculated only by global average pooling for the same configuration with RCAN.

As Table 1 shows, SRResNet_LB achieves the comparable performance on all the datasets against SRResNet* with 0.96M parameters and the PSNR value even outperforms SRResNet* by \(+0.02\) dB on Set5. Moreover, RCAN_LB also obtains the similar PSNR values against RCAN with only 8.6M parameters, which has approximately half amount of parameters than RCAN.

4.4 Ablation Analysis

In this subsection, we discuss LatticeNet and first analyse the effects of lattice block (LB), backward fusion module (BFM) and combination coefficients (CC). The baseline only contains four basic blocks, each of which only contains two cascaded RBs with three convolution layers (the number of kernels is 48, 48, 64, respectively) without BFM, and other components are similar to LatticeNet. Then, we discuss the performance of several combination patterns of pair-wise RBs. Finally, we give how the number of LBs affects SR performance and the related comparison in running time.

Lattice Block (LB). To demonstrate the effect of LB, we replace the basic block in the baseline with LB. As Table 2 shows, the PSNR values of the baseline on Set5 and Set14 for \(2\times \) SR are the lowest. After adding the butterfly structure of LB, the PSNR values are increased by \(+0.12\) dB, \(+0.16\) dB on Set5 and Set14 with only \(\sim \)50K parameters increased as the column “1st” illustrates. Besides, the baseline with LB gains 0.12 dB in Set5 while the baseline with BFM only gains 0.04 dB, which shows that LB contributes more to the SR performance than BFM. It indicates that LB improves the expression of the network through the butterfly combination patterns.

Backward Fusion Module (BFM). To evaluate the effect of the BFM, we add BFM to the baseline network. As the “3rd” column shows in Table 2, the PSNR values with BFM are both increased by \(+0.04\) dB on Set5 and Set14 with only \(\sim \)12K parameters increased upon the baseline. Besides, we also compare BFM with \(1\times 1\) convolution fusion that is used in RDN [36] for fusing all features from all LBs directly. It is observed that BFM is superior to the fusion strategy using \(1\times 1\) convolution with less parameters. Moreover, BFM is similar to FPN [26] which is proven to be effective in object detection. The baseline combined with BFM and LB achieves better SR performance whose PSNR is increased from 37.84 dB to 38.01 dB on Set5. The experiments show the effectiveness of the lightweight fusion module.

Combination Coefficients (CC). To fully employ the statistical characteristics of data, we compute combination coefficients by integrating mean pooling (MP) and standard deviation pooling (SDP). The experimental results show that a little gain can be achieved on four benchmark datasets if we put them together as illustrated in Table 3. Note that the combination coefficients in Table 2 adopt the mean and standard deviation. It shows that the attention mechanism for low-level vision tasks can be further investigated.

Comparisons of Different RB Structures. Considering that the reason for the PSNR improvement of LB may be the increase of parameters, we compare several RB structures with LB for the amount of computation and parameters. We place these structures as basic block in LatticeNet, which are defined as:

-

(1)

2RBs: two cascaded RBs, which has slightly fewer parameters and computation amount than an LB structure.

-

(2)

2RBs+R1: two cascaded RBs, each of which goes through a recursive operation. It has slightly fewer parameters and approximately twice computation amount than an LB structure.

-

(3)

2RBs+R2: two cascaded RBs with once recursive operation, which has the same parameters and computation amount with 2RBs+R1.

-

(4)

4RBs: four cascaded RBs, which has approximately twice parameters and computation amount than an LB.

The quantitative evaluation of these structures in PSNR for \(2\times \) SR is illustrated in Fig. 6. The experimental results show an LB is not only superior to 2RBs, but also better than 2RBs+R1 and 2RBs+R2. Moreover, LB can even obtain comparable performance with 4RBs, which has near twice parameters than an LB. Therefore, it can demonstrate that the performance improvement really comes from the lattice structure and LB favors lightweight model design.

Number of Lattice Blocks. For better balancing the model size, performance and execution time, we compare the proposed model with different number of LBs, i.e., 2, 4, 8, 10. As shown in Table 4, with the number of LBs increasing, the SR performance can be improved, accompanying with parameters and execution time rising up. Therefore, we use 4 LBs in our experiments. Besides, the average running time comparison in our experimental environment between IMDN [16] and LatticeNet is shown in Table 5, where the testing code is given by IMDN. It shows that the differences of running time are negligible although the parameter of LatticeNet is slightly larger than IMDN.

4.5 Comparisons with the State-of-the-arts

In this section, we conduct extensive experiments on four publicly available SR benchmark datasets and compare with 12 state-of-the-art lightweight SR models: SRCNN [8], FSRCNN [9], VDSR [18], DRCN [19], LapSRN [20], DRRN [29], MemNet [30], IDN* [17]Footnote 1, CARN [2], BSRN [5], FALSR [6], and IMDN [16].

The quantitative comparisons for 2\(\times \), 3\(\times \), and 4\(\times \) SR are shown in Table 6. When compared with all given methods, our LatticeNet performs the best on the four datasets. Meanwhile, we also give the network parameters for all the comparison methods. Our model is at the medium level of the model complexity with less 800K parameters and the performance is comparable with CARN and IMDN. Besides, Mult-Adds of LatticeNet is also relatively lower. It demonstrates that our method is superior to other SR methods in comprehensive performance.

The visual comparisons on scale 4\(\times \) on B100 and Urban100 are depicted in Fig. 7. For Image “148026” in B100, our method can recover the correct texture of the wooden bridge well than other methods. Besides, for image “img044” and “img067” in Urban100 dataset, we can also observe that our results are more favorable and can recover more details. Though our method achieves comparable performance against IMDN in PSNR and SSIM, our method is obviously superior to IMDN with a large margin in the visual effect.

5 Conclusions

In this paper, we propose the lattice block which is an economical structure favoring the lightweight model design. It has the potential of multiple combinations of two RBs benefited by the combination coefficients. The combination coefficients of the lattice block are learned with the attention mechanism for better SR performance. It can embed in any SR networks using RBs as basic block. Based on the lattice block, we also build LatticeNet which is a lightweight SR model. Moreover, we adopt a backward sequential concatenation strategy to integrate more contextual information from different receptive fields. Experimental results on several benchmark datasets also demonstrate that our method can achieve superior performance with moderate parameters.

Notes

- 1.

IDN* refers to the results given in https://github.com/Zheng222/IDN-tensorflow, which is retrained on DIV2K dataset.

References

Agustsson, E., Timofte, R.: NTIRE 2017 challenge on single image super-resolution: dataset and study. In: CVPRW (2017)

Ahn, N., Kang, B., Sohn, K.A.: Fast, accurate, and lightweight super-resolution with cascading residual network. In: ECCV (2018)

Arbelaez, P., Maire, M., Fowlkes, C., Malik, J.: Contour detection and hierarchical image segmentation. TPAMI 33, 898–916 (2011)

Bevilacqua, M., Roumy, A., Guillemot, C., Alberi-Morel, M.L.: Low-Complexity Single-Image Super-resolution Based on Nonnegative Neighbor Embedding. BMVA Press (2012)

Choi, J.H., Kim, J.H., Cheon, M., Lee, J.S.: Lightweight and efficient image super-resolution with block state-based recursive network. arXiv:1811.12546 (2018)

Chu, X., Zhang, B., Ma, H., Xu, R., Li, J., Li, Q.: Fast, accurate and lightweight super-resolution with neural architecture search. arXiv:1901.07261 (2019)

Dai, T., Cai, J., Zhang, Y., Xia, S.T., Zhang, L.: Second-order attention network for single image super-resolution. In: CVPR (2019)

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. TPAMI 38, 295–307 (2016)

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 391–407. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_25

Fu, J., Liu, J., Tian, H., Fang, Z., Lu, H.: Dual attention network for scene segmentation. arXiv:1809.02983 (2018)

Gao, Q., Zhao, Y., Li, G., Tong, T.: Image super-resolution using knowledge distillation. In: Jawahar, C.V., Li, H., Mori, G., Schindler, K. (eds.) ACCV 2018. LNCS, vol. 11362, pp. 527–541. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-20890-5_34

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. arXiv:1603.05027 (2016)

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: CVPR (2018)

Huang, J.B., Singh, A., Ahuja, N.: Single image super-resolution from transformed self-exemplars. In: CVPR (2015)

Hui, Z., Gao, X., Yang, Y., Wang, X.: Lightweight image super-resolution with information multi-distillation network. In: ACMMM (2019)

Hui, Z., Wang, X., Gao, X.: Fast and accurate single image super-resolution via information distillation network. In: CVPR (2018)

Kim, J., Kwon Lee, J., Mu Lee, K.: Accurate image super-resolution using very deep convolutional networks. In: CVPR (2016)

Kim, J., Kwon Lee, J., Mu Lee, K.: Deeply-recursive convolutional network for image super-resolution. In: CVPR (2016)

Lai, W.S., Huang, J.B., Ahuja, N., Yang, M.H.: Deep Laplacian pyramid networks for fast and accurate super-resolution. In: CVPR (2017)

Ledig, C., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: CVPR (2017)

Li, B., Gao, X.: Lattice structure for regular linear phase paraunitary filter bank with odd decimation factor. IEEE Sig. Process. Lett. 21, 14–17 (2014)

Li, X., Wu, J., Lin, Z., Liu, H., Zha, H.: Recurrent squeeze-and-excitation context aggregation net for single image deraining. In: ECCV (2018)

Li, Z., Yang, J., Liu, Z., Yang, X., Wu, W.: Feedback network for image super-resolution. In: CVPR (2019)

Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: CVPRW (2017)

Lin, T.Y., Dollr, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection (2017)

Shi, W., et al.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: CVPR (2016)

Shrivastava, A., Sukthankar, R., Malik, J., Gupta, A.: Beyond skip connections: top-down modulation for object detection. arXiv:1612.06851 (2016)

Tai, Y., Yang, J., Liu, X.: Image super-resolution via deep recursive residual network. In: CVPR (2017)

Tai, Y., Yang, J., Liu, X., Xu, C.: MemNet: a persistent memory network for image restoration. In: ICCV (2017)

Wang, W., Guo, R., Tian, Y., Yang, W.: CFSNet: toward a controllable feature space for image restoration. In: ICCV (2019)

Woo, S., Park, J., Lee, J., Kweon, I.: CBAM: convolutional block attention module. arXiv:1807.06521

Qiu, Y., Wang, R., Tao, D., Cheng, J.: Embedded block residual network: a recursive restoration model for single-image super-resolution. In: ICCV (2019)

Zeyde, R., Elad, M., Protter, M.: On single image scale-up using sparse-representations. In: International Conference on Curves and Surfaces (2010)

Zhang, Y., Li, K., Li, K., Wang, L., Zhong, B., Fu, Y.: Image super-resolution using very deep residual channel attention networks. In: ECCV (2018)

Zhang, Y., Tian, Y., Kong, Y., Zhong, B., Fu, Y.: Residual dense network for image super-resolution. In: CVPR (2018)

Acknowledgment

This work is supported by the National Natural Science Foundation of China under Grant 61876161, Grant 61772524, Grant U1065252 and partly by the Beijing Municipal Natural Science Foundation under Grant 4182067.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Luo, X., Xie, Y., Zhang, Y., Qu, Y., Li, C., Fu, Y. (2020). LatticeNet: Towards Lightweight Image Super-Resolution with Lattice Block. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12367. Springer, Cham. https://doi.org/10.1007/978-3-030-58542-6_17

Download citation

DOI: https://doi.org/10.1007/978-3-030-58542-6_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58541-9

Online ISBN: 978-3-030-58542-6

eBook Packages: Computer ScienceComputer Science (R0)