Abstract

Over the last three years, the most successful systems at the Video Browser Showdown have employed effective retrieval models in which raw video data are automatically preprocessed in advance to extract semantic or low-level features of selected frames or shots. This enables users to express their search intents as keywords, sketches, example queries, or a combination thereof. In this paper, we present new extensions to our interactive video retrieval system VIRET, which won the Video Browser Showdown 2018 and placed second at both the Video Browser Showdown 2019 and the Lifelog Search Challenge 2019. The new features comprise updates of the retrieval models as well as interface modifications that help users specify queries by means of informative visualizations.

1 Introduction

State-of-the-art interactive video retrieval systems [1, 2, 14, 16, 17, 20] tackle difficult video retrieval tasks by combining information retrieval [3], deep learning [8], and interactive search [19, 21] approaches. Such systems are compared annually at the Video Browser Showdown (VBS) [5, 11, 12] evaluation campaign, where participating teams compete concurrently in one room, each trying to solve a presented video retrieval task within a given time limit. The competition tasks are revealed one by one during the competition, while the video dataset (Note 1) is provided to the teams in advance. This paper presents new features of the VIRET tool prototype, which regularly participates in interactive video and lifelog search competitions [9, 12].

VIRET [14, 15] is a frame-based interactive video retrieval framework that relies mostly on three basic image retrieval approaches for searching a set of selected video frames: keyword search based on automatic annotation, color sketching based on position-color signatures, and query by example image relying on deep features. The basic approaches can be further combined into more complex multi-modal and/or temporal queries, which turned out to be an important feature of the VIRET tool at VBS 2019. To let users provide information about the location of frequent semantic concepts, the sketch canvas also supports the construction of filters for faces and displayed text. In addition, several other filters are supported, based either on content (e.g., a black-and-white filter) or on knowledge of video/shot boundaries (e.g., show only the top-ranked frames of each video/shot).
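The idea of a temporal query built from two basic queries can be illustrated with a minimal sketch. It assumes, hypothetically, that each basic query yields a score per frame keyed by `(video_id, frame_number)`, and that a frame matching the first query is rewarded by the best-scoring frame of the second query appearing shortly after it in the same video. The product fusion and the `window` parameter are illustrative assumptions, not the documented VIRET formula.

```python
from typing import Dict, List, Tuple

# Hypothetical frame identifier: (video_id, frame_number).
Frame = Tuple[str, int]


def temporal_scores(
    score_a: Dict[Frame, float],
    score_b: Dict[Frame, float],
    window: int,
) -> Dict[Frame, float]:
    """Score each frame of query A by its own score times the best score of a
    query-B frame that follows it within `window` frames in the same video."""
    # Group query-B scores by video so each lookup scans only one video.
    b_by_video: Dict[str, List[Tuple[int, float]]] = {}
    for (video_id, number), score in score_b.items():
        b_by_video.setdefault(video_id, []).append((number, score))

    combined: Dict[Frame, float] = {}
    for (video_id, number), sa in score_a.items():
        later = [
            sb
            for nb, sb in b_by_video.get(video_id, [])
            if number < nb <= number + window
        ]
        # No follow-up frame within the window means no temporal support.
        combined[(video_id, number)] = sa * max(later, default=0.0)
    return combined


scores = temporal_scores(
    {("v1", 10): 0.9, ("v1", 50): 0.8, ("v2", 10): 0.5},
    {("v1", 12): 0.7, ("v1", 100): 0.9, ("v2", 11): 0.6},
    window=5,
)
ranking = sorted(scores, key=scores.get, reverse=True)
```

The linear scan per video keeps the sketch short; a larger collection would call for a per-video index sorted by frame number.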

The interface is divided into two blocks, one for query formulation and one for inspecting the top-ranked results and their context. Frames are displayed as small extracted thumbnails. All entered queries remain visible, as do other query settings such as the sorting model or the filters limiting the number of returned frames for each basic query model. Once a query is issued, users can browse the temporal context of each displayed frame or view a video summary or an image map dynamically computed for the top-ranked frames.

Whereas the presented VIRET features were competitive in expert (Note 2) VBS search sessions, the previous version of the VIRET tool prototype did not perform well in novice sessions. Specifically, while expert VIRET users solved all ten visual known-item search (KIS) tasks and six out of eight textual KIS tasks (the most of all participating teams), novice VIRET users solved just two out of five evaluated visual KIS tasks (a subset of the expert tasks). According to our analysis, in two of the unsolved novice tasks the searched frame appeared on the first page but was overlooked. In addition, novice users struggled with keyword search because they did not know the set of labels supported by the automatic annotation. Hence, for the next installment of the Video Browser Showdown, we focus both on updates of the retrieval models and on several modifications of the user interface.

2 New VIRET Features for VBS 2020

This section summarizes the updates we are considering for the employed retrieval models and the interface.

2.1 Retrieval Models

Since the basic retrieval models and their multi-modal temporal combination used by VIRET often proved effective at bringing searched frames to the first page, we plan to keep the main querying scheme. However, several updates are considered for VBS 2020.

First, the automatic annotation process, which employs a retrained NASNet deep classification network [22], is modified to produce different score values for the network output vector (i.e., the scores of assigned labels). Instead of the softmax used for training, the feature extraction process now uses another form of normalization of the network output scores, which enables more effective retrieval with queries comprising a combination of multiple class labels. In the normalized vectors, all zero scores are further replaced with a small constant. We also plan to investigate the performance benefits of additional annotation sources (e.g., object detection networks).
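Why the choice of normalization and the zero replacement matter for multi-label queries can be sketched as follows. The paper does not specify the normalization; this sketch assumes, purely for illustration, min-max scaling of the raw network outputs, a hypothetical constant `EPSILON`, and a query that combines labels by multiplying their scores. Under such a product, a single zero score would eliminate a frame entirely, which the zero replacement prevents.

```python
import math
from typing import List, Sequence

# Hypothetical small constant that replaces zero scores.
EPSILON = 1e-6


def softmax(logits: Sequence[float]) -> List[float]:
    """Training-time normalization: scores sum to 1, so probability mass
    concentrates on the top labels."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def minmax_scores(logits: Sequence[float], eps: float = EPSILON) -> List[float]:
    """Assumed alternative: rescale raw outputs to [0, 1] without forcing
    labels to compete for a fixed mass, then replace zeros with eps."""
    lo, hi = min(logits), max(logits)
    if hi == lo:
        return [eps] * len(logits)  # a flat vector carries no evidence
    scaled = [(x - lo) / (hi - lo) for x in logits]
    return [s if s > 0.0 else eps for s in scaled]


def conjunctive_score(scores: Sequence[float], query_labels: Sequence[int]) -> float:
    """Hypothetical multi-label query: product of the queried label scores."""
    result = 1.0
    for label in query_labels:
        result *= scores[label]
    return result


logits = [2.0, 1.0, -1.0]  # toy raw outputs for labels 0, 1, 2
probs = softmax(logits)
norm = minmax_scores(logits)
```

With these toy values, the two-label query over labels 0 and 1 scores roughly 0.67 under min-max scaling but only about 0.18 under softmax, because softmax makes the labels compete for a fixed total mass.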

Second, keyword search in automatic speech recognition (ASR) annotations (Note 3) performed impressively in the vitrivr tool [17] at the previous VBS event. Since the vitrivr team shared the ASR data with other teams, we plan to integrate the data into the VIRET framework. Specifically, we plan to include a video filter based on the presence of a spoken word or phrase.
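A video filter based on a spoken word or phrase could look roughly like the following minimal sketch. It assumes, hypothetically, that the shared ASR data are available per video as a list of `(start, end, text)` segments; the actual format of the vitrivr data is not described here. Matching is case-insensitive on word tokens, and the filter keeps the relevance order of the ranked frames.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical ASR segment: (start_seconds, end_seconds, transcript_text).
Segment = Tuple[float, float, str]


def tokenize(text: str) -> List[str]:
    """Lower-case word tokens; punctuation is ignored."""
    return re.findall(r"\w+", text.lower())


def videos_with_phrase(asr: Dict[str, List[Segment]], phrase: str) -> Set[str]:
    """Return ids of videos whose transcript contains the phrase as a
    contiguous token sequence (segments are concatenated, so a phrase may
    span a segment boundary)."""
    target = tokenize(phrase)
    if not target:
        return set(asr)  # an empty phrase filters nothing
    n = len(target)
    hits: Set[str] = set()
    for video_id, segments in asr.items():
        tokens = [tok for _, _, text in segments for tok in tokenize(text)]
        if any(tokens[i:i + n] == target for i in range(len(tokens) - n + 1)):
            hits.add(video_id)
    return hits


def filter_ranked_frames(
    ranked: List[Tuple[str, int]], allowed_videos: Set[str]
) -> List[Tuple[str, int]]:
    """Keep the ranked (video_id, frame_number) order, dropping frames from
    videos that fail the speech filter."""
    return [frame for frame in ranked if frame[0] in allowed_videos]


asr = {
    "v1": [(0.0, 2.5, "Welcome to the show!"), (2.5, 5.0, "Today we cook pasta.")],
    "v2": [(0.0, 3.0, "The weather is sunny.")],
}
allowed = videos_with_phrase(asr, "cook pasta")
```

Filtering at the video level, rather than at the segment level, matches the video filter mentioned above; segment timestamps could later restrict the filter to a time window.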

Third, given a set of collected query logs, we plan to investigate and optimize meta-parameters of the retrieval models with respect to the whole set of logged queries. More specifically, we plan to fine-tune the initial settings of the filters limiting the number of top-ranked frames for each model, as well as of the presentation filters. Once an effective setting is found, the corresponding interface controls can be hidden or simplified for novice sessions.
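One way to tune the per-model top-k filters on logged queries is an exhaustive grid search that replays each query and counts how often the searched frame lands on the first page. This is a hypothetical sketch: it assumes each log record stores, for a set of candidate frames, their rank under every basic model and a fused score, together with the id of the searched frame. The real VIRET log format and objective may differ.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

# Hypothetical log record: candidate frames mapped to (per-model ranks, fused
# score), plus the id of the frame the user was searching for.
Candidates = Dict[int, Tuple[Sequence[int], float]]
LoggedQuery = Tuple[Candidates, int]


def target_on_first_page(
    query: LoggedQuery, top_k: Sequence[int], page_size: int
) -> bool:
    """Replay one logged query: keep frames ranked within top_k[m] by every
    model m, re-rank survivors by fused score, check the first page."""
    candidates, target = query
    kept = [
        (score, frame_id)
        for frame_id, (ranks, score) in candidates.items()
        if all(rank <= k for rank, k in zip(ranks, top_k))
    ]
    kept.sort(reverse=True)  # highest fused score first
    return target in {frame_id for _, frame_id in kept[:page_size]}


def grid_search(
    log: List[LoggedQuery], grid: Sequence[Sequence[int]], page_size: int
) -> Tuple[Tuple[int, ...], int]:
    """Return the top-k setting (one value per model) that puts the searched
    frame on the first page for the most logged queries."""
    best: Tuple[int, ...] = ()
    best_hits = -1
    for top_k in product(*grid):
        hits = sum(target_on_first_page(q, top_k, page_size) for q in log)
        if hits > best_hits:  # ties keep the setting enumerated first
            best, best_hits = top_k, hits
    return best, best_hits


log: List[LoggedQuery] = [
    ({1: ((1, 5), 0.6), 2: ((3, 1), 0.9)}, 1),
    ({7: ((2, 3), 0.4), 8: ((5, 1), 0.95)}, 7),
]
best, hits = grid_search(log, grid=[[2, 10], [5, 10]], page_size=1)
```

In this toy log, a tight filter on the first model removes the distracting frames, so the searched frame reaches the first page in both queries. The grid grows exponentially with the number of models, so a coarse grid or a smarter search would be needed in practice.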

Last but not least, we plan to include free-form text search using a variant of the recently introduced W2VV++ model [10], which extends the W2VV model [7] and relies on visual features from deep networks that leverage the whole ImageNet hierarchy [6] to train effective representations [13].
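W2VV-style models map a text query into the visual feature space of the frames, so retrieval reduces to ranking frames by similarity to the predicted vector. The sketch below shows only this ranking step with cosine similarity. The `encode_text` callable and the toy word vectors are hypothetical stand-ins for a trained W2VV++ text encoder, and the three-dimensional features are illustrative only.

```python
import math
from typing import Callable, Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; zero vectors get similarity 0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_frames(
    query: str,
    encode_text: Callable[[str], Sequence[float]],
    frame_features: Dict[int, Sequence[float]],
    top_n: int,
) -> List[int]:
    """Embed the query, then return the ids of the top_n most similar frames."""
    q = encode_text(query)
    ranked = sorted(
        frame_features, key=lambda f: cosine(q, frame_features[f]), reverse=True
    )
    return ranked[:top_n]


# Toy stand-in for a trained text encoder: sum of hypothetical word vectors.
WORD_VECTORS = {"red": [1.0, 0.0, 0.0], "car": [0.0, 1.0, 0.0]}


def toy_encoder(text: str) -> List[float]:
    vec = [0.0, 0.0, 0.0]
    for word in text.lower().split():
        for i, value in enumerate(WORD_VECTORS.get(word, [0.0, 0.0, 0.0])):
            vec[i] += value
    return vec


frames = {1: [1.0, 0.0, 0.0], 2: [0.0, 1.0, 0.0], 3: [0.7, 0.7, 0.0]}
top = rank_frames("red car", toy_encoder, frames, top_n=3)
```

A full scan is used for clarity; with hundreds of thousands of frames an approximate nearest-neighbor index would be the usual choice.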

2.2 User Interface

Since the VIRET tool is designed for frequent query (re)formulation, informative visualizations are important to aid querying and to help bridge the semantic gap. For VBS 2020, we consider the following updates of the VIRET tool prototype interface.

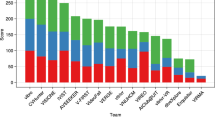

So far, the keyword search component suggested only supported class labels and their descriptions during query formulation. In the new version, we consider automatically showing the top-ranked frames for the suggested labels as well (see Fig. 1).
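Label suggestions with frame previews could work roughly as follows. This is a hypothetical sketch: it assumes prefix matching on label names and a per-label map from frame ids to annotation scores, neither of which is specified in the paper; `max_labels` and `frames_per_label` are illustrative parameters.

```python
import heapq
from typing import Dict, List, Tuple


def suggest(
    prefix: str,
    descriptions: Dict[str, str],
    label_scores: Dict[str, Dict[int, float]],
    max_labels: int = 3,
    frames_per_label: int = 2,
) -> List[Tuple[str, str, List[int]]]:
    """Return (label, description, top frame ids) for labels starting with prefix."""
    p = prefix.lower()
    matches = sorted(label for label in descriptions if label.lower().startswith(p))
    suggestions = []
    for label in matches[:max_labels]:
        per_frame = label_scores.get(label, {})
        # Frames with the highest annotation score for this label.
        top = heapq.nlargest(frames_per_label, per_frame, key=per_frame.__getitem__)
        suggestions.append((label, descriptions[label], top))
    return suggestions


descriptions = {"dog": "domestic dog", "door": "a movable barrier", "car": "motor vehicle"}
label_scores = {"dog": {1: 0.9, 2: 0.1, 3: 0.5}, "door": {2: 0.8}}
result = suggest("do", descriptions, label_scores)
```

Precomputing a per-label list of top frames at indexing time would make the preview instantaneous while the user types.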

Without knowledge of the labels supported by the automatic annotation, novice users in particular may struggle to initialize keyword search. To bridge this gap, the interface was updated to show a few automatically assigned labels with the highest scores for a displayed frame whenever the mouse cursor hovers over the frame. This feedback helps novice users observe and gradually learn how the automatic annotation works. Based on this feedback, users can interactively extend the query expression with labels that did not originally come to mind.
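Selecting the labels shown on hover amounts to a top-n query over the frame's annotation vector. A minimal sketch, assuming the vector is a list of scores aligned with a list of label names and a hypothetical default of five labels; the tooltip format is likewise illustrative.

```python
import heapq
from typing import List, Sequence, Tuple


def top_labels(
    frame_scores: Sequence[float], label_names: Sequence[str], n: int = 5
) -> List[Tuple[str, float]]:
    """Return the n highest-scoring (label, score) pairs, best first."""
    best = heapq.nlargest(n, range(len(frame_scores)), key=frame_scores.__getitem__)
    return [(label_names[i], frame_scores[i]) for i in best]


def tooltip(frame_scores: Sequence[float], label_names: Sequence[str], n: int = 5) -> str:
    """Format the labels as hypothetical hover text."""
    return ", ".join(
        f"{name} ({score:.2f})" for name, score in top_labels(frame_scores, label_names, n)
    )


scores = [0.10, 0.70, 0.30, 0.05]
names = ["cat", "dog", "beach", "car"]
```

`heapq.nlargest` avoids sorting the full vector, which matters when the annotation vocabulary has thousands of labels.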

To construct temporal queries using example images, we consider a hierarchical static image map [4] with an organized (sorted) representative sample of the whole dataset. Let us emphasize that the primary purpose of the map is to find a suitable example query frame, as finding one particular searched frame this way is a far more difficult task.
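A hierarchical map can be navigated as a pyramid of progressively coarser grids. The sketch below assumes the finest grid has already been sorted (for example, by a method such as the one in [4]); it only builds the coarser levels by choosing, for every 2×2 block, the frame closest to the block's mean feature vector. The block size and the representative rule are illustrative assumptions, not the construction used in [4].

```python
import math
from typing import Dict, List, Sequence

# A map level: rows of frame ids laid out on a sorted 2-D grid.
Grid = List[List[int]]


def coarsen(grid: Grid, features: Dict[int, Sequence[float]]) -> Grid:
    """Halve the grid: each 2x2 block (smaller at odd edges) is represented by
    the frame whose feature vector is closest to the block mean."""
    rows, cols = len(grid), len(grid[0])
    coarse: Grid = []
    for r in range(0, rows, 2):
        row = []
        for c in range(0, cols, 2):
            block = [
                grid[rr][cc]
                for rr in range(r, min(r + 2, rows))
                for cc in range(c, min(c + 2, cols))
            ]
            dim = len(features[block[0]])
            mean = [sum(features[f][d] for f in block) / len(block) for d in range(dim)]
            row.append(min(block, key=lambda f: math.dist(features[f], mean)))
        coarse.append(row)
    return coarse


def build_pyramid(grid: Grid, features: Dict[int, Sequence[float]]) -> List[Grid]:
    """Return levels from the finest grid down to a single representative."""
    levels = [grid]
    while len(levels[-1]) > 1 or len(levels[-1][0]) > 1:
        levels.append(coarsen(levels[-1], features))
    return levels


features = {0: [0.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0], 3: [0.4, 0.4]}
levels = build_pyramid([[0, 1], [2, 3]], features)
```

Because every coarse cell is an actual frame from its block, a user zooming in always lands on a region containing visually similar candidates for an example query.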

The last modification focuses on the result presentation displays (already used at the Lifelog Search Challenge 2019). The first display shows a classic long list of frames sorted by relevance (using larger thumbnails), which users navigate with the scroll bar. The second display shows one larger page of the ranked result set, where frames are locally rearranged so that frames from the same video are collocated and sorted by frame number (see Fig. 2). The video groups on a page are sorted by the most relevant frame of each group and separated by a green vertical line.
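The rearrangement in the second display can be sketched as follows, assuming a page is a ranked list of `(video_id, frame_number)` pairs with the most relevant frame first. Because the first occurrence of each video on the page is its most relevant frame, grouping in first-seen order (Python dicts preserve insertion order) already sorts the groups as described. The text rendering, with ` | ` standing in for the green separator, is purely illustrative.

```python
from typing import Dict, List, Tuple

Frame = Tuple[str, int]  # hypothetical (video_id, frame_number)


def group_page(page: List[Frame]) -> List[List[Frame]]:
    """Collocate frames of the same video; groups follow the rank of their best
    frame, and frames inside a group follow frame number."""
    groups: Dict[str, List[Frame]] = {}
    for frame in page:  # page is ordered by relevance, best first
        groups.setdefault(frame[0], []).append(frame)
    return [sorted(group, key=lambda f: f[1]) for group in groups.values()]


def render(groups: List[List[Frame]]) -> str:
    """Text stand-in for the display; ' | ' plays the role of the separator line."""
    return " | ".join(" ".join(f"{v}:{n}" for v, n in group) for group in groups)


page = [("v2", 50), ("v1", 10), ("v2", 12), ("v1", 99), ("v3", 5)]
groups = group_page(page)
```

The rearrangement is local to one page, so the overall relevance order across pages is unaffected.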

3 Conclusion

This paper presents a new version of the VIRET system, focusing on updates of the utilized retrieval toolkit and interface. The updates aim at more convenient query formulation, a new modality (speech), and fine-tuning of the employed ranking and filtering models.

Notes

1. The V3C1 dataset [18] is currently used at VBS.

2. Authors of a tool are considered experts, as they are expected to use the tool more effectively.

3.

References

[1] Amato, G., et al.: VISIONE at VBS2019. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11296, pp. 591–596. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05716-9_51

[2] Andreadis, S., et al.: VERGE in VBS 2019. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11296, pp. 602–608. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05716-9_53

[3] Baeza-Yates, R.A., Ribeiro-Neto, B.A.: Modern Information Retrieval - The Concepts and Technology Behind Search, 2nd edn. Pearson Education Ltd., Harlow (2011)

[4] Barthel, K.U., Hezel, N.: Visually exploring millions of images using image maps and graphs. In: Huet, B., Vrochidis, S., Chang, E. (eds.) Big Data Analytics for Large-Scale Multimedia Search, pp. 251–275. John Wiley and Sons Inc. (2019)

[5] Cobârzan, C., et al.: Interactive video search tools: a detailed analysis of the video browser showdown 2015. Multimed. Tools Appl. 76(4), 5539–5571 (2017). https://doi.org/10.1007/s11042-016-3661-2

[6] Deng, J., Dong, W., Socher, R., Li, L., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255 (June 2009). https://doi.org/10.1109/CVPR.2009.5206848

[7] Dong, J., Li, X., Snoek, C.G.M.: Predicting visual features from text for image and video caption retrieval. IEEE Trans. Multimed. 20(12), 3377–3388 (2018). https://doi.org/10.1109/TMM.2018.2832602

[8] Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016). http://www.deeplearningbook.org

[9] Gurrin, C., et al.: [Invited papers] Comparing approaches to interactive lifelog search at the lifelog search challenge (LSC2018). ITE Trans. Med. Technol. Appl. 7(2), 46–59 (2019). https://doi.org/10.3169/mta.7.46

[10] Li, X., Xu, C., Yang, G., Chen, Z., Dong, J.: W2VV++: fully deep learning for ad-hoc video search. In: Proceedings of the 27th ACM International Conference on Multimedia, MM 2019, Nice, France, 21–25 October 2019, pp. 1786–1794 (2019). https://doi.org/10.1145/3343031.3350906

[11] Lokoč, J., Bailer, W., Schoeffmann, K., Münzer, B., Awad, G.: On influential trends in interactive video retrieval: video browser showdown 2015–2017. IEEE Trans. Multimed. 20(12), 3361–3376 (2018). https://doi.org/10.1109/TMM.2018.2830110

[12] Lokoč, J., et al.: Interactive search or sequential browsing? A detailed analysis of the video browser showdown 2018. ACM Trans. Multimed. Comput. Commun. Appl. 15(1), 29:1–29:18 (2019). https://doi.org/10.1145/3295663

[13] Mettes, P., Koelma, D.C., Snoek, C.G.: The ImageNet shuffle: reorganized pre-training for video event detection. In: Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval, ICMR 2016, pp. 175–182. ACM, New York (2016). https://doi.org/10.1145/2911996.2912036

[14] Lokoč, J., Kovalčík, G., Souček, T., Moravec, J., Čech, P.: A framework for effective known-item search in video. In: Proceedings of the 27th ACM International Conference on Multimedia, MM 2019, pp. 1777–1785. ACM, New York (2019). https://doi.org/10.1145/3343031.3351046

[15] Lokoč, J., Kovalčík, G., Souček, T., Moravec, J., Čech, P.: VIRET: a video retrieval tool for interactive known-item search. In: Proceedings of the 2019 on International Conference on Multimedia Retrieval, ICMR 2019, pp. 177–181. ACM, New York (2019). https://doi.org/10.1145/3323873.3325034

[16] Nguyen, P.A., Ngo, C.-W., Francis, D., Huet, B.: VIREO @ video browser showdown 2019. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11296, pp. 609–615. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05716-9_54

[17] Rossetto, L., Amiri Parian, M., Gasser, R., Giangreco, I., Heller, S., Schuldt, H.: Deep learning-based concept detection in vitrivr. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11296, pp. 616–621. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05716-9_55

[18] Rossetto, L., Schuldt, H., Awad, G., Butt, A.A.: V3C – a research video collection. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11295, pp. 349–360. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05710-7_29

[19] Schoeffmann, K., Hudelist, M.A., Huber, J.: Video interaction tools: a survey of recent work. ACM Comput. Surv. 48(1), 14:1–14:34 (2015). https://doi.org/10.1145/2808796

[20] Schoeffmann, K., Münzer, B., Leibetseder, A., Primus, J., Kletz, S.: Autopiloting feature maps: the deep interactive video exploration (diveXplore) system at VBS2019. In: Kompatsiaris, I., Huet, B., Mezaris, V., Gurrin, C., Cheng, W.-H., Vrochidis, S. (eds.) MMM 2019. LNCS, vol. 11296, pp. 585–590. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05716-9_50

[21] Thomee, B., Lew, M.S.: Interactive search in image retrieval: a survey. Int. J. Multimed. Inf. Retrieval 1(2), 71–86 (2012). https://doi.org/10.1007/s13735-012-0014-4

[22] Zoph, B., Vasudevan, V., Shlens, J., Le, Q.V.: Learning transferable architectures for scalable image recognition. CoRR abs/1707.07012 (2017). http://arxiv.org/abs/1707.07012

Acknowledgments

This paper has been supported by the Czech Science Foundation (GAČR) project 19-22071Y and by Charles University grant SVV-260451. We would also like to thank Přemysl Čech and Vít Škrhák for their help with the WPF interface.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Lokoč, J., Kovalčík, G., Souček, T. (2020). VIRET at Video Browser Showdown 2020. In: Ro, Y., et al. MultiMedia Modeling. MMM 2020. Lecture Notes in Computer Science, vol. 11962. Springer, Cham. https://doi.org/10.1007/978-3-030-37734-2_70

DOI: https://doi.org/10.1007/978-3-030-37734-2_70

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37733-5

Online ISBN: 978-3-030-37734-2

eBook Packages: Computer Science, Computer Science (R0)