Abstract

Extracting knowledge from digital images largely depends on how well mining algorithms can focus on specific regions of an image. In multimodal image analysis, especially of multi-layer diagnostic images, identifying regions of interest is pivotal, and this is mostly done through image segmentation. Reliable medical image analysis for diagnosis requires efficient and accurate image segmentation mechanisms. With the adoption of advanced machine learning methods such as deep learning (DL) in intelligent diagnostics, efficient and accurate image segmentation has become crucial. Aimed at beginners, this paper starts with an overview of the Convolutional Neural Network (CNN), the most widely used DL technique, and its application to segmenting brain regions from Magnetic Resonance Imaging (MRI). It then provides a quantitative analysis of the reviewed techniques and a detailed discussion of their performance. Finally, a few open challenges are identified and promising directions for future work on DL-based medical image segmentation are indicated.

1 Introduction

Medical diagnosis, disease detection, treatment and monitoring of treatment response are key activities in clinical medicine. Accurate diagnosis and early disease detection help doctors ensure appropriate treatment for patients. Reliable analysis of medical images acquired with various imaging techniques (such as X-ray, Computed Tomography (CT), Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), Optical Coherence Tomography (OCT) and endoscopy) plays an important role in diagnostic medicine. Such analysis provides a clear understanding of disease localisation, which leads to appropriate treatment and subsequent monitoring of the patient's response to it.

Image segmentation, the (semi-)automatic detection of boundaries in an image, is a mandatory step in the image analysis pipeline. The high degree of variability in medical images makes segmentation laborious, but the extracted boundaries provide the basis for further insights.

This work presents a survey of the application of Convolutional Neural Networks (CNNs) to segmenting brain regions from MRI.

The organization of this paper is as follows: Sect. 2 describes the basics of the CNN architecture, Sect. 3 discusses its application to medical image segmentation, Sect. 4 presents a performance evaluation, Sect. 5 lists some open challenges and probable research opportunities, and Sect. 6 concludes the paper.

2 The Convolutional Neural Network

In general, deep learning (DL) architectures learn deep features from data in a hierarchical and compositional way. This is achieved through multiple levels of abstraction, where higher levels are built on top of lower ones and the lowest level is the original input data [22] (Fig. 1).

This paper focuses only on CNNs because of their wide acceptance as the most popular architecture for image analysis. A CNN is a fully trainable, biologically inspired version of the multi-layer perceptron, composed of alternating convolution and pooling layers followed by one or more fully connected layers at the end. CNNs usually require a large amount of training data, as the number of parameters to be trained is relatively high [22]. Widely used CNN configurations include AlexNet, VGGNet and GoogLeNet. In a CNN, the input feature maps \(x_i^{l-1}\) of layer \(l\) are convolved with learnable kernels \(k_{ij}^l\), a bias \(b_j^l\) is added and an activation function \(f\) is applied to generate a new feature map \(x_j^l\), such that \( x^l_j = f\left( \sum _{i\in M_j} x^{l-1}_i*k^l_{ij} + b^l_j\right) \), where \(M_j\) is the set of input maps that contribute to output map \(j\). Unlike traditional machine learning (ML) approaches, which rely on hand-crafted features, CNNs learn and optimise the best set of convolution kernels from data. Combining such kernels with an appropriate pooling operation extracts the features relevant to a given task (e.g., classification, segmentation or recognition). For segmentation, a CNN can classify each pixel individually, taking as input a patch extracted around that pixel.
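To make the feature-map equation concrete, the following NumPy sketch computes one output map \(x_j^l\) from the maps of the previous layer. It assumes 2D maps, "valid" (unpadded) cross-correlation, which is what deep learning frameworks implement under the name convolution, and ReLU as the activation \(f\); the function names are hypothetical and chosen only for illustration.

```python
import numpy as np

def conv2d_valid(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation of one input map with one kernel
    (frameworks call this convolution; any flip is absorbed by learning)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(x[r:r + kh, c:c + kw] * k)
    return out

def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)

def feature_map(prev_maps, kernels, bias: float, f=relu) -> np.ndarray:
    """x_j^l = f( sum_{i in M_j} x_i^{l-1} * k_ij^l + b_j^l ).

    prev_maps: input maps x_i^{l-1} for i in M_j
    kernels:   one kernel k_ij^l per input map
    bias:      scalar bias b_j^l
    f:         activation (ReLU is an assumption here)"""
    if len(prev_maps) != len(kernels):
        raise ValueError("need exactly one kernel per input map")
    total = sum(conv2d_valid(x, k) for x, k in zip(prev_maps, kernels))
    return f(total + bias)
```

Each output map sums the filtered responses of all its input maps before the bias and non-linearity are applied, which is how a layer mixes information across channels.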

The Fully Convolutional Network (FCN), a variant of the CNN, generates a likelihood map for the whole input rather than a prediction for a single pixel. An FCN-based segmentation network contains two paths: a downsampling path (capturing semantic information) and an upsampling path (recovering spatial information). In addition, skip connections are used to recover fine-grained spatial information [44].
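The two paths and the skip connection can be illustrated on a single 2D score map. This is a minimal sketch, assuming 2x2 max pooling for the downsampling path, nearest-neighbour upsampling as a stand-in for the learned up-convolution, and element-wise addition as the fusion rule of the skip connection (U-Net, discussed next, concatenates instead); the helper names are hypothetical.

```python
import numpy as np

def max_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling (downsampling path); assumes even height and width."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling (stand-in for a learned up-convolution)."""
    return np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)

def fcn_skip_fusion(score_fine: np.ndarray, score_coarse: np.ndarray) -> np.ndarray:
    """Skip connection: upsample the coarse (semantic) score map from a deeper
    layer and add the fine (spatially precise) score map of an earlier layer."""
    return upsample2(score_coarse) + score_fine
```

The coarse map alone can only produce blocky predictions; adding the earlier, full-resolution map restores detail at object boundaries.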

U-Net, inspired by the FCN, consists of a contracting path and an expanding path that capture contextual and localisation information, respectively. Because it uses unpadded convolutions, the segmentation map generated by U-Net contains only the pixels for which the full context is available in the input image [35].
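The reason a U-Net output map covers only pixels with full input context is that the original U-Net [35] uses unpadded 3x3 convolutions: every convolution trims the border, so the output is smaller than the input and encoder features must be centre-cropped before being concatenated with decoder features. The sketch below assumes two unpadded 3x3 convolutions per level, 2x2 pooling, 2x2 up-convolutions and a depth of 4; the function names are illustrative.

```python
import numpy as np

def unet_output_size(input_size: int, depth: int = 4) -> int:
    """Output side length of a U-Net with two unpadded 3x3 convolutions per
    level, 2x2 max pooling and 2x2 up-convolutions (assumed configuration)."""
    size = input_size
    for _ in range(depth):            # contracting path
        size -= 4                     # two valid 3x3 convs remove 2 pixels each
        if size <= 0 or size % 2:
            raise ValueError("map must stay positive and even before pooling")
        size //= 2                    # 2x2 max pooling halves the map
    size -= 4                         # two valid convs at the bottleneck
    for _ in range(depth):            # expanding path
        size = size * 2 - 4           # up-conv doubles, two valid convs trim 4
    if size <= 0:
        raise ValueError("input too small for this depth")
    return size

def crop_and_concat(encoder_feat: np.ndarray, decoder_feat: np.ndarray) -> np.ndarray:
    """Centre-crop an encoder map to the decoder map's size and stack them
    along the channel axis. Both arrays have shape (channels, height, width)."""
    _, h, w = decoder_feat.shape
    _, big_h, big_w = encoder_feat.shape
    top, left = (big_h - h) // 2, (big_w - w) // 2
    cropped = encoder_feat[:, top:top + h, left:left + w]
    return np.concatenate([cropped, decoder_feat], axis=0)
```

Under these assumptions a 572 x 572 input yields a 388 x 388 segmentation map, the sizes reported for the original U-Net; in practice large images are therefore segmented tile by tile with overlapping inputs.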

A dual pathway architecture couples a second, identical convolutional pathway to the first in order to handle multi-class problems and to process multi-source or multi-scale information from the channels of the input layer. The parallel pathways extract features independently from multiple channels [16, 26].
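One way to feed two parallel pathways is to extract, around the same centre pixel, a full-resolution patch for one pathway and a downsampled patch covering a wider region for the other, in the spirit of the multi-scale dual pathway of [16]. The sketch below assumes 2D images, zero padding at the borders, average pooling for downsampling and a downsampling factor of 3; these choices and the function name are assumptions for illustration, not the exact configuration of [16] or [26].

```python
import numpy as np

def dual_pathway_inputs(image: np.ndarray, center, patch: int = 9, factor: int = 3):
    """Return (local, context) patches of identical shape centred on `center`.

    local:   patch x patch crop at full resolution.
    context: (patch*factor) x (patch*factor) crop, average-pooled by `factor`,
             so it has the same shape but a factor-times wider field of view.
    Pixels outside the image are treated as zeros."""
    if patch % 2 == 0 or factor % 2 == 0:
        raise ValueError("patch and factor must be odd so patches stay centred")
    half = patch // 2
    big_half = (patch * factor) // 2
    padded = np.pad(image, big_half, mode="constant")
    r, c = center[0] + big_half, center[1] + big_half   # shift into padded frame
    local = padded[r - half:r + half + 1, c - half:c + half + 1]
    big = padded[r - big_half:r + big_half + 1, c - big_half:c + big_half + 1]
    # average each factor x factor block to downsample the wide crop
    context = big.reshape(patch, factor, patch, factor).mean(axis=(1, 3))
    return local, context
```

Because both patches have the same shape, the two pathways can share an identical layer layout while seeing different amounts of anatomical context.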

In a dilated CNN (Di-CNN), the weights of the convolutional layers are sparsely distributed over a large receptive field. This makes it an effective architecture for achieving a large receptive field with a limited number of convolutional layers and without subsampling layers [27, 29].
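The receptive-field argument can be checked with simple arithmetic. For stacked stride-1 convolutions with kernel size \(k\) and dilation rates \(d_1, \dots, d_L\), the receptive field along one axis is \(1 + \sum_{l}(k-1)\,d_l\). The sketch below computes this and shows how dilation spreads a kernel's weights sparsely by inserting zeros between taps; the dilation schedule 1, 2, 4, 8 is an assumed example, not the configuration used in [27, 29].

```python
import numpy as np

def dilate_kernel(kernel, d: int) -> np.ndarray:
    """Spread a k-tap 1D kernel over d*(k-1)+1 positions by inserting d-1
    zeros between neighbouring taps (same weights, wider footprint)."""
    kernel = np.asarray(kernel, dtype=float)
    out = np.zeros(d * (len(kernel) - 1) + 1)
    out[::d] = kernel
    return out

def receptive_field(kernel_size: int, dilations) -> int:
    """Receptive field (pixels along one axis) of stacked stride-1
    convolutions with the given dilation rates and no pooling."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)
```

Four 3x3 layers with dilations 1, 2, 4 and 8 see 31 pixels along each axis, compared with 9 for four undilated layers, while the number of weights per layer stays the same.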

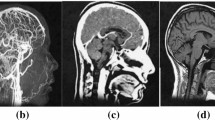

3 CNN in Segmenting MRI

DL, and in particular CNN-based segmentation of MRI, has brought significant change to clinical studies, enabling more accurate, efficient and safe evaluation [23]. MRI, a non-invasive imaging technique, provides detailed functional and anatomical information on soft tissues, bones and organs in any arbitrary plane. Automatic segmentation of MRI is a key step in delineating contours and any unusual anatomical structure or interior region.

A significant number of studies on MRI segmentation using CNNs and their variants have been reported in the literature. Moeskops et al. introduced CNN-based segmentation of tissue regions from brain MRI; their CNN combines multiple patch sizes and kernel sizes to learn multi-scale features capturing both intensity and spatial characteristics [25]. de Brebisson et al. applied a CNN to anatomical brain segmentation [3] and benchmarked it against the multi-atlas methods of the 2012 MICCAI challenge.

For brain MRI segmentation, Moeskops et al. introduced adversarial training with a DiCNN [27], and Milletari et al. introduced Hough-CNN [24]. Moeskops et al. also employed a single trained CNN for multiple segmentation tasks, including several tissues in MR brain images, the pectoral muscle in MR breast images and the coronary arteries in cardiac CTA [26].

Accurate segmentation of the brain into white matter (WM), gray matter (GM) and cerebrospinal fluid (CSF) remains an open challenge. Nguyen et al. used a Gaussian mixture model (GMM) with a CNN to segment brain MRI into WM, GM and CSF [30]. Moeskops et al. applied a dilated CNN to segment the isointense infant brain into WM, GM and CSF, combining a dilated triplanar network with a non-dilated 3D CNN [29]. A 3D FCN has also been used for WM, GM and CSF segmentation in isointense infant brain MR images [44], with multi-scale deep supervision applied to alleviate the vanishing gradient problem. Xu et al. combined three FCNs and fused their predicted probability maps for small lesion segmentation in brain MRI [42]. WM hyperintensities (WMH) are signal abnormalities in the white matter; Ghafoorian et al. trained a CNN with non-uniformly sampled patches for WMH segmentation [9], and also proposed a CNN-based WMH segmentation approach that incorporates anatomical location information into the network [10]. WMH share some features with other lesions, which makes them difficult to distinguish. Guerrero et al. developed an FCN architecture to segment WMH and stroke lesions simultaneously; it used residual elements to mitigate model complexity and improve optimization speed [11]. Moeskops et al. applied a CNN to segment brain tissues and WMH at the same time [28]. Rachmadi et al. segmented WMH by incorporating location information and global spatial information into the CNN at the convolution level [34].

Choi et al. proposed a CNN-based approach for segmenting very small regions such as the striatum [5]. Instead of using the whole input image to segment such a small region, the authors employed two CNNs, called local and global. The global CNN determines the probable location of the striatum, and the local CNN predicts accurate labels for the striatum voxels.
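The global-then-local strategy can be sketched as a two-stage pipeline: a coarse model localises the structure on a downsampled image, and a fine model labels only a full-resolution crop around that location. The two models are passed in as hypothetical callables (any trained networks could be plugged in), and the downsampling factor, threshold and margin are assumed values; this illustrates the cascade idea, not the architecture of [5].

```python
import numpy as np

def global_local_segment(image, global_model, local_model,
                         factor=4, threshold=0.5, margin=8):
    """Two-stage segmentation of a small structure in a 2D image.

    global_model: callable, downsampled image -> coarse probability map (same shape)
    local_model:  callable, full-resolution crop -> binary mask (same shape)"""
    coarse = global_model(image[::factor, ::factor])
    rows, cols = np.nonzero(coarse > threshold)
    mask = np.zeros(image.shape, dtype=bool)
    if rows.size == 0:
        return mask                      # structure not found at the coarse scale
    # map the coarse bounding box back to full resolution, plus a safety margin
    r0 = max(rows.min() * factor - margin, 0)
    r1 = min((rows.max() + 1) * factor + margin, image.shape[0])
    c0 = max(cols.min() * factor - margin, 0)
    c1 = min((cols.max() + 1) * factor + margin, image.shape[1])
    # the fine model only sees the crop around the detected location
    mask[r0:r1, c0:c1] = local_model(image[r0:r1, c0:c1])
    return mask
```

Because the local model processes only the crop, its cost depends on the size of the structure rather than the whole image, which is the motivation for this design when the target region is small.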

Shakeri et al. proposed an FCN-based approach for segmenting frontostriatal sub-cortical structures in MR images [37]; its output was refined by feeding it as input to a Markov Random Field (MRF). Kushibar et al. developed another sub-cortical brain structure segmentation approach combining convolutional and spatial features [18]. It employed a 2.5D CNN model, and the combination with spatial features was inspired by [10]. A 3D FCN has also been employed to segment sub-cortical brain regions in MRI [8]; this method avoided overfitting by building a deep network from small convolution kernels. He et al. segmented the left and right caudate nucleus along with the left and right hippocampus from brain MRI using a 3D FCN and a 3D U-Net [13]. Here, affine-invariant spatial information is learnt by the FCN and used in the 3D U-Net. Another 3D CNN, called DeepNAT, was proposed in [40]. Bao et al. applied a multi-scale structured CNN (MS-CNN) to segment several sub-cortical structures from MRI, using a dynamic random walker (DRW) for post-processing [1].

Pereira et al. proposed a CNN-based method for segmenting gliomas from MRI, stacking small convolution kernels to build a deeper CNN [33]. Hussain et al. applied a CNN to glioma segmentation [15], introducing a two-phase training method to address the data imbalance problem.
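A common way to implement two-phase training for class imbalance (described, for example, by Havaei et al. [12]) is to train first on patches whose centres are sampled equally from every class, and then to re-train (in [12], only the output layer) on patches sampled according to the true, imbalanced class distribution. The sampler below sketches both phases for a label map of any dimension; the function name and sampling details are assumptions, and the exact procedure of [15] may differ.

```python
import numpy as np

def sample_patch_centres(labels: np.ndarray, n_per_class: int,
                         rng: np.random.Generator, balanced: bool = True):
    """Return an (N, labels.ndim) array of patch-centre coordinates.

    balanced=True:  n_per_class centres from every class (phase 1).
    balanced=False: the same total number of centres drawn uniformly over all
                    voxels, i.e. following the true class distribution (phase 2)."""
    classes = np.unique(labels)
    if not balanced:
        coords = np.argwhere(np.ones_like(labels, dtype=bool))   # every voxel
        idx = rng.choice(len(coords), size=n_per_class * len(classes), replace=False)
        return coords[idx]
    picked = []
    for cls in classes:
        cls_coords = np.argwhere(labels == cls)
        # sample with replacement: rare classes may have fewer voxels than requested
        idx = rng.choice(len(cls_coords), size=n_per_class, replace=True)
        picked.append(cls_coords[idx])
    return np.concatenate(picked)
```

In the balanced phase a tumour class occupying 1% of the voxels supplies as many training patches as the background, so the network learns its appearance; the second phase then recalibrates the output probabilities to the real class frequencies.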

Glioblastoma is a highly malignant, grade IV glioma. Yi et al. applied a 3D CNN to glioblastoma segmentation; the first layer of the CNN was pre-defined with Difference-of-Gaussian (DoG) filters to learn from 3D tumor MRI data [43].
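A Difference-of-Gaussian filter subtracts a wide Gaussian from a narrow one, giving a zero-sum kernel that responds to blob-like intensity changes rather than to constant regions. The following sketch builds a small bank of 3D DoG kernels that could be used to initialise the first layer of a 3D CNN; the kernel size and sigma pairs are hypothetical choices, since the values used in [43] are not given here.

```python
import numpy as np

def gaussian_kernel_3d(size: int, sigma: float) -> np.ndarray:
    """Normalised isotropic 3D Gaussian kernel of shape (size, size, size)."""
    ax = np.arange(size) - (size - 1) / 2.0
    zz, yy, xx = np.meshgrid(ax, ax, ax, indexing="ij")
    g = np.exp(-(xx**2 + yy**2 + zz**2) / (2.0 * sigma**2))
    return g / g.sum()

def dog_kernel_3d(size: int, sigma_small: float, sigma_large: float) -> np.ndarray:
    """Narrow Gaussian minus wide Gaussian; sums to (numerically) zero."""
    if sigma_small >= sigma_large:
        raise ValueError("sigma_small must be smaller than sigma_large")
    return gaussian_kernel_3d(size, sigma_small) - gaussian_kernel_3d(size, sigma_large)

def dog_filter_bank(size: int, sigma_pairs) -> np.ndarray:
    """Stack several DoG kernels, shape (n_filters, size, size, size),
    e.g. as fixed or initial weights of a 3D CNN's first layer."""
    return np.stack([dog_kernel_3d(size, s1, s2) for s1, s2 in sigma_pairs])
```

Different sigma pairs tune each filter to blobs of a different scale, which gives the first layer a multi-scale, edge-and-blob sensitive starting point.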

Hoseini et al. [14] and Havaei et al. [12] applied CNNs to brain tumor segmentation from MRI. Zhao et al. integrated an FCN and a conditional random field (CRF) for tumor segmentation [46]. In [38], three FCNs are combined for tumor segmentation. Zhuge et al. applied a holistically-nested network (HNN), an extension of the CNN, to high-grade glioma (HGG) segmentation [48]. Deep supervision is performed in the HNN through an additional weighted-fusion output layer (Table 1).
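In a holistically-nested network, each side output (upsampled to input resolution) is supervised directly, and an extra fusion layer combines the side outputs with learned weights; the fused map is supervised as well. The NumPy sketch below computes the fused prediction and the total deeply supervised loss for binary segmentation. It assumes binary cross-entropy for every term, equal loss weights and fixed fusion weights (which would be learned in practice); it is not the exact formulation of [48].

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def bce(p: np.ndarray, y: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between probabilities p and targets y."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

def hnn_outputs_and_loss(side_logits, fusion_weights, target):
    """side_logits:    M logit maps, already upsampled to the target size
    fusion_weights: M weights of the weighted-fusion output layer
    Returns the fused probability map and the deeply supervised loss:
    one term per side output plus one term for the fused output."""
    side_probs = [sigmoid(s) for s in side_logits]
    fused = sigmoid(sum(w * s for w, s in zip(fusion_weights, side_logits)))
    loss = sum(bce(p, target) for p in side_probs) + bce(fused, target)
    return fused, loss
```

Supervising every side output gives intermediate layers a direct training signal, while the fusion layer learns how much each scale should contribute to the final map.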

Kamnitsas et al. applied a dual-pathway 3D CNN with a 3D fully connected CRF to segment brain lesions, including ischemic stroke lesions [16]. Wang et al. applied a CNN to segment nasopharyngeal cancer (NPC) and achieved performance similar to manual segmentation [41]. Laukamp et al. proposed a multiparametric deep-learning model (DLM) for detecting and segmenting meningiomas in MR images [19].

Le et al. combined a recurrent FCN with the level set (LS) method to develop a novel end-to-end approach, the Deep Recurrent Level Set (DRLS) [20]. Zhang et al. applied a CNN to segment brain tissue at the isointense stage [45]; multimodal brain information from T1, T2 and fractional anisotropy (FA) images is used as input feature maps and incorporated into multiple intermediate layers of the CNN. Nie et al. extended and improved isointense infant brain image segmentation using an FCN [32]: the authors trained a separate network for each imaging modality and combined their outputs in higher network layers, so that the weights and biases are optimized for each modality. FCNs are also applied to infant brain segmentation in [17] and [31]. Dolz et al. proposed a densely connected 3D FCN for multimodal isointense brain segmentation [7], in which dense connectivity exists between the T1 and T2 MR paths in a 3D context.

Multiple sclerosis (MS) is a chronic disease that causes scarred tissue (called lesions) to develop in the brain and spinal cord. MS lesions can appear on MRI scans as black holes. Brosch et al. proposed a novel approach for MS lesion segmentation based on a convolutional encoder network (CEN) and U-Net [4]. Valverde et al. [39] and Birenbaum et al. [2] proposed CNN-based MS lesion segmentation. In the cascaded approach of [39], the first network predicts the possible lesion areas and the second reduces the number of misclassified candidate voxels. The multi-view CNN of Birenbaum et al. [2] is described as the first multi-view CNN segmentation approach in MRI that uses longitudinal information along with other features. Roy et al. applied an FCN to MS lesion segmentation [36].

4 Performance Evaluation

Three well-known measures, namely accuracy, the Dice coefficient and the F1 score, were used to evaluate the performance of the network architectures.
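For a binary mask with true positives TP, false positives FP, false negatives FN and true negatives TN, accuracy is \((TP+TN)/(TP+FP+FN+TN)\), the Dice coefficient is \(2|A\cap B|/(|A|+|B|) = 2TP/(2TP+FP+FN)\), and the F1 score is the harmonic mean of precision and recall, which reduces to the same expression; Dice and F1 therefore coincide for binary segmentation. The sketch below computes all three; returning 1.0 when both masks are empty is an assumed convention.

```python
import numpy as np

def confusion_counts(pred, truth):
    """TP, FP, FN, TN for two binary masks of the same shape."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn

def accuracy(pred, truth) -> float:
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return (tp + tn) / (tp + fp + fn + tn)

def dice(pred, truth) -> float:
    """2|A & B| / (|A| + |B|); 1.0 if both masks are empty (assumed convention)."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    denom = pred.sum() + truth.sum()
    return 1.0 if denom == 0 else float(2.0 * np.sum(pred & truth) / denom)

def f1_score(pred, truth) -> float:
    """Harmonic mean of precision and recall; equals dice() for binary masks."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    if tp + fp + fn == 0:
        return 1.0                      # both masks empty, same convention as dice()
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For instance, predicting nothing for a 4-pixel structure in a 10 x 10 image still gives 96% accuracy but a Dice score of 0, which is why overlap measures are preferred for small brain structures.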

In brain tissue segmentation, the combination of an FCN and a DiCNN with adversarial training achieved a Dice score (DS) of 0.92, outperforming other methods [27]. A multi-scale 3-layer CNN [25] outperformed a multivariate MRF method (0.827 vs. 0.737). A multi-scale 4-layer CNN with weight sharing and location information achieved a competitive DS of 0.791 [10]. Consecutive application of a CNN-DNN with a GMM performed better than kNN (0.86 vs. 0.73) [30]. A CNN trained with non-uniformly sampled patches outperformed a similar CNN trained with uniformly sampled patches (0.78 vs. 0.73) [9]. The uResNet architecture, containing 8 residual layers, 3 deconvolutional layers and a convolutional layer, performed better than the lesion prediction algorithm (0.695 vs. 0.647) [11]. A 9-layer CNN architecture achieved a DS of 0.67 [28], whereas another CNN using global spatial information (GSI) achieved a DS of 0.54 [34]; however, the latter outperformed DBM, which achieved only 0.33. The performance of FCN architectures was analysed in [42], where three FCNs outperformed a single FCN (0.78 vs. 0.7). In segmenting the striatum, the pair of local and global CNNs outperformed FreeSurfer on the OASIS dataset (0.893 ± 0.017 vs. 0.786 ± 0.015) and achieved a competitive score on the IBSR dataset (0.826 ± 0.038 vs. 0.827 ± 0.022) [5]. A 5-layer FCN with a CRF was applied to the IBSR dataset to segment 3D T1-weighted MR images into the thalamus, caudate, putamen and pallidum (DS: proposed 0.87 vs. Bayesian 0.85) [37].

The 3D FCN outperformed a 2D FCN, achieving 0.92 when validated on both the ABIDE and IBSR datasets [8]. In segmenting the caudate nucleus and hippocampus, an FCN guided by a shape learning network outperformed 3D U-Net on the LPBA40 dataset (0.80175 vs. 0.779) [13]. CNN-based methods were reported to outperform atlas-based methods (CNN: 0.869 vs. LF-MA: 0.867; 3D CNN: 0.906 vs. MA: 0.904) on the MICCAI 2012 and IBSR 18 datasets. The MS-CNN-based approach outperformed majority voting (MV) and patch-based label fusion (PBL) on the IBSR dataset (MS-CNN: 0.807, MV: 0.658, PBL: 0.760) and performed at least as well on the LPBA40 dataset (MS-CNN: 0.843, MV: 0.764, PBL: 0.843). Performance improved on both datasets when MS-CNN was followed by DRW (MS-CNN+DRW: 0.822 vs. MS-CNN: 0.807 on IBSR; MS-CNN+DRW: 0.850 vs. MS-CNN: 0.843 on LPBA40) [1] (Fig. 2).

For segmenting HGG and LGG, CNN-based methods achieved performance similar to other methods (CNN: 0.88 vs. RW: 0.88; TWOPATHCNN: 0.88 vs. RF: 0.87), both validated on the BRATS 2013 challenge dataset [12, 33]. An FCN trained with image patches, combined with a CRF trained with image slices, outperformed a cascaded CNN on the BRATS 2013, BRATS 2015 and BRATS 2016 datasets (0.86 vs. 0.84) [46]. HNN outperformed FCN (0.78 vs. 0.61) in HGG tumor segmentation evaluated on 20 cases from the BRATS 2013 training dataset [48]. A CNN model with two-phase weighted training performed better than an anatomy-guided RF model (0.86 vs. 0.85) on both the BRATS 2013 and 2015 datasets [15]. A 5-layer 3D CNN-based approach outperformed other methods in segmenting whole, core and active tumor on the BRATS 2015 dataset (3D CNN: 0.89; appearance- and context-sensitive features: 0.83; extremely randomized trees: 0.83) [43]. A CNN significantly outperformed the watershed algorithm for tumor segmentation on 15 T1-weighted MR images of NPC patients (0.79 vs. 0.6) [41]. In another study, a CNN with task-level parallelism outperformed other methods (CNN: 0.90, LSE-KMC: 0.84). OM-Net [47], a CNN architecture, achieved a DS of 0.87, beating a U-Net-inspired architecture (DS: 0.85). However, the CNN in [20] did not outperform DRLS (DRLS: 0.89, CNN: 0.88). Three concurrent FCNs, each trained on differently filtered (Gaussian, Gabor and median) multi-MR images, were applied to tumor segmentation and achieved better results than Fuzzy C-Means (FCM) (0.8 vs. 0.75) [38]. An 11-layer DeepMedic architecture, with and without a CRF, and an ensemble of three networks were evaluated on traumatic brain injuries (RF: 0.511 ± 0.20, RF+CRF: 0.548 ± 0.185, DeepMedic: 0.623 ± 0.164, DeepMedic+CRF: 0.63 ± 0.163), brain tumors (Ensemble+CRF: 0.849, Ensemble: 0.845, DeepMedic+CRF: 0.847, DeepMedic: 0.836) and ischemic stroke lesions (DeepMedic: 0.64 ± 0.23, DeepMedic+CRF: 0.66 ± 0.24); DeepMedic outperformed RF, and adding the CRF gave a small further improvement [16].

For segmenting infant brain images into WM, GM and CSF, a CNN combining multi-modality T1, T2 and FA images outperformed RF (0.8503 ± 0.0227 vs. 0.8351 ± 0.252) [45]. In a similar task, a multi-modality FCN also outperformed RF for WM (0.887 ± 0.021 vs. 0.841 ± 0.027) [31]. An FCN outperformed U-Net (0.889 vs. 0.796) [17]. To improve accuracy further, a 3D FCN with convolution and concatenate (CC) operations was applied to T1 and T2 images [31], obtaining a better Dice value than RF (0.94 ± 0.0075 vs. 0.8765 ± 0.0112). Another 3D FCN using context information segmented WM, GM and CSF on the iSeg-2017 dataset better than a 3D FCN without context information (0.922 ± 0.008 vs. 0.916 ± 0.008) [44]. A 7-layer dilated triplanar CNN combined with a 12-layer non-dilated 3D CNN was evaluated in the MICCAI grand challenge on 6-month infant brain MRI segmentation into WM, GM and CSF, achieving a Dice value of 0.894 [29].

5 Limitations and Challenges

Deep learning frameworks can learn rich features from huge imaging datasets. However, several challenges remain; these, together with some future perspectives, are discussed below:

Creating labelled data requires substantial effort, which is an open challenge in designing supervised architectures for image analysis. Semantic segmentation, which can improve accuracy considerably, is also worth exploring. Inter-organisational collaborations are essential for generating large volumes of labelled data and overcoming this resource limitation.

A classifier trained on a large volume of collected data with imbalanced classes can become biased towards the majority classes, resulting in low accuracy on the minority classes.
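One widely used mitigation, not evaluated in this paper, is to weight the loss of each class by its inverse frequency, so that errors on rare classes such as lesion voxels cost more. The sketch below computes such weights and a weighted cross-entropy; the normalisation \(N/(C\,n_c)\) (total count over number of classes times class count) and the function names are assumptions for illustration.

```python
import numpy as np

def inverse_frequency_weights(labels: np.ndarray, n_classes: int) -> np.ndarray:
    """Weight w_c = N / (C * n_c) for each class c."""
    counts = np.bincount(labels.ravel(), minlength=n_classes).astype(float)
    counts[counts == 0] = 1.0        # avoid division by zero for absent classes
    return counts.sum() / (n_classes * counts)

def weighted_cross_entropy(probs: np.ndarray, labels: np.ndarray,
                           weights: np.ndarray, eps: float = 1e-7) -> float:
    """probs: (N, C) predicted class probabilities; labels: (N,) integer labels.
    Weighted mean of -log p(true class), normalised by the total weight."""
    p_true = np.clip(probs[np.arange(len(labels)), labels], eps, 1.0)
    w = weights[labels]
    return float(np.sum(w * -np.log(p_true)) / np.sum(w))
```

With 90 background and 10 lesion voxels, the lesion class receives nine times the weight of the background, so a classifier that always predicts background is penalised much more heavily than under the unweighted loss.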

Real-time segmentation of big imaging data requires distributed and parallel computing infrastructure.

6 Conclusion

Automatic segmentation of medical images plays a substantial role in the computer-aided medical image analysis pipeline. This paper has reviewed the use of CNNs for segmenting MRI in support of diagnosis, disease detection, treatment and monitoring of treatment response. The performance of different CNN architectures has been compared for MRI of various brain tissues and regions. The results show that CNN-based architectures and their variants are popular in medical image segmentation. Finally, open challenges have been identified and research possibilities outlined that could help improve the performance of DL-based medical image analysis.

References

Bao, S., Chung, A.C.: Multi-scale structured CNN with label consistency for brain MRI segmentation. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 6(1), 113–117 (2018)

Birenbaum, A., Greenspan, H.: Longitudinal multiple sclerosis lesion segmentation using multi-view convolutional neural networks. In: Carneiro, G., et al. (eds.) LABELS/DLMIA - 2016. LNCS, vol. 10008, pp. 58–67. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46976-8_7

de Brebisson, A., Montana, G.: Deep neural networks for anatomical brain segmentation. In: Proceedings of IEEE CVPR, pp. 20–28 (2015)

Brosch, T., et al.: Deep 3D convolutional encoder networks with shortcuts for multiscale feature integration applied to multiple sclerosis lesion segmentation. IEEE Trans. Med. Imaging 35(5), 1229–1239 (2016)

Choi, H., Jin, K.H.: Fast and robust segmentation of the striatum using deep convolutional neural networks. J. Neurosci. Methods 274, 146–153 (2016)

Dolz, J., et al.: HyperDense-Net: a hyper-densely connected CNN for multi-modal image segmentation. IEEE Trans. Med. Imaging 38(5), 1116–1126 (2019)

Dolz, J., Ayed, I.B., Yuan, J., Desrosiers, C.: Isointense infant brain segmentation with a hyper-dense connected CNN. In: Proceedings of ISBI 2018, pp. 616–620 (2018)

Dolz, J., Desrosiers, C., Ayed, I.B.: 3D fully convolutional networks for subcortical segmentation in MRI: a large-scale study. NeuroImage 170, 456–470 (2017)

Ghafoorian, M., et al.: Non-uniform patch sampling with deep CNNs for white matter hyperintensity segmentation. In: Proceedings of ISBI, pp. 1414–1417 (2016)

Ghafoorian, M., et al.: Location sensitive deep CNNs for segmentation of white matter hyperintensities. Sci. Rep. 7, 5110 (2017)

Guerrero, R., et al.: White matter hyperintensity and stroke lesion segmentation and differentiation using CNNs. NeuroImage: Clin. 17, 918–934 (2018)

Havaei, M., et al.: Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31 (2017)

He, Z., Bao, S., Chung, A.: 3D deep affine-invariant shape learning for brain MR image segmentation. In: Stoyanov, D., et al. (eds.) DLMIA/ML-CDS - 2018. LNCS, vol. 11045, pp. 56–64. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00889-5_7

Hoseini, F., Shahbahrami, A., Bayat, P.: An efficient implementation of deep convolutional neural networks for MRI segmentation. J. Digit. Imaging 31, 1–10 (2018)

Hussain, S., Anwar, S.M., Majid, M.: Segmentation of glioma tumors in brain using deep convolutional neural network. Neurocomputing 282, 248–261 (2018)

Kamnitsas, K., et al.: Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 36, 61–78 (2017)

Kumar, S., et al.: InfiNet: fully convolutional networks for infant brain MRI segmentation. In: Proceedings of ISBI 2018, pp. 145–148 (2018)

Kushibar, K., et al.: Automated subcortical brain structure segmentation combining spatial and deep convolutional features. Med. Image Anal. 48, 177–186 (2018)

Laukamp, K.R., et al.: Fully automated detection and segmentation of meningiomas using deep learning on routine multiparametric MRI. Eur. Radiol. 29(1), 124–132 (2018)

Le, T.H.N., Gummadi, R., Savvides, M.: Deep recurrent level set for segmenting brain tumors. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11072, pp. 646–653. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00931-1_74

Li, X., et al.: 3D multi-scale FCN with random modality voxel dropout learning for intervertebral disc localization and segmentation from multi-modality MRI. Med. Image Anal. 45, 41–54 (2018)

Mahmud, M., Kaiser, M.S., Hussain, A., Vassanelli, S.: Applications of deep learning and reinforcement learning to biological data. IEEE Trans. Neural Netw. Learn. Syst. 29(6), 2063–2079 (2018)

Miller, C.G., Krasnow, J., Schwartz, L.H.: Medical Imaging in Clinical Trials. Springer, London (2014). https://doi.org/10.1007/978-1-84882-710-3

Milletari, F., et al.: Hough-CNN: deep learning for segmentation of deep brain regions in MRI and ultrasound. Comput. Vis. Image Underst. 164, 92–102 (2017)

Moeskops, P., et al.: Automatic segmentation of MR brain images with a CNN. IEEE Trans. Med. Imaging 35(5), 1252–1261 (2016)

Moeskops, P., et al.: Deep learning for multi-task medical image segmentation in multiple modalities. In: Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W. (eds.) MICCAI 2016. LNCS, vol. 9901, pp. 478–486. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46723-8_55

Moeskops, P., Veta, M., Lafarge, M.W., Eppenhof, K.A.J., Pluim, J.P.W.: Adversarial training and dilated convolutions for brain MRI segmentation. In: Cardoso, M.J., et al. (eds.) DLMIA/ML-CDS - 2017. LNCS, vol. 10553, pp. 56–64. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67558-9_7

Moeskops, P., et al.: Evaluation of a deep learning approach for the segmentation of brain tissues and WMH of presumed vascular origin in MRI. NeuroImage: Clin. 17, 251–262 (2018)

Moeskops, P., Pluim, J.P.W.: Isointense infant brain MRI segmentation with a dilated convolutional neural network. CoRR abs/1708.02757 (2017)

Nguyen, D.M., et al.: 3D-brain segmentation using deep neural network and Gaussian mixture model. In: Proceedings of WACV 2017, pp. 815–824 (2017)

Nie, D., et al.: 3-D fully convolutional networks for multimodal isointense infant brain image segmentation. IEEE Trans. Cybern. 49(3), 1123–1136 (2019)

Nie, D., Wang, L., Gao, Y., Shen, D.: FCNs for multi-modality isointense infant brain image segmentation. In: Proceedings of ISBI 2016, pp. 1342–1345 (2016)

Pereira, S., et al.: Brain tumor segmentation using CNNs in MRI images. IEEE Trans. Med. Imaging 35(5), 1240–1251 (2016)

Rachmadi, M., et al.: Segmentation of WMHs using CNNs with global spatial information in routine clinical brain MRI with none or mild vascular pathology. Comput. Med. Imaging Graph. 66, 28–43 (2018)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Roy, S., et al.: Multiple sclerosis lesion segmentation from brain MRI via fully convolutional neural networks. arXiv preprint arXiv:1803.09172 (2018)

Shakeri, M., et al.: Sub-cortical brain structure segmentation using F-CNN’s. In: Proceedings of ISBI 2016, pp. 269–272. IEEE (2016)

Shen, G., et al.: Brain tumor segmentation using concurrent fully convolutional networks and conditional random fields. In: Proceedings of ICMIP 2018, pp. 24–30 (2018)

Valverde, S., et al.: Improving automated multiple sclerosis lesion segmentation with a cascaded 3D CNN approach. NeuroImage 155, 159–168 (2017)

Wachinger, C., Reuter, M., Klein, T.: DeepNAT: deep CNN for segmenting neuroanatomy. NeuroImage 170, 434–445 (2018)

Wang, Y., et al.: Automatic tumor segmentation with deep convolutional neural networks for radiotherapy applications. Neural Process. Lett. 48, 1–12 (2018)

Xu, B., et al.: Orchestral fully convolutional networks for small lesion segmentation in brain MRI. In: Proceedings of ISBI 2018, pp. 889–892. IEEE (2018)

Yi, D., Zhou, M., Chen, Z., Gevaert, O.: 3-D convolutional neural networks for glioblastoma segmentation. CoRR abs/1611.04534 (2016)

Zeng, G., Zheng, G.: Multi-stream 3D FCN with MSDS for multi-modality isointense infant brain MRI segmentation. In: Proceedings of ISBI 2018, pp. 136–140 (2018)

Zhang, W., et al.: Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 108, 214–224 (2015)

Zhao, X., et al.: A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 43, 98–111 (2018)

Zhou, C., Ding, C., Lu, Z., Wang, X., Tao, D.: One-pass multi-task convolutional neural networks for efficient brain tumor segmentation. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11072, pp. 637–645. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00931-1_73

Zhuge, Y., et al.: Brain tumor segmentation using holistically-nested neural networks in MRI images. Med. Phys. 44(10), 5234–5243 (2017)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Ali, H.M., Kaiser, M.S., Mahmud, M. (2019). Application of Convolutional Neural Network in Segmenting Brain Regions from MRI Data. In: Liang, P., Goel, V., Shan, C. (eds) Brain Informatics. BI 2019. Lecture Notes in Computer Science(), vol 11976. Springer, Cham. https://doi.org/10.1007/978-3-030-37078-7_14

DOI: https://doi.org/10.1007/978-3-030-37078-7_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37077-0

Online ISBN: 978-3-030-37078-7

eBook Packages: Computer Science, Computer Science (R0)