Abstract

Universal lesion detection (ULD) on computed tomography (CT) images is an important but underdeveloped problem. Recently, deep learning-based approaches have been proposed for ULD, aiming to learn representative features from annotated CT data. However, the data hunger of deep learning models and the scarcity of medical annotations hinder these approaches from advancing further. In this paper, we propose to incorporate domain knowledge from clinical practice into the model design of universal lesion detectors. Specifically, as radiologists tend to inspect multiple windows for an accurate diagnosis, we explicitly model this process and propose a multi-view feature pyramid network (FPN), where multi-view features are extracted from images rendered with varied window widths and window levels; to effectively combine this multi-view information, we further propose a position-aware attention module. With the proposed model design, the data-hunger problem is relieved, as the learning task is made easier by the correctly induced clinical practice prior. We show promising results with the proposed model, achieving an absolute gain of \(\mathbf {5.65\%}\) (in the sensitivity of FPs@4.0) over the previous state of the art on the NIH DeepLesion dataset.

Z. Li and S. Zhang: equal contribution. This work was done while Zihao Li was an intern at Deepwise AI Lab.

1 Introduction

Automated detection of lesions from computed tomography (CT) scans can significantly boost the accuracy and efficiency of clinical diagnosis and disease screening. However, existing computer aided diagnosis (CAD) systems usually focus on certain types of lesions, e.g. lung nodules [1] or focal liver lesions [2], so their clinical usage is limited. Therefore, a Universal Lesion Detector that can identify and localize different types of lesions across the whole body all at once is urgently needed.

Previous methods for ULD are largely inspired by successful deep models in the field of natural images. For instance, Tang et al. [5] adapted a Mask-RCNN [3] based approach to exploit the auxiliary supervision from manually generated pseudo masks. On the other hand, Yan et al. proposed a 3D Context Enhanced (3DCE) RCNN model [6], which harnesses ImageNet pre-trained models for 3D context modeling. Due to a certain degree of resemblance between natural images and CT images, these advanced deep architectures also demonstrated impressive results on ULD.

Nonetheless, the intrinsic characteristics of medical images should not be overlooked. Beyond that, the inspection of medical images also differs from the recognition and detection of natural images. Therefore, it would be helpful if we could efficiently exploit proper domain knowledge to develop deep learning based diagnosis systems. We analyze two aspects of such domain knowledge and explore how to formulate this human expertise into a unified deep learning framework.

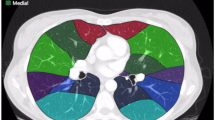

To accommodate the network input, previous studies [5, 6] use a significantly wide window (see Note 1) to compress CT’s 12-bit Hounsfield Unit (HU) range. However, this severely deteriorates the visibility of lesions as a result of degraded image contrast, as shown in Fig. 1(a). In clinical practice, fusing information from multiple windows is effective in improving the accuracy of detecting subtle lesions and reducing false positives (FPs). During visual inspection of CT images, radiologists combine complex information about different inner structures and tissues from multiple reconstructions under different window widths and window levels to locate possible lesions. To imitate this process, we propose to extract prominent features from three frequently examined window widths and window levels and to capture complementary information across different windows with an attention based feature aggregation module.

During the inspection of whole body CT, the body position of a slice (i.e. its position along the z-axis) is also a frequently consulted piece of prior knowledge. Experienced specialists often rely on the underlying correspondence between body position and lesion types to conduct lesion diagnosis. Moreover, radiologists use the position cue as an indicator for choosing a proper window width and window level. For instance, radiologists will mainly refer to the lung, bone and mediastinal windows when inspecting a chest CT. Therefore, it would be very beneficial if we could exploit the position information to conduct lesion diagnosis and window selection in designing our deep detector.

In order to model the aforementioned domain knowledge and human expertise, we develop an MVP-Net (Multi-View FPN with Position-aware attention) for universal lesion detection. FPN [4] is used as a building block to improve detection performance for small lesions. To leverage information from multiple window reconstructions, we build a multi-view FPN to extract multi-view (see Note 2) features using a three-pathway architecture. Then, a channel-wise attention module is employed to capture complementary information across different views. To further consider the position cues, we develop a multi-task learning scheme to embed the position information into the appearance features. Thus, we can explicitly condition the lesion finding problem on the entangled appearance and position features. Moreover, by connecting the proposed attention module to such an entangled feature, we are able to conduct position-aware feature aggregation. Extensive experiments on the DeepLesion dataset validate the effectiveness of our proposed MVP-Net. We achieve an absolute gain of \(\mathbf {5.65\%}\) over the previous state of the art (3DCE with 27 slices) while considering 3D context from only 9 slices.

2 Methodology

Figure 2 gives an overview of the MVP-Net. For simplicity, we illustrate the case that takes three consecutive CT slices as network input. It should be noted that MVP-Net can be easily extended to variants that take more slices as input to consider 3D context, as in 3DCE [6].

The proposed MVP-Net takes three views of the original CT scan as input and employs a late fusion strategy to fuse multi-view features before the region proposal network (RPN). As shown in part A of Fig. 2, multi-view features are extracted from the three-pathway backbone with shared parameters. Then, in part B, to exploit the position information, a position recognition network is attached to the concatenated multi-view features before the RPN. Finally, a position-aware attention module is introduced to aggregate the multi-view features, which are then passed to the RPN and RCNN network for the final lesion detection. We elaborate on these building blocks in the following subsections.

2.1 Multi-view FPN

The multi-view input for the MVP-Net is composed of multiple reconstructions under different window widths and window levels. Specifically, we apply the k-means algorithm to cluster the recommended windows (labeled by radiologists) in the DeepLesion dataset and obtain the three most frequently inspected windows, whose window levels and window widths are \([50, 449]\), \([-505, 1980]\) and \([446, 1960]\) respectively. As shown in Fig. 1, these clustered windows approximately correspond to the soft-tissue window, the lung window, and the union of the bone, brain, and mediastinal windows respectively.
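The clustering step can be illustrated with a small sketch of Lloyd's k-means on (window level, window width) pairs. The text does not state the distance measure, the initialization, or any feature scaling, so Euclidean distance on raw HU values and hand-picked initial centroids are assumptions here, and the six input pairs are hypothetical values rather than DeepLesion annotations.

```python
import math

def kmeans(points, centroids, iterations=20):
    """Plain Lloyd's k-means on 2D points; returns the final centroids."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda k: math.dist(p, centroids[k]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        updated = []
        for members, old in zip(clusters, centroids):
            if members:
                updated.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                updated.append(old)  # keep an empty cluster's centroid in place
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    return centroids

# Hypothetical (window level, window width) pairs in HU, NOT taken from DeepLesion.
windows = [(40, 400), (60, 500), (-600, 1500), (-500, 2000), (400, 1800), (500, 2200)]
initial = [windows[0], windows[2], windows[4]]  # assumed initialization
centers = kmeans(windows, initial)
```

On this toy input the centroids settle at (50, 450), (-550, 1750) and (450, 2000) after one update. With real annotations the result would depend on the initialization, which is why the sketch fixes it explicitly.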

As shown in Fig. 2, we adopt a three-pathway architecture to extract the most prominent features from each representative view. FPN [4] is used as the backbone network of each pathway. Each pathway takes three consecutive slices as input to model 3D context.
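How the input to the three pathways might be assembled is sketched below: each window maps HU values to a normalized intensity, and each view stacks three consecutive slices. The function names, the linear mapping to [0, 1], and the clamping of neighbour indices at the volume boundary are assumptions made for illustration; only the three (level, width) pairs come from the text.

```python
# Window (level, width) pairs in HU as reported in Sect. 2.1.
WINDOWS = [(50, 449), (-505, 1980), (446, 1960)]

def apply_window(hu, level, width):
    """Map a HU value to [0, 1], clipping values outside the window."""
    low = level - width / 2.0
    return min(max((hu - low) / width, 0.0), 1.0)

def render_views(volume, index, windows=WINDOWS):
    """Return one 3-slice stack per window, centred on slice `index`.

    `volume` is a list of 2D slices (lists of rows of HU values). Neighbour
    indices are clamped at the volume boundary, which is an assumption here.
    """
    last = len(volume) - 1
    neighbours = [min(max(index + d, 0), last) for d in (-1, 0, 1)]
    views = []
    for level, width in windows:
        stack = [[[apply_window(v, level, width) for v in row]
                  for row in volume[k]] for k in neighbours]
        views.append(stack)
    return views

# Toy 1x2 slices: two soft-tissue values, 40 HU and 60 HU, over three slices.
volume = [[[40.0, 60.0]] for _ in range(3)]
views = render_views(volume, index=1)
```

In this toy case the 20 HU difference spans a larger share of the output range under the narrower soft-tissue window (20/449) than under the lung window (20/1980), which is the contrast effect that motivates rendering several windows.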

2.2 Attention Based Feature Aggregation

Features extracted from different views (windows) need to be properly aggregated for accurate diagnosis. A naive implementation for feature aggregation could be concatenating them along the channel dimension. However, such an implementation would have to rely on the following convolution layers for effective feature fusion.

In the proposed MVP-Net, we employ a channel-wise attention based feature aggregation mechanism to adaptively reweight the feature maps of different views, imitating the way radiologists put different weights on multiple windows for lesion identification. We adopt an implementation similar to the Convolutional Block Attention Module (CBAM) [9] to realize the channel-wise attention. Details of the attention module are shown in Fig. 2. Denoting \({\varvec{F}}\) as the input feature map, we first aggregate the features with average pooling \(P_{avg}\) and max pooling \(P_{max}\) separately to extract representative descriptions; then a fully-connected bottleneck module \(\varvec{\theta (\cdot )}\) and a sigmoid layer \(\varvec{\sigma (\cdot )}\) are sequentially applied to the aggregated features to generate combinational weights of different channels. Multiplying \(\varvec{F}\) by the weights, the output \(\varvec{F}_{\varvec{c}}\) of the feature aggregation module can be described as Eq. 1:

\[ \varvec{F}_{\varvec{c}} = \varvec{F} \cdot \varvec{\sigma }\big (\varvec{\theta }(P_{avg}(\varvec{F})) + \varvec{\theta }(P_{max}(\varvec{F}))\big ) \qquad (1) \]
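The channel-wise reweighting described above can be sketched on nested lists as follows. Sharing one bottleneck between the two pooled descriptors, using a ReLU inside it, and summing the two outputs before the sigmoid all follow CBAM and are assumptions about this model; the weight matrices `w_down` and `w_up` and the toy feature map are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def bottleneck(vec, w_down, w_up):
    """Two-layer fully connected bottleneck with a ReLU in between (assumed)."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w_down]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_up]

def channel_attention(features, w_down, w_up):
    """Reweight each channel of `features` (C x H x W nested lists)."""
    flat = [[v for row in channel for v in row] for channel in features]
    avg_desc = [sum(c) / len(c) for c in flat]  # P_avg: one value per channel
    max_desc = [max(c) for c in flat]           # P_max: one value per channel
    # The same bottleneck is applied to both descriptors; summing them before
    # the sigmoid follows CBAM and is an assumption about this model.
    logits = [a + m for a, m in zip(bottleneck(avg_desc, w_down, w_up),
                                    bottleneck(max_desc, w_down, w_up))]
    weights = [sigmoid(z) for z in logits]
    scaled = [[[w * v for v in row] for row in channel]
              for w, channel in zip(weights, features)]
    return scaled, weights

# Toy input: 2 channels of size 1x2 (in the model, the channels would come
# from the concatenated views). Hypothetical weights: 2 -> 1 -> 2 bottleneck.
features = [[[1.0, 3.0]], [[0.0, 0.0]]]
w_down = [[1.0, -1.0]]
w_up = [[1.0], [-1.0]]
scaled, weights = channel_attention(features, w_down, w_up)
```

Here the active first channel receives a weight close to 1 and the empty second channel a weight close to 0, so the aggregation keeps the informative channel and suppresses the other without relying on later convolution layers to do so.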

2.3 Position-Aware Modeling

Due to FPN’s large receptive field, the position information in the xy plane (or context information) has already been inherently modeled. Therefore, we mainly focus on the modeling of the position information along the z-axis. Specifically, we propose to learn position-aware appearance features by introducing a position prediction task during training. Entangled position and appearance features are learned through the multi-task design in the MVP-Net that jointly predicts the position and the detection bounding box.

As shown in Fig. 2, our position prediction module is supervised by two losses: a regression loss and a classification loss. The regression loss is applied after the continuous position regressor, whose learning targets are generated by a self-supervised body-part regressor [8] on the DeepLesion dataset [7]. Due to noise in the continuous labels, we further divide position values into three classes (chest, abdomen, and pelvis) according to the distribution of the position values on the z-axis, and use a classification loss to learn this discrete position, as it is more robust to noise and improves training stability.

Let \(\varvec{y}\) and \(\varvec{p}\) denote the ground truth of the discrete and continuous position values, respectively. Given the bottleneck feature \(\varvec{x}\) of FPN, we use two subnets \(\varvec{\phi (\cdot )}\) and \(\varvec{\psi (\cdot )}\), each consisting of several CNN layers, to obtain the corresponding predictions. The overall loss function of the position module is described in Eq. 2.
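A two-term position loss of this kind could look like the sketch below, which adds a classification term on the discrete body part to a regression term on the continuous position. The text does not give the exact form of either term here, so softmax cross-entropy, squared error, and the weighting factor `reg_weight` are all assumptions, as is reading the first subnet as the classifier and the second as the regressor.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def position_loss(class_logits, target_class, predicted_pos, target_pos,
                  reg_weight=1.0):
    """Cross-entropy on the discrete body part plus a squared-error term
    on the continuous z-position (both choices are assumptions)."""
    probs = softmax(class_logits)
    classification = -math.log(probs[target_class])
    regression = (predicted_pos - target_pos) ** 2
    return classification + reg_weight * regression

# Three classes (chest, abdomen, pelvis); uniform logits, perfect regression.
loss = position_loss([0.0, 0.0, 0.0], target_class=1,
                     predicted_pos=0.5, target_pos=0.5)
```

With uniform logits over three classes and a perfect regression, the loss reduces to the cross-entropy of a uniform guess, ln 3. A regression error adds its squared value on top of that.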

3 Experiments

3.1 Experimental Setup

Dataset and Metric. The NIH DeepLesion [7] is a large-scale CT dataset, consisting of 32,735 lesion instances on 32,120 axial CT slices. Algorithm evaluation is conducted on the official testing set (\(15\%\)), and we report sensitivity at various FPs per image levels as the evaluation metric. For simplicity, we mainly compare the sensitivity at 4 FPs per image in the text of the following subsections.
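The metric can be made concrete with a small sketch that sweeps detections by confidence and reports the fraction of lesions found before the false-positive budget is exhausted. The function name and input layout are hypothetical, and the rule for matching a detection to a lesion is left to the caller because it is not specified in this section.

```python
def sensitivity_at_fps(detections, num_lesions, num_images, fps_per_image):
    """Sensitivity when at most `fps_per_image` false positives per image
    are allowed on average.

    `detections` holds (score, lesion_id) pairs pooled over all images, where
    lesion_id is None for a false positive. How a detection is matched to a
    lesion is decided upstream and is not specified here.
    """
    budget = fps_per_image * num_images
    found, false_positives, best = set(), 0, 0.0
    for score, lesion_id in sorted(detections, key=lambda d: d[0], reverse=True):
        if lesion_id is None:
            false_positives += 1
            if false_positives > budget:
                break  # lowering the threshold further would exceed the budget
        else:
            found.add(lesion_id)  # duplicate hits on one lesion count once
        best = len(found) / num_lesions
    return best

# Toy example: 2 images, 4 lesions, 6 detections (3 of them false positives).
detections = [(0.9, "a"), (0.8, None), (0.7, "b"),
              (0.6, None), (0.5, None), (0.4, "c")]
at_half = sensitivity_at_fps(detections, num_lesions=4, num_images=2, fps_per_image=0.5)
at_two = sensitivity_at_fps(detections, num_lesions=4, num_images=2, fps_per_image=2)
```

In the toy example, a budget of 0.5 FPs per image (one false positive in total) yields a sensitivity of 0.5, while 2 FPs per image admits all detections and yields 0.75.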

Baselines. We compare our proposed MVP-Net with two state-of-the-art methods, i.e. 3DCE [6] and ULDor [5]. ULDor adopts Mask-RCNN for improved detection performance, while 3DCE exploits 3D context to obtain superior lesion detection results. The previous best results are achieved by 3DCE when using 27 slices to model the 3D context.

Implementation Details. We use FPN with ResNet-50 for all experiments. Parameters of the backbone are initialized from the ImageNet pre-trained models, and all other layers are randomly initialized. Anchor scales in FPN are set to (16, 32, 64, 128, 256). We normalize the CT slices in the z-axis to a slice interval of 2 mm, and then resize them to 800 pixels in the xy-plane for both training and testing. We augment training data with horizontal flips, and no other data augmentation strategies are employed. The models are trained using stochastic gradient descent for 13 epochs. The base learning rate is 0.002, and it is reduced by a factor of 10 after the 10th and 12th epochs.
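The learning rate schedule above can be written as a small step function. The sketch assumes epochs are counted from 1 and that each reduction takes effect from the following epoch; the function and parameter names are illustrative.

```python
def learning_rate(epoch, base_lr=0.002, milestones=(10, 12), gamma=0.1):
    """Learning rate for a 1-indexed epoch under a step schedule.

    The rate is multiplied by `gamma` once for every milestone epoch that
    has already been completed.
    """
    drops = sum(1 for m in milestones if epoch > m)
    return base_lr * gamma ** drops

schedule = [learning_rate(e) for e in range(1, 14)]  # 13 epochs in total
```

Under these assumptions, epochs 1 to 10 use 0.002, epochs 11 and 12 use 0.0002, and epoch 13 uses 0.00002.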

3.2 Comparison with the State of the Art

The comparison between our proposed model and the previous state-of-the-art methods is shown in Table 1. As the original implementation of 3DCE is based on R-FCN [10], we re-implement 3DCE with the FPN backbone for a fair comparison. The results show that with FPN as the backbone, the 3DCE model achieves a performance boost of over 2% compared to the R-FCN based model. This validates our choice of FPN as the base network.

More importantly, even with far less 3D context, our model with 3 slices for context modeling already achieves state-of-the-art detection results, outperforming the 27-slice R-FCN based and FPN based 3DCE models by \(3.38\%\) and \(1.43\%\) respectively. Compared with the 3-slice counterparts, our model shows performance gains of \(6.82\%\) and \(5.76\%\). This demonstrates the effectiveness of the proposed multi-view learning strategy as well as the position-aware attention module. Finally, by incorporating more 3D context, our model with 9 slices gets a further performance boost and surpasses the previous state of the art by a large margin (\(\mathbf {5.65\%}\) for FPs@4.0 and \(\mathbf {11.35\%}\) for FPs@0.5).

3.3 Ablation Study

We perform an ablation study on four major components: multi-view modeling, attention based feature aggregation, position-aware modeling, and 3D context enhanced modeling. As shown in Table 2, using simple feature concatenation for feature aggregation, the multi-view FPN obtains a \(2.91\%\) improvement over the FPN baseline. Changing the aggregation strategy to channel-wise attention accounts for another \(1.71\%\) improvement. Learning the entangled position and appearance features with position-aware modeling then brings a further \(1.14\%\) boost in sensitivity. Combining our proposed approach with 3D context modeling gives the best performance.

We also perform a case study to analyze the importance of multi-view modeling. As shown in Fig. 3, the model indeed benefits from the multi-view modeling: lesions that are originally indistinguishable in the view of 3DCE, due to the wide window range and lack of contrast, now become distinguishable under the view of appropriate windows. Thus our model presents better identification and localization performance.

4 Conclusion

In this paper, we address the universal lesion detection problem by incorporating human expertise into the design of the deep architecture. Specifically, we propose a multi-view FPN with position-aware attention (MVP-Net) to incorporate the clinical practice of multi-window inspection and position-aware diagnosis into the deep detector. Without bells and whistles, our proposed model, which is intuitive and simple to implement, improves on the current state of the art by a large margin. The MVP-Net cuts the number of FPs per image needed to reach a sensitivity of \(91\%\) by over three quarters (from 16 to 4) and reaches a sensitivity of \(87.60\%\) with only 2 FPs per image, making it better suited to serve as an initial screening tool in daily clinical practice.

Notes

1. Windowing, also known as gray-level mapping, is used to change the appearance of the picture to highlight particular structures.

2. As a common practice in machine learning, we refer to reconstruction under a certain window width and window level as a view of that CT.

References

1. Wang, B., Qi, G., Tang, S., Zhang, L., Deng, L., Zhang, Y.: Automated pulmonary nodule detection: high sensitivity with few candidates. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11071, pp. 759–767. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00934-2_84

2. Lee, S., Bae, J.S., Kim, H., Kim, J.H., Yoon, S.: Liver lesion detection from weakly-labeled multi-phase CT volumes with a grouped single shot multibox detector. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11071, pp. 693–701. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00934-2_77

3. He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: ICCV, pp. 2961–2969 (2017)

4. Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: CVPR, pp. 2117–2125 (2017)

5. Tang, Y., Yan, K., Tang, Y., Liu, J., Xiao, J., Summers, R.M.: ULDor: a universal lesion detector for CT scans with pseudo masks and hard negative example mining. arXiv preprint arXiv:1901.06359 (2019)

6. Yan, K., Bagheri, M., Summers, R.M.: 3D context enhanced region-based convolutional neural network for end-to-end lesion detection. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11070, pp. 511–519. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00928-1_58

7. Yan, K., et al.: Deep lesion graphs in the wild: relationship learning and organization of significant radiology image findings in a diverse large-scale lesion database. In: CVPR, pp. 9261–9270 (2018)

8. Yan, K., Lu, L., Summers, R.M.: Unsupervised body part regression via spatially self-ordering convolutional neural networks. In: ISBI, pp. 1022–1025 (2018)

9. Woo, S., Park, J., Lee, J.-Y., Kweon, I.S.: CBAM: convolutional block attention module. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 3–19. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_1

10. Dai, J., Li, Y., He, K., Sun, J.: R-FCN: object detection via region-based fully convolutional networks. In: NIPS, pp. 379–387 (2016)

Acknowledgement

This work is funded by the National Natural Science Foundation of China (Grant No. 61876181, 61721004, 61403383, 61625201, 61527804) and the Projects of Chinese Academy of Sciences (Grant QYZDB-SSW-JSC006 and Grant 173211KYSB20160008). We would like to thank Feng Liu for valuable discussions.

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, Z., Zhang, S., Zhang, J., Huang, K., Wang, Y., Yu, Y. (2019). MVP-Net: Multi-view FPN with Position-Aware Attention for Deep Universal Lesion Detection. In: Shen, D., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. MICCAI 2019. Lecture Notes in Computer Science(), vol 11769. Springer, Cham. https://doi.org/10.1007/978-3-030-32226-7_2

DOI: https://doi.org/10.1007/978-3-030-32226-7_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32225-0

Online ISBN: 978-3-030-32226-7

eBook Packages: Computer Science, Computer Science (R0)