Abstract

When scientometric indicators are used to compare research units active in different scientific fields, there is often a need to make corrections for differences between fields, for instance, differences in publication, collaboration, and citation practices. Field-normalized indicators aim to make such corrections. The design of these indicators is a significant challenge. We discuss the main issues in the design of field-normalized indicators and present an overview of the different approaches that have been developed for dealing with the problem of field normalization. We also discuss how field-normalized indicators can be evaluated and consider the sensitivity of scientometric analyses to the choice of a field-normalization approach.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

- field normalization

- field classification system

- scientometrics

- scientometric indicator

- impact indicator

- productivity indicator

1 Background

Many scientometric analyses are restricted to a single field of science, but scientometric analyses also commonly stretch out over multiple scientific fields, and they often even aim to cover the entire scientific universe. University rankings, for instance, rely on scientometric indicators that are supposed to provide meaningful information about the performance of universities across many different fields of science. Likewise, many universities regularly carry out scientometric analyses in which they compare their performance in different scientific fields.

Scientific fields, of course, differ from each other in many ways, and some of these differences have important implications for scientometric analyses . For instance, in some fields, researchers tend to produce many more outputs than in other fields. In some fields, researchers focus on publishing journal articles, while in other fields they are more interested in writing books. In some fields, researchers work together in large collaborative teams, often resulting in publications with many co-authors, while in other fields researchers prefer to work individually or in small teams. In some fields, researchers cite a lot, while in other fields they cite much more sparingly, and in some fields they mainly cite recent work, while in other fields they prefer to cite older literature.

Given these differences between scientific fields, it is clear that the interpretation of a scientometric analysis that covers multiple fields is far from straightforward. Suppose that a biologist has produced 25 publications during the past 5 years, while an economist has produced 10 publications during the same time period. Can it be concluded that the biologist has been more productive than the economist? This depends on our understanding of the concept of productivity. If productivity is understood simply as the number of publications produced during a certain time period, the biologist has obviously been more productive than the economist. However, in many cases, we are probably interested in a more sophisticated concept of productivity. We may have in mind a concept of productivity that accounts for differences between fields in the rate at which researchers tend to produce publications. Based on such a more refined notion of productivity, the answer to our question is much less obvious. It may actually turn out that from this perspective, the economist should be considered more productive than the biologist.

To capture the more sophisticated concept of productivity suggested above, we need a scientometric indicator that in some way corrects for differences between scientific fields in the typical number of publications produced by a researcher. Such an indicator is referred to as a field-normalized indicator. Field-normalized indicators can be constructed not only for the concept of productivity, but also for other scientometric concepts. In the literature, field-normalized indicators of scientific impact, calculated based on citation counts, have received the most attention, and they will also play a prominent role in this chapter.

The design of field-normalized indicators is a significant challenge. In this chapter, we discuss the main issues in the design of these indicators. We present an overview of the different approaches that have been developed for dealing with the problem of field normalization . We also discuss how field-normalized indicators can be evaluated and consider the sensitivity of scientometric analyses to the choice of a field-normalization approach.

This chapter partly builds on a recent review of the literature on citation impact indicators published by one of the authors [11.1].

2 What Is Field Normalization?

It is notoriously difficult to define in a precise way what is meant by field normalization. A precise definition of the idea of field normalization requires a definition of the notion of field. It also requires a clear perspective on the way in which scientometric indicators are affected by differences between fields. As we will explain below, these requirements are challenging, and, therefore, the idea of field normalization will almost inevitably remain somewhat ill defined.

Defining the notion of a field is far from straightforward. There is a lack of standardized terminology. No agreement exists on the differences between the term field and terms such as area, discipline, domain, specialty, and topic. In fact, these terms often seem to be used more or less interchangeably. More fundamentally, the idea of a field can be conceptualized in different ways. A useful overview of different conceptualizations is provided by Sugimoto and Weingart [11.2]. They distinguish between cognitive, social, communicative, and institutional perspectives as well as perspectives based on separatedness and tradition. Each of these perspectives provides a different understanding of the idea of a field.

Defining the notion of a field is made even more difficult by the fact that science is structured in a hierarchical way, allowing fields to be identified at different hierarchical levels [11.3]. For instance, depending on the hierarchical level that one prefers, citation analysis, bibliometrics, information science, and social sciences could all be seen as fields. Moreover, even when one focuses as much as possible on a single hierarchical level, fields typically will not be neatly separated from each other. For instance, bibliometrics, scientometrics, and research evaluation could perhaps be regarded as fields at more or less the same hierarchical level. However, it is clear that these fields are strongly interrelated and have a considerable overlap.

Field normalization of scientometric indicators is motivated by the idea that differences between fields lead to distortions in scientometric indicators. One could think of this in terms of signal and noise. Scientometric indicators provide a signal of concepts such as productivity or scientific impact, but they are also affected by noise. This noise may partly be due to differences between fields, for instance, differences in publication, collaboration, and citation practices. Field normalization aims to remove this noise while maintaining the signal.

However, the distinction between signal and noise is much less clear than it may seem at first sight. To illustrate this, let us consider citation-based indicators of scientific impact. Publications in information science on average are cited much less frequently than publications in, for instance, the life sciences. A citation-based indicator that does not account for this may be considered very noisy. The indicator may be seen as strongly biased against information science research. Suppose, therefore, that we use an indicator that corrects for differences in citation density between information science and other fields. Let us now zoom in on information science. Within information science, publications in scientometrics on average receive significantly more citations than publications in, for instance, library science. Again, we may feel that our indicator is too noisy and that we need to get rid of the noise. Consequently, suppose that we use an indicator that corrects for differences in citation density not only between information science and other fields, but also between scientometrics and other subfields within information science. We now zoom in on scientometrics. Within scientometrics, publications on citation analysis tend to receive more citations than publications on a topic such as co-authorship analysis. This may also be seen as noise that we need to get rid of. The next step then may be to use an indicator that corrects for differences in citation density even between different topics within scientometrics. However, we could, of course, argue that even this indicator is noisy. Suppose that empirical publications on citation analysis are cited more frequently than theoretical publications. This could then be claimed to show that the empirical publications have a higher impact than the theoretical publications. On the other hand, we could also argue that empirical and theoretical research on citation analysis represent two different subtopics and that we need to get rid of noise due to differences in citation density between these subtopics. However, if we keep following such a reasoning, at some point everything is considered noise, and there is no signal left, meaning that indicators become completely non-informative.

The above example illustrates that there is no objective way of distinguishing between signal and noise. We may say that scientometric indicators are distorted by noise that is due to differences between fields. However, fields can reasonably be defined at different hierarchical levels, leading to different perspectives on what should count as a signal and what should be seen as noise. When working with field-normalized indicators , choosing a certain hierarchical level for defining fields, and consequently making a certain distinction between signal and noise, is a normative decision. There is no objective way in which this choice can be made. Probably there is agreement that fields should not be defined in a very broad or very narrow way, but this still leaves open many intermediate ways in which fields can be defined. A single optimal way of defining fields does not exist [11.3]. Ideally, the hierarchical level at which fields are defined is chosen in such a way that it aligns well with the purpose of a specific scientometric analysis. In some analyses, it may be desirable to work with relatively narrow fields, while in other analyses broader fields may be appropriate.

3 Field Classification Systems

Most field-normalized indicators require an operationalization of scientific fields. We refer to such an operationalization as a field classification system. Different types of field classification systems can be distinguished. We make a distinction between classification systems of journals, publications, and researchers. Many different classification systems exist. We do not aim to provide a comprehensive overview of these systems. Instead, we focus specifically on classification systems that have been used for field-normalization purposes, either in the scientometric literature or in applied scientometric work. Each of the classification systems discussed below deals in a different way with the challenges in operationalizing scientific fields.

3.1 Field Classification Systems of Journals

The field classification systems used most frequently by field-normalized indicators are journal-based systems. In these systems, each journal is assigned to one or more fields. Some journal-based classification systems do not allow fields to overlap. A journal can be assigned to only one field in such systems. However, in most journal-based classification systems, overlap of fields is allowed, in which case a journal may belong to multiple fields. Some journal-based classification systems have a hierarchical structure and consist of multiple levels. Each field at a lower level is then considered to be part of a field at a higher level.

The Web of Science ( ) database offers a classification system in which each journal indexed in the database is assigned to one or more fields. These fields are referred to as categories. There are about 250 fields in the WoS classification system.

A somewhat similar classification system is made available in the Scopus database. This system is referred to as the All Science Journal Classification ( ). The system has a hierarchical structure consisting of two levels. There are over 300 fields at the bottom level. These fields have been aggregated into 27 fields at the top level. Each journal indexed in Scopus belongs to one or more fields. A comparison of the accuracy of the WoS and Scopus classification systems is reported in a study by Wang and Waltman [11.4]. According to this study, the WoS classification system is significantly more accurate than the Scopus classification system.

In the Essential Science Indicators, a tool that is based on the WoS database, a classification system of 22 broad fields is made available. In this system, it is not possible for a journal to belong to multiple fields. Each journal is assigned exclusively to a single field.

Other journal-based classification systems include the classification system of the US National Science Foundation, the classification system developed by Science-Metrix, and the classification system of Glänzel and Schubert [11.5]. The classification system of the National Science Foundation covers 125 fields, which have been aggregated into 13 broad fields. A journal can belong to only one field in this system. The system has been used in the Science and Engineering Indicators reports prepared by the National Science Foundation for a long time. Science-Metrix is a company specialized in research evaluation that has developed its own classification system. This system has been made freely available. It includes 176 fields, aggregated into 22 broad fields, with each journal being assigned to only one field. We refer to Archambault et al [11.6] for more details on the approach that was taken to construct the Science-Metrix classification system. The classification system of Glänzel and Schubert [11.5] consists of two levels. The 67 fields at the bottom level have been aggregated into 15 fields at the top level. Journals may be assigned to more than one field in this system.

Multidisciplinary journals with a broad scope represent a significant challenge for journal-based classification systems. Nature, Proceedings of the National Academy of Sciences, and Science are well-known examples of such journals. Other examples are open access mega journals such as PLOS ONE and Scientific Reports. In a journal-based classification system, these multidisciplinary journals are typically assigned to a special category. In the WoS categories classification system, this category is, for instance, called Multidisciplinary Sciences. In the Scopus ASJC classification system, it is referred to as multidisciplinary . The use of a special category for multidisciplinary journals is problematic because such a category clearly does not represent a scientific field. In practice, this problem is often addressed by creating a publication-based classification system for publications in multidisciplinary journals and by complementing a journal-based classification system with such a publication-based classification system. This approach, introduced by Glänzel et al [11.7]; see also [11.5], has been widely adopted. Of course, there is always some arbitrariness in deciding which journals should be considered multidisciplinary. It is clear that journals such as the ones mentioned above are of a multidisciplinary nature. However, it may be argued that journals such as The Lancet, New England Journal of Medicine, and Physical Review Letters should also be considered multidisciplinary and that it would be preferable to create a publication-based classification system for publications in these journals.

3.2 Field Classification Systems of Publications

Instead of journal-based field classification systems, it is also possible to use publication-based field classification systems. Publication-based classification systems potentially offer a more accurate and more fine-grained representation of scientific fields than their journal-based counterparts. Most publication-based classification systems are restricted to a single scientific discipline. Algorithmically constructed classification systems are an exception and may cover all scientific fields.

There are various scientific disciplines that have their own publication-based classification system. These systems often have a hierarchical structure, and they usually allow publications to be assigned to multiple fields. The use of these systems in field-normalized indicators was studied by Bornmann et al [11.8], Neuhaus and Daniel [11.9], Radicchi and Castellano [11.10], and van Leeuwen and Calero Medina [11.11]. These authors focused on, respectively, the Medical Subject Headings, the Chemical Abstracts sections, the Physics and Astronomy Classification Scheme, and the EconLit classification system. Like in the case of the journal-based classification systems discussed in Sect. 11.3.1, it is important to be aware that publication-based classification systems such as the ones mentioned above were not designed specifically for field-normalization purposes.

Publication-based classification systems that are constructed algorithmically may cover all scientific fields rather than only fields within a single discipline. An approach for the algorithmic construction of publication-based classification systems was proposed by Waltman and van Eck [11.12]. In this approach, a classification system is constructed by clustering publications based on direct citation relations. Each publication is assigned to only one field. The use of algorithmically constructed publication-based classification systems in field-normalized indicators was studied by Ruiz-Castillo and Waltman [11.13] and Perianes-Rodriguez and Ruiz-Castillo [11.14]. A practical application can be found in the CWTS Leiden Ranking, a bibliometric ranking of major universities worldwide that is available at http://www.leidenranking.com. In this ranking, citation-based indicators of scientific impact are normalized using an algorithmically constructed publication-based classification system in which about 4000 scientific fields are distinguished.

An algorithmic approach to the construction of a publication-based classification system is also taken in Microsoft Academic , a recently introduced bibliometric data source somewhat similar to Google Scholar. Hug et al [11.15] found that fields in the classification system of Microsoft Academic are too specific and not coherent, leading them to conclude that the classification system of Microsoft Academic is not suitable for field-normalization purposes.

3.3 Field Classification Systems of Researchers

Field classification systems of researchers represent a quite different approach to operationalize scientific fields. The use of researcher-based classification systems in field-normalized indicators is much less common than the use of journal-based and publication-based classification systems. Below we discuss two researcher-based classification systems that have been used in the scientometric literature.

Giovanni Abramo and Ciriaco Andrea D'Angelo have published a large number of papers in which they use the official classification system of Italian researchers [11.16]. This is a hierarchical system consisting of two levels. At the top level, 14 fields are distinguished. These fields are referred to as university disciplinary areas. At the bottom level, there are 370 fields, referred to as specific disciplinary sectors, with each specific disciplinary sector being part of a single university disciplinary area. In Italy, each researcher at a university must belong to exactly one specific disciplinary sector. We will return to the Italian classification system of researchers in Sect. 11.5.

Another example of a classification system of researchers is the classification system of the Mendeley reference management tool. This system was used by Bornmann and Haunschild [11.17] in a proposal for a field-normalized indicator of scientific impact based on Mendeley reader counts. In the Mendeley classification system, a distinction between 28 fields is made. Each Mendeley user is able to assign him- or herself to one of these 28 fields.

4 Overview of Field-Normalized Indicators

In this section, we provide an overview of field-normalized indicators that have been proposed in the scientometric literature. The literature on field-normalized indicators is extensive. We, therefore, do not discuss each individual proposal presented in the literature. Instead, our focus is on what we consider to be the more significant contributions that have been made. Other contributions may not be covered or may be mentioned only very briefly. We also do not aim to give a historical overview of the literature. We discuss important ideas presented in the literature but we do not necessarily trace the historical development of these ideas.

In principle, field-normalized variants can be developed for any type of scientometric indicator. In practice, however, scientometricians have put most effort into the development of field-normalized indicators of the impact of scientific publications, where impact is typically operationalized using citations. Our focus in this section is, therefore, mostly on field-normalized indicators of impact, although we also discuss field-normalized indicators of productivity. Most of the indicators that we consider in this section rely on field classification systems such as the ones introduced in the previous section, but we also discuss indicators that do not require a field classification system.

Field-normalized indicators typically normalize not only for the field of a publication but also for the age of a publication. This is important in the case of indicators based on citations, since older publications have had more time to receive citations than younger publications. Indicators may also normalize for other characteristics of a publication. For instance, they sometimes normalize for publication type, where a distinction can be made between categories such as research article, review article, and letter.

4.1 Indicators of Impact: Indicators Based on Normalized Citation Scores

The normalized citation score of a publication can be defined in different ways. The most straightforward approach is to define it as the ratio of the actual and the expected number of citations of a publication, where the expected number of citations of a publication equals the average number of citations of all publications in the same field and in the same publication year (and often also in the same publication type category). Whether publications are in the same field is determined based on a field classification system, such as one of the systems discussed in Sect. 11.3.

In order to obtain indicators at the level of, for instance, a research group, a research institution, or a journal, the normalized citation scores of individual publications need to be aggregated. This is typically done either by averaging or by summing the normalized citation scores. Averaging the scores yields a so-called size-independent indicator of impact, while summing the scores gives a size-dependent impact indicator . These indicators are known under various different names. The size-independent indicator is, for instance, known as the mean normalized citation score [11.18], the item-oriented field normalized citation score average [11.19], the category normalized citation impact (in the commercial InCites tool), and the field weighted citation impact (in the commercial Scopus and SciVal tools). The size-dependent indicator is sometimes referred to as the total normalized citation score [11.18].

A recent development is the application of the above approach for calculating field-normalized impact indicators to bookmarks in Mendeley instead of citations. Studies of field-normalized indicators based on Mendeley bookmarks, often interpreted in terms of readership, have been reported by Fairclough and Thelwall [11.20] and Haunschild and Bornmann [11.21].

A number of alternative approaches have been explored for defining the normalized citation score of a publication. One alternative is to leave out non-cited publications from the calculation of the expected number of citations of a publication [11.22, 11.23]. Another alternative is to determine the expected number of citations of a publication based on the idea of so-called exchange rates, where the similarity between fields in the shape of citation distributions is used to determine how many citations in one field can be considered equivalent to a given number of citations in another field [11.24, 11.25]. A third alternative is to apply a logarithmic transformation to the citation counts of publications [11.19, 11.26, 11.27, 11.28]. A fourth alternative is to transform citation counts into \(z\)-scores [11.29, 11.30, 11.31]. This approach can be combined with a logarithmic transformation of citation counts [11.19]. A fifth alternative is to transform citation counts using a two-parameter power-law function [11.32]. Finally, a sixth alternative proposed in the literature is to transform citation counts into binary variables based on whether or not publications have been cited [11.28].

There has also been considerable discussion in the literature about the best way to calculate field-normalized impact indicators at aggregate levels, for instance, at the level of research groups or research institutions [11.18, 11.19, 11.33, 11.34, 11.35, 11.36, 11.37]. The approach discussed above, in which normalized citation scores of individual publications are averaged or summed, is nowadays the most commonly used approach. An alternative approach is to calculate the average or the sum of the actual citation counts of a set of publications and to divide the outcome by the average of the expected citation counts of the same set of publications [11.38, 11.39, 11.40]. In this alternative approach, normalization can be considered to take place at the level of an oeuvre of publications rather than at the level of individual publications [11.34]. When an analysis includes publications from multiple fields or multiple years, normalization at the oeuvre level will generally yield results that are different from the outcomes obtained by normalizing at the level of individual publications. We refer to Larivière and Gingras [11.41], Waltman et al [11.42], and Herranz and Ruiz-Castillo [11.43] for empirical analyses of the differences between the two approaches.

Another issue in the calculation of field-normalized impact indicators at aggregate levels is the choice of a counting method for handling co-authored publications. Full and fractional counting are the two most commonly used counting methods. In the case of full counting, each publication is fully counted for each co-author. On the other hand, in the case of fractional counting, a publication with \(n\) co-authors is counted with a weight of \(1/n\) for each co-author. The choice of counting method influences the extent to which an indicator can be considered to provide properly field-normalized statistics [11.44]. We will return to this issue in Sect. 11.5.1.

4.2 Indicators of Impact:Indicators Based on Percentiles

Percentile-based impact indicators value publications based on their position in the citation distribution of their field and publication year, where fields are defined using a field classification system, for instance, one of the systems discussed in Sect. 11.3. In the most straightforward case, these indicators make a distinction between lowly and highly cited publications. For instance, all publications that in terms of citations belong to the top \({\mathrm{10}}\%\), top \({\mathrm{5}}\%\), or top \({\mathrm{1}}\%\) of their field and publication year may be regarded as highly cited, as suggested by Tijssen et al [11.45] and van Leeuwen et al [11.46]. A generalization of this idea was proposed by Leydesdorff et al [11.47]. In their proposal, a number of classes of publications are distinguished. Each class of publications is defined in terms of percentiles of the citation distribution of a field and publication year. The first class may, for instance, include all publications whose number of citations is below the 50th percentile of the citation distribution of their field and publication year, the second class may include all publications whose number of citations is between the 50th and the 75th percentile, and so on. In the proposed approach, publications are valued based on the class to which they belong. Publications in the lowest class have a value of 1, publications in the second-lowest class have a value of 2, and so on.

A difficulty in the calculation of percentile-based indicators is the issue of ties, that is, multiple publications with the same number of citations. Suppose we want to identify the \({\mathrm{10}}\%\) most frequently cited publications in a certain field and publication year. We then need to find a threshold such that exactly \({\mathrm{10}}\%\) of the publications in this field and publication year have a number of citations that is above the threshold. In practice, it will usually not be possible to find such a threshold. Because of the issue of ties, typically, any threshold will yield either too many or too few publications whose number of citations is above the threshold. This means that fields cannot be made fully comparable, since the distortion caused by the issue of ties will be different in different fields. In the literature, various approaches for dealing with the issue of ties have been explored [11.46, 11.47, 11.48, 11.49]. We refer to Waltman and Schreiber [11.49] for a summary of these approaches and to Schreiber [11.50] for an empirical comparison.

Field-normalized impact indicators can also be constructed by combining the idea of percentile-based indicators with the idea of indicators based on normalized citation scores. Such an approach was introduced by Albarrán et al [11.51, 11.52]. In the proposed approach, indicators are used to characterize the distribution of citations over the highly cited publications in a field. The indicators resemble indicators developed in the field of economics for characterizing income distributions.

Glänzel [11.53] and Glänzel et al [11.54] proposed indicators that, like the above-mentioned indicators proposed by Leydesdorff et al [11.47], distinguish between a number of classes of publications. However, instead of percentiles, these indicators rely on the method of characteristic scores and scales [11.55] to define the classes. Publications belong to the lowest class if they have fewer citations than the average of their field, they belong to the second-lowest class if they do not belong to the lowest class and if they have fewer citations than the average of all publications not belonging to the lowest class, and so on. An alternative approach is to define the classes based on median instead of average citation counts [11.56].

Percentile-based approaches may also be used to normalize altmetric indicators. Bornmann and Haunschild [11.57] suggested a percentile-based approach for normalizing Twitter counts.

4.3 Indicators of Impact:Indicators that Do Not Usea Field Classification System

All field-normalized indicators discussed so far rely on a field classification system that operationalizes scientific fields. As discussed in Sects. 11.2 and 11.3, the operationalization of fields is a difficult problem. Field classification systems offer a simplified representation of fields. By necessity, any field classification system relies partly on arbitrary and contestable choices. In this section, we discuss field-normalized impact indicators with the attractive property that they do not require a field classification system.

An approach that has been explored in the literature is to identify for each publication a set of similar publications, allowing the citation score of the focal publication to be compared with the citation scores of the identified similar publications. Similar publications may be identified based on shared references (i. e., bibliographic coupling relations), as suggested by Schubert and Braun [11.58, 11.59]. Alternatively, as demonstrated by Colliander [11.60], the identification of similar publications may be done based on a combination of shared references and shared terms. Another possibility is to use co-citation relations to identify similar publications. This idea is used in the relative citation ratio indicator, an indicator introduced by a research team at the US National Institutes of Health [11.61] that has attracted a significant amount of attention. We refer to Janssens et al [11.62] for a critical discussion of the relative citation ratio indicator (for a response by the original authors, see [11.63]). Instead of working at the level of individual publications, it is also possible to work at the journal level. The citation score of a journal can then be compared with the citation scores of other similar journals. The latter journals may be identified based on citations given to the focal journal [11.64].

Another field normalization approach that does not require a field classification system is known as citing-side normalization [11.65], sometimes also referred to as fractional citation weighting [11.65], fractional citation counting [11.66], source normalization [11.67], or a priori normalization [11.68]. Citing-side normalization is based on the idea that differences between fields in citation density are to a large extent caused by the fact that in some fields publications tend to have longer reference lists than in other fields. Citing-side normalization performs a correction for the length of the reference list of citing publications. The basic idea of citing-side normalization can be implemented in different ways. One possibility is to correct for the average reference list length of citing journals [11.65, 11.69]. Another possibility is to correct for the reference list length of individual citing publications [11.66, 11.67, 11.68, 11.70, 11.71]. A combination of these two options is possible as well, and this is how citing-side normalization is implemented in the current version of the Source Normalized Impact per Paper ( ) journal impact indicator [11.72].

Instead of correcting for reference list length on the citing side, an alternative approach is to correct for reference list length on the cited side. In this approach, a correction can be made for either the reference list length of a cited publication [11.73] or the average reference list length of a cited journal [11.74, 11.75, 11.76]. A third possibility is to correct for the average reference list length of all publications belonging to the same field as a cited publication [11.77]. However, this again requires a field classification system, just like in the case of the indicators discussed in Sects. 11.4.1 and 11.4.2.

Recursive impact indicators, first introduced by Pinski and Narin [11.78] and often inspired by the well-known PageRank algorithm [11.79], offer another approach that is related to the idea of citing-side normalization. Examples of recursive impact indicators are the eigenfactor and article influence indicators of journal impact [11.80, 11.81] and the SCImago journal rank ( ) indicator [11.82, 11.83]. We refer to Waltman and Yan [11.84] and Fragkiadaki and Evangelidis [11.85] for overviews of the literature on recursive impact indicators and to Waltman and van Eck [11.86] for a discussion of the relation between these indicators and citing-side normalized indicators.

4.4 Indicators of Productivity

Although field-normalized indicators of impact have received most attention in the scientometric literature, some attention has also been given to field-normalized indicators of productivity (sometimes also referred to as efficiency). Productivity indicators can, for instance, be calculated for researchers, research groups, and research institutions. A simple productivity indicator is the average number of publications produced per researcher. A more advanced productivity indicator may also take into account the number of citations publications have received. Field-normalized productivity indicators perform a correction for differences between fields in the rate at which publications are produced and citations are received.

Field-normalized productivity indicators play a prominent role in the work of Giovanni Abramo and Ciriaco Andrea D'Angelo. In particular, Abramo and D'Angelo make extensive use of an indicator referred to as the fractional scientific strength ( ). For an individual researcher, FSS essentially equals the sum of the normalized citation scores (Sect. 11.4.1) of the publications of the researcher divided by the salary of the researcher. Likewise, for a group of researchers working in the same field, FSS equals the sum of the normalized citation scores of their publications divided by their total salary. When FSS is calculated for a group of researchers working in different fields, for instance, all researchers affiliated with a particular research institution, a correction needs to be made for differences between fields in the average publication output and the average salary of researchers. One way in which this can be done is by first calculating each researcher's field-normalized FSS, defined as the researcher's FSS divided by the average FSS of all researchers working in the same field and then calculating the average field-normalized FSS of all researchers. We refer to Abramo and D'Angelo [11.16] for a more detailed discussion of the calculation of the FSS indicator. For a discussion of an alternative productivity indicator, based on highly cited publications instead of normalized citation scores, we refer to Abramo and D'Angelo [11.87].

In practice, calculating the FSS indicator is highly challenging because it requires data on the publications and the salaries of all researchers working in a field. Abramo and D'Angelo address this difficulty by taking into account only Italian publications and Italian researchers in the calculation of the FSS indicator. In Italy, unlike in most other countries, the data required for the calculation of the FSS indicator is available. Abramo and D'Angelo calculate normalized citation scores of publications using the WoS journal-based field classification system (Sect. 11.3.1). However, they also need a second classification system. To calculate researchers' field-normalized FSS, they rely on a classification system of Italian researchers (Sect. 11.3.3).

In most countries, the data needed to calculate field-normalized productivity indicators is not available. Obtaining productivity indicators that allow for meaningful cross-country comparisons is even more challenging, as pointed out by Aksnes et al [11.88]. An interesting proposal for calculating field-normalized productivity indicators, even when only limited data is available, was presented by Koski et al [11.89]. This proposal focuses on the difficulty of researchers that have no publications in a certain time period. These researchers are invisible in databases such as WoS and Scopus, which causes problems when using these databases to calculate productivity indicators. To deal with this issue, a statistical methodology is proposed for estimating the number of researchers without publications.

A number of studies have focused specifically on designing field-normalized indicators of the productivity of individual researchers. In particular, several proposals have been made for variants of the \(h\)-index [11.90] that correct for field differences [11.91, 11.92, 11.93, 11.94, 11.95]. Other interesting proposals for comparing individual researchers active in different fields were presented by Kaur et al [11.96] and Ruocco and Daraio [11.97].

It is important to be aware of the difference between productivity indicators and size-independent impact indicators. Both types of indicators are independent of size, which is convenient, for instance, when making comparisons between larger and smaller research institutions. However, the two types of indicators are based on fundamentally different notions of size. Productivity indicators take an input perspective on the notion of size, for instance, the number of researchers affiliated with an institution. Size-independent impact indicators take an output perspective on the notion of size, namely the number of publications produced by an institution. From a conceptual point of view, for many purposes the input perspective seems preferable over the output perspective. From a practical point of view, however, taking the input perspective often is not possible because the data required is not available. A more elaborate discussion of the pros and cons of productivity indicators and size-independent impact indicators can be found in a recent special section of Journal of Informetrics [11.98]. In this special section, a discussion paper by Abramo and D'Angelo [11.99] argues in favor of the use of productivity indicators, while other contributions defend the use of size-independent impact indicators. We refer to Abramo and D'Angelo [11.100] for an institutional-level comparison between productivity indicators and size-independent impact indicators.

5 Evaluation of Field-Normalized Indicators

The discussion in the previous section has shown that a large variety of field-normalized indicators have been proposed in the literature. This, of course, raises various questions: Do the indicators discussed in the previous section indeed provide properly field-normalized statistics? What are the advantages and disadvantages of the different ways in which field normalization can be performed? Is it possible to identify one specific approach to field normalization that can be considered superior over other approaches? To provide some partial answers to these questions, we now discuss the scientometric literature on the evaluation of field-normalized indicators. We restrict the discussion to indicators of impact.

To evaluate field-normalized indicators, some scientometricians choose to analyze the theoretical properties of indicators, while other scientometricians prefer to study the empirical characteristics of indicators. Different approaches to evaluate field-normalized indicators sometimes lead to different conclusions. For instance, from a theoretical perspective, an indicator may seem appealing, while from an empirical perspective the same indicator may not seem very attractive. Below, we first discuss the theoretical evaluation of field-normalized indicators. We then turn to empirical evaluation.

5.1 Theoretical Evaluation of Indicators

In theoretical approaches to the evaluation of field-normalized indicators, the theoretical properties of indicators are studied. These are properties that do not depend on empirical data based on which indicators are calculated. After the theoretical properties of indicators have been established, the indicators are evaluated by deciding whether or not their properties are considered desirable. Whether a certain property is desirable is a subjective question that may legitimately be answered differently by different people. Theoretical evaluation, therefore, does not offer a universal and definitive answer to the question of whether one indicator is superior over another. Instead, it aims to provide a deep understanding of the key differences between indicators. This may then guide users in choosing the indicator that best serves their needs.

In the calculation of the normalized citation score of a publication, defined as the ratio of the actual and the expected number of citations of a publication (Sect. 11.4.1), theoretical considerations may help to choose between different ways in which the expected number of citations of a publication can be defined. The most common approach is to define a publication's expected citation count as the average citation count of all publications in the same field and in the same publication year. An alternative approach is to consider in this definition only publications that have been cited at least once [11.22, 11.23]. In the case of the former approach, for each combination of a field and a publication year, the average normalized citation score of all publications in that field and publication year equals exactly 1. This may be regarded as an important property for a field-normalized indicator. The approach in which non-cited publications are left out from the definition of a publication's expected citation count does not have this property, which may be seen as a disadvantage of this approach.

Another issue in the calculation of the normalized citation score of a publication is the way in which publications belonging to multiple fields are handled. Based on the idea that the average normalized citation score of all publications in a field and publication year should equal 1, it can be argued that the expected citation count of a publication belonging to multiple fields should be defined as the harmonic average of the expected citation counts corresponding to the different fields [11.18]. However, a theoretical analysis presented by Smolinsky [11.101] showed that there are also other ways in which the expected citation count of a publication belonging to multiple fields can be defined. These alternative approaches lead to additional properties that may be considered attractive, but they have the disadvantage of introducing challenging computational issues.

As discussed in Sect. 11.4.1, there are different ways in which field-normalized indicators can be calculated at the aggregate level of, for instance, a research institution. The oeuvre argument of Moed [11.34] is a theoretical argument in favor of one approach, while the consistency argument of Waltman et al [11.18] is a theoretical argument in favor of another approach. According to the oeuvre argument, it should not make a difference whether a citation is given to one publication in the oeuvre of a research unit or to some other publication in the same oeuvre. The basic idea of the consistency argument is that the ranking of two research units relative to each other should not change when both units make the same performance improvement. The oeuvre and consistency arguments can also be used to characterize some of the key differences between two versions of the SNIP journal impact indicator [11.67, 11.72].

The choice of the counting method used to handle co-authored publications in the calculation of a field-normalized indicator can also be analyzed theoretically. When the full counting method is used, each publication is fully counted for each co-author, as explained in Sect. 11.4.1. On the other hand, when using a fractional counting method, co-authored publications are counted with a lower weight than publications that have not been co-authored. As pointed out by Waltman and van Eck [11.44], in the case of fractional counting, the mean normalized citation score indicator (Sect. 11.4.1) has the property that the average value of the indicator for all research institutions active in a field equals exactly 1. In the case of full counting, the indicator does not have this property. Using the full counting method, co-authored publications are counted multiple times, once for each of the co-authors. This double counting of co-authored publications, which tend to be publications that have received relatively large numbers of citations, has an inflationary effect. It typically causes the mean normalized citation score indicator to have an average value for all research institutions active in a field that is above 1. Because of this inflanatory effect, which is larger in some fields than in others, the full counting method provides statistics that are only partly field normalized. In order to obtain properly field-normalized statistics, a fractional counting method needs to be used. Alternatively, the use of a so-called multiplicative counting method [11.102] can be considered.

5.2 Empirical Evaluation of Indicators

Empirical approaches to the evaluation of field-normalized impact indicators focus on three questions. First, assuming that a certain field classification system offers a satisfactory representation of scientific fields, which field-normalized indicators provide the best normalization? Second, to what extent do different field classification systems offer good representations of scientific fields, in particular for the purpose of field normalization? Third, which field-normalized indicators have the strongest correlation with peer review?

The idea of universality of citation distributions plays a key role in the literature dealing with the first question. Citation distributions are considered to be universal if the distribution of normalized citation scores is essentially identical for all scientific fields. The idea of universality of citation distributions was introduced by Radicchi et al [11.95]; see also [11.10]. They claimed that universality of citation distributions can be achieved using a straightforward normalization approach in which the number of citations of each publication in a field is divided by the average number of citations of all publications in the field (excluding non-cited publications). However, in subsequent studies, it has been shown that this straightforward normalization approach yields citation distributions that are only approximately universal [11.103, 11.104, 11.29].

Based on the idea of universality of citation distributions, a so-called fairness test for field-normalized indicators was proposed [11.105]. This test has been used in various studies in which field-normalized indicators are compared [11.105, 11.106, 11.32]. The objective of having normalized citation distributions that are identical across fields also serves as the foundation of a methodology for quantifying the degree to which field-normalized indicators succeed in correcting for field differences [11.25]. This methodology has been applied in various studies [11.107, 11.108, 11.24, 11.25]. Using the methodology of Crespo et al [11.25], it was found that the normalization approach proposed by Radicchi and Castellano [11.32], based on a two-parameter power law transformation of citation counts, outperforms a number of other normalization approaches [11.107]. However, the standard approach of dividing the actual number of citations of a publication by the expected number of citations has also been shown to perform well. A study by Abramo et al [11.22] in which a comparison is made of a number of field-normalized indicators is also based on the idea of trying to obtain normalized citation distributions that are identical across fields.

The above-mentioned studies assume that one has a satisfactory field classification system. They do not evaluate whether a certain classification system offers a good representation of scientific fields. This limited perspective on the evaluation of field-normalized indicators was criticized by Sirtes [11.109] in a letter commenting on Radicchi and Castellano [11.105]; for a response, see [11.110]. According to Sirtes [11.109]; see also [11.108], it is incorrect to evaluate a field-normalized indicator using the same classification system that is also used in the calculation of the indicator. This brings us to the second question raised in the beginning of this section: How suitable are different field classification systems for the purpose of field normalization?

Evaluations of the use of the WoS journal-based field classification system for the purpose of field normalization have been reported by van Eck et al [11.111] and Leydesdorff and Bornmann [11.112]. In both studies, the appropriateness of the fields in the WoS classification system for normalization purposes is questioned. For other studies questioning the use of the WoS classification system and proposing the use of alternative classification systems, we refer to Bornmann et al [11.8], Neuhaus and Daniel [11.9], van Leeuwen and Calero Medina [11.11], and Ruiz-Castillo and Waltman [11.13]. A systematic methodology for comparing the suitability of different classification systems for field-normalization purposes was presented by Li and Ruiz-Castillo [11.113]. We refer to Perianes-Rodriguez and Ruiz-Castillo [11.14] for an application of this methodology.

Empirical approaches to the evaluation of field-normalized impact indicators also study the extent to which these indicators correlate with peer review. At the level of research programs and research departments in the natural sciences, indicators that use the standard normalization approach of dividing the actual number of citations of a publication by the expected number of citations have been shown to be moderately correlated with peer review assessments made by expert committees [11.114, Chap. 19], [11.115]. The correlation between normalized impact indicators and peer review has also been analyzed based on peer review outcomes from the Research Assessment Exercise ( ) in the UK [11.116]. The main finding of this analysis is that impact indicators normalized at the level of journals hardly correlate with peer review, while impact indicators normalized at the level of journal-based fields in the WoS database or units of assessment in the RAE correlate significantly with peer review.

At the level of individual publications, the recently introduced relative citation ratio indicator has been claimed to be well correlated with expert judgments [11.61, p. 9]. However, in a study by Bornmann and Haunschild [11.117], the correlation between the relative citation ratio indicator and expert judgments was characterized as only low to medium (p. 1064). In addition, it has been shown that, in terms of correlation with peer review, the relative citation ratio indicator has a performance that is similar to other field-normalized impact indicators. The studies by Hutchins et al [11.61] and Bornmann and Haunschild [11.117] both make use of F1000 post-publication peer review data. Data from F1000 has also been used to analyze, at the level of individual publications, how strongly a number of field-normalized impact indicators correlate with peer review [11.118]. It was found that different field-normalized impact indicators all have a similar correlation with peer review. However, the authors leave open the possibility that F1000 data may not be sufficiently accurate to make fine-grained distinctions between different field-normalized impact indicators.

6 How Much Difference Does It Make in Practice?

We have discussed a large number of field-normalized indicators as well as a large number of field classification systems that can be used by these indicators. We now consider the following question: How much difference does the choice of a field-normalized indicator, and possibly also a field classification system, make in practice, for instance, when field-normalized indicators are used in the evaluation of research institutions, research groups, or individual researchers?

Various papers have presented analyses that provide insight into this question, most of them focusing on field-normalized indicators of impact. Before reporting our own analysis, we first briefly mention some of these papers, without going into the details of their findings. At the level of individual publications, the sensitivity of field-normalized indicators to the choice of a field classification system was studied by Zitt et al [11.3]. In the context of quantifying the impact of journals, field-normalized indicators that use a field classification system (Sects. 11.4.1 and 11.4.2) were compared with field-normalized indicators that use citing-side normalization (Sect. 11.4.3) and that do not require a field classification system [11.106, 11.119]. Similar comparisons have also been made for indicators based on Mendeley bookmarks [11.17]. In the context of quantifying the impact of research institutions and their internal units, a number of studies investigated for specific field-normalized indicators the effect of the choice of a field classification system. The use of the WoS journal-based classification system was compared with the use of other less fine-grained journal-based systems [11.120, 11.38], but also with the use of more fine-grained publication-based systems [11.13]. In addition to analyzing the effect of the choice of a classification system, studies have also compared different normalization approaches for a given classification system. Perianes-Rodriguez and Ruiz-Castillo [11.121], for instance, performed a comparison of two different ways in which normalized impact indicators can be obtained at the aggregate-level of research institutions. Finally, as has already been mentioned, field-normalized indicators of productivity have received relatively limited attention in the literature. A comparison of two ways in which the FSS indicator (Sect. 11.4.4) can be calculated at the level of research institutions was reported by Abramo and D'Angelo [11.122].

6.1 Empirical Analysis of the Sensitivity of Field-Normalized Impact Indicators to the Choiceof a Field Classification System

Complementary to the studies mentioned above, we now present our own analysis. Our focus is on the sensitivity of field-normalized impact indicators to the choice of a field classification system. We are interested in particular in the sensitivity of the indicators at lower levels of aggregation, that is, at the level of internal units within a research institution. This level is highly relevant in practical applications of field-normalized impact indicators.

The mean normalized citation score ( ) [11.18] (Sect. 11.4.1), and the proportion of top \({\mathrm{10}}\%\) publications ( ) [11.49] (Sect. 11.4.2), represent two of the most frequently used size-independent field-normalized impact indicators (taking into account also the use of variants of these indicators in commercial tools such as InCites and SciVal). Given the popularity of these indicators, it is important to understand their sensitivity to the choice of a field classification system. In this section, we, therefore, analyze the sensitivity of these indicators to the choice between, on the one hand, a traditional journal-based classification system, namely the classification system consisting of about 250 fields that is available in the WoS database, and, on the other hand, a publication-based classification system constructed algorithmically using the methodology of Waltman and van Eck [11.12]. At the level of research institutions, the sensitivity to the choice between these two classification systems has been found to be relatively limited [11.13]. However, this sensitivity has not yet been analyzed in a systematic way for smaller units. Below we present such an analysis for internal units within a large European university.

Our analysis is based on the WoS database. More specifically, we use the Science Citation Index Expanded, the Social Sciences Citation Index, and the Arts & Humanities Citation Index. We use data on the publications of our focal university in the period 2010–2014. Publications are assigned to internal units within the university at three hierarchical levels. We refer to these levels as the faculty level, the department level, and the research group level. We take into only take into account units that have at least 50 publications. Also, only publications classified as research articles or review articles are considered. There are 13 faculties, 36 departments, and 130 research groups with 50 or more publications. Some basic statistics on the numbers of publications of these faculties, departments, and research groups are reported in Table 11.1.

Citations are counted until the end of 2015. Author self-citations are excluded. In the case of the WoS journal-based classification system, publications in journals belonging to the Multidisciplinary Sciences category are reassigned to other categories based on their references. In the case of the publication-based classification system, we use a system that includes about 4000 fields. This is in line with the recommendation made by Ruiz-Castillo and Waltman [11.13]. The calculation of the MNCS and PP(top \({\mathrm{10}}\%\)) indicators is based on, respectively, Waltman et al [11.18] and Waltman and Schreiber [11.49]. Normalization is performed for field and publication year, but not for publication type. A full counting approach is taken. Hence, each publication authored by a unit is fully counted for that unit, irrespective of possible co-authorship with other units inside or outside the focal university.

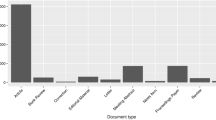

The results of the analysis are presented in Fig. 11.1a,b for the 13 faculties, in Fig. 11.2a,b for the 36 departments, and in Fig. 11.3a,b for the 130 research groups. Each figure shows two scatter plots, one for the MNCS indicator and one for the PP(top \({\mathrm{10}}\%\)) indicator. In addition, Table 11.2 reports a number of statistics that summarize the differences between the results obtained using the WoS journal-based classification system and those obtained using the publication-based classification system. For both the MNCS indicator and the PP(top \({\mathrm{10}}\%\)) indicator, the table presents the Pearson correlation between the results obtained using the two classification systems. Moreover, the table also shows the mean absolute difference between the results and the percentage of units for which the difference is considered to be large. A difference in the MNCS value of a unit of more than 0.5 is regarded as large. In the case of the PP(top \({\mathrm{10}}\%\)) indicator, we regard a difference of more than 5 percentage points as large.

The results in Table 11.2 show that the mean absolute differences are larger at the level of the research groups than at the level of the faculties and the departments. Likewise, the percentage of units with large differences is highest at the research group level. These findings may not be surprising. Research groups on average have a much smaller number of publications than faculties and departments (Table 11.1), and, therefore, the MNCS and PP(top \({\mathrm{10}}\%\)) values of research groups can be expected to be more sensitive to the choice of a classification system than the corresponding values of faculties and departments. Based on the results in Table 11.2 and Figs. 11.1a,b–11.3a,b, it can also be concluded that the PP(top \({\mathrm{10}}\%\)) indicator is more sensitive to the choice of classification than the MNCS indicator .

Based on our results, how sensitive are field-normalized impact indicators to the choice of a field classification system? The answer to this question may depend on the expectations that one has. Some readers may consider the differences between the results obtained using the WoS journal-based classification system and the results obtained using the publication-based classification system to be within an acceptable margin. Others may be concerned to see, for instance, that for about one out of seven research groups the PP(top \({\mathrm{10}}\%\)) indicator increases or decreases by more than 5 percentage points when changing the classification system based on which the indicator is calculated (Table 11.2). Our perspective is that the results illustrate the risk of overinterpreting field-normalized indicators, especially at lower levels of aggregation, such as the research group level. There is no perfect way to correct for differences between fields. Different field normalization approaches make different choices in how they correct for field differences. Each approach is informative in its own way. When working with one specific field-normalization approach, it is essential to keep in mind that this approach offers just one perspective on field normalization and that other approaches will give a different perspective, in some cases even a perspective that may be different in a quite fundamental way.

7 Conclusion

Some critics question whether field normalization is truly attainable. In the literature, this viewpoint is represented by Kostoff [11.123] and Kostoff and Martinez [11.124], who criticize the idea of field-normalized impact indicators, arguing that citation counts of publications should be compared only if publications are very similar to each other. According to Kostoff and Martinez [11.124, p. 61]:

a meaningful ‘discipline' citation average may not exist, and the mainstream large-scale mass production semi-automated citation analysis comparisons may provide questionable results.

In principle, critics make a valid point. Taking their position to the extreme, one could argue that every publication is unique in its own way and, consequently, that any comparison of citation counts of publications is problematic. Likewise, it could be argued that every researcher is unique and that any comparison of publication and citation counts of researchers is, therefore, in some sense unfair.

However, one may also take a more pragmatic perspective on the idea of field normalization. In managing and evaluating scientific research, there is often a need to compare different research units (e. g., research institutions, research groups, or individual researchers). Scientometric indicators, of course, provide an incomplete picture of the units to be compared. Moreover, these indicators are affected by all kinds of distorting factors, for instance, related to the characteristics of the underlying data sources, the peculiarities of the units to be compared, and the nature of the scientific fields in which these units are active. Nevertheless, despite their limitations, scientometric indicators provide useful and relevant information for supporting the management and evaluation of scientific research. In many cases, the usefulness of scientometric indicators can be increased by making corrections for some of the most significant distorting factors, and field differences typically are one such a factor. Field normalization does not correct for all distorting factors, but it corrects at least partly for one of the most important ones. From this point of view, field normalization serves an important practical purpose.

7.1 Strengths and Weaknessesof Different Field-NormalizationApproaches

We have provided an overview of a large number of approaches to field normalization. Although some field-normalization approaches can be considered superior over others, we do not believe there to be a single optimal approach. Instead, there is a trade-off between the strengths and weaknesses of different approaches. Some field-normalization approaches have a high level of technical sophistication. These approaches may, for instance, use an algorithmically constructed publication-based field classification system or they may not need a classification system at all, and instead of the traditional full counting method, these approaches may use a fractional counting method for dealing with co-authored publications. Other field normalization approaches are much more basic. For instance, they rely on the standard journal-based classification system made available in a database such as WoS or Scopus and they handle co-authored publications using the standard full counting method. In general, the more sophisticated approaches can be expected to better correct for field differences than the more basic approaches. On the other hand, however, the more basic approaches tend to be easier to understand and more transparent. This enables users to carefully reflect on what a field-normalized indicator does and does not tell them, and it allows users to recognize the limitations of the indicator. The more sophisticated approaches tend to be black boxes for many users, forcing users to blindly trust the outcomes provided by these approaches. Due to the low level of transparency, it is difficult for users to understand the limitations of the more sophisticated approaches and to interpret the outcomes obtained using these approaches in the light of these limitations.

As a general rule, in situations in which in-depth reflection on scientometric indicators is desirable or even essential, for instance, when indicators are used to support the evaluation of individual researchers, we recommend the use of simple and transparent field-normalization approaches. Complex non-transparent approaches should not be used in such situations. On the other hand, there are also situations in which the use of more advanced field-normalization approaches, possibly with a relatively low level of transparency, may be preferable. This could be the case in situations in which scientometric indicators are used at a high level of aggregation, for instance, at the level of entire research institutions or countries, where in-depth reflection on the indicators may hardly be possible, or in situations in which scientometric indicators are used in a purely algorithmic way, for instance, when they are embedded in a funding allocation model.

7.2 Contextualizationas an Alternative Wayto Deal with Field Differences

We end this chapter by pointing out that field normalization is not the only way to deal with field differences in scientometric analyses. When detailed assessments need to be made at the level of individual researchers or research groups, an alternative approach is to use straightforward non-normalized indicators and to contextualize these indicators with additional information that enables evaluators to take into account the effect of field differences [11.125]. For instance, to compare the productivity of researchers working in different fields, one could present non-normalized productivity indicators (e. g., total publication or citation counts during a certain time period) for each of the researchers to be compared. One could then contextualize these indicators by selecting for each researcher a number of relevant peers working in the same field and by also presenting the productivity indicators for these peers. In this way, each researcher's productivity can be assessed in the context of the productivity of a number of colleagues who have a reasonably similar scientific profile.

An advantage of the above contextualization approach could be that it may lead to a less mechanistic way of dealing with field differences. In our experience, field-normalized indicators tend to be used quite mechanistically, with little attention being paid to their limitations. This is problematic, especially at lower levels of aggregation, for instance, at the level of individual researchers or research groups, where field-normalized indicators are quite sensitive to methodological choices, such as the choice of a field classification system. In a research evaluation, the contextualization approach outlined above may encourage evaluators to reflect more deeply on the effect of field differences and to perform inter-field comparisons in a more cautious and thoughtful way. It may also invite evaluators to combine scientometric evidence of field differences with their own expert knowledge of publication, collaboration, and citation practices in different fields of science. Hence, peer review and scientometrics may be used together in a more integrated manner, which can be expected to improve the way in which research is evaluated [11.125].

References

L. Waltman: A review of the literature on citation impact indicators, J. Informetr. 10(2), 365–391 (2016)

C.R. Sugimoto, S. Weingart: The kaleidoscope of disciplinarity, J. Doc. 71(4), 775–794 (2015)

M. Zitt, S. Ramanana-Rahary, E. Bassecoulard: Relativity of citation performance and excellence measures: From cross-field to cross-scale effects of field-normalisation, Scientometrics 63(2), 373–401 (2005)

Q. Wang, L. Waltman: Large-scale analysis of the accuracy of the journal classification systems of Web of Science and Scopus, J. Informetr. 10(2), 347–364 (2016)

W. Glänzel, A. Schubert: A new classification scheme of science fields and subfields designed for scientometric evaluation purposes, Scientometrics 56(3), 357–367 (2003)

É. Archambault, O.H. Beauchesne, J. Caruso: Towards a multilingual, comprehensive and open scientific journal ontology. In: Proc. 13th Int. Conf. Int. Soc. Sci. Informetr., Durban, South Africa, ed. by E.C.M. Noyons, P. Ngulube, J. Leta (2011) pp. 66–77

W. Glänzel, A. Schubert, H.J. Czerwon: An item-by-item subject classification of papers published in multidisciplinary and general journals using reference analysis, Scientometrics 44(3), 427–439 (1999)

L. Bornmann, R. Mutz, C. Neuhaus, H.D. Daniel: Citation counts for research evaluation: standards of good practice for analyzing bibliometric data and presenting and interpreting results, Ethics Sci. Env. Polit. 8(1), 93–102 (2008)

C. Neuhaus, H.D. Daniel: A new reference standard for citation analysis in chemistry and related fields based on the sections of Chemical Abstracts, Scientometrics 78(2), 219–229 (2009)

F. Radicchi, C. Castellano: Rescaling citations of publications in physics, Phys. Rev. E 83(4), 046116 (2011)

T.N. van Leeuwen, C. Calero Medina: Redefining the field of economics: Improving field normalization for the application of bibliometric techniques in the field of economics, Res. Eval. 21(1), 61–70 (2012)

L. Waltman, N.J. van Eck: A new methodology for constructing a publication-level classification system of science, J. Am. Soc. Inf. Sci. Technol. 63(12), 2378–2392 (2012)

J. Ruiz-Castillo, L. Waltman: Field-normalized citation impact indicators using algorithmically constructed classification systems of science, J. Informetr. 9(1), 102–117 (2015)

A. Perianes-Rodriguez, J. Ruiz-Castillo: A comparison of the Web of Science and publication-level classification systems of science, J. Informetr. 11(1), 32–45 (2017)

S.E. Hug, M. Ochsner, M.P. Brändle: Citation analysis with Microsoft Academic, Scientometrics 111(1), 371–378 (2017)

G. Abramo, C.A. D'Angelo: How do you define and measure research productivity?, Scientometrics 101(2), 1129–1144 (2014)

L. Bornmann, R. Haunschild: Normalization of Mendeley reader impact on the reader-and paper-side: A comparison of the mean discipline normalized reader score (MDNRS) with the mean normalized reader score (MNRS) and bare reader counts, J. Informetr. 10(3), 776–788 (2016)

L. Waltman, N.J. van Eck, T.N. van Leeuwen, M.S. Visser, A.F.J. van Raan: Towards a new crown indicator: Some theoretical considerations, J. Informetr. 5(1), 37–47 (2011)

J. Lundberg: Lifting the crown – citation z-score, J. Informetr. 1(2), 145–154 (2007)

R. Fairclough, M. Thelwall: National research impact indicators from Mendeley readers, J. Informetr. 9(4), 845–859 (2015)

R. Haunschild, L. Bornmann: Normalization of Mendeley reader counts for impact assessment, J. Informetr. 10(1), 62–73 (2016)

G. Abramo, T. Cicero, C.A. D'Angelo: Revisiting the scaling of citations for research assessment, J. Informetr. 6(4), 470–479 (2012)

G. Abramo, T. Cicero, C.A. D'Angelo: How important is choice of the scaling factor in standardizing citations?, J. Informetr. 6(4), 645–654 (2012)

J.A. Crespo, N. Herranz, Y. Li, J. Ruiz-Castillo: The effect on citation inequality of differences in citation practices at the Web of Science subject category level, J. Assoc. Inf. Sci. Technol. 65(6), 1244–1256 (2014)

J.A. Crespo, Y. Li, J. Ruiz-Castillo: The measurement of the effect on citation inequality of differences in citation practices across scientific fields, PLOS ONE 8(3), e58727 (2013)

R. Fairclough, M. Thelwall: More precise methods for national research citation impact comparisons, J. Informetr. 9(4), 895–906 (2015)

M. Thelwall, P. Sud: National, disciplinary and temporal variations in the extent to which articles with more authors have more impact: Evidence from a geometric field normalised citation indicator, J. Informetr. 10(1), 48–61 (2016)

M. Thelwall: Three practical field normalised alternative indicator formulae for research evaluation, J. Informetr. 11(1), 128–151 (2017)

L. Bornmann, H.D. Daniel: Universality of citation distributions – A validation of Radicchi et al.'s relative indicator \(c_{f}=c/c_{0}\) at the micro level using data from chemistry, J. Am. Soc. Inf. Sci. Technol. 60(8), 1664–1670 (2009)

G. Vaccario, M. Medo, N. Wider, M.S. Mariani: Quantifying and suppressing ranking bias in a large citation network, J. Informetr. 11(3), 766–782 (2017)

Z. Zhang, Y. Cheng, N.C. Liu: Comparison of the effect of mean-based method and z-score for field normalization of citations at the level of Web of Science subject categories, Scientometrics 101(3), 1679–1693 (2014)

F. Radicchi, C. Castellano: A reverse engineering approach to the suppression of citation biases reveals universal properties of citation distributions, PLOS ONE 7(3), e33833 (2012)

Y. Gingras, V. Larivière: There are neither “king” nor “crown” in scientometrics: Comments on a supposed “alternative” method of normalization, J. Informetr. 5(1), 226–227 (2011)

H.F. Moed: CWTS crown indicator measures citation impact of a research group's publication oeuvre, J. Informetr. 4(3), 436–438 (2010)

T. Opthof, L. Leydesdorff: Caveats for the journal and field normalizations in the CWTS (“Leiden”) evaluations of research performance, J. Informetr. 4(3), 423–430 (2010)

A.F.J. van Raan, T.N. van Leeuwen, M.S. Visser, N.J. van Eck, L. Waltman: Rivals for the crown: Reply to Opthof and Leydesdorff, J. Informetr. 4(3), 431–435 (2010)

P. Vinkler: The case of scientometricians with the “absolute relative” impact indicator, J. Informetr. 6(2), 254–264 (2012)

W. Glänzel, B. Thijs, A. Schubert, K. Debackere: Subfield-specific normalized relative indicators and a new generation of relational charts: Methodological foundations illustrated on the assessment of institutional research performance, Scientometrics 78(1), 165–188 (2009)

H.F. Moed, R.E. De Bruin, T.N. van Leeuwen: New bibliometric tools for the assessment of national research performance: Database description, overview of indicators and first applications, Scientometrics 33(3), 381–422 (1995)

A.F.J. van Raan: Measuring science: Capita selecta of current main issues. In: Handbook of Quantitative Science and Technology Research, ed. by H.F. Moed, W. Glänzel, U. Schmoch (Springer, Dordrecht 2005) pp. 19–50