Abstract

Samples which elements are dependent random variables are considered. This dependence arises due to total external random environment in which sample elements operate. The environment is described by continuous-time finite and ergodic Markov chain. Sample elements are positive random variables, which can be interpreted as lifetime till a failure. If this random variable is greater than value t > 0 and the environment has state i, then failure rate γ i (t; β (i)) is a known function of unknown coefficients \(\beta ^{(i)} = (\beta _{1}^{(i)},\ldots,\beta _{m}^{(i)})\). Maximum likelihood equations are derived for estimators of {β (i)}. A partial case when m = 1 and γ i (t; β (i)) = β i is considered in detail.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Random Environment

- Positive Random Variables

- Likelihood Equations

- Matrix Exponent

- Finite Continuous-time Markov Chain

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

3.1 Problem Setting

The classical sample theory supposes that sample elements are identically distributed and independent (i.i.d.) random variables. Lately a great attention has been granted to dependence in probabilistic structures, for example, dependence between interarrival times of various flows, between service times, etc. Usually it is described by the so-called Markov-modulated processes. They are used widely in environmental, medical, industrial, and sociological researches. We restrict ourselves by a case when elements of the sample are positive random variables. It is convenient to consider them as lifetimes of unreliable elements.

Let us consider sample elements \(\{X_{i},i = 1,\ldots,n\}\), modulated by a finite continuous-time Markov chain (see [6]). For simplicity we say that the elements operate in the so-called random environment. The last is described by an “external” continuous-time ergodic Markov chain \(J(t),t\geqslant 0\), with a final state space \(E =\{ 1,2,\ldots,k\}\). Let λ i, j be the transition rate from state i to state j.

Additionally, n binary identical elements are considered. Each component can be in two states: up(1) and down(0). The elements of system fail one by one, in random order. For a fixed state i ∈ E, all n elements have the same failure rate γ i (t) and are stochastically independent. When the external process changes its state from i to j at some random instant t, all elements, which are alive at time t, continue their life with new failure rate γ j (t). If on interval (t 0, t) the random environment has state i ∈ E, then the residual lifetime τ r − t 0 (up-state) of the rth component, \(r = 1,2,\ldots,n\), has a cumulative distribution function (CDF) with failure rate γ i (t) for time moment t, and the variables \(\{\tau _{r} - t_{0},r = 1,2,\ldots,n\}\) are independent.

We wish to get statistical estimates for the unknown parameters \(\beta ^{(i)} = (\beta _{1,i},\beta _{2,i},\ldots,\beta _{m,i})^{T}\), \(i = 1,\ldots,k\). Note that in the above described process elements of the sample \(\{X_{i},i = 1,\ldots,n\}\) are no i.i.d. anymore, as it is assumed in the classical sampling theory.

Further we make the following suppositions. Firstly, parameters of the Markov-modulated processes {λ i, j } are known. Secondly, with respect to hazard rates γ i (t), a parametrical setting takes place: all γ i (t) are known, accurate to m parameters \(\beta ^{(i)} = (\beta _{1,i},\beta _{2,i},\ldots,\beta _{m,i})^{T}\), so we will write γ i (t; β (i)). Further, we use the (m × k)-matrix \(\beta = (\beta ^{(1)},\beta ^{(2)},\ldots,\beta ^{(k)})\) of unknown parameters. Thirdly, with respect to the available sample: sample elements are fixed corresponding to their appearance, so the order statistics \(X_{(1)},X_{(2)},\ldots,X_{(n)}\) are fixed. Finally, the states of the random environment J(t) are known only for time moments \(0,X_{(1)},X_{(2)},\ldots,X_{(n)}\).

The maximum likelihood estimates (see [5, 8, 9]) for the unknown parameters β are derived. Results of a simulation study illustrate the elaborated technique. Presented paper continues our previous investigations [1, 2].

3.2 Transition Probabilities

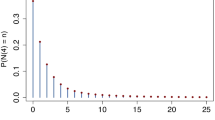

In this section we cite a result from the paper of Andronov and Gertsbakh [3]. Define N(t) as the number of elements which are in the up state at time moment t. Obviously \(P\{N(0) = n\} = 1\). We denote

It has been shown that

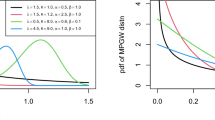

Below we consider a simple time-homogeneous case when \(\gamma _{i}(t;\beta ^{(i)}) =\gamma _{i}(\beta _{i})\,\forall i\varGamma (t,\beta ) =\varGamma (\beta ) =\mathrm{ diag}(\gamma _{1}(\beta ^{(1)}),\ldots,\gamma _{k}(\beta ^{(k)}))\). Therefore,

In this case a solution can be represented by matrix exponent (see [4, 7]):

3.3 Maximum Likelihood Estimates

In the considered case, besides the initial state j(0) of J(0), a sample of size n is given: \((x,j) =\{ (x_{j(r)},j(r)),r = 1,\ldots,n\}\), where x j(r) is the rth order statistic of the sample and j(r) = J(x (r)) is a corresponding state of the random environment. Setting x (0) = 0 we rewrite the log-likelihood function as

Considering gradients with respect to the column vectors β (v), \(v = 1,\ldots,k\), we get maximum likelihood equations

Further, we consider a time-homogeneous case when all rate intensity γ i (β (i)) have one unknown scalar parameter β i only, so \(\gamma _{i}(\beta ^{(i)}) =\beta _{i}\), \(i = 1,\ldots,k\). We will write \(p_{m,i,j}(t - t_{0}) = p_{m,i,j}(t_{0},t)\). Then, the likelihood equations (3.5) have the following form:

where \(\delta _{v,j(r+1)}\) is the Kronecker symbol: \(\delta _{v,j(r+1)} = 1\) if \(v = j(r + 1)\), and \(\delta _{v,j(r+1)} = 0\) otherwise.

Now we must get an expression for the derivative \(\frac{\partial } {\partial \beta ^{(v)}} p_{n-r,j(r),j(r+1)}(x_{(r+1)} - x_{(r)};\beta )\). For that we use an expression for a derivative of a matrix exponent (see Lemma of Appendix). Let D v be a square matrix from zero, where only one non-zero element equals 1 and takes the vth place of a main diagonal. Then, for the homogeneous case when \(\varGamma (\beta ) =\mathrm{ diag}(\gamma _{1}(\beta ),\ldots,\gamma _{k}(\beta )) =\varGamma =\mathrm{ diag}(\beta _{1},\ldots,\beta _{k})\), according to (3.3), we have for \(v = 1,\ldots,k\):

Therefore

where \(M^{\langle v\rangle }\) and \(M_{\langle v\rangle }\) mean γth column and γth row of matrix M.

Now we can use a numerical method for the solution of the likelihood equation (3.6). Note that parameter β v can be non-trivially estimated if state v has been registered as some j(r) = v, \(r = 0,1,\ldots,n\).

3.4 Simulation Study

Below there are the results of a simulation study presented, they are performed for an analysis of the described estimating procedure efficiency. As initial data, data from the paper [3] have been used. Let us describe one. A random environment has three states \((k = 3,\,E =\{ 1,2,3\})\). Transition intensities {λ i, j } from state i to state j, (i, j = 1, 2, 3) are given by a matrix

Let a number of the considered elements n equals 5. For the environment state i ∈ E, all elements have a constant failure rate γ i (t) = β i and they fail independently. Therefore, a last time till a given element failure (for the same state i of the environment) has the exponential distribution with parameter β i . These parameters must be estimated. For that purpose a sample is given. It contains a sequence of n + 1 pairs: \((x,j) =\{ (x_{j(r)},j(r)),\,r = 0,\ldots,n\}\), where x j(r) is the rth order statistic of n-sample, and j(r) = J(x (r)) is an environment state in the instant x (r). The initial pair (x (0), j(0)) equals (0, 1).

All the mentioned sampling data are given and are used in an estimating procedure. Own samples are simulated for the following parameter values: \(\beta = (\beta _{1}\;\beta _{2}\;\beta _{3})^{T} = (0.1\;0.2\;0.3)^{T}\). It is convenient to present these data as 3 × (n + 1) matrix. An example of such matrix for n = 5 is the following:

In the simulation process, samples are generated one by one. Various samples are independent. Each sample corresponds to the appointed initial state of the environment: a sample with number 3i + j corresponds to the initial state \(J(0) = j;\,j = 1,2,3;\,i = 0,\ldots \,\). Further, q such three samples (with the initial states j = 1, 2, 3) form a block, containing 3q samples. A maximum log-likelihood estimate (MLE) \(\tilde{\beta }= (\tilde{\beta _{1}},\,\tilde{\beta _{2}},\,\tilde{\beta _{3}})^{T}\) is calculated for each block.

In broad outline, a procedure is as follows. For each sample, a changing of the environment J(t), t > 0, and instants of element failure x (r), \(r = 1,\ldots,n\), are simulated. Then, for the sample, a logarithm of likelihood function (3.4) and its gradient (3.5) or (3.6) are recorded. These expressions are used for MLE calculation. As an optimization method, the gradient method has been used.

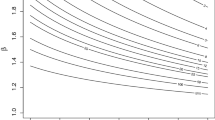

The gradient method is given by the following parameters: n is a sample size (initial number of system elements); b 0 is an initial value of parameter estimate; d is a step of moving along the gradient; \(\varepsilon\) is a maximum module of a difference between sequential values of the parameter estimate β, for which a calculation is ended; L is a limit number of a gradient recalculation during moving from an initial point; K is a number of addends, appreciated in an expansion of the matrix exponent (3.7); 3q is a number of the samples in the block.

A set of such parameters numerical values \((n,b_{0},d,\varepsilon,L,K,q)\) is called an experiment design. Below, the results of simulation study are presented.

In Table 3.1 the corresponding results are presented for the design experiment n = 5, b 0 = (0. 08 0. 22 0. 328)T, d = 0. 015, \(\varepsilon = 0.01\), L = 20, K = 20, r = 5, q = 5 and various values of total block number N. In the first column the initial value of the estimate b 0 = (0. 08 0. 22 0. 328)T is written. The following columns there are the estimate values given by averaging over N blocks. For a big number of the blocks, the coefficients d and \(\varepsilon\) have been changed. Namely, for N = 15, 17, 19, 21 those values equal 0. 002, and for N = 21, additionally, L = 40.

An analysis of the Table 3.1 shows that a convergence to true values (0. 1 0. 2 0. 3) takes place but very slow.

In conclusion we would like to remark that considered approach allows improving probabilistic predictions for functioning of various complex technical and economical systems.

References

Andronov, A.M.: Parameter statistical estimates of Markov-modulated linear regression. In: Statistical Method of Parameter Estimation and Hypotheses Testing, vol. 24, pp. 163–180. Perm State University, Perm (2012) (in Russian)

Andronov, A.M.: Maximum Likelihood Estimates for Markov-additive Processes of Arrivals by Aggregated Data. In: Kollo, T. (ed.) Multivariate Statistics: Theory and Applications. Proceedings of IX Tartu Conference on Multivariate Statistics and XX International Workshop on Matrices and Statistics, pp. 17–33. World Scientific, Singapore (2013)

Andronov, A.M., Gertsbakh, I.B.: Signatures in Markov-modulated processes. Stoch. Models 30, 1–15 (2014)

Bellman, R.: Introduction to Matrix Analysis. McGraw-Hill, New York (1969)

Kollo, T., von Rosen, D.: Advanced Multivariate Statistics with Matrices. Springer, Dordrecht (2005)

Pacheco A., Tang, L.C., Prabhu N.U.: Markov-Modulated Processes & Semiregenerative Phenomena. World Scientific, New Jersey (2009)

Pontryagin, L.S.: Ordinary Differential Equations. Nauka, Moscow (2011) (in Russian)

Rao, C.R.: Linear Statistical Inference and Its Application. Wiley, New York (1965)

Turkington, D.A.: Matrix calculus and zero-one matrices. Statistical and Econometric Applications. Cambridge University Press, Cambridge (2002)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

Lemma 3.1.

If elements of matrix G(t) are differentiable function of t, then

Proof.

Lemma 3.1 is true for n = 1 and 2. If one is true for n > 1, then

⊓⊔

Rights and permissions

Copyright information

© 2014 Springer Science+Business Media New York

About this paper

Cite this paper

Andronov, A. (2014). Markov-Modulated Samples and Their Applications. In: Melas, V., Mignani, S., Monari, P., Salmaso, L. (eds) Topics in Statistical Simulation. Springer Proceedings in Mathematics & Statistics, vol 114. Springer, New York, NY. https://doi.org/10.1007/978-1-4939-2104-1_3

Download citation

DOI: https://doi.org/10.1007/978-1-4939-2104-1_3

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4939-2103-4

Online ISBN: 978-1-4939-2104-1

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)