Abstract

This chapter describes a design-based research project that developed an early warning system for an undergraduate engineering mentoring program. Using near real-time data from a university’s learning management system, we provided academic advisors with timely and targeted data on students’ academic progress. We discuss the development of the early warning system and detail how academic advisors used it. Our findings point to the value of providing academic advisors with performance data that can be used to direct students to appropriate sources of support.

1 Introduction

As demonstrated throughout this volume, colleges and universities are increasingly finding value in aggregating and analyzing once disparate sources of data, such as a student’s admissions records, academic history, and use of campus information technologies, all under the rubric of “learning analytics” (LA) (see also Campbell et al. 2007; Fritz 2011; Goldstein and Katz 2005). In this chapter, we describe a design-based research project that developed Student Explorer, an early warning system (EWS) for an undergraduate engineering advising program. This project was organized around identifying user needs, developing the necessary infrastructure for building Student Explorer, and identifying factors affecting advisors’ decision-making related to the use of Student Explorer.

The EWS described in this chapter represents an application of LA that is gaining popularity across colleges and universities—the near real-time aggregation and analysis of data on students’ use of information technologies, such as Learning Management Systems (LMSs), for the purposes of identifying students in need of academic support (e.g., Beck and Davidson 2001; Macfadyen and Dawson 2010; Morris et al. 2005). One of the many benefits of collecting and analyzing LMS data is that these systems are used by a majority of instructors and students on most campuses in the United States (Dahlstrom et al. 2011; Fritz 2011). While there is increasing interest in using LMS and other related sources of near real-time data, few researchers document the specific ways users make sense of and base decisions on data generated by these systems. Moreover, few researchers connect the ways interested parties, such as academic advisors, provide support strategies to students using data and analyses from LA-based systems (Johnson et al. 2011; Lonn et al. 2012).

Student Explorer aggregates data from an LMS used at a large research university and provides near real-time data from that system to academic advisors in a program called the STEM (Science, Technology, Engineering, and Mathematics) Academy. The STEM Academy is a holistic student development program aimed at increasing the academic success of students who have historically been underrepresented in STEM fields. The STEM Academy is modeled on the Meyerhoff Scholars Program at the University of Maryland-Baltimore County (Maton et al. 2000) and the Biology Scholars Program (BSP) at the University of California, Berkeley (Matsui et al. 2003). Student Explorer provided the program’s three academic advisors with frequent updates on students’ academic progress and streamlined the presentation of data to allow for the immediate identification of students in need of support.

Developing Student Explorer in collaboration with an effective support program, such as the STEM Academy, provided a unique opportunity to advance the field of LA by identifying the ways in which academic advisors can use an EWS to support their interactions with students. In the chapter that follows, we provide a short overview of LA research focusing on prior projects that used data generated by LMSs. In general, LA-based systems using LMS data can be characterized as either providing data directly to students or providing data to an intermediary who then interacts with students. These two characterizations imply different numbers of steps—and different affordances and constraints—related to the ways in which LA-based tools may be thought to affect desired outcomes. In what follows, we describe the development of Student Explorer through the lens of design-based research. Throughout our discussion of Student Explorer’s development and use, we address the following overarching research question: “How did advisors use Student Explorer to inform their support activities with students?” We conclude this chapter by addressing future directions for LA research.

2 Using LMS Data

LMSs are ubiquitous in higher education (Dahlstrom et al. 2011). Depending upon the system, LMSs can track click-level data on a variety of user actions (e.g., when a student accessed a course discussion, uploaded an assignment, or downloaded a course resource). Given the ubiquity of LMSs and the growing potential to track and store data on user actions, LMS data are a ready source for LA research. While one goal of LA research is to collect and analyze ever more novel sources of data, a further goal is to explore how individuals can best use these novel sources of data to support their decision-making (Duval 2011; May et al. 2011). In using data from LMSs, there are two distinct LA research agendas. One agenda involves aggregating data from online learning environments and providing these data directly to students; the other involves taking similar sources of data and providing them to an intermediary, such as a course instructor or academic advisor, who then acts on those data.

Researchers, such as Judy Kay and Susan Bull, demonstrate the benefits of displaying data directly to students through what they refer to as “open learner models” (see two special issues of International Journal of Artificial Intelligence in Education (2007), volume 17, issues 2 and 3). Another early example of a system that provides data directly to students is the Context-aware Activity Notification System (CANS). Within a distance education context, Goggins et al. (2010) found that students were able to use feedback provided by CANS to identify what their peers were doing, and what they, in turn, might need to do in order to catch up to their peers. Intelligent tutoring systems provide students with real-time directed scaffolding as they work to solve mathematics problems (Koedinger and Corbett 2006). E2Coach at the University of Michigan provides tailored messages to students based on demographic and course performance data (McKay et al. 2012). These messages are designed to motivate students to take specific actions, such as allocating more time to prepare for exams. For direct-to-student LA-based systems, what data is presented to students and how it is presented appears to be an important area of research that is still very much under way.

Following the second approach, Black and Wiliam’s (1998) seminal meta-analysis on formative assessment illustrates the potential for providing data to an intermediary, such as an instructor, to redirect students (for a recent review, see Hamilton et al. 2009). Work by Dawson et al. (2008) is one example of an LA-based system that provides LMS data to an intermediary, in this case an instructor. They observed that when an instructor had data on students’ use of an LMS, it allowed the instructor to identify students who were in need of support. Purdue University’s Signals project is an example of an LA-based system that provides data to both instructors and students. This tool combines two other types of data with the LMS data: student demographic data and student grades (Campbell et al. 2007). These three data sources are combined in a prediction model that assesses the likelihood of a student’s academic failure. Instructors have the added ability to send messages to students based on a student’s classification (i.e., red, yellow, or green) as designated by the system.

Across both direct-to-student and direct-to-intermediary LA-based systems, the user interacting with the system decides how to follow up on the feedback. Some of these subsequent actions involve more “steps” than others, which adds complexity to the relationships between use of an LA-based system and intended outcomes. For direct-to-student systems, a necessary next action may be fairly clear, such as completing an extra problem set within an Intelligent Tutoring System, or it may be more nuanced and extend over time and contexts, such as engaging in more study time based on recommendations made by E2Coach or Signals. For direct-to-intermediary tools, such as the one developed in this project, the intermediary makes sense of the data and identifies potential recommendations for students. Because intermediaries are situated actors, their sensemaking draws on and is affected by multiple factors, such as their familiarity with the students, courses, and even individual instructors. This sensemaking represents an extra step, one that implicates an intermediary’s capacity to make sense of, use, and connect data to specific actions for students. Depending on the filter provided by the intermediary, the student then makes sense of the recommendation and chooses whether or not to act on that information.

With these multiple steps in mind and to support students’ academic success, we designed Student Explorer to shorten the time between when academic advisors first become aware of a student in need of support and their intervention with that student. However, given the multiple steps that are implied by providing data to intermediaries, we are purposefully cautious in making claims about Student Explorer’s impact. Moreover, in these first two phases of the research, we carefully identified where we could collect data (and from whom) in order to support our understanding of Student Explorer’s role across complex interactions among advisors, students, and data. In what follows, we document the ways advisors engaged students differently as a result of having access to Student Explorer.

3 Data and Methods

Our research agenda is organized around principles of design-based research (Brown 1992; Collins 1992; Collins et al. 2004). Design-based research involves “a series of approaches, with the intent of producing new theories, artifacts, and practices that account for and potentially impact learning and teaching in naturalistic settings” (Barab and Squire 2004, p. 2). A distinguishing feature of design-based research is that the development of systems and theories is a collaborative effort among researchers and participants (Cobb et al. 2003). In our work, we collaborated with STEM Academy advisors on the development of Student Explorer and the ways in which Student Explorer could be used to support their existing work.

In the first two years of the STEM Academy, two years before Student Explorer was developed, advisors relied on students’ self-reported grades that students brought to monthly meetings. According to advisors, the monthly meeting schedule did not provide frequent enough interactions between students and advisors. For example, once a student had failed an exam or assignment it was often too late to correct a student’s academic trajectory. We were tasked with developing an EWS that STEM Academy advisors could use, at any point in the semester, to identify students in need of support. Student Explorer, therefore, was developed to increase the frequency with which an advisor contacts students.

3.1 Description of Student Explorer Data

Data used by Student Explorer are drawn primarily from the university’s LMS. The LMS tracks interactions between a user and the system in the form of “events” and “tables.” Events can include anything from the number of times a student accesses a course site to when a student downloads a specific course reading; tables are structured data, such as a course site’s Gradebook (GB). Using data from different events and tables as well as various technological tools (e.g., R, Microsoft Excel, as well as database and web-authoring tools) we engaged in an iterative, collaborative design-based approach to develop Student Explorer.

We collected two generic sources of data from the LMS: grade data and log-in data. Grade data was collected by downloading each course site’s GB and Assignments data. Log-in events were collected by querying the LMS’s data repository and counting the number of times that a student accessed a specific course’s site. Grades and log-in events were aggregated and translated into a variety of visualizations, including figures displaying students’ grades compared to their peers over time along with lists of performances on individual GB entries. This information was updated and sent to advisors on a weekly basis. We developed a three-level classification scheme of Engage (red), Explore (yellow), and Encourage (green) that provided advisors with a simple depiction of the complex relationships between academic performance data, including longitudinal data and intra-course comparisons, and log-in events.
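As a rough illustration of the log-in counting step, the sketch below tallies course-site access events per student, course, and week. The record layout (`student`, `course`, `week`) and the sample values are assumptions made for this example; the chapter does not describe the LMS repository's actual schema or query interface.

```python
from collections import Counter


def count_course_logins(events):
    """Count site-access events per (student, course, week).

    `events` is an iterable of dicts with hypothetical keys
    'student', 'course', and 'week'.
    """
    counts = Counter()
    for event in events:
        counts[(event["student"], event["course"], event["week"])] += 1
    return counts


# Hypothetical access events, one record per course-site visit.
events = [
    {"student": "s1", "course": "COURSE-A", "week": 1},
    {"student": "s1", "course": "COURSE-A", "week": 1},
    {"student": "s1", "course": "COURSE-A", "week": 2},
    {"student": "s2", "course": "COURSE-A", "week": 1},
]

weekly_logins = count_course_logins(events)
print(weekly_logins[("s1", "COURSE-A", 1)])
```

Keeping the week in the key makes it straightforward to derive both week-to-week and cumulative counts from the same tally.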

3.2 Methods

Design iterations occurred in two phases, corresponding with two academic semesters. Along with clarifying how LMS data could be integrated into visual displays and classification schemes, we also engaged in a variety of data mining activities between the two academic semesters. These data mining activities were used to identify patterns between a student’s use of the LMS and his or her final course grade. We used functional data analysis techniques (Ramsay et al. 2009) to explore relationships between students’ use of both the LMS in general and specific LMS tools with their final course grades across multiple engineering courses. We estimated smoothing splines for LMS tools used on a site across the 16 weeks of an academic semester using all students in the course and later subsets of students according to final course grade. This process allowed us to create smooth plots of LMS use over time and graphically explore LMS use across (1) final course grades, (2) time, and (3) courses. We also examined the first derivative of each of these plots, which yielded information about the week-to-week changes in course site log-ins and tool use.
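The idea of smoothing weekly LMS use and then inspecting week-to-week change can be sketched in a few lines. Note that this is a deliberate simplification: it swaps in a centered moving average for the smoothing splines used in the functional data analysis, and a first difference for the first derivative. The weekly counts are invented for illustration.

```python
def moving_average(values, window=3):
    """Centered moving average; the window shrinks at the edges."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def first_difference(values):
    """Week-to-week change, a discrete stand-in for the first derivative."""
    return [later - earlier for earlier, later in zip(values, values[1:])]


# Hypothetical course-site log-ins for one student over a 16-week semester.
weekly_logins = [12, 15, 14, 18, 20, 17, 16, 22, 25, 19, 15, 14, 18, 21, 24, 30]

smoothed = moving_average(weekly_logins)
changes = first_difference(smoothed)
print(len(smoothed), len(changes))
```

Positive entries in `changes` mark weeks where smoothed use was rising, and negative entries mark weeks where it was falling.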

To capture how Student Explorer was used by advisors and in their interactions with students, we conducted multiple individual and group interview sessions with advisors. We conducted three interview sessions with STEM advisors where they participated in group-discussions and think-aloud exercises to reveal how they interacted with Student Explorer. Along with these interview sessions, we conducted weekly meetings with STEM Academy advisors and faculty members. These weekly meetings served as regular points of contact and provided opportunities for advisors to describe how they were using Student Explorer.

During these weekly meetings, advisors provided feedback to us about using Student Explorer as a resource for their individual meetings with students. We asked advisors to describe their process of receiving, opening, and using Student Explorer each week. We were especially interested in the types of information available within Student Explorer that advisors used when deciding to contact STEM Academy students. We also discussed interface design issues, such as what additional data might be useful to advisors and why. For example, we would describe possible sources of data and get their feedback on how they would use these data before and after their advising activities with students. It was important in the design process to know the typical workflow process advisors used so that the most useful data were featured immediately upon opening Student Explorer.

Below, we report our results chronologically, specifying the development of Student Explorer and the ways in which it was used by advisors.

4 Development and Use of Student Explorer

4.1 Phase I

4.1.1 Student Explorer Design

In fall 2010, we began working with the STEM Academy advisors to develop an EWS. During this first phase, we conducted a needs assessment to determine what information would be most useful for advisors to support their advising activities. They reported that the most basic need involved having up-to-date grade information on their students. We provided an initial solution to this problem by querying the campus LMS for all course sites that included a STEM Academy student and that used the GB or Assignments tools (we could not track students’ grades unless a course site used either the GB or Assignments tools). We located a large number of courses that fit these criteria, including many of the core engineering and science courses for first- and second-year STEM students. However, some core courses, including first- and second-year mathematics courses, did not use either the GB or Assignments tools. (We were unable to include these courses in Student Explorer due to the lack of LMS data until the most recent iteration of the tool in fall 2012.) In Phase I, we tracked over 150 individual students across 400 courses, with the number of courses per student averaging over 2.6.

The validity of the information provided in the GB and Assignments tools was an additional constraint when working with LMS data. The validity of these data was dependent upon instructors’ actual use of the GB and, more generally, use of the LMS as part of their instruction. For example, some instructors used each GB entry to reflect an assignment’s contribution to the final course grade (e.g., a ten-point assignment was purposefully meant to account for 10 % of a student’s grade out of 100 total points possible for the course). Other instructors applied weights to each GB entry to determine each assignment’s contribution to the final course grade. Additionally, instructors varied the actual entries they posted to the GB or Assignments tools. For example, some instructors only posted homework assignments to the GB and did not post grades that contributed substantially to the final course grade, such as exams. Based on instructors’ idiosyncratic use of the GB and Assignments tools, we reported a non-grade equivalent percent of points earned out of points possible to academic advisors.
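The reported measure, percent of points earned out of points possible, can be computed directly from gradebook entries without knowing an instructor's weighting scheme. The following is a minimal sketch; the `(earned, possible)` tuple layout and the sample entries are assumptions for illustration.

```python
def percent_of_points(entries):
    """Percent of points earned out of points possible.

    `entries` is a list of (earned, possible) pairs, one per gradebook
    entry. Returns None when no points are possible yet.
    """
    earned = sum(e for e, _ in entries)
    possible = sum(p for _, p in entries)
    if possible == 0:
        return None
    return 100.0 * earned / possible


# Hypothetical gradebook: a 10-point homework and a 50-point exam.
entries = [(8, 10), (45, 50)]
pct = percent_of_points(entries)
print(round(pct, 1))
```

Because entries are pooled by raw points, a 50-point exam counts five times as much as a 10-point homework, which matches the case where instructors weight assignments through the points possible but not the case where separate weights are applied.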

In February 2011, after aggregating GB and Assignments data collected from the LMS, we created a multi-sheet Microsoft Excel file for advisors. We designed an “Advisor Summary” sheet that allowed advisors to view all STEM Academy students’ percent of points earned out of points possible for each course in which a STEM Academy student was enrolled. We also created individual sheets for each student–course combination (see Fig. 6.1). These individual sheets provided longitudinal graphical depictions of a student’s developing course grade. We provided this file to advisors starting in March 2011 and updated the information on approximately a bi-weekly basis. Near the end of this chapter, we describe some preliminary impacts of Student Explorer by comparing cumulative GPAs of STEM Academy students versus other students in the College of Engineering before and after Student Explorer was used by advisors.

We developed a classification scheme to highlight students who may be in most need of academic support to help advisors parse the large amount of data. By highlighting those students in the greatest need of support, we specified actions that an advisor could take in relation to that student: Encourage (green), Explore (yellow), and Engage (red). We refined the specific decision rules associated with the classification scheme through three collaboration sessions with all advisors and two interviews with one of the advisors. Classifications were initially generated using two rules: (1) whether a student’s percent of points earned was at or above the various non-grade equivalent thresholds (85, 75, or 65 %) and (2) whether a student was a certain percentage below the course average of percent of points earned (10 % at the low end, 15 % at the high end).
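The two rules can be combined in more than one way, and the exact decision logic is not spelled out here. The sketch below shows one hypothetical combination that uses the stated thresholds (85, 75, 65) and the stated gaps below the course average (10 and 15 percentage points). The way the thresholds and gaps are paired is an assumption, not the scheme that was actually deployed.

```python
ENCOURAGE = "Encourage (green)"
EXPLORE = "Explore (yellow)"
ENGAGE = "Engage (red)"


def classify(student_pct, course_avg_pct):
    """Classify a student from percent of points earned (hypothetical rules).

    Rule 1 uses absolute thresholds; rule 2 uses the gap below the
    course average to move borderline bands one level down.
    """
    gap_below_avg = course_avg_pct - student_pct
    if student_pct >= 85:
        return ENCOURAGE
    if student_pct >= 75:
        # Assumed pairing: a 10-point gap moves this band to Explore.
        return EXPLORE if gap_below_avg >= 10 else ENCOURAGE
    if student_pct >= 65:
        # Assumed pairing: a 15-point gap moves this band to Engage.
        return ENGAGE if gap_below_avg >= 15 else EXPLORE
    return ENGAGE


print(classify(78, 90))
print(classify(68, 70))
```

Under these assumed rules, a student at 78 % in a course averaging 90 % is flagged as Explore even though the absolute score alone would not raise concern.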

Using only these two rules based on GB data, we found that early versions of Student Explorer were oversensitive in classifying students as Explore (yellow) and Engage (red). Student Explorer was particularly oversensitive in the early weeks of a semester when there were few grade entries available. Advisors, however, saw benefits in overclassifying students as Explore (yellow) or Engage (red) based only on a few early course assignments because it provided them with opportunities to hear students describe their own course performances. Classifying these students in such a way provided the opportunity for advisors to identify and provide support to all of these individuals before they took their first exam or submitted an assignment that contributed substantially to their final grade. Even though Student Explorer classified more students than were actually performing poorly in some courses, little additional harm came to misclassified students because over-sensitivity issues with the classification scheme were attenuated after more points accrued. This was especially true for those courses in which assignments were inherently weighted through the points possible.

While grade information was useful to advisors, we also explored other sources of data from the LMS to help advisors contextualize a student’s course grade. We initially thought that providing information about tools that are predictive of final course grades would be beneficial to advisors. For example, we examined correlations between the degree to which students used specific LMS tools, such as Chat and Discussion, and their final course grades. The general strategy of seeking correlations between a tool’s use and a final course grade is related to a familiar strategy in LA research—developing prediction models to assess the likelihood of academic failure. In order to examine these patterns, we drew upon LMS data from previous semesters of core courses for College of Engineering students. We used functional data analysis to plot changes in students’ use of the LMS in line with their overall course grade. However, we found little evidence that frequently utilized course tools were related to course grades.

Our research efforts surfaced multiple limitations with developing prediction models to assess students’ likelihood of academic success. Unlike some systems, such as Intelligent Tutoring Systems that guide and assess student progress through a well-defined problem space, LMSs and a variety of other online learning technologies are necessarily designed to be content agnostic and dependent on how an instructor integrates them into course-specific activities. Given this reality, patterns in LMS use are often not generalizable across multiple courses. For example, if the course site is not an important part of course activities, data on how individual tools are used on these course sites may lead to spurious conclusions about a student’s academic progress. After analyzing patterns across multiple tools and courses, combined with the threats to validity from generalizing across courses, we began relying less on sources of data that are “predictive” and instead incorporated specific sources of data that advisors found useful for understanding a student’s course performance and for discussing that performance with them.

4.1.2 How Advisors Used Student Explorer

Advisors described their use of Student Explorer during the first phase of the project as “sporadic.” Interestingly, early collaboration sessions where we provided advisors mock-ups of Student Explorer designs proved most beneficial to advisors. After looking at the initial displays, drawn from actual student data, advisors were able to identify students who needed immediate help. Advisors contacted these students and worked with them to identify improvement strategies. One reason advisors gave for not using Student Explorer more regularly during this phase is that they had not yet found a way to integrate it into their regular work practices.

Prior to the implementation of Student Explorer, first-year STEM Academy students met with advisors on a monthly basis and turned in progress reports of their course grades. This process continued even after advisors started receiving Student Explorer reports. Advisors had used these progress reports for multiple years, and the sheets had become integrated into their regular advising work. Therefore, advisors initially reported that they did not know what value Student Explorer was adding over and above the students’ self-generated reports. The extra time it took to view and learn how to use Student Explorer was another reason why advisors did not initially integrate it into their regular work practices. We received a few reports early on from advisors that they did not know how to interpret some of the data or how to make it useful for working with students. After Phase I, we spent more time with the advisors, walking through Student Explorer, describing various features, and discussing possible ways to use the system. As a result, many of these issues did not resurface in Phase II and advisors began to more fully integrate Student Explorer into their advising.

Advisors reported that Student Explorer was most useful in tracking the performance of students in their second or third years who were no longer required to attend monthly advising sessions. While not an initial goal of Student Explorer, tracking these students was useful because they were often “under the radar” until final course grades were made available to advisors. Despite collaboration around the design of the interface, advisors also reported that, overall, Student Explorer was not user friendly, with the exception of the red, yellow, and green color coding. These colors helped advisors quickly make sense of the large amounts of data and identify students in the most need of advising.

Based on the results of this first phase of the project, we found that Student Explorer did not work as we intended but did have some beneficial, unintended effects. Unfortunately, the academic advisors did not find that the system provided added benefits over and above the handwritten progress reports for tracking first-year STEM Academy students. It was, however, useful for identifying low-performing students beyond their freshman year, an unintended effect. Specifically, these students were contacted more frequently than in previous semesters as a result of advisors’ use of Student Explorer. This increased contact led to more meetings between the advisors and these advanced students, which created more opportunities for these students to avail themselves of academic support services. During the next phase of the design, we worked further with advisors to improve the usability of Student Explorer and to identify ways in which using it led to increased contact with all STEM Academy students.

4.2 Phase II

4.2.1 Improved Student Explorer Design

Throughout the summer of 2011, we worked with advisors to improve the usability of Student Explorer and to identify ways in which using the EWS led to increased contact with all cohorts of the STEM Academy students. We released a new version of Student Explorer to advisors at the start of the fall 2011 semester that included three main changes. First, based on the advisor feedback from the winter 2011 semester, we included labels and grade data for individual assignments on the student report sheets (see Fig. 6.1). While the Engage, Explore, and Encourage classification scheme alerted advisors to those individuals in need of their intervention, it was formerly based solely on relative, intra-course grade measures. As we previously stated, these measures did not distinguish between the importance of GB entries (e.g., tests versus homework assignments), potentially biasing classifications by over-weighting some assignments. The inclusion of individual GB entries specified the source of each grade and helped to clarify for advisors the student’s classification within each course. Second, we incorporated the number of times a student accessed a course site into the classification scheme, using it as a proxy for revealing student effort. Specifically, we used a combination of week-to-week and cumulative access events to classify students on the borderline of grade thresholds. For example, if a student was in the bottom quartile for login events (average of week-to-week and cumulative percentile ranks) relative to their peers and was on the borderline between a B and B-, the student would be classified as Explore (yellow) instead of Encourage (green) (see Fig. 6.2). Third, we delivered updated data into the system on a weekly basis and this gave advisors the opportunity to track students and intervene in a timelier manner. We tracked over 200 individual students across 600 different courses in Phase II.
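The borderline adjustment described above can be sketched as follows. The percentile-rank definition, the use of 25 as the bottom-quartile cutoff, and the `on_borderline` flag are assumptions for illustration; the chapter does not define how close to a grade threshold a student must be to count as borderline.

```python
def percentile_rank(value, population):
    """Percent of the population with a value at or below `value`."""
    at_or_below = sum(1 for v in population if v <= value)
    return 100.0 * at_or_below / len(population)


def adjust_for_logins(base_class, on_borderline, weekly_pct, cumulative_pct):
    """Move a borderline Encourage student to Explore when log-ins are low.

    The log-in score averages the week-to-week and cumulative
    percentile ranks; a score of 25 or less is treated as the
    bottom quartile (assumed cutoff).
    """
    login_score = (weekly_pct + cumulative_pct) / 2
    if base_class == "Encourage" and on_borderline and login_score <= 25:
        return "Explore"
    return base_class


# Hypothetical peer log-in counts for one course site.
peer_weekly = [0, 2, 5, 9, 12, 15, 20, 30]
peer_cumulative = [4, 10, 22, 30, 41, 50, 66, 80]

weekly_pct = percentile_rank(0, peer_weekly)
cumulative_pct = percentile_rank(10, peer_cumulative)
print(adjust_for_logins("Encourage", True, weekly_pct, cumulative_pct))
```

Log-in data only shifts students who are already near a threshold, so grades remain the primary signal and site access acts as a tiebreaker.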

Student Explorer screenshots. (a) Listing of student name, course, STEM Academy group, and STEM Academy mentor. (b) Listing of student percentage points earned in previous weeks. (c) Listing of student percentage points earned in current week, change from previous week, and current week class average. (d) Graph of student’s percentage points earned vs. class average over time. (e) Graph of student’s weekly site visits percentile and cumulative site visits percentile. (f) Listing of student’s individual course assignments and performance.

4.2.2 How Advisors Used Student Explorer

Based on the above design modifications, advisors reported using Student Explorer more frequently than they had in Phase I. Moreover, use of Student Explorer led advisors to request that we incorporate more data from the LMS. For example, after a few weeks of regular use, one advisor asked us to aggregate and display students’ first exam scores for one core engineering course outside of the regular, weekly distributions of the data. Advisors found from previous semesters that the first test in this course was important to a student’s final grade. Using this exam data as displayed in Student Explorer, the advisor quickly identified students who did not do well and organized a post-exam session where a graduate student was available to help the students identify what they could do to better perform on the next test.

Advisors found the new design feature of showing the labels and grade data for individual GB entries particularly useful for focusing their interactions with students. Having the labels and grades for individual GB entries allowed advisors to address specific areas of concern within a course as it related to a student’s overall performance in the course. For example, one advisor specifically targeted students’ performances on major exams to help students make decisions about dropping courses, and to discuss the degree to which a student needed to work more closely with the course instructor or teaching assistant to improve his or her grade.

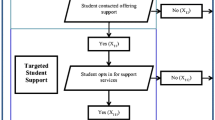

We were also able to identify tentative links between the ways in which advisors used Student Explorer and the frequency with which advisors contacted students. In Phase II, advisors reported contacting all students classified as Engage (red) on a weekly basis. Advisors typically emailed students to schedule a meeting to discuss specific improvement strategies. Advisors reported receiving immediate responses from all first-year STEM Academy students and from approximately half of all STEM Academy students who had been in the program longer than 1 year. Suggested strategies for student improvement included setting up appointments with tutoring services, meeting with a course instructor, attending office hours, and reading through the instructor’s posted PowerPoint slides before and after a lecture. Thus, Student Explorer provided a view of student performance that signaled to advisors which students they might want to contact and what types of issues needed to be addressed. Use of Student Explorer also resulted in a more immediate opportunity to suggest various improvement strategies rather than waiting for regularly scheduled meetings and relying on students’ self-reports.

In what follows, we describe how one advisor used Student Explorer. The advisor would (1) filter the spreadsheet to identify assigned students, (2) locate all students who were classified as Engage (red), and (3) view each student’s individual grade sheet. After selecting an individual student’s sheet, (4) the advisor would then examine how the student performed on each grade entry. After examining individual entries, (5) the advisor would examine a longitudinal graph of the student’s course grade. The advisor would then (6) email the student requesting a meeting to discuss his or her academic performance. Though individual advisors varied in the processes by which they used Student Explorer and contacted students, in general, advisors stated that they used multiple sources of additional information in conjunction with the data presented in Student Explorer. For example, prior personal and academic histories for each student and the advisor’s own knowledge about specific courses and instructors affected what they would say in both their initial communications and one-on-one meetings with a student.
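
The first steps of this workflow can be sketched in code. The following is a minimal illustration only, not the Student Explorer implementation: the record layout, the field names, the `"Other"` status label, and all scores are hypothetical placeholders chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class StudentRecord:
    # All field names are assumptions made for this sketch.
    student_id: str
    advisor: str
    status: str               # "Engage" (red) is from the text; other labels are placeholders
    grades: Dict[str, float]  # gradebook entry label -> percent score


def engage_students(records: List[StudentRecord], advisor: str) -> List[StudentRecord]:
    """Steps 1-2: keep the advisor's assigned students who are classified as Engage."""
    return [r for r in records if r.advisor == advisor and r.status == "Engage"]


def lowest_entries(record: StudentRecord, n: int = 2) -> List[Tuple[str, float]]:
    """Steps 3-4: list the n gradebook entries with the lowest scores."""
    return sorted(record.grades.items(), key=lambda item: item[1])[:n]


# Hypothetical placeholder data, not drawn from the study.
records = [
    StudentRecord("s1", "advisor_a", "Engage",
                  {"Exam 1": 58.0, "Homework 1": 90.0, "Quiz 1": 71.0}),
    StudentRecord("s2", "advisor_a", "Other", {"Exam 1": 88.0}),
    StudentRecord("s3", "advisor_b", "Engage", {"Exam 1": 49.0}),
]

for record in engage_students(records, "advisor_a"):
    print(record.student_id, lowest_entries(record))
```

Steps 5 and 6 (reviewing the longitudinal graph and emailing the student) depend on advisor judgment and are deliberately left out of the sketch.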

4.2.3 Potential Outcomes of Student Explorer Use

In Phase II, we assessed potential outcomes associated with advisors’ use of Student Explorer. For one outcome, we examined the number of first-year students who were likely contacted between their regularly scheduled monthly meetings. The purpose of this analysis was to see whether Student Explorer increased the frequency of advisors’ contact with students. According to advisors’ interview feedback, students were contacted by their advisor through e-mail when they were classified as Engage (red). We therefore examined three different weeks throughout the semester in which no student had a regularly scheduled meeting. These weeks represented opportunities for advisors to contact students that were not present before implementation of Student Explorer. In the first intervening week, 2 of the first-year STEM Academy students and 25 of the second- through fourth-year students were classified as Engage. In the second intervening week, 3 of the first-year students and 23 of the second- through fourth-year students were classified as Engage. In the third intervening week, 7 of the first-year students and 27 of the second- through fourth-year students were classified as Engage. Because advisors reported additional contact with all students classified as Engage, these numbers reflect a potential overall increase in the number of times students were contacted by an advisor between regularly scheduled meetings.
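
The weekly counts above can be tallied as follows. This is a small arithmetic sketch that assumes, as advisors reported, that each Engage classification in an intervening week led to one additional contact; the variable names are ours. The same student may appear in more than one week, so the total counts contact opportunities rather than unique students.

```python
# Engage classifications in the three intervening weeks, as reported in the text.
engage_counts = {
    "week 1": {"first_year": 2, "second_to_fourth_year": 25},
    "week 2": {"first_year": 3, "second_to_fourth_year": 23},
    "week 3": {"first_year": 7, "second_to_fourth_year": 27},
}

# Assumption: one additional advisor contact per Engage classification.
weekly_totals = {week: sum(groups.values()) for week, groups in engage_counts.items()}
total_contacts = sum(weekly_totals.values())

print(weekly_totals)   # {'week 1': 27, 'week 2': 26, 'week 3': 34}
print(total_contacts)  # 87
```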

Along with identifying whether or not advisors contacted students more frequently as a result of using Student Explorer, we compared cohorts of students before and after advisors implemented the system. For comparison purposes, we selected students’ sophomore grade point averages (GPAs); STEM Academy students’ sophomore year represented the first year that they did not regularly meet with their advisors, which created an opportunity to see the effects of increased communication between advisors and students due to Student Explorer use and not monthly meetings. We speculate that the effects of increased communication would be most noticeable in this key transition year. For this analysis, we compared the means and interquartile ranges for STEM Academy sophomores prior to the adoption of Student Explorer (2008–2009 and 2009–2010 academic years, shown in Fig. 6.3 in red) against STEM Academy sophomores following the adoption of Student Explorer (2010–2011 and 2011–2012 academic years, shown in Fig. 6.3 in blue). We further drilled down into the data by examining differences based on students’ incoming math ACT scores; we created two groups of students: those who scored below the median of incoming STEM Academy students’ math ACT scores (Fig. 6.3, left) and those who scored above the median (Fig. 6.3, right). As illustrated in Fig. 6.3, across both ACT groups, there were overall increases in sophomore GPAs after Student Explorer adoption. While these data are suggestive, they may also reflect general changes in who was admitted to STEM Academy; we observed a 2-point increase in average ACT scores from 2008 to 2012. We do note, however, that after advisors’ use of Student Explorer, most sophomores achieved a GPA at or above 3.0, which was a primary goal for students in the Academy.
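
A comparison of this kind can be sketched with Python's standard `statistics` module. The rows below are hypothetical placeholder values used only to make the example runnable; they are not data from the study. Placing students who score exactly at the median in the upper group, and the default quartile method of `statistics.quantiles`, are also assumptions of this sketch.

```python
from statistics import mean, median, quantiles

# Hypothetical placeholder rows: (period, math ACT, sophomore GPA). NOT study data.
students = [
    ("pre", 24, 2.7), ("pre", 26, 2.9), ("pre", 25, 2.8),
    ("pre", 29, 3.1), ("pre", 31, 3.3), ("pre", 30, 3.0),
    ("post", 25, 3.0), ("post", 27, 3.2), ("post", 26, 3.1),
    ("post", 30, 3.4), ("post", 32, 3.6), ("post", 31, 3.3),
]

# Split point: median math ACT score across all students in the sample.
act_median = median(act for _, act, _ in students)


def act_group(act):
    """Assign a student to the below-median or at-or-above-median ACT group."""
    return "below_median" if act < act_median else "at_or_above_median"


# Collect GPAs for each (period, ACT group) cell.
cells = {}
for period, act, gpa in students:
    cells.setdefault((period, act_group(act)), []).append(gpa)

# Mean and interquartile range (Q1, Q3) of sophomore GPA per cell.
summary = {}
for key, gpas in cells.items():
    q1, _, q3 = quantiles(gpas, n=4)
    summary[key] = {"mean": round(mean(gpas), 2), "q1": q1, "q3": q3}

for key in sorted(summary):
    print(key, summary[key])
```

A descriptive summary like this cannot separate the effect of the system from changes in the admitted cohort, which is the caveat raised above about rising ACT scores.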

The above analyses are examples of formative assessments used by the research team and STEM Academy collaborators in Phase II. The purpose of these analyses was to create opportunities for further discussion among the research team, to seed design decisions, and to speculate on possible supports needed by STEM Academy advisors. One of our goals for future work with Student Explorer is to develop mechanisms for tracking when and how students follow up on suggestions provided by their academic advisors. We view the GPA data in formative terms, providing potential rationales for ongoing design decisions to be explored collaboratively with STEM Academy advisors.

5 Conclusion

This chapter reported on two phases of a multi-year project aimed at developing an EWS, Student Explorer, for an undergraduate engineering advising program. The advising program served as a strategic research partner providing an authentic context in which to explore important issues related to LA-based interventions. Moreover, the STEM Academy simultaneously provided the opportunity to develop a working product that helped Academy advisors identify students in need of academic support.

Interactions among intermediaries (e.g., academic advisors), students, and an EWS are complex and extend over time—breakdowns within and between interactions can lead to less than desired outcomes. In this project, we developed Student Explorer to influence how an advisor identifies students in need of academic support, with the intent of increasing the frequency with which an advisor contacts students and engages with them in discussions around their academic performances. An important step for LA research more generally will be to further clarify the complex relationships between providing data to interested parties, such as academic advisors and instructors, and improved student outcomes. Generally speaking, there are multiple, possible breakdowns between providing actionable data and positive outcomes. For example, in our research, we described how STEM Academy advisors received and made sense of data on hundreds of students across hundreds of courses. Advisors contacted students and offered recommendations, which students may or may not have acted on. Depending upon what occurs following a recommendation, a student might have performed better than expected on a subsequent course assessment.

Since Phase II, the design of Student Explorer has been refined, expanded, and made available online, and much of the data processing has been automated. For a discussion of lessons learned about scaling from research project-level implementation to university-level IT support, see Lonn et al. (2013). As Student Explorer was scaled, a variety of challenges emerged, including differing approaches to advising and differing perspectives on the utility of EWS data for understanding how, when, and why students’ academic performance may be declining. As we address these challenges, we are cognizant of the need to continually communicate with advisors about how they interpret and make recommendations based on the data presented in Student Explorer. Future LA research will benefit from identifying the ways in which system developers, researchers, and data scientists support key actors (advisors, instructors, students) in making sense of and acting on data generated by LA-based interventions.

References

Barab, S. A., & Squire, K. D. (2004). Design-based research: Putting a stake in the ground. Journal of the Learning Sciences, 13(1), 1–14.

Beck, H. P., & Davidson, W. D. (2001). Establishing an early warning system: Predicting low grades in college students from survey of academic orientations scores. Research in Higher Education, 42(6), 709–723.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education, 5(1), 7–74.

Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. Journal of the Learning Sciences, 2(2), 141–178.

Campbell, J., DeBlois, P., & Oblinger, D. (2007). Academic analytics: A new tool for a new era. Educause Review, 42(4), 40–57. Retrieved from http://net.educause.edu/ir/library/pdf/ERM0742.pdf

Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9–13, 35–37.

Collins, A. M. (1992). Towards a design science of education. In E. Scanlon & T. O’Shea (Eds.), New directions in educational technology (pp. 15–22). Berlin: Springer.

Collins, A. M., Joseph, D., & Bielaczyc, K. (2004). Design research: Theoretical and methodological issues. Journal of the Learning Sciences, 13(1), 15–42.

Dahlstrom, E., de Boor, T., Grunwald, P., & Vockley, M. (2011). The ECAR national study of undergraduate students and information technology, 2011. Boulder, CO: EDUCAUSE.

Dawson, S., McWilliam, E., & Tan, J. P. L. (2008). Teaching smarter: How mining ICT data can inform and improve learning and teaching practice. Hello! Where Are You in the Landscape of Educational technology? Proceedings Ascilite (pp. 221–230). Melbourne. Retrieved from http://www.ascilite.org.au/conferences/melbourne08/procs/dawson.pdf

Duval, E. (2011). Attention please! Learning analytics for visualization and recommendation. Proceedings of the First International Conference on Learning Analytics and Knowledge (pp. 9–17). Banff, Alberta, Canada: ACM.

Fritz, J. (2011). Classroom walls that talk: Using online course activity data of successful students to raise self-awareness of underperforming peers. The Internet and Higher Education, 14(2), 89–97.

Goggins, S., Galyen, K., & Laffey, J. (2010). Network analysis of trace data for the support of group work: Activity patterns in a completely online course. Proceedings of the 16th ACM International Conference on Supporting Group Work (pp. 107–116). Sanibel Island, FL.

Goldstein, P., & Katz, R. (2005). Academic analytics: The uses of management information and technology in higher education (Key findings, pp. 1–12). EDUCAUSE Center for Applied Research. Retrieved from http://www.educause.edu/ECAR/AcademicAnalyticsTheUsesofMana/156526

Hamilton, L., Halverson, R., Jackson, S., Mandinach, E., Supovitz, J., & Wayman, J. (2009). Using student achievement data to support instructional decision making (NCEE 2009-4067). Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education. Retrieved from http://ies.ed.gov/ncee/wwc/publications/practiceguides/

Johnson, L., Smith, R., Willis, H., Levine, A., & Haywood, K. (2011). The 2011 Horizon report. Austin, TX: The New Media Consortium. Retrieved from http://wp.nmc.org/horizon2011/

Koedinger, K. R., & Corbett, A. T. (2006). Cognitive tutors: Technology bringing learning science to the classroom. In K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 61–78). New York: Cambridge University Press.

Lonn, S., Aguilar, S., & Teasley, S. D. (2013). Issues, challenges, and lessons learned when scaling up a learning analytics intervention. Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 235–239). Leuven, Belgium: ACM.

Lonn, S., Krumm, A. E., Waddington, R. J., & Teasley, S. D. (2012). Bridging the gap from knowledge to action: Putting analytics in the hands of academic advisors. Proceedings of the Second International Conference on Learning Analytics and Knowledge (pp. 184–187). Vancouver, Canada: ACM.

Macfadyen, L. P., & Dawson, S. (2010). Mining LMS data to develop an “early warning system” for educators: A proof of concept. Computers & Education, 54(2), 588–599.

Maton, K. I., Hrabowski, F. A., III, & Schmitt, C. L. (2000). African American college students excelling in the sciences: College and postcollege outcomes in the Meyerhoff Scholars Program. Journal of Research in Science Teaching, 37(7), 629–654.

Matsui, J., Liu, R., & Kane, C. M. (2003). Evaluating a science diversity program at UC Berkeley: More questions than answers. Cell Biology Education, 2(2), 113–121. doi:10.1187/cbe.02-10-0050.

May, M., George, S., & Prévôt, P. (2011). TrAVis to enhance online tutoring and learning activities: Real-time visualization of students tracking data. Interactive Technology and Smart Education, 8(1), 52–69.

McKay, T., Miller, K., & Tritz, J. (2012). What to do with actionable intelligence: E2Coach as an intervention engine. Paper presented at The 2nd International Conference on Learning Analytics and Knowledge. Vancouver, Canada.

Morris, L. V., Finnegan, C., & Wu, S. (2005). Tracking student behavior, persistence, and achievement in online courses. The Internet and Higher Education, 8(3), 221–231.

Ramsay, J. O., Hooker, G., & Graves, S. (2009). Functional data analysis with R and Matlab. Use R series. New York: Springer.

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Krumm, A.E., Waddington, R.J., Teasley, S.D., Lonn, S. (2014). A Learning Management System-Based Early Warning System for Academic Advising in Undergraduate Engineering. In: Larusson, J., White, B. (eds) Learning Analytics. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-3305-7_6

DOI: https://doi.org/10.1007/978-1-4614-3305-7_6

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-3304-0

Online ISBN: 978-1-4614-3305-7

eBook Packages: Humanities, Social Sciences and Law; Education (R0)