Abstract

The aim of this chapter is to discuss appropriate scaffolding for metacognitive reflection when learning with modern computer-based learning environments. Many researchers assume that prompting students for metacognitive reflection will affect the learning process by engaging students in more metacognitive behaviour, leading to better learning performance. After defining basic constructs and assumptions, an overview of research on prompting metacognitive and self-regulated learning skills during hypermedia learning is presented. On the basis of this overview, the design and effects of three kinds of metacognitive support (reflection prompts, metacognitive prompts, and training combined with metacognitive prompts) are presented and discussed. In three experiments with university students, the experimental groups were supported by one of these types of metacognitive prompts, whereas the control groups received no support. Analyses of learning processes and learning outcomes confirm positive effects of all three types of metacognitive prompts; however, their specific influence varies considerably. The results and their explanations are in line with recent theories of metacognition and self-regulated learning. At the end of the chapter, implications for the design of metacognitive support to improve hypermedia learning are discussed, and implications for investigating metacognitive skills during hypermedia learning are derived.


Introduction

Recent research in the field of self-regulated learning points out the crucial role of learners’ strategic and metacognitive behaviour (e.g. Boekaerts, Pintrich, & Zeidner, 2000; Schunk & Zimmerman, 1998; Winne, 1996, 2001). Accordingly, successful learning is not a matter of trial and error but rather requires performing a specific sequence of metacognitive activities (e.g. Schnotz, 1998). Ideally, successful students perform different metacognitive activities during learning. First, they analyse the situation before they start processing the information: they orient themselves by skimming the task description, instructions, and resources, specify the learning goals or even break them down into sub-goals, and plan the ongoing procedure. Based on this analysis, the student has to search for relevant information and, especially crucial for self-regulated hypermedia learning, judge whether the information found is really relevant for reaching the learning goals. The student then has to extract the information and elaborate on it. At the end of the learning activity, the student has to evaluate the learning product, again with respect to the learning goals. All of these activities are constantly monitored and controlled.

Research on self-regulated learning reveals that many learners have difficulty performing these metacognitive activities spontaneously, which most probably results in lower learning outcomes (Bannert, 2007; Zumbach & Bannert, 2006). Hence, the key issue of our research project is to develop effective metacognitive instruction, as also suggested by other researchers (e.g. Azevedo & Hadwin, 2005; Kramarski & Feldman, 2000). One promising form of instructional support seems to be the use of metacognitive prompts, since they should focus learners’ attention on their own thoughts and on understanding the activities in which they are engaged during the course of learning (Lin, 2001; Lin & Lehman, 1999). It is therefore assumed that prompting students to reflect upon their own way of learning will allow them to activate their repertoire of metacognitive knowledge and skills, which will in turn enhance hypermedia learning and transfer.

Approaches of Metacognition and Metacognitive Instruction

The obvious definition of metacognition is that of cognition about cognition, and the function of metacognition is to regulate one’s own cognition (Flavell, 1979). More precisely, Nelson and Narens (1992) divided cognition into an object level and a meta level. On the meta level, a learner builds a mental model of the object level. In multimedia research, we use the term mental model to describe analogous mental representations of external representations, such as a mental model of a machine (Schnotz & Bannert, 2003). In the case of metacognition, the mental model is built from the object level, that is, from the person’s own cognition. The processes leading to such a mental model of one’s own cognition are called monitoring, whereas processes that alter the cognition at the object level are called control. To sum up, metacognition is defined recursively as cognition about cognition. This implies a mental model of one’s own cognition (the meta level) that is acquired and altered through monitoring processes, whereas control processes alter the cognition at the object level.

In research on metacognition, a distinction has been made between metacognitive knowledge and metacognitive skills (e.g. Ertmer & Newby, 1996; Schraw, 2001). On the one hand, metacognitive knowledge refers to the individual’s declarative knowledge about learning strategies as well as person and task characteristics that are relevant for mastering a specific situation (Flavell & Wellman, 1977). On the other hand, metacognitive skills refer to the self-regulation activities that take place during learning and problem solving (Brown, 1978; Veenman, 2005). Some researchers add a third category named metacognitive experiences (Efklides, 2008; Flavell, 1979) or metacognitive judgments and monitoring (Pintrich, Wolters, & Baxter, 2000). Metacognitive feelings and judgments belong to this category: feelings of knowing, feelings of difficulty, judgments of knowing, judgments of learning, confidence judgments, etc. According to Efklides, these feelings and judgments trigger metacognitive skills; that is, a person feels or judges that there are problems in the learning process and thus begins to use his or her metacognitive skills. In this research, the focus lies on students’ metacognitive skills. Various processes are subsumed under metacognitive skills, such as goal setting, orientation, planning, strategy selection and use, monitoring the execution of strategies, checking, and reflection (e.g. Pintrich et al., 2000; Veenman, 2005). In this chapter, we present results concerning the prompt-based support of orientation, planning, reflection, evaluation, and the monitoring of strategies.

In general, metacognitive support aims to increase students’ learning competence by means of systematic instruction in order to significantly improve their learning performance. A review of current metacognitive training research (e.g. Schunk & Zimmerman, 1998; Veenman, Van Hout-Wolters, & Afflerbach, 2006; Weinstein, Husman, & Dierking, 2000) yields some general principles for effective metacognitive instruction:

- First of all, metacognitive instruction should be integrated into domain-specific instruction. Thus, metacognitive activities should not be taught separately as an end in themselves but embedded in the subject matter.

- Secondly, the application and usefulness of the instructed metacognitive strategies have to be explained; otherwise, students will not use them spontaneously.

- Last but not least, sufficient training time must be allotted in order to implement and automate the newly learned metacognitive activities.

Another distinction is made by Friedrich and Mandl (1992) with respect to the level of directness of instructional measures:

- Direct support teaches learning strategies explicitly to the students; that is, the strategies are explained and students practice using them. Direct metacognitive support is realised by metacognitive training, which focuses explicitly on teaching metacognitive skills and metacognitive knowledge (e.g. Hasselhorn, 1995).

- In contrast, indirect support measures are embedded into the learning environment; that is, the learning environment is designed to promote the use of certain strategies without explaining them explicitly. Indirect metacognitive support offers students adequate learning heuristics that are embedded into the learning environment but not explicitly taught. The students’ focus lies on acquiring knowledge in a learning domain and not on metacognitive knowledge and skills per se. The integrated learning heuristics, e.g. metacognitive prompts (Bannert, 2009), stimulate students to apply their metacognitive skills adequately.

The decision whether to design direct or indirect metacognitive instruction strongly depends on the student’s metacognitive competence. Whereas extensive training is necessary for students lacking metacognitive competence (the so-called mediation deficit; e.g. Hasselhorn, 1995), metacognitive prompts seem to be an adequate measure for students who already possess these skills but do not perform them spontaneously (the so-called production deficit). The target group of this research project is university students, who should already possess the metacognitive skills outlined above due to their extensive learning experience (Paris & Newman, 1990; Veenman et al., 2006). Hence, we assume that unsuccessful hypermedia learning in this target group is more a matter of a production deficit than of a mediation deficit. Nevertheless, this is an assumption made by us and the cited authors, not an empirical fact. However, if we can show that indirect support fosters learning without teaching the required skills explicitly, this would lend some support to our assumption. Under this assumption, it is reasonable to expect that indirect support combined with a short-term intervention fosters learning effectively without the need for more time-demanding direct support.

The aim of this research approach is to provide metacognitive support to improve self-regulated learning, especially when learning with hypermedia. Although metacognitive knowledge and skills are also needed when learning without new learning technologies, such technologies make students’ reflective behaviour about their own way of learning more salient (Azevedo, 2005, 2009; Lin, 2001; Lin, Hmelo, Kinzer, & Secules, 1999). For example, in a hypermedia learning environment, a successful learner continuously has to decide where to go next and constantly has to evaluate how the information retrieved relates to his or her current learning goal (Schnotz, 1998). Considering that many students have difficulties with strategic and metacognitive learning behaviour (e.g. Simons & De Jong, 1992), appropriate scaffolding for metacognitive reflection is particularly needed when learning with hypermedia.

Scaffolding Metacognitive Skills Through Prompts

We define prompts as recall and/or performance aids, which range from general questions (e.g. “what is your plan?”) to explicit execution instructions (e.g. “calculate first 2 + 2”; Bannert, 2009). They are all based on the central assumption that students already possess the relevant concepts and/or processes but do not recall or execute them spontaneously. Instructional prompts and instructional prompting are measures to induce and stimulate cognitive, metacognitive, motivational, volitional, and/or cooperative activities during learning (Bannert, 2009). They may stimulate the recall of concepts and procedures (e.g. by presenting the cognitive prompt “What are the basic concepts of Skinner’s operant learning theory?”), induce the execution of procedures, tactics, and techniques during learning (e.g. by offering the cognitive prompt “First, calculate the percentage of different countries, then compare.”), or induce the use of cognitive and metacognitive learning strategies (e.g. by presenting the metacognitive prompt “Is this in line with my learning goal?”) as well as strategies of resource management (e.g. with motivational prompts such as “What are the benefits?” or with group coordination prompts such as “Decide first who is the editor, the writer, the reviewer”).

Instructional prompts differ from prototypical instructional approaches since they do not teach new information but rather support the recall and execution of students’ knowledge and skills. They are often included as support measures in instruction aimed at knowledge acquisition. As illustrated by the few examples above, instructional prompts include explicit statements that students have to consider during learning and thus differ from worksheets (which lack such statements) or worked examples.

Paralleling the classification of learning strategies into cognitive learning strategies, metacognitive learning strategies, and resource management strategies (e.g. Weinstein & Mayer, 1986), instructional prompts are classified in this chapter as cognitive prompts if they directly support a student’s processing of information, for example by stimulating memorising/rehearsal, elaboration, organisation, and/or reduction of learning material (e.g. Nückles, Hübner, & Renkl, 2009). Metacognitive prompts are generally intended to support students’ monitoring and control of their information processing by inducing metacognitive and regulative activities, such as orientation, goal specification, planning, monitoring, and control as well as evaluation strategies (Bannert, 2007; Veenman, 1993). Prompts for resource management ask learners to ensure optimal learning conditions, such as having all necessary learning resources at their disposal or organising a well-functioning learning group. As our research does not address this issue, prompts for resource management are not considered in the following.

To sum up, metacognitive prompts are instructional measures integrated in the learning context that ask students to carry out specific metacognitive activities. In the study of Lin and Lehman (1999), students carrying out biology experiments in a computer simulation were prompted at certain times by a pop-up window to give reasons for their actions. For example, before they started an experiment, they first had to answer questions such as “What is your plan?” or “How did you decide that …?”, which stimulated them to perform planning and monitoring activities, major metacognitive skills as introduced above. All metacognitive prompts were explained, and their usage was trained, several weeks before the experiment was conducted. Lin and Lehman obtained significantly higher far-transfer performance for students learning with these prompts compared to students in the control group learning without prompts.

In the experiments of Veenman (1993), students were also prompted in a simulation environment to perform several metacognitive regulation activities, e.g. to reflect on the results in light of the predictions made before the experiment was conducted. These experiments also showed significant positive learning effects for high-ability learners.

Simons and De Jong (1992) carried out several studies in which students had to practice with certain learning questions, “Do I understand this part?” and “Is this in line with the learning goal?”, and learning techniques, e.g. reflection and self-testing. To sum up, they found these learning heuristics effective, especially for older and high-ability students with prior knowledge.

Stark and Krause (2009) investigated the impact of reflective prompts on learning in the domain of statistics using learning material with worked examples. Participants were allowed to decide for each learning task if they wanted to see the worked examples right away or after solving the learning task. Participants in the experimental group were prompted to justify each of their decisions whereas the controls were not prompted. Participants from the prompted group outperformed participants in the control group in solving complex tasks immediately after learning and in a follow-up test but they were not more successful in simple tasks.

Kauffman, Ge, Xie, and Chen (2008) combined cognitive prompts for problem solving with metacognitive prompts for reflection in a Web-based learning environment. Novice students were asked to solve two case studies about classroom management and to present their solutions in an e-mail message to a fictitious teacher of the class. Problem-solving prompts had an effect on the quality of the solutions and on the quality of writing, whereas prompts for reflection had an effect only for those participants who had previously received the problem-solving prompts. Thus, reflection prompts were effective if participants clearly understood what they were asked to reflect on, namely the problem-solving process that had been prompted before.

Stadtler and Bromme (2008) used metacognitive prompts during a search task about medical information in preselected Websites. Laypersons were prompted either to monitor their comprehension or to evaluate the source of the information or both. There were positive effects of monitoring prompts on knowledge about facts and small effects on comprehension. The monitoring prompts may have fostered the detection of comprehension failures and inconsistencies and thus enabled the laypersons to control and optimise their information processing. Evaluation prompts had an effect on the recalled information concerning the sources of the information, that is, participants were more aware of the quality of the information they collected during the task. The results support the conclusion that different metacognitive prompts trigger different learning behaviour.

The effect of prompting over time was investigated in a study by Sitzmann, Bell, Kraiger, and Kanar (2009), in which participants learned about a learning platform over ten sessions in an online learning environment. Participants were randomly assigned to three groups: continuous prompting, prompting in the last five sessions, and no prompting. Participants were prompted to monitor and evaluate their learning by short questions that had to be answered on a 5-point scale (e.g. “Are the study tactics I have been using effective for learning the training material?”). For the continuous prompting group, learning performance increased during the first four sessions and then levelled off, whereas for the group prompted in the last five sessions, learning performance increased after the prompts were introduced. By contrast, the learning performance of the controls declined over the ten sessions. The results were replicated in a second experiment with different learning material. Additionally, cognitive ability and specific self-efficacy moderated the prompting effects; that is, participants with high cognitive ability and high specific self-efficacy benefited more from the prompts than participants with lower cognitive ability or self-efficacy.

Sitzmann and Ely (2010) prompted their participants while they learned about Excel in an online course, using metacognitive and motivational/volitional prompts, e.g. “Do I understand all of the key points of the training material?” or “Am I focusing my mental effort on the training material?” Participants had to answer these questions on a 5-point scale. There were five conditions: prompts prior to the training, prompts in all four modules of the training, prompts in the first two modules, prompts in the last two modules, and no prompts. Results showed that participants who were prompted continuously had higher learning outcomes and less attrition. This effect was mediated by learning time but, surprisingly, not by strategy use as measured by a questionnaire. Instead, continuous prompting moderated the effect of learning performance on subsequent strategy use and attrition; that is, continuous prompts prevented learners with low learning performance from dropping out and from reducing their strategy use. Thus, continuously prompting learners may give them the feeling that they can control their learning, leading to less attrition and more strategy use in subsequent learning phases.

As illustrated by these prompting studies, metacognitive prompts require students to explicitly reflect on, monitor, and revise the learning process. They focus students’ attention on their own thoughts and on understanding the activities in which they are engaged during the course of learning. Hence, it is assumed that prompting students to plan, monitor, and evaluate their own way of learning will allow them to activate their repertoire of metacognitive knowledge and strategies, which will consequently enhance self-regulated learning and transfer.

Experimental Studies on Metacognitive Prompts

The prompting studies outlined above mainly investigate the effects of metacognitive prompts on learning performance by experimentally comparing metacognitive prompts vs. no prompts (e.g. Veenman, 1993), metacognitive vs. cognitive prompts (Kauffman et al., 2008; Nückles et al., 2009), or metacognitive vs. motivational prompts (e.g. Lin & Lehman, 1999; Sitzmann & Ely, 2010). So far, there is little research investigating the effects of different types of metacognitive prompts. Thus, our research question is whether different kinds of metacognitive support lead to different effects in hypermedia learning environments. In particular, we asked whether different kinds of metacognitive support influence the learning process by engaging students in different metacognitive behaviour and whether they increase learning performance.

Design and Effects of Different Types of Metacognitive Prompts

In this research project, the effects of a range of related types of metacognitive prompts were analysed experimentally using similar designs, procedures, and materials. In general, the metacognitive support provides prompts stimulating or even suggesting appropriate activities that students must follow before, during, and at the end of the learning session. No metacognitive help is offered in the control groups. Three experimental studies (outlined in Table 12.1) were conducted. In this chapter, only the main ideas, procedures, and results of the different studies are sketched in order to discuss the main findings with regard to the design and evaluation of effective metacognitive tools supporting self-regulated learning in computer-based learning environments (CBLEs).

Description of Specific Context, Sample, System, and Methods Used

- Study 1: Reflection Prompts. A total of 46 undergraduate university students majoring in Psychology and Education participated (mean age = 24.3; SD = 5.23; female: 84.8%). Participants were matched according to prior knowledge, metacognitive knowledge, and verbal intelligence and then randomly assigned to one of the two treatments. Students in the experimental group, the so-called reflection prompting group (n = 24), were prompted by the experimenter to state aloud their reasons for choosing each information node. At each navigation step, they had to complete the prompted statement “I am choosing this page because …”. Students’ reasons for node selection were, for example, “because to get an overview”, “because to make a plan”, or “because to monitor learning”, but also “because I just want to go back” or “because I don’t know where I am”. Students were completely free in how they completed the prompted statements. Students in the control group (n = 22) learned silently, i.e. without such reflection prompting.

- Study 2: Metacognitive Prompts. A total of 40 undergraduate university students majoring in Psychology and Education (mean age = 22.13, SD = 3.31; female: 67.5%) were matched on the same learner characteristics as in Study 1 and randomly assigned to the treatments. Students in the experimental group, the so-called metacognitive prompting group (n = 20), were prompted by pop-up windows to carry out metacognitive activities during learning. The metacognitive help was designed to initiate and support orientation, planning, and goal specification activities at the beginning of the learning phase, monitoring and regulation activities during learning, and evaluation activities at the end of learning. Before students started learning, the first prompt asked them to orient themselves, to specify the learning goals, and to make a plan. Fifteen minutes into learning, they were prompted to judge whether the information they were processing was really relevant. They were then prompted to monitor and regulate their learning, e.g. by responding to the prompt “Do I understand the section? Am I still on time?” About 7 min before the end of the learning phase, students were prompted to evaluate their learning outcome, for instance by checking whether the learning goals had been reached. No metacognitive support was offered to the control group (n = 20).

- Study 3: Training and Metacognitive Prompts. A total of 40 undergraduate university students majoring in different fields (mean age = 22.98, SD = 3.63; female: 72.5%) were matched on the same learner characteristics as in Study 1 and randomly assigned to the treatments. Students in the experimental group, the so-called training and metacognitive prompts group (n = 20), were prompted by pop-up windows to carry out metacognitive activities during learning. In contrast to Study 2, these metacognitive activities were explained in detail, demonstrated, and practiced during a short training period right before the learning session. No metacognitive support was offered to the control group (n = 20).
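The timing of the Study 2 prompts can be expressed as a simple schedule. The following Python sketch is a hypothetical illustration, not the software used in the studies: the names `Prompt` and `study2_schedule` are invented here, the prompt wording paraphrases the description above (only “Do I understand the section? Am I still on time?” is quoted), and `monitor_minute` is an assumed parameter because the chapter does not report when the monitoring prompt appeared.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    minute: float   # minutes after the start of the learning phase
    activity: str   # metacognitive activity the prompt targets
    text: str       # paraphrased prompt wording (hypothetical)


def study2_schedule(session_minutes: float, monitor_minute: float) -> list[Prompt]:
    """Hypothetical sketch of the Study 2 pop-up prompt timing.

    Reported in the text: a prompt before learning starts, a relevance
    prompt 15 min into learning, and an evaluation prompt about 7 min
    before the end. The monitoring prompt time is an assumption.
    """
    evaluation_minute = session_minutes - 7
    if not 15 < monitor_minute < evaluation_minute:
        raise ValueError("monitoring prompt must fall between the 15-min and evaluation prompts")
    return [
        Prompt(0, "orientation, goal specification, planning",
               "Orient yourself, specify your learning goals, and make a plan."),
        Prompt(15, "judging relevance",
               "Is the information I am processing really relevant to my goals?"),
        Prompt(monitor_minute, "monitoring and regulation",
               "Do I understand the section? Am I still on time?"),
        Prompt(evaluation_minute, "evaluation",
               "Have I reached my learning goals?"),
    ]


if __name__ == "__main__":
    # Assumed values: a 35-min session with the monitoring prompt at minute 22.
    for prompt in study2_schedule(35, 22):
        print(f"{prompt.minute:>5} min  {prompt.activity}: {prompt.text}")
```

The session length is left as a parameter because the chapter lists 30, 35, or 45 min across the studies without assigning a length to Study 2 here; anchoring the evaluation prompt to the session end mirrors the “about 7 min before the end” description.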

Procedure, Material, and Instruments

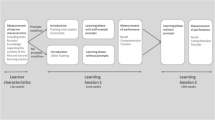

Figure 12.1 visualises the general procedure of all three experiments. About 1 week before each experiment, learner characteristics were obtained by questionnaires. Prior knowledge was measured by a self-developed multiple-choice test, metacognitive knowledge by a modified version of the LIST questionnaire (Wild, Schiefele, & Winteler, 1992; similar to the MSLQ, Pintrich, Smith, Garcia, & McKeachie, 1993), verbal intelligence by the IST 2000 (Amthauer, Brocke, Liepmann, & Beauducel, 1999), and achievement motivation and fear of failure by the LMT (Hermans, Petermann, & Zielinski, 1978).

All experiments began with an introduction phase during which students learned how to navigate the hypermedia program (see Fig. 12.2). Afterwards, students in the experimental group of Study 1 were introduced to the method of reflection prompts in a short training period lasting a total of 10 min. All students in Studies 2 and 3 were introduced to the method of reading and thinking aloud (Ericsson & Simon, 1993). For students in the experimental group of Study 3, metacognitive activities were explained in detail, demonstrated, and practiced in a short training period lasting a total of 20 min right before the learning session. To practice with the different kinds of metacognitive support, students in all studies had to carry out several search tasks within another topic of the learning environment.

Fig. 12.2 Screen capture of the hypermedia system. The learning content was arranged hierarchically, and participants had three ways to navigate through the program: (a) a hierarchical table of contents at the left of the screen, (b) a guided tour with buttons for the next and previous page, and (c) associatively via hotwords placed in the text

Following this, the learning session began. Students had to learn basic concepts of Learning Theories (Studies 1 and 2) or Motivational Psychology (Study 3) within a fixed time interval (30, 35, or 45 min). The experimental groups received metacognitive support as sketched above. Students in all treatment groups were completely free to navigate the hypermedia program during their learning sessions, which were videotaped. In Studies 2 and 3, students had to read and think aloud throughout the learning session.

Immediately after the learning session, learning outcomes were measured by questionnaires. Free recall was measured by counting the basic terms and concepts students wrote down on a blank sheet of paper. Knowledge was measured by a multiple-choice test of 19 or 22 items, each with 1 correct and 3 incorrect alternatives. Transfer was measured by asking students to apply the basic concepts and principles they had just learned to solve prototypical problems in educational settings. Answers to 8 items were rated using a self-developed rating scheme (interrater agreement: Kappa = 0.74). The experiments were conducted in individual sessions, which took about 1.5–2 h.

Comparisons of Different Types of Prompts

Metacognitive Activities During Learning

To test whether metacognitive support increased metacognitive behaviour during learning, the LIST metacognition scale, administered retrospectively, was analysed for Study 1 (see Table 12.2). For Studies 2 and 3, the video protocols were analysed to determine the quality with which students actually performed the different activities listed in Table 12.2. Here, analysis subsumes orientation, planning, and goal-setting activities, which mainly take place at the beginning of learning. Searching and judgement include strategic searching behaviour and judging the relevance of information with respect to the learning goals; evaluation refers to activities conducted to check whether the learning goals have been reached; and regulation refers to monitoring and controlling activities that took place during learning. Zero points were given when an activity was not performed at all during the learning session, 1 point when it was performed but inadequately, and 2 points when it was performed in optimal quality. For instance, in the category “analysis: goal setting activities”, a student received 2 points if she reflected on the learning goals, broke them down into adequate subgoals, and wrote them down. A student received 1 point if she/he reflected on the learning goals only superficially by just repeating the instruction, did not articulate subgoals, and did not write down any learning goals. Interrater agreement of two independent raters was Kappa = 0.83 (for economic reasons, Kappa was computed for a subset of 27 participants).
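Interrater agreement on the 0/1/2 rating scale was expressed as Cohen’s Kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e the agreement expected by chance from each rater’s marginal category frequencies. The following is a minimal Python sketch assuming the unweighted form of Kappa (the chapter does not say whether a weighted variant was used); the function name and the ratings are illustrative toy data, not data from the studies.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's Kappa for two raters scoring the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: share of cases given identical scores by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if p_expected == 1:
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Toy 0/1/2 activity ratings for six learners (illustrative only).
rater_1 = [0, 1, 2, 2, 1, 0]
rater_2 = [0, 1, 2, 1, 1, 0]
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.75
```

Because the rating categories are ordered, a weighted Kappa that penalises a 0-versus-2 disagreement more than a 1-versus-2 disagreement would also be defensible; the unweighted version treats all disagreements alike.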

As Table 12.2 shows, the experimental treatments differ only with respect to the online measures obtained in Studies 2 and 3, but not on the metacognition scale used in Study 1. In Studies 2 and 3, students learning with metacognitive support performed the metacognitive activities significantly better. The biggest effect was obtained for analysis activities (i.e. orientation, goal specification, planning): students in the experimental prompting groups showed more planning activities, whereas students in the control groups often failed to do so. All differences are significant (t-tests for independent groups), except for search and judgement in Study 2 and regulation in Study 3. Thus, students in the experimental groups had higher scores on those measures that they were explicitly instructed to perform. With respect to metacognitive training research, this result is far from trivial: students in experimental groups often fail to carry out the instructed metacognitive activities (see also the section on compliance below), and students in the (non-instructed) control groups could also have shown these metacognitive activities spontaneously (Bannert, 2005b).
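The group comparisons reported here rely on t-tests for independent groups. The sketch below assumes the classic pooled-variance (Student) form, since the chapter does not state whether equal variances were assumed, and additionally computes Cohen’s d as one common way to express the size of an effect such as the one for analysis activities. The function name and scores are hypothetical; the p-value is omitted to keep the example dependency-free (with SciPy installed, `scipy.stats.ttest_ind(group_1, group_2)` returns the same pooled-variance t statistic together with a p-value).

```python
import math
from statistics import mean, variance


def independent_t_test(group_1, group_2):
    """Pooled-variance t statistic, degrees of freedom, and Cohen's d."""
    n1, n2 = len(group_1), len(group_2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    # Pooled estimate of the common variance from the two sample variances (n - 1 denominators).
    pooled_var = ((n1 - 1) * variance(group_1) + (n2 - 1) * variance(group_2)) / df
    if pooled_var == 0:
        raise ValueError("t and d are undefined when both groups have zero variance")
    mean_diff = mean(group_1) - mean(group_2)
    t = mean_diff / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    d = mean_diff / math.sqrt(pooled_var)  # standardised mean difference
    return t, df, d


# Hypothetical 0-2 activity scores for a prompted and a control group.
prompted = [2, 2, 1, 2, 1]
control = [1, 0, 1, 0, 1]
t, df, d = independent_t_test(prompted, control)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # t(8) = 2.89, d = 1.83
```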

Learning Performance

Table 12.2 also presents the mean performance scores on the recall, knowledge, and transfer tasks for each study. As hypothesised, t-tests for independent groups revealed a significant effect on transfer tasks in Studies 1 and 3; however, no effects were obtained for recall and knowledge test performance in any of the studies. A similar result was found in the study by Lin and Lehman (1999) discussed above, which found a significant effect only for far-transfer, not near-transfer, tasks. Similarly, Stark and Krause (2009) obtained effects for complex but not for simple tasks. Lin and Lehman’s explanation is that solving far-transfer and complex tasks requires deeper understanding, and therefore these kinds of tasks are affected most by metacognitive activities. No significant transfer effect was obtained in Study 2. This unexpected result is considered in more detail with regard to compliance below.

Compliance and Learning Performance in the Experimental Prompting Groups

Since there is empirical evidence that students often do not use support tools offered in CBLEs as intended (e.g. Clarebout & Elen, 2006), we conducted the following analyses. The video protocols of the three experimental groups were analysed with respect to how well the learners complied with the metacognitive support and how well they performed the activity suggested by each prompt. On the basis of this analysis, two subgroups were distinguished within each experimental group: one containing the students who complied optimally with the metacognitive support, and one containing the remaining students, who either did not comply or complied but not in the intended way. Transfer performance was calculated separately for each subgroup. As can be seen in Table 12.3, the subgroups differed significantly in transfer performance depending on their compliance. Thus, offering metacognitive support is not sufficient; specific care has to be taken that these instructional prompts are carried out in the intended manner in order to increase learning outcomes.
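The compliance analysis described above amounts to splitting each prompted group by a coded compliance judgement and summarising transfer performance separately for each subgroup. The sketch below assumes each learner has already been coded from the video protocols as complying optimally or not; the `Learner` record, its field names, and the scores are hypothetical, and the actual coding scheme is not reproduced here. The two resulting score lists would then be compared with a t-test for independent groups.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Learner:
    """Hypothetical record for one prompted learner after video coding."""
    learner_id: str
    complied_optimally: bool  # assumed binary code taken from the video protocol
    transfer_score: float


def split_by_compliance(learners: list[Learner]) -> dict[str, list[float]]:
    """Partition transfer scores into 'optimal' and 'other' compliance subgroups."""
    groups: dict[str, list[float]] = {"optimal": [], "other": []}
    for learner in learners:
        key = "optimal" if learner.complied_optimally else "other"
        groups[key].append(learner.transfer_score)
    return groups


def compliance_rate(learners: list[Learner]) -> float:
    """Share of learners coded as complying optimally."""
    return sum(learner.complied_optimally for learner in learners) / len(learners)


def summarize(groups: dict[str, list[float]]) -> dict[str, tuple[int, float]]:
    """Return (n, mean transfer score) for every non-empty subgroup."""
    return {name: (len(scores), mean(scores)) for name, scores in groups.items() if scores}


# Hypothetical example data (not from the studies)
sample = [
    Learner("s01", True, 8.0),
    Learner("s02", False, 5.0),
    Learner("s03", True, 7.0),
    Learner("s04", False, 4.0),
    Learner("s05", False, 6.0),
]
print(compliance_rate(sample), summarize(split_by_compliance(sample)))
```

Keeping the compliance code as a separate field, rather than deriving it from the scores, mirrors the logic of the analysis: compliance is judged from the process data first, and only then is learning performance compared.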

But why did only about half of the students in the experimental groups, or even fewer, comply fully with the prompts? Presumably this is partly due to the rather short introduction; as the general principles for effective metacognitive instruction mentioned above suggest, extending the training time would probably have improved compliance with the metacognitive support. Looking for specific learner characteristics, we found no significant differences in intelligence or motivation between compliant and non-compliant students in any of the studies. In Study 2, prior knowledge was related to compliance: students who complied optimally had significantly higher prior knowledge. However, this prior knowledge effect did not occur in Study 1 and, more interestingly given the similar metacognitive prompting, it did not occur in Study 3 either. Thus, it seems that the metacognitive support realised in Study 2 requires a certain amount of prior knowledge in order to achieve better learning outcomes. We assume that these metacognitive prompts cause additional cognitive load, which could be partly compensated by sufficient prior knowledge, i.e. a flexible knowledge base (see Valcke, 2002, for a discussion of metacognitive load and prior knowledge). The more direct support in Study 3 (training + metacognitive prompting), however, seems to compensate for low prior knowledge. We assume that the short training based on Cognitive Apprenticeship principles, which familiarised students with the metacognitive prompts in advance, reduced the additional cognitive load during learning.

Discussion and Implications for Further Research

The results of our three experimental prompting studies are consistent with the assumptions derived from the recent research on metacognition sketched above. Participants in the experimental groups supported by metacognitive prompts performed more metacognitive activities during learning. They also showed better transfer performance (significantly so in Studies 1 and 3), especially if they complied with the offered support in the intended way.

But why were effects found only for transfer performance? We suggest that the metacognitive processes stimulated deep elaboration (cf. Craik & Lockhart, 1972), and that deep elaboration is a prerequisite for solving transfer problems. First, let us define transfer as the solution of a problem in a new situation that was not part of the learning material (cf. Detterman, 1993). For example, one of our transfer tasks described a situation in which the behaviour of children is reinforced by their parents. As there was no such situation in the learning material (rather, experiments with rats were described), the elements “behaviour of children” and “parents” were new to the learners. According to the theory of structure mapping and analogies (Gentner, 1983, 1998), transfer requires that relations between elements be applied to new elements. In our example, the relation between the behaviour of rats and the food, that is, the principle of reinforcement, has to be applied to the behaviour of children and the reinforcers given by the parents. One prerequisite for transfer is therefore a deep understanding of the relations between the elements in the learning material, rather than encoding the elements in isolation or associating them only loosely. According to our process data from Studies 2 and 3, analysis and evaluation of the learning content were stimulated by the prompts (see Table 12.2). This suggests that the prompts stimulated deeper processing of the learning content, so that more relations were encoded in long-term memory and transfer became more likely.

Design of Metacognitive Prompting Intervention

A comprehensive video analysis showed that only about half of the supported students dealt with the metacognitive support in an optimal manner. Consequently, it has to be investigated why students do not comply with the metacognitive support. Recent research has begun to address the question of why support devices are often ignored or inadequately used by students (e.g. Bannert, Hildebrand, & Mengelkamp, 2009; Clarebout & Elen, 2006).

To test the basic assumption of a production deficit in students, as mentioned above (Veenman, van Hout-Wolters, & Afflerbach, 2006; Winne, 1996), further investigation is needed into whether the prompts actually bring about the assumed quantitative and qualitative improvements in strategy use. In this context, researchers need to incorporate more in-depth process analysis procedures in their studies to determine how students actually deal with the presented prompts (Bannert, 2007b; Greene & Azevedo, 2010; Veenman, 2007). For future research, we suggest that descriptive studies based on multi-method assessment (e.g. log files, eye movements, thinking aloud, and error analysis) be conducted more often. Increasing the sample size of the treatment group provides more statistical power for post hoc analyses that compare students who comply optimally with the prompts, if such students are present, with students who fail to do so. Such comparisons would provide richer insights than experimental studies in which nothing is done to assess the strategies actually used during learning. Experiments that focus on outcomes and fail to include process analysis seldom directly answer questions such as whether, why, and with what quality and quantity the manipulated prompts are actually used by students. Absent effects on learning outcomes may also be explained by students’ spontaneous use of strategies in the control group or by undesired or unanticipated effects of the prompting conditions. In brief, we have to investigate further whether and how prompts actually intervene in students’ learning processes (Bannert, 2009).
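The point about statistical power can be made concrete with a rough calculation. As a sketch only, assuming two equally sized groups, a standardized effect size d, and the normal approximation to a two-sided two-sample test, power is approximately Φ(d·√(n/2) − z_crit), where n is the per-group sample size and z_crit is the standard normal quantile at 1 − α/2. The effect size and group sizes below are illustrative assumptions, not values from the studies; the idea is that splitting a treatment group into compliant and non-compliant students sharply reduces power, which larger samples can offset.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample test (normal approximation).

    Ignores the negligible chance of rejecting in the wrong tail and slightly
    overstates power for small groups compared with an exact t-test.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected value of the test statistic
    return NormalDist().cdf(shift - z_crit)


# Hypothetical: a medium effect (d = 0.5) in subgroups of various sizes
for n in (10, 20, 40, 64):
    print(n, round(approx_power(0.5, n), 2))
```

Under these assumptions, power for d = 0.5 rises from roughly 0.2 with 10 students per subgroup to about 0.8 with 64 per subgroup.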

In this study, some students reported after learning that they felt restricted in their own way of learning when they had to carry out the activities requested by the metacognitive support. These interventions probably require additional cognitive capacity, which may also be true for tool use in general (e.g. Calvi & De Bra, 1997). We assume that metacognitive prompts cause additional cognitive load, which could be partly compensated by sufficient prior knowledge, i.e. a flexible knowledge base (Sweller, van Merrienboer, & Paas, 1998; Valcke, 2002).

A lack of appropriate responses to prompts may be explained by students’ individual characteristics. Perhaps students’ prior knowledge was too low, so that they were overloaded by the additional prompts. Or perhaps students’ prior knowledge was quite high, so that they did not require any strategy support at all. Students’ metacognitive knowledge and skills could also have affected whether they used the prompts adequately. Motivational aspects of learning, in interaction with cognitive and metacognitive variables, are another important consideration when evaluating the impact of prompts. In this context, care needs to be taken to ascertain whether students are really convinced that complying with the prompts will improve their learning; otherwise they will not use them (Bannert, 2007b; Veenman et al., 2006). Moreover, although sufficient training in strategy use is usually provided in advance, students rarely receive feedback on whether their strategy use is adequate, which may reduce how often they use the prompts and may also impair the quality of their prompted strategy use. This assumption is partly supported by the work of Roll, Aleven, McLaren, and Koedinger (2007), who provided immediate feedback on students’ help-seeking behaviour in an intelligent tutoring system. This led to a lasting improvement in students’ help-seeking behaviour and to transfer of this behaviour across different learning domains (Roll, Aleven, McLaren, & Koedinger, 2011), though not to improved domain learning.

This research focused on instructional measures with very short interventions. In further studies we will investigate whether extending the training time improves compliance with the metacognitive support (e.g. Bannert et al., 2009). In addition, other kinds of metacognitive support, such as adaptive metacognitive prompting by means of pedagogical agents (e.g. Azevedo & Witherspoon, 2009), will be developed and evaluated experimentally.

Moreover, it has to be pointed out that the hypermedia system was well structured. It included a guided tour, a hierarchical navigation menu, an advance organiser, a summary, and a glossary. Additionally, it was a so-called closed environment, that is, it did not contain links to external nodes. Furthermore, the learning tasks already included specific learning goals, so that the learning scenarios were generally not very complex. We expect that greater effects would be obtained in more complex, open-ended environments.

Analytical Techniques and Methodological Approaches

Metacognitive support such as prompts during hypermedia learning can lead to better learning outcomes (in particular, transfer), but there is a considerable amount of non-compliance, suggesting that this kind of support might be even more successful if care is taken that the instructional prompts are carried out in the intended manner. This may be one major reason why metacognitive instruction often has no positive effects on learning outcomes (e.g. Graesser, Wiley, Goldman, O’Reilly, Jeon, & McDaniel, 2007; Manlove, Lazonder, & De Jong, 2007). Future research should also check whether students who were instructed and trained to apply metacognitive strategies actually apply them in the transfer session.

Finally, it has to be pointed out that without process analysis (using thinking-aloud methods) a different picture would have emerged. In Studies 2 and 3 we asked students to judge their strategic learning activities retrospectively by means of a questionnaire. In accordance with Veenman’s review (Veenman, 2005), there was no significant correlation between the questionnaire scales and the activities coded from the video analysis. Moreover, no significant correlation was obtained between the questionnaire and learning performance. Even though it is rather time-consuming, process analysis needs to be included in further research on metacognitive support and self-regulated learning (Bannert & Mengelkamp, 2007; Hofer, 2004; Veenman, 2007).
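The comparison of questionnaire scales with video-coded activities rests on correlation coefficients. A minimal sketch follows, assuming Pearson’s product-moment correlation (the chapter does not name the coefficient) and the usual test statistic t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom for testing r against zero. The function names and the example scores are hypothetical.

```python
from math import copysign, inf, sqrt
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists with at least two entries")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing the null hypothesis rho = 0."""
    if abs(r) >= 1:
        return copysign(inf, r)  # perfect correlation: statistic is unbounded
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical scores: questionnaire planning scale vs. video-coded planning events
questionnaire = [1, 2, 3, 4]
video_coded = [2, 1, 4, 3]
r = pearson_r(questionnaire, video_coded)
print(r, r_to_t(r, len(questionnaire)))
```

Computing the coefficient separately for each pair of measures (questionnaire vs. video codes, questionnaire vs. performance) corresponds to the two comparisons reported in the text.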

Prompting research is progressing rapidly. Overall, we argue that future research should conduct more in-depth process analyses that incorporate multi-method assessments and, besides cognitive and metacognitive aspects, account for individual learner characteristics such as motivation and volition. At present, prompting research needs more insight into how students actually deal with learning prompts in order to design more individualised support and thereby offer more effective types of prompts to learners.

References

Amthauer, R., Brocke, B., Liepmann, D., & Beauducel, A. (1999). IST 2000—Intelligenz-Struktur-Test 2000. Göttingen: Hogrefe.

Azevedo, R. (2005). Using hypermedia as a metacognitive tool for enhancing student learning? The role of self-regulated learning. Educational Psychologist, 40, 199–209.

Azevedo, R. (2009). Theoretical, conceptual, methodological, and instructional issues in research on metacognition and self-regulated learning: A discussion. Metacognition and Learning, 4, 87–95.

Azevedo, R., & Hadwin, A. F. (2005). Scaffolding self-regulated learning and metacognition: Implications for the design of computer-based scaffolds. Instructional Science, 33, 367–379. Special Issue on Scaffolding Self-Regulated Learning and Metacognition: Implications for the Design of Computer-Based Scaffolds.

Azevedo, R., & Witherspoon, A. M. (2009). Self-regulated learning with hypermedia. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 319–339). Mahwah, NJ: Routledge.

Bannert, M. (2003). Effekte metakognitiver Lernhilfen auf den Wissenserwerb in vernetzten Lernumgebungen [Effects of metacognitive help devices on knowledge acquisition in networked learning environments]. Zeitschrift für Pädagogische Psychologie, 17(1), 13–25.

Bannert, M. (2005a). Designing metacognitive support for hypermedia learning. In T. Okamoto, D. Albert, T. Honda, & F. W. Hesse (Eds.), The 2nd joint workshop of cognition and learning through media-communication for advanced e-learning (pp. 11–16). Tokyo, Japan: Sophia University.

Bannert, M. (2005b). Explorationsstudie zum spontanen metakognitiven Strategie-Einsatz in hypermedialen Lernumgebungen [An exploratory study on the spontaneous use of metacognitive strategies in hypermedia learning environments]. In C. Artelt & B. Moschner (Eds.), Lernstrategien und Metakognition: Implikationen für Forschung und Praxis (pp. 127–151). Münster: Waxmann.

Bannert, M. (2006). Effects of reflection prompts when learning with hypermedia. Journal of Educational Computing Research, 35(4), 359–375.

Bannert, M. (2007). Metakognition beim Lernen mit Hypermedien [Metacognition and hypermedia learning]. Münster: Waxmann.

Bannert, M. (2007b). Metakognition beim Lernen mit Hypermedien: Erfassung, Beschreibung und Vermittlung wirksamer metakognitiver Lernstrategien und Regulationsaktivitäten [Metacognition and learning with hypermedia]. Münster: Waxmann.

Bannert, M. (2009). Promoting self-regulated learning through prompts: A discussion. Zeitschrift für Pädagogische Psychologie, 23, 139–145.

Bannert, M., Hildebrand, M., & Mengelkamp, C. (2009). Effects of a metacognitive support device in learning environments. Computers in Human Behavior, 25, 829–835.

Bannert, M., & Mengelkamp, C. (2007). Assessment of metacognitive skills by means of thinking-aloud instruction and reflection prompts. Does the method affect the learning performance? Metacognition and Learning, 3, 39–58.

Boekaerts, M., Pintrich, P. R., & Zeidner, M. (Eds.). (2000). Handbook of self-regulation. San Diego, CA: Academic.

Brown, A. L. (1978). Knowing when, where, and how to remember: A problem of metacognition. In R. Glaser (Ed.), Advances in instructional psychology (pp. 77–165). Hillsdale, NJ: Erlbaum.

Calvi, L., & De Bra, P. (1997). Proficiency-adapted information browsing and filtering in hypermedia educational systems. User Modeling and User-Adapted Interaction, 7, 257–277.

Clarebout, G., & Elen, J. (2006). Tool use in computer-based learning environments: Towards a research framework. Computers in Human Behavior, 22, 389–411.

Craik, F. I. M., & Lockhart, R. S. (1972). Levels of processing: A framework for memory research. Journal of Verbal Learning and Verbal Behavior, 11, 671–684.

Detterman, D. K. (1993). The case for the prosecution: Transfer as an epiphenomenon. In D. K. Detterman & R. J. Sternberg (Eds.), Transfer on trial: Intelligence, cognition, and instruction (pp. 1–24). Norwood, NJ: Ablex.

Efklides, A. (2008). Metacognition. Defining its facets and levels of functioning in relation to self-regulation and co-regulation. European Psychologist, 13(4), 277–287. doi:10.1027/1016-9040.13.4.277.

Ericsson, K. A., & Simon, H. A. (1993). Protocol analysis: Verbal reports as data. Cambridge: MIT Press.

Ertmer, P. A., & Newby, T. J. (1996). The expert learner: Strategic, self-regulated, and reflective. Instructional Science, 24, 1–24.

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. American Psychologist, 34, 906–911.

Flavell, J. H., & Wellman, H. M. (1977). Metamemory. In R. Kail & W. Hagen (Eds.), Perspectives on development of memory and cognition (pp. 3–31). Hillsdale, NJ: Erlbaum.

Friedrich, H. F., & Mandl, H. (1992). In H. Mandl & H. F. Friedrich (Eds.), Lern- und Denkstrategien. Analyse und Intervention (pp. 3–54). Göttingen: Hogrefe.

Gentner, D. (1983). Structure-mapping: A theoretical framework for analogy. Cognitive Science: A Multidisciplinary Journal, 7, 155–170.

Gentner, D. (1998). Analogy. In W. Bechtel & G. Graham (Eds.), A companion to cognitive science (pp. 107–113). Oxford: Blackwell.

Graesser, A. C., Wiley, J., Goldman, S. R., O’Reilly, T., Jeon, M., & McDaniel, B. (2007). SEEK Web Tutor: Fostering a critical stance while exploring the causes of volcanic eruption. Metacognition and Learning, 2, 89–105.

Greene, J. A., & Azevedo, R. (2010). The measurement of learners’ self-regulated cognitive and metacognitive processes while using computer-based learning environments. Educational Psychologist, 45(4), 203–209.

Hasselhorn, M. (1995). Kognitives Training: Grundlagen, Begrifflichkeiten und Desiderate. In W. Hager (Ed.), Programme zur Förderung des Denkens bei Kindern (pp. 14–40). Göttingen: Hogrefe.

Hermans, H., Petermann, F., & Zielinski, W. (1978). LMT—Leistungsmotivationstest. Amsterdam: Swets & Zeitlinger.

Hofer, B. (2004). Epistemological understanding as a metacognitive process: Thinking aloud during online searching. Educational Psychologist, 39, 43–55.

Kauffman, D. F., Ge, X., Xie, K., & Chen, C.-H. (2008). Prompting in web-based environments: Supporting self-monitoring and problem solving skills in college students. Journal of Educational Computing Research, 38, 115–137. doi:10.2190/EC.38.2.a.

Kramarski, B., & Feldman, Y. (2000). Internet in the classroom: Effects on reading comprehension, motivation and metacognitive awareness. Educational Media International, 37(3), 149–155.

Lin, X. (2001). Designing metacognitive activities. Educational Technology Research and Development, 49(2), 23–40.

Lin, X., Hmelo, C., Kinzer, C. K., & Secules, T. (1999). Designing technology to support reflection. Educational Technology Research and Development, 47(3), 43–62.

Lin, X., & Lehman, J. D. (1999). Supporting learning of variable control in a computer-based biology environment: Effects of prompting college students to reflect on their own thinking. Journal of Research in Science Teaching, 36(7), 837–858.

Manlove, S., Lazonder, A. W., & De Jong, T. (2007). Software scaffolds to promote regulation during scientific inquiry learning. Metacognition and Learning, 2, 141–155.

Nelson, T. O., & Narens, L. (1990). Metamemory: A theoretical framework and new findings. In G. Bower (Ed.), The psychology of learning and motivation (Vol. 26, pp. 125–173). New York: Academic.

Nückles, M., Hübner, S., & Renkl, A. (2009). Enhancing self-regulated learning by writing learning protocols. Learning and Instruction, 19, 259–271.

Paris, S. G., & Newman, R. S. (1990). Developmental aspects of self-regulated learning. Educational Psychologist, 25, 87–102.

Pintrich, P. R., Smith, D. A. F., Garcia, T., & McKeachie, W. J. (1993). Reliability and predictive validity of the motivated strategies for learning questionnaire (MSLQ). Educational and Psychological Measurement, 53, 801–814.

Pintrich, P. R., Wolters, C. A., & Baxter, G. P. (2000). Assessing metacognition and self-regulated learning. In G. Schraw & J. C. Impara (Eds.), Issues in the measurement of metacognition (pp. 43–97). Lincoln, NE: Buros Institute of Mental Measurements.

Roll, I., Aleven, V., McLaren, B., & Koedinger, K. (2007). Designing for metacognition—applying Cognitive Tutor principles to metacognitive tutoring. Metacognition and Learning, 2(2–3), 125–140.

Roll, I., Aleven, V., McLaren, B. M., & Koedinger, K. R. (2011). Improving students’ help-seeking skills using metacognitive feedback in an intelligent tutoring system. Learning and Instruction, 21, 267–280. doi:10.1016/j.learninstruc.2010.07.004.

Schnotz, W. (1998). Strategy-specific information access in knowledge acquisition from hypertext. In L. B. Resnick, R. Säljö, C. Pontecorvo, & B. Burge (Eds.), Discourse, tools, and reasoning. Essays on situated cognition. Berlin: Springer.

Schnotz, W., & Bannert, M. (2003). Construction and interference in learning from multiple representation. Learning and Instruction, 13, 141–156.

Schraw, G. (2001). Promoting general metacognitive awareness. In H. Hartman (Ed.), Metacognition in learning and instruction. Theory, research and practice (pp. 3–16). Dordrecht: Kluwer Academic Publishers.

Schunk, D. H., & Zimmerman, B. J. (Eds.). (1998). Self-regulated learning. From teaching to self-reflective practice. New York, NY: Guilford.

Simons, P. R. J., & De Jong, F. P. (1992). Self-regulation and computer-assisted instruction. Applied Psychology: An International Review, 41, 333–346.

Sitzmann, T., Bell, B. S., Kraiger, K., & Kanar, A. M. (2009). A multilevel analysis of the effect of prompting self-regulation in technology-delivered instruction. Personnel Psychology, 62, 697–734. doi:10.1111/j.1744-6570.2009.01155.x.

Sitzmann, T., & Ely, K. (2010). Sometimes you need a reminder: The effects of prompting self-regulation on regulatory processes, learning, and attrition. Journal of Applied Psychology, 95, 132–144.

Stadtler, M., & Bromme, R. (2008). Effects of the metacognitive computer-tool met.a.ware on the web search of laypersons. Computers in Human Behavior, 24, 716–737.

Stark, R., & Krause, U.-M. (2009). Effects of reflection prompts on learning outcomes and learning behaviour in statistics education. Learning Environments Research, 12, 209–223.

Sweller, J., van Merrienboer, J., & Paas, F. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296.

Valcke, M. (2002). Cognitive load: updating the theory? Learning and Instruction, 12, 147–154.

Veenman, M. V. J. (1993). Metacognitive ability and metacognitive skill: Determinants of discovery learning in computerized learning environments. Amsterdam: University of Amsterdam.

Veenman, M. V. J. (2005). The assessment of metacognitive skills: What can be learned from multi-method designs? In C. Artelt & B. Moschner (Eds.), Lernstrategien und Metakognition: Implikationen für Forschung und Praxis [Learning strategies and metacognition: Implications for research and practice]. Münster: Waxmann.

Veenman, M. V. J. (2007). The assessment and instruction of self-regulation in computer-based environments: a discussion. Metacognition and Learning, 2, 177–183.

Veenman, M. V. J., Van Hout-Wolters, B., & Afflerbach, P. (2006). Metacognition and learning: Conceptual and methodological considerations. Metacognition and Learning, 1, 3–14.

Weinstein, C. E., Husman, J., & Dierking, D. R. (2000). Self-regulation interventions with a focus on learning strategies. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 727–747). San Diego, CA: Academic.

Weinstein, C. E., & Mayer, R. E. (1986). The teaching of learning strategies. In M. C. Wittrock (Ed.), Handbook of research on teaching (pp. 315–327). New York: Macmillan.

Wild, K. P., Schiefele, U., & Winteler, A. (1992). LIST. Ein Verfahren zur Erfassung von Lernstrategien im Studium (Gelbe Reihe: Arbeiten zur Empirischen Pädagogik und Pädagogischen Psychologie, Nr. 20) [LIST. A questionnaire of learning strategies in university students]. Neubiberg: Universität der Bundeswehr, Institut für Erziehungswissenschaft und Pädagogische Psychologie.

Winne, P. H. (1996). A metacognitive view of individual differences in self-regulated learning. Learning and Individual Differences, 8, 327–353.

Winne, P. H. (2001). Self-regulated learning viewed from models of information processing. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated learning and academic achievement: Theoretical perspectives (2nd ed., pp. 153–189). Mahwah, NJ: Lawrence Erlbaum Associates.

Zumbach, J., & Bannert, M. (2006). Special Issue: Scaffolding cognitive learner control mechanisms in individual and collaborative learning environments. Journal of Educational Computing Research, 35(4).

Acknowledgement

This research was supported by funds from the German Science Foundation (DFG: BA 2044/1-1, BA 2044/5-1).

Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Bannert, M., Mengelkamp, C. (2013). Scaffolding Hypermedia Learning Through Metacognitive Prompts. In: Azevedo, R., Aleven, V. (eds) International Handbook of Metacognition and Learning Technologies. Springer International Handbooks of Education, vol 28. Springer, New York, NY. https://doi.org/10.1007/978-1-4419-5546-3_12

DOI: https://doi.org/10.1007/978-1-4419-5546-3_12

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4419-5545-6

Online ISBN: 978-1-4419-5546-3

eBook Packages: Humanities, Social Sciences and Law; Education (R0)