Abstract

This chapter reviews various factors that affect the speech-understanding abilities of older adults. Before proceeding to the identification of several such factors, however, it is important to clearly define what is meant by “speech understanding.” This term is used to refer to either the open-set recognition or the closed-set identification of nonsense syllables, words, or sentences by human listeners. Many years ago, Miller et al. (1951) demonstrated that the distinction between open-set recognition and closed-set identification blurs as the set size for closed-set identification increases. When words were used as the speech material, Miller et al. (1951) demonstrated that the closed-set speech-identification performance of young normal-hearing listeners progressively approached that of open-set speech recognition as the set size doubled in successive steps from 2 to 256 words. Clopper et al. (2006) have also demonstrated that lexical factors (e.g., word frequency and acoustic-phonetic similarity) impacting word identification and word recognition are very similar when the set size is reasonably large for the closed-set identification task and the alternatives in the response are reasonably confusable with the stimulus item. Thus the processes of closed-set speech identification and open-set speech recognition are considered to be very similar and both are referred to here as measures of “speech understanding.”

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

8.1 Introduction

This chapter reviews various factors that affect the speech-understanding abilities of older adults. Before proceeding to the identification of several such factors, however, it is important to clearly define what is meant by “speech understanding.” This term is used to refer to either the open-set recognition or the closed-set identification of nonsense syllables, words, or sentences by human listeners. Many years ago, Miller et al. (1951) demonstrated that the distinction between open-set recognition and closed-set identification blurs as the set size for closed-set identification increases. When words were used as the speech material, Miller et al. (1951) demonstrated that the closed-set speech-identification performance of young normal-hearing listeners progressively approached that of open-set speech recognition as the set size doubled in successive steps from 2 to 256 words. Clopper et al. (2006) have also demonstrated that lexical factors (e.g., word frequency and acoustic-phonetic similarity) impacting word identification and word recognition are very similar when the set size is reasonably large for the closed-set identification task and the alternatives in the response are reasonably confusable with the stimulus item. Thus the processes of closed-set speech identification and open-set speech recognition are considered to be very similar and both are referred to here as measures of “speech understanding.”

Additional insight into what is meant by “speech understanding” in this chapter can be gained by considering some of the things that it is not. For example, considerable speech-perception research has been conducted over the past half century in both young and old adults in which the discrimination of minimally contrasting speech sounds, often consonant-vowel or vowel-consonant syllables, has been measured. To illustrate the differences between speech recognition, speech identification, and speech-sound discrimination as used here, consider the following three tasks using the same consonant-vowel syllable, /ba/, as the speech stimulus. In open-set speech recognition, the listener is asked to say or write down the syllable that was heard after the /ba/ stimulus was presented. The range of alternative responses is restricted only by the experimenter’s instructions or the listener’s imagination. In closed-set speech identification, the same /ba/ stimulus is presented, but the listener is given a list of possible answers, such as /ba/, /pa/, /ta/ and /da/, only one of which corresponds to the stimulus that was presented. Finally, in minimal contrast speech-sound discrimination, some acoustic characteristic of the /ba/ stimulus is altered systematically, with the altered and one or more unaltered /ba/ stimuli presented in random sequence. The listener simply indicates whether the stimuli presented in the sequence were the same or different or may be asked to select the “different” stimulus from those presented in the sequence. In the discrimination task, the listener is never asked to indicate the sound(s) heard, only whether they were the same or different. As a result, this task is considered to be conceptually distinct from the processes of speech recognition and speech identification, and we do not consider this form of speech-sound discrimination to be a part of “speech understanding” as used here.

Finally, although definitions of the terms “understanding” and “comprehension” may be similar, speech comprehension is not used interchangeably with speech understanding in this review. Most often, comprehension is assessed with phrases or sentences and involves the deciphering of the talker’s intended meaning behind the spoken message. For example, consider the following spoken message: “What day of the week follows Thursday?” An open-set recognition task making use of this question might ask the listener to repeat the entire sentence and a speech-recognition score could be determined by counting the number of words correctly repeated (or the entire sentence could be scored as correct or incorrect). A closed-set identification task making use of this same speech stimulus might have seven columns of words (one for each word in the sentence), with four alternative words in each column, and the listener would be required to select the correct word from each of the seven columns. Or, for sentence-based scoring, four alternative sentences could be displayed orthographically from which the listener must select the one matching the sentence heard. For a comprehension task, either an open-set or closed-set version can be created. In the open-set version, the listener simply answers the question and a correct answer (“Friday,” in this example) implies correct comprehension. Likewise, four days of the week could be listed as response alternatives and the listener asked to select the correct response to the question (“Friday”) from among the alternatives. Comprehension is a higher-level process than either speech recognition or speech identification. Whereas it is unlikely that accurate comprehension can occur without accurate recognition or identification (e.g., imagine that “Tuesday” was perceived by the listener instead of “Thursday” in this example), accurate recognition or identification does not guarantee accurate comprehension.

In summary, the term “speech understanding” is used here to refer to the task of either open-set speech recognition or closed-set speech identification. It does not include tasks of minimal-contrast speech-sound discrimination or speech comprehension. (The latter topic is treated by Schneider, Pichora-Fuller, and Daneman, Chapter 7).

8.2 Peripheral, Central-Auditory, and Cognitive Factors

As noted in earlier chapters, as humans age, several changes may take place in their auditory systems that could have a negative impact on speech understanding. At the periphery, although age-related changes may occur in the outer and middle ears, these are largely inconsequential for everyday speech communication by older adults. On the other hand, age-related hearing loss (presbycusis) occurs in ∼30% of persons over the age of 60 (see Cruickshanks, Zhan, and Zhong, Chapter 9), which has serious consequences for speech understanding for most individuals. For example, functional deficits that accompany age-related hearing loss (elevated thresholds, reduced cochlear compression, broader tuning) can result in reduced speech understanding. These are related to anatomic and physiological changes in the auditory periphery, primarily affecting the cochlea and auditory nerve of older adults (reviewed in detail by Schmiedt, Chapter 2).

As noted by Canlon, Illing, and Walton, (Chapter 3), age-related changes can also occur at higher centers of the auditory portion of the central nervous system. These modality-specific deficits can, in turn, have a negative impact on speech understanding in older listeners. Moreover, as noted by Schneider, Pichora-Fuller, and Daneman (Chapter 7), there may be amodal changes in the cortex of older listeners that impact cognitive functions, such as speed of processing, working memory, and attention. These changes alone may result in reduced speech understanding in older listeners. However, age-related changes to the auditory periphery also degrade the speech signal delivered to the central nervous system for cognitive and linguistic processing so that the speech understanding problems of older listeners may represent the combined effects of peripheral, central-auditory, and cognitive factors.

In fact, prior reviews of the speech-understanding problems of older adults have framed their reviews around a similar “site of lesion” framework, with peripheral (primarily cochlear), central-auditory, and cognitive “sites” hypothesized as the primary contributors (Committee on Hearing, Bioacoustics, and Biomechanics [CHABA] 1988; Humes 1996). Although there is probably little disagreement among researchers as to the extent of speech-understanding difficulties experienced by older adults, the challenge has been in identifying the nature of the underlying cause(s) of these difficulties.

Of the peripheral, central-auditory, and cognitive explanations of age-related speech-understanding declines, the peripheral explanation in its simplest form (elevated hearing thresholds) is probably the most straightforward. In addition, given that the prevalence of hearing loss in older adults is greater than either central-auditory deficits (Cooper and Gates 1991) or mild cognitive impairment (Lopez et al. 2003; Portet et al. 2006), peripheral hearing loss is the most likely explanatory factor of the speech-understanding problems observed in older adults. If the peripheral explanation fails to account for the measured speech-understanding performance of older adults, then alternative and more complex mechanisms can be considered further.

8.2.1 Peripheral Factors

When evaluating the extent to which hearing loss of cochlear origin accounts for speech-understanding problems in older adults, several approaches have been pursued. Some of the more commonly pursued approaches are reviewed here.

8.2.1.1 Articulation Index Framework

One of the most powerful approaches used to explain the average speech-understanding performance of human listeners is the Articulation Index (AI) framework championed by Harvey Fletcher and his colleagues at Bell Labs in the 1940s and 1950s (French and Steinberg 1947; Fletcher and Galt 1950; Fletcher 1953). There have been many variations of the AI framework developed in the intervening 50 to 60 years. Although too numerous to review in detail here, notable variations include the first American National Standard of the AI (ANSI 1969); the Speech Transmission Index, which used the modulation transfer function to quantify the signal-to-noise ratio (SNR; Steeneken and Houtgast 1980; Houtgast and Steeneken 1985); and the most recent version of the American National Standard (ANSI 1997), in which the index was modified and renamed the Speech Intelligibility Index. Because there is more in common among these various indices than differences, the general expression “AI framework” will be used throughout this review to refer to any one of these indices.

Regardless of the specific version of the AI framework, the key conceptual components are very similar across versions. Central to this framework, for example, is the long-term average spectrum for speech, illustrated by the line labeled “rms” in Figure 8.1. This idealized spectrum represents the root-mean-square (rms) amplitude at each frequency measured using a 125-ms rectangular window and averaged over many seconds of running speech for a large number of male and female talkers. If this framework is to be used to estimate or predict speech-understanding performance of groups of individuals listening to specific speech stimuli, the long-term average spectrum of the actual speech materials spoken by the talker(s) used in the measurements should be substituted for the generic version shown in Figure 8.1. For comparison purposes, the average hearing thresholds for young normal-hearing (YNH) adults have also been provided in Figure 8.1

Root-mean-square (rms) long-term average speech spectrum for normal vocal effort from ANSI (1997) and the 30-dB range of speech amplitudes, 15 dB above and below the rms spectrum, which contribute to speech understanding according to the Articulation Index framework. For comparison, average hearing thresholds for young normal-hearing (YNH) adults along the same coordinates are shown.

A second critical concept in the AI framework is the identification of the range of speech sound levels above and below the rms long-term average spectrum that contribute to intelligibility. One way in which this range has been established has been strictly from acoustical measurements of the distribution of speech levels measured with the 125-ms window during running speech. The acoustical range is then described in terms of the 10th and 90th, 5th and 95th, or 1st and 99th percentiles from these speech-level distributions. Depending on the definition of speech peaks and speech minima used, the ranges derived have varied typically between 30 and 36 dB, with the speech peaks represented in an idealized fashion at either 12 or 15 dB above the long-term average spectrum (French and Steinberg 1947; Fletcher and Galt 1950; Pavlovic 1984). In the current American National Standard (ANSI 1997), a 30-dB range from 15 dB to −15 dB re the long-term average speech spectrum has been adopted, and this is illustrated in Figure 8.1. The basic concept behind the AI framework and its successors is that if the entire 30-dB range of speech intensities is audible and undistorted (i.e., heard and useable by the listener) from ∼100 to 8,000 Hz, then speech understanding will be optimal (typically >95% for nonsense syllables). As the area represented by this 30-dB range from 100 to 8,000 Hz, referred to here as the “speech area,” is reduced or distorted (meaning that portions of the speech are not heard or useable), then the AI decreases, which predicts a decrease in speech understanding. There are many ways in which the speech area can be diminished, including filtering, masking noise, and hearing loss. The power of this approach is that these multiple forms of degradation can be combined and reduced to a single metric, the AI, which is proportional to speech understanding regardless of the specific speech stimuli or the specific form of degradation or combination of degradations.

Before considering two other concepts central to the AI framework, perhaps an example of its application would be useful here. Imagine spectrally shaping a steady-state random noise so that its rms long-term average spectrum matches that of the speech stimulus. Given the matching of the long-term spectra, the adjustment of the overall level of the speech or noise to produce a specific SNR results in an identical change in SNR across the entire spectrum (i.e., the SNR is the same at each frequency). When using nonsense syllables as stimuli to minimize the contributions of higher-level processing, it has generally been found that speech-understanding performance increases above 0% at a SNR of −15 dB and increases linearly with increases in SNR until reaching an asymptote at 15 dB (Fletcher and Galt 1950; Pavlovic 1984; Kamm et al. 1985). SNRs of 10, 0 and −10 dB are illustrated in Figure 8.2, along with the AI values for these conditions (ANSI 1997). In this special case of identical long-term spectra between the noise and the speech stimuli (and no other factors limiting the speech area), the AI can be easily calculated from the SNR alone as follows: AI = (SNR [in dB] + 15 dB)/30 dB. In this case, the AI is the proportion of the speech area in Figure 8.2 that remains visible (audible) in the presence of the competing background noise.

Audibility of the 30-dB speech area across frequency as the signal-to-noise ratio (SNR) is varied from 10 dB (top) to 0 dB (middle) to −10 dB (bottom). The competing noise is steady state and is assumed to have a spectrum identical to that of the speech stimulus. Its amplitude is represented by the shaded grey area. The values calculated for the Articulation Index (AI) framework from ANSI (1997) are also shown.

Another central concept in this framework is acknowledging that actual speech-understanding performance for a given acoustical condition (such as any of the three conditions illustrated in Fig. 8.2) will vary with the type of speech materials used to measure speech understanding. This is illustrated by the transfer functions relating percent correct performance by YNH adults to the AI in Figure 8.3. The functions in the top panel were derived for nonsense syllables, using either an open-set speech-recognition task (Fletcher and Galt 1950) or a closed-set speech-identification task having seven to nine response alternatives per test item (Kamm et al. 1985). The two functions for nonsense syllables in the top panel are very similar and both show monotonic increases in speech understanding with increases in the AI. The functions in Figure 8.3, bottom, were derived from those developed by Dirks et al. (1986) for the Speech Perception in Noise (SPIN) test (Kalikow et al. 1977; Bilger et al., 1984). This test requires open-set recognition of the final word in the sentence. The words preceding the final word provide either very little semantic context (predictability-low [PL] sentences) or considerable semantic context (predictability-high [PH] sentences). The dotted vertical lines in Figure 8.3 correspond to the AI values of 0.17, 0.5, and 0.83 associated with the SNRs of −10, 0, and 10 dB displayed previously in Figure 8.2. The intersections of these dotted vertical lines with the various transfer functions in Figure 8.3 indicate the predicted speech-understanding scores for these conditions and speech materials. Thus when desiring to map AI values to scores for specific sets of speech materials, the transfer function relating the AI to percent correct speech understanding for those materials must be known. In all cases, however, there is a monotonic increase in speech-understanding performance, with increases in AI (except for AI values > ∼0.6 where the SPIN-PH function asymptotes at 100%).

Transfer functions relating the percent correct speech-understanding score to the AI for nonsense syllables (top) and the predictability-low (PL) and predictability-high (PH) sentences of the Speech Perception in Noise (SPIN) test (bottom; Kalikow et al. 1977; Dirks et al. 1986). Dotted vertical lines are the AI values corresponding to those in Figure 8.2 for SNRs of −10, 0, and 10 dB.

The final central concept of the AI framework reviewed here is referred to as the band-importance function. As noted, in addition to the noise masking illustrated in Figure 8.2, another way to reduce the audible speech area is via low-pass or high-pass filtering. Early in the development of this framework, based largely on extensive studies of low-pass and high-pass filtered speech, it was recognized that certain frequency regions contributed more to speech understanding than others (French and Steinberg 1947; Fletcher and Galt 1950). For example, for the open-set recognition of nonsense syllables, acoustic cues in the frequency region from 1,000 to 3,000 Hz contribute much more to speech understanding than do higher- or lower-frequency regions. This is managed within the AI framework by dividing the horizontal (frequency) dimension of the speech area in Figure 8.1 into a specific number of bands and then weighting each band according to its empirically measured importance. The weights assigned to each band sum to 1.0 and are used as multipliers of the SNR measured in each of the bands.

One approach to partitioning the frequency axis would be to simply adjust the upper and lower frequencies of each band so that each band contributed equally to speech understanding; for example, with 20 bands from 100 to 8,000 Hz, each would contribute 1/20 or 0.05 AI (French and Steinberg 1947). If a low-pass filter rendered the 10 highest-frequency bands inaudible while the SNR was 15 dB in the remaining lower-frequency bands, there would be 10 bands with full contribution (0.05) and 10 with no contribution, for a total AI value of 0.5 [(10 × 0.05) + (10 × 0.0)]. Note that the same would hold true for a complementary high-pass filter that rendered the lowest 10 bands inaudible while keeping the SNR optimal (15 dB) in the 10 higher-frequency bands. As demonstrated by the 0-dB SNR in Figure 8.2, middle, this condition also yielded an AI of 0.5. Thus given a single transfer function relating the value of the AI to speech-understanding performance in percent correct (e.g., Fig. 8.3), all three of these conditions (low-pass filtering, high-pass filtering, and 0-dB SNR) resulted in an AI value of 0.5 and would be expected to yield the same speech-understanding score. This again is the power behind the AI framework. Different acoustical factors that impact speech understanding in various ways can be reduced to a single value that is predictive of speech-understanding performance regardless of the way in which the speech area has been modified. It is important to recognize, however, as did the developers of the AI framework, that the predictions are made for average speech-understanding scores for a group of listeners (YNH listeners when initially developed). The index was not designed to predict performance for an individual, although it has been used in this manner, and it does not suggest that specific errors resulting from a given form of distortion will be the same for all listeners, even though their speech-understanding scores might be identical.

How can the sensorineural hearing loss associated with aging be included in the AI framework? This is illustrated in Figure 8.4, top, where the median hearing thresholds for males 60, 70, and 80 years of age (ISO 2000) have been added to the information shown previously in Figure 8.1. In this situation, with conversational level (62.5-dB SPL) speech presented in quiet, the loss of high-frequency hearing with advancing age progressively reduces the audibility of the speech area and decreases speech understanding. The bottom panel illustrates a situation with speech at the same level and the level of a speech-shaped steady-state background noise set to achieve a 0-dB SNR. AI values were calculated from ANSI (1997) for each of the three age groups and both of the listening conditions shown in Figure 8.4, and appear in Table 8.1. The SPIN transfer functions in Figure 8.3, bottom, were then used to estimate speech-understanding scores for low- and high-context sentences from the calculated AI values and these also are provided in Table 8.1. With regard to the low-context SPIN-PL materials, note that as age and corresponding high-frequency hearing loss increase, the AI decreases and so do the predicted speech-understanding scores. This is true for both quiet and noise and is consistent with the progressively diminishing portions of the speech area available to the listener, as illustrated in Figure 8.4. Note that the pattern is somewhat different, however, for the high-context sentences (SPIN-PH). In this case, even though the value of the AI for the 80 year olds in quiet is about half that of the young adults in quiet, the estimated speech-understanding performance is 99-100% for all listener groups. This is because of the broad asymptote at 100% performance in the transfer function for the SPIN-PH materials (Fig. 8.3, bottom). When noise is added, however, a considerable decline in performance is observed for the oldest age group. If one considers the high-context sentences to be most like everyday communication, then the latter pattern of predictions for the 80 year olds is consistent with their most frequent clinical complaint: they can hear speech but can’t understand it, especially with noise in the background.

Depiction of the speech area in quiet (top) and in a background of speech-shaped noise (bottom). Also shown are median hearing thresholds of 60-, 70- and 80-year-old men from ISO (2000).

There have been numerous attempts to make use of the AI framework in research on speech understanding in older adults. In general, for quiet or steady-state background-noise listening conditions, including multitalker babble (≥8 talkers), group results have been fairly well captured by the predictions of this framework (e.g., Pavlovic 1984; Kamm et al. 1985; Dirks et al. 1986; Pavlovic et al. 1986; Humes 2002). Even here, however, large individual differences have been observed (Dirks et al. 1986; Pavlovic et al. 1986; Humes 2002). In a study of 171 older adults listening to speech (nonsense syllables and sentences) with and without clinically fit hearing aids worn in quiet and background noise (multitalker babble), Humes (2002) found that 53.2% of the variance in speech-understanding performance of the older adults could be explained by the AI values calculated for these same listeners. An additional 11.4% of the total variance could be accounted for by measures of central-auditory or cognitive function. For clinical measures of speech understanding, including those used by Humes (2002), test-retest correlations for the scores are typically between 0.8 and 0.9. This suggests that the total systematic variance (nonerror variance) that one could hope to account for would vary between 64 and 81%. Accounting for 53.2% of the total variance in this context suggests that the primary factor impacting speech-understanding performance in these 171 older adults was the audibility of the speech area. The AI concept only takes into consideration speech acoustics and the hearing loss of the listener. Other peripheral dysfunction, such as reduced cochlear nonlinearities (Dubno et al. 2007; Horwitz et al. 2007) or higher-level deficits such as central-auditory or cognitive difficulties, are not incorporated into this conceptual framework. Thus to the extent that the AI framework provides accurate descriptions of the speech-understanding performance of older adults in these listening conditions, age-related threshold elevation and resulting reductions in speech audibility underlie their speech-understanding performance. (As noted, however, higher-level factors did account for significant, albeit small, amounts of additional variance in Humes [2002].)

When the AI framework largely explains performance for older adults and the only listener variable accounted for by the framework is hearing loss, strong correlations should be observed between measured hearing loss for pure tones and speech understanding in older adults. This is consistent with the notion that the listener variable of age contributes substantially less, if at all, to speech understanding. This has, in fact, been observed in many correlational studies of speech understanding in older adults (van Rooij et al. 1989; Helfer and Wilber 1990; Humes and Roberts 1990; van Rooij and Plomp 1990, 1992; Humes and Christopherson 1991; Jerger et al. 1991; Helfer 1992; Souza and Turner 1994; Humes et al. 1994; Divenyi and Haupt 1997a, b, c; Dubno et al. 1997; Jerger and Chmiel 1997; Dubno et al. 2000; Humes 2002; George et al. 2006, 2007). In such studies, the primary listening conditions have been quiet or steady-state background noise (occasionally including multitalker babble). Hearing loss was the primary factor identified as a contributor to the speech-understanding performance of the older adults in each of these studies and typically accounted for 50-90% of the total variance.

Given the primacy of hearing loss as an explanatory factor for the speech-understanding performance of older adults and the fact that hearing loss is the only listener-related variable entered into typical AI frameworks, why bother with a more complex AI framework? There are several reasons to do so. First, there are special listening situations that might dictate the entry of other listener-related variables to obtain a more complete description of speech-understanding performance in noise. For example, Dubno and Ahlstrom (1995a, b) have measured the speech-understanding performance of older adults in the presence of intense low-pass noise. Rather than relying on acoustic measures of SNR in each frequency region, the AI framework provides a means to incorporate expected upward spread of masking from intense low-pass noise and its impact on the speech area when calculating AI values. This has been a feature of this predictive framework from the outset. However, Dubno and Ahlstrom (1995a, b) found that AI predictions were more accurate when the actual masked thresholds of the listener in the presence of the low-pass noise were used rather than relying solely on expected masked thresholds and hearing thresholds. This means that the higher-than-normal masked thresholds of older listeners with hearing loss further reduced their audible speech area, which accounted for their poorer-than-normal speech understanding. Note that these results are consistent with the earlier conclusion that elevated thresholds (in this case, masked thresholds) and limited audibility of the speech area are the primary factors underlying the speech-understanding performance of older adults.

Another example of the utility of the AI framework relates to the dependence of speech-understanding performance on speech presentation level. From the outset, Fletcher and colleagues built into the framework a decrease in the AI and, therefore, speech understanding, at high presentation levels. This has been confirmed more recently by Studebaker et al. (1999), Dubno et al. (2005a, b), and Summers and Cord (2007). For a fixed acoustical SNR, as the speech presentation level exceeds ∼70-dB SPL, speech-understanding performance decreases in YNH listeners (Dubno et al. 2005a,b) as well as in older listeners with mildly impaired hearing (Dubno et al. 2006). With SNR held constant, declines in performance with increases in level were largely accounted for by higher-than-normal masked thresholds and concurrent reductions in speech audibility, as explained earlier. In some studies conducted previously, a constant SPL might be used that is either the same for all listeners, young and old, normal hearing, and hearing impaired or varied with hearing status (lower levels for YNH listeners and higher levels for those with hearing loss). In the latter case, level differences across groups may result, and these level differences may impact the measured speech-understanding performance. In other studies, a constant sensation level might be employed such that individual listeners within a group receive different presentation levels. In such cases, it is critical to model the corresponding changes in speech presentation level and the parallel changes in the AI.

Aside from these specific cases for which the complete AI framework might be needed, the central concepts of the framework are critical to obtaining a clear picture of the impact of various listening conditions on the audibility of the speech area (e.g., Humes 1991; Dubno and Schaefer 1992; Dubno and Dirks 1993; Lee and Humes 1993; Dubno and Ahlstrom 1995a, b; Humes 2002, 2007, 2008). To illustrate this, consider the following common misconception in research on speech understanding in older adults. It is commonly assumed that increasing the presentation level of the speech stimulus without changing its spectrum can result in optimal audibility of the speech area. This misconception is illustrated in Figure 8.5, which is basically identical to Figure 8.2 except that the speech presentation level has been increased to 90-dB SPL. Clearly, although speech energy at this level is well above the low- and mid-frequency hearing thresholds, the median high-frequency thresholds for 70- and 80-year-old men render portions of the speech area inaudible, even at this high presentation level of 90-dB SPL. Furthermore, the reader should keep in mind that median thresholds are shown in Figure 8.5. That is, for 50% of the older adults with hearing thresholds higher than those shown for each age group, still less of the speech area will be audible in the higher frequencies. It should also be kept in mind that this illustration is for undistorted speech in quiet. Recall, moreover, that use of high presentation levels alone can result in decreased speech-understanding performance, even in YNH adults (e.g., Studebaker et al. 1999). It is apparent that use of a high presentation level alone is insufficient to ensure the full audibility of the speech area for listeners with high-frequency hearing loss.

Median hearing thresholds for 60-, 70- and 80-year-old men superimposed on the speech area when the level of speech has been increased from normal vocal effort (62.5-dB SPL) to a level of 90-dB SPL. Note that even at this high presentation level the high-frequency portions of the speech stimulus are not fully audible.

In summary, the AI framework is capable of making quantitative predictions of the speech-understanding performance of older listeners, which are reasonably accurate for quiet listening conditions and conditions including steady-state background noise. To the extent that the AI framework provides an adequate description of the data, elevated thresholds and a reduction in speech audibility (the simplest of the peripheral factors) explain the speech-understanding difficulties of older adults.

Although the AI framework has proven to be an extremely useful tool in understanding the speech-understanding problems of older adults, it is not without limitations. For example, it has primarily been developed from work on speech in quiet at different presentation levels, filtered speech, and speech in steady-state background noise. It has also been applied to reverberation, either alone or in combination with some of the factors noted in the preceding statement, but with less validation of this application. It has not been developed for application to many other forms of temporally distorted speech, such as interrupted or time-compressed speech, or for temporally fluctuating background noise, although there have been attempts to extend the framework to at least some of these cases (e.g., Dubno et al. 2002b; Rhebergen and Versfeld 2005; Rhebergen et al. 2006). Moreover, the AI framework has not been applied and validated on a widespread basis to sound-field listening situations in which binaural processing is involved, although, again, there have been attempts to extend it to some of these situations (e.g., Levitt and Rabiner 1967; Zurek 1993; Ahlstrom et al. 2009). Finally, the framework makes identical predictions for competing background stimuli as long as their rms long-term average spectra are equivalent, but performance can vary widely in these same conditions. For example, AI predictions would be the same for speech with a single competing talker in the background whether that competing speech was played forward or in reverse, yet listeners find the latter masker to be much less distracting and have higher speech-understanding scores as a result (e.g., Dirks and Bower 1969; Festen and Plomp 1990; Humes et al. 2006).

8.2.1.2 Plomp’s Speech-Recognition Threshold Model

The focus in this review thus far has been on listening conditions that are acoustically fixed in terms of speech level and SNR, with the percent correct speech-understanding performance determined for nonsense syllables, words, or sentences. An alternate approach is to adaptively vary the SNR (usually, the noise level is held constant and the speech level varied) to achieve a criterion performance level, such as 50% correct (Bode and Carhart 1974; Plomp and Mimpen 1979a; Dirks et al. 1982). Plomp (1978, 1986) specifically made use of sentence materials and a spectrally matched competing steady-state noise and developed a simple, but elegant, model of speech-recognition thresholds (SRTs) in quiet and noise. Briefly, the SRT (50% performance criterion) for sentences is adaptively measured in quiet and then in increasing levels of noise in a group of YNH listeners. This provides a reference function that is illustrated by the solid line in Figure 8.6. Next, comparable measurements are made in older adults with varying degrees of hearing loss. Hypothetical functions for two older hearing-impaired listeners (HI1 and HI2) are shown as dashed lines in Figure 8.6. Note that both have somewhat similar amounts of hearing loss for speech (elevated SRTs) in quiet and in low noise levels (far left) but that as noise level increases, HI1’s SRT is equal to that of the YNH adults, whereas HI2’s SRT runs parallel to but higher than the normal-hearing function. The elevated parallel function reflects the observation that at high noise levels, HI2 needs a better-than-normal SNR to achieve 50% correct. Plomp (1978, 1986) argued that this reflected additional “distortion” above and beyond the loss of audibility, most likely associated with other aspects of cochlear pathology such as decreased frequency resolution. This SRT model was subsequently supported by a large amount of data on the SRT in quiet and in noise in older adults obtained by Plomp and Mimpen (1979b). The need for a better-than-normal SNR by some older adults for unaided SRT in noise has been clearly established in these studies. However, the need to invoke some form of “distortion,” other than that associated with reduced speech audibility due to hearing loss in the high frequencies, is much less clear.

The AI framework described in Section 2.1.1 offers an alternate interpretation of the higher-than-normal SRT in noise observed in some older adults. Van Tasell and Yanz (1987) and Lee and Humes (1993), for instance, argued that, given the high-frequency sloping nature of the hearing loss in older adults, the “distortion” could instead be a manifestation of the loss of high-frequency portions of the speech area. The argument presented by Lee and Humes (1993) is illustrated schematically in Figure 8.7. For the SRT in noise measurements, YNH listeners typically reach SRT (50% correct) for sentences at a −6-dB SNR. This is shown in Figure 8.7, top, in which the rms level of the noise (shaded region) exceeds the rms level of the speech by 6 dB. This corresponds to an AI value of 0.3. When the speech and noise remain unchanged and are presented to a typical 80-year-old man, his high-frequency sensorineural hearing loss makes portions of the speech spectrum inaudible, as shown in Figure 8.7, middle. This reduction in audible bandwidth lowers the AI to a value of 0.2 and performance can be expected to be below 50% (SRT). As a consequence, the speech level must be raised or the noise level reduced to improve the SNR and reach an AI value of 0.3 for 50% correct performance (or SRT). Using the AI (ANSI 1997) and reducing the noise level to improve the SNR (Fig. 8.7, bottom) resulted in a SNR of −1 dB for the 80-year-old man to achieve 50% correct performance (SRT). Thus the AI framework indicates that to achieve an AI value equal to that of the YNH adults, the 80-year-old man will require a 5-dB better SNR. This can be accounted for entirely, however, by the reduced audibility of the higher frequencies due to the peripheral hearing loss. There is no need to invoke other forms of “distortion” to account for these findings. In fact, Plomp (1986) presented data that support this trend for YNH listeners undergoing low-pass filtering of the speech and noise, with cutoff frequency progressively decreasing in octave steps from 8,000 Hz to 1,000 Hz. That is, as the low-pass filter cut away progressively more of the speech area, the speech level needed to reach SRT (i.e., 50% correct recognition in noise) increased proportionately. The amount of increase in SNR, moreover, is in line with that observed frequently in older adults with high-frequency hearing loss. Identical patterns of results have been observed for YNH listeners listening to low-pass filtered speech using versions of Plomp’s test developed using American English speech materials, such as the Hearing in Noise Test (HINT; Nilsson et al. 1994) and the Quick Speech in Noise (Quick-SIN) test (Killion et al. 2004). This audibility-based explanation of “distortion” is also consistent with the findings of Smoorenburg (1992) from 400 ears with noise-induced hearing loss, a pattern of hearing loss primarily impacting the higher frequencies similar to presbycusis in which hearing thresholds at 2,000 and 4,000 Hz were found to be predictive of SRT in noise. Moreover, Van Tasell and Yanz (1987) demonstrated in young adults with moderate-to-severe high-frequency sensorineural hearing loss that if the audibility of the speech area was restored through amplification, the need for a better-than-normal SNR disappeared in most listeners. Thus the “distortion” and “SNR loss” reported in older adults with high-frequency hearing loss may, in fact, simply be manifestations of the restricted bandwidth or loss of the high-frequency portion of the speech area experienced by older listeners.

Schematic illustration of the need for a better-than-normal SNR at SRT for listeners with high-frequency hearing loss. Top: based on SRT for YNH listeners in Figure 8.6, for a speech Fig. 8.7 (continued) level of 62.5 dB SPL (normal vocal effort), a noise level of 68.5 dB SPL would correspond to SRT (50% correct). This corresponds to a SNR of −6 dB and yields an AI value of 0.3. Middle: median hearing thresholds for an 80-year-old man are added to the same −6-dB SNR shown in top panel. Because the high-frequency hearing loss has rendered some of the speech (and noise) inaudible, the AI for this older adult has been reduced to a value of 0.2. Given that an AI of 0.3 was established as corresponding to 50% correct or SRT in top panel, the older adult would perform worse than 50% correct under these conditions. Bottom: keeping the speech level the same as in top and middle panels, the noise level was decreased 5 dB (to an SNR of −1 dB) to make the AI equal to that of the top panel (0.3) and restore performance to SRT (50% correct). Thus the 80-year-old adult needed a 5-dB better-than-normal speech level to reach SRT.

Of course, the elevated SRT in noise could be a manifestation of some other form of distortion (peripheral or central in origin), but it is not possible to isolate these two possibilities from the basic SRT in noise paradigm alone. Use of the AI framework, together with the SRT in noise measurements, could help disentangle bandwidth restriction from true “distortion.” Another way in which this could be accomplished is to make use of low-pass-filtered speech and noise (with complementary high-pass masking noise) in both YNH and older hearing-impaired adults with the cutoff frequency set to ∼1,500 Hz. In this case, the high frequencies are inaudible to normal-hearing and hearing-impaired listeners alike and a SNR that is higher than that required for broadband speech should be required for the younger listeners with normal hearing. If older hearing-impaired adults still require a better-than normal SNR under the same test conditions, then it should not be due to the restricted bandwidth that was limiting performance in the broadband listening condition but some other “distortion” process. In fact, Horwitz et al. (2002) did just this experiment in six young adults and six older adults with high-frequency sensorineural hearing loss. For broadband (unfiltered) speech in noise, the older group needed about a 12-dB better SNR than the younger adults. When low-pass-filtered speech and noise were used, however, the older adults only needed a SNR that was ∼3-6 dB better than that of the younger group. The interpretation of these results, within the framework described above, is that about half or more of the initial “distortion” measured in the broadband conditions was attributable to bandwidth restriction (high-frequency hearing loss) but that the balance of the deficit could not be accounted for in this way. On the other hand, Dubno and Ahlstrom (1995a, b) found a close correspondence between the performance of YNH and older hearing-impaired listeners for low-pass speech and noise, whereas Dubno et al. (2006) found ∼40-50% of older adults with very slight hearing loss in the high frequencies had poorer performance for low-pass speech and noise than YNH adults. These conflicting group data suggest that there may be considerable individual variation in the factors underlying the performance of older adults. This, in turn, suggests that the use of low-pass-filtered speech in noise might prove helpful in determining whether elevated SNRs in older adults with impaired hearing result from restricted bandwidths or from true “distortion.” Differentiating these causes is important because bandwidth restrictions could be more easily addressed via well-fit amplification than other forms of distortion.

Another approach to disentangling these two explanations for elevated SRTs in noise in older adults is to make sure that the experimental conditions are selected so that the background noise rather than the elevated quiet thresholds is limiting audibility at the higher frequencies in broadband test conditions. This can be accomplished either by the right combination of high noise levels and milder amounts of hearing loss (Lee and Humes 1993) or through spectral shaping of the speech and noise (George et al. 2006, 2007).

8.2.1.3 Simulated Hearing Loss

Another approach to evaluating the role played by peripheral hearing loss in the speech-understanding problems of older adults is more empirical in nature and attempts to simulate the primary features of cochlear-based sensorineural hearing loss in YNH listeners. In most of these studies, noise masking was introduced into the YNH adult’s ear to produce masked thresholds that matched the quiet thresholds of an older adult with impaired hearing. According to the AI framework, these two cases would yield equivalent audibility of the speech area and equivalent performance would be predicted. This has been the case in many such studies measuring speech understanding in quiet and steady-state background noise (Fabry and Van Tasell 1986; Humes and Roberts 1990; Humes and Christopherson 1991; Humes et al. 1991; Dubno and Ahlstrom 1995a, b; Dubno and Schaefer 1995) as well as in several studies using this approach to study the recognition of reverberant speech (Humes and Roberts 1990; Humes and Christopherson 1991; Halling and Humes 2000).

When attempting to simulate the performance of older adults in quiet, however, one of the differences between the older adults with actual hearing loss and the young adults with noise-simulated hearing loss is that only the latter group is listening in noise. The noise, audible only to the younger group with simulated hearing loss, could tax cognitive processes, such as working memory (Rabbit 1968; Pichora-Fuller et al. 1995; Surprenant 2007), and impair performance even though audibility was equivalent for both the younger and older listeners. Dubno and colleagues (Dubno and Dirks 1993; Dubno and Ahlstrom 1995a, b; Dubno and Schaefer 1995; Dubno et al. 2006) addressed this by conducting a series of studies of the speech-understanding performance of YNH and older hearing-impaired listeners in which a spectrally shaped noise was used to elevate the hearing thresholds of both groups. In particular, a spectrally shaped noise was used elevated the hearing thresholds of the (older) hearing-impaired listeners slightly (3-5 dB) and then elevated the pure-tone thresholds of the YNH listeners to those same target levels. This produces equivalent audibility and conditions involving listening in noise for both groups. The results obtained by Dubno and colleagues using this approach have generally revealed good agreement between the performance of young adults with simulated hearing loss and older adults with impaired hearing, with some notable exceptions (Dubno et al. 2006).

8.2.1.4 Factorial Combinations of Age and Hearing Status

Probably the experimental paradigm that has yielded results most at odds with the simple peripheral explanation, especially for temporally degraded speech and complex listening conditions, has been the independent-group design with factorial combinations of age (typically, young and old adults) and hearing status (typically, normal and impaired). This approach provides the means to examine not only the main effects of age and hearing loss but also their interaction. Although the details vary across studies, the general pattern that emerges is that hearing loss primarily determines performance in quiet or in steady-state background noise but that age or the interaction of age with hearing loss impacts performance in conditions involving temporally distorted speech (i.e., time compression, reverberation) or competing speech stimuli (Dubno et al. 1984; Gordon-Salant and Fitzgibbons 1993, 1995, 1999, 2001; Versfeld and Dreschler 2002). It should be noted that the effects of age or the combined effects of age and hearing loss on auditory temporal processing (e.g., Schneider et al. 1994; Strouse et al. 1998; Snell and Frisina 2000) or the understanding of temporally degraded speech (especially time-compressed speech; e.g., Wingfield et al. 1985, 1999) have been observed using other paradigms as well.

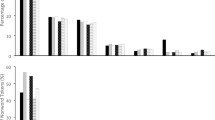

Figure 8.8 illustrates some typical data for each of the four groups common to such factorial designs for quiet (top) and 50% time compression (bottom). Note that the pattern of findings across the four groups differs in the two panels. Figure 8.8, top, illustrates the pattern of mean data observed for a main effect of hearing loss and no effect of age (and no interaction between these two variables). Both hearing-impaired groups perform worse than the two normal-hearing groups on speech-understanding measures in quiet. This pattern has been typically observed in such factorial independent-group studies for speech understanding in quiet, in steady-state noise, and, occasionally, in reverberation. Figure 8.8, bottom, shows the mean speech-understanding scores from the same study (Gordon-Salant and Fitzgibbons 1993) for 50% time compression. A similar pattern of results was obtained in this same study for reverberant speech and interrupted speech (with several values of time compression, reverberation, and interruption examined). The pattern of mean data in Figure 8.8, bottom, clearly reveals that both age and hearing loss have a negative impact on speech-understanding performance. Interestingly, even in this study by Gordon-Salant and Fitzgibbons (1993), correlational analyses revealed strong associations between high-frequency hearing loss and the speech-understanding scores for time-compressed speech and mildly reverberant speech (reverberation time of 0.2 s).

Speech-understanding scores from four listener groups for speech in quiet and 50% time-compressed speech. Values are means ± SD. YNH, young normal hearing; ENH, elderly normal hearing; YHI, young hearing impaired; EHI, elderly hearing impaired. Data are from Gordon-Salant and Fitzgibbons (1993).

It can be very challenging to find sufficient numbers of older adults with hearing sensitivity that is precisely matched to that of younger adults. One way to address this limitation is using the factorial design in conjunction with the AI framework to account for effects of any differences in thresholds between groups of younger and older subjects with normal or impaired hearing (as in Dubno et al. 1984).

In the factorial-design studies cited above, for example, there were differences in high-frequency hearing thresholds between the YNH adults and the elderly normal-hearing adults, especially at the higher frequencies reported. Speech stimuli, however, were presented at stimulus levels that were sufficient to ensure full audibility of the speech stimuli for the normal-hearing groups through at least 4,000 Hz. This assumes that, consistent with the AI framework and the simple peripheral explanation, reduced audibility of the higher frequencies is the only consequence of slight elevations in high-frequency hearing thresholds in the older adults. If, however, elevated thresholds were considered “markers” of underlying cochlear pathology, then use of high presentation levels will not compensate for this difference between the young and old “normal-hearing” groups (Humes, 2007). That is, the young group has no apparent cochlear pathology based on hearing thresholds <5- to 10-dB hearing loss through 8,000 Hz, whereas the older “normal-hearing” group has high-frequency hearing thresholds that often are elevated to at least 15- to 20-dB hearing loss at 4,000 Hz and still higher at 6,000 and 8,000 Hz. Thus although the pattern of findings from factorial independent-group designs for temporally distorted speech are not consistent with the simplest peripheral explanation, given significant differences in high-frequency hearing thresholds between the young and old normal-hearing groups, it is not possible to rule out that second-order peripheral factors were contributing to the age-group differences.

8.2.1.5 Longitudinal Studies

Nearly all of the studies of the speech-understanding difficulties of older adults conducted to date have used cross-sectional designs and multivariate approaches. As noted in this review, these laboratory studies indicate that hearing loss or audibility is the primary factor contributing to individual differences in speech recognition of older adults, with age and cognitive function accounting for only small portions of the variance. These results are consistent with those of large-scale cross-sectional studies reporting that age-related differences in speech recognition were accounted for by grouping subjects according to audiometric configuration or degree of hearing loss (e.g., Gates et al. 1990; Wiley et al. 1998).

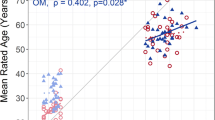

Different conclusions were reached by Dubno et al. (2008b), however, in a longitudinal study of speech understanding using isolated monosyllabic words in quiet (n = 256) and key words in high- and low-context sentences in babble (n = 85). Repeated measures were obtained yearly (words) or every 2-3 years (sentences) over a period of (on average) 7 years (words) to 10 years (sentences). To control for concurrent changes in pure-tone thresholds and speech levels over time for each listener, speech-understanding scores were compared with scores predicted using the AI framework. Recognition of key words in sentences in babble, both low and high context, did not decline significantly with age. However, recognition of words in quiet declined significantly faster with age than predicted by declines in speech audibility. As shown in Figure 8.9, the mean observed scores at younger ages were better than predicted. whereas the mean observed scores at older ages were worse than predicted. That is, as subjects aged, their observed scores deviated increasingly from their predicted scores but only for words in quiet. The rate of decline did not accelerate with age but increased with the degree of hearing loss. This suggested that with more severe injury to the auditory system, impairments to auditory function other than reduced audibility resulted in faster declines in word recognition as subjects aged.

Top: observed word-recognition scores and scores predicted by the AI for laboratory visits 1-13 at which NU#6 scores were obtained plotted as a function of mean age during those visits. Values are means ± SE in percent correct. Bottom: mean differences between observed (obs) and predicted (pred) scores (triangles) for the same laboratory visits plotted as a function of mean age during those visits. A linear regression function fit to obs-pred differences is shown (solid line) along with the slope (%/year). *Significant difference. (Reprinted with permission from Dubno et al. 2008. Copyright 2008, Acoustical Society of America).

These results were consistent with the small (n = 29) longitudinal study of Divenyi et al. (2005), which found that speech-recognition measures in older subjects declined with increasing age more rapidly than their pure-tone thresholds. In the Divenyi et al. (2005) study, however, all 29 older adults selected for follow-up evaluation were among those who had “above-average results” on the large test battery during the initial assessment. Thus it is possible that the decline over time could have been exaggerated by statistical regression toward the mean. In Dubno et al. (2008), on the other hand, all the assessments of speech understanding were unaided, but 31% of the subjects reported owning and wearing hearing aids (only 55% were considered to be hearing-aid candidates). Declines in unaided performance have been observed more frequently among older hearing-aid wearers, even though aided performance remained intact, at least for longitudinal studies over a 2- to 3-year period (Humes et al. 2002; Humes and Wilson 2003). In addition, even though the declines in speech understanding over time observed by Dubno et al. (2008) were more rapid than concomitant declines in pure-tone hearing thresholds, the correlations between high-frequency hearing sensitivity and speech-understanding scores were still −0.60 and −0.64 for the initial and final measurements, respectively (accounting for 36-41% of the variance). More longitudinal studies of speech understanding in older adults are needed before it will be possible to determine if the trends that emerge from this research design are compatible with those from the much more common cross-sectional designs.

8.2.1.6 Limitations of the Peripheral Explanation

To recap, for speech in quiet or speech in steady-state background noise, with the speech presented at levels ranging from 50- to 90-dB SPL, the evidence clearly supports the simplest form of the peripheral explanation (i.e., audibility) as the primary factor underlying the speech-understanding problems of older adults. Even for some forms of temporally distorted speech, such as time compression and reverberation, high-frequency hearing loss is often the primary factor accounting for individual differences in speech-understanding performance among older adults (Helfer and Wilber 1990; Helfer 1992; Gordon-Salant and Fitzgibbons 1993, 1997; Halling and Humes 2000; Humes 2005, 2008). This has also been the case when undistorted speech is presented in temporally interrupted noise (Festen and Plomp 1990; Dubno et al. 2002b, 2003; Versfeld and Dreschler 2002; George et al. 2006, 2007; Jin and Nelson 2006).

In general, however, the relationship between measured speech understanding and high-frequency hearing loss is weaker for temporally distorted speech than for undistorted speech in quiet or steady-state noise. Thus in these conditions involving temporally distorted speech or temporally varying background maskers, high-frequency hearing loss alone accounts for significant, but smaller, amounts of variance, leaving more variance that potentially could be explained by other factors. As noted, the strongest evidence for this probably has come from the studies using independent-group designs with factorial combinations of age and hearing status. In this case, however, given significant differences in high-frequency hearing sensitivity between the younger and older adults with normal hearing, it is possible that second-order effects associated with even mild cochlear pathology (Halling and Humes 2000; Dubno et al. 2006) as well as central or cognitive factors could play a role.

There is other evidence, however, that also supports the special difficulties that older adults have with temporally distorted speech or temporally varying backgrounds. Dubno et al. (2002b, 2003), for example, used a simple modification of the AI to predict speech identification in interrupted noise and ensured that audibility differences among younger and older adults during the “off” portions of the noise were minimized. Although the benefit of masker modulation was predicted to be larger for older than younger listeners, relative to steady-state noise, scores improved more in interrupted noise for younger than for older listeners, particularly at a higher noise level. In this case, factors other than audibility were limiting the performance of the older adults, which may relate to recovery from forward maskers. Similarly, George et al. (2006, 2007) demonstrated that the speech-understanding performance of older adults listening to spectrally shaped speech and noise could not be explained by threshold elevation for fluctuating noise. In addition, they found that other factors such as temporal resolution and cognitive processing could account for significant amounts of individual variations in performance.

Another listening condition for which high-frequency hearing thresholds account for much smaller amounts of variance is the use of dichotic presentation of speech, although, again, hearing sensitivity still accounts for the largest amount of variance among the factors examined (Jerger et al. 1989; Jerger et al. 1991; Humes et al., 1996; Divenyi and Haupt 1997b; Hallgren et al. 2001). For example, in a study involving 200 older adults and 5 measures of speech understanding by Jerger et al. (1991), 3 of the measures included speech in a steady-state background (noise or multitalker babble). Correlations between speech-understanding scores on these three measures and high-frequency hearing loss ranged from −0.73 to −0.78 (accounting for 54-61% of the variance). With monaural presentation of sentences in a background of competing speech (discourse), the correlation with hearing loss decreased slightly to −0.65, accounting for 42% of the variance. Finally, when the latter task was administered dichotically, the correlations with hearing loss dropped further, to −0.55, accounting for 30% of the variance. Interestingly, for these latter two measures of speech understanding, age or a measure of cognitive function emerged as an additional explanatory variable in subsequent regression analyses, whereas hearing loss was the only predictor identified for the three measures of speech understanding in steady-state noise.

8.2.2 Beyond Peripheral Factors

In general, across various studies, pure-tone hearing thresholds account for significant, but decreased, amounts of variance in speech-understanding performance for temporally distorted speech, temporally interrupted background noise, and dichotic competing speech. Therefore, for these conditions, factors beyond simple audibility loss may need to be considered when attempting to explain the speech-understanding problems of older listeners. For example, age-related changes in the auditory periphery (e.g., elevated thresholds, broadened tuning, reduced compression) may reduce the ability to benefit from temporal or spectral “dips” that occur for a single-talker or other fluctuating maskers, beyond simple audibility effects. For each of these listening conditions, higher-level deficits in central-auditory or cognitive processing have also been suggested as possible explanatory factors. For example, older adults have been found to perform more poorly than younger adults on various measures of auditory temporal processing (Schneider et al. 1994; Fitzgibbons and Gordon-Salant 1995, 1998, 2004, 2006; Strouse et al. 1998; Gordon-Salant and Fitzgibbons 1999). Older adults have also demonstrated a general trend toward “cognitive slowing” with advancing age (Salthouse 1985, 1991, 2000; Wingfield and Tun 2001). Either a modality-specific auditory temporal-processing deficit related to auditory peripheral or central changes or general cognitive slowing could explain the difficulties of older adults with temporally degraded speech or temporally interrupted noise.

They could, for that matter, also contribute to the difficulties observed for dichotic processing of speech, but other factors could also be operating here. For example, there are specific auditory pathways and areas activated in the central nervous system when processing dichotic speech and age-related deficits in these areas or pathways could lead to speech-understanding problems (Jerger et al. 1989, 1991; Martin and Jerger 2005; Roup et al. 2006). In addition, there is evidence in support of age-related declines in attention, a cognitive mechanism, which could contribute to the decline in dichotic speech-understanding performance (McDowd and Shaw 2000; Rogers 2000). Furthermore, one could argue that it is not these age-related declines in higher-level auditory or cognitive processing alone but the combination of a degraded sensory input due to the peripheral hearing loss and these higher-level processing deficits that greatly increases the speech-understanding difficulties of older adults (Humes et al. 1993; Pichora-Fuller et al. 1995; Wingfield 1996; Schneider and Pichora-Fuller 2000; Pichora-Fuller 2003; Humes and Floyd 2005; Pichora-Fuller and Singh 2006). In this case, the hypothesis is that there exists a finite amount of information-processing resources and if older adults with high-frequency hearing loss have to divert some of these resources to repair what is otherwise a nearly automatic resource-free process of encoding the sensory input, then fewer resources will be available for subsequent higher-level processing.

One of the challenges in identifying the nature of additional factors contributing to the speech-understanding problems of older adults beyond elevated thresholds is the ability to distinguish between “central-auditory” and “cognitive” factors. Many tests designed to assess “central-auditory” function, for example, are, for the most part, considered to be “auditory” because they make use of sound as the sensory stimulus. As one might imagine, this ambiguity can lead to considerable diagnostic overlap between those older adults considered to have “central-auditory” versus “cognitive” processing problems. For example, Jerger et al. (1989), in a study of 130 older adults, identified half (65) of the older adults as having “central-auditory processing disorder,” but within that group, 54% (35) also had “abnormal cognitive status.”

It has been argued previously (Humes et al. 1992; McFarland and Cacace 1995; Cacace and McFarland 1998, 2005; Humes 2008) that one way of distinguishing between central-auditory and cognitive processing deficits would be to examine the modality specificity of the deficit. A strong correlation between performance on a comparable task performed auditorily and visually argues in favor of a cognitive explanation rather than an explanation unique to the auditory system. Likewise, the lack of correlation between performance in each modality on similar or identical tasks is evidence of modality independence. The “common cause” hypothesis was put forth by Lindenberger and Baltes (1994) and Baltes and Lindenberger (1997) to interpret the relatively high correlations observed among sensory and cognitive factors in older adults. These authors proposed that the link between sensory and cognitive measures increased with age because both functions reflect the same anatomic and physiological changes in the aging brain.

Most often, speech has been the stimulus used to assess central-auditory function in older adults. As noted, the inaudibility of the higher-frequency portions of the speech stimulus can have a negative impact on speech-understanding performance and the presence of such a hearing loss in a large portion of the older population (especially the older clinical population) can make it difficult to interpret the results of speech-based measures of central-auditory function (Humes et al., 1996; Humes 2008). To address this, several recent studies have attempted to eliminate or minimize the contributions of the inaudibility of the higher-frequency regions of the speech stimulus by amplifying or spectrally shaping the speech stimulus (Humes 2002, 2007; George et al. 2006, 2007; Humes et al. 2006, 2007; Zekveld et al. 2007). Moreover, three of these studies also developed visual analogs of the auditory speech-understanding measures to examine the issue of modality specificity (George et al. 2007; Humes et al. 2007; Zekveld et al. 2007) in older adults. For the latter three studies, the trends that emerged were as follows. First, for the recognition of sentences in either a babble background or modulated noise, there was ∼25-30% common variance between the auditory and visual versions of the tasks in older adults. Second, even though speech was made audible in these studies, the degree of high-frequency hearing loss was still the best predictor of speech understanding in all conditions, accounting for ∼40-50% of the variance. This suggests that the hearing loss not only represents a limit to audibility when speech is not amplified but may also serve as a perceptual “marker” for an increased likelihood of other (peripheral) processing deficits in these listeners that might have an impact on aided speech understanding. George et al. (2007) observed a significant correlation between a psychophysical measure of temporal resolution and speech-understanding performance in modulated noise, a finding also observed recently by Jin and Nelson (2006). In addition, Dubno et al. (2002b) observed excessive forward masking in older adults with near-normal hearing and also found negative correlations between forward-masked thresholds and speech-understanding in interrupted noise.

Humes et al. (2007) examined the performance of older listeners using two acoustic versions of “speeded speech.” One version made use of conventional time-compressed monosyllabic words. As noted by Schneider et al. (2005), however, implementation of time compression can sometimes lead to inadvertent spectral distortion of the stimuli and it may be the spectral distortions that contribute to the performance declines of older adults for time-compressed speech. As a result, the other version of “speeded speech” used by Humes et al. (2007) was based on shortening the length of pauses between speech sounds. In this approach, brief but clearly articulated letters of the alphabet were presented that could be combined in sequence to spell various three-, four-, or five-letter words. The duration of the silent interval between each spoken letter was manipulated to vary the overall rate of presentation. The listener’s task was to write down the word that had been spelled out acoustically. Another advantage of this form of speeded speech, in contrast to time compression, was that a direct visual analog of the auditory speeded-speech task could be implemented (i.e., speeded text recognition). In this study, there was again ∼30% common variance between the auditory and visual analogs of “speeded speech” and this common variance was also negatively associated with age. Given the more complex nature of the task, these results could be interpreted as a general age-related cognitive deficit in the speed of processing. There was, however, an additional modality specific component and this was again related (inversely) to the amount of high-frequency hearing loss.

Additional study of commonalities across modalities is needed, but the work completed thus far is sufficient to at least urge caution when interpreting poor speech-understanding performance of some older adults on speech-based tests of “central-auditory processing” as pure modality-specific deficits. The use of sound as the stimulus, or spoken speech in particular, does not make the task an “auditory processing” task. In fact, significant amounts (∼30%) of shared variance have been identified between auditory and visual analogs of some tasks thought to be auditory-specific tasks. Further evaluation of some of the visual analogs developed by Zekveld et al. (2007) and Humes et al. (2007) with older hearing-impaired listeners may result in the development of clinical procedures to help determine how much of an individual’s speech-understanding deficit is due to a modality-specific auditory-processing problem or a general cognitive-processing problem. At the moment, however, there is no specific treatment for either form of higher-level processing deficit and both would likely constrain the immediate benefits provided by amplification designed to overcome the loss of audibility due to peripheral hearing loss.

As noted, dichotic measures of speech understanding represent another area often considered by audiologists to be within the domain of “central-auditory processing” but for which amodal cognitive factors could also play a role. Clearly, the hemispheric specialization for speech and language that gives rise to a right ear advantage in many dichotic listening tasks can be considered auditory specific, or at least speech specific (Kimura 1967; Berlin et al. 1973). However, there is also a general cognitive component to the processing of competing speech stimuli presented concurrently to each ear (e.g., Cherry 1953). Specifically, the ability to attend to a message in one ear or the other can impact performance. Several studies of dichotic speech understanding in older listeners, which also measured some aspect of general cognitive function, have reported significant correlations between these measures (Jerger et al. 1991; Hallgren et al. 2001; Humes 2005; Humes et al. 2006). Humes et al. (2006) measured the ability of young and older adults to identify words near the end of a sentence when a competing similar sentence was spoken by another talker. Various cues signaling the sentence to which the listener should attend were presented either immediately before each sentence pair (selective attention) or immediately after each sentence pair (divided attention). One type of cue, a lexical cue, was used in both a monaural and a dichotic condition. The expected right ear advantage was obtained when the results for the dichotic condition were analyzed. However, there were very strong and significant correlations (r = 0.87 and 0.94) between the monaural scores and dichotic scores for both the 10 YNH listeners and the 13 older adults with various degrees of hearing loss. (Stimuli were spectrally shaped in this study to minimize or eliminate the contributions of audibility loss.) Because there is no modality-specific or speech-specific interhemispheric advantage for the monaural condition, the strong correlations must reflect some other common denominator, perhaps cognitive in nature (attention). In fact, the measures of selective attention were found to be significantly correlated (r = 0.6) with digit-span measures from the older adults, a pattern observed frequently in studies of aging and cognition (Verhaeghen and De Meersman 1998a, b). Again, more work is needed in this area, but there is enough evidence to urge caution in the interpretation of results from dichotic speech-understanding measures. The use of speech does not make such measures “pure” measures of auditory processing and performance may be determined by other amodal cognitive (or linguistic) factors.

To summarize the review to this stage, the peripheral cochlear pathology commonly found in older adults is the primary factor underlying the speech-understanding problems of older adults. The restricted audibility of the speech stimulus, primarily due to the inaudibility of the higher frequencies, is the main contributor, especially for typical conversational listening conditions in quiet and in steady-state noise without amplification. Because the peripheral hearing loss in aging is sensorineural in nature, however, there may also be a reduction in nonlinearities and broadened tuning, and these peripheral factors may also contribute to the speech-understanding problems of older adults. In addition to these second-order peripheral effects, higher-level auditory and cognitive factors can also contribute to the speech-understanding problems of older adults, especially when speech is temporally degraded or presented in a temporally varying background. In fact, it has been argued that these higher-level factors are likely to play a more important role once the speech has been made audible through amplification (Humes 2007).

8.3 Amplification and Speech Understanding

According to the AI framework described previously, speech understanding will be optimal when the entire speech area (long-term average speech spectrum ± 15 dB from ∼100 to 8,000 Hz) is audible. This theoretical objective is illustrated in Figure 8.10 for a hypothetical typical 80-year-old man. For simplicity, it is assumed that the upper end of the dynamic range (loudness discomfort level) for this listener is 95 dB SPL/Hz at all frequencies, the same as that for YNH listeners (Kamm et al. 1978). For the hypothetical case illustrated in Figure 8.10, an 80-year-old man with median hearing loss for that age has sufficient dynamic range to accommodate the full 30-dB range of the speech area (with speech in quiet), except at the very highest frequency (8,000 Hz). For reference purposes, the unaided long-term average speech spectrum is also shown in this panel and the difference between the aided and unaided long-term average spectra provides an indication of the gain at each frequency. The approach described here, one of making the full speech area just audible in aided listening, forms the basis of one of the most popular clinical hearing aid fitting algorithms, the desired sensation level (DSL) approach (Seewald et al. 1993, 2005; Cornelisse et al. 1995). It should be noted, however, that as long as the full speech area is audible and does not exceed discomfort at any frequency, there are many ways in which the speech may be amplified to yield equivalent speech-understanding performance (Horwitz et al. 1991; Van Buuren et al. 1995).