Article Outline

Introduction

Organization

Idiosyncrasies

Formulation

Methods

Review of Solution Approaches

C-GRASP Heuristic

Conclusions

See also

References

Access provided by Autonomous University of Puebla. Download reference work entry PDF

Similar content being viewed by others

Introduction

The maximum cut problem (max-cut) is one of the simplest graph partitioning problems to conceptualize, and yet it is one of the most difficult combinatorial optimization problems to solve. The objective of max-cut is to partition the set of vertices of a graph into two subsets, such that the sum of the weights of the edges having one endpoint in each of the subsets is maximum. This problem is known to be \( { \mathcal{NP} } \)-complete [18,27]; however, it is interesting to note that the inverse problem, i. e., that of looking for the minimum cut in a graph is solvable in polynomial time using network flow techniques [1]. max-cut is an important combinatorial problem and has applications in many fields including VLSI circuit design [9,32] and statistical physics [5]. For other applications, see [16,21]. For a detailed survey of max-cut, the reader can refer to [33].

Organization

In this paper, we introduce the maximum cut problem and review several heuristic methods which have been applied. In Subsect. “C-GRASP Heuristic” we describe the implementation of a new heuristic based optimizing a quadratic over a hypercube. The heuristic is designed under the C-GRASP (Continuous Greedy Randomized Adaptive Search Procedure) framework. Proposed by Hirsch, Pardalos, and Resende [23], C‑GRASP is a new stochastic metaheuristic for continuous global optimization problems. Numerical results are presented and compared with other heuristics from the literature.

Idiosyncrasies

We conclude this section by introducing the symbols and notations we will employ throughout this paper. Denote a graph \( { G = (V,E) } \) as a pair consisting of a set of vertices V, and a set of edges E. Let the map \( { w\colon E \mapsto \mathbb{R} } \) be a weight function defined on the set of edges. We will denote an edge-weighted graph as a pair (G,w). Thus we can easily generalize an un-weighted graph \( { G = (V,E) } \) as an edge-weighted graph (G,w), by defining the weight function as

We use the symbol “\( { b := a } \)” to mean “the expression a defines the (new) symbol b”. Of course, this could be conveniently extended so that a statement like “\( { (1-\epsilon)/2 := 7 } \)” means “define the symbol \( \epsilon \) so that \( { (1-\epsilon)/2 = 7 } \) holds”. We will employ the typical symbol S c to denote the complement of the set S; further let \( { A \setminus B } \) denote the set-difference, \( { A \cap B^{c} } \). Agree to let the expression \( { x \gets y } \) mean that the value of the variable y is assigned to the variable x. Finally, to denote the cardinality of a set S, we use | S |. We will use bold for words which we define, italics for emphasis, and small caps for problem names. Any other locally used terms and symbols will be defined in the sections in which they appear.

Formulation

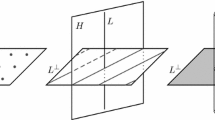

Consider an undirected edge-weighted graph (G,w), where \( { G = (V,E) } \) is the graph, and w is the weight function. A cut is defined as a partition of the vertex set into two disjoint subsets S and \( { \bar{S} := V \setminus S } \). The weight of the cut \( { (S,\bar{S}) } \) is given by the function \( { W\colon S \times \bar{S} \mapsto \mathbb{R} } \) and is defined as

For an edge-weighted graph (G,w), a maximum cut is a cut of maximum weight and is defined as

We can formulate max-cut as the following integer quadratic programming problem:

subject to:

To see this, notice that each subset \( V \supseteq S := \{i \in V: y_{i} = 1\} \) induces a cut \( { (S, \bar{S}) } \) with corresponding weight equal to

An alternative formulation of max-cut based on the optimization of a quadratic over the unit hypercube was given by Deza and Laurent in [12].

Theorem 1

Given a graph \( { G = (V,E) } \) with \( { |V| = n } \), the optimal objective function value of the maximum cut problem is given by

where \( { W = [w_{ij}]_{i,j=1}^{n} } \) is the matrix of edge weights, and \( { e := [1,1, \ldots,1]^{\mathrm{T}} } \) is the unit vector.

Proof 1

Let

denote the objective function from Eq. (7). To begin with, notice that the matrix W has a zero diagonal, i. e., \( { w_{ii} = 0,\: \forall i \in 1,2, \ldots,n } \). This implies that \( { f(x) } \) is linear with respect to each variable, and thus there always exists an optimal solution, x ∗ of (7) such that \( { x^\ast \in \{0,1\}^{n} } \). Therefore, we have shown that

The next step is to show that there is a bijection between binary vectors of length n and cuts in G. Consider any binary vector \( { \hat{x} \in \{0,1\}^{n} } \). Now suppose we partition the vertex set V into two disjoint subsets \( { V_{1} := \{i| \hat{x}_{i} = 0\} } \) and \( { V_{2} := \{i| \hat{x}_{i} = 1\} } \). Then, evaluating the objective function we have

which is equal to W(V 1,V 2), the value of the cut defined by the partition of \( { V = V_{1} \bigcup V_{2} } \) (see Eq. (2) above).

Alternatively, consider any partition of V into two disjoint subsets \( { V_{1},V_{2} \subseteq V } \). That is

Now, we can construct the vector \( { \hat{x} } \) as follows:

Once again, evaluating the objective function on \( { \hat{x} } \), we have

Hence \( { f(\hat{x}) = W(V_{1},V_{2}) } \) and we have the result.Footnote 1 Alas, we have shown the bijection between binary n-vectors and cuts in G. In summary, we have

□

There are several classes of graphs for which max-cut is solvable in polynomial time [25]. These include planar graphs [11], weakly bipartite graphs with nonnegative edge weights [20], and graphs without K 5 minors [4]. The general problem however is known to be \( { \mathcal{APX} } \)-complete [31]. This implies that unless \( { \mathcal{P} = \mathcal{NP} } \), max-cut does not admit a polynomial time approximation scheme [30].

Methods

The maximum cut problem is one of the most well-studied discrete optimization problems [27]. Since the problem is \( { \mathcal{NP} } \)-hard in general, there has been an incredible amount of research done in which heuristic techniques have been applied. Before we present the new heuristic approach, we review some of the prior work that has been done.

Review of Solution Approaches

There have been many semidefinite and continuous relaxations based on this formulation. This was first shown by Lovász in [28]. In 1995, Goemans and Williamson [19] used a semidefinite relaxation to achieve an approximation ratio of .87856. This implication of this work is significant for two reasons. The first is of course, the drastic improvement of the best known approximation ratio for max-cut of 0.5 which had not been improved in over 20 years [36]. Secondly, and perhaps more significantly is that until 1995, research on approximation algorithms for nonlinear programming problems did not receive much attention. Motivated by the work of Goemans and Williamson, semidefinite programming techniques were applied to an assortment of combinatorial optimization problems successfully yielding the best known approximation algorithms for graph coloring [7,26], betweenness [10], maximum satisfiability [13,19], and maximum stable set [2], to name a few [29].

As noted in [16], the use of interior point methods for solving the semidefinite programming relaxation have proven to be very efficient. This is because methods such as the one proposed by Benson, Ye, and Zhang in [6] exploit the combinatorial structure of the relaxed problem. Other algorithms based on the nonlinear semidefinite relaxation include the work of Helmberg and Rendl [22] and Homer and Peinado [24].

The work of Burer et al. in [8] describes the implementation of a rank‑2 relaxation heuristic dubbed circut. This software package was shown to compute better solutions than the randomized heuristic of Goemans and Williamson, in general [16]. In a recent paper dating from 2002, Festa, Pardalos, Resende, and Ribeiro [16] implement and test six randomized heuristics for max-cut. These include variants of Greedy Randomized Adaptive Search Procedures (GRASP), Variable Neighborhood Search, and path‐relinking algorithms [35]. Their efforts resulted in improving the best known solutions for several graphs and quickly producing solutions that compare favorably with the method of Goemans and Williamson [19] and circut [8]. For several sparse instances, the randomized heuristics presented in [16] outperformed circut.

In [25], Butenko et al. derive a “worst-out” heuristic having an approximation ratio of at least 1/3 which they refer to as the edge contraction method . The also present a computational analysis of several greedy construction heuristics for max-cut based on variations of the 0.5‑approximation algorithm of Sahni and Gonzalez [36]. With this, we now move on and describe the implementation of a new heuristic for max-cut based on the new metaheuristic Continuous GRASP [23].

C-GRASP Heuristic

The Continuous Greedy Randomized Adaptive Search Procedure (C-GRASP) is a new metaheuristic for continuous global optimization [23]. The method is an extension of the widely known discrete optimization algorithm Greedy Randomized Adaptive Search Procedure (GRASP) [15]. Preliminary results are quite promising, indicating that C-GRASP is able to quickly converge to the global optimum on standard benchmark test functions. The traditional GRASP is a two-phase procedure which generates solutions through the controlled use of random sampling, greedy selection, and local search. For a given problem Π, let F be the set of feasible solutions for Π. Each solution \( { X \in F } \) is composed of k discrete components \( { a_{1}, \ldots, a_{k} } \). GRASP constructs a sequence {X} i of solutions for Π, such that each \( { X_{i} \in F } \). The algorithm returns the best solution found after all iterations. The GRASP procedure can be described as in the pseudo-code provided in Fig. 1. The construction phase receives as parameters an instance of the problem G, a ranking function \( { g\colon A(X)\mapsto \mathbb{R} } \) (where A(X) is the domain of feasible components \( { a_1, \ldots,a_k } \) for a partial solution X), and a parameter \( { 0<\alpha<1 } \). The construction phase begins with an empty partial solution X. Assuming that \( { |A(X)|=k } \), the algorithm creates a list of the best ranked \( { \alpha k } \) components in A(X), and returns a uniformly chosen element x from this list. The current partial solution is augmented to include x, and the procedure is repeated until the solution is feasible, i. e., until \( { X\in F } \).

The intensification phase consists of the implementation of a hill-climbing procedure. Given a solution \( { X\in F } \), let N(X) be the set of solutions that can found from X by changing one of the components \( { a\in X } \). Then, N(X) is called the neighborhood of X. The improvement algorithm consists of finding, at each step, the element X ∗ such that

where \( { f\colon F\mapsto \mathbb{R} } \) is the objective function of the problem. At the end of each step we make the assignment \( { X^\ast\gets X } \) if \( { f(X)>f(X^\ast) } \). The algorithm will eventually achieve a local optimum, in which case the solution \( { X^\ast } \) is such that \( { f(X^\ast)\ge f(X^\prime) } \) for all \( { X^\prime \in N(X^\ast) } \). X ∗ is returned as the best solution from the iteration and the best solution from all iterations is returned as the overall GRASP solution. GRASP has been applied to many discrete problems with excellent results. For an annotated bibliography of GRASP applications, the reader is referred to the work of Festa and Resende in [17].

C-GRASP pseudo-code adapted from [23]

Like GRASP, the C-GRASP framework is a multi-start procedure consisting of a construction phase and a local search [14]. Specifically, C-GRASP is designed to solve continuous problems subject to box constraints. The feasible domain is given as the n-dimensional rectangle \( S := \{x = (x_{1}, x_{2}, \ldots, x_{n}) \in \mathbb{R}^{n}\colon l \leq x \leq u \} \), where \( l,u \in \mathbb{R}^{n} \) are such that \( l_{i} \leq u_{i} \), for \( { i = 1,2, \ldots,n } \). Pseudo-code for the basic C-GRASP is provided in Fig. 2. Notice that the algorithm takes as input the dimension n, upper and lower bounds l and u, the objective function f, and parameters MaxIters, MaxNumIterNoImprov, NumTimesToRun, MaxDirToTry, and a number \( { \alpha \in (0,1) } \).

To begin with, the optimal objective function value f ∗ is initialized to \( { -\infty } \). The procedure then enters the main body of the algorithm in the for loop from lines 2–21. The value NumTimesToRun is the total number of C-GRASP iterations that will be performed. To begin with, more initialization takes place as the current solution x is initialized as a random point inside the hyperrectangle, which is generated according to a function UnifRand \( { ([l,u)) } \) which is uniform onto [l,u)Footnote 2. Furthermore, the parameter which controls the discretization of the search space, h, is set to 1. Next, the construction phase and local search phases are entered. In line 9, the new solution is compared to the current best solution. If the objective function value corresponding to the current solution dominates the incumbent, then the current solution replaces the incumbent and NumIterNoImprov is set to 0. This parameter controls when the discretization measure h is reduced. That is, after a total of MaxNumIterNoImprov iterations occur in which no solution better than the current best solution is found, h is set to h/2 and the loop returns to line 6. By adjusting the value of h, the algorithm is able to locate general areas of the search space which contain high quality solutions, and then narrow down the search in those particular regions. The best solution after a total of NumTimesToRun iterations is returned as the best solution.

The construction phase of the C-GRASP takes as input the randomly generated solution \( { x \in S } \) (see Fig. 2, line 3). Beginning with all coordinates unfixed, the method then performs a line search on each unfixed coordinate direction of x holding the other \( { n-1 } \) directions constant. The objective function values resulting from the line search solution for each coordinate direction are stored in a vector, say V. An element \( { v_{i} \in V } \) is then selected uniformly at random from the maximum \( { (1-\alpha)100\% } \) elements of V, and the v i coordinate direction is fixed. This process repeats until all n coordinates of x have been fixed. The resulting solution is returned as the C-GRASP solution from the current iteration. For a slightly more detailed explanation of this procedure, the reader is referred to [23].

As for the local search phase, this procedure simulates the role of calculating the gradient of the objective function \( { f(\cdot) } \). As mentioned earlier, gradients are not used in C-GRASP because oftentimes, they are difficult to compute and result in slow computation times. Therefore, the gradient is approximated as follows. Given the construction phase solution x, the local search generates a set of directions and determines in which direction (if any) the objective function improves.

The directions are calculated according to a bijective function T which maps the interval of integers \( { [1,3^{n}) \cap \mathbb{Z} } \) onto their balanced ternary representation. Recall that n is the dimension of the problem under consideration. That is, \( { T\colon [1,3^{n}) \cap \mathbb{Z} \mapsto \{-1,0,1\}^{\mathbb{N}} } \). Clearly, as \( { n \rightarrow \infty } \), the number of search directions grows exponentially. Therefore, only MaxDirToTry directions are generatedFootnote 3 and tested on the current solution. For each direction d, the point \( { \hat{x} := x + hd } \) is constructed and \( { f(\hat{x}) } \) is computed. Recall that h is the parameter which controls the density of the search space discretization. If the constructed point \( { \hat{x} \in S } \) has a more favorable objective value than the current point x, then \( { \hat{x} } \) replaces x, and the process continues. The phase terminates when a locally optimal point \( { x^\ast \in S } \) is found. The point x ∗ is said to be locally optimal if \( f(x^\ast) \ge f(x^\ast + hd) \forall d \in \{1,2, \ldots, \texttt{MaxDirToTry}\} \). Again, for a slightly more in depth description of this procedure, the reader should see the paper by Hirsch et al. [23].

Computational Results

The proposed procedure was implemented in the C++ programming language and complied using Microsoft® Visual C++ 6.0. It was tested on a PC equipped with a 1700 MHz Intel® Pentium® M processor and 1 GB of RAM operating under the Microsoft® Windows® XP environment. The C-GRASP parameters used are provided in Table 1. First, we tested the C-GRASP on 10 instances produced by the Balasundarm–Butenko problem generator in [3]. Though these problems are relatively small, they have proven themselves to be quite formidable against the Multilevel Coordinate Search (MCS) black-box optimization algorithm. We also tested the C-GRASP on 12 instances from the TSPLIB [34] collection of test problems for the traveling salesman problem. These problems are also used as benchmark problems for testing max-cut heuristics [19].

For further comparison, all instances were tested using the rank-2 relaxation heuristic circut [8], as well as with a simple 2-exchange local search heuristic which is outlined in the pseudo-code provided in Fig. 3. The method receives as input a parameter MaxIter indicating the maximum number of iterations to be performed and \( { G = (V,E) } \) the instance of the problem whereupon a maximum spanning tree is found using Kruskal's algorithm [1]. The spanning tree, due to its natural bipartite structure provides a feasible solution to which a swap-based local improvement method is applied in line 5. The local improvement works as follows. For all pairs of vertices (u,v) such that \( { u \in S } \) and \( { v \in \bar{S} } \), a swap is performed. That is, we place \( { u \in \bar{S} } \) and \( { v \in S } \). If the objection function is improved, the swap is kept; otherwise, we undo the swap and examine the next (u,v) pair. The local search was tested on the same PC as the C-GRASP. The circut heuristic was compiled using Compaq® Visual Fortran on a PC equipped with a 3.60 GHz Intel® Xeon® processor and 3.0 GB of RAM operating under the Windows® XP environement.

Table 2 provides computational results of the algorithms on the 10 Balasundarum–Butenko instances from [3]. The first three columns provide the instance name, the number of vertices and the optimal solution. The solutions from the heuristics are provided next. The solutions from the Multilevel Coordinate Search algorithm were provided in [3]. For all of these instances, the time required by the C-GRASP, circut, and the local search to find their best solutions was fractions of a second. Computing times were not listed for the MCS algorithm in [3]. Notice that the 2-exchange local search computed optimal solutions for each of these instances, followed closely by circut which found optimal cuts for all but one problem. As for the continuous heuristics, the C-GRASP found optimal solutions for 5 of the 10 instances while the MCS procedure produced optimal cuts for only 1 instance. For the 5 instances where C-GRASP produced suboptimal solutions, the average deviation from the optimum was 3.54%.

Table 3 shows results of the C-GRASP, local search, and circut heuristics when applied to 12 instances from the TSPLIB collection of test problems for the traveling salesman problem [34]. The first two columns provide the instance name and the size of the vertex set | V |. Next the solutions are provided along with the associated computing time required by the respective heuristic. Notice that for all 12 instances, the three heuristics all found the same solutions. Notice that in terms of computation time, the simplest heuristic, the 2-exchange local search seems to be the best performing of the three methods tested. The rank-2 relaxation algorithm circut is also very fast requiring only 2.99 s on average to compute the solution. On the other hand, the C-GRASP method did not scale as well as the others. We see that there is a drastic increase in the solution time as the number of vertices increases beyond 48.

This is not particularly surprising. The philosophical reasoning behind the slow computation time of the C-GRASP relative to the discrete heuristics being that the C-GRASP is a black-box method and does not take into account any information about the problem other than the objective function. To the contrary, the local search and circut specifically exploit the combinatorial structure of the underlying problem. This allows them to quickly calculate high quality solutions.

Conclusions

In this paper, we implemented a new metaheuristic for the maximum cut problem. In particular, we proposed the use of a continuous greedy randomized adaptive search procedure (C-GRASP) [23], for a continuous formulation of the problem. To our knowledge, this is the first application of C-GRASP to continuous formulations of discrete optimization problems. Numerical results indicate that the procedure is able to compute optimal solutions for problems of relatively small size. However, the method becomes inefficient on problems approaching 100 nodes. The main reason for this is the fact that C-GRASP is a black-box method, in that it does not take advantage of any information about the problem structure. Recall that the only input to the method is some mechanism to compute the objective function. A natural extension of the work presented here is to enhance the C-GRASP framework to take advantage of the structure of the problem at hand. Using a priori information about the problem being considered, one could modify the algorithm to include these properties which would presumably reduce the required computation time.

See also

Combinatorial Test Problems and Problem Generators

Continuous Global Optimization: Models, Algorithms and Software

Derivative-Free Methods for Non-smooth Optimization

Greedy Randomized Adaptive Search Procedures

NP-complete Problems and Proof Methodology

Quadratic Integer Programming: Complexity and Equivalent Forms

Semidefinite Programming: Optimality Conditions and Stability

Semidefinite Programming and the Sensor Network Localization Problem, SNLP

Notes

- 1.

Notice that the result holds even if (without the loss of generality) \( { V_{1} = V } \) and \( { V_{2} = \emptyset } \). In this case, a cut induced by \( { (V_{1},V_{2}) } \) will be a maximum cut if \( { w_{ij} \le 0, \quad\forall i,j \in V } \).

- 2.

This is the “typical” definition of a Uniform distribution. That is, \( { P\,\colon X \mapsto \mathbb{R} } \) is uniform onto [A,B), if, for any subinterval \( { I \subset [A,B) } \), the measure of P −1(I) equals the length of I.

- 3.

uniformly at random

References

Ahuja RK, Magnanti TL, Orlin JB (1993) Network Flows: Theory, Algorithms, and Applications. Prentice-Hall

Alon N, Kahale N (1995) Approximating the independence number via the θ-function. Technical report. Tel Aviv University, Tel Aviv, Israel

Balasundaram B, Butenko S (2005) Constructing test functions for global optimization using continuous formulations of graph problems. J Optim Method Softw 20(4–5):439–452

Barahona F (1983) The max-cut problem in graphs is not contractible to k 5. Oper Res Lett 2:107–111

Barahona F, Grötschel M, Jünger M, Reinelt G (1998) An application of combinatorial optimization to statisical physics and circuit layout design. Oper Res 36:493–513

Benso S, Ye Y, Zhang X (2000) Solving large-scale sparse semidefinite programs for combinatorial optimization. SIAM J Optim 10:443–461

Blum A (1994) New approximation algorithms for graph coloring. J ACM 41(3):470–516

Burer S, Monteiro RDC, Zhang Y (2001) Rank-two relaxation heuristics for max-cut and other binary quadratic programs. SIAM J Optim 12:503–521

Chang KC, Du D-Z (1987) Efficient algorithms for layer assignment problems. IEEE Trans Computer-Aided Des 6:67–78

Chor B, Sudan M (1995) A geometric approach to betweenness. In: Proc. of the 3rd Annual European Symposium Algorithms. pp 227–237

de la Vega WF (1996) max-cut has a randomized approximation scheme in dense graphs. Random Struct Algoritm 8(3):187–198

Deza M, Laurent M (1997) Geometry of cuts and metrics. Springer-Verlag

Feige U, Goemans MX (1995) Approximating the value of two prover proof systems, with applications to max 2sat and max dicut. In: Proc. of the 3rd Israel Symposium on Theory of Computing and Systems. pp 182–189

Feo TA, Resende MGC (1989) A probabilistic heuristic for a computationaly difficult set covering problem. Oper Res Lett 8:67–71

Feo TA, Resende MGC (1995) Greedy randomized adaptive search procedures. J Glob Optim 6:109–133

Festa P, Pardalos PM, Resende MGC, Ribeiro CC (2002) Randomized heuristics for the max-cut problem. Optim Method Softw 7:1033–1058

Festa P, Resende MGC (2002) grasp: an annotated bibliography. In: Ribeiro C, Hansen P (eds) Essays and surveys in metaheuristics. Kluwer, pp 325–367

Garey MR, Johnson DS (1979) Computers and intractability: a guide to the theory of NP-completeness. W.H. Freeman and Company

Goemans M, Williamson DP (1995) Improved approximation algorithms for max-cut and satisfiability problems using semidefinite programming. J ACM 42:1115–1145

Grotschel M, Pulleyblank WR (1981) Weakly bipartite graphs and the max-cut problem. Oper Res Lett 1:23–27

Hager WW, Krylyuk Y (1999) Graph partitioning and continuous quadratic programming. SIAM J Discret Math 12:500–523

Helmberg C, Rendl F (2000) A spectral bundle method for semidefinite programming. SIAM J Optim 10:673–696

Hirsch MJ, Pardalos PM, Resende MGC (2006) Global optimization by continuous GRASP. Optim Lett (in press)

Homer S, Peinado M (1997) Two distributed memory parallel approximation algorithms for Max-Cut. J Parallel Distrib Comput 1:48–61

Kahruman-Anderoglu S, Kolotoglu E, Butenko S, Hicks IV (2007) On greedy construction heuristics for the max-cut problem. Int J Comput Sci Eng (in press)

Karger D, Motwani R, Sudan M (1994) Approximate graph coloring by semidefinite programming. In: 35th Annual Symposium on Foundations of Computer Science. pp 2–13

Karp RM (1972) Reducability among combinatorial problems. In: Miller R, Thatcher J (eds) Complexity of computer computations. Plenum Press, pp 85–103

Lovász L (1979) On the shannon capacity of a graph. IEEE Trans Inf Theory IT-25(1):1–7

Lu H-I (1996) Efficient approximation algorithms for some semidefinite programs. Ph.d. dissertation, Brown University

Papadimitriou CH (1994) Computational complexity. Addison Wesley Longman

Papadimitriou CH, Yannakakis M (1991) Optimization, approximation, and complexity classes. J Comput Syst Sci 43(3):425–440

Pinter RY (1984) Optimal layer assignment for interconnect. J VLSI Comput Syst 1:123–137

Poljak S, Tuza Z (1995) The max-cut problem: a survey. In: Cook W, Lovász L, Seymour P (eds) Special year in combinatorial optimization. DIMACS Series in discrete mathematics and computer science. American Mathematical Society

Reinelt G (1991) TSPLIB - a traveling salesman problem library. ORSA J Comput 3:376–384

Resende MGC, Ribeiro CC (2003) Greedy randomized adaptive search procedures. In: Glover F, Kochenberger G (eds) Handbook of metaheuristics. Kluwer, pp 219–249

Sahni S, Gonzalez T (1976) P-complete approximation problems. J ACM 23(3):555–565

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2008 Springer-Verlag

About this entry

Cite this entry

Commander, C. (2008). Maximum Cut Problem, MAX-CUT . In: Floudas, C., Pardalos, P. (eds) Encyclopedia of Optimization. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-74759-0_358

Download citation

DOI: https://doi.org/10.1007/978-0-387-74759-0_358

Publisher Name: Springer, Boston, MA

Print ISBN: 978-0-387-74758-3

Online ISBN: 978-0-387-74759-0

eBook Packages: Mathematics and StatisticsReference Module Computer Science and Engineering