Abstract

Aspect Emotion Triplet Extraction (ASTE) is a vital branch in the field of NLP that strives to identify triads consisting of aspect words, opinion words, and emotional polarity from sentences. Each triplet contains three elements, namely, aspect words, opinion words and their emotional polarity. How to effectively extract attribute and opinion words and detect the connection between them is extremely important. Early work often focused on only one of these tasks and could not extract triples simultaneously in the same framework. Recently, researchers proposed using the bidirectional machine reading comprehension model (BMRC) to obtain triples of aspects, opinions, and emotions in the same framework. However, the existing BMRC model ignored professional domain knowledge, and the equal treatment training ignored the special contribution of individual instances in sentences to sentences. To this end, this paper proposes a BMRC with knowledge enhancement, which integrates the knowledge of specialized fields into the model, strengthens the connection between specific fields and open fields, increases instance regularization, and pays attention to the contribution of individual instances to sentences. Our experiments on several benchmark data sets have shown that our model has reached the most advanced performance.

Supported by Hebei Provincial Department of Education Fund.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Fine-grained emotion analysis is an important branch of NLP. According to the existing fine-grained emotion analysis model, it aims to mine more detailed opinions and emotions in specific aspects. It includes many tasks: aspect terms (ATE) [4, 10, 13], viewpoint identification terms (OTE) [7, 14, 20], aspect-based sentiment analysis (ASA) [3, 4, 23], aspect - opinion extraction (AOE) [1, 28], etc. Nevertheless, the existing research generally deals with these tasks separately. It is not possible to extract the triple information of sentence, opinion and emotion on the same platform. To completely solve this problem, Peng [13] proposed aspect emotion triple extraction (ATSE), which enables the model to carry out the triplet extraction of scheme opinions within the same framework.

Although these studies have made substantial headway in the extraction of emotional triplets, they still face many great challenges. First, in the BMRC model, we use the training model obtained from the large-scale open domain corpus, which is the general representation of knowledge, making the extraction and realization of emotional triplets in the professional field not good. Second, in the training phase of BMRC model in the past, training examples are often treated equally, and the special contribution of individual examples to sentences is rarely noticed. For example, the “not” in “this food is not delicious” is covered up, and the message of the broken sentence becomes opposite to the original sentence, resulting in the reduction of the accuracy and robustness of the model.

In response to the former problems, we propose a BMRC method based on knowledge enhancement and instance regularization. This task is transformed into a machine reading comprehension model. Through bidirectional complex queries, we can find the triple information of aspects, opinions and emotions of sentences. Different from the general BMRC model, in the pretraining stage, we used data sets that emphasize specific areas and integrated the knowledge map into the model so that the model can be equipped with some professional domain knowledge, strengthen the connection between specific areas and open areas, and diminish the differences between the pretraining model and the downstream task fine-tuning. In addition, we have added an automatic noise coder to the model to recover the damaged marks and calculate the difference between the damaged sentence and the original sentence to provide a clear regular signal to better focus on the contribution of individual instances to the sentence. The key points of our contributions are listed below:

-

The BMRC model incorporates a knowledge map to equip itself with domain-specific expertise, thus fortifying the linkage between particular domains and the broader open domain.

-

An automatic denoising encoder is added to the model to improve the model’s capacity to center on the contribution of individual instances to sentences and improve the robustness and prediction accuracy of the model.

-

Through many experiments on standard data sets, our model has achieved optimal results.

2 Related Work

2.1 Fine Grained Emotional Analysis

Traditional affective analysis tasks are text-level affective analysis tasks, sentence-level affective analysis tasks, and aspect-level affective analysis tasks. Among them, aspect-level affective analysis tasks are aspect-oriented or entity-oriented more fine-grained affective analysis tasks, mainly including aspect-class affective analysis (ACSA), opinion term extraction (AOE), aspect-specific sentiment recognition (ASR). These studies are mainly aimed at the fixed level, and some tasks are extracted separately, ignoring the dependency between different aspects. In an effort to explore the dependency between different tasks, some scholars are committed to coupling different subtasks and proposing effective models. For example, Wang et al. [20] proposed a multilayer attention-based deep learning model for extracting aspect and opinion terms, and [22] proposed a grid marking scheme (GTS), which extracts the emotional polarity of terms through a grid marking task to form opinions. Chen et al. [2] proposed a dual-channel recursive network to extract aspects and opinions. However, these methods cannot extract aspects, feelings and opinions within the same framework. To solve this problem, Peng [13] and others took the lead in transforming ASTE into a machine reading comprehension problem, using a two-stage framework to extract aspects, opinions, and emotions. Liu et al. [17] proposed a robust BMRC method to carry out ASTE tasks. Although these studies have made substantial progress, these methods do not fully consider prior knowledge and only obtain the general representation of knowledge, which makes some differences between the pretraining model and the fine-tuning of downstream tasks, making the extraction and realization of emotional triplets in professional fields not good. At the same time, the individual instances’ contribution to sentences is inadequate during model training.

2.2 Machine Reading Comprehension

Machine reading comprehension (MRC) aims to retrieve the target question in a designated corpus and find the corresponding answer. Over the last few years, many scholars have conducted studies on MRC so that it can better capture the required information from massive information. These MRC models fully learn the corpus and the semantic information of the problem and learn the interaction between the problem and the corpus through various models. For example, viswanatha et al. [18] used LSTM-based memory networks for encoding queries to solve long-distance dependency problems, while Yu et al. [26] also employed various self-attention mechanisms to fully obtain semantic associations between problems and dependencies.The introduction of the pretrained language model BERT has greatly promoted the development of MRC. By training with large-scale pretrained samples, it can better capture deeper semantic features and improve the performance of MRC models.

Over the last few years, MRC has shown a high application trend in many NLP tasks, such as Li [9] and Zhao [29], utilizing the advantages of MRC in semantic understanding and context inference for entity extraction and entity relationship extraction. Similarly, utilizing the advantages of MRC in semantic understanding and contextual inference, we naturally integrate ABSA tasks with machine reading comprehension, enabling a more accurate construction of the relationship between opinions and emotions.

3 Methodology

Problem Formulation

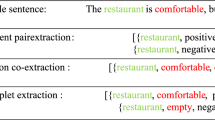

Given a sentence \(\textrm{S}=\left\{ \textrm{x}_1, \textrm{x}_2, \ldots \textrm{x}_{\textrm{n}}\right\} \), where n denotes the word count. In the ASTE task, its goal is to identify the triad collection of aspects, opinions and emotions \(T=\left\{ \left( a_i, o_i, s_i\right) \right\} _{i=1}^{| T |}\), where \(a_i, o_i\) and \(s_i\) represent the ith aspect, opinion and emotion in the sentence,respectively, as shown in Fig. 1.

3.1 BMRC

BMRC performs unrestricted and restrictive queries based on the context and outputs the answers required by the model. Because each triad of ASTE tasks is activated by one aspector opinion, we construct a bidirectional reading comprehension model, carry out unrestricted queries in one direction, extract the corresponding aspect or opinion, carry out restricted queries in two directions, extract the corresponding aspect of each aspect or opinion-opinion pair, and finally classify the emotion of the corresponding opinion of each aspect through a separate emotion classifier to obtain the final aspect-opinion-emotion triple answer. In addition, we improved the model through BERT in the BMRC model. By integrating the knowledge map into the model, we can equip the model with some professional domain knowledge, strengthen the connection between specific domains and open domains, and make the model have richer semantic expression. In the stage of model building, we can restore the damaged mark by adding an automatic denoising encoder and evaluate the distinction between the damaged sentence and the original sentence. To provide clear regular signals, we can model the signals clearly provided by the example contribution and enhance the confidence of model prediction.

Nonrestrictive Query: We query the collection of aspects or opinions contained in the context in one direction (forward or backwards). We extract the collection of aspects \(\textrm{A}=\{\textrm{a}_1, \textrm{a}_2, \ldots , \mathrm {a_n}\}\) by designing the query ”What aspects”; here, n denotes the quantity of aspect words extracted, a represents the nth aspect word in the sentence, and we extract the collection of opinions \(\textrm{O}=\{\textrm{o}_1, \textrm{o} _2, \ldots , \mathrm {o_n}\}\) by designing the query “What options”, where n represents the number of opinion words extracted, and on represents the nth opinion word in the sentence.

For Restricted Queries: We extract the corresponding opinions or aspects according to the aspects or opinions extracted from nonrestricted queries in one direction (forward or backwards). For example, we extract the corresponding opinions according to the aspects by designing the restricted query “What opinions give the aspect?” \(\textrm{O}=\{\textrm{o}_1, \textrm{o}_2, \ldots , \mathrm {o_n}\}\), we design the restricted query “What aspect gives the opinions” to extract the corresponding opinions \(\textrm{A}=\{\textrm{a}_1, \textrm{a}_2, \ldots , \mathrm {a_n}\}\) according to the aspects.

Emotion classification: After determining the aspect - opinion contained in the context through restricted query, we design an emotion classifier to classify the emotion corresponding to the aspect, and finally construct a triplet based on the queried aspect, opinion, and emotion. The whole process is shown in Fig. 2.

Coding Layer of Knowledge Map Fusion

Given a sentence \(S=\{\textrm{x}_1, \textrm{x}_2 \ldots \textrm{x}_n\}\), which contains n tokens, add special tags [CLS: classifier] and [SEP: separator], and give a restrictive query or nonrestrictive query \(\textrm{Q}=\{\textrm{q}_1, \textrm{q}_2 \ldots \mathrm {q_n}\}\), and combine the specially marked sentences and query statements to obtain \(\textrm{X}=\{[\textrm{CLS}], \textrm{q}_1, \textrm{q}_2 \ldots \mathrm {q_n},[\textrm{SEP}], \textrm{x}_1, \textrm{x}_2 \ldots \textrm{x}_n\}\). The coding layer uses BERT to input X as the most input to obtain the context representation of each token. However, the original BERT only obtains general language features from the open domain without considering the specific domain knowledge, which makes the obtained features deviate from the meaning of the sentence, producing knowledge noise and ultimately affecting the accuracy of the answer prediction.

To alleviate knowledge noise, we incorporate domain knowledge through a knowledge map into our model. This helps establish a stronger link between specific and general domains, significantly reduces knowledge noise, and enhances the model’s interpretability. Moreover, the use of a knowledge map can reduce the pretraining cost of the model. As shown in Fig. 3, the coding layer of the original sentence fusion knowledge map consists of four layers, namely, the sentence layer, subembedding layer, position embedding layer and mark embedding layer. We first transform the sentence using a knowledge map to make it a sentence tree rich in knowledge. In addition, to preserve the correct word order of the sentence and contain professional domain information, we use MASK to shield the branches of the sentence tree when embedding the position so that the position is consistent with the original sentence position during the computation of attention weights, but the information of the sentence tree is restored in the token embedding layer. The advantage of doing so is that the sentence does not change its original structure while acquiring rich knowledge and reducing the risk of knowledge. Finally, the generated sentence tree is added with subembedding, position embedding and tag embedding, and finally, each tag is converted into a vector of size H.

Instance Regularization

Although many discriminative language pretraining models (BERT) have made remarkable achievements in NLP, these language pretraining models often adopt the strategy of shielding language (MLM) and achieve noise reduction and denoising by inserting, deleting, replacing and replacing the tags in sentences through tags (such as masks) [8, 19, 24]. However, during the training phase, these strategies treat each instance equally. We did not notice the special contribution of some specific examples to sentences. For example, after the “not” in the sentence “this movie is not good” is shielded, the sentence will have the opposite meaning. Therefore, we added instance regularization in the pretraining stage of the BMRC model and computed the discrepancy between the damaged sentence and the original sentence to obtain a clear regularization signal to improve the robustness of the model. As shown in Fig. 4, we first input the original sequence W, the destroyed sentence sequence W2, and the predicted sequence P into the encoder, and then obtain the corresponding hidden states H, \(\widehat{H}\), \(\widetilde{H}\) by learning different weights. Then, we calculate the distribution difference between the damaged sentence and the original sentence and between the predicted sentence and the original sentence through formula (1) and formula (2). Generally, \(l_1\) and \(l_2\) The larger the size, the higher the mismatch between the damaged sentence sequence, the predicted sentence sequence and the original sentence sequence, which makes the model more significantly update those “more difficult” training examples. KL is the Kullback-Leibler divergence. Finally, we use formula (3) as the loss function, where \(l_3\) is the loss of the MLM model after denoising.

4 Model Algorithm

First, input the given sentence x, and then input the pretrained knowledge graph-based model encoder to obtain the word vector \(\{\textrm{w} 1, \textrm{w} 2 \ldots \textrm{wn}\}\). Then, perform a non restrictive query to obtain the matching aspect and opinion for the given problem, and then perform a restrictive query to extract aspect-opinion pairs. Finally, a sentiment classifier is used to determine the emotional polarity of the matching opinion for the aspect. The entire process will be iterated multiple times until the specified number of training rounds is reached. The specific implementation algorithm is shown in Algorithm 1.

5 Experiment

Data Sets

To validate the efficacy of the BMRC based on knowledge enhancement and case regularization, we will conduct experiments on four benchmark data sets extracted from the SemEval [15] shared task.

Baselines

-

TWSP [13] is a model that divides triplet extraction into two stages. The model first extracts the attributes and views of sentences by constructing a classifier, then calculates the emotional polarity of the views through a multilayer perceptron, and pairs them with the results of the first stage to form a triplet.

-

OTE-MTL [27] is an aspect-level emotion analysis model based on a multitask learning framework. This model first detects the relationship between attributes, opinions and emotions in sentences through LSTM in the prediction phase and then performs reverse traversal of tags to finally achieve the task of triple group extraction.

-

DGEIAN [16] is an aspect-based opinion mining model based on graph-enhanced interactive attention mechanism networks. This model learns more grammatical and semantic dependencies from sentences by designing an interactive attention mechanism and then generates the final triplet through GTS.

Experimental Settings

During the experiment, we used a computer equipped with an Intel(R) Xeon(R) Platinum 8358P CPU and NVIDIA GeForce RTX 3090 graphics card and developed the model using the PyTorch framework. Through a copious number of experiments, we optimized various hyperparameters of the model to obtain the best effect. The specific software and hardware environment is shown in Table 1. See Table 2 for specific hyperparameter settings.

Evaluation Metrics

This article uses accuracy, recall rate, and F1 score as evaluation indicators. These indicators are mainly based on the mixed calculation of indicators in the confusion matrix, where TP denotes the count of positive samples successfully predicted, FP denotes the count of service samples that failed to predict, FN denotes the count of positive samples that failed to predict, and TN denotes the count of negative samples successfully predicted. According to the confusion matrix, the accuracy rates P, R, and F1 can be calculated. The calculation formulas are shown in (4)–(6):

6 Results

The results of our experiments on four benchmark data sets are shown in Table 3. According to the results, our model achieves optimal results on all four benchmark data sets, which indicates that our improvement further improves BMRC’s performance in processing ASTE tasks. On the four benchmark data sets of ASTE-Data, the F1 score of our model is 0.85, 2.71, 5.81 and 0.76 higher than the most advanced BMRC at present. This shows that the effect of our improvement is very significant.

7 Ablation Experiments

First, the model is tested without improving the benchmark data set. The model is based on the representation of BMRC, and then the model introduced into the knowledge map and the example regularization method are gradually superimposed for ablation experiments, and the following questions are answered:

-

How much improvement can the introduction of knowledge graphs and instantiation regularization bring?

-

After introducing knowledge graph and instantiation regularization, in which aspect do we mention the effectiveness?

Experimental Design: Knowledge Augmentation Ablation Experiment Based on Knowledge Graph: Only the original BMRC model was used for the emotion triplet extraction task and compared with the complete BMRC model with knowledge augmentation. At the same time, experiments were repeated on four data sets: 14-Res, 14-Lap, 15-Res, and 16-Res. The prediction accuracy of the model’s aspect extraction, opinion extraction, aspect-opinion pair extraction, and aspect-opinion-sentiment triplet extraction on each data set was recorded, and the impact of knowledge augmentation on model prediction accuracy and generalization was analysed.

Case Regularization Ablation Experiment: Only the original BMRC model is used for the emotion triplet extraction task and compared with the BMRC model added with case regularization. At the same time, experiments are repeated on four data sets, 14-Res, 14-Lap, 15-Res, and 16-Res, and the prediction accuracy of the model with respect to extraction, opinion extraction, aspect-opinion pair extraction, aspect-opinion emotion triplet extraction and other tasks on each data set is recorded. The influence of example regularization on the prediction accuracy and generalization of the model is analysed.

Joint Ablation Experiment of Knowledge Enhancement and Instance Regularization Based on Knowledge Graph: Only the original BMRC model is used for the emotion triple extraction task and compared with the complete experiment of introducing the joint ablation experiment of knowledge enhancement and instance regularization. At the same time, experiments are repeated on four data sets, namely, 14-Res, 14-Lap, 15-Res, and 16-Res, and the model’s aspect extraction, opinion extraction, and aspect-opinion pair extraction on each data set are recorded. The prediction accuracy rate of aspect-opinion-sentiment triple extraction is analysed, and the impact of instance regularization on the prediction accuracy and generalization of the model is analysed.

Experimental Results: From Table 4, it can be perceived that the BMRC model that separately introduces knowledge enhancement based on knowledge graph and instance regularization has obvious improvement in aspect extraction, opinion extraction, aspect-opinion extraction, and aspect-opinion-sentiment triplet extraction tasks, but the BMRC model that only introduces knowledge enhancement based on knowledge graph has better effect in aspect extraction than the BMRC model that only introduces instance regularization, The BMRC model with knowledge enhancement based on knowledge graph is 2.14% and 1.38% higher than the BMRC model with instance regularization in aspect extraction, and the BMRC model with instance regularization only is 0.79% higher in aspect-opinion pair than the BMRC model with knowledge enhancement based on knowledge graph only, which suggests that knowledge enhancement based on knowledge graphs primarily impacts the aspect extraction and opinion extraction of BMRC in the nonrestricted query phase, while instance regularization primarily impacts the performance of restrictive phase aspect-opinion pair extraction. In addition, we can also see from Table 4 that the F1 value of the BMRC model combined with knowledge enhancement based on knowledge map and instance regularization in various tasks is higher than that of the BMRC model that separately introduces knowledge enhancement based on knowledge map and instance regularization, which indicates that knowledge enhancement and instance regularization can complement each other and improve the performance of the model.

8 Conclusion

Based on the BMRC algorithm, this paper improves the triple extraction task of aspect emotion so that the model can effectively extract the triple of aspect, opinion and emotion in the context. With the aim of addressing the current challenges of the emotion triplet extraction task based on BMRC, we added a knowledge map, instance regularization and other measures to improve it. This method can effectively deal with complex ASTE tasks. Furthermore, our model’s effectiveness has been demonstrated through a multitude of ablation experiments.

References

Chakraborty, M., Kulkarni, A., Li, Q.: Open-domain aspect-opinion co-mining with double-layer span extraction. In: Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pp. 66–75 (2022)

Chen, S., Liu, J., Wang, Y., Zhang, W., Chi, Z.: Synchronous double-channel recurrent network for aspect-opinion pair extraction. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp. 6515–6524 (2020)

Chen, S., Wang, Y., Liu, J., Wang, Y.: Bidirectional machine reading comprehension for aspect sentiment triplet extraction. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 12666–12674 (2021)

Dehong, M., Li, S., Zhang, X., Wang, H.: Interactive attention networks for aspect-level sentiment classification. arXiv preprint arXiv:1709.00893 (2017)

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: Bert: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Jiang, Q., Chen, L., Xu, R., Ao, X., Yang, M.: A challenge dataset and effective models for aspect-based sentiment analysis. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pp. 6280–6285 (2019)

Karaoğlan, K.M., Fındık, O.: Extended rule-based opinion target extraction with a novel text pre-processing method and ensemble learning. Appl. Soft Comput. 118, 108524 (2022)

Lewis, M., et al.: Bart: denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv preprint arXiv:1910.13461 (2019)

Li, X., Feng, J., Meng, Y., Han, Q., Wu, F., Li, J.: A unified MRC framework for named entity recognition. arXiv preprint arXiv:1910.11476 (2019)

Liu, K., Xu, L., Zhao, J.: Opinion target extraction using word-based translation model. In: Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, pp. 1346–1356 (2012)

Liu, P., Joty, S., Meng, H.: Fine-grained opinion mining with recurrent neural networks and word embeddings. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pp. 1433–1443 (2015)

Mao, Y., Shen, Y., Yu, C., Cai, L.: A joint training dual-MRC framework for aspect based sentiment analysis. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 13543–13551 (2021)

Peng, H., Xu, L., Bing, L., Huang, F., Lu, W., Si, L.: Knowing what, how and why: a near complete solution for aspect-based sentiment analysis. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 8600–8607 (2020)

Pereg, O., Korat, D., Wasserblat, M.: Syntactically aware cross-domain aspect and opinion terms extraction. In: Proceedings of the 28th International Conference on Computational Linguistics, pp. 1772–1777 (2020)

Pontiki, M., et al.: Semeval-2016 task 5: aspect based sentiment analysis. In: ProWorkshop on Semantic Evaluation (SemEval-2016), pp. 19–30. Association for Computational Linguistics (2016)

Shi, L., Han, D., Han, J., Qiao, B., Wu, G.: Dependency graph enhanced interactive attention network for aspect sentiment triplet extraction. Neurocomputing 507, 315–324 (2022)

Shu, L., Li, K., Li, Z.: A robustly optimized BMRC for aspect sentiment triplet extraction. In: Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 272–278 (2022)

Viswanathan, S., Anand Kumar, M., Soman, K.P.: A sequence-based machine comprehension modeling using LSTM and GRU. In: Sridhar, V., Padma, M.C., Rao, K.A.R. (eds.) Emerging Research in Electronics, Computer Science and Technology. LNEE, vol. 545, pp. 47–55. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-5802-9_5

Wang, W., et al.: Structbert: incorporating language structures into pre-training for deep language understanding. arXiv preprint arXiv:1908.04577 (2019)

Wang, W., Pan, S.J., Dahlmeier, D., Xiao, X.: Coupled multi-layer attentions for co-extraction of aspect and opinion terms. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31 (2017)

Wu, S., Fei, H., Ren, Y., Ji, D., Li, J.: Learn from syntax: improving pair-wise aspect and opinion terms extractionwith rich syntactic knowledge. arXiv preprint arXiv:2105.02520 (2021)

Wu, Z., Ying, C., Zhao, F., Fan, Z., Dai, X., Xia, R.: Grid tagging scheme for aspect-oriented fine-grained opinion extraction. arXiv preprint arXiv:2010.04640 (2020)

Xu, L., Li, H., Lu, W., Bing, L.: Position-aware tagging for aspect sentiment triplet extraction. arXiv preprint arXiv:2010.02609 (2020)

Xu, Y., Zhao, H.: Dialogue-oriented pre-training. arXiv preprint arXiv:2106.00420 (2021)

Yan, H., Dai, J., Qiu, X., Zhang, Z., et al.: A unified generative framework for aspect-based sentiment analysis. arXiv preprint arXiv:2106.04300 (2021)

Yu, A.W., et al.: QANet: combining local convolution with global self-attention for reading comprehension. arXiv preprint arXiv:1804.09541 (2018)

Zhang, C., Li, Q., Song, D., Wang, B.: A multi-task learning framework for opinion triplet extraction. arXiv preprint arXiv:2010.01512 (2020)

Zhao, H., Huang, L., Zhang, R., Lu, Q., Xue, H.: SpanMLT: a span-based multi-task learning framework for pair-wise aspect and opinion terms extraction. In: Proceedings of the 58th annual meeting of the association for computational linguistics, pp. 3239–3248 (2020)

Zhao, T., Yan, Z., Cao, Y., Li, Z.: Asking effective and diverse questions: a machine reading comprehension based framework for joint entity-relation extraction. In: Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pp. 3948–3954 (2021)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Cheng, W., Liu, Y., Yin, Y. (2024). A BMRC Algorithm Based on Knowledge Enhancement and Case Regularization for Aspect Emotion Triplet Extraction. In: Zhang, M., Xu, B., Hu, F., Lin, J., Song, X., Lu, Z. (eds) Computer Applications. CCF NCCA 2023. Communications in Computer and Information Science, vol 1959. Springer, Singapore. https://doi.org/10.1007/978-981-99-8764-1_17

Download citation

DOI: https://doi.org/10.1007/978-981-99-8764-1_17

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8763-4

Online ISBN: 978-981-99-8764-1

eBook Packages: Computer ScienceComputer Science (R0)