Abstract

The human brain is a unique, sophisticated, and intricate structure. Neurodegeneration, the death of neurons, is the ultimate cause of brain atrophy and underlies multiple neurodegenerative diseases. Neuroimaging is the most critical method for detecting Alzheimer's disease (AD) and quantifying brain atrophy. Magnetic resonance imaging (MRI), computed tomography (CT), single-photon emission computed tomography (SPECT), and positron emission tomography (PET) are the most widely used neuroimaging techniques for imaging altered brain tissue and assessing the neurodegeneration associated with Alzheimer's. Traditionally, neuroradiologists combine clinically useful information and medical imaging data from various sources to relate structural changes, reductions in brain volume, or changes in patterns of brain activity. In recent years, machine learning and artificial intelligence-based approaches have garnered substantial interest in neurobiology and have emerged as powerful tools for efficiently predicting outcomes related to neurological and psychiatric disorders. Traditional machine learning algorithms, however, are limited with respect to data size and image feature extraction. To address these concerns, deep learning algorithms based on deep convolutional networks (DCN) and recurrent neural networks (RNN) have become more powerful paradigms for handling the complexity of multistate brain imaging data and for providing a better understanding of the mechanisms by which brain atrophy progresses in Alzheimer's disease. The aim of this study is to provide insight into the role of machine learning in AD detection using neuroimaging data.



1 Introduction

The introduction of artificial deep neural network innovations into healthcare could expand access to care, provide new insights into diseases, and lead to new discoveries. Conventional clinical diagnosis often relies on patterns visible in medical images. Over the past few years, there has been an exponential increase in the development and adoption of machine learning (ML) and deep learning (DL) algorithms for analysing dynamic neuroimaging data and helping physicians apply automatic neuropathology prediction and diagnosis strategies. Although machine learning remains one of the most popular methods for extracting features from brain images, deep learning methods are currently attracting tremendous attention in neuroimaging because they can automatically select, extract, and classify the essential anomalous features of brain scans. In this chapter, we provide a comprehensive review of the use of computer-aided diagnosis (CAD) systems for the early prediction of neurodegenerative disorders and the volumetric analysis of brain lesions using machine learning and deep learning algorithms.

1.1 Neurodegeneration

Neurodegeneration is an umbrella term for the progressive loss of structural or functional integrity of nerve cells, which leads to brain- and nerve-related pathologies, disrupts the nervous system, and causes neuronal diseases such as Alzheimer's disease (AD), Parkinson's disease (PD), Huntington's disease (HD), and amyotrophic lateral sclerosis (ALS) [1, 2]. Among these, AD is the leading cause of dementia, which is one of the most significant causes of disease burden worldwide, with an estimated 50 million people suffering from AD and related disorders. Neurodegenerative diseases are devastating and largely incurable conditions that are strongly linked with age and brain dysfunction [3].

Neurodegenerative diseases are multifactorial disorders caused by a combination of genetic, environmental, and lifestyle factors. Neurodegeneration is often associated with protein misfolding, which leads to cytotoxicity and disrupts cellular protein homeostasis. Recent studies have shown that the accumulation of abnormal or misfolded proteins such as amyloid-beta (Aβ), tau, and alpha-synuclein (α-synuclein), together with synaptic dysfunction, synaptic loss, and neuroinflammation, are common pathological processes in the major neurodegenerative diseases [4]. A combination of transcriptional errors, human gene mutations, and environmental factors is believed to be involved in their pathogenesis; in particular, certain risk-factor genes make us more vulnerable to neurodegenerative diseases. A study conducted by the University of Glasgow in collaboration with the MRC Protein Phosphorylation and Ubiquitination Unit at the University of Dundee revealed the role played by a gene called UBQLN2 [3]. The researchers found that the main role of UBQLN2 is to help cells get rid of toxic protein clumps, which arise as part of the natural aging process, and thereby protect the body from their harmful effects. However, when this gene is mutated or its protein product misfolds, it can no longer help cells dislodge these toxic clumps, leading to neurodegenerative diseases [5]. These misfolded or mutated proteins are also called brain-killers.

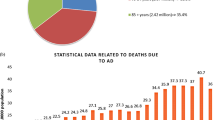

1.2 Consequences

Ageing is one of the major risk factors for most neurodegeneration-related abnormalities. Ten percent of people over the age of 60 are affected by neurodegenerative diseases, and as the population ages, the prevalence of neurological diseases continues to rise. The World Health Organization (WHO) estimates that 47.5 million people worldwide have dementia and that 7.7 million new cases are diagnosed each year. More than 30 million people are estimated to have Alzheimer's disease, and about one-fifth as many have Parkinson's disease. The number of people with AD is expected to increase to about 100 million by 2050, an alarming rise, and the cost of caring for Alzheimer's patients is expected to increase from $200 billion per year to $1.2 trillion by 2050 [6]. Treatment options for AD are limited, and there are currently no substantial options to reverse the progression of the disease.

1.3 Medical Imaging

Indeed, medical imaging has changed the face of medical diagnosis. In 1895, Wilhelm Conrad Röntgen, a professor at the University of Würzburg in Germany, changed the world by taking an X-ray of his wife Bertha's hand, and he was later awarded the Nobel Prize for his work [7]. This was the first time it was possible to see inside the human body. In the modern era, medical imaging is one of the most rapidly developing sources of medical data. Researchers and neuroscientists have begun to observe the inside of the brain in order to understand how it works. Medical imaging has revealed abnormalities affecting specific parts of the brain, such as the frontal and temporal lobes and the limbic system, making diagnosis easier and allowing treatment to begin earlier. Sometimes a disease can be revealed before symptoms are felt, and for many diseases, including cancer, the earlier the disease is diagnosed, the better the chances of survival. Doctors can use neuroimaging techniques such as MRI, CT, and diffusion-weighted imaging (DWI) to diagnose these debilitating brain conditions. Diffusion-weighted MRI can identify stroke lesions within minutes, and MRI provides better soft-tissue contrast than regular X-rays or CT scans. The radiological images used in routine examinations are generally stored and transferred in the DICOM (Digital Imaging and Communications in Medicine) and NIfTI (Neuroimaging Informatics Technology Initiative) formats [8].
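To make the NIfTI format mentioned above more concrete, the sketch below decodes a handful of fields from the fixed 348-byte NIfTI-1 header using only Python's standard `struct` module. The byte offsets follow our reading of the NIfTI-1 specification, and the function name `read_nifti1_header` is our own; in practice a maintained library such as nibabel would normally be used instead.

```python
import struct

def read_nifti1_header(raw: bytes) -> dict:
    """Decode a few fields of a NIfTI-1 header (the first 348 bytes of a .nii file)."""
    if len(raw) < 348:
        raise ValueError("a NIfTI-1 header is 348 bytes long")
    # sizeof_hdr (offset 0) must be 348; checking it in both byte orders reveals the endianness.
    for endian in ("<", ">"):
        if struct.unpack_from(endian + "i", raw, 0)[0] == 348:
            break
    else:
        raise ValueError("not a NIfTI-1 header")
    dim = struct.unpack_from(endian + "8h", raw, 40)            # dim[0] = number of dimensions
    datatype, bitpix = struct.unpack_from(endian + "2h", raw, 70)
    pixdim = struct.unpack_from(endian + "8f", raw, 76)         # pixdim[1..3] = voxel size (usually mm)
    vox_offset = struct.unpack_from(endian + "f", raw, 108)[0]  # byte offset where image data start
    ndim = dim[0]
    return {
        "shape": dim[1:1 + ndim],
        "voxel_size": pixdim[1:1 + ndim],
        "datatype": datatype,
        "bitpix": bitpix,
        "vox_offset": vox_offset,
        "single_file": raw[344:348] == b"n+1\x00",              # "ni1\0" would mark a .hdr/.img pair
    }
```

The voxel size recovered this way is the information that the resampling step in Sect. 4.1 depends on.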

1.4 Rise in the Use of Artificial Intelligence (AI) in Healthcare for Computer-Aided Diagnosis (CAD) Using ML and DL Based Neuroimaging

The world is changing, and one of the changes that will shape our future is artificial intelligence (AI), which is transforming many aspects of human life. The previous machine revolution expanded the mechanical power of humans; in this new machine revolution, human mental power is being amplified as well. Computers are set to take over not only physical labor but also mental labor. Machine learning algorithms enable computers to become smarter by learning skills from past experience and data. Machine learning has become key to equipping computers with knowledge, and knowledge is what enables brainpower [9]. Deep learning is based on neural networks, an approach to machine learning inspired by the human brain, and came to prominence in 2012 with neural network-based speech recognition on mobile phones. Since then, breakthroughs have followed in computer vision [10]. Computers can now recognize the patterns and contents of images remarkably well, and as a result they play an increasingly important role in diagnosis from medical images [11, 12]. If neurologists could predict neurodegeneration years in advance, what would change? How can artificial deep neural networks help scientists achieve this goal? In recent years, medical image analysis has provided techniques for recognizing patterns in neuroimages and for studying brain connectivity and its pathological changes, supporting better diagnostic systems.

The review is organized as follows. General background on Alzheimer's disease, its causes and consequences, is given in Sect. 2. The medical imaging modalities used in diagnostic approaches for Alzheimer's disease are discussed in Sect. 3. Section 4 discusses the use of AI in computer-aided diagnosis, covering various machine learning and deep learning approaches. Section 5 concludes the study.

2 Alzheimer’s Disease: Causes and Effects

AD is the most common cause of cognitive decline. Alzheimer's disease can be sporadic or familial. The most important risk factor for sporadic AD is aging [13]. Apart from age, another important risk factor is the presence of the apolipoprotein E4 (ApoE4) allele, which has been associated with an increased risk of AD. By contrast, apolipoprotein E2 (ApoE2) is a protective variant that decreases the risk of AD [14]. Individuals harbouring ApoE4 show an earlier onset of AD. Among familial factors, there is a very strong association with Down syndrome (trisomy 21). Other genes associated with the development of AD include the presenilin-1 gene, located on chromosome 14, and the presenilin-2 gene, located on chromosome 1. Of all these factors, age is the most important for the development of AD [15]. To understand the pathophysiology of AD, it is necessary to understand how the secretase processing of the amyloid-β precursor protein (APP), an important cell surface receptor [16], can differ. There are two possible pathways. In the first, the enzyme α-secretase cleaves APP first, followed by γ-secretase; in this case APP [17] is degraded into a soluble peptide. In the second, β-secretase acts first, followed by γ-secretase, and APP forms an insoluble peptide [18] called amyloid-β peptide (Aβ). This Aβ peptide may form oligomers that aggregate in the cell.
Once aggregated, Aβ may form amyloid-β plaques, which are toxic to neurons. Tau protein is also implicated in AD. Tau normally attaches itself to microtubules and stabilizes the internal structure of nerve cells. When tau becomes hyperphosphorylated, however, it detaches from the microtubules and begins to aggregate in the cell, forming neurofibrillary tangles. APP is encoded by a gene on chromosome 21. Normal individuals have two copies of chromosome 21; people with three copies, a condition known as Down syndrome, tend to overproduce APP, which increases the likelihood of amyloid-β plaque formation. Consequently, many people with Down syndrome show clinical signs of AD later in life, and most develop this type of disease pathology by the age of 40. As AD progresses, neurons and neuronal networks disintegrate and many areas of the brain begin to shrink. The final stage of AD is marked by cerebral atrophy, in which brain volume is significantly reduced. Table 1 lists the causes and consequences of AD.

3 Neuro-Imaging Used in Diagnostic Approaches for Alzheimer’s Disease

Researchers and neuroscientists aim to look inside the brain in order to understand how it functions and the pathological and physiological changes it undergoes. Several brain imaging techniques and diagnostic tests can help physicians form their assessment by looking inside the brain. The relevant medical imaging techniques are described below.

For neuroimaging, various modalities can provide a quantitative picture of the structural properties of the brain and of complex pathologies. This allows physicians to better understand disease progression and the body's response to treatment. Over the past few years, the rapid development of non-invasive neuroimaging techniques has opened up new possibilities for investigating the complexity and specialized functions of the human brain [19]. Techniques including magnetic resonance imaging, diffusion-weighted magnetic resonance imaging, computed tomography, single-photon emission computed tomography, and positron emission tomography play a significant role in scanning the complex structures of the brain and assessing neurodegenerative disease patterns [20].

3.1 Magnetic Resonance Imaging Technique (MRI)

MRI is an imaging technique that uses magnetic fields and radio waves generated by RF coils to image the internal organization of tissues and organs in the body. A large magnet produces a uniform magnetic field around the body, which polarizes the hydrogen nuclei (protons) in the specimen so that they align with the field. A series of radio-frequency pulses applied to the region of interest tips the protons out of this alignment; as they relax back, they release energy in the form of radio signals, which the receiver coils detect. The appearance of tissue in MR images depends on the chemistry of the specimen and on the MR sequence employed. T2-weighted images are the most common MR images; tissues with high water and fat content contain relatively many hydrogen atoms and therefore appear brighter. Bone, on the other hand, appears dark on T2-weighted images [21]. T1-weighted gadolinium-enhanced images (T1-Gd) and fluid-attenuated inversion recovery (FLAIR) images are sequences commonly acquired alongside T2-weighted scans in neuroimaging.
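The dependence of image contrast on the sequence can be illustrated with the standard approximate spin-echo signal model, S ≈ PD · (1 − e^(−TR/T1)) · e^(−TE/T2), where PD is the proton density, TR the repetition time and TE the echo time. The tissue parameters below are rough, illustrative assumptions rather than measurements from any scanner, and the helper names are hypothetical.

```python
import math

# Rough, order-of-magnitude tissue parameters: (relative proton density, T1 in ms, T2 in ms).
# These are illustrative assumptions only, not measured values.
TISSUES = {
    "white matter": (0.7, 700.0, 80.0),
    "gray matter": (0.8, 1000.0, 100.0),
    "CSF": (1.0, 4000.0, 2000.0),
}

def spin_echo_signal(pd, t1, t2, tr, te):
    """Approximate spin-echo signal S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2)."""
    return pd * (1.0 - math.exp(-tr / t1)) * math.exp(-te / t2)

def contrast(tr, te):
    """Relative signal of each tissue for a given repetition time and echo time (ms)."""
    return {name: spin_echo_signal(pd, t1, t2, tr, te) for name, (pd, t1, t2) in TISSUES.items()}

t1w = contrast(tr=500.0, te=15.0)    # short TR, short TE: T1 weighting
t2w = contrast(tr=4000.0, te=100.0)  # long TR, long TE: T2 weighting
```

With these assumed values, CSF comes out darkest on the T1-weighted setting and brightest on the T2-weighted setting, matching the water-bright appearance described above.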

3.2 Computed Tomography (CT)

Computed tomography is a painless diagnostic test that uses X-rays from a source rotating through 360° and a computer to capture successive images (slices) of brain tissue. Doctors can use CT images to diagnose AD in particular and, more generally, to pinpoint the location of brain damage and neurodegeneration. Longitudinal changes in brain volume are associated with the progression of memory loss over time. Inside a CT machine, a gantry rotates around the head while an X-ray beam is emitted, and detectors measure how much of the radiation is absorbed by the body [22]. As the gantry rotates, measurements are taken from many different angles. The collected data are sent to a computer attached to the machine, which uses the detector measurements to reconstruct images. These images help doctors identify areas affected by neurodegeneration.
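The detector measurements described above can be related to tissue properties through the Beer-Lambert law: the intensity of each ray falls as I = I0 · exp(−Σ μ·Δx), so −ln(I/I0) gives the line integral of the attenuation coefficient μ, and reconstructed μ values are conventionally reported in Hounsfield units (HU). The sketch below assumes monochromatic X-rays, omits the reconstruction step itself (e.g., filtered back-projection), and uses illustrative function names.

```python
import math

def transmitted_intensity(i_incident, mu_segments, dx_cm):
    """Beer-Lambert: intensity after crossing segments with attenuation mu (1/cm), each dx_cm long."""
    return i_incident * math.exp(-sum(mu * dx_cm for mu in mu_segments))

def projection_value(i_detected, i_incident):
    """Line integral of mu along the ray, recovered from the detector reading: -ln(I / I0)."""
    return -math.log(i_detected / i_incident)

def hounsfield(mu, mu_water, mu_air=0.0):
    """Hounsfield units: HU = 1000 * (mu - mu_water) / (mu_water - mu_air); water = 0, air = -1000."""
    return 1000.0 * (mu - mu_water) / (mu_water - mu_air)
```

In a scanner, many such projection values from all gantry angles are combined to reconstruct the μ map, which is then expressed in HU.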

3.3 Single-Photon Emission Computed Tomography (SPECT)

In psychiatry, single-photon emission computed tomography is having a major impact by helping psychiatrists assess brain function. This imaging tool allows us to see the functional activity of synapses. SPECT uses gamma rays to visualize the inside of the body and essentially reveals three patterns: normal activity, hyperactivity, or hypoactivity. As its name suggests, it relies on radioactive material that emits single photons, and technetium is a very important radionuclide in this technology. A particular type of SPECT is DaTscan, which uses ioflupane (¹²³I) to measure dopamine transporter availability in the patient's brain [23].

3.4 Positron Emission Tomography (PET)

Positron emission tomography is another medical imaging tool, used to measure metabolism [24]. After a radioactive tracer is introduced into the body by intravenous injection, PET can measure blood flow, oxygen consumption, and glucose metabolism, helping to determine the stage of disease and revealing brain function in three dimensions. In a PET scan, detectors measure the emitted photons, and this information is used to produce an image showing the distribution of fluorodeoxyglucose (FDG, a radiopharmaceutical) in the body. Figure 1 illustrates these image acquisition methods.
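FDG uptake measured by PET is often summarized as a standardized uptake value (SUV), which normalizes the tissue activity concentration by the injected activity per unit body weight. The sketch below is a minimal version under stated assumptions: a tissue density of 1 g/mL, decay correction of the injected activity to scan time using the ¹⁸F half-life (about 110 minutes), and hypothetical function names.

```python
def decay_corrected_dose(injected_kbq, minutes_elapsed, half_life_min=109.8):
    """Activity remaining at scan time, A(t) = A0 * 2 ** (-t / T_half); default half-life assumes 18F."""
    return injected_kbq * 2.0 ** (-minutes_elapsed / half_life_min)

def suv_bw(tissue_kbq_per_ml, injected_kbq, body_weight_g, minutes_elapsed=0.0, half_life_min=109.8):
    """Body-weight SUV; assuming 1 g/mL tissue density makes the result unitless."""
    dose = decay_corrected_dose(injected_kbq, minutes_elapsed, half_life_min)
    return tissue_kbq_per_ml / (dose / body_weight_g)
```

An SUV of 1 means the tissue holds exactly the concentration expected if the tracer were spread uniformly through the body.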

4 Use of Artificial Intelligence in Computer Aided Diagnosis (CAD) Based on Machine Learning and Deep Learning Algorithms

The medical community faces major challenges in detecting and diagnosing several life-threatening diseases. Every year, thousands of patients lose their lives to various diseases, and the best way to save them is through early detection and diagnosis. However, many diseases are not diagnosed until symptoms appear, which may be too late. Artificial intelligence attempts to understand and build intelligent systems by drawing on big data, advanced machine learning algorithms, and increasing computing power [25]. In the future, AI may be used to detect diseases before symptoms appear and to intervene before they develop further. Figure 2 depicts the hierarchy of a medical diagnosis support system, from image acquisition and reconstruction to CAD.

The basic idea behind machine learning is to program a computer so that it can learn on its own through experience: "The only source of knowledge is experience" (Albert Einstein). ML uses large datasets to create intelligent systems for decision making. For example, we feed an ML system images and train it to distinguish people with advanced disease from healthy people. Because these systems learn from experience, they improve as more data are provided, becoming smarter and more precise in diagnosis. They can recognize early signs of disease that human eyes cannot see. However, machine learning models are only as good as their training data. In machine learning, a large dataset is given to the classifier, which processes it and learns to predict outcomes. Machine learning technology has given computers new capabilities [26].

Feature engineering is the process of using knowledge of the data at hand to apply hard-coded transformations to the data before feeding it into a machine learning model. Feature engineering is important because classical machine learning algorithms cannot learn suitable features on their own; its essence is to make a problem easier by representing it in a simpler way. Because researchers and data scientists have created many models over the years, choosing an appropriate model is also important: different models suit different types of data, such as images, patterns, numerical data, and text. A common rule of thumb for splitting data into training and evaluation sets is 80%/20% or 70%/30%. The power of ML is that a model can make predictions and discriminate between inputs without relying on human judgment or manual conventions. Machine learning algorithms learn from the information provided to them and make decisions based on what they have learned. Deep learning uses a hierarchical set of algorithms to build a trainable artificial neural network (ANN) that makes intelligent decisions on its own.
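As a minimal sketch of the two ideas above, the code applies one hand-coded transformation (expressing a regional brain volume as a fraction of intracranial volume, a hypothetical feature chosen for illustration) and then performs an 80%/20% split that preserves the class proportions. The function names and example values are assumptions, not taken from any cited study.

```python
import numpy as np

def engineer_features(regional_vol_mm3, icv_mm3):
    """Hand-coded transformation: regional volume as a fraction of intracranial volume (ICV)."""
    return np.asarray(regional_vol_mm3, dtype=float) / np.asarray(icv_mm3, dtype=float)

def stratified_split(y, train_frac=0.8, seed=0):
    """Return (train_idx, test_idx) with the class proportions of y kept in both parts."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    train, test = [], []
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))   # shuffle the subjects of this class
        n_train = int(round(train_frac * idx.size))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return np.sort(np.array(train)), np.sort(np.array(test))
```

Stratifying matters when one class (for example, patients) is much rarer than the other, since a plain random split could leave the evaluation set with very few patients.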

Table 2 lists a few classical machine learning and deep learning techniques for disease prediction; both fall under the broad category of artificial intelligence. Deep learning algorithms can learn features directly from unprocessed data with a high degree of accuracy, without the need to extract features explicitly. Deep learning models are loosely inspired by the way the human brain analyzes information and draws conclusions. Computers, however, do not look at images the way humans do: they look at numbers.

4.1 Pre-Processing of MRI and CT Images for Machine Learning

Initial preprocessing steps such as resampling, registration, and bias field correction are decisive for medical image analysis (MIA). Medical images are commonly stored in the DICOM (Digital Imaging and Communications in Medicine) format, which can be read by image processing toolboxes. In brain imaging, only the brain is of interest; tissues outside the brain (skull, fat, skin, etc.) need not be included. Such extraneous tissues often hinder the learning process and interfere with segmentation, regression, and classification tasks. A skull-stripping algorithm can generate a brain mask, and the background outside the mask can then be set to zero.
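Once a brain mask is available, zeroing the background is a single masking operation. The sketch below assumes the mask has already been produced by a skull-stripping tool (for example, FSL's BET) and additionally crops the volume to the mask's bounding box, an optional step we add to reduce the amount of empty background passed to a model. The function name is hypothetical.

```python
import numpy as np

def apply_brain_mask(volume, brain_mask, crop=True):
    """Zero every voxel outside the brain mask and optionally crop to the mask's bounding box."""
    brain_mask = np.asarray(brain_mask, dtype=bool)
    if not brain_mask.any():
        raise ValueError("brain mask is empty")
    stripped = np.where(brain_mask, volume, 0)          # background (skull, fat, skin) set to zero
    if not crop:
        return stripped
    coords = np.argwhere(brain_mask)
    lo, hi = coords.min(axis=0), coords.max(axis=0) + 1  # inclusive-to-exclusive bounds per axis
    return stripped[tuple(slice(a, b) for a, b in zip(lo, hi))]
```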

i. Re-sampling: Magnetic resonance and computed tomography images, like natural images, do not have a standard resolution, so they must be resampled to a common voxel spacing. For example, a 3D-CNN trained on data acquired at a resolution of 1 × 1 × 3 mm is expected to yield suboptimal results when given images with a resolution of 1 × 1 × 1 mm. The best quality is therefore obtained by resampling, for example with B-spline interpolation, to bring the image to the desired standard resolution.

ii. Medical image registration: Registration transforms medical images from heterogeneous datasets into a single coordinate system. Objects in a set of images are aligned by applying geometric transformations and local displacements, so that they can be compared directly.

iii. Bias-field correction: This preprocessing step removes the effect of the bias field, a smooth, low-frequency intensity non-uniformity present in the MR image, before classification is performed. The non-uniformity arises from unwanted signals that corrupt the generated images because of the non-uniform magnetic field of the MR system, and tissue intensity is also not constant between different MR scanners. If the bias field is not taken into account during preprocessing, it can have a very significant impact on the performance of the algorithm.

iv. Intensity normalization: This step is useful for eliminating scanner variability. The Fuzzy C-Means (FCM) normalization technique, commonly used in neuroimaging, combines speed and quality: it produces a coarse classification of white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF) from 3D T1-weighted brain images. The resulting white matter segmentation mask is used to estimate the mean WM intensity, and the image is scaled so that this mean equals a user-defined constant. This normalization strategy usually helps achieve the desired prediction accuracy on brain imaging scans.

v. Image segmentation: Image segmentation, one of the most important steps in medical image processing and one where machine learning is currently being used, aims to separate pathological or anatomical structures into specific structure types.
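Step i can be sketched for the common case of anisotropic slices, such as resampling 1 × 1 × 3 mm data to 1 × 1 × 1 mm. For brevity, the sketch below uses linear interpolation along the slice axis only, not the B-spline interpolation mentioned in the text, and assumes voxel centres lie at multiples of the slice spacing; the function name is hypothetical.

```python
import numpy as np

def resample_z_linear(volume, old_dz, new_dz):
    """Resample a 3D volume along its last (slice) axis with linear interpolation.

    Slice centres are assumed to lie at k * old_dz mm (k = 0..n-1); the output keeps the
    first centre and samples every new_dz mm up to the last original centre.
    """
    n_old = volume.shape[-1]
    extent = (n_old - 1) * old_dz                    # distance between first and last slice centre
    n_new = int(np.floor(extent / new_dz + 1e-9)) + 1
    z_new = np.arange(n_new) * new_dz / old_dz       # new centres in units of old slice index
    lo = np.floor(z_new).astype(int)
    hi = np.minimum(lo + 1, n_old - 1)
    w = z_new - lo                                   # weight given to the upper neighbour
    return volume[..., lo] * (1.0 - w) + volume[..., hi] * w
```

A production pipeline would normally use a library resampler with higher-order (e.g., cubic B-spline) interpolation along all three axes.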
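The FCM-based white-matter normalization of step iv can be sketched as follows: a plain fuzzy c-means with three clusters is run on the brain-voxel intensities, the brightest cluster is taken as white matter (an assumption that holds for T1-weighted images), and the image is scaled so that the mean intensity inside the resulting WM mask equals a user-defined constant. The function names, the percentile-based initialization and the membership threshold of 0.8 are our own choices.

```python
import numpy as np

def fuzzy_cmeans_1d(x, n_clusters=3, m=2.0, n_iter=100, tol=1e-6):
    """Plain fuzzy c-means on a 1D array of intensities; returns (centroids, memberships)."""
    centroids = np.percentile(x, np.linspace(10, 90, n_clusters))  # simple initialisation
    for _ in range(n_iter):
        # Distance from every voxel to every centroid; epsilon avoids division by zero.
        d = np.abs(x[:, None] - centroids[None, :]) + 1e-12
        # Membership update: u_ik = 1 / sum_j (d_ik / d_ij) ** (2 / (m - 1)).
        ratio = (d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1.0))
        u = 1.0 / ratio.sum(axis=2)
        # Centroid update: intensity mean weighted by u ** m.
        um = u ** m
        new_centroids = (um * x[:, None]).sum(axis=0) / um.sum(axis=0)
        converged = np.max(np.abs(new_centroids - centroids)) < tol
        centroids = new_centroids
        if converged:
            break
    return centroids, u

def fcm_wm_normalize(img, brain_mask, norm_value=1.0, wm_threshold=0.8):
    """Scale img so that the mean intensity of FCM-derived white matter equals norm_value."""
    brain_mask = np.asarray(brain_mask, dtype=bool)
    x = img[brain_mask].astype(float)
    centroids, u = fuzzy_cmeans_1d(x)
    wm_cluster = int(np.argmax(centroids))           # assumes T1 weighting: WM is brightest
    wm_mask = np.zeros(img.shape, dtype=bool)
    wm_mask[brain_mask] = u[:, wm_cluster] > wm_threshold
    wm_mean = img[wm_mask].mean()
    return img * (norm_value / wm_mean), wm_mask
```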

4.2 Algorithms Based on Machine Learning

Many studies have shown that neurodegenerative diseases begin 10–20 years before clinical symptoms appear. Although the molecular mechanisms of neurodegenerative diseases vary, phenomena such as neurite retraction, synaptic dysfunction and destruction, and ultimately neuronal loss are considered characteristic of these diseases. Over the last few decades, intense research on many fronts has advanced our understanding of neurodegenerative diseases. Studies of the genetics and pathogenesis of selective neuronal loss have contributed significantly, generating a wealth of knowledge that has become the basis for new technologies and several therapies for neurodegenerative disorders.

However, many studies have used datasets from a small number of patients, a small number of images, or proprietary datasets. Clinically, diseases are recognized either from the patient's symptoms or from medical images, such as MRI for AD or DaTscan for PD. The most difficult challenge is to identify the disease in its early stages, before symptoms appear. In the past, researchers mainly used their own datasets to study AD and followed computer vision techniques. Because classical machine learning tools required features to be extracted manually, support vector machines (SVMs) trained on these explicit features were the preferred choice [27].
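To illustrate how an SVM is trained on explicitly extracted features, the sketch below fits a linear SVM by Pegasos-style stochastic sub-gradient descent on the regularized hinge loss, using only NumPy. The bias is handled by appending a constant feature (which, unlike a textbook SVM, also regularizes it). In use, `X` would hold one row of hand-crafted imaging features per subject and `y` labels in {−1, +1}; both, and the function names, are assumptions for illustration.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Pegasos-style SGD on the L2-regularised hinge loss; y must contain -1/+1 labels."""
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])   # constant column acts as the bias term
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)                    # decreasing step size
            margin = y[i] * (Xb[i] @ w)
            w *= (1.0 - eta * lam)                   # shrinkage from the L2 regulariser
            if margin < 1.0:                         # hinge loss active: move towards correct side
                w += eta * y[i] * Xb[i]
    return w

def predict_svm(w, X):
    """Class labels (-1 or +1) from the sign of the decision function."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.where(Xb @ w >= 0.0, 1, -1)
```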

In 2008, the Alzheimer's Disease Neuroimaging Initiative (ADNI) released a dataset that included AD patients, patients with mild cognitive impairment, and healthy older adults. ADNI compiled MRI, FDG-PET, and CSF biomarker test results for each individual, along with multiple clinical features over a three-year period. The ADNI dataset is now available in several formats; it currently comprises results from hundreds of subjects and serves as a benchmark for AD detection research. In earlier studies, features were selectively extracted from the input images of the dataset and used to train a model with supervised machine learning classification methods. For PD, too, researchers have used datasets with a small number of patients, few images, or proprietary data for automated detection. Depending on the features and parameters selected, different ML techniques have been applied to this complicated task. As explained in the previous section, there are several well-known tests that are indicators of PD, and the disease is diagnosed entirely from the patient's clinically observed symptoms or by DaTscan. When ML is used for computer-aided diagnosis of PD, it is common to use speech datasets, handwriting analysis, sensor data, image data, and so on. Table 3 provides an overview of the contributions of artificial intelligence and machine learning to neurodegenerative disease detection and diagnosis over the past few years. Deep learning is often considered the best approach because it is more robust to noise and performs implicit feature extraction with low error. Its performance improves as the scale of data increases: given enough data, DL trains accurate models without feature engineering (i.e., without explicitly writing the rules), achieving accuracy beyond that of classical ML and scaling effectively with more data.
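Benchmark results on datasets such as ADNI are usually reported as accuracy together with sensitivity and specificity. The sketch below computes these from true and predicted labels; treating AD as the positive class (label 1) and healthy controls as 0 is our assumption for illustration, and the function name is hypothetical.

```python
import numpy as np

def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity (true-positive rate) and specificity (true-negative rate)."""
    y_true = np.asarray(y_true) == positive
    y_pred = np.asarray(y_pred) == positive
    tp = int(np.sum(y_true & y_pred))      # patients correctly flagged
    tn = int(np.sum(~y_true & ~y_pred))    # controls correctly cleared
    fp = int(np.sum(~y_true & y_pred))     # controls wrongly flagged
    fn = int(np.sum(y_true & ~y_pred))     # patients missed
    return {
        "accuracy": (tp + tn) / y_true.size,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "confusion": {"tp": tp, "tn": tn, "fp": fp, "fn": fn},
    }
```

Reporting sensitivity and specificity separately matters because accuracy alone can look high on an imbalanced cohort even when many patients are missed.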

Practical challenges for ML in medical imaging.

Because most machine learning approaches are based on supervised techniques, training data are critical. Producing training data is expensive: the task requires manpower, money, time, and expertise. Manually labelled training data can be imperfect (wrongly labelled), and manually annotating new data for each test domain is not a practical solution. If the data are not labelled correctly, the model cannot be trained or validated effectively.

4.3 Rise of Deep Learning in CAD

Deep learning (DL) origin and growth: DL has been around for quite some time, starting with the McCulloch and Pitts neural network model introduced in 1943. It progressed to Hebb's law in 1949, Rosenblatt's supervised learning (the perceptron) in 1958, and associative memory around 1980. The concept of associative memory plays an important role in understanding how neural networks associate certain patterns, that is, how they learn what a particular class of images looks like. Between the 1960s and the 1980s, many neuroscientific, biological [22], and mathematical discoveries helped explain how feed-forward multilayer perceptrons work and how they can be used to perceive objects visually. To model how we recognize objects, the neocognitron emerged in the 1980s, introducing pooling-like downsampling layers (a precursor of the max pooling used in modern CNNs) that reduce the complexity of the network. Backpropagation followed: it propagates the output error backwards through the network to compute how each weight should change. Since the 2000s, we have moved beyond the era of standard neural networks into the era of deep learning. Deep learning has evolved greatly because deep networks contain so many connections that the number of multiplications and nonlinear operations is very large.
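Backpropagation as described above can be shown on a network with one hidden layer. The NumPy sketch below computes a mean-squared-error loss in a forward pass and then propagates the error backwards with the chain rule to obtain the gradient of every weight; a plain gradient-descent loop then uses these gradients. The network size, tanh activation, and function names are illustrative assumptions.

```python
import numpy as np

def init_params(n_in, n_hidden, n_out, seed=0):
    """Small random weights and zero biases for a one-hidden-layer network."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, (n_in, n_hidden)), "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, (n_hidden, n_out)), "b2": np.zeros(n_out),
    }

def forward_backward(p, X, Y):
    """Return the MSE loss and its gradients, computed by backpropagation."""
    # Forward pass: input -> tanh hidden layer -> linear output.
    H = np.tanh(X @ p["W1"] + p["b1"])
    out = H @ p["W2"] + p["b2"]
    err = out - Y
    loss = 0.5 * np.mean(np.sum(err ** 2, axis=1))
    # Backward pass: start from dLoss/dout and apply the chain rule layer by layer.
    d_out = err / X.shape[0]
    grads = {"W2": H.T @ d_out, "b2": d_out.sum(axis=0)}
    d_hidden = (d_out @ p["W2"].T) * (1.0 - H ** 2)   # derivative of tanh is 1 - tanh^2
    grads["W1"] = X.T @ d_hidden
    grads["b1"] = d_hidden.sum(axis=0)
    return loss, grads

def train(X, Y, n_hidden=4, lr=0.1, steps=2000):
    """Full-batch gradient descent driven by the backpropagated gradients."""
    p = init_params(X.shape[1], n_hidden, Y.shape[1])
    losses = []
    for _ in range(steps):
        loss, grads = forward_backward(p, X, Y)
        losses.append(loss)
        for name in p:
            p[name] -= lr * grads[name]
    return p, losses
```

A finite-difference check (perturb one weight, recompute the loss) is a simple way to confirm that such hand-written gradients are correct.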

The advent of GPUs, processors originally designed to rapidly manipulate memory to accelerate the creation of images in a frame buffer for output to a display device, made it practical to train large CNNs quickly. Deep neural networks, from simple neurons to RNNs, CNNs, and autoencoders, have been shown to outperform other algorithms in accuracy and speed on many tasks. Figure 3 shows the timeline of deep learning.

4.3.1 Deep Learning in Medical Imaging

DL is being used as a tool to analyze MRIs in two major ways: classification and segmentation. Classification is the process of labelling an MRI image as normal or abnormal, while segmentation is the process of outlining various tissues. Before deep learning, it was actually the more classical ML techniques that helped us the most.
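Segmentation outputs are commonly compared with a reference outline using the Dice similarity coefficient, 2|A∩B| / (|A| + |B|). The following is a minimal sketch on binary masks; returning 1.0 when both masks are empty is a convention we chose, not a universal rule.

```python
import numpy as np

def dice(pred_mask, true_mask):
    """Dice similarity coefficient 2|A and B| / (|A| + |B|) between two binary masks."""
    a = np.asarray(pred_mask, dtype=bool)
    b = np.asarray(true_mask, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0   # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(a, b).sum() / denom
```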

For example, scikit-learn is a widely used, open-source Python library that is well developed and guides us through data science problems, helping us choose a suitable method, be it classification, regression, dimensionality reduction, etc. Now all of this can also be done with deep learning. For medical image analysis in particular, which we use extensively, methods based on decision trees work very well. MRI, on which deep learning has had a large impact (including image reconstruction), is an attractive modality because it is safe. MRI acquisition, however, is inherently slow. Slow acquisition is acceptable for imaging static structures such as the brain and bone, but not for moving organs such as the heart, foetus, and liver. There are two ways to acquire MR images: real-time acquisition and gated acquisition. Real-time MR images are fast but two-dimensional (2D) and of relatively poor quality.

A convolutional neural network (CNN) is built from collections of filters, each a small two-dimensional (2D) array of weights (e.g., a 3 × 3 matrix). When the network receives an input image, each filter slides over the image until it has covered every position, computing a weighted sum at each location. This operation is called convolution, which gives the convolutional network its name. The filters in the first convolutional layer identify simple patterns such as straight lines and curves, extracting them from the input image data. Deeper layers of filters enhance the CNN's ability to handle more complex pattern recognition problems [37], and the depth of the network influences the quality of the information obtained. There has been a clear shift from doctors doing everything manually to computational systems that support and assist them; in the modern era, deep neural networks (NNs) and CNNs help doctors diagnose problems. In recent years, CNN algorithms and their variants have become very prominent and have had a significant impact on medical imaging [38, 39]. Because of the variety and large number of images being generated, a sub-field of medical imaging diagnosis has formed, called computer-aided diagnosis. Table 4 lists various deep learning applications in medicine. This shift is mainly driven by people's desire for better care, improvements, and more accurate outcomes. DL in medicine is an innovative step, with applications ranging from cancer screening and disease monitoring to suggesting tailored treatments.
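The sliding-filter operation described above can be written in a few lines of NumPy. Like most deep learning libraries, the sketch computes a "valid" cross-correlation (the kernel is not flipped). The 3 × 3 vertical-edge filter is a hand-picked example of the kind of straight-line detector a first CNN layer often ends up learning; in a real CNN the filter weights are learned rather than fixed, and the names here are illustrative.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over every position where it fits."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Weighted sum of the image patch currently under the filter.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hand-picked 3 x 3 vertical-edge filter (a trained CNN would learn its own weights).
VERTICAL_EDGE = np.array([[-1.0, 0.0, 1.0]] * 3)
```

Applied to an image that is dark on the left and bright on the right, the filter responds only in the columns that straddle the boundary and gives zero over uniform regions.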

4.3.2 Reasons for the Use of Machine Learning and Deep Learning in AD Detection

Before deep learning became dominant, random forests were widely adopted, because practitioners realized that combining many decision trees produces a very powerful classifier. In 2012 came AlexNet, a convolutional neural network designed by Alex Krizhevsky that was trained with purely supervised learning rather than the unsupervised pre-training used in earlier deep networks. Since then, there has been a significant shift: random forests are no longer the first choice, and standard convolutional neural networks have become the baseline for image-based tasks. The deep learning models used today stack many hidden layers on top of one another, and this stacking is what makes it possible to learn extremely complex associations between input and output data; this is where the power of deep learning comes from. Deep learning is, in essence, the training of such multilayer artificial neural networks (ANNs), which are collections of trainable mathematical units organized in layers that work together to solve complex tasks.

For AD, the best classification performance has been obtained when multimodal neuroimaging and fluid biomarkers were combined using DL. DL algorithms continue to improve in performance and appear to offer promise for the diagnostic assessment of AD using multimodal neuroimaging data [40]. AD research that employs deep learning is expanding steadily: performance is being improved by combining additional data types, such as omics data, and transparency is being enhanced with explainable methods that contribute knowledge of specific disease-related characteristics and processes.
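
To make the idea of stacked hidden layers concrete, the following NumPy sketch runs a forward pass through a small multilayer network: each hidden layer applies an affine map followed by a ReLU, and a softmax output layer produces class probabilities. The layer sizes, the He-style random initialization, and the framing as 64 input features per subject with two classes (e.g. AD vs. cognitively normal) are hypothetical. The weights are untrained; fitting them by backpropagation is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(0.0, x)

def softmax(z):
    # Subtract the row maximum for numerical stability
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def init_mlp(layer_sizes):
    """Create (weights, bias) pairs for consecutive layers, e.g. [64, 32, 16, 2]."""
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))  # He-style init
        b = np.zeros(n_out)
        params.append((w, b))
    return params

def forward(params, x):
    """Pass x through each stacked hidden layer, then a softmax output layer."""
    h = x
    for w, b in params[:-1]:
        h = relu(h @ w + b)  # each hidden layer re-represents the previous one
    w_out, b_out = params[-1]
    return softmax(h @ w_out + b_out)  # per-class probabilities

# Hypothetical setting: 5 synthetic subjects, 64 features each, 2 classes
params = init_mlp([64, 32, 16, 2])
x = rng.normal(size=(5, 64))
probs = forward(params, x)
print(probs.shape)  # (5, 2)
```

Adding entries to the `layer_sizes` list stacks further hidden layers; each extra nonlinear layer lets the network represent more complex input-output associations.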

5 Conclusions

Many people are affected by some form of neurodegenerative disease, such as AD, PD, ALS, or frontotemporal dementia, and numerous diseases are sequelae of the neurodegenerative process. As the number of affected people grows, treatment costs are predicted to rise dramatically. Over 30 million people around the world have AD, roughly five times as many as have PD, and the number of people with AD is projected to reach about 100 million by 2050, a tremendous increase. It is therefore necessary to examine these challenges and problems from the perspective of early detection and diagnosis. In recent years, machine learning and artificial intelligence have emerged as powerful tools, providing algorithms that can solve classification problems in neuroimaging data. However, traditional machine learning algorithms are limited with respect to data size and feature extraction. Deep learning algorithms based on deep convolutional networks (DCN), long short-term memory (LSTM), and recurrent neural networks (RNN) have recently emerged as more powerful paradigms, with deep feature extraction layers that can handle the complexity of neuroimaging data and provide a wide range of medical imaging solutions. Algorithms based on deep neural networks (e.g., DCNNs) have thus become a major tool: they allow neurologists to have a great impact on patient care through software-based systems that use classification models for the accurate detection and interpretation of a diagnosis. In medical imaging, such algorithms enable early and rapid diagnosis, together with visualization, treatment planning, and outcome evaluation. They also have great potential to improve how accurately a patient's tissue, imaged from the inside, can be mapped to a specific diagnosis. Hybrid classification models based on DL algorithms, such as RNNs, LSTMs, and 3D deep convolutional neural networks (3D-DCNNs), are effective for the early detection and diagnosis of Alzheimer’s.
The outcomes of the proposed model are compared with those of existing algorithms to confirm its effectiveness.

References

Gitler, A.D., Dhillon, P., Shorter, J.: Neurodegenerative disease: models, mechanisms, and a new hope, pp. 499–502 (2017)

Sweeney, P., et al.: Protein misfolding in neurodegenerative diseases: implications and strategies. Transl. Neurodegener. 6(1), 1–13 (2017)

Noble, W., Hanger, D.P., Miller, C.C.J., Lovestone, S.: The importance of tau phosphorylation for neurodegenerative diseases. Front. Neurol. 4(July), 1–11 (2013)

Martin-Macintosh, E.L., Broski, S.M., Johnson, G.B., Hunt, C.H., Cullen, E.L., Peller, P.J.: Multimodality imaging of neurodegenerative processes: part 2, atypical dementias. Am. J. Roentgenol. 207(4), 883–895 (2016)

Boxer, A.L., et al.: Amyloid imaging in distinguishing atypical prion disease from Alzheimer disease, 48855 (2007)

World Health Organization https://www.who.int/news-room/fact-sheets/detail/dementia (10 May 2022)

Panchbhai, A.S.: Wilhelm Conrad Röntgen and the discovery of X-rays: revisited after centennial. J. Indian Acad. Oral Med. Radiol. 27(1), 90 (2015)

Sriramakrishnan, P., et al.: Medical image file formats and digital image conversion. Int. J. Eng. Adv. Technol. 9(1S3), 74–78 (2019)

Tagaris, A., Kollias, D., Stafylopatis, A., Tagaris, G., Kollias, S.: Machine learning for neurodegenerative disorder diagnosis—survey of practices and launch of benchmark dataset. Int. J. Artif. Intell. Tools 27(3), 1–28 (2018)

Khan, Y.F., Kaushik, B.: Computer vision technique for neuro-image analysis in neurodegenerative diseases: a survey. In: 2020 International Conference on Emerging Smart Computing and Informatics (ESCI). IEEE (2020)

Oxtoby, N.P., Alexander, D.C.: Imaging plus X: multimodal models of neurodegenerative disease. Curr. Opin. Neurol. 30(4), 371–379 (2017)

Khan, Y.F., Kaushik, B.: Neuro-image classification for the prediction of alzheimer’s disease using machine learning techniques. In: Proceedings of International Conference on Machine Intelligence and Data Science Applications. Springer, Singapore (2021)

Zhang, S., et al.: The Alzheimer’s peptide Aβ adopts a collapsed coil structure in water, 141, 130–141 (2000)

Nelson, T.J., Sen, A.: Apolipoprotein E particle size is increased in Alzheimer’s disease. Alzheimer’s Dement. Diagnosis, Assess. Dis. Monit. 11, 10–18 (2019)

Bohlen, O.V., Halbach, A.S., Krieglstein, K.: Genes, proteins, and neurotoxins involved in Parkinson’s disease. Prog. Neurobiol. 73(3), 151–177 (2004)

Tenreiro, S., Eckermann, K., Outeiro, T.F.: Protein phosphorylation in neurodegeneration: friend or foe? Front. Mol. Neurosci. 7(May), 1–30 (2014)

Hussain, R., Zubair, H., Pursell, S., Shahab, M.: Neurodegenerative diseases: regenerative mechanisms and novel therapeutic approaches. Brain Sci. (2018)

Mori, T., Miyashita, N., Im, W., Feig, M., Sugita, Y.: Molecular dynamics simulations of biological membranes and membrane proteins using enhanced conformational sampling algorithms. Biochim. Biophys. Acta—Biomembr. 1858(7), 1635–1651 (2016)

Salankar, N., et al.: Impact of music in males and females for relief from neurodegenerative disorder stress. Contrast Media Mol. Imaging 2022 (2022)

Szymański, P., Markowicz, M., Janik, A., Ciesielski, M., Mikiciuk-Olasik, E.: Neuroimaging diagnosis in neurodegenerative diseases. Nucl. Med. Rev. 13(1), 23–31 (2010)

Langkammer, C., Ropele, S., Pirpamer, L., Fazekas, F., Schmidt, R.: MRI for iron mapping in Alzheimer’s disease, pp. 189–191 (2014)

Ural, B.: An improved computer based diagnosis system for early detection of abnormal lesions in the brain tissues with using magnetic resonance and computerized tomography images (2019)

Ahlrichs, C., Lawo, M.: Parkinson’s disease motor symptoms in machine learning: a review, November 2013 (2014)

Jones, D.T., Townley, R.A., Graff-Radford, J., Botha, H.: Amyloid- and tau-PET imaging in a familial prion kindred (2018)

Salehi, W., et al.: IoT-based wearable devices for patients suffering from Alzheimer disease. Contrast Media Mol. Imaging 2022 (2022)

Jiang, F., et al.: Artificial intelligence in healthcare: past, present and future (2017)

Singh, L., Chetty, G., Sharma, D.: A novel machine learning approach for detecting the brain abnormalities from MRI structural images, pp. 94–105 (2012)

Li, S., et al.: Hippocampal shape analysis of Alzheimer disease based on machine learning methods. Am. J. Neuroradiol. 28(7), 1339–1345 (2007)

Magnin, B., et al.: Support vector machine-based classification of Alzheimer’s disease from whole-brain anatomical MRI. Neuroradiology 51(2), 73–83 (2009)

Morra, J.H., Tu, Z., Apostolova, L.G., Green, A.E., Toga, A.W., Thompson, P.M.: Comparison of AdaBoost and support vector machines, 29(1), 30–43 (2011)

Westman, E., et al.: Multivariate analysis of MRI data for Alzheimer’s disease, mild cognitive impairment and healthy controls. Neuroimage 54(2), 1178–1187 (2011)

Wolz, R., et al.: Multi-method analysis of MRI images in early diagnostics of Alzheimer’s disease. PLoS ONE 6(10), 1–9 (2011)

Zhang, D., Shen, D.: Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer’s disease. Neuroimage 59(2), 895–907 (2012)

Liu, M., Zhang, D., Shen, D.: Hierarchical fusion of features and classifier decisions for Alzheimer’s disease diagnosis. Hum. Brain Mapp. 35(4), 1305–1319 (2014)

Liu, S., et al.: Multimodal neuroimaging feature learning for multiclass diagnosis of Alzheimer’s disease. IEEE Trans. Biomed. Eng. 62(4), 1132–1140 (2015)

Zhang, Y., et al.: Detection of subjects and brain regions related to Alzheimer’s disease using 3D MRI scans based on eigenbrain and machine learning. Front. Comput. Neurosci. 9(June), 1–15 (2015)

Aderghal, K., Benois-Pineau, J., Afdel, K.: Classification of sMRI for Alzheimer’s disease diagnosis with CNN: single siamese networks with 2D + ε approach and fusion on ADNI, pp. 494–498 (2017)

Khan, Y.F., Kaushik, B.: Neuroimaging (Anatomical MRI)-based classification of Alzheimer’s diseases and mild cognitive impairment using convolution neural network. In: Advances in Data Computing, Communication and Security, pp. 77–87. Springer, Singapore (2022)

Sethi, M., et al.: An exploration: Alzheimer’s disease classification based on convolutional neural network. BioMed Res. Int. 2022 (2022)

Khan, Y.F., et al.: Transfer learning-assisted prognosis of Alzheimer’s disease and mild cognitive impairment using structural-MRI. In: 2022 10th International Conference on Emerging Trends in Engineering and Technology-Signal and Information Processing (ICETET-SIP-22). IEEE (2022)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Khan, Y.F., Kaushik, B., Koundal, D. (2023). Machine Learning Models for Alzheimer’s Disease Detection Using Medical Images. In: Koundal, D., Jain, D.K., Guo, Y., Ashour, A.S., Zaguia, A. (eds) Data Analysis for Neurodegenerative Disorders. Cognitive Technologies. Springer, Singapore. https://doi.org/10.1007/978-981-99-2154-6_9

DOI: https://doi.org/10.1007/978-981-99-2154-6_9

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-2153-9

Online ISBN: 978-981-99-2154-6

eBook Packages: Computer Science, Computer Science (R0)