Abstract

Recent technical advances have made it possible to collect biomarkers in the same areas where a disease's earliest symptoms occur. This capability matters for Alzheimer's disease, whose symptoms need to be recognized quickly while maintaining a high degree of diagnostic accuracy, and whose treatment depends on that recognition. Progress in this direction requires monitoring the postsynaptic potentials of hundreds of neurons that share the same spatial orientation, which also makes it possible to determine how long electrical activity lasted during a measurement. In this study, time-dependent power spectrum descriptors are used to support a differential diagnosis from electroencephalogram (EEG) signals. The chapter reports the results of this approach and then presents a convolutional neural network (CNN) for classifying people with Alzheimer's disease. With the CNN as the classifier, the overall accuracy is 82.3%: 85% of cases with mild cognitive impairment, 75% of healthy subjects, and 89.1% of cases of Alzheimer's disease are correctly recognized.

Keywords

- Alzheimer’s disease

- Convolutional neural network

- Electroencephalogram

- Support vector machine

- Long short-term memory

1 Introduction

Dementia describes a group of symptoms characterized by declines in cognitive ability and changes in behavior, and it is distinguished from other types of neurodegeneration by these typical cognitive and behavioral deficits. In common usage, the term covers all of these disorders and symptoms. Alzheimer's disease (AD) accounts for about seventy percent of dementia cases. The probability of developing the disease increases from about the age of 65 [1,2,3]: those over 65 have a threefold higher chance of developing symptoms, and symptoms are especially common in adults over the age of 85. There is presently no medicine that cures the condition, so patients rely on palliative care [4]. Available medicines can, however, temporarily slow the progression of the disease, which benefits both patients and caregivers. Existing diagnostic procedures such as neurological tests and medical histories are commonly reported to reach accuracy scores of up to 90%. The Alzheimer's Association supplied comments while the diagnostic criteria were being developed. The electrode contact effects considerably increased the group size between 8 and 10 Hz, as shown in Fig. 1. The most recent set of guidelines [5] says that neuroimaging should be used along with biomarkers and cerebrospinal fluid to diagnose Alzheimer's disease in people who are already showing signs of it.

The Alzheimer's Association created this exam because it can be completed quickly and with little effort on the part of the person administering it. Furthermore, these two examinations assess a candidate's ability to carry out administrative tasks and other job-related activities, whereas the Rey Auditory Fluency Assessment and the Visual Learning Test are meant to test the skills related to taking care of patients [6]. Aside from Alzheimer's disease, researchers have identified a variety of additional disorders that can contribute to dementia in certain people [7]. The electrical activity of the brain can be recorded with an electroencephalogram (EEG). Electrodes are placed on the patient's scalp to measure electrical potentials. The spatial resolution of an EEG depends in part on the number of electrodes on the scalp and on their precise placement.

These tests were performed to determine which of these illnesses was more likely to progress to dementia. In EEG analysis, the primary frequency bands are typically delta (0 to 4 Hz), theta (4 to 8 Hz), alpha (8 to 12 Hz), and beta (12 to 30 Hz). Each band carries its own information about different aspects of brain activity and synchronization [8, 9]. Several studies [10] have investigated the feasibility of employing EEG in the clinical assessment of dementia and Alzheimer's disease, because EEG has a high temporal resolution (on the order of milliseconds), is noninvasive, is inexpensive, and is portable. A comparison of patients with frontotemporal dementia and healthy controls, computed using sLORETA, is shown in Fig. 2. The major purpose of these investigations was to compare EEG recordings of individuals with Alzheimer's disease with those of healthy control volunteers [11, 12]. Alzheimer's disease is well known for diminishing the synchrony and complexity of EEG signals, which is one of the signs of the illness.
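
The band definitions above can be illustrated with a short sketch that estimates the share of signal power in each band. It is only an illustration under stated assumptions: the function names, the 128 Hz sampling rate, and the synthetic 10 Hz test signal are hypothetical, and a naive discrete Fourier transform from the Python standard library stands in for the optimized routines a real pipeline would use.

```python
import cmath
import math

# Conventional EEG bands named in the text, in Hz; each range is [low, high).
BANDS = {
    "delta": (0.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 12.0),
    "beta": (12.0, 30.0),
}


def power_spectrum(signal, fs):
    """Return (frequencies, power) for the one-sided naive DFT of `signal`."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
        )
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def relative_band_power(signal, fs):
    """Fraction of the power within the listed bands that falls in each band."""
    freqs, power = power_spectrum(signal, fs)
    totals = {name: 0.0 for name in BANDS}
    for f, p in zip(freqs, power):
        for name, (low, high) in BANDS.items():
            if low <= f < high:
                totals[name] += p
    grand_total = sum(totals.values()) or 1.0
    return {name: value / grand_total for name, value in totals.items()}


# Hypothetical test signal: two seconds of a pure 10 Hz oscillation.
fs = 128
demo = [math.sin(2 * math.pi * 10 * t / fs) for t in range(2 * fs)]
shares = relative_band_power(demo, fs)
dominant_band = max(shares, key=shares.get)
```

For this synthetic signal almost all of the power falls in the alpha band, which is the behavior one would expect from the band edges given in the text.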

These changes have been used as identifying markers in EEG recordings for the diagnosis of Alzheimer's disease. The complexity of EEG signals can now be analyzed with a wide range of methods, which was not always the case. In addition to the first positive Lyapunov exponent [13], the connection factor has garnered a lot of attention [14]. Compared with the EEG signals of age-matched controls, the EEG signals of patients with Alzheimer's disease exhibit lower values on several of these measures, indicating a lower level of complexity. Other information-theoretic approaches, most notably those based on entropy, are emerging as potentially valuable EEG indicators for Alzheimer's disease (AD). These methods quantify how irregular a signal is over time; signals that behave erratically are more challenging to analyze than signals that behave regularly. Recent research [15] on epileptiform EEG data has offered a variety of techniques for detecting epileptiform activity. Existing seizure detection approaches rely on manually constructed feature extraction algorithms applied to EEG data [16], and they were developed using raw data collected during epileptic episodes.
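
To make the idea of an entropy-based complexity measure concrete, the sketch below computes a normalized permutation entropy. This particular measure is chosen here purely for illustration; the text does not say which entropy measure the cited studies used, and the function name, the order of 3, and the two synthetic signals are assumptions.

```python
import math
import random
from collections import Counter


def permutation_entropy(signal, order=3):
    """Normalized permutation entropy in [0, 1]; higher means less regular."""
    # Count how often each ordinal pattern of `order` consecutive samples occurs.
    patterns = Counter(
        tuple(sorted(range(order), key=lambda i: signal[start + i]))
        for start in range(len(signal) - order + 1)
    )
    total = sum(patterns.values())
    entropy = -sum(
        (count / total) * math.log(count / total) for count in patterns.values()
    )
    # Divide by the largest possible entropy, log(order!), to land in [0, 1].
    return entropy / math.log(math.factorial(order))


# Hypothetical signals: a slow sine wave (regular) and Gaussian noise (irregular).
regular = [math.sin(2 * math.pi * t / 50) for t in range(1000)]
rng = random.Random(0)
irregular = [rng.gauss(0.0, 1.0) for _ in range(1000)]

pe_regular = permutation_entropy(regular)
pe_irregular = permutation_entropy(irregular)
```

The regular signal yields the lower value, mirroring the observation that a loss of EEG complexity shows up as lower values of such measures.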

Accurately identifying the various EEG signals with different types of classifiers requires a description of the features selected by the feature extraction approach [17]. To gather features, Hamad et al. employed a technique known as differential wavelet processing and then trained a support vector machine (SVM) with a radial basis function kernel. They concluded that the proposed SVM with the Grey Wolf optimizer can aid in establishing a diagnosis of epilepsy [18]. Subasi and his colleagues developed a hybrid model that tunes SVM parameters using genetic algorithms and particle swarm optimization in order to improve efficiency and simplify the procedure. Their hybrid SVM model shows that electroencephalography is a key tool that neuroscientists use to detect seizures [19].

This strategy, however, does not remove the dependence on manual feature selection [20], and much of the classification accuracy is determined at that stage. It has also been argued that constructing a classification scheme does not require eliminating potentially detrimental features. Recent advances in deep learning offer a fresh way to overcome this challenge, and the topic has attracted considerable attention. Deep learning has earned its standing through recent successes within the larger domains of computer vision and machine learning. A significant proportion of recent research [21] did not employ feature extraction and instead fed raw EEG data to the deep learning model. Unlike most of that research, this study performs feature extraction first, using the time-dependent power spectrum descriptor (TD-PSD). The EEG input comprises three classes of samples: mild cognitive impairment (MCI), Alzheimer's disease (AD), and healthy controls (HC). Finally, a convolutional neural network (CNN) architecture is presented for classifying patients with Alzheimer's disease, and its performance is evaluated to support conclusions about the results.

2 Review of Literature

Because EEG signals are complex and nonlinear, novel machine learning and signal processing approaches are required [22]. Advances in deep learning [23] have produced algorithms capable of higher levels of abstraction, which make it possible to discard data that is not needed for the task at hand. These deep learning algorithms have risen in popularity in recent years, and their applications currently include video games [24], image processing, audio processing, and natural language processing [25]. Similar approaches have been applied successfully in the biological domain [26]. A deep CNN with 13 layers has been proposed to distinguish between healthy EEG signals, preictal EEG signals, and seizure-related EEG signals. A total of 300 EEG signals were analyzed, and a classification rate of 88.67% was achieved. The same academic team developed the first depression diagnostic tool based on EEG data, which is likewise built on a deep neural network [27].

The reported success rates ranged from 93.5% to 96%, given for the left and right hemispheres. A 13-layer CNN model trained on data from both healthy people and Parkinson's disease patients yielded an accuracy of 88.25%; the patients and the healthy subjects were picked at random. The recurrent neural network (RNN) used in this model is a long short-term memory (LSTM) network. Before being used in this study, the images were subjected to several preprocessing techniques such as segmentation, registration, smoothing, and normalization. These steps remove distracting structures from the images, such as the skull.

Visualizations of the EEG channels for linear and nonlinear data are shown in Figs. 3 and 4, respectively; they are used to categorize features in the model. After data preparation, the model is trained on sequential data that has been separated into time steps. Having learned from this data, the model predicts the subject's state six months ahead. During evaluation, the model is given the data for the 18th and 24th months and forecasts the subject's status at the 30th month. In this way, longitudinal data is analyzed with an RNN to distinguish people with AD from those who are stable. Both the input and the function go through separate preprocessing steps before normalization [28]. Once preprocessing is complete, the data is passed to LSTM and gated recurrent units so that the cells can learn to recognize the pattern of data flow.
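
The way sequential visits are turned into training and evaluation examples can be sketched as follows. This is a minimal illustration, assuming visits spaced six months apart and a history of two visits per example; the function name and the visit schedule are hypothetical and are not taken from the cited work.

```python
def sliding_windows(visits, history=2):
    """Pair each run of `history` consecutive visits with the visit after it."""
    pairs = []
    for start in range(len(visits) - history):
        inputs = visits[start:start + history]
        target = visits[start + history]
        pairs.append((inputs, target))
    return pairs


# Hypothetical visit schedule in months, spaced six months apart.
months = [6, 12, 18, 24, 30]
pairs = sliding_windows(months, history=2)

# The last pair corresponds to the evaluation setting described in the text:
# months 18 and 24 are the inputs and month 30 is the forecast target.
evaluation_inputs, evaluation_target = pairs[-1]
```

In a real model each month would be replaced by the feature vector recorded at that visit, and the pairs would be fed to the recurrent network in order.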

The results of nonrecurrent networks, commonly known as multilayer perceptrons, are compared with those of the recurrent models for each data arrangement. The classification strategy for EEG signals based on machine learning is shown in Fig. 5. Because such models need extended training on sequential data, their many trainable parameters are prone to overfitting the training data. Unsupervised feature learning has also been used with the primary purpose of identifying AD. Sparse filtering has been advocated as a strategy for learning the expressive features of brain images [29], and SoftMax regression is then used to classify the cases. The first phase includes three steps, the first two of which are training the sparse filter and calculating its weight matrix W. The learned sparse filter is then used to extract the local features present in each sample. Fig. 6 shows a straightforward example of a convolution operation in two dimensions.
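
A two-dimensional convolution of the kind referred to above can be written out in a few lines. The sketch below is illustrative only: the input values and the kernel are made up, the function name is hypothetical, and it implements the "valid" cross-correlation that CNN layers conventionally call convolution, without padding or stride.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation of a list-of-lists image with a kernel."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            # Multiply the kernel with the patch whose top-left corner is (r, c).
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 3x4 input and 2x2 kernel.
image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_valid(image, kernel)
# feature_map == [[-4, -4, 2], [-4, -4, 4]]
```

Each output value is the sum of element-wise products between the kernel and one patch of the input, so a 3x4 input and a 2x2 kernel give a 2x3 feature map.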

The goal of this article is to give a high-level overview of how machine learning is used to analyze EEG data and make clinical diagnoses of neurological illnesses.

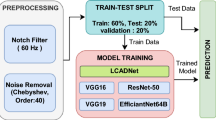

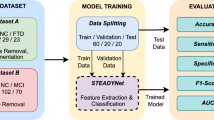

3 The Proposed Methodology

The EEG data is used to provide a more detailed assessment of the disease's phases than was previously possible. A deep convolutional neural network is proposed to classify multichannel human EEG signal data into the relevant phases, which allows a more precise analysis of human brain function [30,31,32] and is expected to improve classification performance considerably. The following components fall within the scope of this work.

3.1 Extraction of Characteristics

The discrete Fourier transform is used to express the EEG trace as a function of frequency. MRI brain slices taken from people suffering from Alzheimer's disease are shown in Fig. 7. The transform can be applied because the EEG signal has been sampled, so the trace is available as a sampled representation [33,34,35]. Feature extraction starts from Parseval's theorem, which states that the sum of the squared values of a function equals the sum of the squared values of its transform, up to the normalization of the transform.
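
Parseval's theorem can be checked numerically on a short sampled signal. The sketch below is a minimal illustration, assuming the unnormalized forward DFT, for which the energy in the frequency domain must be divided by the number of samples; the sample values are arbitrary and the function name is hypothetical.

```python
import cmath
import math


def dft(signal):
    """Unnormalized discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


# Arbitrary illustrative samples standing in for a short EEG trace.
samples = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5, 0.25, 1.0]

# Energy computed directly in the time domain.
time_energy = sum(x * x for x in samples)

# Energy computed from the spectrum; the 1/N factor matches the unnormalized DFT.
freq_energy = sum(abs(c) ** 2 for c in dft(samples)) / len(samples)
```

The two energies agree to within floating-point error, which is what allows spectral moments to be computed from the time-domain signal.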

3.2 Proposed Model

The conventional classifiers considered are KNN, SVM, and LDA, among others, and they are commonly used together for comparison. They were selected in part because of how widely each is used in machine learning. According to the data acquired, 51 of the 64 individuals with a diagnosis of mild cognitive impairment (MCI) were correctly identified, so the rate of correct diagnosis is 79.7%. KNN, on the other hand, has a sensitivity of 71.9% for detecting Alzheimer's disease. Of the samples from forty different people, only 62.5% were correctly identified. The findings also show that the correct subgroup of people with MCI is recognized in 63.7% of those who have been diagnosed with the illness. The KNN, SVM, and LDA algorithms attain accuracy levels of 71.4%, 41.1%, and 43.8%, respectively. The proposed model is shown in Fig. 8.
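
The 79.7% figure follows directly from the counts given above, as the short calculation below shows. The function name is hypothetical; only the counts 51 and 64 come from the text.

```python
def recognition_rate(correct, total):
    """Percentage of cases in a class that were correctly identified."""
    return 100.0 * correct / total


# Counts reported in the text for the MCI group.
mci_rate = recognition_rate(51, 64)
mci_rate_rounded = round(mci_rate, 1)  # 79.7
```

The same function applies to any class once the number of correctly identified cases and the class size are known.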

In other words, KNN attains considerably higher accuracy than the other conventional approaches. A new CNN-based architecture for EEG signal classification is then introduced. The CNN reaches an accuracy of 82.3% [36,37,38] and therefore outperforms the other techniques, with KNN coming next [39, 40]. LDA and SVM obtained similar values for the area under the curve (AUC), both near the bottom of the range. It has been recommended that the feature extraction technique be modified to use a different EEG signal source for classification and a reduced number of features, and that training be conducted with the proposed design. These changes may help in reaching the stated objectives.

4 Results

Another step is to normalize by the zero-order moment of each channel: the zero-order moment is first computed for each channel and is then normalized. Figure 9 illustrates how the dropout rate and the number of dense units affect the proposed model's accuracy. The moments are derived from the power spectrum, that is, from the squared spectrum. A power transformation is then applied to bring all moment-based features into the same range and to reduce the effect of noise on them; a value of 0.1 is used for this transformation in this study. Taking these characteristics into consideration, the first three extracted features are as follows:
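
The moment computation and power transformation can be sketched as below. This follows one common formulation of the time-dependent power spectrum descriptor and rests on several assumptions that the text does not confirm: each moment is taken as the root of a sum of squares, the second-order and fourth-order moments are obtained from first and second differences of the signal, and the value 0.1 is the exponent of the power transformation. All function names are hypothetical.

```python
import math


def diff(values):
    """First difference of a sequence, a discrete stand-in for the derivative."""
    return [b - a for a, b in zip(values, values[1:])]


def root_moment(values):
    """Square root of the sum of squares, computed in the time domain."""
    return math.sqrt(sum(v * v for v in values))


def power_transform(moment, lam=0.1):
    """Assumed normalization: raise the moment to the power lam, divide by lam."""
    return moment ** lam / lam


def normalized_moments(channel, lam=0.1):
    """Zero-, second- and fourth-order moments of one channel after the transform."""
    first = diff(channel)
    second = diff(first)
    raw = {
        "m0": root_moment(channel),
        "m2": root_moment(first),
        "m4": root_moment(second),
    }
    return {name: power_transform(value, lam) for name, value in raw.items()}


# Hypothetical single-channel segment.
channel = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
moments = normalized_moments(channel)
```

Because the exponent is small, the transform compresses large differences between moments, which is one way to keep moment-based features in a comparable range.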

The sparseness feature measures how much of a vector's energy is concentrated in only a few components. A vector whose elements are all the same has a sparseness index of zero, because differentiation removes its variation; for all other degrees of sparsity, the feature has a value greater than 0. In the network, the hidden layers, which are known for their ability to differentiate signals, come first and are followed by the fully connected layer. The fully connected layer is linked to all of the preceding units and makes the final classification decision. Figure 10 shows the accuracy and loss of the presented CNN during classification.

Receiver operating characteristic (ROC) curves were originally used to decide whether a signal was radio noise or not. Their relevance to medical decision making was recognized only later. Figure 11 shows the confusion matrix of the proposed CNN approach for classifying Alzheimer's patients. Consider a population with only two types of people: those who are healthy and those who are not. A screening test is given to both groups and produces a score ranging from zero to some large number, where a higher score indicates a greater risk of having the condition being tested.

The curve is obtained by plotting the true positive rate (TPR) against the false positive rate (FPR), the proportion of negative cases that the test flags as positive, as the decision threshold varies. In machine learning, the TPR is also referred to as sensitivity, recall, or detection probability; all of these are names for the same quantity. A better classifier has an ROC curve that lies closer to the upper left corner. Once the TPR and FPR have been computed at each threshold, a smoother curve can be obtained by fitting a curve to the points. The TPR is the number of true positives divided by the total number of actual positives, and the FPR is the number of false positives divided by the total number of actual negatives. The values of both quantities are given in the table below.
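
The construction of the curve from TPR and FPR values can be sketched as follows. This is a minimal illustration with made-up scores and labels, and the function names are hypothetical; it uses the standard definitions, with TPR as true positives over all actual positives and FPR as false positives over all actual negatives, and a trapezoidal rule for the area under the curve.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) pairs, one per threshold, for labels with 1 = diseased."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        # A case is flagged as positive when its score reaches the threshold.
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Hypothetical screening scores; a higher score means a higher risk.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]
curve = roc_points(scores, labels)
auc = area_under_curve(curve)
```

For these six cases the curve runs from (0, 0) to (1, 1) and the area under it is 8/9, which reflects the single healthy case that scores above one diseased case.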

Multichannel EEG data was recorded using standard monopolar connections with electrodes on the earlobes [34]. The electrodes were placed in the standard positions shown in Fig. 3. The subjects kept their eyes closed during the recording. The same hierarchical organization of the brain may influence a range of distinct brain regions. During recording, electrodes on the head pick up the electrical activity that makes up the electroencephalogram. Each signal lasted 300 s, and the sampling rate ranged from 256 to 1024 samples per second. For feature extraction, only the first 180 s of each signal is used and is converted to 256 samples per second, a restriction intended to keep the data complete and correct. The sampling frequency, also known as the sample rate, is the number of samples taken at regularly spaced intervals per unit of time.
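
Selecting a 180 s segment and reducing it to 256 samples per second can be sketched as below. The sketch assumes a 1024 samples per second recording, takes the window from 60 s to 240 s (the start time is a parameter, since the text also mentions the first 180 s), and downsamples by averaging blocks of four samples as a crude stand-in for a proper anti-aliasing filter. The function names and the placeholder recording are hypothetical.

```python
def extract_segment(signal, fs, start_s, duration_s):
    """Slice `duration_s` seconds from `signal`, starting at `start_s` seconds."""
    start = int(start_s * fs)
    return signal[start:start + int(duration_s * fs)]


def decimate_by_averaging(signal, factor):
    """Downsample by averaging non-overlapping blocks of `factor` samples."""
    usable = len(signal) - len(signal) % factor
    return [sum(signal[i:i + factor]) / factor for i in range(0, usable, factor)]


fs_in, fs_out = 1024, 256

# Placeholder 300 s recording; real data would come from the EEG amplifier.
recording = [float(i % 7) for i in range(300 * fs_in)]

segment = extract_segment(recording, fs_in, start_s=60, duration_s=180)
resampled = decimate_by_averaging(segment, fs_in // fs_out)
```

The result holds 180 s at 256 samples per second, that is, 46,080 samples per channel.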

5 Conclusion

The sample sets were gathered using a random sampling approach, and each patient group supplied its own collection of diagnostic samples for evaluation. KNN, SVM, and LDA serve as traditional classification approaches applied to the final features, and performance measures are used to confirm and support the results. To reduce the number of artifacts created by the EEG's background activity, 180 s of each signal (between 60 and 240 s) is retained and converted to 256 samples per second. The extraction of features from 256 EEG data sets yields seven features. KNN has a sensitivity of 71.9% for detecting Alzheimer's disease, and the correct subgroup of people with MCI is recognized in 63.7% of those who have been diagnosed. The KNN, SVM, and LDA algorithms attain accuracy levels of 71.4%, 41.1%, and 43.8%, respectively, whereas the CNN reaches an accuracy of 82.3%, with KNN coming next. It has been recommended that the feature extraction technique be modified to use a different EEG signal source for classification and a reduced number of features.

References

World Health Organization, Alzheimer’s Disease International. Dementia: A Public Health Priority, vol. 2013, World Health Organization, Geneva (2012)

Duthey, B.: Background paper 6.11: Alzheimer disease and other dementias. Public Health Approach Innovation 6, 1–74 (2013)

Prince, M., Wimo, A., Guerchet, M., et al.: The global impact of dementia: an analysis of prevalence, incidence, cost and trends. Alzheimer’s Disease Int. 2015 (2015)

Lee, E.E., Chang, B., Huege, S., Hirst, J.: Complex clinical intersection: palliative care in patients with dementia. Am. J. Geriatr. Psychiatry 26(2), 224–234 (2018)

Gupta, L.K., Koundal, D., Mongia, S.: Explainable methods for image-based deep learning: a review. Arch. Comput. Methods Eng., 1–16 (2023)

Nair, R., Alhudhaif, A., Koundal, D., Doewes, R.I., Sharma, P.: Deep learning-based COVID-19 detection system using pulmonary CT scans. Turk. J. Electr. Eng. Comput. Sci. 29(8), 2716–2727 (2021)

Nair, R., Soni, M., Bajpai, B., Dhiman, G., Sagayam, K.: Predicting the death rate around the world due to COVID-19 using regression analysis. Int. J. Swarm Intell. Res. 13(2), 1–13 (2022). https://doi.org/10.4018/ijsir.287545

Shambhu, S., Koundal, D., Das, P., Sharma, C.: Binary classification of covid-19 ct images using cnn: Covid diagnosis using ct. Int. J. E-Health Med. Commun. (IJEHMC) 13(2), 1–13 (2021)

Mitchell, A.J.: A meta-analysis of the accuracy of the mini-mental state examination in the detection of dementia and mild cognitive impairment. J. Psychiatr. Res. 43(4), 411–431 (2009)

Koundal, D.: Texture-based image segmentation using neutrosophic clustering. IET Image Proc. 11(8), 640–645 (2017)

Anand, V., Gupta, S., Koundal, D., Singh, K.: Fusion of U-Net and CNN model for segmentation and classification of skin lesion from dermoscopy images. Expert Syst. Appl. 213, 119230 (2023)

Kashyap, R.: Machine learning for internet of things. Adv. Wireless Technol. Telecommun., 57–83 (2019). https://doi.org/10.4018/978-1-5225-7458-3.ch003 [Accessed 30 Aug 2022]

Kashyap, R.: Object boundary detection through robust active contour based method with global information. Int. J. Image Mining, 3(1), 22 (2018). https://doi.org/10.1504/ijim.2018.10014063 [Accessed 30 Aug 2022]

Al-Qazzaz, N.K., Ali, S.H.B.M.D., Ahmad, S.A., Chellappan, K., Islam, M.S., Escudero, J.: Role of EEG as biomarker in the early detection and classification of dementia. Sci. World J., 2014, 16 (2014) Article ID 906038

Montine, T.J., Phelps, C.H., Beach, T.G., et al.: National institute on aging-Alzheimer’s association guidelines for the neuropathologic assessment of Alzheimer’s disease: a practical approach. Acta Neuropathol. 123(1), 1–11 (2012)

Wu, L., Wu, L., Chen, Y., Zhou, J.: A promising method to distinguish vascular dementia from Alzheimer’s disease with standardized low-resolution brain electromagnetic tomography and quantitative EEG. Clin. EEG Neurosci. 45(3), 152–157 (2014)

Dubois, B., Feldman, H.H., Jacova, C., et al.: Advancing research diagnostic criteria for Alzheimer’s disease: the IWG-2 criteria. Lancet Neurol. 13(6), 614–629 (2014)

Ferreira, D., Perestelo-Pérez, L., Westman, E., Wahlund, L.O., Sarría, A., Serrano-Aguilar, P.: Meta-review of CSF core biomarkers in Alzheimer’s disease: the state-of-the-art after the new revised diagnostic criteria. Frontiers Aging Neurosci. 6, 47 (2014)

Olsson, B., Lautner, R., Andreasson, U., et al.: CSF and blood biomarkers for the diagnosis of Alzheimer’s disease: a systematic review and meta-analysis. Lancet Neurol. 15(7), 673–684 (2016)

Seeck, M., Koessler, L., Bast, T., et al.: The standardized EEG electrode array of the IFCN. Clin. Neurophysiol. 128(10), 2070–2077 (2017)

Hu, S., Lai, Y., Valdes-Sosa, P.A., Bringas-Vega, M.L., Yao, D.: How do reference montage and electrodes setup affect the measured scalp EEG potentials? J. Neural Eng., 15(2) (2018) Article 026013

Wang, J., Barstein, J., Ethridge, L.E., Mosconi, M.W., Takarae, Y., Sweeney, J.A.: Resting-state EEG abnormalities in autism spectrum disorders. J. Neurodevelop. Disorders, 5(1) (2013)

Faust, O., Acharya, U.R., Adeli, H., Adeli, A.: Wavelet-based EEG processing for computer-aided seizure detection and epilepsy diagnosis. Seizure 26, 56–64 (2015)

Muniz, C.F., Shenoy, A.V., OʼConnor, K.L., et al.: Clinical development and implementation of an institutional guideline for prospective EEG monitoring and reporting of delayed cerebral ischemia. J. Clin. Neurophys., 33(3), 217–226 (2016)

Nishida, K., Yoshimura, M., Isotani, T., et al.: Differences in quantitative EEG between frontotemporal dementia and Alzheimer’s disease as revealed by LORETA. Clin. Neurophysiol. 122(9), 1718–1725 (2011)

Sakalle, A., Tomar, P., Bhardwaj, H., Alim, M.: A modified LSTM framework for analyzing COVID-19 effect on emotion and mental health during pandemic using the EEG signals. J. Healthcare Eng., (2022)

Sakalle, A., Tomar, P., Bhardwaj, H., Iqbal, A., Sakalle, M., Bhardwaj, A., Ibrahim, W.: Genetic programming-based feature selection for emotion classification using EEG signal. J. Healthcare Eng. 2022 (2022)

Neto, E., Biessmann, F., Aurlien, H., Nordby, H., Eichele, T.: Regularized linear discriminant analysis of EEG features in dementia patients. Frontiers Aging Neurosci., 8 (2016)

Colloby, S.J., Cromarty, R.A., Peraza, L.R., et al.: Multimodal EEG-MRI in the differential diagnosis of Alzheimer’s disease and dementia with Lewy bodies. J. Psychiatr. Res. 78, 48–55 (2016)

Garn, H., Coronel, C., Waser, M., Caravias, G., Ransmayr, G.: Differential diagnosis between patients with probable Alzheimer’s disease, Parkinson’s disease dementia, or dementia with Lewy bodies and frontotemporal dementia, behavioral variant, using quantitative electroencephalographic features. J. Neural Transm. 124(5), 569–581 (2017)

Navadia, N.R., Kaur, G., Bhardwaj, H., Singh, T., Sakalle, A., Acharya, D., Bhardwaj, A.: Applications of cloud-based internet of things. In: Integration and Implementation of the Internet of Things Through Cloud Computing, pp. 65–84. IGI Global (2021)

Bhardwaj, H., Tomar, P., Sakalle, A., Acharya, D., Badal, T., Bhardwaj, A.: A DeepLSTM model for personality traits classification using EEG signals. IETE J. Res., 1–9 (2021)

Sörnmo, L., Laguna, P.: Bioelectrical Signal Processing in Cardiac and Neurological Applications. Academic Press (2005)

Dauwels, J., Srinivasan, K., Ramasubba Reddy, M., et al.: Slowing and loss of complexity in Alzheimer’s EEG: two sides of the same coin? Int. J. Alzheimer’s Disease 2011, 1–10 (2011), Article 539621

Alberdi, A., Aztiria, A., Basarab, A.: On the early diagnosis of Alzheimer’s disease from multimodal signals: a survey. Artif. Intell. Med. 71, 1–29 (2016)

Malek, N., Baker, M.R., Mann, C., Greene, J.: Electroencephalographic markers in dementia. Acta Neurol. Scand. 135(4), 388–393 (2017)

Adeli, H., Ghosh-Dastidar, S., Dadmehr, N.: Alzheimer’s disease: models of computation and analysis of EEGs. Clin. EEG Neurosci. 36(3), 131–140 (2005)

Jelles, B., van Birgelen, J.H., Slaets, J.P., Hekster, R.E., Jonkman, E.J., Stam, C.J.: Decrease of non-linear structure in the EEG of Alzheimer patients compared to healthy controls. Clin. Neurophysiol. 110(7), 1159–1167 (1999)

Jeong, J., Kim, S.Y., Han, S.H.: Non-linear dynamical analysis of the EEG in Alzheimer’s disease with optimal embedding dimension. Electroencephalogr. Clin. Neurophysiol. 106(3), 220–228 (1998)

Jeong, J., Chae, J.H., Kim, S.Y., Han, S.H.: Non-linear dynamic analysis of the EEG in patients with Alzheimer’s disease and vascular dementia. J. Clin. Neurophysiol. 18(1), 58–67 (2001)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Benavides López, D., Díaz-Cadena, A., Chávez Cujilán, Y., Botto-Tobar, M. (2023). Electroencephalogram Analysis Using Convolutional Neural Networks in Order to Diagnose Alzheimer’s Disease. In: Koundal, D., Jain, D.K., Guo, Y., Ashour, A.S., Zaguia, A. (eds) Data Analysis for Neurodegenerative Disorders. Cognitive Technologies. Springer, Singapore. https://doi.org/10.1007/978-981-99-2154-6_7

DOI: https://doi.org/10.1007/978-981-99-2154-6_7

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-2153-9

Online ISBN: 978-981-99-2154-6

eBook Packages: Computer Science, Computer Science (R0)