Abstract

Problem – Both summative and formative assessments are employed in medical education. Summative assessment (SA) evaluates a student’s learning progress and provides concrete grades or pass/fail decisions. Formative assessment (FA) provides feedback and improves students’ learning behavior. However, less is known about the impact of FA on students’ cognitive construction. This study examines the effects of FA implementation on students’ cognitive performance in the final SA at Jinan University School of Medicine. Intervention – FA based on the teaching management platform Rain Classroom was implemented consecutively in two cohorts of clinical medicine students. Their final SA scores, constructed on Bloom’s taxonomy levels, were compared. A questionnaire on students’ learning activities and perceptions was administered at the end of each term. Outcome – After the first round of FA implementation, the academic performance of the 2018 students was not significantly improved at any Bloom’s taxonomy level compared with the 2017 students, who had been evaluated only by final SA. Further measures were then taken to strengthen students’ active learning, and timely feedback from teachers and peers was supplied on Rain Classroom. After the second round of FA, the performance of the 2019 students at the analyzing level was significantly higher than that of the 2017 students, although it did not differ significantly from that of the 2018 students. The survey indicated that FA and its built-in feedback effectively cultivated students’ active learning and reinforced higher-order thinking and problem-solving skills related to higher cognitive levels. The Rain Classroom platform facilitates FA implementation. Conclusion – FA should be incorporated into the regular assessment system for optimal educational outcomes.
The development of cognitive abilities should be emphasized when designing assessment methods. Our Rain Classroom-based FA provides an example of online assessment for blended learning.


1 Existing Problems in Medical Education Assessment

Training future physicians is a tremendous undertaking that requires empirically supported learning methods and assessment techniques. Assessment is a pivotal aspect of medical education: it is intended to measure students’ knowledge, skills, and attitudes, and therefore acts as a powerful driver of learning [1]. It also represents the quality of students and universities to the outside world. Teachers should therefore strive to assess students in the way most beneficial to them, and to meet the changing demands of new generations and of the different assessment stakeholders. However, the lack of evaluation of higher-order thinking skills and of the affective and psychomotor domains remains a problem that perplexes educators.

There are generally two types of assessment: summative assessment (SA) and formative assessment (FA). Both approaches have benefits and limitations. SA is well established: teachers measure the achievement of learning goals at the end of a course. Because future doctors must meet minimal safety standards, SA has long been regarded as a reliable indicator of medical students’ learning outcomes and academic performance. FA, by contrast, provides feedback that students can use to improve their learning. Educators generally regard FA as assessment for learning (AfL) and SA as assessment of learning (AoL).

The objectives of FA are to inform students of the knowledge they will require in the future, to identify gaps in students’ performance, to assist improvement in a timely manner, and to provide feedback to teachers about how to assist students. FA has several advantages in medical education. It focuses on specific content, topics or skills and can be conducted frequently; it measures students’ progress over time and pertains to long-term competencies and expertise; and it can improve students’ understanding of the subject matter, consolidate learning, reinforce learning behavior, support continuous improvement, and motivate students to learn [2, 3]. However, FA has not been sufficiently emphasized at medical schools in China. Take histology and embryology (HE), a basic medical science course, as an example. In a nationwide survey of the current state of HE teaching conducted by our team in 2018, 32 of the 66 surveyed medical schools in mainland China had implemented FA in all programs [4]. The survey also revealed challenges in implementing FA, including interrater reliability, variability in the predictability of performance scores, difficulty tracking the long-term development of clinical thinking, and providing timely feedback.

Nowadays, the progress of smart teaching tools and learning management platforms is changing the formats of assessment. Digitalized assessment can capture and grade students’ instant responses, provide timely feedback, and generate reports. Embedding assessments into a learning management platform facilitates FA, making it more effective, sensitive and formative. Nevertheless, beyond these technical improvements, the exploration of new approaches to assessment is only just emerging. Developing reliable FA formats that use these powerful techniques to cultivate high levels of cognitive ability in medical students is a great challenge for educators. In the current study, we performed action research (AR) on FA in an HE course based on Rain Classroom, a smart teaching tool, to explore and optimize feasible assessments aimed at improving higher-level cognitive abilities.

2 Literature Review

2.1 Assessment in Medical Education

Essentially, assessment is a fundamental component of both learning and teaching. It is defined as a process of collecting and evaluating information to measure students’ progress [5]. Educators must also be able to discriminate among students, and assessment is needed to provide institutional quality assurance to key stakeholders. Assessment is therefore a powerful tool in the educator’s armory. It is well established that assessment shapes students’ experience and influences their behavior more than any other element of their education, and it therefore deserves careful consideration and practice [6].

When exploring assessment, six key questions should be addressed: why, what, how, when, where and who [7]. Among them, many attempts have focused on how to assess students. SA has an outward focus, serving wider societal aims rather than the students themselves. When students are placed at the center of the examination process, however, assessment should foster and motivate their learning and equip them with the skills needed for lifelong learning [6]. Cultivating medical students’ clinical ability and supporting their personalized development are goals of medical education, and this is what FA serves to promote rather than simply measure. FA provides teachers and students with timely and frequent feedback on mastery of course material and learning objectives. In essence, teachers sample student learning and provide feedback based on the results to modify instruction and the learning experience, and students can use this feedback to identify weaknesses in their study. Both SA and FA can be applied within the same assessment modality, and care should be taken not to miss opportunities to fully develop the potential of a given assessment. By incorporating FA through the provision of feedback and the stimulation of reflection, students’ learning outcomes, as measured by SA, can be substantially improved [8].

Next, what blueprint should be used to assess whether students have acquired the necessary knowledge, skills and attitudes? Constructive alignment ensures that learning objectives are carefully mapped against assessment to maximize validity [9]. The four main elements that need to be aligned are content, intended learning outcomes, pedagogies and assessment principles. Many models can serve as an assessment blueprint, including Miller’s pyramid [10], Bloom’s taxonomy [11] and the Dreyfus and Dreyfus spectrum of skills acquisition [12], to name but a few. The common thread among them is the evolution of student knowledge from the basic information of a novice to the rich information of an expert [9].

2.2 Correlation of Assessment with Bloom’s Taxonomy in Medical Education

Bloom’s taxonomy is widely used in medical education research to stratify learning activities into cognitive levels [13, 14]. It categorizes cognitive activities into six hierarchical levels, ranging from basic recall to higher educational objectives such as application, analysis and evaluation [11]. Bloom’s taxonomy has been adopted as a valuable tool for examining students’ learning and for classifying examination questions according to the cognitive levels and skills they are intended to assess. Various modified versions of Bloom’s taxonomy have been published to better serve specific areas, to assess student performance and to rate educational tasks [15, 16].

Anatomy, a core basic medical subject with high clinical relevance, continues to attract teachers to research on constructing assessments within the framework of learning taxonomies [17]. Phillips et al. attempted to categorize students’ cognitive levels in radiological anatomy using a revised version of Bloom’s taxonomy [16]. Zaidi et al. presented a new, subject-specific rating tool for histology MCQs rooted in Bloom’s taxonomy, which allowed teachers to grade histology MCQs reproducibly by cognitive level and to create more challenging examination questions [13]. They reported that incorporating microscopic images that were new to the learners was often an effective way of elevating histology MCQs to higher Bloom’s taxonomy levels.

3 Rain Classroom-Based FA Continuum of HE Course

Two rounds of AR, each a cycle of planning, acting, observing, and reflecting, were implemented in this project.

3.1 The Structure of FA and the Techniques Involved in the HE Course

At the International School of Jinan University (JNU), the HE course comprises 108 teaching hours for students of the clinical medicine (CM) program, divided into 27 two-hour theoretical lectures and 18 three-hour practical sessions. The course provides preliminary medical knowledge to first-year medical students, introducing the microstructure of tissues, organs and systems and their embryonic development. Currently, teaching staff at most medical schools in China use written tests, that is, SA, to assess students’ knowledge at the end of the semester. At JNU, students are required to take examinations in both HE theory and practice.

Ideally, an assessment process should address FA and SA together. In the present research, teachers included FA as part of the grade, which students accumulated toward their final course grade. FA was performed using a variety of strategies, taking into account the importance of each area of knowledge, skills and attitudes. The assessment methods used in our FA format were attendance, weekly exercises, drawing/microphotography, three mid-semester quizzes, group activities and presentations, and discussion. Together these components accounted for 50% of the final course mark. The remaining 50% came from the final paper test of both theory and practice, which took the form of SA. The primary SA methods commonly used in undergraduate medical education at JNU are multiple choice questions (MCQs), fill-in-the-blank questions, short answer questions, essay questions, true-or-false questions and case analysis. Each assessment method has different strengths in testing a student’s knowledge, skills or attitudes, so a carefully balanced combination was needed to reflect the assessment blueprint comprehensively. The comprehensive assessment was given at the end of the course.
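The mark composition described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ grading procedure: the 50/50 split between the FA components and the final paper test comes from the text, but the paper does not state how the individual FA components were weighted, so the equal weighting and the component names below are assumptions.

```python
# Minimal sketch of the 50/50 mark composition described above.
# ASSUMPTION: the weights of the individual FA components are not given,
# so they are weighted equally here purely for illustration.
FA_COMPONENTS = (
    "attendance",
    "weekly_exercises",
    "drawing_microphotography",
    "midsemester_quizzes",
    "group_presentation",
    "discussion",
)
FA_SHARE = 0.5  # FA components together: 50% of the final mark
SA_SHARE = 0.5  # final paper test (theory and practice): 50%


def final_mark(fa_scores: dict, sa_score: float) -> float:
    """Combine FA component scores and the SA score, all on a 0-100 scale."""
    missing = [c for c in FA_COMPONENTS if c not in fa_scores]
    if missing:
        raise ValueError(f"missing FA components: {missing}")
    fa_average = sum(fa_scores[c] for c in FA_COMPONENTS) / len(FA_COMPONENTS)
    return FA_SHARE * fa_average + SA_SHARE * sa_score


# Example: a student averaging 80 on FA and scoring 70 on the final paper.
print(final_mark({c: 80 for c in FA_COMPONENTS}, 70))  # 75.0
```

Keeping the two shares as named constants makes it easy to see how a different FA/SA balance would change the final mark.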

In the present study, FA was performed with the aid of Rain Classroom, a WeChat mini program developed by MOOC-CN Education (Tsinghua Holdings Co Ltd, Beijing). Rain Classroom, an easy-to-use PowerPoint plug-in supported by WeChat, has been linked with the teaching management system of JNU since 2017. By scanning a QR code with WeChat (Fig. 1), students can view the PowerPoint file on their mobile phones and interact with teachers through “Danmu” (on-screen bullet comments), by marking content as “unclear”, or by answering questions on their phones. WeChat (Tencent, Shenzhen) is the Chinese online communication platform with which teachers and students are most familiar. Rain Classroom supports comprehensive teaching and learning interaction before, during and after class. Throughout the online and offline learning process, Rain Classroom dynamically records students’ learning behavior and generates data, providing innovative solutions for comprehensively quantifying teaching and learning activities [18]. By analyzing and integrating these data, teachers can quantitatively evaluate teaching effects and learning outcomes and dynamically adjust their teaching strategies according to the reports.
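To make the idea of turning recorded interactions into per-student reports concrete, the sketch below counts events per student and lists students who never produced a given event. It assumes a hypothetical flat export of `(student_id, event_type)` pairs; the actual Rain Classroom export format is not described in this paper, and the event names are illustrative only.

```python
from collections import Counter, defaultdict

# HYPOTHETICAL export format: one (student_id, event_type) pair per action.
# Event names loosely follow the interactions named in the text.
records = [
    ("s01", "checkin"), ("s01", "answer"), ("s01", "danmu"),
    ("s02", "checkin"), ("s02", "unclear"), ("s02", "answer"), ("s02", "answer"),
    ("s03", "answer"),
]


def engagement_report(records):
    """Count how often each student produced each type of event."""
    report = defaultdict(Counter)
    for student, event in records:
        report[student][event] += 1
    return report


def students_without(report, event):
    """Students who never produced the given event (e.g. a missed check-in)."""
    return sorted(s for s, counts in report.items() if counts[event] == 0)


report = engagement_report(records)
print(dict(report["s02"]))                  # {'checkin': 1, 'unclear': 1, 'answer': 2}
print(students_without(report, "checkin"))  # ['s03']
```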

3.2 The Construction of SA Based on Bloom’s Cognitive Taxonomy

When developing assessment methods, three criteria emphasized in the HE syllabus served as guidelines: “remembering of basic knowledge”, “synthesizing and applying”, and “analyzing”. Assessment formats were constructed based on Bloom’s cognitive pyramid (remember, understand, apply, analyze, evaluate and create). “Remembering of basic knowledge” corresponds to “remember” and “understand” in Bloom’s taxonomy, “synthesizing and applying” corresponds to “apply”, and “analyzing” can be assessed at the “analyze” and “evaluate” levels. “Create” is uncommon in medical science. The “remember” and “understand” categories were collapsed into a single category called “knowledge recall”, and any item in this category was considered a “low”-level cognitive item; items in any other category were considered “high”-level cognitive items [14]. The items were constructed and then categorized independently by Bloom’s level of cognition by multiple experienced teachers of the course, and finally reviewed by the department director. These assessments fulfilled specific educational objectives. Table 1 shows the composition of the written HE examination at the Medical School of JNU over the last three years. Examination results were analyzed over this three-year period, with the 2017 students as the control group and the 2018 and 2019 students as treatment groups.
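The mapping between syllabus criteria, Bloom’s levels and the collapsed low/high categories can be written down as a small lookup table, which makes the classification rules explicit and easy to audit. The dictionaries follow the text above; the function names and the four-item bank are hypothetical.

```python
# Bloom's levels in hierarchical order.
BLOOM_LEVELS = ("remember", "understand", "apply", "analyze", "evaluate", "create")

# Syllabus criteria -> Bloom's levels, as described in the text.
# "create" is deliberately left unmapped (uncommon in medical science).
SYLLABUS_TO_BLOOM = {
    "remembering of basic knowledge": ("remember", "understand"),
    "synthesizing and applying": ("apply",),
    "analyzing": ("analyze", "evaluate"),
}


def syllabus_criterion(level: str) -> str:
    """Return the syllabus criterion that a Bloom's level falls under."""
    for criterion, levels in SYLLABUS_TO_BLOOM.items():
        if level in levels:
            return criterion
    raise ValueError(f"no syllabus criterion for Bloom level {level!r}")


def cognition(level: str) -> str:
    """Collapse remember/understand into low-level 'knowledge recall'; all else is high."""
    if level not in BLOOM_LEVELS:
        raise ValueError(f"unknown Bloom level {level!r}")
    return "low" if level in ("remember", "understand") else "high"


# HYPOTHETICAL item bank: item ID -> Bloom's level agreed by the reviewers.
items = {"Q1": "remember", "Q2": "understand", "Q3": "apply", "Q4": "analyze"}
high_share = sum(cognition(lvl) == "high" for lvl in items.values()) / len(items)
print(high_share)  # 0.5
```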

4 Action Research of FA Based on Rain Classroom

4.1 First Round of Action

Student Participants.

Since this was a pilot study, 61 medical students were recruited from the International School of JNU, comprising all CM students enrolled at the International School in 2018. The pilot project was approved by the Teaching Affairs Unit of JNU for the first term of the 2018–2019 academic year. All students enrolled in the study gave written consent.

Program Development.

Use of Rain Classroom was compulsory for students during the study; meaningful learning and teaching data were acquired and recorded by Rain Classroom, and the scores obtained contributed to the students’ final assessment. Specifically, homework was delivered online after each lecture, and the three mid-semester quizzes were also distributed through Rain Classroom, with students asked to complete them within a specified time. Drawings and microstructure pictures were uploaded on completion and scored by peers.

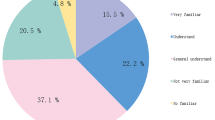

A self-developed questionnaire was used to obtain feedback on the FA experience from the participating medical students. The survey contained 20 items, 14 of which used a five-point Likert scale on which students rated their response as 1 (strongly disagree), 2 (disagree), 3 (neutral), 4 (agree), or 5 (strongly agree). The survey was piloted with faculty members in the first author’s department, mainly to ensure the clarity of the questions, and was subsequently revised based on their feedback. It was distributed via Sojump (Ranxing, Changsha, China), and all respondents completed it online. Responses were captured anonymously in Sojump, which de-identified the aggregated information. All responses were collected and reviewed.

All statistical and graphical analyses were performed using the SPSS statistical package, v17.0 (IBM Corp., Armonk, NY). Examination scores were compared using a two-tailed independent samples t-test. Results are presented as mean ± standard deviation (SD), and statistical significance was set at P < 0.05 for all analyses. The internal consistency of the questionnaire responses was assessed with Cronbach’s alpha, and Kendall’s tau-b was used to investigate the relationships among the questionnaire items.
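Cronbach’s alpha for k items is α = k/(k − 1) × (1 − Σ σ_i² / σ_T²), where σ_i² is the variance of item i and σ_T² is the variance of respondents’ total scores. A minimal Python sketch of this standard formula follows, using sample variances and made-up responses; it illustrates the statistic and is not the SPSS procedure itself.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    item_columns = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# HYPOTHETICAL 5-point Likert responses: 4 respondents x 3 items.
demo = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
]
print(round(cronbach_alpha(demo), 3))  # 0.939
```

Alpha approaches 1 when items rise and fall together across respondents, which is why it is read as a measure of internal consistency.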

Observation and Reflection.

The impact of FA in the HE course was assessed by comparing the examination scores of CM students in 2018 (n = 61) with those of students in 2017 (n = 39, control). Each student’s scores for each Bloom’s level were extracted from his/her overall final examination paper, and the percentage of marks earned out of the total available for each Bloom’s level part was calculated. The class average for final examination items at each Bloom’s level of cognition was then calculated. Figure 2A shows the mean performance on knowledge, application, and analyzing HE items for the 2018 and 2017 students. On knowledge items, the 2018 students averaged 75.74 ± 11.46 and the 2017 students 73.28 ± 12.17 (P = 0.814). On application items, the 2018 students averaged 75.57 ± 13.67 and the 2017 students 73.77 ± 16.30 (P = 0.915). Finally, on analyzing items, the 2018 students averaged 71.46 ± 17.47 and the 2017 students 65.49 ± 19.27 (P = 0.156) (Fig. 2A). Thus, although the FA cohort scored numerically higher at every Bloom’s level, its percentage of correct scores was not significantly higher than that of the traditional SA cohort at any of the three levels.
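The per-level scoring described above (marks earned divided by marks available within each Bloom’s category, then averaged over the class) can be sketched as follows. The item IDs, mark allocations and student scores are hypothetical, and an unanswered item is assumed to score zero.

```python
from statistics import mean, stdev


def level_percentages(item_scores, item_max, item_level):
    """Percent of available marks a student earned within each Bloom's category."""
    earned, available = {}, {}
    for item, max_marks in item_max.items():
        level = item_level[item]
        # ASSUMPTION: an item missing from the student's record scores zero.
        earned[level] = earned.get(level, 0) + item_scores.get(item, 0)
        available[level] = available.get(level, 0) + max_marks
    return {level: 100 * earned[level] / available[level] for level in available}


def class_summary(students, item_max, item_level):
    """Mean and SD of the per-student percentages for each Bloom's category."""
    per_student = [level_percentages(s, item_max, item_level) for s in students]
    levels = sorted({level for p in per_student for level in p})
    return {
        level: (mean([p[level] for p in per_student]),
                stdev([p[level] for p in per_student]))
        for level in levels
    }


# HYPOTHETICAL paper: maximum marks and Bloom's category of each item.
item_max = {"q1": 10, "q2": 10, "q3": 20}
item_level = {"q1": "knowledge", "q2": "knowledge", "q3": "analyzing"}
students = [
    {"q1": 10, "q2": 5, "q3": 10},  # knowledge 75%, analyzing 50%
    {"q1": 8, "q2": 8, "q3": 20},   # knowledge 80%, analyzing 100%
]
for level, (m, sd) in class_summary(students, item_max, item_level).items():
    print(f"{level}: {m:.2f} ± {sd:.2f}")
```

Expressing each category as a percentage of its own available marks keeps the comparison fair even when the categories carry different total marks on the paper.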

In total, 53 of the 61 FA participants completed the questionnaire at the end of the study. The items covered two main areas: students’ attitudes toward and evaluation of FA, and the role of Rain Classroom in FA. Cronbach’s alpha for the 14 Likert items was 0.951, indicating a good level of internal consistency and reliability of the survey tool. Kendall’s tau-b was used to test the correlations among the questionnaire items; the correlation coefficients ranged from 0.162 to 0.977, indicating that the items were positively correlated.
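Kendall’s tau-b corrects the plain tau for the many ties that five-point Likert items produce: τ_b = (n_c − n_d) / √((n_0 − n_1)(n_0 − n_2)), with n_c and n_d the numbers of concordant and discordant pairs, n_0 the total number of pairs, and n_1 and n_2 the pairs tied on each item. The sketch below is a straightforward O(n²) implementation for illustration; in practice `scipy.stats.kendalltau` computes tau-b by default. The example scores are hypothetical.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equally long sequences of ordinal scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    concordant = discordant = tied_x = tied_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0:
            tied_x += 1  # pair tied on x (possibly also on y)
        if dy == 0:
            tied_y += 1  # pair tied on y (possibly also on x)
        if dx and dy:
            if (dx > 0) == (dy > 0):
                concordant += 1
            else:
                discordant += 1
    n0 = len(x) * (len(x) - 1) // 2  # total number of pairs
    denom = sqrt((n0 - tied_x) * (n0 - tied_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return (concordant - discordant) / denom


# HYPOTHETICAL answers of four respondents to two Likert items.
print(kendall_tau_b([1, 2, 2, 3], [1, 2, 3, 3]))  # 0.8
```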

Overall, the questionnaire indicated that the majority of participants (67.8%) were positive about FA (3.77 ± 0.97, Q1), especially about the feedback it supplied: 81.1% of students believed that FA had helped them recognize the gap between what they knew and what they should know (4.19 ± 0.74, Q5). Specifically, 71.7% of students thought that FA enhanced their understanding of the knowledge points (3.87 ± 0.92, Q6), and that the presentation and report sessions promoted further understanding and application of the knowledge points (4.08 ± 0.90, Q7). In general, students thought that Rain Classroom supported FA well (3.75 ± 0.92, Q9): FA supported by Rain Classroom provided feedback from teachers more efficiently (3.94 ± 0.91, Q12) and enhanced teacher–student interaction (3.83 ± 0.91, Q14), and Rain Classroom facilitated self-assessment during learning (3.74 ± 1.02, Q11). However, only around 50% of participants thought that FA served as learning guidance for the final examination (3.51 ± 0.95, Q2) or that FA had a better impact than SA on future clinical thinking (3.53 ± 1.08, Q8). Thus, participants generally acknowledged and benefited from the FA format, but some students did not “buy into” the idea that FA was positively related to final examination scores or that it would contribute to their clinical judgement as future doctors (Table 2). Correlation analyses showed that “FA supported by Rain Classroom enhances interaction between teachers and students” was strongly associated with both “FA enhances understanding of the knowledge points” (r = 0.935, P < 0.001, n = 53) and “the check-in system supported by Rain Classroom contributes to high attendance of the students” (r = 0.977, P < 0.001, n = 53).
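The item summaries above (share of positive responses, mean ± SD) can be reproduced with a few lines of Python. The sketch assumes that a “positive” response means a rating of 4 or 5, which the text does not state explicitly, and it uses hypothetical responses rather than the study data.

```python
from statistics import mean, stdev


def likert_summary(scores):
    """Summarize one five-point Likert item.

    ASSUMPTION: 'positive' responses are taken to be 4 (agree) or 5 (strongly agree).
    """
    if len(scores) < 2 or any(s not in (1, 2, 3, 4, 5) for s in scores):
        raise ValueError("expected at least two scores on a 1-5 scale")
    positive = sum(1 for s in scores if s >= 4)
    return {
        "percent_positive": 100 * positive / len(scores),
        "mean": mean(scores),
        "sd": stdev(scores),
    }


# HYPOTHETICAL responses from five students to a single item.
summary = likert_summary([5, 4, 4, 3, 2])
print(f"{summary['percent_positive']:.1f}% positive, "
      f"{summary['mean']:.2f} ± {summary['sd']:.2f}")  # 60.0% positive, 3.60 ± 1.14
```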

4.2 Second Round of Action

Student Participants.

For the second round of the study, 58 CM students from the International School of JNU were recruited in the first term of the 2019–2020 academic year. The overall syllabus, course content, student evaluation and grading policy, and teaching faculty of the course remained unchanged from the first round.

Study Design.

To address the problems that emerged in the first round, the FA design was adjusted in the second round of the action research process.

In addition to the FA strategies used in the first round, we assigned three essay questions at different stages of learning. The first question, “using an example, describe the structures and functions of one tissue type”, was assigned at the end of general histology (the content on the different tissue types of the human body). The second, “using an example, describe the structures and functions of an organ, and further explain how the tissue type discussed in the previous homework works within the microanatomical architecture of that organ”, was assigned at the end of special histology (the content on the organs and systems of the human body). The third, “describe the embryonic origin and development of the organ and tissue discussed in the previous two assignments”, was assigned on completion of embryology (the science of human development). These three questions follow the main storyline of the HE course, each building on the previous one, and require students to synthesize knowledge periodically and apply it to detailed examples. The questions encourage and promote deep learning of the course. Students were required to post their essays in the discussion area of Rain Classroom and then read and comment on at least three other students’ essays.
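The requirement that every student comment on at least three classmates’ essays can be met systematically with a circular assignment. This is a hypothetical scheme, not necessarily how essays were allocated in the course: shuffling students into a circle and letting each one review the next k guarantees that nobody reviews their own essay and that every essay receives exactly k comments.

```python
import random
from collections import Counter


def assign_peer_reviews(students, k=3, seed=None):
    """Assign each student k distinct peers' essays to comment on.

    Students are shuffled into a circle and each reviews the next k
    students, so nobody reviews themselves and every essay gets k reviews.
    """
    if len(students) <= k:
        raise ValueError("need more than k students")
    order = list(students)
    random.Random(seed).shuffle(order)
    n = len(order)
    return {order[i]: [order[(i + step) % n] for step in range(1, k + 1)]
            for i in range(n)}


# HYPOTHETICAL cohort of 58 student IDs (the size of the 2019 class).
cohort = [f"s{i:02d}" for i in range(58)]
plan = assign_peer_reviews(cohort, k=3, seed=2019)
received = Counter(peer for peers in plan.values() for peer in peers)
print(set(received.values()))  # {3}
```

Fixing the seed makes the allocation reproducible, so the same plan can be regenerated if a dispute about assignments arises.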

Observation and Reflection.

Figures 2B–C show the mean performance on knowledge, application, and analyzing HE items for the 2019 students versus the 2017 students, and for the 2019 students versus the 2018 students. On knowledge items, the 2019 students averaged 76.43 ± 11.19, with no significant difference compared with either the 2017 (P = 0.632) or the 2018 students (P = 0.989). On application items, the 2019 students averaged 76.10 ± 11.80, again with no significant difference compared with either the 2017 (P = 0.811) or the 2018 students (P = 0.995). However, on analyzing items the 2019 students averaged 73.71 ± 14.57, significantly higher than the 2017 students (P = 0.02) but not different from the 2018 students (P = 0.746) (Fig. 2B–C).
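Because group means, SDs and sizes are reported, a pooled-variance (Student’s) t statistic can be recomputed from them as a rough check. The sketch below assumes equal variances and that all enrolled students (58 in 2019, 39 in 2017) were included in the analysis; the two-tailed P then follows from the t distribution with the returned degrees of freedom, for example via `scipy.stats.ttest_ind_from_stats`, which accepts the same six summary values.

```python
from math import sqrt


def pooled_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t statistic (equal variances) from summary data."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Analyzing items, 2019 vs 2017. ASSUMPTION: group sizes equal the
# enrolled cohorts, i.e. every student sat the final examination.
t, df = pooled_t_from_summary(73.71, 14.57, 58, 65.49, 19.27, 39)
print(f"t({df}) = {t:.2f}")  # t(95) = 2.39
```

A t of about 2.39 on 95 degrees of freedom corresponds to a two-tailed P of roughly 0.02, in line with the value reported above.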

The same questionnaire was used to collect feedback on the Rain Classroom-based assessments, and 55 participants completed the survey (Table 2). Cronbach’s alpha for the 14 Likert items was 0.856. The correlation coefficients ranged from 0.292 to 0.981 among the first 8 items and from 0.334 to 0.940 among items 9–14, indicating that the items were positively correlated. Correlation analyses showed that “students prefer FA to SA” was strongly associated with both “FA has a better impact on future clinical thinking than SA” (r = 0.981, P < 0.001, n = 55) and “multisourced FA reflects learning outcomes more comprehensively” (r = 0.959, P < 0.001, n = 55).

5 Discussion

Assessment is used in every subject to ensure that students reach a specified standard before being deemed competent in that subject. Moreover, assessment drives learning. The desired career-directed learning can therefore be achieved if the right types of learning opportunities and assessment are adopted. This study showed that, in a first-year medical HE curriculum, the FA formats improved students’ ability to analyze material in the final examination.

The questionnaire analysis in this study helps explain why FA improved examination performance. The survey shows that FA helped students recognize the gap between what they knew and what they should know. Providing opportunities for self-correction and self-study is a key element of FA, and in this study that process was facilitated by Rain Classroom. When students see the gap between what they thought they knew and what they actually know, their motivation to learn increases. This is the effective feedback built into the FA process, which is highly valuable during learning: feedback lets students know how they are doing and gives them opportunities to adjust and refine their efforts. Previous work has reported that participation in FA was a better predictor of final outcome than success in FA, supporting the key role of feedback in FA and implying that both positive and negative feedback are crucial to learning and development [8]. From the educators’ perspective, feedback from students is collected in real time during FA and can be used to modify the course and improve teaching strategies. Feedback-directed learning and teaching strategies therefore provide opportunities for active learning.

Research demonstrates that students engaged in active learning exercises show improved higher-order thinking and problem-solving skills [19], which is consistent with the findings of this study. Bloom’s taxonomy is widely used in medical education research to stratify learning activities into cognitive levels, and has been adopted as a valuable tool for examining students’ learning and classifying examination questions according to the cognitive levels and skills they assess. In the current study, analyzing ability, a higher-level learning objective, was enhanced under the FA-based teaching strategy. Analysis is where the skills commonly thought of as critical thinking come in, including analyzing arguments, making inferences using inductive or deductive reasoning, judging or evaluating, and making decisions or solving problems [20]. Critical thinking involves both cognitive skills and dispositions. The clinical thinking of medical students is a specialized form of critical thinking, required to reach a correct diagnosis and devise treatment strategies quickly and at the lowest cost. This kind of thinking needs specific training during study, especially training in high-level cognition.

Most students perceived Rain Classroom as an effective and attractive tool for supporting FA feedback. Rain Classroom lets students compare their own progress with that of their peers, enables social connectivity, and creates a feedback-rich learning environment. This was further reflected in the strong correlation between “FA supported by Rain Classroom enhances interaction between teachers and students” and “FA enhances understanding of the knowledge points”. As a teaching management platform, Rain Classroom also supports supervision and inspection: students bought into the “check-in system supported by Rain Classroom”, which ensured high attendance. Studies have highlighted that technology enables new ways of providing feedback [21]. Providing automatic feedback and repeated opportunities through online quizzes helps students learn by diagnosing their own mistakes [22]. Medicine is widely regarded as an experience-based science, and the online format provides more opportunities to acquire feedback through repeated practice.

One limitation of this study is that students’ learning habits were not surveyed or evaluated; they deserve further consideration and analysis to optimize the effect of the feedback built into FA. Secondly, beyond low-level cognitive thinking, an ideal assessment in the basic medical sciences should also promote the cognitive application of basic medical knowledge, the cognitive synthesis of separate medical concepts, and the general development of professionalism, which may be constrained if the assessment is built on a single cognitive framework. We recommend that future projects aim to integrate different cognitive taxonomy frameworks into student assessment.

6 Conclusion

The effectiveness of FA in the teaching and learning of the medical sciences has been strongly emphasized. The purpose of the current study was to determine the contribution of different Rain Classroom-based formative assessment methods to the improvement of cognitive abilities at different levels of Bloom’s taxonomy. The results showed that technology based on teaching management tools facilitates FA and the feedback built into it. When teachers create an effective learning environment that cultivates higher cognitive levels, FA improves students’ achievement (Fig. 3). Our Rain Classroom-based FA strategies can serve as an example for online assessment, especially given the abrupt transition from face-to-face to online teaching during the COVID-19 pandemic.

References

1. Wormald, B.W., Schoeman, S., et al.: Assessment drives learning: an unavoidable truth? Anat. Sci. Educ. 2(5), 199–204 (2009)

2. Ismail, M.A., Ahmad, A., et al.: Using Kahoot! as a formative assessment tool in medical education: a phenomenological study. BMC Med. Educ. 19(1), 230 (2019)

3. Arja, S.B., Acharya, Y., et al.: Implementation of formative assessment and its effectiveness in undergraduate medical education: an experience at a Caribbean Medical School. MedEdPublish (2018)

4. Cheng, X., Chan, L.K., Li, H., et al.: Histology and embryology education in China: the current situation and changes over the past 20 years. Anat. Sci. Educ. (2020)

5. Holmboe, E.S., Sherbino, J., Long, D.M., et al.: The role of assessment in competency-based medical education. Med. Teach. 32(8), 676–682 (2010)

6. Epstein, R.M.: Assessment in medical education. N. Engl. J. Med. 356(4), 387–396 (2007)

7. Ann, F.H., Dermot, O.F.: Assessment in medical education; what are we trying to achieve? Int. J. High. Educ. 4(2), 139–144 (2015)

8. Carrillo-de-la-Peña, M.T., Baillès, E., Caseras, X., et al.: Formative assessment and academic achievement in pre-graduate students of health sciences. Adv. Health Sci. Educ. Theory Pract. 14(1), 61–67 (2009)

9. Khan, K., Ramachandran, S.: Conceptual framework for performance assessment: competency, competence and performance in the context of assessments in healthcare–deciphering the terminology. Med. Teach. 34(11), 920–928 (2012)

10. Miller, G.E.: The assessment of clinical skills/competence/performance. Acad. Med. 65(9 Suppl.), S63–S67 (1990)

11. Bloom, B.S., Engelhart, M.D., Furst, E.J., Hill, W.H., Krathwohl, D.R.: Taxonomy of Educational Objectives: The Classification of Educational Goals. Handbook I: Cognitive Domain. Longmans (1956)

12. Carraccio, C.L., et al.: From the educational bench to the clinical bedside: translating the Dreyfus developmental model to the learning of clinical skills. Acad. Med. 83(8), 761–767 (2008)

13. Zaidi, N.B., Hwang, C., et al.: Climbing Bloom’s taxonomy pyramid: lessons from a graduate histology course. Anat. Sci. Educ. 10(5), 456–464 (2017)

14. Morton, D.A., Colbert-Getz, J.M.: Measuring the impact of the flipped anatomy classroom: the importance of categorizing an assessment by Bloom’s taxonomy. Anat. Sci. Educ. 10(2), 170–175 (2017)

15. Thompson, A.R., O’Loughlin, V.D.: The Blooming Anatomy Tool (BAT): a discipline-specific rubric for utilizing Bloom’s taxonomy in the design and evaluation of assessments in the anatomical sciences. Anat. Sci. Educ. 8(6), 493–501 (2015)

16. Phillips, A.W., Smith, S.G., et al.: Driving deeper learning by assessment: an adaptation of the Revised Bloom’s Taxonomy for medical imaging in gross anatomy. Acad. Radiol. 20(6), 784–789 (2013)

17. Hadie, S.N.H., Abdul, H., et al.: Creating an engaging and stimulating anatomy lecture environment using the cognitive load theory-based lecture model: students’ experiences. J. Taibah Univ. Med. Sci. 13(2), 162–172 (2018)

18. Ren, Q., Zhang, J., et al.: Research and practice of flipped classroom based on rain classroom under the background of big data. In: Strielkowski, W., Cheng, J. (eds.) International Conference on Management, Economics, Education, Arts and Humanities (MEEAH 2018), pp. 120–124. Atlantis Press (2018)

19. Stockwell, B.R., Stockwell, M.S., et al.: Blended learning improves science education. Cell 162(5), 933–936 (2015)

20. Adams, N.E.: Bloom’s taxonomy of cognitive learning objectives. J. Med. Libr. Assoc. 103(3), 152–153 (2015)

21. Elmahdi, I., Al-Hattami, A., et al.: Using technology for formative assessment to improve students’ learning. Turk. Online J. Educ. Technol. 17(2), 182–188 (2018)

22. Bälter, O., Enström, E., et al.: The effect of short formative diagnostic web quizzes with minimal feedback. Comput. Educ. 60(1), 234–242 (2013)

Funding

This research was supported by the Pedagogical Reform of Higher Education in Guangdong Province (2019-60), the pedagogical reform research projects of Jinan University (JG2019012), and a grant from the China Association of Higher Education (2020JXYB08).


© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, W., Cai, H., Yang, X., Cheng, X. (2020). Medical Teachers’ Action Research: The Application of Bloom’s Taxonomy in Formative Assessments Based on Rain Classroom. In: Lee, LK., U, L.H., Wang, F.L., Cheung, S.K.S., Au, O., Li, K.C. (eds) Technology in Education. Innovations for Online Teaching and Learning. ICTE 2020. Communications in Computer and Information Science, vol 1302. Springer, Singapore. https://doi.org/10.1007/978-981-33-4594-2_10


DOI: https://doi.org/10.1007/978-981-33-4594-2_10


Publisher Name: Springer, Singapore

Print ISBN: 978-981-33-4593-5

Online ISBN: 978-981-33-4594-2

eBook Packages: Computer Science, Computer Science (R0)