Abstract

It is important to accurately identify the combustion state of the municipal solid waste incineration (MSWI) processes. Stable state not only can greatly improve the combustion efficiency, but also can ensure safety of the MSWI processes. What’s more, the pollution emission concentration would be greatly reduced. Aiming at the situation that domain experts identify the combustion state in terms of self-experience in the actual MSWI processes, this study proposes an efficient method based on improved deep forest (IDF). First, the image preprocessing methods such as defogging and denoising, were used to preprocess the combustion flame image to obtain a clear one. Then, the multi-source features (brightness, flame and color) were extracted. Finally, the multi-source features were used as the input of cascade forest module in terms of substituting multi-grained scanning module. Therefore, a combustion state recognition model of MSWI processes based on IDF was established. Based on actual flame images of industrial processes, many experiments has been done. The results showed that the constructed model can reach a recognition accuracy of 95.28%.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Municipal solid waste incineration

- Combustion state recognition

- Features extraction

- Improved deep forest

1 Introduction

The generation rate of municipal solid wastes (MSWs) increases year by year [1]. The MSWs which weren’t treated in time have caused serious environmental pollution. Facing the increasingly serious global environmental pollution [2], countries all over the world began to vigorously promote MSWs incineration (MSWI) technology to replace the original composting one [3]. However, when the MSWI technology that imported from developed countries is applied to China, there are a series of “endemic” phenomena [4, 5]. That is to say, at the actual plant, the MSWI processes is generally controlled by domain experts manually. In order to ensure the sufficiency, stability and safety of MSWI processes, it is necessary to accurately identify the combustion state. At present, the partition of combustion state of MSWI processes is mainly based on the location of the flame combustion line. In view of the complex and changeable, it is of great practical significance to take effective and feasible methods to recognize the combustion state.

The combustion flame images collected by acquisition equipment generally contain some noise and interference. Therefore, it is generally necessary to preprocess flame image. By this way, it can restore the real combustion scene as much as possible. To solve this problem, some experts and scholars have carried out detailed researches. A fast image defogging algorithm for a single input image was proposed [6], which can restore a fog-free combustion image. In order to effectively separate the flame and black smoke in the vent flare, reference [7] used different parameters to normalize the torch combustion image. The results shown that when the mean and variance values of three channels were set to 0.5, and the pixel values of the image were scaled to [–1, 1], the flame can be effectively separated from the background area. In order to eliminate the influence of fringe noise on THz image, notch filter was used to eliminate fringe noise [8]. Further, reference [9] verified that the median filter algorithm can effectively remove the noise of image.

Flame image features that are used to reflect the combustion state are diverse. In order to identify the combustion state, feature extraction is necessary. Zhang [10] et al. studied a recognition method of cement rotary kiln combustion state based on flame image. First, the flame image was divided into several target regions. Then, the average gray value, average brightness value and color feature of the target area were extracted. The combustion state identification model is constructed based on these extracted features. As the abnormal working state is easy to occur in the smelting processes of electric melting magnesium furnace, Liu [11] et al. proposed a diagnosis method by using dynamic image of furnace body. First, the spatial features of local sub-block image were extracted. Then, the monitoring indicators of the defined area was combined. Finally, the level of abnormal working state was obtained. Wu et al. [12] proposed a diagnosis method of abnormal working state, in which the spatial and temporal features of images were extracted based on neural network. Although this study realized the automatic labeling based on weighted median filter, the identification method based on flame image in industrial processes is relatively fixed. However, the coupling between different abnormal regions is serious in the MSWI processes.

There are some researches on the identification of combustion state for MSWI processes. Reference [13] extracted 12 features of MSWI flame image, in which the recognition model of combustion state was constructed based on BP neural network. Consistent with the disadvantages of traditional artificial neural network, this method needs lots of training data to achieve a better result [14, 15]. Qiao et al. [16] proposed a combustion state recognition method based on the color moment feature of flame image for the MSWI processes, in which the color moment feature of flame image was extracted by using sliding window and the identification model was built by using the least square support vector machine (LS-SVM). These models either have a slow convergence or have a poor recognition accuracy.

In recent years, Zhou et al. [17] proposed the deep forest (DF) model for classification problem. Compared with other deep learning algorithms based on neural network, DF has a lot of advantages. It can automatically adjust model scale and maintain a good representation learning ability [18, 19]. Subsequently, a large number of researchers have carried out many researches in the field of computer vision. Tang et al. [20] constructed a waste mobile phone recognition model using DF algorithm, which achieved a good recognition accuracy. The classification of hyperspectral images based on DF was studied [21], whose effectiveness was verified based on common hyperspectral dataset with character of sample insufficient. To solve the small sample problem, the DF method has great advantages in accuracy and anti-parameter sensitivity [22]. The above research results show that DF has good modeling effect on small samples and high dimensional dataset. However, the multi-grained module in DF has great computational consumption. This is an urgent problem to be solved.

Motivated by the above problems, this paper proposes a combustion state identification method for the MSWI processes based on improved deep forest (IDF). First, image preprocessing is used to restore fog-free images. Then, the multi-source features such as brightness, flame and color of combustion flame image are extracted. Finally, the extracted multi-source features are used to replace the multi-grained scanning module as the input of cascade forest (CF) module. The efficiency of the model has been verified based on actual flame images of a MSWI plant in Beijing.

2 Processes Description and Modeling Strategy

The MSWs are transported to the plant by the feeder truck. Then, the operator controls the grab throw the MSWs into incinerator. The combustion processes is generally divided into three stages, i.e., drying, combustion and burnout stages. During MSWI processes, the domain experts judge the combustion state by observing the flame image in furnace. Meanwhile, they continuously adjust the grate speed and air volume to keep the combustion state as stable as possible.

Based on the above analysis, this paper proposes a modeling strategy composed of image preprocessing module and improved deep forest (IDF) module, which is shown in Fig. 1. In which, \(\{ I_n (u)\}_{n = 1}^N\) represents the flame images, \(I_n (u)\) represents the \(n{\text{th}}\) image, \(N\) is the number of image samples and \(u\) represents the \(u{\text{th}}\) pixel in the image, \(\{ L_n^{{\text{median}}} (u)\}_{n = 1}^N\) represents the images after preprocessing, \(\{ X_n \}_{n = 1}^N\) represents the extracted feature sets, and \(\hat{y}\) represents the identification results.

The functions of the above modules are shown as follows:

-

①

Image preprocessing module: It is used to eliminate the noise introduced by environment and transmission and to separate the flame from the furnace background.

-

②

IDF module: First, multi-source features such as brightness, flame and color of combustion flame images are extracted. Then, they are used as the input of recognition model based on CF module, in which random forest (RF) and completely random forest (CRF) are used as the basic learners to identify the combustion state. Finally, the prediction results are gotten by using the simple weighting average algorithm.

3 Algorithm Implementation

3.1 Image Preprocessing Module

In MSWI processes, fly ash and smoke are generated ceaselessly. RGB image \(\{ I_n (u)\}_{n = 1}^N\) inevitably contains signal interference and other physical noise. Therefore, before to make feature extraction, image preprocessing is employed to restore the flame image \(\{ L_n^{{\text{median}}} (u)\}_{n = 1}^N\).

Fast Defogging Algorithm Based on Single Image

First, the minimum values of the R, G and B channels from original image \(I_n (u)\) are taken to obtain \(H_n (u)\). By considering the relationship between transmittance \(l_n (u)\) and image \(H_n (u)\), we can get.

where \(A_n\) represents global atmospheric light. Then, a rough estimation of transmittance \(l_n (u)\) can be obtained,

where \(\Psi \in [0,1]\). By set \(\eta = 1 - \Psi\), the following result is obtained,

To prevent the image being too dark or too bright after defogging, we set up \(\tilde{\eta } = \rho h_n^{av}\). In which, \(\rho\) is an adjustable parameter with range \(0 \le \rho \le 1/(h_n^{av} )^{ - 1}\), and \(h_n^{av}\) is the mean of all elements of \(H_n (u)\).

To ensure that \(\Psi\) is positive value, the upper limit is set to be 0.9. Then, we have the relation,

Thus, \(l_n (u)\) is obtained as.

where \(1 - \eta H_n^{{\text{ave}}} (u) \times (A_n )^{ - 1}\) is a rough estimate of \(l_n (u)\) and \(1 - H_n (u) \times (A_n )^{ - 1}\) is the lower limit of \(l_n (u)\).

Then, ambient light \(Z_n (u)\) can be obtained,

The range of \(A_n\) is \((\max (H_n^{{\text{ave}}} (u)),\max (\max_{c \in r,g,b} (I_n^c (u))))\). The mean value of \(A_n\) is directly taken as follows,

Finally, the image after defogging is obtained,

Features Normalization

After features normalization, the flame can be separated from the background. Here, zero mean normalization method is adopted,

where \(\mu_n\) is the mean value, \(\sigma_n\) is the standard deviation, and \(W_n {(}u{)}\) is the normalized image.

Notch Filtering

Noise needs to be eliminated in the frequency domain. The notch filter has a very narrow stop band, whose ideal frequency response is expressed as follow,

After notch filtering, the image \(\{ V_n (u)\}_{n = 1}^N\) can be obtained,

Median Filtering

The isolated noise points caused by fly ash need to be eliminated by median filter. The median filtered image is obtained as follow,

3.2 Improved Deep Forest Module

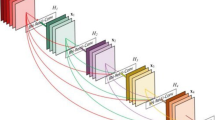

Traditional DNN algorithms usually need large dataset to ensure training results. At the same time, the structure of the trained model is very complex. So it has great limitations in practical application in terms of limit hardware resource. DF is a forest ensemble algorithm composed of multiple forests, which maintains high performance and trainability on small-scale datasets. The structure of traditional DF model includes multi-Grained scanning and CF modules, as shown in Fig. 2.

In Fig. 2, it is assumed that the dimension of the input eigenvector is 400. The purpose of multi-grained scanning module is to deal with the relationship between data and features. So it is used to enhance the performance of CF module. In this paper, it is considered that the combustion state identification needs a real-time performance. To meet this requirement, the multi-grained scanning module is replaced with multi-source feature extraction module.

Extraction of multi-Source Features Sub-module

The flame image usually contains lots of information. It is considered to extract different flame features (size, brightness, color) to express the combustion state.

Brightness Feature

For the flame image of MSWI processes, its brightness feature can be described from different views.

-

(1)

Average value of gray image

First, the original color image is converted into gray image [6], which is shown as follow,

where \((L_{\text{R}}^{{\text{median}}} )_n (u)\), \((L_{\text{G}}^{{\text{median}}} )_n (u)\) and \((L_{\text{B}}^{{\text{median}}} )_n (u)\) represent the color components of R, G and B channels at \(u{\text{th}}\) pixel of the \(n{\text{th}}\) image, respectively.

The average value of gray image is calculated as follow,

-

(2)

Variance of gray image

The variance of gray image is calculated as follow,

$$ {\text{Gray\_var}}_n = \frac{1}{U}\sum_{u = 1}^U {[G_n (u)} - {\text{Gray\_ave}}_n ]^2 . $$(15)

The former two features mainly describe the image brightness in terms of computer vision. Image in HSV color space is closer to the human vision. Therefore, the flame image is transferred from RGB space to HSV space firstly. Then, the brightness feature is extracted from V channel.

-

(3)

Average value of brightness

Here, the image converted to HSV space is represented as \(\{ L_n^{{\text{HSV}}} (u)\}_{n = 1}^N\). The average value of V-channel \((L_{\text{V}}^{{\text{HSV}}} )_n (u)\) is calculated as the brightness feature,

-

(4)

Variance of brightness

$$ {\text{Bright\_ave}}_n = \frac{1}{U}\sum_{u = 1}^U {(L_{\text{V}}^{{\text{HSV}}} )_n (u)} . $$(16)

The variance of brightness is calculated as follow,

Flame Feature

The calculation of flame area is based on V channel.

-

(1)

Effective area of flame

The effective area of flame is defined as the total number of pixels in the image with whose brightness value is greater than the specified threshold \(\theta_{th}\). It is calculated as follow,

$$ A\_v_n = \sum_{u = 1}^U {\Theta [(L_{\text{V}}^{{\text{HSV}}} )_n (u) - \theta_{th} ]} , $$(18)

where \(\Theta ( \cdot )\) is the unit step function.

-

(2)

High temperature area of flame

The high temperature area is defined as the total number of pixels in the image with whose brightness value is greater than the specified threshold \(\omega_{th}\). It is calculated as follow,

Color Feature

Color moment is a simple and effective color feature. The first-order, second-order and third-order moments are used to express the color information in this study.

The first-order moment \(\upsilon_n^{{\text{color}}}\) is calculated as follow,

The second-order moment \(\sigma_n^{{\text{color}}}\) is calculated as follow,

The third-order moment \(\delta_n^{{\text{color}}}\) is calculated as follow,

Finally, the extracted features are combined to obtain the final multi-source feature set, i.e.,

Identification Model Based on Cascade Forest Sub-module

In each layer of CF, there are several forests composed of decision trees. The CF module used in this study contains 2 RFs and 2 CRFs in each layer.

Random Forest Algorithm

RF is a machine learning algorithm in terms of ensemble learning [23]. Bootstrap is used to randomly sample the training sets \(D = {\text{\{ (}}{\bf{x}}_i ,y_i ),i = 1,2, \cdots b\} \in R^{B \times M}\), whose process can be described as.

where \({\text{\{ (}}{\bf{x}}^{j,M^j } ,y^j )_1^b \}_{b = 1}^B\) represents the \(j{\text{th}}\) training subset; \(f_{RSM} ( \cdot )\) represents the bootstrap function, \(m = 1, \cdots ,M^j\), \(M^j\) represents the number of features selected by the \(j{\text{th}}\) training subset in the forest and \(M^j < < M\).

The above function was used J times. Then, the RF training sets can be obtained. The above process is shown as follow,

where J is the number of bootstrap times and the number of DTs in RF. Further, J DTs in RF model are built with the above training subsets. The construction strategy is shown in Fig. 3.

We take the \(j{\text{th}}\) training subset as an example. The number of best segmentation feature \(M_{{\text{sel}}}^j\) and syncopation point \(s\) need to be searched based on Gini index criterion, which can be represented to solve the following optimization problem.

where \(k\) represents the \(k{\text{th}}\) class in labels, and \(p_k\) indicates the proportion of \(k{\text{th}}\) class in the total number of tags, and \(\theta_{{\text{Forest}}}\) indicates the threshold of the number of samples contained in the leaf node.

Based on the above criteria, the optimal variable number and the value of cut-off point are found by traversing all input features. The input feature space is divided into left and right regions. Then, the above process for each region need to be repeated until the leaf node number of samples contained is less than the threshold, or the Gini index is 0. Finally, the input feature space is divided into Q regions. To build the model of classification tree, the following functions are defined,

where \(N_{R_q }\) represents the number of training samples contained in \(R_q\); \({\bf{y}}_{N_{R_q } }^j\) represents the label vector corresponding to the sample feature in \(R_q\); \({{\varvec{c}}}_j^q\) represents the prediction result of the final output of \(R_q\); \(I( \cdot )\) is an indicator function, when \({\bf{x}}^{j,M^j } \in R_q\), \(I( \cdot ){ = }1\);or we have \(I( \cdot ){ = 0}\).

The above steps need to be repeated J times to obtain the RF model,

Completely Random Forest Algorithm

Different from RF, CRF randomly selects any one value of a feature as a split node in the feature space. The obtained CRF model is represented by \(F_{{\text{CRF}}} ( \cdot )\).

Simple Weighting Average

Each layer of CF module uses two \(F_{RF} ( \cdot )\) and two \(F_{{\text{CRF}}} ( \cdot )\) as base learners for cascade learning. The model is constructed by using the idea of stack. For the input samples \(X_n\), the last layer of CF outputs the \(4K\)-dim class distribution vector \({{\varvec{R}}} = [{{\varvec{r}}}_1^{{\text{RF}}} ,{{\varvec{r}}}_2^{{\text{RF}}} ,{{\varvec{r}}}_1^{{\text{CRF}}} ,{{\varvec{r}}}_2^{{\text{CRF}}} ]\). We take the average and maximum value to obtain the final predicted value.

4 Experimental Verification

4.1 Description of Data

The combustion images used in this experiment come from an MSWI plant in Beijing. According to the different positions of combustion line, those images are divided into three combustion states, i.e., forward moving, normal moving and backward moving of the combustion line. The calibration criterion of flame image is shown in Fig. 4. The size of image is 718 × 512. The numbers of samples is 571. The number of samples corresponding to three state are 184, 213 and 174, respectively. The training set, verification set and testing set are divided in a ratio of 2:1:1.

4.2 Results of Experiment

Results of Image Preprocessing

For the studied flame image, the large amount of smoke contained in the image should be removed firstly. The defogging effect is shown in Fig. 5 (b). Thus, the amount of smoke in image is significantly reduced after defogging.

In order to improve the computational efficiency, it is necessary to normalize the image. Figure 5 (c) shows the normalized image. It can be seen that the normalized image achieves the purpose of effectively separating the flame from background. However, there are still serious fringe interference in the image. It will be eliminated by notch filtering, as shown in Fig. 5 (d). Thus, the fringe noise in the filtered image is obviously suppressed. The median filter is used to eliminate small noise. It is shown in Fig. 5 (e).

Identification Results of IDF

The preprocessed image is converted from color space to gray space, which is shown in Fig. 6 (a).

The preprocessed flame image is transformed from RGB space to HSV space. The threshold values of flame effective zone and high temperature zone are selected based on the V-channel, which are shown in Fig. 6 (b). Compared with Fig. 6 (c), it is determined that the threshold of flame effective area is 0.226 and the threshold of high temperature area is 0.941. The experimental results of reference [16] showed that the recognition performance is the best one when the sliding window scale is 1/5 of the image. By experimental verification, the sliding window scale consistent with [16] is finally selected. The extraction results are shown in Fig. 6 (d).

The identification results of combustion state based on IDF are shown in Tables 1–2. It is the mean value of running 20 times.

The results show that the color features have the highest contribution to the effect of recognition. The contribution of flame feature is the lowest one. Compared with the color features, the training results of combined features keep the same effects on the training set. However, combined features have a better effect on the testing set, which shows that it has a stronger generalization.

Comparison of Different Methods

The comparative results with the existing methods are shown in Table 3.

The results show that our method has the highest recognition accuracy. The reasons include: (1) The extracted features have a good expressive ability for the combustion state information contained in the flame image. (2) The tree structure can exert more powerful learning and classification capabilities for features with physical meaning. (3) The transfer of supervised information between cascade layers greatly enhances the learning ability of the model. These results prove the efficiency of our proposed method.

5 Conclusion

A combustion state identification method on MSWI processes is proposed in this paper. The main contributions are shown as follows: (1) A modeling strategy based on image preprocessing and IDF is proposed. (2) Multi-source features with physical meaning are extracted from the flame image to replace the multi-grained scanning module in traditional DF, which reduces the computational consumption. Based on the actual running data, the accuracy on testing set of the proposed method is more than 95%. The future study is to extract the powerful and interesting local features in the flame image.

References

Lu, J., Jin, Q.: A study on methods of municipal solid waste treatment and resource utilization. Res. Conserv. Environ. Protect. 05, 121–122 (2021)

Niu, Y., Chen, F., Li, Y., Ren, B.: Trends and sources of heavy metal pollution in global river and lake sediments from 1970 to 2018. J. Rev. Environ. Contamin. Toxicol. 1-35 (2020)

Bai, B.: A discussion on treatment and utilization of urban waste. J. Intell. Build. Smart City, 12, 120–121 (2021)

Saikia, N., et al.: Assessment of Pb-slag, MSWI bottom ash and boiler and fly ash for using as a fine aggregate in cement mortar. J. Hazard. Mater. 154(1–3), 766–777 (2008)

Korai, M., Mafar, R., Uqaili, M.: The feasibility of municipal solid waste for energy generation and its existing management practices in Pakistan. J. Renew. Sustain. Energy Rev. 72, 338–353 (2017)

Huang, L.: Fast single image defogging algorithm. J. Optoelectr. Laser. 22(11), 1735–1736 (2011)

Xie, X., Gu, K., Qiao, J.: A new anomaly detector of flare pilot based on siamese network. In: 20th Chinese Automation Congress, pp. 2278–2238 (2020)

Zou, Y.: A Research on Fringe Noise Elimination Method of THz Image. Dissertation of Capital Normal University (2009)

Khatri, S., Kasturiwale, H.: Quality assessment of median filtering techniques for impulse noise removal from digital images. In: International Conference on Advanced Computing & Communication Systems, pp. 1–4. IEEE(2016)

Zhang, R., Lu, S., Yu, H., Wang, X.: Recognition method of cement rotary kiln burning state based on Otsu-Kmeans flame image segmentation and SVM. Optik, 243, 167418 (2021)

Liu, Q., Kong, D., Lang, Z.: Diagnosis of abnormal working state of electric melting magnesium furnace based on multistage dynamic principal component analysis. J. Autom. 47(11), 1–8 (2021)

Wu, G., Liu, Q., Chai, T., Qin, S.: Diagnosis of abnormal working state of electric melting magnesium furnace based on time series image and deep learning. J. Autom. 45(8), 1475–1485 (2019)

Zhou, Z.: A Research on Combustion State Diagnosis of Waste Incinerator based on Image Processing and Artificial Intelligence. Dissertation of Southeast University (2015)

Fang, Z.: A high-efficient hybrid physics-informed neural networks based on convolutional neural network. IEEE Trans. Neural Netw. Learn. Syst. 99, 1–13 (2021)

Rubio, J.: Stability analysis of the modified levenberg-marquardt algorithm for the artificial neural network training. IEEE Trans. Neural Netw. Learn. Syst. 99, 1–15 (2021)

Qiao, J., Duan, H., Tang, J.: A recognition of combustion state in municipal solid waste incineration process based on color feature extraction of flame image. In: 30th China Process Control Conference, pp. 1–9 (2019)

Zhou, Z., Feng, J.: Deep forest. arXiv preprint, 1702.08835 (2017)

Molaei, S., Havvaei, A., Zare, H., Jalili, M.: Collaborative deep forest learning for recommender systems. IEEE Access 99, 1 (2021)

Shao, L., Zhang, D., Du, H., Fu, D.: Deep forest in ADHD data classification. IEEE Access 99, 1(2019)

Tang, J., Wang, Z., Xia, H., Xu,Z.H.: Han: Deep forest recognition model based on multi scale features of waste mobile phones for intelligent recycling equipment. In: 31th China Process Control Conference, pp. 10–19 (2020)

Li, M., Ning, Z., Pan, B., Xie, S., Xi, W., Shi, Z.: Hyperspectral Image Classification Based on Deep Forest and Spectral-Spatial Cooperative Feature. Springer, Cham (2017)

Yin, X., Wang, R., Liu, X., Cai, Y: Deep forest-based classification of hyperspectral images. In: 37th Chinese Control Conference, pp. 10367–10372. IEEE (2018)

Bbeiman, L., Quinlan, R.: Bagging predictors. Mach. Learn. 26(2), 123–140 (1996)

Acknowledgments

The work was supported by Beijing Natural Science Foundation (No. 4212032), National Natural Science Foundation of China (No. 62073006).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Pan, X., Tang, J., Xia, H., Li, W., Guo, H. (2022). Combustion State Recognition Method in Municipal Solid Waste Incineration Processes Based on Improved Deep Forest. In: Zhang, H., et al. Neural Computing for Advanced Applications. NCAA 2022. Communications in Computer and Information Science, vol 1637. Springer, Singapore. https://doi.org/10.1007/978-981-19-6142-7_6

Download citation

DOI: https://doi.org/10.1007/978-981-19-6142-7_6

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6141-0

Online ISBN: 978-981-19-6142-7

eBook Packages: Computer ScienceComputer Science (R0)