Abstract

To combine dissolved-gas ratios in transformer oil with the advantages of artificial intelligence and thereby improve the accuracy of transformer fault diagnosis, a fault diagnosis method based on kernel principal component analysis (KPCA) and a support vector machine (SVM) optimized by the sparrow search algorithm (SSA) is proposed. First, 24 fault features of the power transformer are extracted on the basis of dissolved gas analysis (DGA) of the oil. Second, KPCA is used for dimensionality reduction to obtain a lower-dimensional feature space. An SVM-based transformer fault diagnosis model is then built with the 8 selected features as inputs, and its parameters are optimized by SSA. Next, SSA-SVM is used to diagnose the typical working conditions. To demonstrate the advantage of the SSA-SVM diagnostic model combined with the KPCA feature space, the SSA-SVM classifier is compared in the original feature space and in the KPCA feature space, and the accuracy of several methods in the KPCA feature space is compared as supporting evidence.

Keywords

- Dissolved gas analysis

- Power transformers

- Fault diagnosis

- Kernel principal component analysis

- Sparrow search algorithm

1 Introduction

The power transformer is one of the most important pieces of equipment in an electric power system. It carries out the key tasks of power transmission and voltage conversion. A transformer failure can cause a local or even wide-area power outage and lead to large financial losses [1]. Consequently, establishing an efficient and accurate transformer fault diagnosis model and tracking the operating state of the transformer are important for improving transformer operation and ensuring the secure and dependable operation of power systems [2].

Dissolved gas analysis (DGA) has become one of the most effective means of diagnosing faults in oil-immersed transformers [3]. At present, DGA-based transformer fault diagnosis methods fall into two groups: traditional methods and methods based on artificial intelligence. Traditional methods include the key gas method [4], the IEC ratio method [5], the Rogers ratio method [6] and the Duval triangle method [7]. All of them are simple in principle, computationally cheap and easy to implement, so they play an important role in transformer fault diagnosis. However, these methods rely on field experience, which can lead to large diagnostic errors [8]. Methods based on artificial intelligence mainly include neural networks [9], random forests [10] and support vector machines [11, 12]. Compared with traditional methods, they can keep learning and updating, and they can model the complex nonlinear relationship between transformer faults and DGA gas content. Although they improve on the accuracy of traditional methods, they also have limitations. Neural networks suffer from slow convergence and complex structure [13]. Random forests have long training times and are prone to over-fitting. Compared with these two methods, the support vector machine generalizes well and copes well with the local-minimum problem. Nonetheless, the diagnostic capability of an SVM depends on its penalty factor and kernel parameter, and poorly chosen values lead to large diagnostic errors.

When artificial intelligence is used for transformer fault diagnosis, most studies take existing DGA ratios (such as the IEC or Rogers ratios) as the input features of the diagnosis model. However, there is no unified standard for which DGA gas ratios a diagnosis model should use. According to [14], diagnostic performance suffers if only some of the DGA gas ratios are used. Therefore, to combine the advantages of DGA ratios and artificial intelligence methods [15], a method based on KPCA and SSA-SVM is proposed in this paper. In this method, 24 characteristic parameters of the transformer are extracted on the basis of DGA, and KPCA is used to reduce their dimensionality and refine them. The reduced data are used as samples to train the SVM model, while SSA optimizes the model parameters. The resulting method based on KPCA and SSA-SVM is intended to provide real-time and accurate diagnosis of the operating state of power transformers.

2 Kernel Principal Component Analysis

Kernel Principal Component Analysis (KPCA) is an algorithm that combines principal component analysis (PCA) with the kernel method. It makes up for the inability of PCA to extract nonlinear structure, and thus achieves nonlinear dimensionality reduction of the data samples [16].

The basic idea of KPCA is to transform a nonlinear problem in the input space into a linear problem in the mapped space by introducing a nonlinear mapping function [17, 18]. The nonlinear mapping is realized through kernel inner products computed in the input space. The radial basis function (RBF) is used as the kernel function in this paper. Let the input dataset be X = {xi}, i = 1, 2, …, N, where each xi is an m × 1 vector, i.e., each observation has m features. The RBF kernel matrix is calculated as:

The kernel matrix is then centered. The centering formula is:

In the formula, Pm refers to the m × m matrix whose elements are all 1/m.
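The kernel and centering steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the common RBF parameterization \(K_{ij} = \exp(-\gamma \|x_i - x_j\|^2)\) with a hypothetical width parameter `gamma`, and it centers with an all-1/N matrix of size N × N so that it matches the N × N kernel matrix built from N samples. The function names and the toy data are illustrative.

```python
import math

def rbf_kernel_matrix(X, gamma=1.0):
    """Return the N x N RBF kernel matrix for a list of feature vectors X."""
    n = len(X)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(X[i], X[j]))
            K[i][j] = math.exp(-gamma * sq_dist)  # assumed RBF form
    return K

def center_kernel_matrix(K):
    """Center K in feature space: K - P K - K P + P K P, with P = ones / N."""
    n = len(K)
    row_mean = [sum(row) / n for row in K]                       # entries of K P
    col_mean = [sum(K[i][j] for i in range(n)) / n for j in range(n)]  # entries of P K
    total_mean = sum(row_mean) / n                               # entries of P K P
    return [[K[i][j] - row_mean[i] - col_mean[j] + total_mean
             for j in range(n)] for i in range(n)]

# Toy data: 4 observations with 2 features each (hypothetical values).
X = [[0.0, 0.1], [0.2, 0.4], [0.9, 0.8], [1.0, 0.5]]
K_centered = center_kernel_matrix(rbf_kernel_matrix(X, gamma=0.5))
```

After centering, every row and column of the matrix sums to zero, which is a quick sanity check before the eigendecomposition.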

Next, the eigenvalues and eigenvectors of the centered kernel matrix \(\overline{K}\) are computed:

Take the s largest eigenvalues λj (j = 1, 2, …, s) and their corresponding eigenvectors vj (j = 1, 2, …, s). The value of s is determined by the cumulative variance contribution rate, which is given by:

In the cumulative variance contribution rate, the eigenvalue associated with each eigenvector serves as its dimensionality reduction weight. The matrix after dimensionality reduction is:
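The choice of s can be illustrated with a short sketch. It assumes the usual definition of the cumulative variance contribution rate, namely the sum of the s largest eigenvalues divided by the sum of all positive eigenvalues, and it uses the 85% threshold applied later in the paper. The function name and the eigenvalues below are hypothetical; the eigendecomposition itself is not shown.

```python
def select_num_components(eigenvalues, threshold=0.85):
    """Return (s, rate): the smallest s whose top-s eigenvalues reach the threshold."""
    ordered = sorted((v for v in eigenvalues if v > 0), reverse=True)
    total = sum(ordered)
    if total == 0:
        raise ValueError("no positive eigenvalues")
    cumulative = 0.0
    for s, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return s, cumulative / total
    return len(ordered), 1.0

# Hypothetical eigenvalues of a centered kernel matrix.
eigenvalues = [5.0, 2.5, 1.5, 0.5, 0.25, 0.25]
s, rate = select_num_components(eigenvalues)
```

With these made-up values the first two components reach 75% and the first three reach 90%, so three components are kept.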

3 SVM Parameter Optimization Based on Sparrow Search Algorithm

3.1 Support Vector Machine

SVM is a model with strong generalization ability that is well suited to small-sample learning [19]. It has been widely used in fault diagnosis.

SVM was originally proposed for the linearly separable binary classification problem. In practice, most cases are nonlinear. The samples in the original input space therefore need to be mapped into a high-dimensional feature space through a nonlinear mapping function, and the optimal separating hyperplane is then constructed in that space. The optimization problem then becomes:

In this formula, \(\omega\) is the weight vector, C is the penalty parameter, \(\xi_{i}\) is the slack variable, which characterizes the classification error of the training samples, and b is the bias constant.

The form of the dual optimization is as follows:

In this formula, αi is the Lagrange multiplier, \(y_{i} \in \left\{ { - 1,1} \right\} \) is the category label.

Thus, the optimal classification function of the nonlinear problem is:

K(xi, xj) is the kernel function; different kernel functions give SVMs with different classification performance. Common kernel functions are the linear, polynomial, RBF and sigmoid kernels. The RBF kernel is simpler to apply than the others and gives a better classification effect, so it is used in this paper. Its expression is as follows:

In this formula, the kernel parameter g is a positive real number.
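As an illustration of how a trained binary SVM classifies a new sample, the sketch below evaluates the standard decision function \(f(x) = \mathrm{sign}\left(\sum_i \alpha_i y_i K(x_i, x) + b\right)\). It assumes the RBF kernel takes the common form \(K(x_i, x_j) = \exp(-g\|x_i - x_j\|^2)\), which may differ from the paper's exact expression. The support vectors, multipliers and bias are hypothetical values chosen for the example, not results from the paper.

```python
import math

def rbf_kernel(x, z, g):
    """RBF kernel with kernel parameter g > 0 (assumed form exp(-g * ||x - z||^2))."""
    return math.exp(-g * sum((a - b) ** 2 for a, b in zip(x, z)))

def svm_decision(x, support_vectors, labels, alphas, b, g):
    """Return (label, score) where score = sum_i alpha_i * y_i * K(x_i, x) + b."""
    score = sum(a * y * rbf_kernel(sv, x, g)
                for sv, y, a in zip(support_vectors, labels, alphas)) + b
    return (1 if score >= 0 else -1), score

# Hypothetical trained model: two support vectors, one per class.
support_vectors = [[0.0, 0.0], [1.0, 1.0]]
labels = [-1, 1]
alphas = [1.0, 1.0]

label_a, score_a = svm_decision([0.9, 1.0], support_vectors, labels, alphas, b=0.0, g=2.0)
label_b, score_b = svm_decision([0.1, 0.0], support_vectors, labels, alphas, b=0.0, g=2.0)
```

A larger g makes the kernel more local, so each support vector influences a smaller neighbourhood. This is why g, together with C, has such a strong effect on the diagnosis result.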

3.2 SSA Algorithm

3.2.1 Algorithm Principle

SSA is a novel swarm intelligence optimization algorithm inspired by the foraging and anti-predation behavior of sparrows in nature [20, 21].

In SSA, each sparrow position corresponds to a feasible solution. Suppose that in a d-dimensional search space there is a population of n sparrows with positions Xi = [xi1, xi2, …, xid], i = 1, 2, …, n, where xid is the position of sparrow i in dimension d. During foraging, the sparrows show three kinds of behavior.

Finders lead the population in searching and foraging, so their search area is wide. They use memory to keep updating their locations in order to find food sources. Finders make up 10%–20% of the population, and their location is updated as follows:

In this formula, Xij is the location of sparrow i in dimension j, t is the current iteration number, itermax is the maximum number of iterations, α is a uniform random number in (0, 1], R2 \(\in\) [0, 1] is the warning value, ST \(\in\) [0.5, 1] is the safety value, Q is a random number that follows a normal distribution, and L is a 1 × d matrix whose elements are all 1.

To obtain higher fitness, the entrants continually follow the finders to forage. Their location is updated as follows:

Xp is the best location occupied by the finders in iteration t + 1, Xworst is the global worst location in iteration t, and n is the population size. A is a 1 × d matrix whose elements are each randomly set to 1 or −1, and \(A^{+} = A^{T}(AA^{T})^{-1}\).

Scouts watch for predator threats and warn the population so that it can avoid predation. Their location is updated as follows:

Xbest is the current global best position. β is the step-length control parameter, a random number that follows a normal distribution with mean 0 and variance 1. K indicates the direction in which the sparrow moves and is a random number in [−1, 1]. fi is the fitness value of the i-th sparrow, fg is the best fitness value in the sparrow population, and fw is the worst fitness value in the sparrow population. ε is a very small constant that prevents the denominator from being 0.
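The three update rules can be sketched as a small minimizer. The code follows the commonly published form of SSA [20] as far as the symbol definitions above allow. It is a simplified illustration, not the authors' code: clipping to the search box, the default parameter values, and taking Xp as the best finder after its update are assumptions, and the names `sparrow_search`, `pd`, `sd` and `st` are illustrative stand-ins for PD, SD and ST.

```python
import math
import random

def sparrow_search(fitness, dim, bounds, pop=20, iters=50,
                   pd=0.2, sd=0.2, st=0.8, seed=0):
    """Minimize `fitness` over a box with a simplified sparrow search loop."""
    rng = random.Random(seed)
    lo, hi = bounds
    clip = lambda v: min(max(v, lo), hi)          # assumption: keep positions in the box
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop)]
    F = [fitness(x) for x in X]
    best_f = min(F)
    best_x = list(X[F.index(best_f)])
    initial_best = best_f
    n_finders = max(1, int(pd * pop))
    n_scouts = max(1, int(sd * pop))
    eps = 1e-12
    for _ in range(iters):
        order = sorted(range(pop), key=lambda k: F[k])   # best sparrow first
        X = [X[k] for k in order]
        worst_x = list(X[-1])
        # Finders: contract while R2 < ST, otherwise take a normally distributed step.
        r2 = rng.random()
        for i in range(n_finders):
            for j in range(dim):
                if r2 < st:
                    alpha = 1.0 - rng.random()            # uniform in (0, 1]
                    X[i][j] = clip(X[i][j] * math.exp(-(i + 1) / (alpha * iters)))
                else:
                    X[i][j] = clip(X[i][j] + rng.gauss(0.0, 1.0))
        finder_f = [fitness(X[i]) for i in range(n_finders)]
        xp = list(X[finder_f.index(min(finder_f))])
        # Entrants: the worse half relocates, the rest move around X_p.
        for i in range(n_finders, pop):
            if i + 1 > pop / 2:
                X[i] = [clip(rng.gauss(0.0, 1.0)
                             * math.exp((worst_x[j] - X[i][j]) / (i + 1) ** 2))
                        for j in range(dim)]
            else:
                # |X_i - X_p| A+ L with A in {-1, 1}^d reduces to one scalar step.
                step = sum(abs(X[i][j] - xp[j]) * rng.choice((-1.0, 1.0))
                           for j in range(dim)) / dim
                X[i] = [clip(xp[j] + step) for j in range(dim)]
        F = [fitness(x) for x in X]
        g_f, w_f = min(F), max(F)
        g_x, w_x = list(X[F.index(g_f)]), list(X[F.index(w_f)])
        if g_f < best_f:
            best_f, best_x = g_f, list(g_x)
        # Scouts: a random subset of sparrows reacts to danger.
        for i in rng.sample(range(pop), n_scouts):
            if F[i] > g_f:
                beta = rng.gauss(0.0, 1.0)
                X[i] = [clip(g_x[j] + beta * abs(X[i][j] - g_x[j]))
                        for j in range(dim)]
            else:
                k = rng.uniform(-1.0, 1.0)
                X[i] = [clip(X[i][j] + k * abs(X[i][j] - w_x[j]) / ((F[i] - w_f) + eps))
                        for j in range(dim)]
            F[i] = fitness(X[i])
        cur = min(F)
        if cur < best_f:
            best_f, best_x = cur, list(X[F.index(cur)])
    return best_x, best_f, initial_best

sphere = lambda x: sum(v * v for v in x)   # simple test objective, not from the paper
best_x, best_f, initial_best = sparrow_search(sphere, dim=2, bounds=(-5.0, 5.0))
```

Because the best position found so far is stored separately from the population, the returned fitness can never be worse than the best member of the initial population, even when a scout move displaces the current best sparrow.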

3.2.2 Algorithm Performance Test

To verify the effectiveness of SSA, the Rosenbrock and Ackley test functions were used to test its performance, and the results were compared with those of PSO and GA.

The Rosenbrock function (F1) has a single global optimum and no local optima. It is shown in Fig. 1, and its formula is as follows:

Here x \(\in\) [−30, 30], and the global minimum in this domain is 0.

The Ackley function (F2) has a large number of local optima in its outer region, and the depression at its center is the global optimum of the whole function. It is shown in Fig. 2, and its formula is as follows:

Here x \(\in\) [−32, 32], and the global minimum in this domain is 0.
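For reference, the two benchmark functions can be written as below. These are the standard textbook definitions, which are assumed (not confirmed by the text) to match the paper's F1 and F2. Both have a global minimum of 0, consistent with the values stated above.

```python
import math

def rosenbrock(x):
    """Sum of 100*(x[i+1] - x[i]^2)^2 + (x[i] - 1)^2; minimum 0 at x = (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))

def ackley(x):
    """Standard Ackley function; minimum 0 at x = (0, ..., 0)."""
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / d
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)

f1_at_optimum = rosenbrock([1.0, 1.0, 1.0])
f2_at_optimum = ackley([0.0, 0.0])
```

The Rosenbrock minimum lies in a long, narrow, curved valley, which makes it slow to locate precisely, whereas the Ackley function tests whether a search can escape many shallow local optima.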

SSA, PSO and GA were each run in 30 independent simulation experiments on the F1 and F2 test functions. The maximum number of iterations of each algorithm is 1000 and the population size is N = 100. The fitness curves of the 3 algorithms during the optimization of F1 and F2 are shown in Figs. 3 and 4. Table 1 compares the accuracy and stability of the 3 algorithms on the 2 test functions.

According to the F1(x) fitness curve in Fig. 3, although SSA did not find the optimal fitness value on F1(x), its fitness value is clearly better than those of PSO and GA.

According to the F2(x) fitness curve in Fig. 4, SSA reached the optimal fitness value on F2(x) after 13 iterations, whereas PSO needed 922 iterations and GA needed even more.

The optimal and average values in Table 1 show that the convergence accuracy of SSA is clearly better than that of PSO and GA on test functions F1 and F2. The worst value, median and standard deviation show that SSA is also more stable than PSO and GA.

In conclusion, the Rosenbrock and Ackley tests show that SSA outperforms GA and PSO in convergence speed, convergence accuracy and stability.

3.3 Sparrow Search Algorithm Optimizes SVM Parameters

From the foregoing analysis, the penalty parameter C and the kernel parameter g of the SVM are the main factors that affect its fault diagnosis results. In this paper, SSA is used to search for the best C and g in the training stage of the SVM, so as to improve its fault diagnosis accuracy. The steps by which SSA optimizes the SVM parameters are as follows:

(1) Initialize the SSA parameters: sparrow population size Pop, search space dimension d, maximum number of iterations itermax, proportion of sparrows aware of danger SD, proportion of finders PD and safety value ST.

(2) Compute the fitness of every sparrow, and find the position Xbest with the best fitness value and the position Xworst with the worst fitness value at present.

(3) Update the positions of the finders, entrants and sparrows aware of danger according to formulas (10), (11) and (12).

(4) Recalculate the fitness of every sparrow after the position update and compare it with its fitness in the previous iteration. If the new fitness is better, the new position replaces the previous one and Xbest is updated accordingly; otherwise the previous position is kept.

(5) Check whether the maximum number of iterations or the required solution accuracy has been reached. If not, return to step (3). If so, stop iterating and return the position of the sparrow with the best fitness, i.e., the optimal combination (C, g).

4 Transformer Fault Diagnosis Based on KPCA and SSA-SVM

4.1 Fault Type Classification and Feature Selection

According to the IEC 60599 standard and actual transformer operation data, the transformer states are divided into 6 types: low-to-medium temperature overheating T1 (<700 ℃), high temperature overheating T2 (>700 ℃), low energy discharge D1, partial discharge PD, high energy discharge D2 and normal working condition N.

When one of the above thermal or electrical faults occurs in a transformer, the transformer oil and insulating paper decompose. According to the “Oil-immersed transformer State Evaluation and Maintenance Guide” [22], the decomposition products include H2, CH4, C2H2, C2H4, C2H6 and other gases. From these 5 gases, a total of 24 gas ratios are generated as the candidate feature set, as shown in Table 2.

4.2 Concrete Realization Process of Transformer Fault Diagnosis

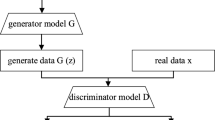

Figure 5 shows the procedure of the transformer fault diagnosis method in which the sparrow search algorithm optimizes the SVM parameters. The specific steps are as follows:

(1) DGA data for the various fault modes during transformer operation are gathered to form the fault sample set.

(2) The numerical spread between different DGA ratios may be large, which can reduce diagnostic accuracy if the ratios are fed to the model directly. All fault sample data are therefore normalized according to formula (15).

$$ x_{si} = \frac{{x_{i} - x_{imin} }}{{x_{imax} - x_{imin} }} $$(15)

In this formula, xi is the value before normalization, ximin and ximax are the minimum and maximum values before normalization, and xsi is the normalized value.

(3) KPCA dimensionality reduction is applied to the normalized sample data to eliminate redundant gas ratios that impair fault diagnosis accuracy. In this paper, KPCA with an RBF kernel is used to reduce the dimensionality of the dissolved gas ratios in transformer oil according to formulas (1)-(5).

(4) The sample data are randomly divided into a training set and a test set in proportion. The data after dimensionality reduction are used as the feature inputs of the model.

(5) The transformer fault diagnosis model is established, with SSA optimizing the SVM parameters.

(6) The test set is used to evaluate the diagnostic performance of the SSA-SVM model, and the fault diagnosis results are output.
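The normalization in step (2) can be sketched as follows. The sketch assumes that formula (15) is applied separately to each gas ratio (each column of the sample matrix), which the text does not state explicitly. The handling of a constant column is also an assumption, and the sample values are hypothetical.

```python
def min_max_normalize(samples):
    """Scale every feature column to [0, 1] as in formula (15)."""
    columns = list(zip(*samples))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    normalized = []
    for row in samples:
        normalized.append([
            (v - lo) / (hi - lo) if hi > lo else 0.0   # constant column: assumption
            for v, lo, hi in zip(row, mins, maxs)
        ])
    return normalized

# Three samples with two gas ratios each (hypothetical values).
ratios = [[0.5, 12.0], [1.5, 4.0], [1.0, 8.0]]
scaled = min_max_normalize(ratios)
```

In practice the minimum and maximum would normally be stored so that new measurements can be scaled with the same constants.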

5 Example Analysis

5.1 Fault Sample and Data Pretreatment

In this paper, 240 transformer DGA samples with known state types are taken from [23] for fault diagnosis with the SSA-SVM model. First, the 240 samples are converted into the ratios in Table 2, giving a 240 × 24 DGA ratio set. All ratios are then normalized according to formula (15) to remove differences in scale between them.

5.2 Analysis of Feature Value Optimization Results Based on Kernel Principal Component Analysis

The normalized 240 × 24 DGA ratio set was reduced in dimensionality: the eigenvalues and eigenvectors of its RBF kernel matrix were calculated, and the leading eigenvalues whose cumulative variance contribution rate exceeded 85% were retained. The eigenvalues and variance contribution rates of the RBF kernel matrix are shown in Table 3, and the trend of the variance contribution rate across components is shown in Fig. 6.

Table 3 shows that the cumulative variance contribution rate of the first eight components is 86.4106%, which means that these eight components can represent the internal fault characteristics of the transformer. Fig. 6 shows that the variance contribution rate decreases progressively and levels off after 8 components. Therefore, the first 8 components reflect most of the information in the variables, and the 24 original indicators are transformed into 8 new indicators, which achieves the dimensionality reduction.

Further, the distribution of the first principal component across the different fault types and the normal working condition is plotted for the original feature space and the KPCA space in Fig. 7. Fig. 7a shows that in the original feature space the distribution of the first principal component is disordered and heavily overlapping, which degrades the diagnostic performance of the subsequent model. Comparing Fig. 7b with Fig. 7a, the first principal components of the six working conditions are farther apart after dimensionality reduction and the overlap is significantly reduced. This verifies the effectiveness of KPCA dimensionality reduction.

5.3 Parameter Optimization

A total of 150 samples were randomly selected as training samples (25 for each state type), and the remaining 90 were used as test samples (15 for each state type). SSA was used to optimize the parameters of the SVM model with the following settings: the search range of the SVM kernel parameter and penalty factor is [0.01, 100], population size Pop = 50, maximum number of iterations itermax = 100, search space dimension d = 2, proportion of sparrows aware of danger SD = 0.2, proportion of finders PD = 0.7, and safety value ST = 0.6. The fitness curve during the optimization of the SVM parameters by the sparrow search algorithm is shown in Fig. 8.
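A per-class random split of this kind can be sketched as below. The split of 25 training and 15 test samples per state implies 40 samples per state, which is consistent with the 240 samples in total. The function name, the seed and the label ordering are illustrative assumptions.

```python
import random

def stratified_split(labels, n_train_per_class, seed=0):
    """Return (train_idx, test_idx) with a fixed number of training samples per class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        members = by_class[label][:]
        rng.shuffle(members)                       # random selection within the class
        train_idx.extend(members[:n_train_per_class])
        test_idx.extend(members[n_train_per_class:])
    return train_idx, test_idx

# Six states with 40 samples each (25 training + 15 test per state).
labels = [state for state in ("T1", "T2", "D1", "PD", "D2", "N") for _ in range(40)]
train_idx, test_idx = stratified_split(labels, n_train_per_class=25)
```

Splitting within each class keeps the class balance identical in the training and test sets, so the overall accuracy is not dominated by any single state.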

Fig. 8 shows that, when the sparrow search algorithm is used to optimize the SVM parameters, the best-fitness curve converges as the number of iterations increases and reaches its minimum after 9 iterations. This indicates that the sparrow search algorithm handles the SVM parameter optimization problem well.

5.4 Comparison of SSA-SVM Diagnostic Results in the Original and KPCA Feature Spaces

To verify the advantage of the proposed method, the diagnostic performance of SSA-SVM in the original and KPCA feature spaces is compared first. Table 4 shows the diagnosis results of the SSA-SVM model in the two feature spaces.

According to Table 4, the mean diagnosis accuracy of SSA-SVM in the KPCA feature space is 2.22% higher than in the original feature space, and the diagnosis time is 3.38 s shorter. The SSA-SVM diagnosis method therefore performs better in the KPCA feature space than in the original feature space in both accuracy and time.

5.5 Comparison of Diagnostic Results of Different Diagnostic Models in KPCA Dimensionality Reduction

In Sect. 5.4, the diagnostic performance of SSA-SVM in the original and KPCA feature spaces was compared. In this section, SVM, GA-SVM and PSO-SVM are compared with SSA-SVM in the KPCA feature space. The fault diagnosis results of each model are shown in Table 5.

Table 5 shows that, in the KPCA feature space, the mean diagnostic accuracies of SVM, PSO-SVM, GA-SVM and SSA-SVM are 83.33%, 84.44%, 84.44% and 88.89%, respectively, and their diagnosis times are 0.14 s, 35.79 s, 19.13 s and 9.91 s, respectively. This shows that the proposed method is more accurate than the other diagnosis models in the KPCA feature space.
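The reported percentages can be related to sample counts with a short calculation. It assumes that each accuracy is computed over the 90 test samples described in Sect. 5.3. This is an inference from the figures quoted in the text, not a value read from Table 5.

```python
TEST_SAMPLES = 90  # 15 test samples for each of the 6 states

# Mean accuracies quoted in the text (percent).
reported = {"SVM": 83.33, "PSO-SVM": 84.44, "GA-SVM": 84.44, "SSA-SVM": 88.89}

def implied_correct(accuracy_percent, total=TEST_SAMPLES):
    """Return the whole number of correct samples closest to the reported accuracy."""
    return round(accuracy_percent / 100.0 * total)

correct = {name: implied_correct(acc) for name, acc in reported.items()}
recomputed = {name: round(100.0 * k / TEST_SAMPLES, 2) for name, k in correct.items()}
```

Under this assumption the accuracies correspond to 75, 76, 76 and 80 correctly classified test samples, so the gap between SSA-SVM and the GA- or PSO-optimized models amounts to 4 of the 90 test samples.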

5.6 Influence of the Number of Training Samples on Fault Diagnosis Outcomes

To further demonstrate the effectiveness of SSA-SVM, the number of training samples of each type was reduced to 4/5 and 3/5 of the original number. The fault diagnosis model was then trained and tested again. The results are shown in Table 6.

Table 6 shows that the diagnosis accuracy for the various fault types is only slightly affected by the reduction in training samples. With the same test samples as in Table 5, the overall fault diagnosis accuracy of the SSA-SVM model with fewer training samples is still better than that of the SVM, GA-SVM and PSO-SVM models in Table 5. This shows that the transformer fault diagnosis model based on SSA-SVM is robust and generalizes well: it still achieves high fault diagnosis accuracy with fewer training samples.

6 Conclusion

This paper proposes a transformer fault diagnosis method based on kernel principal component analysis and a support vector machine optimized by the sparrow search algorithm. The main conclusions are as follows:

KPCA is used to reconstruct the feature space and reduce its dimensionality, yielding effective features for transformer fault diagnosis. Compared with the original feature space, the diagnosis accuracy is improved by 2.22% and the diagnosis time is reduced by 3.38 s.

Using the sparrow search algorithm to optimize the SVM parameters improves the accuracy of the SVM fault diagnosis model. Compared with the SVM, PSO-SVM and GA-SVM fault diagnosis models, the model established in this paper has a higher test accuracy (88.89% versus 83.33%, 84.44% and 84.44%, respectively), which supports the validity of the proposed method.

When the number of training samples is reduced, the SVM fault diagnosis model with parameters optimized by the sparrow search algorithm still achieves high fault diagnosis accuracy, better than that of the SVM, PSO-SVM and GA-SVM models trained without sample reduction. This shows that the SSA-SVM model is only slightly affected by a reduction in training samples and supports the generalization ability of the proposed method.

References

Zhao, J., Wen, F., Xue, Y., et al.: Architecture of power CPS and its implementation technology and challenges. Autom. Electr. Power Syst. 34(16), 1–7 (2010). (in Chinese)

Han, J., Kong, X., Li, P., et al.: A novel low voltage ride through strategy for cascaded power Electronic transformer. Protect. Control Mod. Power Syst. 4(3), 227–238 (2019)

Dai, J., Song, H., Sheng, G., et al.: Dissolved gas analysis of insulating oil for power transformer fault diagnosis with deep belief network. IEEE Trans. Dielectr. Electr. Insul. 24(5), 2828–2835 (2017)

Ward, S.A.: Evaluating transformer condition using DGA oil analysis. In: 2003 Annual Report Conference on Electrical Insulation and Dielectric Phenomena. Albuquerque, USA, pp. 463–468. IEEE (2003)

Faiz, J., Soleimani, M.: Dissolved gas analysis evaluation in electric power transformers using conventional methods a review. IEEE Trans. Dielect. Electr. Insulat. 24(2), 1239–1248 (2017)

Rogers, R.R.: IEEE and IEC codes to interpret faults in transformers, using gas in oil analysis. IEEE Trans. Electr. Insul. 13(5), 349–354 (1978)

Bakar, N., Abu-Siada, A., Islam, S.: A review of dissolved gas analysis measurement and interpretation techniques. IEEE Electr. Insul. Mag. 30(3), 39–49 (2014)

Shi, X., Zhu, Y., Ning, X., et al.: Transformer fault diagnosis based on deep auto-encoder network. Elect. Power Autom. Equip. 36(5), 122–126 (2016). (in Chinese)

Ruan, L., Xie, J., Gao, S., et al.: Application of artificial neural network and information fusion technology in power transformer condition assessment. High Volt. Eng. 40(3), 822–828 (2014). (in Chinese)

Cheng, Y., Zhang, Z.: Multi-source partial discharge diagnosis of transformer based on random forest. In: Proceedings of the CSEE 2018, vol. 38, no. 17, pp. 5246–5256+5322. (in Chinese)

Zhang, Y., Jiao, J., Wang, K., et al.: Power transformer fault diagnosis model based on support vector machine optimized by imperialist competitive algorithm. Electr. Power Autom. Equip. 38(1), 99–104 (2018). (in Chinese)

Fang, T., Qian, Y., Guo, C., et al.: Research on transformer fault diagnosis based on a beetle antennae search optimized support vector machine. Power Syst. Protect. Control 48(20), 90–96 (2020). (in Chinese)

Wu, J., Ding, H., Ma, X., Yan, B., Wang, X.: Application of improved adaptive bee colony optimization algorithm in transformer fault diagnosis. Power Syst. Protect. Control 48(09), 174–180 (2020). (in Chinese)

Kim, S.W., Kim, S.J., Seo, H.D., et al.: New methods of DGA diagnosis using IEC TC 10 and related databases part 1: application of gas-ratio combinations. IEEE Trans. Dielectr. Electr. Insul. 20(2), 685–690 (2013)

Tian, F., et al.: Transformer fault diagnosis model based on feature selection and ICA-SVM. Power Syst. Protect. Control 47(17), 163–170 (2019). (in Chinese)

Li, J., Chang, Y.: Short-term probabilistic forecasting based on KPCA-KMPMR for wind power. Electr. Power Autom. Equip. 37(2), 22–28 (2017). (in Chinese)

Wang, Y., Wu, J., Ma, S., et al.: Mechanical fault diagnosis research of high voltage circuit breaker based on kernel principal component analysis and Soft Max. Trans. Chin. Electrotech. Soc. 35(Supplement1), 267–276 (2020). (in Chinese)

Li, D., Yang, B., Zhang, Y., et al.: Dimension reduction and reconstruction of multi-dimension spatial wind power data based on optimal RBF kernel principal component analysis. Power Syst. Technol. 44(12), 4539–4546 (2020). (in Chinese)

Samanta, B., Al-Balushi, K.R., Al-Araimi, S.A.: Artificial neural networks and support vector machines with genetic algorithm for bearing fault detection. Eng. Appl. Artif. Intell. 16(7–8), 657–665 (2003)

Xue, J.K., Shen, B.: A novel swarm intelligence optimization approach: sparrow search algorithm. Syst. Sci. Control Eng. 8(1), 22–34 (2020)

Lv, X., Mu, X., Zhang, J., et al.: Multi-threshold image segmentation based on improved sparrow search algorithm. Syst. Eng. Electron. 43(2), 318–327 (2021). (in Chinese)

Guide for condition evaluation of oil-immersed power transformers (reactors): DL/T 1685-2017. National Energy Administration, Beijing, China (2017)

Yin, J.: Research on fault diagnosis method of oil-immersed power transformer based on correlation vector machine. North China Electric Power University (2013)

Acknowledgment

Project supported by Key R&D Program of Hebei Province (20312101D); 2020 General Aviation Additive Manufacturing Collaborative Innovation Center funding project (15).

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Han, X., Ma, S., Shi, Z., An, G., Du, Z., Zhao, C. (2022). Fault Diagnosis Method for Transformer Based on KPCA and SSA-SVM. In: He, J., Li, Y., Yang, Q., Liang, X. (eds) The proceedings of the 16th Annual Conference of China Electrotechnical Society. Lecture Notes in Electrical Engineering, vol 891. Springer, Singapore. https://doi.org/10.1007/978-981-19-1532-1_110

DOI: https://doi.org/10.1007/978-981-19-1532-1_110

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-1531-4

Online ISBN: 978-981-19-1532-1

eBook Packages: Engineering, Engineering (R0)