Abstract

In monocular-vision pose estimation of elliptical ring contours, the inner and outer contours lie very close to each other, which makes high-precision extraction of the midline contour, and hence accurate pose estimation, difficult. To ensure measurement accuracy, this paper proposes a high-precision contour separation scheme based on Zernike moment detection and the Snake model. Specifically, a sub-pixel edge detection method based on Zernike moments is designed for the rough extraction of the inner and outer contours, in which adaptive binarization is used to obtain the coordinates of the inner and outer contours as masks. The masks are then applied to obtain the coordinates of the separated contours, and an iterative contour search algorithm based on the Snake model is designed to obtain high-precision inner and outer contours. In addition, an ellipse correction method based on inverse perspective transformation is proposed to address the difficulty of matching ellipse feature points when identifying their pixel coordinates. Finally, the proposed method is applied to pose estimation of the elliptical hole of the static contact in the switchgear of a high-voltage power system. The results show a depth measurement error within ±0.5 mm and a repeatability of ±0.3 mm for the center-alignment measurement, indicating that the algorithm achieves higher accuracy in elliptical ring pose estimation.

Keywords

- Snake model

- Inverse perspective transformation

- Ellipse correction

- Pose estimation

- Fault diagnosis of the circuit breaker

1 Introduction

Visual measurement has been widely used in industrial inspection because of its good stability and accuracy [1, 2, 9]. Contour extraction and feature point matching are two key steps that determine the accuracy of pose estimation. The key problem in contour extraction is to filter out a large number of non-target contours so as to obtain a high-precision target contour. The main purpose of feature point matching is to find corners and templates on the target contour so that the pose can be estimated.

Common contour extraction methods include edge-based pixel-level detection operators (e.g., the Prewitt and Canny operators [6]) and threshold-based methods (e.g., adaptive binarization [4]). These traditional methods work at pixel-level precision: they are efficient but limited in accuracy. Some researchers have proposed contour extraction based on moment methods [8], which are insensitive to noise and can reach sub-pixel detection accuracy. For example, the rotation invariance of orthogonal Zernike moments can be exploited for high-precision edge detection, but this generally requires square images of high quality [3]. A feature common to the above methods is the classical hierarchical processing scheme, i.e., contour information is extracted step by step from points to surfaces to bodies. This reduces the difficulty of contour extraction but limits accuracy, since errors made at the low levels propagate to the higher levels. Kass et al. [5] proposed the iterative Snake model, which locates contours with higher precision by minimizing an energy function of the contour curve that combines high-level and low-level information. However, it requires a reasonably accurate initial contour as the starting point of the iteration.

Many researchers have studied feature point matching for ellipse contours. Song et al. [10] proposed an affine log-polar coordinate transformation that maps local concentric ellipses to parallel lines. The resulting image features are used for decoding, which allows different ellipse contours to be classified but does not allow their pose to be estimated. Monocular vehicle distance measurement can be realized through the inverse perspective transformation based on road vanishing points, but it requires additional constraints from the polygon corner points of the road itself [7].

Motivated by the above analysis, this paper proposes an elliptical ring pose estimation method based on the Snake model and inverse perspective transformation. Zernike moment detection combined with adaptive-threshold binarization is used to obtain the elliptical ring contour with sub-pixel accuracy, and the precision of the target elliptical contour is further enhanced by the iterative Snake algorithm. To correct the ellipse contour, an inverse perspective transformation algorithm based on installation-position calibration is proposed, which yields a more accurate estimate of the ellipse pose. Experiments on the dynamic and static contacts of switchgear circuit breakers show that the proposed algorithm estimates the elliptical ring pose more accurately, with a smaller depth error and better repeatability of the center-alignment measurement.

Notation: Px denotes the X-axis coordinate of the ellipse center, Py the Y-axis coordinate of the ellipse center, Ls the length of the short (minor) axis, Ll the length of the long (major) axis, and R the rotation angle.

2 Ellipse Ring Contour Extraction Based on Snake Model

In this paper, the initial positions of the two ellipse contours are extracted by a moment-based contour extraction method, and the coordinates of the two mask regions are obtained at the same time. First, the original rectangular image is cropped to the square required by Zernike moment detection. The inner and outer ellipse contours are separated by a mask operation. The inner and outer elliptical contours obtained by Zernike moment detection are used as the initial contours of the Snake model, which iteratively searches for more accurate elliptical contours. The final contour for pose estimation is obtained by a thinning algorithm. The algorithm flow is shown in Fig. 1.

2.1 Rough Contour Extraction

In the rough contour extraction stage, preprocessing is performed to remove image noise and information irrelevant to the contour; it comprises filtering, denoising, and adaptive binarization.
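The preprocessing chain can be sketched with NumPy alone. This is a minimal sketch, assuming a grayscale image stored in a 2-D array; the function names, the Gaussian blur used as the denoising filter, and the mean-minus-offset rule (similar in spirit to OpenCV's `ADAPTIVE_THRESH_MEAN_C`) are illustrative choices, not parameters reported in the paper.

```python
import numpy as np

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur with edge replication (stand-in for the denoising step)."""
    radius = max(1, int(3 * sigma))
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-t**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")
    # blur along rows, then along columns; "valid" mode restores the original size
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def adaptive_mean_threshold(img, block=15, offset=5.0):
    """255 where a pixel exceeds (mean of its block x block neighbourhood - offset), else 0."""
    if block % 2 == 0:
        raise ValueError("block must be odd")
    r = block // 2
    padded = np.pad(img.astype(float), r, mode="edge")
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))   # integral image
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    box = (ii[block:block + h, block:block + w] - ii[:h, block:block + w]
           - ii[block:block + h, :w] + ii[:h, :w])               # neighbourhood sums
    local_mean = box / (block * block)
    return np.where(img > local_mean - offset, 255, 0).astype(np.uint8)
```

For example, `adaptive_mean_threshold(gaussian_blur(gray, 1.5), block=31)` would produce a binary map; the block size and offset are hypothetical and would need tuning on the 1600 x 1200 contact images.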

Taking an original image of the circuit breaker contact (1600 \(\times \) 1200) as an example, the results are shown in Fig. 2. The local image of the target contour shows that the target elliptical ring is completely preserved.

Area filtering, roundness filtering, and center filtering are then applied in succession to remove non-target contours.

Because the area of the elliptical ring changes continuously as the dynamic and static contacts of the circuit breaker engage, the area threshold is bounded below by 90,000, the area of the inner ellipse contour at a meshing depth of 0, and above by 220,000, the area of the outer contour at full engagement. The filtering parameters are listed in Table 1.

Non-target elliptical contours make the target contour easy to misidentify. Therefore, this paper proposes a filtering method for non-circular contours based on a roundness evaluation parameter, calculated as shown in (1).

where s is the area of the ellipse contour and c is half the length of its long axis. The image after contour filtering is shown in Fig. 3, which shows that the hierarchical filtering scheme effectively removes the non-target contours.
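The three filters can be chained as in the following sketch. Formula (1) is not reproduced here, so the roundness below assumes the common form s / (π c²), which equals 1 for a circle and the axis ratio for an ellipse; the minimum roundness, the center tolerance, and all function names are hypothetical, and only the area range (90,000 to 220,000) comes from the text.

```python
import numpy as np

def polygon_area(pts):
    """Shoelace area of a closed contour given as an (n, 2) array of (x, y) points."""
    x, y = pts[:, 0], pts[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def semi_major_axis(pts):
    """Estimate c as half of the largest point-to-point distance on the contour."""
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    return d.max() / 2.0

def roundness(pts):
    # ASSUMPTION: roundness = s / (pi * c**2); 1 for a circle, Ls/Ll for an ellipse
    c = semi_major_axis(pts)
    return polygon_area(pts) / (np.pi * c * c)

def filter_contours(contours, area_range, min_roundness, center, max_center_dist):
    """Keep contours that pass the area, roundness and center filters, in that order."""
    kept = []
    for pts in contours:
        if not area_range[0] <= polygon_area(pts) <= area_range[1]:
            continue                                            # 1) area filter
        if roundness(pts) < min_roundness:
            continue                                            # 2) roundness filter
        if np.linalg.norm(pts.mean(axis=0) - center) > max_center_dist:
            continue                                            # 3) center filter
        kept.append(pts)
    return kept
```

Ordering the cheap area test first means the quadratic-cost roundness estimate is only computed for contours of plausible size.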

A contour boundary extraction scheme based on a thinning algorithm is designed to separate the inner and outer ellipse contours. From the inner and outer contour lines of the filtered elliptical ring, combined with a chosen line width, an inner boundary ellipse and an outer boundary ellipse are constructed. The coordinates of the mask regions of the inner and outer ellipse contours can thus be obtained, and the two contours can be separated.

According to Table 2, the axis lengths of the inner and outer ellipse contours differ by about 10 pixels when the meshing depth is 0. As the meshing depth of the dynamic and static contacts increases, this difference keeps growing. Therefore, if the elliptical ring can be separated by thinning the contour boundary at a depth of 0, where the two contours are closest, it can be separated throughout the whole meshing process.

The elliptical ring in Table 2 is thinned to obtain a single-pixel elliptical contour, as shown in Fig. 4. The thinning results show that the resulting dividing line separates the two target contours well.
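The paper does not name its thinning algorithm. The sketch below uses the classic Zhang-Suen thinning as a stand-in, assuming a binary ring image in which nonzero pixels are foreground; it repeatedly peels boundary pixels in two alternating sub-iterations until a one-pixel-wide, still-connected line remains. The function name is hypothetical.

```python
import numpy as np

def zhang_suen_thin(mask):
    """Zhang-Suen thinning of a binary image (nonzero = foreground) to 1-pixel-wide lines."""
    img = np.pad((mask > 0).astype(np.uint8), 1)     # zero border so every pixel has 8 neighbours
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            # neighbours P2..P9, clockwise starting from north
            p2, p3 = img[:-2, 1:-1], img[:-2, 2:]
            p4, p5 = img[1:-1, 2:], img[2:, 2:]
            p6, p7 = img[2:, 1:-1], img[2:, :-2]
            p8, p9 = img[1:-1, :-2], img[:-2, :-2]
            nbrs = [p2, p3, p4, p5, p6, p7, p8, p9]
            b = sum(n.astype(int) for n in nbrs)                       # foreground neighbours
            seq = nbrs + [p2]
            a = sum(((seq[i] == 0) & (seq[i + 1] == 1)).astype(int)   # 0 -> 1 transitions
                    for i in range(8))
            if step == 0:
                c1, c2 = (p2 * p4 * p6) == 0, (p4 * p6 * p8) == 0
            else:
                c1, c2 = (p2 * p4 * p8) == 0, (p2 * p6 * p8) == 0
            center = img[1:-1, 1:-1]                                   # view into img
            remove = (center == 1) & (b >= 2) & (b <= 6) & (a == 1) & c1 & c2
            if remove.any():
                center[remove] = 0      # all deletions of this sub-iteration happen together
                changed = True
    return img[1:-1, 1:-1]
```

Applied to the binarized elliptical ring, the surviving line plays the role of the dividing contour between the inner and outer edges.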

Based on Table 2, the major and minor axes of the outer ellipse contour are enlarged, and those of the inner contour are reduced, by a boundary line width; the resulting ellipses serve as the outer and inner boundaries, respectively. Since the gap between the inner and outer contours is about 10 pixels, the major and minor axes of the outer ellipse contour are increased by 5 pixels to form the outer boundary, and those of the inner contour are reduced by 5 pixels to form the inner boundary, as shown in Table 3. Once the contour coordinates of the two boundaries are obtained, the region between the outer boundary and the dividing (thinned) contour is used as the mask of the outer ellipse contour, and the region between the dividing contour and the inner boundary is used as the mask of the inner ellipse contour.
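Building the two masks amounts to set operations on filled ellipses. In this sketch each ellipse is described in the notation (Px, Py, Ll, Ls, R), with R in degrees; the function names, the use of a fitted ellipse to stand in for the thinned dividing line, and applying the 5-pixel change to the full axis lengths are assumptions for illustration.

```python
import numpy as np

def ellipse_mask(shape, px, py, ll, ls, r_deg):
    """Boolean mask of pixels inside an ellipse given its center, full axis lengths and angle."""
    rows, cols = np.indices(shape)
    dx, dy = cols - px, rows - py
    t = np.deg2rad(r_deg)
    u = dx * np.cos(t) + dy * np.sin(t)       # coordinate along the long axis
    v = -dx * np.sin(t) + dy * np.cos(t)      # coordinate along the short axis
    return (u / (ll / 2.0)) ** 2 + (v / (ls / 2.0)) ** 2 <= 1.0

def ring_masks(shape, inner, outer, divider, delta=5.0):
    """Masks of the outer and inner contours; each ellipse is (px, py, ll, ls, r_deg)."""
    px, py, ll, ls, r = outer
    outer_bound = ellipse_mask(shape, px, py, ll + delta, ls + delta, r)   # enlarged outer
    px, py, ll, ls, r = inner
    inner_bound = ellipse_mask(shape, px, py, ll - delta, ls - delta, r)   # shrunk inner
    div = ellipse_mask(shape, *divider)
    outer_mask = outer_bound & ~div       # between the outer boundary and the dividing line
    inner_mask = div & ~inner_bound       # between the dividing line and the inner boundary
    return outer_mask, inner_mask
```

Because one mask requires pixels inside the dividing ellipse and the other requires pixels outside it, the two masks can never overlap.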

2.2 Refined Contour Extraction

In this subsection, contour extraction is refined to sub-pixel accuracy based on Zernike moment detection and the Snake model.

According to the edge coordinates of the ellipse contour in Table 2, the center of the circle is located near (873, 557). The original image is therefore cropped to 1200 \(\times \) 1200 for Zernike detection. The coordinates of the edge points detected with Zernike moments are calculated as follows:

where \( \left[ \begin{array}{c} x_e\\ y_e\\ \end{array} \right] \) is the origin coordinate and N is the size of the template.

A 7 \(\times \) 7 template is used to construct the two corresponding templates \(M_{1,1}\) and \(M_{2,0}\). The detection results in Fig. 5 show that the method accurately extracts the contour of the countersunk hole and effectively filters out noisy edges.
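The edge-point formula relies on the step-edge model of Ghosal and Mehrotra [3], and the following sketch shows how one 7 x 7 window could be processed under that model. It is a minimal NumPy sketch under stated assumptions: moments are left unnormalised, the template weights are computed by sub-sampling each pixel, and the names `zernike_templates` and `zernike_edge` are hypothetical. The edge-normal angle φ comes from Z11, the normalised distance is l = Z20 / Z'11, and the edge point is the window center shifted by (N/2) l (cos φ, sin φ).

```python
import numpy as np

def zernike_templates(n=7, sub=64):
    """Templates for Re(Z11), -Im(Z11) and Z20 on an n x n window (moments unnormalised).

    The window is mapped onto the unit disk of radius n/2 pixels. Each pixel weight is the
    integral of the basis function over the part of that pixel inside the disk,
    approximated with sub x sub sample points per pixel.
    """
    half = n / 2.0
    offs = (np.arange(sub) + 0.5) / sub - 0.5                    # sample offsets within a pixel
    centres = np.arange(n) - (n - 1) / 2.0                        # pixel centers, window-centred
    coords = (centres[:, None] + offs[None, :]).ravel() / half    # unit-disk coordinates
    X, Y = np.meshgrid(coords, coords)                            # X along columns, Y along rows
    inside = X**2 + Y**2 <= 1.0
    cell = (1.0 / (half * sub)) ** 2                              # area of one sample cell

    def integrate(w):
        return (np.where(inside, w, 0.0) * cell).reshape(n, sub, n, sub).sum(axis=(1, 3))

    return integrate(X), integrate(Y), integrate(2.0 * (X**2 + Y**2) - 1.0)


def zernike_edge(window, templates):
    """Return (col, row, k, l) of a sub-pixel step edge in one square window, or None."""
    mx, my, m20 = templates
    n = window.shape[0]
    a = float(np.sum(window * mx))           # Re(Z11), using the conjugate basis x - iy
    b = float(np.sum(window * my))           # -Im(Z11)
    z20 = float(np.sum(window * m20))        # Z20 is rotation invariant
    z11_rot = float(np.hypot(a, b))          # Z'11: Z11 after rotating the edge normal onto +x
    if z11_rot == 0.0:
        return None                          # flat window: no edge
    phi = np.arctan2(b, a)                   # edge-normal angle, pointing to the brighter side
    l = z20 / z11_rot                        # edge distance from the center, in disk radii
    if abs(l) >= 1.0:
        return None                          # edge would lie outside the unit disk
    k = 3.0 * z11_rot / (2.0 * (1.0 - l * l) ** 1.5)   # step height between the two sides
    c = (n - 1) / 2.0                        # window center, the origin of the formula
    return c + n / 2.0 * l * np.cos(phi), c + n / 2.0 * l * np.sin(phi), k, l
```

In a full detector, the window would be slid over the cropped image and a point kept only when the step height k and the distance |l| pass thresholds; such thresholds are not given in the text.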

The Zernike detection result is then mapped back to the original image size by reversing the cropping. Masking this image with the two mask contours yields two independent ellipse contours, as shown in Fig. 6.
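The crop-and-restore bookkeeping can be sketched as follows, assuming a (rows, cols) array and the center (873, 557) quoted above; the helper names are hypothetical. In a 1600 x 1200 image, a 1200 x 1200 square cannot be centered vertically on row 557, so the sketch shifts it to stay inside the image.

```python
import numpy as np

def crop_square(img, center_xy, size):
    """Crop a size x size square around (x, y), shifted as needed to stay inside the image."""
    h, w = img.shape[:2]
    x0 = int(np.clip(round(center_xy[0]) - size // 2, 0, w - size))
    y0 = int(np.clip(round(center_xy[1]) - size // 2, 0, h - size))
    return img[y0:y0 + size, x0:x0 + size], (x0, y0)

def restore_full(patch, offset, full_shape):
    """Paste a processed patch (e.g. a Zernike edge map) back into a zero image of full size."""
    out = np.zeros(full_shape, dtype=patch.dtype)
    x0, y0 = offset
    out[y0:y0 + patch.shape[0], x0:x0 + patch.shape[1]] = patch
    return out
```

Keeping the offset returned by `crop_square` is all that is needed to undo the crop exactly before the mask operation.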

The outer and inner contour masks separate the target elliptical ring contours accurately. The features of the two ellipse contours obtained by ellipse fitting are listed in Table 4.
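The ellipse features in the notation (Px, Py, Ll, Ls, R) can be obtained with a plain algebraic least-squares conic fit, sketched below. The paper does not state which fitting method it uses; this sketch assumes an unconstrained conic fit on normalised coordinates, reports full axis lengths, and measures R in degrees from the +x axis towards +y (image rows pointing down). The function name is hypothetical.

```python
import numpy as np

def fit_ellipse(x, y):
    """Algebraic least-squares conic fit; returns (Px, Py, Ll, Ls, R_deg)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    mx, my = x.mean(), y.mean()
    s = max(x.std(), y.std())
    xn, yn = (x - mx) / s, (y - my) / s                 # normalise for numerical conditioning
    design = np.column_stack([xn**2, xn * yn, yn**2, xn, yn, np.ones_like(xn)])
    _, _, vt = np.linalg.svd(design)
    A, B, C, D, E, F = vt[-1]                           # conic minimising ||design @ p||, ||p|| = 1
    xc, yc = np.linalg.solve([[2 * A, B], [B, 2 * C]], [-D, -E])   # center of the conic
    f0 = A * xc**2 + B * xc * yc + C * yc**2 + D * xc + E * yc + F
    evals, evecs = np.linalg.eigh(np.array([[A, B / 2], [B / 2, C]]))
    semi = np.sqrt(-f0 / evals)                         # semi-axes; both real for an ellipse
    i_long = int(np.argmax(semi))
    vx, vy = evecs[:, i_long]                           # direction of the long axis
    r_deg = np.degrees(np.arctan2(vy, vx)) % 180.0
    return mx + s * xc, my + s * yc, 2 * s * semi[i_long], 2 * s * semi[1 - i_long], r_deg
```

Fitting the inner and outer contours separately and comparing their Px, Py, and R gives the concentricity check used in the next paragraph.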

For the inner hole of the static contact, the two contours of the elliptical ring are theoretically concentric. The identification accuracy can therefore be judged by the differences in center and rotation angle between the two identified contours. Table 4 shows that, for the ellipses fitted to the contours obtained by Zernike moment detection, the centers differ considerably along the X-axis but only slightly along the Y-axis. Compared with direct adaptive threshold segmentation, the difference in rotation angle is small, so the result is closer to the actual circular contour.

To obtain a more accurate ellipse contour, an ellipse contour search method based on the Snake model is designed. Starting from an initial contour, it iteratively searches for a more accurate target contour nearby. The cost function is the energy function defined by the Snake model. For an image \(I\left( x,y \right) \), let \(C\left( q \right) =C\left( x\left( q \right) ,y\left( q \right) \right) \) be the evolving curve. Its energy is defined as:

where \(E_{int}\) is the internal energy and \(E_{ext}\) is the external energy. The curve C that minimizes E(C) satisfies

Combining the Euler-Lagrange equation of the curve C(q) with that of (2) yields:

Combining (4) and (5), the iterative evolution of the curve is given by

The initial evolution curve of the Snake is set to the ellipse contour detected by Zernike moments. The target contour is obtained through the iterative Snake evolution, and the resulting elliptical contours are listed in Table 5.
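For reference, the standard Snake formulation of Kass et al. [5] can be written as follows. The weights α and β, the Gaussian-smoothed external energy, and the step parameter γ are the usual textbook choices and are assumptions here rather than values taken from this paper; A denotes the cyclic pentadiagonal matrix obtained by discretising −αC'' + βC'''' at the contour points.

```latex
\begin{aligned}
E(C) &= \int_0^1 \Big[ \tfrac{1}{2}\big( \alpha \lvert C'(q) \rvert^2 + \beta \lvert C''(q) \rvert^2 \big)
        + E_{ext}\big( C(q) \big) \Big] \, dq,
  \qquad E_{ext}(x,y) = -\big\lvert \nabla ( G_\sigma * I )(x,y) \big\rvert^2 \\
0 &= \alpha C''(q) - \beta C''''(q) - \nabla E_{ext}\big( C(q) \big) \\
(A + \gamma\, \mathrm{Id})\, \mathbf{x}_t &= \gamma\, \mathbf{x}_{t-1}
        - \frac{\partial E_{ext}}{\partial x}(\mathbf{x}_{t-1}, \mathbf{y}_{t-1}),
  \qquad
  (A + \gamma\, \mathrm{Id})\, \mathbf{y}_t = \gamma\, \mathbf{y}_{t-1}
        - \frac{\partial E_{ext}}{\partial y}(\mathbf{x}_{t-1}, \mathbf{y}_{t-1})
\end{aligned}
```

The last line is a semi-implicit gradient descent: the internal forces are treated implicitly through A, which keeps the iteration stable, while the image force is evaluated at the previous contour.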

Table 5 shows that the centers of the ellipses fitted to the two groups of contour data almost coincide, and their rotation angles differ only slightly. Compared with Zernike moment detection alone, this method therefore achieves higher detection accuracy.
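The iterative Snake search can be sketched in NumPy for a closed contour using the semi-implicit update of Kass et al. [5], (A + γI) x_t = γ x_{t-1} + κ F_x, where F is the external force. This is a minimal sketch, assuming an external energy −|∇I|² on an already smooth image and bilinear sampling of the force field; α, β, γ, κ, the iteration count, and the function names are hypothetical and not taken from the paper.

```python
import numpy as np

def snake_system(n, alpha, beta, gamma):
    """(A + gamma*Id) for a closed contour; A discretises -alpha*C'' + beta*C'''' (cyclic)."""
    a = np.zeros((n, n))
    for i in range(n):
        a[i, i] = 2 * alpha + 6 * beta
        a[i, (i - 1) % n] = a[i, (i + 1) % n] = -alpha - 4 * beta
        a[i, (i - 2) % n] = a[i, (i + 2) % n] = beta
    return a + gamma * np.eye(n)

def bilinear(field, x, y):
    """Sample a 2-D array at sub-pixel positions (x = column, y = row)."""
    h, w = field.shape
    x, y = np.clip(x, 0, w - 1.001), np.clip(y, 0, h - 1.001)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    fx, fy = x - x0, y - y0
    return ((1 - fx) * (1 - fy) * field[y0, x0] + fx * (1 - fy) * field[y0, x0 + 1]
            + (1 - fx) * fy * field[y0 + 1, x0] + fx * fy * field[y0 + 1, x0 + 1])

def evolve_snake(image, x, y, alpha=0.1, beta=0.05, gamma=1.0, kappa=1.0, iters=1000):
    """Semi-implicit Kass iteration with E_ext = -|grad I|^2, i.e. force F = grad(|grad I|^2)."""
    gy, gx = np.gradient(np.asarray(image, float))   # derivatives along rows, then columns
    fy, fx = np.gradient(gx**2 + gy**2)               # force field pointing towards strong edges
    inv = np.linalg.inv(snake_system(len(x), alpha, beta, gamma))
    x, y = np.array(x, float), np.array(y, float)
    for _ in range(iters):
        px, py = bilinear(fx, x, y), bilinear(fy, x, y)   # external force at current points
        x, y = inv @ (gamma * x + kappa * px), inv @ (gamma * y + kappa * py)
    return x, y
```

In the setting of this subsection, `x` and `y` would be the points of a Zernike-detected ellipse and `image` the grayscale contact image. The tension term α pulls the contour slightly inwards, so the converged contour sits a fraction of a pixel inside the strongest edge.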

After the two sets of ellipse contours are obtained, the thinning algorithm is used to extract the middle contour, which is then fitted to obtain the final target ellipse contour, as shown in Table 6.

3 Ellipse Correction Based on Inverse Perspective Transformation

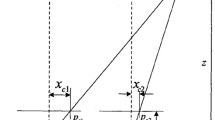

Generally, because the camera's image plane is not parallel to the plane of the circular contour, perspective projection turns the circle into an ellipse. As a result, there are no distinct feature points available for pose estimation. This section proposes an ellipse correction method based on inverse perspective transformation. First, the pose transformation matrix between the camera's installation position and the ideal position, at which the camera plane is parallel to the object plane, is obtained by calibrating the installation position. The inverse perspective transformation matrix is then derived, and an approximately circular contour is obtained by applying the inverse transformation to the ellipse contour. Finally, the center and endpoints of the circular contour are selected as feature points for pose estimation.

3.1 Solving Inverse Perspective Transformation Matrix

In this paper, an installation-position calibration is designed to solve the corrective perspective transformation matrix between the camera's installation position and the ideal position. To relate the ellipse contour before and after correction, the rotation matrix obtained from calibration is replaced by the identity matrix, which gives the corrected perspective transformation matrix. Four points are then projected with the perspective transformation matrices before and after correction to obtain their image coordinates. From these four pairs of coordinates, the image coordinate mapping before and after the perspective transformation can be solved to realize ellipse correction.

Through camera position calibration, the rotation matrix \(R_C\) and translation matrix \(T_C\) are obtained.

The general perspective transformation is shown as follows

where u and v are the pixel coordinates of the original image; x, y, and z are the homogeneous coordinates after the perspective transformation, and x/z and y/z are the resulting two-dimensional coordinates; \(\left[ \begin{matrix} a_{11}&{} a_{12}&{} a_{13}\\ a_{21}&{} a_{22}&{} a_{23}\\ a_{31}&{} a_{32}&{} a_{33}\\ \end{matrix} \right] \) is the perspective transformation matrix, in which \(\left[ \begin{matrix} a_{11}&{} a_{12}\\ a_{21}&{} a_{22}\\ \end{matrix} \right] \) represents the linear image transformation and \(\left[ \begin{matrix} a_{31}&{} a_{32}\\ \end{matrix} \right] \) the image translation.

Four points in the world coordinate system are selected, as listed in Table 7. There is one pose transformation matrix before ellipse correction and one after it. They differ only in the rotation part: after correction, the rotation matrix is the identity matrix, which means that no rotation occurs and the image plane is parallel to the object plane. Before correction, the pose transformation matrix \(M_b\) is obtained by calibration, i.e.,

The corrected matrix \(M_a\) is given in (9).

The coordinates of the points in Table 7 are multiplied by \(M_b\) and \(M_a\) to obtain their pixel coordinates before and after correction. The inverse perspective transformation matrix M is then solved from these four pairs of pixel coordinates, i.e.,

3.2 Ellipse Correction and Pose Estimation

Ellipse pose estimation requires feature points on the actual 3D model and their counterparts in the 2D image. Applying the inverse perspective transformation matrix to the original image yields an approximately circular contour, whose center corresponds to the center of the 3D model. The ellipse pose is then estimated from the coordinates of the center point and the endpoints.
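The correction matrix of Sect. 3.1 and its application to a contour can be sketched as follows. Assumptions: a pinhole camera with a known intrinsic matrix K, calibration giving x_cam = R_C X + T_C, the corrected view obtained by replacing R_C with the identity, and four points on the plane z = 0. The code uses the column-vector convention, so its H corresponds to the transpose of the row-vector matrix [a_ij] in the text; all names are hypothetical.

```python
import numpy as np

def project(K, R, T, pts3d):
    """Pinhole projection x ~ K (R X + T) of (n, 3) world points; returns (n, 2) pixels."""
    cam = pts3d @ R.T + T
    uvw = cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def _normalise(pts):
    """Hartley normalisation: centroid to the origin, mean distance sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    return (pts - c) * s, T

def homography_dlt(src, dst):
    """3x3 matrix H with dst ~ H [x, y, 1]^T from >= 4 point pairs (direct linear transform)."""
    src_n, ts = _normalise(np.asarray(src, float))
    dst_n, td = _normalise(np.asarray(dst, float))
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(td) @ vt[-1].reshape(3, 3) @ ts    # undo the normalisations
    return H / H[2, 2]

def apply_h(H, pts):
    """Map (n, 2) pixel coordinates through H, dividing by the third homogeneous coordinate."""
    p = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return p[:, :2] / p[:, 2:3]
```

With `H = homography_dlt(project(K, R_C, T_C, pts), project(K, np.eye(3), T_C, pts))`, an ellipse contour detected in the original image would be mapped by `apply_h` to an approximately circular contour, whose center and axis endpoints can then serve as feature points. Since both views see the same plane, the four-point homography is exact for every point on that plane.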

The single-pixel ellipse contour obtained in Table 6 is corrected by inverse perspective transformation. The ellipse features obtained by fitting are shown in Table 8.

The PnP algorithm [11] is used to realize pose estimation. The endpoints of the horizontal and vertical axes and the center of the circle of the circular contour are selected as feature points for pose estimation.

The center coordinates of the circular contour are [821.25, 620.02] and the radius is 378.64. The coordinates of the pixels to be matched are \([821.25,620.02]\), [821.25, 241.38], [821.25, 998.66], [1199.89, 620.02], [442.61, 620.02]. The actual coordinates of the 3D object are \([[0, 0, 0], [0, - 17.5, 0], [0, 17.5, 0], [17.5, 0, 0], [- 17.5, 0, 0]]\).
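Given these five correspondences, the pose can be recovered in several ways. The paper uses a PnP solver (with OpenCV this is typically `cv2.solvePnP`); the self-contained sketch below instead uses homography decomposition for a planar target, a different technique that gives the same pose for exact data. It assumes known intrinsics K and an undistorted image; K and the function names are hypothetical, while the pixel and object coordinates follow the values listed above.

```python
import numpy as np

def circle_feature_points(center, radius_px, radius_mm):
    """Center plus the four axis endpoints, paired with their object coordinates (z = 0)."""
    cx, cy = center
    img = np.array([[cx, cy], [cx, cy - radius_px], [cx, cy + radius_px],
                    [cx + radius_px, cy], [cx - radius_px, cy]])
    obj = np.array([[0, 0, 0], [0, -radius_mm, 0], [0, radius_mm, 0],
                    [radius_mm, 0, 0], [-radius_mm, 0, 0]], float)
    return img, obj

def _normalise(pts):
    """Hartley normalisation: centroid to the origin, mean distance sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    return (pts - c) * s, np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])

def homography_dlt(src, dst):
    """3x3 matrix H with dst ~ H [x, y, 1]^T from >= 4 point pairs."""
    src_n, ts = _normalise(np.asarray(src, float))
    dst_n, td = _normalise(np.asarray(dst, float))
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(td) @ vt[-1].reshape(3, 3) @ ts
    return H / H[2, 2]

def planar_pose(K, img_pts, obj_pts):
    """Rotation R and translation t of a planar target (object z = 0), given intrinsics K."""
    H = homography_dlt(obj_pts[:, :2], img_pts)
    B = np.linalg.solve(K, H)                    # B = s [r1 r2 t] for an unknown scale s
    lam = 1.0 / np.linalg.norm(B[:, 0])
    if lam * B[2, 2] < 0:                        # pick the sign that puts the target in front
        lam = -lam
    r1, r2, t = lam * B[:, 0], lam * B[:, 1], lam * B[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)                  # snap to the nearest proper rotation
    R = U @ np.diag([1.0, 1.0, np.linalg.det(U @ Vt)]) @ Vt
    return R, t
```

With real detections, `circle_feature_points((821.25, 620.02), 378.64, 17.5)` reproduces the five pixel and object coordinates above, which would then be passed to `planar_pose` together with the calibrated K.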

The transformation matrix T between the camera and the static contact is calculated with the solvePnP method:

The rotation vector R is as follows:

By combining the geometric dimensions of the moving and static contacts of the circuit breaker, the deviations in X, Y, and Z directions are obtained, as shown in Table 9.
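PnP solvers usually return the rotation as a rotation vector, from which the rotation matrix and the 4 x 4 camera-to-contact transformation follow by Rodrigues' formula (OpenCV provides this conversion as `cv2.Rodrigues`). A minimal NumPy sketch with hypothetical function names:

```python
import numpy as np

def rodrigues(rvec):
    """Rotation matrix from a rotation vector (unit axis times angle in radians)."""
    rvec = np.asarray(rvec, float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)                      # no rotation
    k = rvec / theta
    kx = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])  # cross-product matrix
    return np.eye(3) + np.sin(theta) * kx + (1.0 - np.cos(theta)) * kx @ kx

def pose_matrix(rvec, tvec):
    """Homogeneous 4x4 transformation [R t; 0 0 0 1] from a rotation and translation vector."""
    T = np.eye(4)
    T[:3, :3] = rodrigues(rvec)
    T[:3, 3] = tvec
    return T
```

The translation part of this matrix, expressed in the contact's millimetre units, is what the X, Y, and Z deviations are read from once the contact geometry is taken into account.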

Table 9 shows that the errors in the X, Y, and Z directions are all below 0.2 mm, which is consistent with the theoretical state at the beginning of meshing.

4 Experimental Results and Analysis

A switchgear experimental platform, shown in Fig. 7, is built to verify the accuracy of meshing-state detection. The actual depth is obtained by scratch measurement: resin is smeared on the static contact, and the scratch left after meshing is measured and taken as the actual depth. Since there is no accurate reference method for the center-alignment deviation, it is verified through repeated positioning accuracy instead. Because the alignment deviation generally does not change as the meshing depth increases, the moving-contact handcart is pushed in repeatedly, and the positioning accuracy of the alignment measurement is evaluated across these changing states.

The experimental results are listed in Table 10. The error between the actual and measured meshing depths is within \(\pm 0.5\) mm, which satisfies the required accuracy of \(\pm 2.5\) mm.

where \(A_d\) is the actual meshing depth and \(M_d\) is the measured meshing depth.

The repeated positioning accuracy is used to verify the accuracy of the center-alignment measurement. As shown in Table 11, the repeated positioning accuracy of the alignment measurement is about \(\pm 0.3\) mm, which is consistent with the actual variation of alignment in the experimental setup. These results demonstrate the effectiveness of the method for measuring the degree of center alignment.

5 Conclusion

To address target contour extraction and feature point matching in elliptical ring pose estimation, this paper proposes a contour extraction method based on the Snake model and an ellipse correction method based on inverse perspective transformation. Compared with traditional binary contour extraction methods, the contour extracted by this method is closer to the true contour of the elliptical ring. The ellipse correction based on inverse perspective transformation enables high-accuracy pose estimation from the ellipse contour. Experiments on the dynamic and static contacts of switchgear verify the validity and application prospects of the method.

References

[1] Chen, H., Sun, Y., Gong, Y., Huang, L.: Visual measurement and data analysis of pool boiling on silicon surfaces. J. Chem. Ind. Eng. 90, 1309–1317 (2019)

[2] Chen, P., Huangfu, D., Luo, Z., Li, D.: Visualization analysis of learning attention based on single-image PnP head posture estimation. J. Commun. 39, 141–150 (2018)

[3] Ghosal, S., Mehrotra, R.: Orthogonal moment operators for subpixel edge detection. Pattern Recogn. 26(2), 295–306 (1993)

[4] Hsia, C.H., Lin, T.Y., Chiang, J.S.: An adaptive binarization method for cost-efficient document image system in wavelet domain. J. Imaging Sci. Technol. 64(3), 30401-1–30401-14 (2020)

[5] Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vision 1(4), 321–331 (1988)

[6] Lee, D.H., Chen, P.Y., Yang, F.J., Weng, W.T.: High-efficient low-cost VLSI implementation for canny edge detection. J. Inf. Sci. Eng. 36(3), 535–546 (2020)

[7] Liu, J., Hou, S., Zhang, K., Yan, X., et al.: Vehicle distance measurement with implementation of vehicle attitude angle estimation and inverse perspective mapping based on monocular vision. Trans. Chin. Soc. Agric. Eng. 34(13), 70–76 (2018)

[8] Lyvers, E.P., Mitchell, O.R., Akey, M.L., Reeves, A.P.: Subpixel measurements using a moment-based edge operator. IEEE Trans. Pattern Anal. Mach. Intell. 11(12), 1293–1309 (1989)

[9] Shi, J., Chen, G., Shen, X.: Visual measurement system for three dimensional rotation attitudes of the air float turntable. Optik 222, 165229 (2020)

[10] Song, L., Chen, C., Chen, Z., Tan, M., Li, D.: Detection and recognition of ring coded markers. Opt. Precis. Eng. 21(12), 3239–3247 (2013)

[11] Tsai, C.Y., Hsu, K.J., Nisar, H.: Efficient model-based object pose estimation based on multi-template tracking and PnP algorithms. Algorithms 11(8), 122 (2018)

Copyright information

© 2022 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Qian, C., Wang, G., Zhang, J., Wang, R., Li, P. (2022). Pose Estimation Based on Snake Model and Inverse Perspective Transform for Elliptical Ring Monocular Vision. In: Pan, L., Cui, Z., Cai, J., Li, L. (eds) Bio-Inspired Computing: Theories and Applications. BIC-TA 2021. Communications in Computer and Information Science, vol 1566. Springer, Singapore. https://doi.org/10.1007/978-981-19-1253-5_8

DOI: https://doi.org/10.1007/978-981-19-1253-5_8

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-1252-8

Online ISBN: 978-981-19-1253-5

eBook Packages: Computer Science, Computer Science (R0)