Abstract

The rapid development of software has brought unprecedented challenges for software security. Traditional vulnerability mining methods are difficult to apply to large-scale software systems because they rely on manual inspection and suffer from low efficiency, high false-positive rates and high false-negative rates. Recent research has applied deep learning models to vulnerability mining and has made good progress in the field. In this paper, we analyze the deep learning framework applied to vulnerability mining and summarize its overall workflow and techniques. We then give a detailed analysis of five feature extraction methods for vulnerability mining: the sequence characterization-based method, the abstract syntax tree-based method, the graph-based method, the text-based method and the mixed characterization-based method. In addition, we summarize their advantages and disadvantages from the perspectives of single and mixed feature extraction. Finally, we point out future research trends and prospects.

This work was supported by the Key Research and Development Science and Technology of Hainan Province (ZDYF202012), the National Key Research and Development Program of China (2018YFB0804701), and the National Natural Science Foundation of China (U1836210).

1 Introduction

In recent years, with the rapid development of the Internet, software has come to play an increasingly influential role. However, while modern technology brings convenience to people’s life and work, its spread has also caused increasingly complex security problems [1]. When software is developed in modules, a large number of security vulnerabilities can be introduced. Security vulnerabilities threaten personal and property security and may cause serious consequences such as information disclosure [4]. Even experienced programmers cannot guarantee absolute security during software development. Vulnerability mining itself involves many aspects that need to be considered and studied. Therefore, how to effectively extract vulnerability feature information is a topic worthy of in-depth research and discussion.

Compared with other technologies, deep learning performs better in vulnerability mining [6]. Although the application of deep learning to vulnerability mining has produced a number of representative results, the field is not yet mature. Existing research has analyzed the application of deep learning to security vulnerability mining in depth. By summarizing the existing results on deep learning-based software vulnerability mining [3], we find that there is still a long way to go before automatic and intelligent vulnerability mining is realized, owing to the wide variety of vulnerabilities [8]. It is therefore imperative to improve the design of software vulnerability detection methods. Vulnerability mining models based on mixed representations are applied in the design of such methods, with the aim of fundamentally improving the efficiency of software vulnerability detection.

The rest of this paper is organized as follows. The second section briefly introduces the general framework of deep learning applied to vulnerability mining. The third section reviews and analyzes the existing feature extraction methods. The fourth section summarizes the above work and discusses its prospects. The main contributions of this paper are as follows: we summarize the general framework of deep learning applied to vulnerability mining, classify and describe feature extraction methods, and look ahead to future directions for building model frameworks.

2 Relevant Knowledge

In this section, we briefly introduce the general framework of deep learning-based vulnerability mining. This framework consists of three phases: data collecting, the learning stage and the detection phase [2]. The framework of the deep learning-based vulnerability mining process is illustrated in Fig. 1. Next, we describe these three phases in detail.

2.1 Data Collecting

A good deep learning model needs a large number of training samples. When the collected training data are insufficient, the resulting model tends to overfit and does not generalize to other data samples [24]. In existing work, the sample objects collected for different application scenarios and learning tasks include binary programs, PDF files, C/C++ source code, IoT and others. The ways of collecting these data vary, for example generation by fuzz testing. For many file formats such as DOC, PDF and SWF, a common approach is to obtain the test input set with a web crawler [14]. At this stage, we need to collect a large amount of vulnerability data, most of which comes from major open-source websites [21].

2.2 Learning Stage

Most of the collected data have problems of one kind or another and cannot be used directly, so the data must be processed and expressed as vector input to ensure effective vulnerability detection. Generally speaking, the learning stage consists of three parts: data pre-processing, data representation and model learning. Each is described below.

Data Pre-processing. Data pre-processing refers to the processing applied to data before the main processing. Data collected in the real world are generally incomplete or inconsistent [13]; they cannot be used directly and easily lead to unsatisfactory mining results. Pre-processing can be divided into three parts: data cleaning, data integration and data reduction [15].

Data cleaning fills in missing values, smooths noisy data and corrects erroneous data. Data integration [24] refers to the process of combining and storing data from multiple data sources to establish a data warehouse. Data reduction [18] obtains a reduced representation of the data set by using data reduction techniques.
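The three pre-processing steps can be illustrated with a minimal sketch. The record fields (`id`, `code`, `loc`, `label`), the two toy sources and the choice of median filling are assumptions made for illustration only and do not come from the surveyed works.

```python
from statistics import median

def integrate(*sources):
    """Data integration: merge several sources, keeping one record per sample id."""
    merged = {}
    for source in sources:
        for record in source:
            merged.setdefault(record["id"], record)
    return list(merged.values())

def clean(records):
    """Data cleaning: drop records without code, fill missing 'loc' with the median."""
    with_code = [r for r in records if r.get("code")]
    known = [r["loc"] for r in with_code if r.get("loc") is not None]
    fill = median(known) if known else 0
    return [
        {**r, "loc": r["loc"] if r.get("loc") is not None else fill}
        for r in with_code
    ]

def reduce_fields(records, keep=("id", "code", "label")):
    """Data reduction: keep only the fields that the model will consume."""
    return [{k: r[k] for k in keep if k in r} for r in records]

# Hypothetical toy sources; label 1 marks a vulnerable sample.
source_a = [
    {"id": 1, "code": "strcpy(buf, src);", "loc": 1, "label": 1},
    {"id": 2, "code": "", "loc": 3, "label": 0},
]
source_b = [
    {"id": 1, "code": "strcpy(buf, src);", "loc": 1, "label": 1},
    {"id": 3, "code": "strncpy(buf, src, n);", "loc": None, "label": 0},
]

cleaned = clean(integrate(source_a, source_b))
dataset = reduce_fields(cleaned)
```

Here the duplicate of sample 1 is merged during integration, the empty sample 2 is dropped during cleaning, and the `loc` field is discarded during reduction.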

Data Representation. Security researchers investigate different aspects of security vulnerabilities and use different methods to build models, but feature extraction is difficult because of the diversity of the data. In this paper, we divide existing data representation methods into five categories. We review these five methods and briefly summarize their advantages and disadvantages in Sect. 3. This is the key issue discussed in this paper.

Model Learning. A good learning model is an essential factor in vulnerability mining. By surveying the existing literature on deep learning-based software vulnerability mining, we find that most works propose new vulnerability mining models by improving the data representation. They mainly focus on classification based on deep feature representations.

2.3 Detection Phase

The detection phase is similar to data pre-processing and data representation. First, we abstract the extracted data representation module and determine the key points of software vulnerability detection. Then we extract these key points and process the software vulnerability detection data. We also eliminate the detection data irrelevant to the key points and train the model to express the characteristics of software vulnerabilities quantitatively. Finally, the results are fed in to obtain the vulnerability mining model. In this way, software vulnerability detection based on the hybrid deep learning model is realized.

3 Data Representation Methods

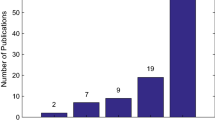

In this section, we give a detailed analysis of data representation methods, including the sequence characterization-based method, the abstract syntax tree-based method, the graph-based method, the text-based method and the mixed characterization-based method. Moreover, we divide these methods into two categories: single feature extraction methods and mixed feature extraction methods.

3.1 Single Feature Extraction Model

Work [28] describes the process of identifying outliers during data preprocessing. However, researchers found that the resulting data still have defects, so more accurate feature extraction methods are needed for more accurate classification.

Sequence Characterization-Based Method. This method is mostly based on lexical analysis of source code or binary files. In work [23], library API function calls are divided into forward and backward calls, and one or more slices are generated for each. However, only part of the code can be checked, and the exact location of the vulnerability cannot be determined. In work [22], a deep neural network with rectified linear units is trained using stochastic gradient descent and batch normalization to predict vulnerable software components. In addition, a statistical feature selection algorithm is used to reduce the feature and search space. The evaluation results show that the proposed technique can predict vulnerable classes with high precision and recall.
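As a minimal sketch of sequence characterization, the code below performs a simple lexical analysis of a code fragment and maps the resulting tokens to a fixed-length sequence of integer ids. The regular expression, the `<pad>` and `<unk>` entries and the sequence length are illustrative assumptions; the cited works use their own slicing and embedding schemes.

```python
import re

# Identifiers, integer literals, a few two-character operators, then any other symbol.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|==|!=|<=|>=|&&|\|\||[^\s\w]")

def tokenize(code):
    """Lexical analysis: split a code fragment into a list of tokens."""
    return TOKEN_RE.findall(code)

def build_vocab(token_lists):
    """Assign an integer id to every token seen in the training fragments."""
    vocab = {"<pad>": 0, "<unk>": 1}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode(tokens, vocab, length):
    """Map tokens to ids, then truncate or pad to a fixed length."""
    ids = [vocab.get(t, vocab["<unk>"]) for t in tokens][:length]
    return ids + [vocab["<pad>"]] * (length - len(ids))

snippet = "strcpy(buf, src);"
tokens = tokenize(snippet)
vocab = build_vocab([tokens])
vector = encode(tokens, vocab, length=10)
```

The order of the tokens is preserved in `vector`, which is what allows a sequence model to consume it; tokens not seen when the vocabulary was built are mapped to `<unk>`.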

Abstract Syntax Tree (AST) Method. An AST is a tree representation of the abstract syntactic structure of source code, built during program compilation. A novel neural source code representation based on the abstract syntax tree (ASTNN) is proposed in work [9]. Unlike existing approaches, ASTNN divides the whole AST into a series of small syntax trees. Each small syntax tree is encoded as a vector, and an RNN model is then used to generate the vector representation of the code.
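The idea of dividing an AST into small statement-level trees can be sketched with Python's standard `ast` module. This is only an illustration under stated assumptions: Python source stands in for the languages handled in work [9], the helper names are hypothetical, and each small tree is flattened to a list of node-type names rather than encoded by a neural network.

```python
import ast

def statement_trees(source):
    """Split the AST of `source` into one small subtree per statement."""
    tree = ast.parse(source)
    return [node for node in ast.walk(tree) if isinstance(node, ast.stmt)]

def node_types(node, is_root=True):
    """Node-type names of one statement, not descending into nested statements."""
    if isinstance(node, ast.stmt) and not is_root:
        return []
    names = [type(node).__name__]
    for child in ast.iter_child_nodes(node):
        names.extend(node_types(child, is_root=False))
    return names

source = """
def copy(dst, src):
    for i in range(len(src)):
        dst[i] = src[i]
    return dst
"""

trees = statement_trees(source)
sequences = [node_types(t) for t in trees]
return_stmt = next(t for t in trees if isinstance(t, ast.Return))
```

Each entry of `sequences` describes one statement on its own, so a sequence model could then be run over the statements in order instead of over one very large tree.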

Graph-Based Method. This method mainly mines security vulnerabilities from program source code or binary files [19]. Work [5] attempts to represent the program as a graph. It uses the syntactic and semantic information carried by the edges of the program dependence graph (PDG) and uses a graph neural network (GNN) to build a vulnerability mining model. A comparison with models that use less structured program representations shows the advantage of modeling known structure. Work [7] expresses the program structure, syntax and semantics in the form of a graph and analyzes the corresponding structure of the program on this basis.
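To make the graph representation concrete, the following sketch extracts data-dependence edges (one of the edge types found in a PDG) from straight-line code. It is a simplification under stated assumptions: Python source is used as a stand-in, control flow and control-dependence edges are ignored, and the function name is hypothetical.

```python
import ast

def data_dependence_edges(source):
    """Return (definition_line, use_line, variable) edges for straight-line code."""
    last_def = {}   # variable name -> line of its most recent assignment
    edges = []
    for stmt in ast.parse(source).body:
        names = [n for n in ast.walk(stmt) if isinstance(n, ast.Name)]
        # Uses are resolved first, so `x = x + 1` depends on the earlier definition.
        for n in names:
            if isinstance(n.ctx, ast.Load) and n.id in last_def:
                edges.append((last_def[n.id], stmt.lineno, n.id))
        for n in names:
            if isinstance(n.ctx, ast.Store):
                last_def[n.id] = stmt.lineno
    return edges

source = "size = read()\nbuf = alloc(size)\ncopy(buf, data, size)\n"
edges = data_dependence_edges(source)
```

The resulting edge list can serve as the adjacency information of a graph whose nodes are statements, which is the kind of input a graph neural network operates on.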

In work [29], improvements are mainly made in facial image feature extraction. After preprocessing in the HSV color space, hierarchical MAX (HMAX) is used to extract features, which yields more features than previous work. In work [30], a new local feature descriptor is proposed based on the well-known Hessian-affine feature extraction algorithm. The method is applied to automatic remote sensing image matching; it can resist local distortion and greatly improves robustness. Work [31] mainly studies feature extraction for mass classification. Based on the Support Vector Machine (SVM), an SVM-RFE with a filter is constructed for the experiments and achieves good results.

Text-Based Method. This method extracts the main information from text content, vectorizes it and converts it into data that can be used directly [20]. Text mining combined with deep learning has been used for vulnerability mining, and deep learning applied to program analysis has achieved good detection performance. Work [5] proposes a coding criterion for constructing program vector representations and builds a word-frequency statistical model to describe the disclosure mode of Java source files. In the program classification task, they further feed the representation into a deep neural network and obtain higher accuracy than “shallow” models (such as logistic regression and SVM). However, they extract only coarse syntactic and semantic information from the program source code, which limits the performance of the vulnerability mining model.
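A word-frequency representation of the kind described here can be sketched as follows. The two code fragments and the word pattern are assumptions chosen for illustration, not the model of work [5].

```python
import re
from collections import Counter

def term_frequencies(text):
    """Count how often each word-like token occurs in a piece of text."""
    return Counter(re.findall(r"[A-Za-z_]\w*", text.lower()))

def vectorize(text, vocabulary):
    """Turn a text into a vector of counts over a fixed vocabulary."""
    counts = term_frequencies(text)
    return [counts[word] for word in vocabulary]

# Hypothetical code fragments treated as plain text.
docs = [
    "char buf[8]; strcpy(buf, input);",
    "int n = strlen(input);",
]
vocabulary = sorted(set().union(*(term_frequencies(d) for d in docs)))
vectors = [vectorize(d, vocabulary) for d in docs]
```

Because only counts are kept, the order of the words and the surrounding structure are lost, which illustrates why this representation captures syntax and semantics only coarsely.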

In work [26], the authors propose using an attention mechanism to adaptively perceive context information and extract text features that reflect readers’ emotional changes during reading. A convolutional gated recurrent neural network is then used to predict readers’ emotions. Work [32] proposes a feature extraction method based on Bag-of-Matrix-Word (BOMW). It extracts matrix words from a matrix dictionary and counts their frequency to obtain the middle-layer features of the magnetic flux leakage (MFL) data matrices, which improves both the effectiveness of the features and the recognition speed.

3.2 Mixed Characterization-Based Method

In recent years, data extraction has increasingly moved toward the mixed characterization-based model. This model combines at least two of the feature representation methods mentioned above and achieves higher performance than the four single feature representation methods. Work [17] shows that deep learning models built with CNN and with LSTM achieve higher prediction accuracy than the traditional method in its experiments, but a certain gap remains compared with the mixed characterization-based model built with CNN-LSTM. Work [11] uses the intermediate representation of the Low Level Virtual Machine (LLVM) together with a CNN-RNN to extract the key information of the source code, and the results are better than those of previous experiments. In work [7], the features of the candidate set samples are abstracted by combining CNN and NLP for vulnerability mining. How to integrate multiple features to achieve automated and fine-grained vulnerability mining is therefore a research topic worth exploring.

In work [25], a two-channel network prediction method is proposed. The processed data are fed into two parallel convolutional neural networks for feature extraction, and a combination of CNN and LSTM is used for prediction. The results are consistent with work [17]: the mixed characterization-based model performs better. Work [27] combines the ideas of work [25] and work [26], using the attention mechanism, CNN and LSTM to build a model for photovoltaic power prediction.
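One simple way to combine two representations is to concatenate their feature vectors, sketched below. This is an assumption-laden illustration of feature-level fusion only: Python source is used as a stand-in, both views are plain counts, and the function names are hypothetical. The cited works instead combine neural components such as CNN and LSTM.

```python
import ast
import io
import tokenize
from collections import Counter

def token_features(source, vocabulary):
    """Lexical view: how often each selected token occurs in the source."""
    toks = tokenize.generate_tokens(io.StringIO(source).readline)
    counts = Counter(t.string for t in toks)
    return [counts[word] for word in vocabulary]

def ast_features(source, node_types):
    """Structural view: how often each selected AST node type occurs."""
    counts = Counter(type(n).__name__ for n in ast.walk(ast.parse(source)))
    return [counts[name] for name in node_types]

def mixed_features(source, vocabulary, node_types):
    """Mixed representation: concatenate the lexical and structural views."""
    return token_features(source, vocabulary) + ast_features(source, node_types)

source = "data = input()\nresult = eval(data)\n"
features = mixed_features(source, ["eval", "input"], ["Call", "Assign"])
```

The first two entries of `features` come from the token view and the last two from the AST view, so a downstream classifier sees information that neither view provides on its own.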

3.3 Method Comparison

Table 1 compares the single feature extraction methods with the mixed characterization-based method. The sequence characterization-based method mainly extracts features such as identifiers and operators. In practice, however, because of the large amount of code, only part of the code can be checked, and the extraction effect is imperfect. The abstract syntax tree-based method reads the source code, merges characters into tokens according to the lexical rules, removes whitespace, comments and the like, then performs syntax analysis, converts the analyzed token array into a tree and verifies the syntax. In this way, deeper information can be obtained by traversing the nodes. However, the process involves the whole code base, so detection is slow, and some identifiers are deleted when the tree is generated, so the tree does not completely match the source code. The graph-based method extracts features by generating a graph structure; it mainly focuses on the logical relationships between data and preserves the integrity of the source code to a certain extent, but detection is slow. The text-based method extracts keywords from the text to stand in for the text information, but it captures the semantics only roughly and easily ignores the context structure. In contrast, the mixed characterization-based method combines two or more of the above methods, which can compensate for each other's shortcomings and make the extracted information richer and more complete. How to integrate multiple methods is a problem worthy of further research.

4 Discussion

Traditional machine learning techniques need vulnerability features to be extracted manually and then converted into vectors as the input of the machine learning algorithm. Such techniques cannot automatically extract features from the original data and depend heavily on expert knowledge and manual work [21]. For the future combination of deep learning with vulnerability mining, we think two aspects deserve in-depth study.

One is the efficiency and accuracy of vulnerability mining. The process of vulnerability mining depends on computation, which is closely related to software scale, hardware and analysis techniques. During research, the corresponding measures should be adjusted according to these factors to better meet the needs and improve efficiency. The other is the automation and intelligence of vulnerability mining. At present, many studies still depend on experts to solve problems. Automatic vulnerability mining is a key point and difficulty of current research, and it plays an important role in network attack and defense.

5 Conclusion

In this paper, we review some representative deep learning-based works on vulnerability mining. These methods can generally be divided into five categories: the sequence characterization-based method, the abstract syntax tree-based method, the graph-based method, the text-based method and the mixed characterization-based method. We also summarize their advantages and disadvantages from the perspectives of single and mixed feature extraction. Compared with traditional vulnerability mining approaches, deep learning-based methods can realize automatic vulnerability detection without requiring security experts to pre-define mining rules.

Therefore, we believe that for different types of problems, a vulnerability mining model should be constructed in line with the actual situation. The mixed characterization-based model can extract data information to the greatest extent. In the future, incorporating deep learning algorithms into the process of vulnerability mining is an inevitable trend. Automatic and intelligent vulnerability extraction is of far-reaching significance to research in this area, and increasingly accurate information extraction is its premise and foundation. Vulnerability mining based on deep learning is a topic worthy of in-depth discussion.

References

1. Zhao, H., Li, X., Tan, J., Gai, K.: Smart contract security issues and research status. Inf. Technol. Netw. Secur. 40(05), 1–6 (2021)

2. Gu, M., et al.: Software secure vulnerability mining based on deep learning. Comput. Res. Dev. 58(10), 2073–2095 (2021)

3. Li, Y., Huang, C., Wang, Z., Yuan, L., Wang, X.: Overview of software vulnerability mining methods based on machine learning. J. Softw. 31(07), 2040–2061 (2020)

4. Tao, Y., Jia, X., Wu, Y.: A research method of industrial Internet security vulnerabilities based on knowledge map. Inf. Technol. Netw. Secur. 39(01), 6–13 (2020)

5. Peng, H., Mou, L., Li, G., et al.: Building program vector representations for deep learning. In: 8th International Conference on Knowledge Science, Engineering and Management, pp. 547–553 (2015)

6. He, Y., Li, B.: Learning rate strategy of a combined deep learning model. J. Autom. 42(06), 953–958 (2016)

7. Allamanis, M., Brockschmidt, M., Khademi, M.: Learning to represent programs with graphs. In: International Conference on Learning Representations, pp. 1–17 (2017)

8. Wang, L., Li, X., Wang, R., et al.: PreNNsem: A heterogeneous ensemble learning framework for vulnerability detection in software. Appl. Sci. 10(22), 7954 (2020)

9. Zhang, J., Wang, X., Zhang, H., et al.: A novel neural source code representation based on abstract syntax tree. In: 41st International Conference on Software Engineering, pp. 783–794 (2019)

10. Wang, H., Li, Han., Li, H.: Research on ontology relation extraction method in the field of civil aviation emergencies. Comput. Sci. Explor. 04(02), 285–293 (2020)

11. Li, X., Wang, L., Xin, Y., et al.: Automated software vulnerability detection based on hybrid neural network. Appl. Sci. 11(07), 3201 (2021)

12. Yang, H., Shen, S., Xiong, J., et al.: Modulation recognition of underwater acoustic communication signals based on denoting and deep sparse autoencoder. In: INTER-NOISE and NOISE-CON Congress and Conference Proceedings, pp. 5506–5511 (2016)

13. Wang, X.: Application of hierarchical clustering based on matrix transformation in gene expression data analysis. Comput. CD Softw. Appl. 15(24), 46–47 (2012)

14. Zhu, X.: Deep learning analysis based on data collection. Jun. Mid. Sch. World: Jun. Mid. Sch. Teach. Res. 04, 66 (2021)

15. Liu, M., Wang, X., Huang, Y.: Data preprocessing in data mining. Comput. Sci. 04, 56–59 (2000)

16. Mohamed, A., Sainath, T., Dahl, G., et al.: Deep belief network for telephone recognition using discriminant features. In: IEEE International Conference on Acoustics, pp. 5060–5063 (2015)

17. Wu, F., Wang, J., Liu, J., et al.: Vulnerability detection with deep learning. In: 3rd IEEE International Conference on Computer and Communications, pp. 1298–1302 (2017)

18. Yu, X., Chen, W., Chen, R.: Implementation of an approximate mining method for data protocol. J. Huaqiao Univ. (Natural Science Edition) 29(03), 370–374 (2008)

19. Jaafor, O., Birregah, B.: Multi-layered graph-based model for social engineering vulnerability assessment. In: International Conference on Advances in Social Networks Analysis and Mining, pp. 1480–1488 (2015)

20. Gao, R., Zhou, C., Zhu, R.: Research on vulnerability mining technology of network application program. Mod. Electron. Tech. 41(03), 15–19 (2018)

21. Lin, Z., Xiang, L., Kuang, X.: Machine learning in vulnerability databases. In: 10th International Symposium on Computational Intelligence and Design (ISCID), pp. 108–113 (2018)

22. Pang, Y., Xue, X., Wang, H.: Predicting vulnerable software components through deep neural network. In: 12th International Conference on Advanced Computational Intelligence (ICACI), pp. 6–10 (2017)

23. Zou, Q., et al.: From automation to intelligence: progress in software vulnerability mining technology. J. Tsinghua Univ. (Natural Science Edition) 58(12), 1079–1094 (2018)

24. Li, Z., Zou, D., Xu, S., et al.: VulDeePecker: A deep learning-based system for vulnerability detection. In: 25th Annual Network and Distributed System Security Symposium (NDSS), pp. 1–15 (2018)

25. Jian, X., Gu, H., Wang, R.: A short-term photovoltaic power prediction model based on dual-channel CNN and LSTM. Electr. Power Sci. Eng. 35(5), 7–11 (2019)

26. Zhang, Q., Peng, Z.: Attention-based convolutional gated recurrent neural network for reader’s emotion prediction. Comput. Eng. Appl. 54(13), 168–174 (2018)

27. Liu, Q., Hu, Q., Yang, L., Zhou, H.: Research on deep learning photovoltaic power generation model based on time series. Power Syst. Protect. Control 49(19), 87–98 (2021)

28. Jiang, L., Liu, J., Zhang, H.: Discrimination and compensation of abnormal values of magnetic flux leakage in oil pipeline based on BP neural network. In: Chinese Control and Decision Conference (CCDC), pp. 3714–3718 (2017)

29. Pisal, A., Sor, R., Kinage, K.: Facial feature extraction using hierarchical MAX (HMAX) method. In: International Conference on Computing, Communication, Control and Automation (ICCUBEA), pp. 1–5 (2017)

30. Sedaghat, A., Ebadi, H.: Remote sensing image matching based on adaptive binning SIFT descriptor. IEEE Trans. Geosci. Remote Sens. 53(10), 5283–5293 (2015)

31. Liu, X., Tang, J.: Mass classification in mammograms using selected geometry and texture features. New SVM-Bas. Feature Select. Meth. 8(3), 910–920 (2014)

32. Jiang, L., Liu, J., Zhang, H., Xu, K.: MFL data feature extraction based on KPCA-BOMW model. In: 31st Chinese Control and Decision Conference (CCDC), pp. 1025–1029 (2019)

© 2022 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, Y. et al. (2022). A Review of Data Representation Methods for Vulnerability Mining Using Deep Learning. In: Cao, C., Zhang, Y., Hong, Y., Wang, D. (eds) Frontiers in Cyber Security. FCS 2021. Communications in Computer and Information Science, vol 1558. Springer, Singapore. https://doi.org/10.1007/978-981-19-0523-0_22

DOI: https://doi.org/10.1007/978-981-19-0523-0_22

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-0522-3

Online ISBN: 978-981-19-0523-0

eBook Packages: Computer Science, Computer Science (R0)