Abstract

Convolutional neural networks (CNNs) are widely used in computer vision and image recognition, and their structures are becoming increasingly complex. This complexity poses performance and storage-capacity challenges for hardware implementation. To address these challenges, we propose a novel 3D array architecture for accelerating CNNs. The proposed architecture has several benefits. Firstly, a multilevel cache strategy is employed to improve data reuse, thus reducing the frequency of access to external memory. Secondly, performance and throughput are balanced among the 3D array nodes by novel workload and weight partitioning schemes. Thirdly, computing and transmission are performed simultaneously, resulting in higher parallelism and lower hardware storage requirements. Finally, an efficient data mapping strategy is proposed for better scalability of the entire system. The experimental results show that the proposed 3D array architecture can effectively improve the overall computing performance of the system.


1 Introduction

In recent years, with the continuous development of artificial intelligence and deep learning, convolutional neural networks (CNNs) have received great attention and extensive research because of their excellent performance in a wide range of applications such as computer vision, image recognition, and classification. However, because complicated application scenarios demand higher precision, CNNs have become progressively deeper and their structures more and more complex [1,2,3]. Correspondingly, hardware implementations of CNNs consume more power and area. It is therefore desirable to design CNN hardware that achieves both high parallel computing capability and efficient data transmission and storage.

The existing hardware platforms for implementing convolutional neural networks each have their own advantages and disadvantages. The commonly used platforms are the central processing unit (CPU), the application-specific integrated circuit (ASIC), the graphics processing unit (GPU), and the field-programmable gate array (FPGA). Although the cost of a traditional CPU is relatively low, it suffers from low processing speed and high power consumption when running deep learning algorithms. An ASIC accelerates deep learning algorithms with customized hardware, but it has a long design cycle, high cost, and low flexibility. A GPU accelerates deep learning algorithms through parallel computing, but its power consumption is high. By contrast, an FPGA can accelerate deep learning algorithms with reasonable power consumption. An FPGA is a reconfigurable chip that offers a tradeoff between flexibility and efficiency, and it is one of the mainstream hardware acceleration platforms for deep learning. Therefore, we study FPGA-based hardware acceleration of convolutional neural networks.

In the past few years, many hardware acceleration approaches have been proposed. The convolution and fully connected layers can be accelerated by mathematical transformation and optimization methods [4,5,6,7,8]. Parallel optimization [9] processes data in parallel according to the data dependences in the network. Loop unrolling [10] determines the utilization of data in the on-chip cache by changing how data is partitioned and mapped into that cache. Loop tiling [11] improves the computation-to-communication ratio and reduces off-chip memory access, thus reducing the overall power consumption of the chip. Loop pipelining [12,13,14] uses a deeper pipeline to increase execution speed. More recently, to cope with the growing data volume of large-scale deep CNNs, increasing attention [15,16,17] has been paid to acceleration based on FPGA clusters. Although these methods accelerate the algorithm, several hardware design difficulties remain, such as efficient parallel computing and efficient data storage and access schemes.

In this paper, we propose a novel 3D-CNN-array architecture to implement a high-performance hardware acceleration platform. By partitioning data across different array nodes, the 3D-CNN-array can accelerate every individual CNN convolution layer. In addition, it can compute multiple CNN convolution layers simultaneously by combining all array nodes of the architecture. Compared with conventional CNN acceleration methods, the cost of the entire 3D-CNN-array architecture is lower. Moreover, the proposed architecture can achieve higher computing speed and a larger total memory size than conventional methods.

2 Background and Preliminaries

This section introduces the multi-dimensional array architecture and the data partition strategies used in parallel models.

2.1 Multi-dimensional Array Architecture

As the number of convolution layers and the complexity of the network structure increase, the amount of computation grows significantly. To improve the speed of inference and training, hardware platforms with multi-dimensional architectures are used to accelerate the algorithm. The commonly used multi-dimensional topologies are shown in Fig. 1.

At present, many researchers focus on conventional architectures to accelerate deep learning algorithms. As networks deepen, conventional hardware platforms are required to deliver higher performance. The number of nodes in one-dimensional and two-dimensional architectures is limited by the transmission bandwidth between nodes. By contrast, a scalable three-dimensional (3D) array can broaden the transmission bandwidth, and it can achieve higher performance by adding nodes according to the requirements of a specific algorithm. When convolution is implemented on a 3D array, the input data is partitioned and sent to the array nodes. Depending on the data partition strategy and the algorithm, the input data can also be broadcast to multiple array nodes.

2.2 Data Partition Strategy Within Parallel Model

Data parallelism and model parallelism are two common parallel models in distributed machine learning systems [18]. In data parallelism (Fig. 2a), every device holds a copy of the same model and different data is assigned to each device. The final result is obtained by combining the partial results of all devices. In model parallelism (Fig. 2b), different devices are responsible for different parts of the network model. For example, different network layers, or different parameters in the same layer, are assigned to different devices.
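
The difference between the two models can be illustrated with a minimal sketch. The helper names `split_data_parallel` and `split_model_parallel` are hypothetical and do not come from the paper; the sketch only shows what each device receives, and it ignores the boundary rows that neighbouring devices would need to exchange for a real convolution.

```python
# Hypothetical helpers illustrating the two partitioning schemes (not the authors' code).

def split_data_parallel(feature_map, n_devices):
    """Split the rows of a feature map across devices; every device keeps all filters."""
    rows = len(feature_map)
    step = (rows + n_devices - 1) // n_devices  # ceiling division
    return [feature_map[i:i + step] for i in range(0, rows, step)]


def split_model_parallel(filters, n_devices):
    """Split the output-channel filters across devices; every device sees the full input."""
    return [filters[d::n_devices] for d in range(n_devices)]


feature_map = [[r * 8 + c for c in range(8)] for r in range(8)]  # 8 x 8 toy input
filters = [f"filter_{k}" for k in range(16)]                      # 16 toy output channels

data_shards = split_data_parallel(feature_map, 4)
model_shards = split_model_parallel(filters, 4)
print([len(s) for s in data_shards])   # rows held by each device
print([len(s) for s in model_shards])  # filters held by each device
```

In the data-parallel case the results of the devices are concatenated along the spatial dimension, whereas in the model-parallel case they are concatenated along the channel dimension.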

3 Our Proposed 3D-CNN-Array

Based on the 3D array architecture and the data partition strategies described in Sect. 2, this section proposes a scalable 3D-CNN-array hardware architecture for accelerating CNNs.

Conventional hardware architectures, such as a single-node CNN architecture, accelerate CNNs by relying on abundant resources such as high computing and storage capability. By contrast, the 3D-CNN-array architecture can accelerate CNNs with resource-constrained devices. The 3D-CNN-array architecture consists of multiple nodes (Fig. 3). Data can only be transmitted between two adjacent nodes. The calculation results of the last 3D-CNN-array layer can be sent to the nodes of the first layer. This end-to-end connection forms a ring-shaped 3D array of size M * N * C. The values of M, N, and C can be adjusted according to the requirements of the algorithm, which makes the architecture scalable.
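
The connectivity described above can be sketched as a small neighbour function. This is only an illustration under stated assumptions: the paper says that only adjacent nodes communicate and that the last layer connects back to the first, but it does not spell out the wiring inside a layer, so the sketch assumes a plain M * N mesh within each layer and a ring along the C (layer) axis. The names `neighbors` and `count_links` are hypothetical.

```python
def neighbors(node, shape):
    """Nodes adjacent to `node` in an M x N x C array whose layer axis C is closed into a ring.

    Assumption: nodes inside one layer form an M x N mesh without wrap-around.
    """
    m, n, c = node
    M, N, C = shape
    result = []
    for dm, dn in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        mm, nn = m + dm, n + dn
        if 0 <= mm < M and 0 <= nn < N:
            result.append((mm, nn, c))
    if C > 1:
        # Previous and next layer; the set removes the duplicate that appears when C == 2.
        for cc in sorted({(c - 1) % C, (c + 1) % C}):
            result.append((m, n, cc))
    return result


def count_links(shape):
    """Number of bidirectional node-to-node links under the wiring assumed above."""
    M, N, C = shape
    nodes = [(m, n, c) for m in range(M) for n in range(N) for c in range(C)]
    return sum(len(neighbors(node, shape)) for node in nodes) // 2


print(neighbors((0, 0, 0), (2, 2, 3)))
print(count_links((2, 2, 3)))
```

Changing `shape` is all that is needed to model a larger or smaller array, which mirrors the adjustable M, N, and C of the topology.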

The CNN data is partitioned and mapped to each array node according to a specific strategy. A high-speed communication module between adjacent nodes accelerates data transmission. The proposed architecture adopts a parallel model that combines data parallelism and model parallelism. Together with the data partition strategy, this yields the scalable 3D-CNN-array architecture.

3.1 Convolution on Single 3D-CNN-Array Node

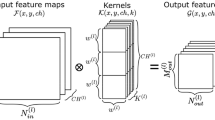

A dedicated computing architecture is designed for each 3D-CNN-array node. It improves the efficiency of convolution through data reuse. The convolution computing architecture designed in this paper is based on the Eyeriss [19,20,21] framework. The details of this architecture are shown in Fig. 4.

Convolution on a single node involves the DDR, memory, and an array of processing elements (PEs). The PE array consists of 192 PEs. The size of the PE array can be adjusted according to the characteristics of the network and the available hardware resources. A single PE contains a buffer module, a DSP module, and a control module. The buffer module stores CNN data and temporary results. The DSP module performs the multiplications and additions of the convolution operation. The control module acts as the controller of the PE.

The PE array is divided into 16 PE blocks to process convolution. Each PE block contains 3 * 3 PEs. To improve data reuse, the input data (weights, feature maps, and partial sums) is rearranged (Fig. 5). Both weights and feature maps are broadcast to every PE block. The same weight is sent to each PE in a row (Fig. 5a), while the feature map is sent to the PEs along the diagonals of the PE block (Fig. 5b). The result of each PE is sent to the adjacent PE for accumulation (Fig. 5c). With all 16 PE blocks combined, convolution is performed by a single 3D-CNN-array node. Similarly, the whole CNN is implemented through the cooperation of multiple 3D-CNN-array nodes. The total memory size of all nodes in the 3D-CNN-array is larger than that of a conventional single high-performance device. In addition, because only a small volume of data is fed to each PE, each PE requires only limited hardware resources. Therefore, the design can meet the performance and storage requirements by combining multiple resource-constrained nodes.
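
The data mapping inside one PE block can be sketched in software. The following is a minimal model of an Eyeriss-style row-stationary mapping, assuming stride 1, no padding, and a single input channel; the function names are hypothetical and the code is an illustration rather than the authors' hardware description. PE row `i` keeps filter row `i`, the PE in row `i` and column `j` receives input row `i + j` (so one input row is shared along a diagonal), and partial sums are accumulated across the PE rows of a column.

```python
def conv1d_row(in_row, w_row):
    """1-D sliding dot product of one input row with one filter row: the work of one PE."""
    k = len(w_row)
    return [sum(in_row[x + t] * w_row[t] for t in range(k))
            for x in range(len(in_row) - k + 1)]


def pe_block_conv(ifmap, weights, out_rows):
    """Compute the first `out_rows` output rows on a (filter rows) x (out_rows) PE block."""
    k = len(weights)
    outputs = []
    for j in range(out_rows):          # PE column j produces output row j
        psum = None
        for i in range(k):             # PE row i holds filter row i (weight reuse along the row)
            # Input row i + j is shared by every PE on the same diagonal.
            partial = conv1d_row(ifmap[i + j], weights[i])
            # Partial sums move to the adjacent PE and are accumulated there.
            psum = partial if psum is None else [a + b for a, b in zip(psum, partial)]
        outputs.append(psum)
    return outputs


ifmap = [[r * 5 + c for c in range(5)] for r in range(5)]  # 5 x 5 toy feature map
weights = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]              # 3 x 3 toy kernel
print(pe_block_conv(ifmap, weights, 3))
```

Because each weight row and each input row is fetched once and then used by several PEs, the number of buffer reads is lower than in a mapping where every PE fetches its own operands.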

To reduce the frequency of access to external memory, two data reuse strategies are designed. Firstly, since the same weight is fed simultaneously to the four PEs in a row of a PE block, those four PEs can share one buffer for weights, which saves on-chip memory resources (Fig. 6). Secondly, every PE has an exclusive buffer to store the data it requires. The multi-level memory built from the shared buffers and the exclusive buffers can hold enough data for convolution. This strategy reduces the overhead caused by frequent access to external memory and thus improves data reuse and performance.

In the designed architecture, the partial results of each node must be transmitted to an adjacent node. An optimization method is designed to balance computing and data transmission: the two are performed simultaneously. While convolution is in progress on a node, the partial results already produced are transmitted to the adjacent node. This method lets a node share its memory with the other three nodes in the same 3D-CNN-array layer, reducing the storage requirement of a single node.
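
The benefit of overlapping computing with transmission can be estimated with a simple two-stage pipeline model. This is a toy timing model under assumptions that are not taken from the paper: the work is split into equal chunks, each chunk needs `t_compute` time units to compute and `t_transfer` time units to send, and enough buffering exists so that computing never stalls. The function names are hypothetical.

```python
def sequential_time(n_chunks, t_compute, t_transfer):
    """Total time when each chunk is computed first and sent afterwards."""
    return n_chunks * (t_compute + t_transfer)


def overlapped_time(n_chunks, t_compute, t_transfer):
    """Total time when chunk i is sent while chunk i + 1 is being computed."""
    if n_chunks == 0:
        return 0
    # Fill the pipeline, run at the pace of the slower stage, then drain the last transfer.
    return t_compute + (n_chunks - 1) * max(t_compute, t_transfer) + t_transfer


print(sequential_time(4, 3, 2))   # 4 chunks, 3 units of computing, 2 units of transfer each
print(overlapped_time(4, 3, 2))
```

In this model the transfer time is hidden almost completely whenever it is shorter than the computing time of a chunk, and a partial result can leave the node as soon as it is produced instead of being stored until the whole layer is finished.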

3.2 Data Partition Strategy

In this paper, the data partition strategy is based on a parallel model that combines data parallelism and model parallelism. The weights and feature maps of each CNN layer are partitioned according to the parallel model in use. In the data-parallel model, data partitioning causes storage and transmission overhead, which decreases the performance of the entire system. Each convolution layer decreases the feature map size and increases the number of channels, which leads to extra data transmission overhead. In addition, the workload imbalance becomes severe. Therefore, this work applies data parallelism first and then switches to model parallelism to improve the overall performance.

The transition of the partition strategy from data parallelism to model parallelism is supported by the high-speed communication module between nodes. The output of the data-parallel stage is stored in four 3D-CNN-array nodes, numbered 1, 2, 3, and 4. The data in each node is divided into four blocks (a, b, c, d) along the channel direction. The transition process is shown in Fig. 7.

The transition is implemented in two steps. Firstly, part of the data, such as data blocks a2 and a4, is transmitted to the adjacent node, which brings the system to an intermediate state. Secondly, the remaining data, such as data block a3, is transmitted. The output of the transition is sent to the corresponding nodes of the adjacent 3D-CNN-array layer. With this data partition strategy, convolution can be performed in four nodes simultaneously, which supports the scalability of the 3D-CNN-array architecture.
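
The two-step transition can be traced with a small bookkeeping sketch. It assumes that the four nodes form a ring in which node 1 is adjacent to nodes 2 and 4 (consistent with blocks a2 and a4 arriving first) and that node 1 collects all a blocks, node 2 all b blocks, and so on. The `OWNER` table and the function names are hypothetical, and the sketch only tracks which blocks have arrived after each step, not the physical route taken by the non-adjacent blocks.

```python
RING = [1, 2, 3, 4]                       # node 1 is adjacent to 2 and 4, and opposite to 3
OWNER = {"a": 1, "b": 2, "c": 3, "d": 4}  # assumed: channel block -> node that needs it


def ring_distance(src, dst):
    """Number of hops between two nodes on the four-node ring."""
    d = abs(src - dst) % 4
    return min(d, 4 - d)


def transition(state):
    """Redistribute blocks in two steps: adjacent transfers first, then the remaining blocks."""
    steps = []
    for wanted_distance in (1, 2):
        new_state = {node: set(blocks) for node, blocks in state.items()}
        for node, blocks in state.items():
            for block in blocks:
                dst = OWNER[block[0]]
                origin = int(block[1])    # the digit in the name records the origin node
                if dst != node and ring_distance(origin, dst) == wanted_distance:
                    new_state[node].discard(block)
                    new_state[dst].add(block)
        state = new_state
        steps.append(state)
    return steps


# After the data-parallel stage, node n holds the blocks a_n, b_n, c_n, d_n.
initial = {n: {f"{ch}{n}" for ch in "abcd"} for n in RING}
intermediate, final = transition(initial)
print(sorted(intermediate[1]))
print(sorted(final[1]))
```

After the second step every node holds one channel block of all four tiles, which is the layout needed for the model-parallel stage.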

3.3 Contribution of the 3D-CNN-Array

The contributions of the proposed 3D-CNN-array architecture are summarized as follows. Firstly, a multilevel cache strategy is employed to improve data reuse, thus reducing the frequency of access to external memory. Secondly, performance and throughput are balanced among the 3D array nodes by novel workload and weight partitioning schemes. Thirdly, computing and transmission are performed simultaneously, resulting in higher parallelism and lower hardware storage requirements. Finally, an efficient data mapping strategy is proposed for better scalability of the entire system. Thanks to this scalability, an appropriate hardware platform can be built according to the specific requirements of an algorithm.

4 Hardware Implementation of Proposed 3D-CNN-Array

The previous sections presented the architecture of the proposed 3D-CNN-array in detail. This section introduces its hardware implementation on FPGAs.

Figure 8(a) shows the FPGA-based neural network acceleration hardware platform. It is a three-dimensional structure of size 2 * 2 * 3. Following the 3D-CNN-array architecture, the results of the third-layer nodes in the FPGA array are sent to the first-layer nodes for further computing, thus forming a ring-shaped three-dimensional FPGA array.

As shown in Fig. 8(b), the feature maps are produced by the data-parallel and model-parallel stages. The first and third layers of the 3D-CNN-array architecture are connected via high-speed communication interfaces. The hardware system consists of 12 FPGA nodes and 24 high-speed optical fiber interfaces.

Given the characteristics of the architecture, the FPGA should meet the following conditions: multiple high-speed communication interfaces, a relatively low price, and sufficient essential resources. The XC7A100T of the Xilinx Artix-7 series is therefore chosen. The development board is shown in Fig. 9. Table 1 lists the main hardware resources of the XC7A100T-2FGG484I FPGA board.

4.1 Implementation of Single Node Module

Improvements in ImageNet-based deep learning models are mostly related to model size: larger and deeper networks generally achieve higher accuracy. As networks become deeper, a model can contain millions of parameters. When floating-point data is used, the required storage space is unaffordable for mobile hardware acceleration systems. In addition, floating-point multiplications and additions can exhaust the DSP resources, and the time consumed by floating-point computing becomes a bottleneck for hardware acceleration. To address this problem, the given CNN algorithm is converted from 32-bit floating-point data to 8-bit data. The conversion mainly applies to the feature maps, weights, and biases of the convolutional layers of the pre-trained network model. It relies on mathematical statistics and arithmetic operations, so the converted model does not need to be retrained. The 8-bit integer data is mapped from the original 32-bit data with little accuracy loss. The accuracy achieved with the converted data can reach 95%. This method achieves higher parallelism with limited DSP resources.
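
The paper does not state which conversion scheme is used, so the following is only a sketch of one common retraining-free option: symmetric linear quantization with a single scale derived from the largest absolute value of the tensor. The function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale (assumed scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.27]       # toy weights, not taken from any real model
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # worst-case rounding error
```

With this scheme the rounding error of each value is bounded by half of the scale, and only the statistics of the pre-trained tensor (here its maximum absolute value) are needed, so no retraining is involved.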

The implementation of a single node is divided into several modules: the system control module, the global buffer module, the DDR control module, and the PE array module. The system control module provides the overall control of the node; its functions include fetching and parsing the instruction set. The global buffer module pre-stores the arranged input data and the results of the convolutional layer operations, and it also contains the communication module. The DDR control module acts as the controller of the DDR. The PE array module is the core of the design. It consists of the weight buffer module, the input buffer module, the PE operation control module, the PE multiplication and addition module, the partial sum accumulation module, and the PE configuration module. It arranges and controls the feature maps, weights, biases, and partial sums, and it updates the input parameters of the convolution operation.

4.2 High Speed Communication Module Between Nodes

To provide high-speed data transmission between nodes, the GTP (gigabit transceiver) high-speed communication interface supported by Artix-7 series FPGAs is adopted. This interface is commonly used for serial data transmission, and its maximum speed can reach 6.6 Gb/s. The high-speed transceivers support a variety of standard protocols. Here, Aurora, an open and free link-layer protocol provided by Xilinx, is used for point-to-point serial data transmission.

Because the internal interface of the protocol is 32 bits wide while the transmitted data is 8 bits wide, four 8-bit values must be combined into one 32-bit word. If the data length is not an integer multiple of four, the data is padded to a multiple of four before conversion. On the receiving side, the 32-bit words are converted back to 8-bit data. The valid signal of the data is used to ensure data integrity and accuracy. The block diagram of the full-duplex communication structure is shown in Fig. 10.
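
The width conversion can be sketched in software as follows. The little-endian byte order inside a word and the use of an explicit length to stand in for the hardware valid signal are assumptions made for illustration; the function names are hypothetical.

```python
def pack_bytes_to_words(data: bytes):
    """Pad to a multiple of four bytes, then combine each group of four into a 32-bit word."""
    valid_len = len(data)
    padded = data + bytes(-valid_len % 4)           # append 0 to 3 zero bytes
    words = [int.from_bytes(padded[i:i + 4], "little")
             for i in range(0, len(padded), 4)]
    return words, valid_len


def unpack_words_to_bytes(words, valid_len):
    """Split 32-bit words back into bytes and drop the padding added by the sender."""
    raw = b"".join(word.to_bytes(4, "little") for word in words)
    return raw[:valid_len]


payload = bytes([1, 2, 3, 4, 5, 6])                 # six 8-bit values, not a multiple of four
words, valid_len = pack_bytes_to_words(payload)
print([hex(w) for w in words])
print(unpack_words_to_bytes(words, valid_len) == payload)
```

The receiver needs to know how many bytes of the last word are valid, which is the role played by the valid signal in the hardware design.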

The communication path includes three modules: the sending module, the Aurora module, and the receiving module. The sending module includes a conversion module and a sending sub-module. The conversion module converts 8-bit data to 32-bit data, and the sending sub-module passes the data to the Aurora module. The receiving module includes a receiving sub-module and a reverse conversion module. The receiving sub-module buffers the data from the Aurora module, and the reverse conversion module converts the data back to the required bit width and sends it to the internal buffer.

5 Experimental Results

To evaluate the performance of the proposed 3D-CNN-array architecture, the Tiny-YOLO convolutional neural network is selected for the experiments. Tiny-YOLO is a simplified version of the YOLO network with 9 convolutional layers. The convolution kernel size of the first eight layers is 3 * 3, while that of the last layer is 1 * 1. The detection principle of Tiny-YOLO is the same as that of YOLO, and its network structure is shown in Fig. 11. The size of the input image is 416 * 416, and the output of the last convolution layer consists of 125 feature maps, each of size 13 * 13.

ModelSim and Vivado are used to evaluate the performance of single Tiny-YOLO layers with a system clock of 200 MHz. In the experiment, multiple FPGAs act as the nodes of the distributed system to accelerate the network. Each FPGA has 240 DSPs for multiplication and addition operations. The size of the PE array is 24 * 8, and the width of the input and output data is 8 bits.

Table 2 shows the PE utilization of each Tiny-YOLO layer. The first layer has 3 input channels, which become 4 after data arrangement, so its PE utilization during operation is 75%. The convolution kernel size of the last layer is 1 * 1; since only the first row of the PE array is used, the PE utilization of the last layer is 33.3%. The PE utilization of the remaining layers is 100%.
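
The two utilization figures quoted above follow from simple ratios, sketched here. The function names are hypothetical, and the default of three PE rows per block is taken from the 3 * 3 PE block described in Sect. 3.1.

```python
def channel_utilization(real_channels, arranged_channels):
    """Share of the arranged input channels that carry real data."""
    return real_channels / arranged_channels


def kernel_row_utilization(kernel_rows, block_rows=3):
    """Share of the PE rows in a block that a kernel with `kernel_rows` rows occupies."""
    return min(kernel_rows, block_rows) / block_rows


print(f"first layer: {channel_utilization(3, 4):.1%}")    # 3 real channels arranged as 4
print(f"last layer:  {kernel_row_utilization(1):.1%}")    # 1 * 1 kernel uses one PE row
print(f"3 * 3 layer: {kernel_row_utilization(3):.1%}")    # 3 * 3 kernel uses all PE rows
```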

Figure 12 shows the calculation time of the Tiny-YOLO network on a single FPGA node and on a 2 * 2 FPGA array. The calculation time covers the interval from data input through the convolution operation on an FPGA, plus the time for ReLU and max pooling. According to the data in Fig. 12, a single FPGA takes nearly 4 times as long as the 2 * 2 FPGA array to process a single convolution layer. The speedup falls slightly short of 4 because of boundary partitioning and padding. These experimental results indicate that the proposed 3D-CNN-array architecture can effectively improve system performance by combining multiple nodes with limited hardware resources.
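
The effect of boundary partitioning can be quantified with a short calculation. This sketch assumes that the feature map is split evenly into square tiles, that the convolution has stride 1, and that each node must hold the extra border rows and columns needed by the kernel (taken from a neighbouring tile or from zero padding). It estimates only the extra input data per node and does not reproduce the timings of Fig. 12; the function name is hypothetical.

```python
def tile_input_size(image_size, tiles_per_side, kernel_size):
    """Input rows (or columns) one node must hold: its share of the output plus the border."""
    tile = image_size // tiles_per_side   # assumes the image divides evenly
    border = kernel_size - 1              # (kernel_size - 1) / 2 on each of the two sides
    return tile + border


size = tile_input_size(416, 2, 3)         # 416 * 416 input, 2 * 2 array, 3 * 3 kernel
overhead = (size / (416 // 2)) ** 2 - 1   # extra input data relative to an ideal quarter
print(size, f"{overhead:.2%}")
```

Under these assumptions each node handles slightly more than one quarter of the input, which is one reason why four nodes do not give a speedup of exactly four.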

Since the FPGA-based implementation of the 3D-CNN-array architecture is still ongoing, convolution cannot yet be performed on the entire 3D FPGA array. Instead, the convolution is simulated on a CPU. Table 3 shows the time consumed on a single CNN layer by the 2D-CNN-array and the 3D-CNN-array, respectively.

As shown in Table 3, the time consumed by the 3D-CNN-array on a single layer is not proportional to that of the 2D-CNN-array. A possible reason is that the 3D array spends less time on computing but more time on data transmission.

6 Future Work

Because implementing the entire 3D-CNN-array is a heavy workload, only part of the architecture has been implemented so far (the 2 * 2 array is complete), and the overall performance evaluation of the 3D architecture is left for future work. Building on the CPU simulation results, we will complete the 3D array and analyze its actual performance.

7 Conclusion

In this paper, a novel 3D-CNN-array architecture is proposed and analyzed. Moreover, the efficient parallel operation mode and the workload balancing strategy for inter-node and intra-node computing and transmission are discussed. The feasibility of the proposed architecture is verified with the popular CNN model Tiny-YOLO. The experimental results show that the parallelism of the PE array in the proposed 3D-CNN-array architecture is improved by 4 times compared with a conventional single-node CNN architecture. The computational efficiency of the FPGA array on a single network layer is nearly 4 times higher than that of a single FPGA. The 3D array is simulated on a CPU, and its performance is compared and analyzed. The high data reuse reduces the frequency of access to external memory and speeds up single-node computing, which also reduces the overall power consumption of the system.

References

Alex K., Ilya S., Geoffrey E.: ImageNet classification with deep convolutional neural networks. In: International Conference on Neural Information Processing Systems, vol. 25 (2012)

Karen, S., Andrew, Z.: Very deep convolutional networks for large-scale image recognition. Comput. Sci. 1409, 1–14 (2014)

Kaiming, H., Xiangyu, Z., Shaoqing, R., Jian, S.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR 2016, pp. 770–778 (2016)

Adrian, M., Caulfield, E.S., Chung, A.P.: A cloud scale acceleration architecture. In: 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), p. 1. IEEE Computer Society (2017)

Jeremy, B., SungYe, K., Jeff, A.: clCaffe: OpenCL accelerated Caffe for convolutional neural networks. In: IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW). IEEE (2016)

Jialiang, Z., Jing, L.: Improving the performance of OpenCL based FPGA accelerator for convolutional neural network. In: The ACM/SIGDA International Symposium (2017)

Chen, Z., Zhenman, F., Peichen, P.: Caffeine: towards uniformed representation and acceleration for deep convolutional neural networks. In: IEEE/ACM International Conference on Computer aided Design (2017)

Liqiang, L., Yun, L., Qingcheng, X.: Evaluating fast algorithms for convolutional neural networks on FPGAs. In: IEEE 25th Annual International Symposium on Field Programmable Custom Computing Machines (FCCM) (2017)

Lili, Z.: Research on the Acceleration of Tiny-yolo Convolution Neural Network Based on HLS. Chongqing University (2017)

Yufei, M., Yu, C., Sarma, V., Jae, S.: Optimizing loop operation and dataflow in FPGA acceleration of deep convolutional neural networks. In: Proceedings of the ACM/SIGDA International Symposium on Field Programmable Gate Arrays FPGA 2017, pp. 45–54 (2017)

Chen, Z., Peng, L., Guangyu, S., Yijin, G., Bingjun, X., Jason, C.: Optimizing FPGA based accelerator design for deep convolutional neural networks. In: Proceedings of the ACM/SIGDA International Symposium on Field Programmable Gate Arrays FPGA 2015, pp. 161–170 (2015)

Marimuthu, S., Jawahar, N., Ponnambalam, S.: Threshold accepting and ant-colony optimization algorithms for scheduling m-machine flow shops with lot streaming. J. Mater. Process. Technol. 209(2), 1026–1041 (2009)

YunChia, L., Mfatih, T., Quan, K.: A discrete particle swarm optimization algorithm for the no wait flowshop scheduling problem. Comput. Oper. Res. 35(9), 2807–2839 (2008)

Nicholas, G., Chelliah, S.: A survey of machine scheduling problems with blocking and no wait in process. Oper. Res. 44(3), 510–525 (1996)

Charles, E., Ekkehard, W.: GANGLION a fast field programmable gate array implementation of a connectionist classifier. IEEE J. Solid-State Circuits 27(3), 288–299 (1992)

Jocelyn, C., Steven, P., Francois, R., Boyer, P.Y.: An FPGA based processor for image processing and neural networks. In: Microneuro, p. 330. IEEE (1996)

Clement, F., Berin, M., Benoit, C.: NeuFlow: a runtime reconfigurable dataflow processor for vision. In: Computer Vision and Pattern Recognition Workshops (2011)

Geng, T., Wang, T., Li, A., Jin, X., Herbordt, M.: FPDeep: scalable acceleration of CNN training on deeply-pipelined FPGA clusters. Trans. Comput. 14(8), 1143–1158 (2020)

Motamedi, M., Gysel, P., Akella, V., Ghiasi, S.: Design space exploration of FPGA based deep convolutional neural networks. In: Proceedings of the Asia and South Pacific Design Automation Conference ASPDAC, pp. 575–580 (2016)

Jiang, L.I., Kubo, H., Yuichi, O., Satoru, Y.: A Multidimensional Configurable Processor Array Vocalise. Kyushu Institute of Technology (2014)

Chen, Y.H., Krishna, T., Emer, J.S., Sze, V.: Eyeriss: an energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 52, 127–138 (2016)

Acknowledgement

This work was supported by the Hundred Talents Program of Chinese Academy of Sciences under grant No. Y9BEJ11001. This research was primarily conducted at Suzhou Institute of Nano-Tech and Nano-Bionics (SINANO).


© 2022 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Ji, Y. et al. (2022). A Scalable 3D Array Architecture for Accelerating Convolutional Neural Networks. In: Sun, F., Hu, D., Wermter, S., Yang, L., Liu, H., Fang, B. (eds) Cognitive Systems and Information Processing. ICCSIP 2021. Communications in Computer and Information Science, vol 1515. Springer, Singapore. https://doi.org/10.1007/978-981-16-9247-5_7

DOI: https://doi.org/10.1007/978-981-16-9247-5_7

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-9246-8

Online ISBN: 978-981-16-9247-5

eBook Packages: Computer Science, Computer Science (R0)