Abstract

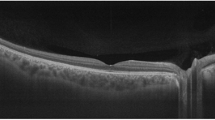

Optical coherence tomography (OCT) uses the interference between the light waves reflected by the reference and sample arms to obtain spatial information on tissue microstructure, which is used to construct an in-vivo cross-sectional image. OCT has become an essential part of daily practice in the field of ophthalmology and glaucoma over the past 30 years. This success was possible by tremendous advances of its technology toward better sensitivity and faster scan speed from Time-domain to Spectral-domain and Swept-source OCT. This chapter was written to describe the basic principles of OCT and how they enable its various applications, which are crucial to understand how such advances of OCT have been possible in the past, and why OCT still has boundless potential for future growth.



1 Introduction

Modern progress in medicine has largely been driven by the advent of tomographic imaging techniques such as X-ray computed tomography (CT), magnetic resonance imaging (MRI), and ultrasound imaging. These can generate a cross-sectional image of living tissue, thereby locating the diseased portion. Optical coherence tomography (OCT), meanwhile, can be described as the optical analog of ultrasound imaging (Huang et al. 1991; Fercher et al. 2003; Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019). In ultrasound imaging, a sound pulse is launched, the echoes of which are measured to create a cross-sectional image. In OCT, correspondingly, a light pulse is launched, but its reflections—in the aspect of its delays—cannot be measured directly because of its fast velocity; instead, the interference between the light waves reflected by the reference and sample arms, respectively, is measured to obtain spatial information on tissue microstructure. To understand this more deeply, let us start with light and its wave-like behavior.

2 Light

Light is an electromagnetic phenomenon. A particle that carries an electric charge generates an electric field (E), which exerts a force (Fe) on a test charge (q0) that differs from location to location: \( E=\frac{F_e}{q_0} \). Together with the associated magnetic field, this forms an electromagnetic field that is well explained by Maxwell’s equations (Brezinski 2006a) as follows:

1. Gauss’ law for electric fields: ∇ ∙ D = ρ, which means that the net outward electric flux (D) through a closed surface is equal to the total charge (ρ) enclosed by that surface.

2. Gauss’ law for magnetic fields: ∇ ∙ B = 0, which means that the divergence of the magnetic flux (B) is always zero and that there are no isolated magnetic charges.

3. Faraday’s law: \( \nabla \times E=-\frac{\partial B}{\partial t} \), which means that a magnetic field (B) change over time (t) produces an electric field (E).

4. Ampere’s law: \( \nabla \times H=J+\frac{\partial D}{\partial t} \), which means that an electric current (J) or a changing electric field (D) produces a magnetic field (H).

That is, a changing electric field produces a magnetic field, whereas a changing magnetic field produces an electric field. Since the two fields recreate each other indefinitely, light can propagate in free space as an oscillating wave with the electric and magnetic fields oriented perpendicular to each other (Fig. 1a). Light, as an oscillating wave, has a frequency (f) and a wavelength (λ) that are linked by the speed of light (c): c = f × λ. The energy carried by each photon is proportional to the frequency: E = h × f (h = Planck’s constant).
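The two relations above, c = f × λ and E = h × f, can be checked numerically. The following is a minimal sketch; the 840 nm wavelength is only an assumed example of a near-infrared OCT source, and the function names are illustrative.

```python
# Frequency and photon energy of light from its wavelength.
C = 299_792_458.0       # speed of light in vacuum, m/s
H = 6.62607015e-34      # Planck's constant, J*s


def frequency_hz(wavelength_m):
    """Frequency from c = f * lambda."""
    return C / wavelength_m


def photon_energy_j(wavelength_m):
    """Photon energy from E = h * f."""
    return H * frequency_hz(wavelength_m)


wavelength = 840e-9     # assumed example wavelength (near-infrared), m
f = frequency_hz(wavelength)
energy = photon_energy_j(wavelength)
print(f"f = {f:.3e} Hz, E = {energy:.3e} J")
```

A shorter wavelength gives a higher frequency and therefore a higher photon energy, which is one reason why visible-light sources raise more safety concerns than near-infrared ones.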

Fig. 1 Interference and Michelson interferometer. By mutual production, electric and magnetic waves can propagate eternally in space with a fixed oscillatory component: a frequency (a; figure taken from Wikipedia). The summation of two waves with the same frequency (b) can produce constructive (c) or destructive (d) interference according to a phase delay. The interference can be measured using the Michelson interferometer (e). The pattern of interference is determined by twice the length difference between M1 and M2 (round trip)

2.1 Interference

A point in a wave is determined by its spatial location (x) and time (t) (Fig. 1b).

W1(x, t) = A cos (kx − ωt), where A is the peak amplitude, \( k=\frac{2\pi }{\lambda } \) is the wavenumber, and ω = 2πf is the angular frequency of the wave. A second wave with a phase delay (φ) is W2(x, t) = A cos (kx − ωt + φ).

The summation of the two waves is as follows:

\( {W}_1+{W}_2=A\cos \left( kx-\omega t\right)+A\cos \left( kx-\omega t+\varphi \right)=2A\cos \frac{\varphi }{2}\cos \left( kx-\omega t+\frac{\varphi }{2}\right) \)

This equation reveals that the new wave shares the same frequency and wavelength (both k and ω are unchanged). The amplitude of the new wave is scaled by a factor of \( 2\cos \frac{\varphi }{2} \). When φ is an even multiple of π, \( \left|\cos \frac{\varphi }{2}\right|=1 \), and so the amplitude is doubled; this is known as constructive interference (Fig. 1c). When φ is an odd multiple of π, \( \left|\cos \frac{\varphi }{2}\right|=0 \), and so the amplitude becomes zero; this is known as destructive interference (Fig. 1d) (Brezinski 2006b).
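The dependence of the summed amplitude on the phase delay can be verified numerically. The sketch below adds two equal-amplitude cosine waves and compares the measured peak amplitude with 2A|cos(φ/2)|; the wavelength value and function names are illustrative assumptions.

```python
import math


def summed_wave(x, t, amp, k, omega, phi):
    """W1 + W2 for two waves that differ only by the phase delay phi."""
    return amp * math.cos(k * x - omega * t) + amp * math.cos(k * x - omega * t + phi)


def predicted_amplitude(amp, phi):
    """Amplitude of the summed wave: 2A|cos(phi/2)|."""
    return abs(2 * amp * math.cos(phi / 2))


def measured_amplitude(amp, k, omega, phi, samples=2000):
    """Largest |W1 + W2| found over one spatial period at t = 0."""
    period = 2 * math.pi / k
    return max(
        abs(summed_wave(i * period / samples, 0.0, amp, k, omega, phi))
        for i in range(samples)
    )


k = 2 * math.pi / 840e-9          # wavenumber for an assumed 840 nm wave
omega = 2 * math.pi * 3.569e14    # matching angular frequency (approximate)

for phi in (0.0, math.pi / 2, math.pi):
    print(phi, measured_amplitude(1.0, k, omega, phi), predicted_amplitude(1.0, phi))
```

With φ = 0 the amplitude doubles (constructive interference), and with φ = π it vanishes (destructive interference).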

More generally, if both the initial wave (W1) and the summation of the initial and reflected waves (W1 + W2) are provided, the phase delay (φ) of W2 can be calculated. The Michelson interferometer is designed to measure this interference phenomenon (Fig. 1e). A beam splitter divides the light and directs it toward two different mirrors (M1 and M2). Both mirrors reflect the light: the two reflected beams share the same frequency and wavelength, but the lengths of their light paths are different (Fig. 1e). If the two mirrors reflect the light equally, the path-length difference alone determines the phase delay.

2.2 Coherence

To this point, calculations were based on light with a single frequency (monochromatic light). But real light sources are never monochromatic, and even laser sources are only quasi-monochromatic, for two reasons. First, light is produced by atomic transitions from a higher-energy state to a lower one, and the energy is directly related to the frequency: E = h × f. The problem is that while the atoms are in the excited state, they are colliding, which results in the loss or gain of some energy. So, the energy and the frequency are not fixed but vary from the very beginning. Second, the atoms emitting light are moving in different directions, and because emission from a moving source undergoes a Doppler shift, this motion induces a frequency shift (Brezinski 2006b).

Coherence is a measure of inter-wave correlation. Since a wave is dependent on spatial location (x) and time (t), coherence is measured in both the spatial and temporal aspects. Here, let us focus on temporal coherence. As the frequencies of different waves get closer and closer, the time during which the waves are in unison, the coherence time, becomes longer and longer. Conversely, the broader the spectrum of frequencies in the light, the lower the chances are that the waves will be in unison, and, correspondingly, the shorter the coherence time becomes. The coherence length is the distance that light travels during the coherence time; it signifies that the properties of the beam retain relatively constant characteristics only over that length. Using the Michelson interferometer, interference can be detected only when the path-length difference is within the coherence length. Therefore, the coherence length is directly associated with the axial resolution, and that is why low-coherence light, which has a broader spectral bandwidth, is preferred for Time-domain OCT.

2.3 Diffraction

Diffraction refers to various phenomena that occur when a wave encounters an obstacle or a slit, the aperture effectively becoming a secondary source of the propagating wave. Therefore, even a well-focused, aberration-free converging lens never focuses light to a single point but always has a trace of diffraction (Brezinski 2006b). Diffraction of a circular aperture produces the bright central irradiance surrounded by the series of concentric rings: the former is known as the Airy spot which contains 84% of the total irradiance, and the latter is known as the Airy pattern. Since the system can only resolve the structures to the width of the Airy function, the diffraction determines the resolution limit of the system. It is particularly important for the lateral resolution which is essentially dependent on the optics of the imaging device. A larger diameter lens and shorter wavelengths result in smaller Airy spot and therefore higher resolutions (Brezinski 2006b).

2.4 Tissue Interactions

When light is within materials rather than in a vacuum, it interacts with atoms and molecules, and this interaction is best described in terms of the dipole moment: a separation of opposite charges (Brezinski 2006c). The electrical dipole oscillates and generates a second propagating electromagnetic wave, so that the light travels through the material with a different velocity (v). The ratio of the speed of light in a vacuum (c) to this velocity is known as the refractive index: \( n=\frac{c}{v} \).

The refractive index is frequency dependent: in a medium, different wavelengths will travel at different speeds, and this fact is called dispersion. Although dispersion can be used to decompose the light into its different frequency components as in Spectral-domain OCT, dispersion generally needs to be compensated for in OCT systems because dispersion makes the different wavelengths return at slightly different times.

When the impinging electromagnetic waves are near the resonance frequency, the electron absorbs the energy of a photon of a specific frequency and goes to a higher-energy state, a phenomenon known as absorption. More generally, atoms that are exposed to light absorb light energy and re-emit light in different directions with differing intensities, a phenomenon known as scattering. Keep in mind, meanwhile, that refractive index, dispersion, absorption, and scattering are related to each other in that they are all frequency (f) dependent.

3 Time-Domain OCT

Time-domain OCT is, in principle, a Michelson interferometer illuminated by a low-coherence light source (Fig. 2a) (Huang et al. 1991; Fercher et al. 2003; Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019; Brezinski 2006d). By replacing one of the mirrors with a sample, one can measure the interference of back-scattered light from the sample and the reflected light from the reference mirror. The back-reflected lights from the two arms (reference and sample) are combined and interfere only if the optical path lengths match within the coherence length. Interference fringe bursts, roughly the amplitudes of the interference, are detected by the photodiode. For each sample point, the reference mirror is scanned in the depth (z) direction, and a complete depth profile is generated at the beam position: this is the A-scan (amplitude scan). For a cross-sectional image, the scan beam is moved laterally along a line, and the A-scan is repeated at each position in the same way: this is the B-scan (named after “brightness scan” in ultrasonography) (Fig. 2b) (Fercher et al. 2003; Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019).

Fig. 2 Working principle of Time-domain OCT (TD-OCT). For the axial image, light from the light source is split into the reference beam and the sample beam. Back-reflected light from both arms is combined again and recorded by the detector. To record one depth profile of the sample (an A-scan), the reference arm needs to be scanned (a). This has to be repeated for each lateral scan position to construct a volume scan (b). Therefore, the lateral resolution of OCT depends on the focusing ability of the probing beam: which is to say, the optics of the system. Figures reprinted from (Aumann et al. 2019)

To compute the axial resolution, the wavelength profile of the light source must be considered, because it determines the coherence length. If a Gaussian spectrum is assumed, the wavelength profile is determined by its central wavelength (λ0) and its spectral bandwidth (∆λ). Usually, the spectral bandwidth is described by the full width at half-maximum (FWHM): the width at the intensity level equal to half the maximum intensity. Then, the axial resolution (along the z axis) in free space equals the round-trip coherence length of the source light (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019; Brezinski 2006d):

\( \Delta z={l}_c=\frac{2\ln 2}{\pi}\frac{{\lambda}_0^2}{\Delta \lambda } \)

This means, at the theoretical level at least, that a better axial resolution is dependent only on the light source: a shorter wavelength (λ0) and broader spectral bandwidth (∆λ).
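As a worked illustration, the round-trip coherence length of a Gaussian source is commonly written as Δz = (2 ln 2/π) × λ0²/∆λ. The sketch below evaluates this expression; the 840 nm central wavelength and the 50 nm and 100 nm bandwidths are assumed example values, not figures from this chapter.

```python
import math


def axial_resolution(center_wavelength_m, bandwidth_fwhm_m):
    """Free-space axial resolution for a Gaussian spectrum:
    dz = (2 ln 2 / pi) * lambda0**2 / delta_lambda."""
    return (2 * math.log(2) / math.pi) * center_wavelength_m ** 2 / bandwidth_fwhm_m


# Assumed example source parameters (illustrative only).
dz_50 = axial_resolution(840e-9, 50e-9)
dz_100 = axial_resolution(840e-9, 100e-9)
print(f"50 nm bandwidth:  {dz_50 * 1e6:.2f} um")
print(f"100 nm bandwidth: {dz_100 * 1e6:.2f} um")
```

Doubling the bandwidth halves Δz, and because λ0 enters quadratically, shortening the central wavelength improves the axial resolution even more strongly.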

The lateral resolution is determined by the spot size of the probing beam, which is dependent on the optics of the imaging device (Fig. 2b; NA = numerical aperture of focusing lens) (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019).

Tight focusing would increase the lateral resolution (via the increase of the NA) at the expense of focusing depth (the axial range). The confocal parameter, b, is twice the Rayleigh length (zR):

\( b=2{z}_R=\frac{2\pi {\omega}_0^2}{\lambda_0} \)

Tight focusing will reduce the beam waist (ω0: radial size of beam), and the axial range.
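The trade-off between spot size and focusing depth can be made concrete with the standard Gaussian-beam relation zR = πω0²/λ, which is assumed here (in free space) as the definition of the Rayleigh length. The beam waists and wavelength below are illustrative values only.

```python
import math


def rayleigh_length(beam_waist_m, wavelength_m):
    """Rayleigh length of a Gaussian beam in free space: z_R = pi * w0**2 / lambda."""
    return math.pi * beam_waist_m ** 2 / wavelength_m


def confocal_parameter(beam_waist_m, wavelength_m):
    """Confocal parameter b = 2 * z_R (the usable axial range around the focus)."""
    return 2 * rayleigh_length(beam_waist_m, wavelength_m)


wavelength = 840e-9                              # assumed example wavelength, m
b_loose = confocal_parameter(10e-6, wavelength)  # assumed 10 um beam waist
b_tight = confocal_parameter(5e-6, wavelength)   # assumed 5 um beam waist
print(f"w0 = 10 um: b = {b_loose * 1e6:.0f} um")
print(f"w0 =  5 um: b = {b_tight * 1e6:.0f} um")
```

Because b scales with the square of the beam waist, halving the spot size reduces the axial range to one quarter.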

Time-domain OCT has several limitations. First, it requires movement of the reference arm corresponding to the z-axis location of the sample arm, which critically limits the scan speed. Second, only the light reflected from a thin tissue slice within the coherence length contributes to the OCT signal, while the detection system records, at each axial location over the full spectral bandwidth, the summated power reflected from all depth locations. To handle this glut of information, Fourier-domain OCT was introduced (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019; de Boer et al. 2017a).

4 Fourier-Domain OCT

A Fourier series is an expansion of a periodic function in terms of an infinite sum of sines and cosines. Let us imagine two waves with different frequencies but the same amplitude and phase.

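W1(x, t) = A cos (k1x − ω1t) and W2(x, t) = A cos (k2x − ω2t).

The summation of the two waves is as follows:

\( {W}_1+{W}_2=2A\cos \left(\frac{\left({k}_1-{k}_2\right)x-\left({\omega}_1-{\omega}_2\right)t}{2}\right)\cos \left(\frac{\left({k}_1+{k}_2\right)x-\left({\omega}_1+{\omega}_2\right)t}{2}\right) \)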

This equation clearly shows that the summated wave has two oscillating components: one with a rapidly varying part and the other with a slowly varying envelope (Fig. 3a) (Aumann et al. 2019; Brezinski 2006d). This means that the individual frequency components still exist, even after the summation of the waves. A Fourier series states that every singular frequency component can be traced back from the complex summated waves.

Fig. 3 Working principle of Fourier-domain OCT (FD-OCT). Despite the mixing of diverse frequencies, the summated waves (red waves) still bear the original individual components (a). With formulaic calculation, each component can be extracted from the mixed waves: Fourier-transformation (b; figure taken from Wikimedia). To understand how it works, waves should be described in the complex number system (c; figure taken from Wikipedia). In this polar coordinate system, any point in the waves is described by its real number portion and its imaginary number portion. Then, the waves can be translated into circular movement (phase information is translated into the angle from the x-axis, and the amplitude is translated into the distance from the reference point). Based on Euler’s equation, any point on the unit circle is cos φ + i sin φ, and can be written as \( {e}^{i\varphi } \) (c; figure taken from Wikipedia). Therefore, any wave can be transformed to rotate along the unit circle by simply multiplying by \( {e}^{i\varphi } \). The frequency profile is squeezed out during the integral process because the hills and valleys of complex waves are counterbalanced during the integration except for when the winding frequencies match exactly those of the individual frequency components. This means that although Fourier-domain OCT receives signals as summated waves, their individual frequency components can be back-calculated and isolated systematically. The total waves are obtained either by a spectrometer (a spectrometer-based OCT: Spectral-domain OCT) or by rapid sweeping of wavelengths from the source (Swept-source OCT). Both implementations record an interference spectrum that carries the depth information of the sample. Fast Fourier Transformation is then used to transform the interference signal into an A-scan (d; figures reprinted from (Aumann et al. 2019))

4.1 Fourier Transformation

Fourier transformation is a function that enables a perspective change from the time- or spatial domain to the frequency domain (Fig. 3b):

\( \hat{g}(f)={\int}_{-\infty}^{\infty }g(t)\,{e}^{-2\pi ift}\, dt \)

To understand why this function acts as a Fourier transformation, one needs to understand how values on a two-dimensional plane can be manipulated using complex numbers: a point (a, b) = a + bi. This manipulation is particularly useful for circular transformation, since every point on the unit circle (cos θ + i ∙ sin θ) corresponds to \( {e}^{i\theta } \) (Fig. 3c; Euler’s equation). This means that one can wrap any function around the circle in the polar coordinate system by multiplying it by \( {e}^{i\theta } \). During the Fourier transformation, the function g(t) is multiplied by \( {e}^{-2\pi ift} \) in order to wrap it around the circle at a winding frequency f, and an integral is used to squeeze out its frequency profile. As the original function is wrapped around the circle, its infinite length winds around the circle over and over, and its hills and valleys are counterbalanced during the integral process except for when the winding frequency matches exactly that of one of the individual waves of g(t). At that moment, all the hills are arranged on one side and the valleys on the other side, so that the transformed function \( \hat{g}(f) \) has spikes at those specific frequencies. After obtaining the frequency profiles from the mixed waves, the individual waves can be extracted and analyzed according to their frequencies (Fig. 3b).
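The winding idea described above can be sketched with a discrete Fourier transform written out directly. This is a minimal illustration rather than an optimized implementation (a real system would use a Fast Fourier Transform); the test signal, with components at 5 and 12 cycles per record, is an assumed example.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Wrap the signal around the unit circle at each winding frequency f
    (multiply by exp(-2*pi*i*f*t/n)) and sum; return normalized magnitudes
    for the non-negative frequencies below n/2."""
    n = len(samples)
    magnitudes = []
    for f in range(n // 2):
        wound = sum(
            samples[t] * cmath.exp(-2j * math.pi * f * t / n) for t in range(n)
        )
        magnitudes.append(abs(wound) / n)
    return magnitudes


# Assumed test signal: two mixed cosine waves (5 and 12 cycles per record).
n = 128
signal = [
    1.0 * math.cos(2 * math.pi * 5 * t / n) + 0.5 * math.cos(2 * math.pi * 12 * t / n)
    for t in range(n)
]

mags = dft_magnitudes(signal)
# The two strongest winding frequencies identify the hidden components.
strongest = sorted(sorted(range(len(mags)), key=lambda f: mags[f], reverse=True)[:2])
print(strongest)
```

At every winding frequency other than 5 and 12, the hills and valleys cancel and the sum stays near zero; only at the matching frequencies does the sum build up into a spike.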

The Fourier transform of the spectrum provides a back-reflection profile as a function of depth (Fig. 3d) (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019). Depth information is obtained by the different interference profiles from the different path lengths in both arms: larger differences in the optical path length result in higher-frequency interference signals (Brezinski 2006d; de Boer et al. 2017a; Fercher et al. 1995; Leitgeb et al. 2000).

I(k) is the total interference signal, G(k) is the spectral intensity distribution of the light source, z0 is the offset of the reference plane from the object surface, z is the axial location, a(z) is the backscattering coefficient of the object signal with regard to the offset z0, and n is the refractive index. The first term is the direct-current term, and the second term encodes the depth information z: as the light goes deeper, the frequency of oscillation gets higher. The third term describes the mutual interference of all elementary waves; in fact, it can be neglected, since the second term is much larger than the third term in a strongly scattering medium (Brezinski 2006d; de Boer et al. 2017a; Fercher et al. 1995; Leitgeb et al. 2000).
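How a depth profile emerges from the interference spectrum can be sketched with a toy simulation. The model below is a deliberately simplified assumption, not the full expression discussed above: it keeps only the direct-current term and a cosine term 2a cos(2knz) per discrete reflector, neglects the mutual-interference term, and assumes a Gaussian source spectrum sampled evenly in k. All numerical values and names are illustrative.

```python
import cmath
import math

N = 256                                   # number of spectral samples
N_REFR = 1.38                             # assumed refractive index of tissue
K_MIN = 2 * math.pi / 880e-9              # assumed spectral range: 800-880 nm
K_MAX = 2 * math.pi / 800e-9
DK = (K_MAX - K_MIN) / N                  # even sampling in wavenumber k
DEPTH_PER_BIN = math.pi / (N_REFR * N * DK)

# Two assumed reflectors (depth, reflectivity), placed exactly on depth bins.
REFLECTORS = [(20 * DEPTH_PER_BIN, 1.0), (45 * DEPTH_PER_BIN, 0.5)]


def source_spectrum(i):
    """Gaussian source spectrum G(k) sampled at index i."""
    return math.exp(-0.5 * ((i - N / 2) / (N / 8)) ** 2)


def interferogram(i):
    """Simplified I(k): DC term plus one cosine fringe per reflector."""
    k = K_MIN + i * DK
    fringes = sum(2 * a * math.cos(2 * k * N_REFR * z) for z, a in REFLECTORS)
    return source_spectrum(i) * (1 + fringes)


def a_scan():
    """Subtract the DC term, then Fourier transform along k to get depth."""
    ac = [interferogram(i) - source_spectrum(i) for i in range(N)]
    return [
        abs(sum(ac[i] * cmath.exp(-2j * math.pi * m * i / N) for i in range(N)))
        for m in range(N // 2)
    ]


def find_peaks(profile, rel_threshold=0.1):
    """Indices of local maxima above a fraction of the global maximum."""
    floor = rel_threshold * max(profile)
    return [
        m for m in range(1, len(profile) - 1)
        if profile[m] > floor and profile[m] > profile[m - 1] and profile[m] >= profile[m + 1]
    ]


peaks = find_peaks(a_scan())
print([f"{m * DEPTH_PER_BIN * 1e6:.1f} um" for m in peaks])
```

The deeper reflector produces the faster fringe in k and therefore lands in a higher bin of the transform, which is the depth encoding described above.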

4.2 Spectral-Domain OCT

In this OCT mode’s early development stage, it was called “spectral radar” (Häusler and Lindner 1998; Andretzky et al. 1999, 2000); this term probably best describes its mechanism. The photodiode detector is replaced by a spectrometer, which separates the different wavelength components spatially, and these components are received by a charge-coupled device (CCD) (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019; Brezinski 2006d; de Boer et al. 2017a; Fercher et al. 1995; Leitgeb et al. 2000). Therefore, only a narrow optical bandwidth corresponding to a long coherence length is measured by each detector element (de Boer et al. 2017a; Fercher et al. 1995; Leitgeb et al. 2000).

The strength of Spectral-domain OCT is speed. It does not require a moving reference arm, and the depth information is obtained all at once. High scan speed enables averaging, which reduces speckle noise (mutual interference of a set of coherent waves) and fluctuations in background noise. With n-times averaging, the signal-to-noise ratio (SNR) increases by a factor of \( \sqrt{n} \). Therefore, Spectral-domain OCT provides higher sensitivity with better resolution than Time-domain OCT does (Leitgeb et al. 2003; Choma et al. 2003).
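The \( \sqrt{n} \) gain from averaging can be illustrated with a small Monte Carlo sketch. It assumes a constant signal with independent Gaussian noise on every measurement, which is an idealization (speckle, for example, is not fully independent between repeated scans), and it defines the SNR as the mean divided by the standard deviation. The signal level, noise level, and seed are arbitrary.

```python
import random
import statistics


def snr_after_averaging(n_avg, trials=4000, signal=1.0, noise_sd=0.5, seed=0):
    """Estimate mean/standard deviation of a measurement that is the
    average of n_avg noisy acquisitions of the same signal."""
    rng = random.Random(seed)
    averaged = []
    for _ in range(trials):
        total = sum(signal + rng.gauss(0.0, noise_sd) for _ in range(n_avg))
        averaged.append(total / n_avg)
    return statistics.mean(averaged) / statistics.stdev(averaged)


snr_1 = snr_after_averaging(1)
snr_16 = snr_after_averaging(16)
print(f"SNR without averaging: {snr_1:.2f}")
print(f"SNR with 16 averages:  {snr_16:.2f}")
print(f"gain: {snr_16 / snr_1:.2f} (sqrt(16) = 4)")
```

The measured gain fluctuates slightly around 4 because the standard deviation is itself estimated from a finite number of trials.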

Spectral-domain OCT, however, has several weaknesses as well (Liu and Brezinski 2007). First, all Fourier-domain OCTs suffer diminished sensitivity with increases in imaging depth: this is the roll-off phenomenon. During Fourier transformation, a deeper axial location is encoded in a higher-frequency component, but it is more difficult to capture a signal with a higher frequency. Further, in Spectral-domain OCT, the finite pixel size of the CCD array and the finite spectral resolution of the spectrometer limit the range of measurable frequency. Therefore, a deeper location beyond the obtainable CCD frequency range cannot be measured. Second, Spectral-domain OCT is limited by the capacity of the CCD, which has a limited spectral range. If a spectral range is too small, it cannot capture the full spectrum, and if it is too large, the pixel spacing is reduced, which results in a reduced measurement range without axial resolution improvement. Third, Fourier transform links z (depth) and k (wavenumber) spaces, whereas k (wavenumber) and λ (wavelength) have a nonlinear relationship: \( k=\frac{2\pi }{\lambda } \). Therefore, the signal is unevenly sampled in k space but evenly sampled in λ space, which results in broadening of the point spread function. Fourth, dispersion, which is also frequency dependent, has to be corrected by software. Fifth and finally, Spectral-domain OCT is more vulnerable to motion artifacts, due to the integration process of the CCD.
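The uneven sampling in k is usually handled by resampling the spectrum onto an evenly spaced k grid before the Fourier transform. The sketch below uses plain linear interpolation for this step, which is an assumed, simple choice; practical systems may use other interpolation schemes or hardware solutions. The spectral range, pixel count, and test fringe are illustrative.

```python
import bisect
import math


def resample_to_linear_k(wavelengths, intensities):
    """Convert samples taken evenly in wavelength to an even grid in k = 2*pi/lambda."""
    pairs = sorted((2 * math.pi / lam, val) for lam, val in zip(wavelengths, intensities))
    ks = [p[0] for p in pairs]            # ascending, unevenly spaced
    vals = [p[1] for p in pairs]
    n = len(ks)
    step = (ks[-1] - ks[0]) / (n - 1)
    k_lin = [ks[0] + i * step for i in range(n)]
    resampled = []
    for k in k_lin:
        j = min(max(bisect.bisect_right(ks, k), 1), n - 1)
        weight = (k - ks[j - 1]) / (ks[j] - ks[j - 1])
        resampled.append(vals[j - 1] + weight * (vals[j] - vals[j - 1]))
    return k_lin, resampled


# Assumed spectrometer: 512 pixels evenly spaced from 800 nm to 880 nm.
N_PIX = 512
wavelengths = [800e-9 + i * 80e-9 / (N_PIX - 1) for i in range(N_PIX)]
DEPTH = 50e-6                              # assumed single reflector depth, m
fringe = [math.cos(2 * (2 * math.pi / lam) * DEPTH) for lam in wavelengths]

k_lin, resampled = resample_to_linear_k(wavelengths, fringe)

raw_k = sorted(2 * math.pi / lam for lam in wavelengths)
raw_steps = [b - a for a, b in zip(raw_k, raw_k[1:])]
lin_steps = [b - a for a, b in zip(k_lin, k_lin[1:])]
print(f"raw k spacing varies by a factor of {max(raw_steps) / min(raw_steps):.2f}")
print(f"resampled k spacing varies by a factor of {max(lin_steps) / min(lin_steps):.6f}")
```

After resampling, a reflector at a single depth produces a fringe of constant period along the sample index, so its energy is concentrated in one depth bin instead of being smeared across several.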

4.3 Swept-Source OCT

Many problems of Spectral-domain OCT are related to the use of the CCD rather than the photodiode detector. In Swept-source OCT, by contrast, the broadband light source is replaced by an optical source that rapidly sweeps a narrow line-width over a broad range of wavelengths (Fercher 2010; Popescu et al. 2011; Drexler et al. 2014; Fujimoto and Swanson 2016; Aumann et al. 2019; Brezinski 2006d; de Boer et al. 2017a; Chinn et al. 1997). Therefore, only a narrow optical bandwidth corresponding to a long coherence length is measured sequentially in time by the single detector element (de Boer et al. 2017a; Chinn et al. 1997).

Swept-source OCT has the merit of using the photodiode detector rather than the CCD. But it is also subject to other innate weaknesses of Fourier-domain OCT (Liu and Brezinski 2007). Swept-source OCT, like Spectral-domain OCT, suffers the roll-off phenomenon, which is related to the instantaneous linewidth of the light source and the detection bandwidth of the analog-to-digital conversion (photodiode and digitizer) (de Boer et al. 2017a). Technologically, it is much easier to achieve a narrow instantaneous line width with a tunable light source than to manufacture a spectrometer covering a more-than-100 nm optical bandwidth with very high spectral resolution. Therefore, roll-off is easier to overcome in Swept-source OCT than in Spectral-domain OCT.

5 Further OCT Modifications

5.1 Enhanced Depth Imaging

OCT imaging of the deep layers of the optic nerve head complex is disturbed by (1) high scattering of the retinal pigment epithelium and (2) the roll-off phenomenon incurred with Fourier-domain OCT. These problems could be partially evaded by using the enhanced depth imaging (EDI) technique. By shifting the position of the reference mirror, the optimum imaging position is moved to the lower part of the sample, and the characteristic roll-off is reversed in depth: that is, deeper layers have smaller differences in optical path lengths, and thus are encoded in lower frequencies. Unfortunately, the EDI technique cannot handle the scattering obstacle (Aumann et al. 2019; Spaide et al. 2008; Mrejen and Spaide 2013).

5.2 OCT Angiography

Using the Doppler shift of the OCT signals, the flow in the vessels (mainly due to the flow of erythrocytes) can be visualized by OCT angiography (An and Wang 2008; Jia et al. 2012). This will be addressed in a separate chapter of this book.

5.3 Visible-Light OCT

Usually, OCT uses a light source in the near-infrared wavelength (800–1300 nm). By reducing the wavelength to visible light (450–700 nm), the axial resolution increases about 8 times and the lateral resolution increases about 1.6 times (Aumann et al. 2019; Nafar et al. 2016). Also, the spectral information can be used to determine the oxygen saturation levels of the vessels (Yi et al. 2015). This technique, however, needs careful correction for chromatic aberrations, and may potentially incur photo-chemical action, bleaching of the photopigments, and, thus too, subject discomfort. Inaccessibility below the intact retinal pigment epithelium (due to strong absorption) is a critical problem (Aumann et al. 2019).

5.4 OCT Elastography

Tissue elasticity, described by the Young modulus, can be measured by Brillouin scattering. But the wavelength shift is extremely small, and so a laser with a very narrow spectral band is required. Although it is still in an early developmental stage, OCT elastography is expected to provide information on the mechanical loading of tissues in the future (Aumann et al. 2019; Scarcelli and Yun 2012; Kirby et al. 2017).

5.5 Polarization-Sensitive OCT

When light is passed through tissue, the sample is capable of generating an altered state of back-reflected polarized light. Therefore, the polarization state of the back-scattered light gives additional information about the sample. The polarization of the light can be described with the Jones formalism (de Boer et al. 2017b): with calculation of a vector (to describe the direction of polarization) and a matrix (Jones matrix J; the polarization changing properties of a medium), the polarization state can be drawn. Polarization-sensitive OCT can be used for measuring tissue with high birefringence (Aumann et al. 2019; de Boer et al. 2017b). Currently, it incorporates swept-source technology, which enables better resolution (Yamanari et al. 2008; Braaf et al. 2014).
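The Jones calculation mentioned above can be sketched in a few lines. Here a birefringent sample is modeled, as a simplifying assumption, by an ideal linear retarder with retardance δ and fast axis at angle θ, using the common form J = R(−θ) · diag(e^{−iδ/2}, e^{iδ/2}) · R(θ); the function names are illustrative.

```python
import cmath
import math


def rotation(theta):
    """2x2 rotation matrix R(theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [-s, c]]


def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def retarder(delta, theta):
    """Jones matrix of an ideal linear retarder (assumed sample model)."""
    phase = [[cmath.exp(-1j * delta / 2), 0], [0, cmath.exp(1j * delta / 2)]]
    return matmul(rotation(-theta), matmul(phase, rotation(theta)))


def apply(jones_matrix, jones_vector):
    """Output polarization state: J applied to the input Jones vector."""
    (a, b), (c, d) = jones_matrix
    ex, ey = jones_vector
    return [a * ex + b * ey, c * ex + d * ey]


# Horizontally polarized light through a quarter-wave retarder at 45 degrees.
horizontal = [1.0, 0.0]
ex, ey = apply(retarder(math.pi / 2, math.pi / 4), horizontal)
phase_difference = cmath.phase(ey) - cmath.phase(ex)
print(abs(ex) ** 2, abs(ey) ** 2, math.degrees(phase_difference))
```

The output has equal power in both components with a 90° phase difference between them, that is, circular polarization: the retardance of the sample is encoded in the polarization state of the returning light.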

5.6 Adaptive Optics OCT

OCT system resolution is dependent on the light source for the axial direction and on the optical system for the lateral direction: currently about 3 μm in the axial direction, and about 9 μm in the lateral direction. The low resolution in the lateral direction is due to the fact that the diffraction-limited optical resolution is determined by the numerical aperture (NA), which can be maximized by pupil dilation. Optical aberration, however, increases rapidly with pupil diameter. In adaptive optics OCT, the optical aberrations are corrected using a deformable mirror. Consequently, the resolution of <3 μm in all dimensions can be achieved, which enables cellular imaging (Aumann et al. 2019; Kumar et al. 2013; Liu et al. 2017).

5.7 High-speed OCT

High scanning speed can reduce motion artifacts, increase A-scan density so as to improve digital lateral resolution, and increase the SNR with averaging as in Fourier-domain OCT (Aumann et al. 2019). Currently, these goals are being achieved in two ways: (1) increasing the sweep rate of the light source: Fourier Domain Mode Locked (FDML) lasers with an MHz sweep rate (Huber et al. 2006; Klein et al. 2012), and (2) lateral parallelization of OCT data acquisition: Parallel OCT or Line-field Fourier-domain OCT, which illuminates tissue with either a line or over a full area, where each lateral pixel records the depth structure (Nakamura et al. 2007; Fechtig et al. 2015). As an extension of this technology, Full-field Swept-source OCT has been developed. By replacing the line detector with a 2D image sensor, a complete 3D volume stack is constructed in just one sweep of the laser (Hillmann et al. 2016, 2017).

6 Conclusion

In the last paragraph of an epoch-making article (Huang et al. 1991) that coined the term “OCT,” the authors stated that “Because OCT is an optical method, a variety of optical properties can be utilized to identify tissue structure and composition.” In that paper, they presented only a prototype of Time-domain OCT but predicted the boundless development of future OCT in such manifestations as Spectral-domain OCT and Polarization-sensitive OCT. However, it took decades for their prediction to come true, because it required advances in the signal processing mechanism (including Fast Fourier transformation), higher speed of data transfer and conversion, and a sustainable light source of a specific spectrum as well. Notably, the light still has many and various useful properties to be discovered (or problematic ones to be coped with). Those useful properties will enable the development of new OCT iterations in the future.

References

An L, Wang RK. In vivo volumetric imaging of vascular perfusion within human retina and choroids with optical micro-angiography. Opt Express. 2008;16(15):11438–52.

Andretzky P, Lindner M, Herrmann J, et al. Optical coherence tomography by spectral radar: dynamic range estimation and in-vivo measurements of skin: SPIE; 1999.

Andretzky P, Knauer M, Kiesewetter F, Haeusler G. Optical coherence tomography by spectral radar: improvement of signal-to-noise ratio: SPIE; 2000.

Aumann S, Donner S, Fischer J, Müller F. Optical Coherence Tomography (OCT): principle and technical realization. In: Bille JF, editor. High resolution imaging in microscopy and ophthalmology: new frontiers in biomedical optics. Cham: Springer; 2019. p. 59–85.

Braaf B, Vermeer KA, de Groot M, Vienola KV, de Boer JF. Fiber-based polarization-sensitive OCT of the human retina with correction of system polarization distortions. Biomed Opt Express. 2014;5(8):2736–58.

Brezinski ME. Light and electromagnetic waves. In: Brezinski ME, editor. Optical coherence tomography. Burlington: Academic Press; 2006a. p. 31–55.

Brezinski ME. Interference, coherence, diffraction, and transfer functions. In: Brezinski ME, editor. Optical coherence tomography. Burlington: Academic Press; 2006b. p. 71–94.

Brezinski ME. Light in matter. In: Brezinski ME, editor. Optical coherence tomography. Burlington: Academic Press; 2006c. p. 57–69.

Brezinski ME. Optical coherence tomography theory. In: Brezinski ME, editor. Optical coherence tomography. Burlington: Academic Press; 2006d. p. 97–145.

Chinn SR, Swanson EA, Fujimoto JG. Optical coherence tomography using a frequency-tunable optical source. Opt Lett. 1997;22(5):340–2.

Choma M, Sarunic M, Yang C, Izatt J. Sensitivity advantage of swept source and Fourier domain optical coherence tomography. Opt Express. 2003;11(18):2183–9.

de Boer JF, Leitgeb R, Wojtkowski M. Twenty-five years of optical coherence tomography: the paradigm shift in sensitivity and speed provided by Fourier domain OCT [Invited]. Biomed Opt Express. 2017a;8(7):3248–80.

de Boer JF, Hitzenberger CK, Yasuno Y. Polarization sensitive optical coherence tomography – a review [Invited]. Biomed Opt Express. 2017b;8(3):1838–73.

Drexler W, Liu M, Kumar A, Kamali T, Unterhuber A, Leitgeb R. Optical coherence tomography today: speed, contrast, and multimodality. J Biomed Optics. 2014;19(7):071412.

Fechtig DJ, Grajciar B, Schmoll T, et al. Line-field parallel swept source MHz OCT for structural and functional retinal imaging. Biomed Opt Express. 2015;6(3):716–35.

Fercher AF. Optical coherence tomography – development, principles, applications. Z Med Phys. 2010;20(4):251–76.

Fercher AF, Hitzenberger CK, Kamp G, El-Zaiat SY. Measurement of intraocular distances by backscattering spectral interferometry. Opt Commun. 1995;117(1):43–8.

Fercher AF, Drexler W, Hitzenberger CK, Lasser T. Optical coherence tomography – principles and applications. Rep Prog Phys. 2003;66(2):239–303.

Fujimoto J, Swanson E. The development, commercialization, and impact of optical coherence tomography. Invest Ophthalmol Vis Sci. 2016;57(9):OCT1–OCT13.

Häusler G, Lindner MW. “Coherence radar” and “spectral radar” – new tools for dermatological diagnosis. J Biomed Opt. 1998;3(1):21–31.

Hillmann D, Spahr H, Pfäffle C, Sudkamp H, Franke G, Hüttmann G. In vivo optical imaging of physiological responses to photostimulation in human photoreceptors. Proc Natl Acad Sci. 2016;113(46):13138–43.

Hillmann D, Spahr H, Sudkamp H, et al. Off-axis reference beam for full-field swept-source OCT and holoscopy. Opt Express. 2017;25(22):27770–84.

Huang D, Swanson EA, Lin CP, et al. Optical coherence tomography. Science. 1991;254(5035):1178–81.

Huber R, Wojtkowski M, Fujimoto JG. Fourier Domain Mode Locking (FDML): a new laser operating regime and applications for optical coherence tomography. Opt Express. 2006;14(8):3225–37.

Jia Y, Morrison JC, Tokayer J, et al. Quantitative OCT angiography of optic nerve head blood flow. Biomed Opt Express. 2012;3(12):3127–37.

Kirby MA, Pelivanov I, Song S, et al. Optical coherence elastography in ophthalmology. J Biomed Opt. 2017;22(12):1–28.

Klein T, Wieser W, André R, Pfeiffer T, Eigenwillig C, Huber R. Multi-MHz FDML OCT: snapshot retinal imaging at 6.7 million axial-scans per second. Proc SPIE. 2012.

Kumar A, Drexler W, Leitgeb RA. Subaperture correlation based digital adaptive optics for full field optical coherence tomography. Opt Express. 2013;21(9):10850–66.

Leitgeb R, Wojtkowski M, Kowalczyk A, Hitzenberger CK, Sticker M, Fercher AF. Spectral measurement of absorption by spectroscopic frequency-domain optical coherence tomography. Opt Lett. 2000;25(11):820–2.

Leitgeb R, Hitzenberger C, Fercher A. Performance of Fourier domain vs. time domain optical coherence tomography. Opt Express. 2003;11(8):889–94.

Liu B, Brezinski ME. Theoretical and practical considerations on detection performance of time domain, Fourier domain, and swept source optical coherence tomography. J Biomed Opt. 2007;12(4):044007.

Liu YZ, South FA, Xu Y, Carney PS, Boppart SA. Computational optical coherence tomography [Invited]. Biomed Opt Express. 2017;8(3):1549–74.

Mrejen S, Spaide RF. Optical coherence tomography: imaging of the choroid and beyond. Surv Ophthalmol. 2013;58(5):387–429.

Nafar Z, Jiang M, Wen R, Jiao S. Visible-light optical coherence tomography-based multimodal retinal imaging for improvement of fluorescent intensity quantification. Biomed Opt Express. 2016;7(9):3220–9.

Nakamura Y, Makita S, Yamanari M, Itoh M, Yatagai T, Yasuno Y. High-speed three-dimensional human retinal imaging by line-field spectral domain optical coherence tomography. Opt Express. 2007;15(12):7103–16.

Popescu DP, Choo-Smith LP, Flueraru C, et al. Optical coherence tomography: fundamental principles, instrumental designs and biomedical applications. Biophys Rev. 2011;3(3):155.

Scarcelli G, Yun SH. In vivo Brillouin optical microscopy of the human eye. Opt Express. 2012;20(8):9197–202.

Spaide RF, Koizumi H, Pozzoni MC. Enhanced depth imaging spectral-domain optical coherence tomography. Am J Ophthalmol. 2008;146(4):496–500.

Yamanari M, Makita S, Yasuno Y. Polarization-sensitive swept-source optical coherence tomography with continuous source polarization modulation. Opt Express. 2008;16(8):5892–906.

Yi J, Liu W, Chen S, et al. Visible light optical coherence tomography measures retinal oxygen metabolic response to systemic oxygenation. Light Sci Appl. 2015;4(9):e334.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Lee, K.M. (2021). Principles of OCT Imaging. In: Park, K.H., Kim, TW. (eds) OCT Imaging in Glaucoma. Springer, Singapore. https://doi.org/10.1007/978-981-16-1178-0_1

DOI: https://doi.org/10.1007/978-981-16-1178-0_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-1177-3

Online ISBN: 978-981-16-1178-0

eBook Packages: Medicine, Medicine (R0)