Abstract

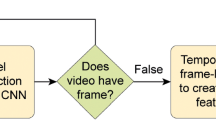

To detect images under different distortion conditions, this paper classifies image distortion types and proposes a method for video image distortion detection and classification based on convolutional neural networks (CNNs). The input video image is segmented into small image blocks, and the CNN's ability to learn features actively is exploited; positive and negative example equalization and an adaptive learning rate are introduced to address over-fitting and the local minimum problem. A SoftMax classifier predicts the distortion type of each image block, and the prediction for the whole video image is then obtained by the majority voting rule. An objective quality evaluation algorithm based on image distortion type and convolutional neural networks is also analyzed. Performance is tested on LIVE (a standard library of simulated distortions) and on an actual surveillance video library, and the accuracy obtained on the two differs little. The overall classification accuracy is significantly higher than that of other algorithms. Introducing positive and negative example equalization and the adaptive learning rate significantly improves the CNN classification accuracy. The test results also confirm that the method can actively learn image quality features and improve the accuracy of video image classification and detection. It is applicable to image quality detection under the various video image distortion conditions and has strong practicability and robustness.

Keywords

With the widespread adoption of video surveillance systems and the rapid growth in the number of network video users, video images are increasingly used to represent and communicate information. However, video images may suffer from various distortions caused by equipment damage, camera shake, or environmental factors, such as over-bright or over-dark anomalies caused by improper exposure settings. These distortions hinder people from obtaining information from video images and, especially in video surveillance systems, can seriously compromise monitoring. Therefore, in order to monitor and optimize video image acquisition, transmission, and processing, it is important to study the distortion detection of video images and to classify the distortions for timely handling.

Video image distortion detection can be divided into subjective detection and objective detection. Subjective detection is the most reliable method, but it is susceptible to objective conditions and subjective emotions, which makes the results unsatisfactory and time-consuming to obtain. Objective detection methods are therefore favored in practical applications. Currently, there are objective detection methods designed for different types of distortion. In the past, a Sobel operator based edge energy sharpness detection algorithm, an image analysis based color shift detection method, and an adaptive noise evaluation algorithm based on the gray values of pixels neighboring a noise point were used to detect blur, color cast, and noise in video images, respectively. Some researchers have proposed image processing based algorithms to detect blur, color cast, stripes, snow noise, and screen corruption in surveillance video images. Wang Zhengyou et al. used a line spread function (LSF) to obtain a point spread function (PSF) to detect defocused images. The above algorithms can only detect specific image distortion problems. When the distortion type of the video image changes, a new algorithm needs to be selected. Therefore, it is necessary to study a detection algorithm that applies to all types of video image distortion.

Here we briefly review the existing related methods and analyze them. Fang et al. proposed a no-reference quality evaluation method for contrast distortion, which is based on statistical characteristics of natural images, including mean, variance, skewness, kurtosis, and information entropy. Although such methods can predict the quality of the image, they have certain deficiencies. For example, their features are hand-crafted and numerous, so the computation time complexity is high and problems such as over-fitting arise easily. In contrast, the method proposed in this paper is based on convolutional neural networks. By reasonably designing and training a convolutional neural network, we can automatically learn quality-related features and thus achieve higher prediction performance. The network model can be applied directly after a single training run, and the computation time complexity is greatly reduced. This work is intended to provide a reference for similar research.

1 Convolutional Neural Network Contrast Distortion Image Quality Evaluation Method

1.1 Positive and Negative Example Equalization

Positive and negative example equalization artificially increases the amount of sample data, avoiding the drop in classification accuracy caused by an uneven sample distribution, and is the simplest and most commonly used method for preventing over-fitting. The actual surveillance video library used in the experiment contains 1640 normal videos, 455 blurred abnormal videos, 122 color cast abnormal videos, 23 over-bright abnormal videos, and 40 over-dark abnormal videos. Since the distribution of the different sample types is not uniform, positive and negative equalization of the surveillance video library is realized using two affine transformations, \( A_{r} \) (rotation) and \( A_{s} \) (scaling). It mainly includes the following steps:

(1) Calculate the ratio of the number of positive samples \( N_{p} \) to the number of negative samples \( N_{n} \):

$$ \rho = \frac{N_{p}}{N_{n}} \tag{1} $$

When the value of \( \rho \) is within a reasonable interval, the sample distribution can be considered sufficiently uniform, and the classification performance of the algorithm will not be affected. According to the experimental results, this reasonable interval is set to \( \tilde{\rho } = [\tfrac{\sqrt 2 }{2},\sqrt 2 ] \).

(2) When \( \rho \in \tilde{\rho } \), the operation stops; otherwise, the samples are subjected to positive and negative example equalization. Let \( K \) denote an original sample and \( K^{\prime} \) the new sample obtained after \( K \) undergoes the affine transformation:

$$ K^{\prime} = A_{r} A_{s} K \tag{2} $$

$$ A_{r} = \begin{bmatrix} \cos \theta & \sin \theta \\ - \sin \theta & \cos \theta \end{bmatrix},\;\theta \in [0,2\pi ],\qquad A_{s} = \begin{bmatrix} s & 0 \\ 0 & s \end{bmatrix},\;s \in [\tfrac{\sqrt 2 }{2},\sqrt 2 ] $$

where \( \theta \) and \( s \) denote the rotation angle and the scaling factor, respectively.

(3) Repeat step (2) with random values of \( \theta \) and \( s \) until \( \rho \in \tilde{\rho } \).
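The three steps can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: it assumes that each new sample is added to whichever class is currently under-represented, and it treats the 1640 normal and 455 blurred videos as the positive and negative classes purely as an example. The names `random_affine` and `equalize` are hypothetical.

```python
import math
import random

import numpy as np

# Reasonable interval for rho = N_p / N_n, as stated in the text.
RHO_MIN, RHO_MAX = math.sqrt(2) / 2, math.sqrt(2)


def random_affine(rng):
    """Draw one transform A_r A_s with a random rotation angle and scale."""
    theta = rng.uniform(0.0, 2.0 * math.pi)
    s = rng.uniform(RHO_MIN, RHO_MAX)
    a_r = np.array([[math.cos(theta), math.sin(theta)],
                    [-math.sin(theta), math.cos(theta)]])
    a_s = np.array([[s, 0.0], [0.0, s]])
    return a_r @ a_s


def equalize(n_pos, n_neg, rng):
    """Add transformed samples until rho lies in [RHO_MIN, RHO_MAX].

    Assumption: the new sample always goes to the under-represented class.
    Returns the final counts and the list of transforms that were drawn.
    """
    transforms = []
    while not (RHO_MIN <= n_pos / n_neg <= RHO_MAX):
        transforms.append(random_affine(rng))  # K' = A_r A_s K
        if n_pos / n_neg > RHO_MAX:
            n_neg += 1  # too few negatives
        else:
            n_pos += 1  # too few positives
    return n_pos, n_neg, transforms


rng = random.Random(0)
n_pos, n_neg, transforms = equalize(1640, 455, rng)
print(n_pos, n_neg, len(transforms))
```

In practice each matrix would be applied to the pixel coordinates of an existing sample to synthesize the new one; the sketch only tracks the counts and the transforms.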

1.2 Local Contrast Normalization

Local contrast normalization can avoid saturation of neurons, improve the generalization of the network, effectively eliminate the influence of brightness and contrast variation on the network, and greatly reduce the dependence between adjacent pixels. Before network training, local contrast normalization is applied to the extracted image blocks. Let \( I(i,j) \) be the brightness value of the image block at position (i, j) and \( I^{\prime}(i,j) \) the locally normalized brightness value; the normalization can be expressed as:

$$ I^{\prime}(i,j) = \frac{I(i,j) - \mu }{\sigma + C} $$

where \( i \in \{ 1,2, \ldots ,M\} ,\;j \in \{ 1,2, \ldots ,N\} \), M and N are the dimensions of the image block, C is a constant set to 1 that prevents the denominator from being 0, and \( \mu \) and \( \sigma \) are the mean and standard deviation of the image block pixel values, respectively.
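A minimal NumPy sketch of this normalization, assuming the form (I − μ)/(σ + C) implied by the definitions of μ, σ, and C; the function name `local_contrast_normalize` is illustrative only.

```python
import numpy as np


def local_contrast_normalize(block, c=1.0):
    """Normalize one image block by its own mean and standard deviation.

    c is the constant C from the text (set to 1) that keeps the
    denominator away from zero for flat blocks.
    """
    block = np.asarray(block, dtype=np.float64)
    mu = block.mean()
    sigma = block.std()
    return (block - mu) / (sigma + c)


rng = np.random.default_rng(0)
block = rng.integers(0, 256, size=(32, 32))
normalized = local_contrast_normalize(block)
flat = local_contrast_normalize(np.full((32, 32), 128))
```

A perfectly flat block has σ = 0, so without C the division would be undefined; with C = 1 the block simply maps to all zeros.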

1.3 LReL Function

Previous studies have suggested that cortical neurons are rarely at maximum activation and that the activation function can be modeled using the ReL function. A neuron with the nonlinear characteristic of the ReL function is called a ReLU. Using ReLUs in deep neural networks makes it easier for networks to obtain sparse representations of data, converge faster, and reduce training time. However, the ReLU has a potential disadvantage: because a gradient-based optimization algorithm does not adjust the weights of inactive neurons, the gradient of an inactive neuron is 0, so that neuron may never be activated again. Therefore, the LReL function has been proposed in the literature as an extension of the ReL function:

$$ f(x) = \max (0,x) + a\min (0,x) $$

where a is a small non-zero constant, so that the LReL function still yields a small non-zero gradient when the neuron is inactive, which enhances the robustness of the optimization algorithm. When a is 0, the LReL function reduces to the ReL function. In this study, the LReL function is used as the activation function of the convolution layers, with a = 0.01.
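The behavior described here can be illustrated with a short NumPy sketch, assuming the usual leaky form in which negative inputs are scaled by a; `lrel` and `lrel_grad` are illustrative names.

```python
import numpy as np


def lrel(x, a=0.01):
    """Leaky rectified linear function: x for x > 0, a * x otherwise."""
    x = np.asarray(x, dtype=np.float64)
    return np.where(x > 0, x, a * x)


def lrel_grad(x, a=0.01):
    """Gradient of lrel: 1 for active inputs, a for inactive ones."""
    x = np.asarray(x, dtype=np.float64)
    return np.where(x > 0, 1.0, a)


x = np.array([-2.0, 0.0, 3.0])
print(lrel(x))       # inactive inputs keep a small negative response
print(lrel_grad(x))  # the gradient is never exactly zero when a != 0
```

Setting `a=0` recovers the plain ReL function, whose gradient vanishes for inactive inputs.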

1.4 Training Network

The weights \( \theta = \left[ {W_{1} , \, W_{2} , \, \ldots , \, W_{l} } \right] \) of the convolutional neural network can be learned by minimizing the cost function. The mathematical expression of the cost function is:

$$ J(\theta ) = - \frac{1}{N}\sum\limits_{i = 1}^{N} {\sum\limits_{k = 1}^{K} {1\{ y^{(i)} = k\} \log P(y^{(i)} = k \mid x^{(i)} ;\theta )} } $$

where N is the number of training samples, K is the total number of sample categories, and 1{·} is the indicator function, which takes the value 1 for a true proposition and 0 for a false one. \( x^{(i)} \) and \( y^{(i)} \) are the i-th input image block and its label. During network training, stochastic gradient descent and backpropagation are used to minimize the cost function and update the weights. A momentum term is introduced to speed up learning. The momentum term is initialized to \( P_{s} = 0.9 \), decreases linearly to \( P_{s} = 0.5 \) over 10 training epochs, and then remains unchanged. The learning rate \( \varepsilon_{0} \) is initialized to 0.1 and adapts to the value of the loss function: it is halved whenever the cost function value reaches a plateau.

Training is performed in mini-batches, with a batch size of 100 samples per weight update.
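The training schedule described above can be sketched as follows. This is a hedged illustration, not the paper's exact update rule: the plateau test (`patience`, `tol`) and the classical momentum update are assumptions, and all function names are hypothetical.

```python
import numpy as np


def momentum_at(epoch, start=0.9, end=0.5, ramp_epochs=10):
    """Momentum P_s: linear change from start to end, then constant."""
    if epoch >= ramp_epochs:
        return end
    return start + (end - start) * epoch / ramp_epochs


def adapt_learning_rate(lr, losses, patience=3, tol=1e-4):
    """Halve lr when the loss has plateaued.

    Assumption: a plateau means the best loss of the last `patience`
    epochs is not better than the earlier best by at least `tol`.
    """
    if len(losses) > patience:
        recent_best = min(losses[-patience:])
        earlier_best = min(losses[:-patience])
        if recent_best > earlier_best - tol:
            return lr / 2.0
    return lr


def sgd_momentum_step(w, velocity, grad, lr, momentum):
    """One classical momentum update on a mini-batch gradient."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


w, v = sgd_momentum_step(np.array([1.0]), np.zeros(1), np.array([2.0]),
                         lr=0.1, momentum=momentum_at(0))
```

In a full training loop, `grad` would be the gradient of the cost averaged over a mini-batch of 100 image blocks, and `adapt_learning_rate` would be called once per epoch with the history of validation or training losses.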

The last fully connected layer of the convolutional neural network uses the dropout method: during training, the output of each hidden unit is randomly set to 0 with a probability of 0.5, and when the trained network is used to predict the category of a test sample, the output of each hidden unit is halved. Dropout reduces complex co-adaptation between neurons, forcing them to learn more robust features and preventing over-fitting. In addition, because a different dropout mask is drawn each time, dropout acts as a form of model averaging in deep neural network structures. After the network is trained, the predicted class of an input image is determined by the majority voting rule, that is, by the class predicted for the majority of the image blocks of that image.
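Both mechanisms are simple to express in code. The sketch below follows the description in the text (zeroing with probability 0.5 during training, halving at test time, then voting over block predictions); the function names and the class labels in the example are illustrative only.

```python
from collections import Counter

import numpy as np


def dropout_train(hidden, rng, p_drop=0.5):
    """Training: zero each hidden output independently with prob. p_drop."""
    mask = rng.random(hidden.shape) >= p_drop
    return hidden * mask


def dropout_test(hidden, p_drop=0.5):
    """Prediction: keep every unit but scale outputs by (1 - p_drop)."""
    return hidden * (1.0 - p_drop)


def majority_vote(block_predictions):
    """Image-level class: the class predicted for most image blocks.

    Ties are resolved in favor of the class seen first (an assumption;
    the text does not say how ties are handled).
    """
    return Counter(block_predictions).most_common(1)[0][0]


rng = np.random.default_rng(0)
hidden = np.ones(8)
train_out = dropout_train(hidden, rng)
test_out = dropout_test(hidden)
label = majority_vote(["blur", "normal", "blur", "color cast", "blur"])
```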

2 Image Quality Objective Evaluation Algorithm Based on Image Distortion Type and Convolutional Neural Network

2.1 Convolutional Neural Network Design

In recent years, convolutional neural networks have achieved great success in computer vision problems, greatly improving prediction performance. In image quality evaluation, quality evaluation algorithms based on convolutional neural networks have also been proposed, verifying the effectiveness of convolutional neural networks for quality assessment problems. The convolutional neural network designed in this paper takes an image as input and consists of three convolution-pooling units and three fully connected layers, namely convolutional layers 1~3, pooling layers 1~3, and fully connected layers 1~3. The network output is a score indicating the quality of the image. In the network, we use the ReLU function as the activation function of the neurons:

$$ C_{j + 1} = \max (0,W_{j} \otimes C_{j} + B_{j} ) $$

where \( C_{j + 1} \) denotes the feature map extracted by the j-th layer of the network, \( W_{j} \) the weights of the j-th layer, \( B_{j} \) the bias parameter, \( \otimes \) the convolution operation, and max the maximum function. For pooling we select max pooling, i.e.:

$$ C_{j + 1} = \mathop {\max }\limits_{R} C_{j} $$

where R denotes a pooling region of the feature map and \( C_{j + 1} \) is the maximum value within that region.
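One convolution-pooling unit of this kind can be sketched in plain NumPy. The 5 × 5 kernel and 2 × 2 pooling region below are hypothetical choices for illustration, since the layer configuration is given only in Table 1; like most deep learning frameworks, the sketch computes cross-correlation (no kernel flip).

```python
import numpy as np


def conv2d_valid(feature, kernel, bias=0.0):
    """'Valid' 2-D convolution (cross-correlation) of one feature map."""
    kh, kw = kernel.shape
    h, w = feature.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(feature[i:i + kh, j:j + kw] * kernel) + bias
    return out


def relu(x):
    """max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


def max_pool(feature, size=2):
    """Non-overlapping max pooling over size x size regions R."""
    h, w = feature.shape
    h, w = h - h % size, w - w % size  # drop rows/cols that do not fit
    regions = feature[:h, :w].reshape(h // size, size, w // size, size)
    return regions.max(axis=(1, 3))


rng = np.random.default_rng(0)
block = rng.standard_normal((32, 32))      # one 32 x 32 gray image block
kernel = rng.standard_normal((5, 5))       # hypothetical 5 x 5 kernel
feature = relu(conv2d_valid(block, kernel, bias=0.1))
pooled = max_pool(feature)
print(feature.shape, pooled.shape)  # (28, 28) (14, 14)
```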

The specific configuration of each layer of the network is given in Table 1. As can be seen from the table, the input is a gray image block with a size of 32 × 32, and the output of the network is the output of the last fully connected layer, a single value that represents the quality of the image.

2.2 Convolutional Neural Network Training

2.2.1 Preparing Training Samples

After the convolutional neural network structure is defined, we train the network so that it can evaluate quality. We first need to prepare the training samples and the corresponding quality labels. We use the existing contrast distortion image library CID2013 to train our network model. CID2013 contains a total of 400 contrast-distorted images, each with a resolution of 768 × 512 and a corresponding subjective quality score. Dividing each image into 32 × 32 blocks yields 384 image blocks per image, so the 400 images yield 153,600 image blocks. The SSIM value between each distorted image block and its original image block is calculated and used as the quality label of the distorted block, so that the convolutional neural network can be trained.
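The block counts follow directly from the image size: 768 / 32 = 24 columns and 512 / 32 = 16 rows give 24 × 16 = 384 blocks per image, and 400 × 384 = 153,600 blocks in total. A small NumPy sketch of the non-overlapping split (the function name `split_into_blocks` is illustrative):

```python
import numpy as np


def split_into_blocks(image, size=32):
    """Split a 2-D image into non-overlapping size x size blocks.

    Rows and columns that do not fill a whole block are discarded.
    Blocks are returned in row-major order.
    """
    h, w = image.shape
    rows, cols = h // size, w // size
    cropped = image[:rows * size, :cols * size]
    blocks = cropped.reshape(rows, size, cols, size).swapaxes(1, 2)
    return blocks.reshape(rows * cols, size, size)


image = np.arange(512 * 768, dtype=np.float64).reshape(512, 768)
blocks = split_into_blocks(image)
print(blocks.shape)        # (384, 32, 32)
print(400 * len(blocks))   # 153600
```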

2.2.2 Network Training

After preparing the training data, we feed it into the convolutional neural network to train the network. First, the loss function J of the network training is defined as a norm of the difference between the network output f(x, W) and the quality label y, where x is the input training image block, W denotes the weights of the network, y is the quality label of x (its SSIM value), and f(x, W) is the output of the convolutional neural network for input x. The entire convolutional neural network is trained by minimizing J, mainly using stochastic gradient descent (SGD) with backpropagation to update the weights iteratively.

2.2.3 Image Quality Prediction

After the convolutional neural network is trained, it is used to evaluate the quality of new contrast-distorted images. Since training uses 32 × 32 image blocks, a new image is divided into non-overlapping 32 × 32 image blocks, and each block is fed into the network, which outputs a quality score for it, denoted \( q_{i} \) for the i-th image block. Assuming the image has a total of N image blocks, the quality of the entire image is defined as the mean quality of all its blocks:

$$ q = \frac{1}{N}\sum\limits_{i = 1}^{N} {q_{i} } $$
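The prediction step can be sketched as follows. The `score_block` argument is a hypothetical stand-in for the trained network; here a trivial function (the block mean) is used only so that the example runs.

```python
import numpy as np


def image_quality(image, score_block, size=32):
    """Average the per-block scores q_i over all non-overlapping blocks."""
    h, w = image.shape
    scores = [
        score_block(image[i:i + size, j:j + size])
        for i in range(0, h - size + 1, size)
        for j in range(0, w - size + 1, size)
    ]
    return float(np.mean(scores))


# Placeholder scorer: a real system would call the trained CNN here.
def dummy_score(block):
    return float(block.mean())


image = np.zeros((64, 64))
image[:, 32:] = 1.0  # right half brighter than left half
quality = image_quality(image, dummy_score)
```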

3 Test Results and Analysis

3.1 Experimental Data

In image quality evaluation research, three statistical indicators are usually used to evaluate the prediction performance of a proposed algorithm: the Spearman rank-order correlation coefficient (SROCC), the Kendall rank-order correlation coefficient (KROCC), and the Pearson linear correlation coefficient (PLCC). SROCC, KROCC, and PLCC measure the consistency between the quality scores produced by the algorithm and the subjective quality scores given by human testers. The closer the value is to 1, the better the performance of the algorithm.
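For reference, the three indicators can be computed with a few lines of NumPy. This sketch assumes there are no tied scores (ties would need average ranks for SROCC and a tie-corrected variant of KROCC); the example scores are made up for illustration.

```python
import numpy as np


def plcc(x, y):
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(x, y)[0, 1])


def _ranks(values):
    """Ranks 1..n of the values (assumes no ties)."""
    order = np.argsort(values)
    ranks = np.empty(len(values), dtype=np.float64)
    ranks[order] = np.arange(1, len(values) + 1)
    return ranks


def srocc(x, y):
    """Spearman coefficient: Pearson correlation of the ranks."""
    return plcc(_ranks(np.asarray(x)), _ranks(np.asarray(y)))


def krocc(x, y):
    """Kendall tau-a: (concordant - discordant) / total pairs."""
    x, y = np.asarray(x, dtype=np.float64), np.asarray(y, dtype=np.float64)
    n = len(x)
    s = 0.0
    for i in range(n - 1):
        s += np.sum(np.sign(x[i + 1:] - x[i]) * np.sign(y[i + 1:] - y[i]))
    return float(2.0 * s / (n * (n - 1)))


predicted = [0.2, 0.5, 0.4, 0.9]    # hypothetical algorithm scores
subjective = [1.0, 2.0, 3.0, 4.0]   # hypothetical subjective scores
print(srocc(predicted, subjective), krocc(predicted, subjective),
      plcc(predicted, subjective))
```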

The actual surveillance video library comes from a safe city project and includes 1640 normal videos, 455 blurred abnormal videos, 122 color cast abnormal videos, 23 over-bright abnormal videos, and 40 over-dark abnormal videos. The over-bright and over-dark anomalies in the video library are caused by improper exposure settings and are easy to discriminate by subjective detection. The color cast videos exhibit many kinds of color cast, such as reddish, yellowish, greenish, and bluish, which are caused by the error between the image captured by the camera and the real color of the object. The causes of blurred video are the most complicated and include motion blur, defocus blur, gray-layer occlusion, and other factors. In the experiment, the video images are extracted from the videos of the actual surveillance video library and have different resolutions, and the positive and negative samples are equalized using the method described in Section 1.1 to obtain a more evenly distributed experimental sample. Some of the video images from the actual surveillance video library are shown in Fig. 1.

3.2 Evaluation Method

Following the traditional approach to image classification, this paper calculates the classification error between the predicted category and the true category of each image and uses the confusion matrix to obtain the classification accuracy for each class and for all images, in order to analyze the experimental results. The experimental samples are randomly divided into three subsets: 60% for training, 20% for validation, and the remaining 20% for testing. Cross-validation is used for training, and the training, validation, and test sets are strictly separated by image content.
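The evaluation protocol can be sketched as below. Splitting by image identifier (rather than by block) is one way to keep the three sets separated by content; this detail, like the function names, is an assumption made for illustration.

```python
import random

import numpy as np


def split_ids(image_ids, rng, train=0.6, val=0.2):
    """Randomly split image ids into train / validation / test lists."""
    ids = list(image_ids)
    rng.shuffle(ids)
    n_train = int(round(train * len(ids)))
    n_val = int(round(val * len(ids)))
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


def confusion_matrix(true_labels, predicted_labels, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        cm[t, p] += 1
    return cm


def accuracies(cm):
    """Per-class and overall accuracy (every class must occur at least once)."""
    per_class = cm.diagonal() / cm.sum(axis=1)
    overall = cm.trace() / cm.sum()
    return per_class, float(overall)


train_ids, val_ids, test_ids = split_ids(range(100), random.Random(0))
cm = confusion_matrix([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], n_classes=3)
per_class, overall = accuracies(cm)
```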

3.3 Simulation Standard Image Library Experimental Results Analysis

To describe more intuitively which types are easily confused during classification, the test on the simulated standard image library (LIVE) focuses on the confusion matrix between the classes. The columns of the confusion matrix are the distortion types predicted by the algorithm, the rows are the true distortion types, and each value in the matrix represents the probability of confusion between the distortion types of the corresponding row and column (see Table 2). The proposed method is compared with BIQI, DIIVINE, and BRISQUE in terms of the classification accuracy for each type of distorted image in the simulated standard image library and the overall classification accuracy. The classification accuracy of the proposed method is better than that of the other three methods. The experimental results show that the proposed method can overcome the influence of image content on image distortion detection, actively learns image quality related features, and recognizes the various types of distorted images well.

3.4 Analysis of Experimental Results of Actual Monitoring Video Library

As above, the confusion matrix of the classification predictions for the various image types in the test set of the actual surveillance video library is computed. The per-class and overall classification accuracy of the method before and after introducing positive and negative example equalization and the adaptive learning rate are also compared (see Table 3). The method shows good consistency with subjective judgment for the classification and detection of video images, and the overall classification accuracy reaches 92.88%. After introducing positive and negative example equalization and the adaptive learning rate, the network performance improves greatly. The experimental results show that the proposed method achieves good classification and detection results on actual surveillance video images and has strong practicability.

3.5 Parameter Comparison Experiment Results Analysis

Using the controlled-variable method, the experiment uses the actual surveillance video library to examine the influence of the image blocks and the convolution kernel size on the classification performance of the proposed method. The image blocks are studied in two respects: the size of the image blocks and the number of image blocks.

(1) Image block size. The experiment compares the effect of image blocks of different sizes on the classification accuracy. Without overlapping sampling, the number of image blocks changes with the block size: the larger the block, the fewer the blocks, which violates the controlled-variable principle. Therefore, overlapping sampling is adopted in the experiment with the sampling step size fixed at 32; ignoring the effect of the image edges, the number of blocks then remains substantially unchanged as the block size changes. When the block size is 56 × 56, the classification accuracy is highest, at 92.88%.

(2) Sampling step size. To observe the influence of the number of image blocks on network performance, the block size is fixed at 56 × 56 in the experiment, and different numbers of image blocks are obtained by changing the sampling step size. The overall classification accuracy is highest when the sampling step size is 32.

(3) Convolution kernel size. The effect of the convolution kernel size on the performance of the algorithm is tested without changing the rest of the network structure. The experimental results show that the overall classification accuracy remains high for the different kernel sizes and varies little with the kernel size, that is, the size of the convolution kernel has little effect on the performance of the algorithm.
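The effect of fixing the sampling step can be checked with simple arithmetic. The sketch below counts the blocks obtained with a step of 32 for several block sizes; the 512 × 768 frame size is a hypothetical example, since the surveillance videos have different resolutions.

```python
def block_grid(height, width, block, stride=32):
    """Number of block rows and columns for overlapping sampling."""
    rows = (height - block) // stride + 1
    cols = (width - block) // stride + 1
    return rows, cols


# Hypothetical 512 x 768 frame, sampling step fixed at 32.
for block in (32, 48, 56, 64):
    rows, cols = block_grid(512, 768, block)
    print(block, rows, cols, rows * cols)
```

With the step fixed, the count changes only at the image border (one row and one column fewer once the block exceeds the step), whereas non-overlapping sampling would reduce the count roughly with the square of the block size.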

Although FTQM refers to only part of the original information, we can speculate that this part of the information is sufficient to evaluate the quality of contrast-distorted images. In contrast, the full-reference methods can refer to all of the information, which contains a great deal of redundancy; this redundant information is ineffective for contrast distortion, so the overall evaluation performance is degraded. Among the no-reference evaluation methods, the performance of all methods is reduced. The proposed method exceeds the FANG method, with an accuracy close to 0.5, achieving excellent prediction performance. The three methods NIQE, IL-NIQE, and BQMS have poor prediction performance, with results all below 0.3. The final analysis found that the full-reference and reduced-reference methods achieve high prediction accuracy, with indicators exceeding 0.9. Among the no-reference methods, the FANG method has the lowest performance, IL-NIQE achieves better prediction performance, and the prediction accuracy of NIQE and BQMS is moderate. The proposed method achieves the best prediction performance, with an accuracy of about 0.6.

4 Conclusion

The key to video image quality detection and classification and to image quality evaluation is the quality of feature extraction. Some two-step no-reference image quality evaluation algorithms, such as BIQI, DIIVINE, and BRISQUE, must first identify the distortion type of the image and then evaluate the image quality. BIQI obtains the image distortion type with an SVM classifier based on the statistical characteristics of distorted images. DIIVINE decomposes the distorted image with a wavelet transform, fits the wavelet coefficients with a Gaussian scale mixture model, and uses the fitting parameters as feature vectors to identify the distortion. BRISQUE extracts spatial statistical features based on the distribution of normalized luminance coefficients and uses a support vector machine to obtain the image distortion category. Some researchers use natural images and their corresponding normalized luminance images and local standard deviation images as input, use autocorrelation mutual information to describe the correlation between adjacent pixels of the input images, and combine multi-directional and multi-scale analysis to extract mutual information features and thereby classify distorted images. In addition, there are other methods for extracting features of distorted images, such as extracting the mean, variance, gradient, entropy, and other perceptual features of the image. This paper introduces an image quality evaluation method specifically for contrast distortion. In this method, we design a convolutional neural network with three convolutional layers, three pooling layers, and three fully connected layers. The network automatically extracts features related to contrast distortion to predict image quality. Finally, we verify the effectiveness of the proposed method and show that it outperforms comparable quality evaluation methods.

However, the experimental results show that the proposed method still has room for improvement in prediction performance, so in future work we will further study better evaluation methods for contrast distortion and continue to improve the accuracy of quality prediction. In summary, the convolutional neural network (CNN) algorithm is used to detect and identify the four types of distortion that appear in actual surveillance video images: over-bright anomalies, over-dark anomalies, color cast, and blur. To address the over-fitting and local minimum problems, positive and negative example equalization and an adaptive learning rate are introduced to improve the learning ability of the network, which gives better convergence and robustness.

References

Fan, C., Zhang, Y., Feng, L., et al.: No reference image quality assessment based on multi-expert convolutional neural networks. IEEE Access 6, 8934–8943 (2018)

Wang, H., Fu, J., Lin, W., et al.: Image quality assessment based on local linear information and distortion-specific compensation. IEEE Trans. Image Process. 26(2), 915–926 (2017)

Eerola, T., Lensu, L., Kälviäinen, H., et al.: Study of no-reference image quality assessment algorithms on printed images. J. Electron. Imaging 23(6), 061106 (2014)

Visual quality assessment: recent developments, coding applications and future trends. APSIPA Trans. Signal Inf. Process. 2, e4 (2013)

Ma, L.J., Zhao, C.H.: An effective image fusion method based on nonsubsampled contourlet transform and pulse coupled neural network. Adv. Mater. Res. 756–759, 3542–3548 (2013)

Wang, Z., Ma, Y., Cheng, F., et al.: Review of pulse-coupled neural networks. Image Vis. Comput. 28(1), 5–13 (2010)

Zhang, L., Zhang, L., Bovik, A.C.: A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 24(8), 2579–2591 (2015)

Chetouani, A., Beghdadi, A., Deriche, M.: A hybrid system for distortion classification and image quality evaluation. Signal Process. Image Commun. 27(9), 948–960 (2012)

Yong, C., Qiang, F., Feng, S.: Sparse image fidelity evaluation based on wavelet analysis. J. Electron. Inf. Technol. 37, 2055–2061 (2015)

Wang, H.: A new algorithm for integrated image quality measurement based on wavelet transform and human visual system. Proc. SPIE Int. Soc. Opt. Eng. 6034, 60341K-1–60341K-7 (2006)

Chang, C.C., Lin, M.H., Hu, Y.C.: A fast and secure image hiding scheme based on lsb substitution. Int. J. Pattern Recogn. Artif. Intell. 16(04), 399–416 (2002)

Singh, P., Chandler, D.M.: F-MAD: a feature-based extension of the most apparent distortion algorithm for image quality assessment. Proc. SPIE Int. Soc. Opt. Eng. 8653, 86530I (2013)

Mou, X., Imai, F.H., Xiao, F., et al.: Image quality assessment based on edge. In: Digital Photography VII, Proc. SPIE 7876, 78760N (2011)

Zhu, L.: Image quality evaluation method based on structural similarity. In: Proceedings of SPIE - The International Society for Optical Engineering, vol. 6790, pp. 67905L-67905L-10 (2007)

Hassen, R., Wang, Z., Salama, M.M.A.: Image sharpness assessment based on local phase coherence. IEEE Trans. Image Process. 22(7), 2798–2810 (2013)

Dony, R.D., Coblentz, C.L., Nabmias, C., et al.: Compression of digital chest radiographs with a mixture of principal components neural network: evaluation of performance. RadioGraphics 6(6), 1481–1488 (1996)

Oliveira, S.A.F., Alves, S.S.A., Gomes, J.P.P., et al.: A bi-directional evaluation-based approach for image retargeting quality assessment. Comput. Vis. Image Underst. 168, 172–181 (2017)

Dendi, S.V.R., Dev, C., Kothari, N., et al.: Generating image distortion maps using convolutional autoencoders with application to no reference image quality assessment. IEEE Signal Process. Lett. 26, 1 (2018)

Bin, J., Jiachen, Y., Zhihan, L., et al.: Wearable vision assistance system based on binocular sensors for visually impaired users. IEEE Internet Things J. 6, 1 (2018)

Hui, C., Chaofeng, L.I.: Stereoscopic color image quality assessment via deep convolutional neural network. J. Front. Comput. Sci. Technol. 12, 1315–1322 (2018)

Rehman, A.U, Rahim, R., Nadeem, M.S., et al.: End-to-end Trained CNN Encode-Decoder Networks for Image Steganography (2017)

Ding, Y., Deng, R., Xie, X., et al.: No-reference stereoscopic image quality assessment using convolutional neural network for adaptive feature extraction. IEEE Access 6, 37595–37603 (2018)

Long, M., Ouyang, C., Liu, H., et al.: Image recognition of Camellia oleifera diseases based on convolutional neural network and transfer learning. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 34(18), 194–201 (2018)

Li, Y., Liu, D., Li, H., et al.: Convolutional neural network-based block up-sampling for intra frame coding. IEEE Trans. Circuits Syst. Video Technol. 28(9), 2316–2330 (2017)

Jia, C., Wang, S., Zhang, X., et al.: Content-aware convolutional neural network for in-loop filtering in high efficiency video coding. IEEE Trans. Image Process. 28, 3343–3356 (2019)

Ahn, N., Kang, B., Sohn, K.A.: Image distortion detection using convolutional neural network (2018)

Jongyoo, K., Anh-Duc, N., Sanghoon, L.: Deep CNN-based blind image quality predictor. IEEE Trans. Neural Netw. Learn. Syst. 30, 1–14 (2018)

Mngenge, N.A.: An adaptive quality-based fingerprints matching using feature level 2 (minutiae) and extended features (pores) (2013)

Miao, Z., Xu, H., Chen, Y., et al.: An Intelligent computational algorithm based on neural network for spatial data mining in adaptability evaluation. Proc. SPIE Int. Soc. Opt. Eng. 7146, 71461 (2009)

Yuan, C.H., Zhang, M., Gao, S.W., et al.: Research on method of state evaluation and fault analysis of dry-type power transformer based on self-organizing neural network. Appl. Mech. Mater. 303–306, 562–566 (2013)

Acknowledgement

This work was jointly supported by the Key Research and Development Project of Ganzhou, “Research and Application of Key Technologies of License Plate Recognition and Parking Space Guidance in Intelligent Parking Lot”, and by the Science and Technology Research Project of the Education Department of Jiangxi Province, China (Grant No. GJJ181265).

© 2020 Springer Nature Singapore Pte Ltd.

Deng, L., Gu, F., Xie, S. (2020). Research on Intelligent Algorithm for Image Quality Evaluation Based on Image Distortion Type and Convolutional Neural Network. In: Li, K., Li, W., Wang, H., Liu, Y. (eds) Artificial Intelligence Algorithms and Applications. ISICA 2019. Communications in Computer and Information Science, vol 1205. Springer, Singapore. https://doi.org/10.1007/978-981-15-5577-0_61

DOI: https://doi.org/10.1007/978-981-15-5577-0_61

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-5576-3

Online ISBN: 978-981-15-5577-0

eBook Packages: Computer Science, Computer Science (R0)