Abstract

One of the main problems of the photometric stereo method is that it requires several measurements, because the object must be illuminated by light sources from different directions. A solution to this problem is the color photometric stereo method, which conducts one-shot measurements by illuminating the object simultaneously with lights of different wavelengths. However, the classic color photometric stereo method only allows measurements of white objects; estimating the surface normal of a multicolored object with this method is theoretically impossible. Therefore, a multicolored object must be converted into a single-colored object before photometric stereo is applied. In this study, we employ intrinsic image decomposition for this conversion. Intrinsic image decomposition produces an intrinsic image that is not affected by the reflectance. Since this intrinsic image is equivalent to an image of a white object, we can obtain the surface normal by applying the conventional photometric stereo algorithm to it. To demonstrate the effectiveness of this approach, we employ a measurement device that realizes the multispectral photometric stereo method with seven colors instead of the classic color photometric stereo method with three colors.

1 Introduction

The photometric stereo method estimates surface normals from the brightness of several images captured while the direction of the light source is changed. Therefore, the photometric stereo method is not suitable for measuring a moving object. To measure the shape of a moving object, the color photometric stereo method, which employs several colored light sources, was developed. This method places red, green, and blue light sources in three different directions, and they illuminate the target object simultaneously. However, common color photometric stereo methods cannot be applied to objects that have multiple albedos. This paper employs intrinsic image decomposition to overcome this problem. Intrinsic image decomposition decomposes an image into an illumination image and an albedo image. The decomposition is based on the Retinex theory, which models the human perception that strong edges are caused by albedo differences. The illumination image is independent of the albedo. Because our method applies intrinsic image decomposition beforehand, it can handle objects with multiple albedos, which common color photometric stereo methods cannot.

2 Related Work

The photometric stereo method [35, 43] estimates the surface normal of an object by illuminating the object and analyzing the resulting shading on its surface. This method requires capturing at least three images under different light source directions, one after another, so it cannot measure a dynamic object. This problem can be resolved by the color photometric stereo method. In this method, red, green, and blue light sources illuminate the object simultaneously, and a single image is captured with an RGB color camera. This one-shot capture enables the measurement of a dynamic object.

The color photometric stereo method [8, 20, 44] (also known as shape-from-color) was developed in the 1990s. Since then, various studies [1, 3–7, 10, 13, 14, 18–21, 29, 32, 33, 40, 41] have been conducted on it. However, the color photometric stereo method has many inherent problems, which researchers have struggled with from the beginning until recently. The principal problem of the color photometric stereo method is that it can only be used with white objects. This problem is unavoidable as long as the surface normal is estimated from three light sources of different colors.

Recently, various techniques have been proposed to apply the color photometric stereo method to multicolored objects. Roubtsova et al. [33] applied the color photometric stereo method to objects with arbitrary BRDFs (bidirectional reflectance distribution functions) by incorporating Helmholtz stereopsis. However, the principle of this method does not allow real-time measurement, so optical flow is required to measure a dynamic object. Kim et al. [19] and Gotardo et al. [12] also tracked dynamic objects using optical flow and estimated the surface shape from several images taken at different times. Fyffe et al. [10] proposed a color photometric stereo method that employs six-band cameras and three white light sources. This method measures the reflectance of various objects in advance to build a database, from which four bases are calculated. With this technique, an analytic solution can be obtained, because there are six unknowns and six equations. Anderson et al. [1] estimated the object color using surface normals obtained by multi-view stereo. Their technique incorporates a region segmentation framework in which the number of regions is determined automatically based on the Bayesian information criterion. Chakrabarti et al. [4] computed a histogram of candidate object colors, selected the few colors that gained the most votes, and estimated the normal by assuming that the object is composed of only these colors. Jiao et al. [18] divided an image into superpixels and estimated the normal by assuming that the object color inside each superpixel is uniform. Miyazaki et al. [25] formulated a cost function for multispectral photometric stereo with several constraints and obtained the surface normal by minimizing it.

In this paper, the problem of the color photometric stereo method is solved with an approach different from those of previous studies. Most color photometric stereo methods use three lights of red, green, and blue colors and observe the object with an RGB color camera. In our study, seven lights with different wavelengths illuminate the object, which is then observed by a seven-band multispectral camera [25]. Conventional color photometric stereo fails on multicolored objects, whereas our method overcomes this problem using intrinsic image decomposition. The method of Miyazaki et al. [25] requires parameter tuning, because an appropriate weight must be chosen for each constraint of its cost function. Our method is less affected by this problem: after intrinsic image decomposition, conventional photometric stereo, which needs no parameter tuning, can be applied.

3 Image Formulation

The fundamental theory is given in the literature [28, 30]; here we briefly explain the formulation of the problem. Suppose that a single parallel light source (a point light source infinitely far away) whose spectral distribution is a delta function illuminates the object. Then the pixel brightness \(I_c\) of channel c can be represented as follows:

\[ I_c = A_c \, \mathbf{n} \cdot \mathbf{l}_c. \tag{1} \]

Here, \(\mathbf {n}\) is the surface normal vector and \(\mathbf {l}_c\) is the light source direction vector of channel c. Hereinafter, we call \(A_c\) the albedo. Note that the camera sensitivity and the light source brightness are included in \(A_c\).

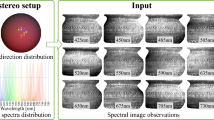

As shown in Fig. 1, in this study we capture a multicolored object in seven channels. Following Eq. (1), the brightness observed in each channel is

\[ I_c = A_c \, \mathbf{n} \cdot \mathbf{l}_c, \qquad c = 0, 1, \ldots, 6. \tag{2} \]

The surface normal \(\mathbf {n}\) is a 3D vector; however, it has only two degrees of freedom, because it is constrained to be a unit vector. The albedo \(A_c\) is represented by seven parameters. Thus there are seven equations, as shown in Eq. (2), and nine unknown parameters (\(A_0\), \(A_1\), ..., \(A_6\), \(n_x\), \(n_y\), \(n_z\), s.t. \(n_x^2+n_y^2+n_z^2=1\); namely, seven for the albedo and two for the surface normal). Therefore, color photometric stereo without any assumption or constraint is an ill-posed problem (Fig. 2).
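The counting argument above can be checked numerically. The sketch below assumes the Lambertian model of Eq. (1), ignores shadows, and uses seven made-up light directions and albedos (all values hypothetical). It builds two scenes with different surface normals whose seven channel brightnesses are identical, so the measurements alone cannot tell them apart.

```python
import numpy as np


def unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def brightness(albedo, normal, lights):
    """Lambertian model I_c = A_c * (n . l_c), one value per channel c."""
    return albedo * (lights @ normal)


# Seven hypothetical light directions, one per channel (rows are l_c).
lights = np.array([unit(v) for v in [
    (0.3, 0.0, 1.0), (0.15, 0.26, 1.0), (-0.15, 0.26, 1.0), (-0.3, 0.0, 1.0),
    (-0.15, -0.26, 1.0), (0.15, -0.26, 1.0), (0.0, 0.0, 1.0)]])

# Scene 1: one normal with seven hypothetical albedos.
n1 = unit((0.1, 0.2, 1.0))
A1 = np.array([0.9, 0.5, 0.7, 0.3, 0.8, 0.6, 0.4])
I1 = brightness(A1, n1, lights)

# Scene 2: a different normal; choose albedos so that every channel matches.
n2 = unit((-0.2, 0.1, 1.0))
A2 = I1 / (lights @ n2)  # valid because every shading term n2 . l_c > 0 here
I2 = brightness(A2, n2, lights)

print(np.allclose(I1, I2))  # True: identical observations
print(np.allclose(n1, n2))  # False: different surface normals
```

The same construction works for any normal whose shading terms are all positive, which is why an extra assumption (such as a white object) or an extra constraint is needed.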

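The most commonly used assumption is to limit the color of the target object to white (\(A_0=A_1=\ldots =A_6\)). Let \(A\) denote this common albedo and set \(\mathbf {s} = A \mathbf {n}\). If we ignore shadows, the albedo-scaled surface normal \(\mathbf {s}\) can be solved directly in the least-squares sense:

\[ \mathbf{s} = (\mathbf{L}^\top \mathbf{L})^{-1} \mathbf{L}^\top \mathbf{I}, \qquad \mathbf{L} = (\mathbf{l}_0, \ldots, \mathbf{l}_6)^\top, \quad \mathbf{I} = (I_0, \ldots, I_6)^\top, \tag{3} \]

after which \(\mathbf{n} = \mathbf{s}/\|\mathbf{s}\|\) and \(A = \|\mathbf{s}\|\).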

As shown above, color photometric stereo for white objects, in other words conventional photometric stereo, can solve for the surface normal directly, without iterative optimization or additional constraints such as smoothness constraints. However, this paper targets multicolored objects. Therefore, we have to convert the image of a multicolored object into an image of a single-colored object.
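A minimal NumPy sketch of the closed-form solution in Eq. (3) follows. It assumes a white Lambertian object, known unit light directions stored as the rows of a C×3 array (`lights`, our own name), and no shadows. With seven lights the system is over-determined, so this sketch solves it in the least-squares sense with `np.linalg.lstsq`. The light directions in the usage example are hypothetical.

```python
import numpy as np


def photometric_stereo(intensities, lights):
    """Least-squares photometric stereo for a white Lambertian object.

    intensities: array of shape (..., C), one brightness per channel/light.
    lights:      array of shape (C, 3), unit light direction l_c per row.
    Returns unit normals of shape (..., 3) and albedo of shape (...).
    """
    I = np.asarray(intensities, dtype=float)
    flat = I.reshape(-1, I.shape[-1]).T                # (C, P), pixels as columns
    s, *_ = np.linalg.lstsq(lights, flat, rcond=None)  # (3, P), s = A * n
    s = s.T.reshape(I.shape[:-1] + (3,))
    norm = np.linalg.norm(s, axis=-1, keepdims=True)   # |s| is the albedo A
    normals = s / np.maximum(norm, 1e-12)              # avoid division by zero
    return normals, norm[..., 0]


# Usage with seven hypothetical light directions and one synthetic pixel.
lights = np.array([[0.3, 0.0, 1.0], [0.15, 0.26, 1.0], [-0.15, 0.26, 1.0],
                   [-0.3, 0.0, 1.0], [-0.15, -0.26, 1.0], [0.15, -0.26, 1.0],
                   [0.0, 0.0, 1.0]])
lights /= np.linalg.norm(lights, axis=1, keepdims=True)
n_true = np.array([0.2, -0.1, 1.0]) / np.linalg.norm([0.2, -0.1, 1.0])
pixel = 0.8 * lights @ n_true  # white object with albedo 0.8, no shadow
normal, albedo = photometric_stereo(pixel, lights)
```

The same function accepts a whole image of shape (height, width, 7), solving every pixel with one `lstsq` call.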

4 Multispectral Color Photometric Stereo Method

In this section, we describe our proposed method. The subsections follow the order of the processing steps.

4.1 Channel Crosstalk

The 7-channel image captured by the system described in Sect. 5.1 is contaminated by so-called channel crosstalk. We therefore first remove the channel crosstalk and use the processed image as the input image. We omit the details of this process; see [25].

4.2 Edge of Multiple Albedos

At the boundary between regions of different albedo, the observed brightness changes sharply. We detect these albedo boundaries with an edge detection technique. Since we only need to detect brightness differences, an edge detector that uses the brightness difference directly, without any additional post-processing, is preferable. The Sobel filter [11] is therefore a suitable choice for our purpose.

Since each channel is illuminated by a single light source, each channel image contains shadows. Edges cannot be detected in shadowed regions of a single-channel image; however, we have seven channels with different light source directions. Voting over the Sobel edges of all channels makes the edge detection robust to noise. From the edge image \(e_i(x,y)\) of each channel i, we robustly detect the edge e(x, y) as follows.

Note that the edge image is a binary image, obtained by thresholding the gradient magnitude computed by the Sobel filter. The threshold is calculated automatically using Otsu's method [11]. Because the appropriate threshold may differ between local areas of the image, the threshold is calculated separately for each local patch.
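The edge step can be sketched as follows. This is a NumPy-only illustration under our own assumptions: the Sobel gradient magnitude of each channel is thresholded with Otsu's method in square patches (the patch size is a hypothetical parameter), and, since the exact voting rule is not reproduced here, a pixel is taken as an albedo edge when at least `min_votes` channels (also a hypothetical parameter) mark it. Patches without any brightness variation are left edge-free, so a channel that is entirely in shadow simply drops out of the vote.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude with edge-replicated borders."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) \
        - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) \
        - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def otsu_threshold(values, bins=256):
    """Otsu's threshold: maximize between-class variance over histogram cuts."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                  # pixel count of the lower class
    w1 = w0[-1] - w0                      # pixel count of the upper class
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]  # upper edge of the best lower class


def local_otsu_edges(mag, patch=32):
    """Binary edge map with an Otsu threshold computed per square patch."""
    out = np.zeros(mag.shape, dtype=bool)
    for r in range(0, mag.shape[0], patch):
        for c in range(0, mag.shape[1], patch):
            block = mag[r:r + patch, c:c + patch]
            if block.max() - block.min() < 1e-12:  # flat patch: no edges
                continue
            out[r:r + patch, c:c + patch] = block >= otsu_threshold(block)
    return out


def vote_edges(channels, patch=32, min_votes=4):
    """Albedo edge map: pixels marked as edges in at least min_votes channels."""
    votes = sum(local_otsu_edges(sobel_magnitude(ch), patch).astype(int)
                for ch in channels)
    return votes >= min_votes


# Synthetic 7-channel example: an albedo step between columns 7 and 8,
# different brightness per channel, and one channel completely in shadow.
h, w = 16, 16
base = np.tile(np.where(np.arange(w) < 8, 0.2, 0.8), (h, 1))
channels = [base * s for s in (1.0, 0.9, 0.8, 0.7, 0.6, 0.5)]
channels.append(np.zeros((h, w)))
edges = vote_edges(channels, patch=16, min_votes=4)
```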

4.3 Intrinsic Image Decomposition

Intrinsic image decomposition [2, 9, 22, 23, 36, 37, 42, 46] is an approach that decomposes a single image into an illumination image and a reflectance image (Fig. 3). The illumination image and the reflectance image are called intrinsic images. The illumination image represents the effects of illumination, shading, reflection, shadow, and highlight. The reflectance image represents the albedo, namely, the reflectance of diffuse reflection.

Unlike conventional photometric stereo, color photometric stereo cannot be applied to an object with multiple albedos (reflectances). This is because each light source has a different color, so a change in albedo cannot be distinguished from a change in surface orientation (Sect. 3). Most existing techniques therefore apply color photometric stereo only to white objects. To overcome this problem, we calculate the illumination image from the captured image using intrinsic image decomposition. Since the illumination image is independent of the albedo, it is suitable for color photometric stereo.

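The basic formulation of intrinsic image decomposition is as follows (Fig. 4):

\[ \begin{cases} \nabla^2 I_\mathrm{out} = 0 & \text{at edge pixels}, \\ \nabla^2 I_\mathrm{out} = \nabla^2 I_\mathrm{in} & \text{at non-edge pixels}. \end{cases} \tag{5} \]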

Here, \(\nabla ^2 I_\mathrm {out} = 0\) is the Laplace equation, which represents the smoothness constraint, and \(\nabla ^2 I_\mathrm {out} = \nabla ^2 I_\mathrm {in}\) is the Poisson equation, which represents the identity constraint that reproduces the input image. Equation (5) smoothly connects regions across the boundaries between different albedos, so the output image is seamless at albedo edges.

\(\nabla ^2 I\) is discretized as follows:

\[ \nabla^2 I(x,y) \approx I(x+1,y) + I(x-1,y) + I(x,y+1) + I(x,y-1) - 4I(x,y). \tag{6} \]
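To illustrate the five-point stencil of Eq. (6), the small NumPy function below evaluates it on interior pixels only; border handling is left out of this sketch. A linear ramp satisfies the Laplace equation exactly, while \(x^2\) gives a constant 2, matching the continuous Laplacian.

```python
import numpy as np


def laplacian(img):
    """Five-point discrete Laplacian of Eq. (6), evaluated on interior pixels."""
    I = np.asarray(img, dtype=float)
    return (I[1:-1, 2:] + I[1:-1, :-2]    # I(x+1, y) + I(x-1, y)
            + I[2:, 1:-1] + I[:-2, 1:-1]  # I(x, y+1) + I(x, y-1)
            - 4.0 * I[1:-1, 1:-1])


y, x = np.mgrid[0:6, 0:6]  # y indexes rows, x indexes columns
ramp = 3.0 * x + 2.0 * y   # harmonic: its Laplacian is zero
bowl = x ** 2              # its Laplacian is 2 everywhere
lap_ramp = laplacian(ramp)
lap_bowl = laplacian(bowl)
```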

Equation (6) involves the pixel of interest and its four neighbors, and it assumes that all five pixels are either edge pixels or non-edge pixels. However, some pixels have both edge and non-edge pixels among their four neighbors. Suppose that the pixel of interest is p and one of its neighbors is q. The equation shown below is equivalent to Eq. (6) up to a constant factor and is obtained from the average of \(I_p-I_q\) over the four neighbors \(q_1\), \(q_2\), \(q_3\), and \(q_4\).

The formulation must be adjusted according to the relationship between neighboring pixels. For example, if the pixel (x, y) is a non-edge pixel and the pixels \((x+1,y)\) and \((x,y-1)\) are edge pixels, the formulation is as follows (Fig. 5).

Putting the above formulae together yields the following.

As in the above example, we formulate the equation for each pixel according to its relationship with the neighboring pixels.

Concatenating the equations for all pixels yields the following linear system,

where i(x, y) depends on the condition of each pixel.

Equation (9) has the form \(\mathbf {Ax}=\mathbf {b}\), so the closed-form solution \(\mathbf {x}=\mathbf {A}^{-1}\mathbf {b}\) can be obtained with a sparse matrix library. We used the Eigen library for sparse matrices and solved the system by LU decomposition.

Our formulation uses the natural (Neumann) boundary condition for the Poisson equation. Because the constraints are formulated on relative values between neighboring pixels, the solution is determined only up to a constant offset. We resolve this ambiguity by choosing the offset so that the output brightness is as close as possible to the input brightness.

To suppress the influence of outliers, we calculate the offset as the median of the difference between the input brightness \(I_\mathrm {in}\) and the output brightness \(\tilde{I}_\mathrm {out}\). This constant is then added to all pixels.
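The decomposition and the offset correction can be sketched together. The code below is a simplified gradient-domain variant written for illustration, not the exact per-pixel case analysis described above. Every pair of 4-neighbors (p, q) contributes one equation \(I_\mathrm{out}(q) - I_\mathrm{out}(p) = I_\mathrm{in}(q) - I_\mathrm{in}(p)\), whose right-hand side is set to zero when either pixel is an edge pixel. The normal equations of this least-squares system form a Poisson equation with natural (Neumann) boundary conditions, and the constant offset is then fixed with the median, as in the text. It uses a dense NumPy solve and therefore only suits tiny images; real images would need a sparse solver (the paper used the Eigen library with LU decomposition).

```python
import numpy as np


def intrinsic_illumination(img, edge):
    """Illumination image via a gradient-domain Poisson solve (illustrative).

    img:  2D array, one channel of the input image I_in.
    edge: 2D bool array, True at albedo-edge pixels.
    """
    I = np.asarray(img, dtype=float)
    h, w = I.shape
    idx = np.arange(h * w).reshape(h, w)
    # All horizontal and vertical 4-neighbour pairs (p, q).
    p = np.concatenate([idx[:, :-1].ravel(), idx[:-1, :].ravel()])
    q = np.concatenate([idx[:, 1:].ravel(), idx[1:, :].ravel()])
    flat, is_edge = I.ravel(), np.asarray(edge, dtype=bool).ravel()
    # Copy the input gradient (Poisson / identity) except next to edges,
    # where the target gradient is zero (Laplace / smoothness).
    target = flat[q] - flat[p]
    target[is_edge[p] | is_edge[q]] = 0.0
    A = np.zeros((p.size, h * w))
    rows = np.arange(p.size)
    A[rows, q] = 1.0
    A[rows, p] = -1.0
    # The solution is defined only up to a constant; lstsq returns the
    # minimum-norm member of that family.
    x, *_ = np.linalg.lstsq(A, target, rcond=None)
    out = x.reshape(h, w)
    # Resolve the offset robustly with the median difference to the input.
    return out + np.median(I - out)


# Albedo step (1.0 left, 3.0 right) with the boundary columns marked as edges.
step = np.tile(np.where(np.arange(8) < 4, 1.0, 3.0), (6, 1))
edge = np.zeros(step.shape, dtype=bool)
edge[:, 3:5] = True
flattened = intrinsic_illumination(step, edge)

# Without any edges the input is reproduced (identity constraint).
ramp = np.tile(np.linspace(0.0, 1.0, 8), (6, 1))
same = intrinsic_illumination(ramp, np.zeros(ramp.shape, dtype=bool))
```

In the first example the albedo jump disappears and every pixel takes the median input value, 2.0; a real illumination image would of course keep its shading gradients away from the edges.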

4.4 Photometric Linearization

The illumination image obtained in Sect. 4.3 contains no albedo variation; it contains only the reflection components. The accuracy of the surface normal estimated by conventional photometric stereo degrades drastically if the image contains shadows or specular reflection, and the illumination image obtained by intrinsic image decomposition still includes both. An approach called photometric linearization [16, 24, 26, 27, 34, 38, 39, 45] can remove specular reflection and shadows. We omit the details of the photometric linearization algorithm.

4.5 Surface Normal Estimation

Since the albedo has been canceled (Sect. 4.3), the severe problem of color photometric stereo, namely that it cannot be applied to objects with multiple albedos, is eliminated. The intrinsic image of the multicolored object looks like an image of a single-colored object, which is exactly the situation assumed by most existing color photometric stereo methods, which handle only white objects. As a result, conventional photometric stereo gives a closed-form solution for the surface normal (Eq. (3)).

4.6 Calculating Height from Surface Normal

The surface normal is determined by the partial derivatives of the height (shape). This relationship can be expressed as a Poisson equation, and solving it yields the height from the surface normal. We omit the details [15, 17, 31].
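As an illustration of this step, the sketch below integrates a normal map with a least-squares formulation whose normal equations are a Poisson equation. It is not the specific method of [15, 17, 31]. It assumes an orthographic camera, a height z(x, y) with x along image columns and y along image rows, and the gradient relations \(p = \partial z/\partial x = -n_x/n_z\) and \(q = \partial z/\partial y = -n_y/n_z\). The height is recovered only up to a constant, and the dense solve limits it to small images.

```python
import numpy as np


def integrate_normals(normals):
    """Least-squares height map from a unit normal map of shape (h, w, 3).

    Assumes orthographic projection, x along columns, y along rows, and
    p = dz/dx = -nx/nz, q = dz/dy = -ny/nz. Height is defined up to a constant.
    """
    n = np.asarray(normals, dtype=float)
    h, w, _ = n.shape
    nz = np.clip(n[..., 2], 1e-6, None)  # avoid dividing by ~0 at grazing normals
    p, q = -n[..., 0] / nz, -n[..., 1] / nz
    idx = np.arange(h * w).reshape(h, w)
    # Forward differences z(x+1, y) - z(x, y) = p and z(x, y+1) - z(x, y) = q,
    # using the gradient averaged over the two pixels of each pair.
    a = np.concatenate([idx[:, :-1].ravel(), idx[:-1, :].ravel()])
    b = np.concatenate([idx[:, 1:].ravel(), idx[1:, :].ravel()])
    t = np.concatenate([((p[:, :-1] + p[:, 1:]) / 2).ravel(),
                        ((q[:-1, :] + q[1:, :]) / 2).ravel()])
    A = np.zeros((t.size, h * w))
    rows = np.arange(t.size)
    A[rows, b] = 1.0
    A[rows, a] = -1.0
    z, *_ = np.linalg.lstsq(A, t, rcond=None)  # minimum-norm solution
    return z.reshape(h, w)


# Usage: a tilted plane z = 0.5 x + 0.2 y has normal proportional to (-0.5, -0.2, 1).
h, w = 5, 6
y, x = np.mgrid[0:h, 0:w]
z_true = 0.5 * x + 0.2 * y
normals = np.dstack([np.full((h, w), -0.5), np.full((h, w), -0.2), np.ones((h, w))])
normals /= np.linalg.norm(normals, axis=2, keepdims=True)
z = integrate_normals(normals)
```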

5 Experiment

5.1 Experimental Setup

The camera used for this experiment is an FD-1665 3CCD multi-spectral camera by FluxData, Inc., USA, as shown in Fig. 6. Figure 7 shows the spectral sensitivity of the camera. The light source directions were determined prior to the experiment by photographing a mirrored ball. The locations of the light sources and the camera were then left unchanged. The experiment was conducted in a darkroom. Figure 8 shows the experimental environment.
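Calibrating light directions with a mirrored ball is commonly done by reflecting the viewing direction about the sphere normal at the observed specular highlight. The sketch below shows that geometry under our own assumptions: orthographic viewing along \((0, 0, 1)\), a known ball center and radius in pixels, and image axes aligned with the scene axes. The function name and inputs are hypothetical, since the paper does not describe its calibration procedure in detail.

```python
import numpy as np


def light_from_mirror_ball(highlight_xy, center_xy, radius):
    """Unit light direction from the highlight position on a mirror ball.

    Assumes orthographic viewing along v = (0, 0, 1) and image axes aligned
    with the scene x/y axes (negate dy if image rows grow downward).
    """
    dx = (highlight_xy[0] - center_xy[0]) / radius
    dy = (highlight_xy[1] - center_xy[1]) / radius
    nz = np.sqrt(max(0.0, 1.0 - dx * dx - dy * dy))
    n = np.array([dx, dy, nz])          # sphere normal at the highlight
    v = np.array([0.0, 0.0, 1.0])       # direction toward the camera
    l = 2.0 * np.dot(n, v) * n - v      # mirror reflection of v about n
    return l / np.linalg.norm(l)


# A highlight at the ball center means the light is behind the camera;
# one at radius * sin(22.5 deg) from the center means a 45 deg light.
l_center = light_from_mirror_ball((100.0, 100.0), (100.0, 100.0), 50.0)
l_45 = light_from_mirror_ball((100.0 + 50.0 * np.sin(np.pi / 8), 100.0),
                              (100.0, 100.0), 50.0)
```

The normal sits halfway between the viewing and lighting directions, which is why a 45° light appears at the 22.5° point on the ball.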

5.2 Experimental Result

First, we applied our method to a synthetically generated sphere. The input is a single seven-channel image, which we show as seven monochrome images in Fig. 9(a). The sphere consists of several differently colored materials, and each light has a different color. Therefore, each material appears with a different brightness in each channel, which is the fundamental problem of color photometric stereo. Using the edge image (Fig. 9(b)), we calculate the intrinsic image, which has no albedo differences (Fig. 9(c)). As a result, we can easily obtain the surface normal (Fig. 9(d)) with the basic photometric stereo algorithm. The surface normal in Fig. 9(d) is shown in pseudo-color, where the x, y, and z components are mapped to red, green, and blue. Figure 9(e) shows the integrated height.
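Pseudo-color normal maps are commonly produced by mapping each component affinely from \([-1, 1]\) to \([0, 1]\). The paper does not state its exact mapping, so the one below is an assumed convention, with a helper for 8-bit output.

```python
import numpy as np


def normals_to_rgb(normals):
    """Map unit normals to RGB in [0, 1]: x -> red, y -> green, z -> blue."""
    n = np.asarray(normals, dtype=float)
    return np.clip((n + 1.0) / 2.0, 0.0, 1.0)


def to_uint8(rgb):
    """Convert [0, 1] RGB values to 8-bit values for saving as an image."""
    return np.round(np.asarray(rgb) * 255.0).astype(np.uint8)


facing_camera = normals_to_rgb([0.0, 0.0, 1.0])  # [0.5, 0.5, 1.0]
```

Under this convention, a surface facing the camera appears light blue, and the red and green channels encode the horizontal and vertical tilt.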

Figure 10 shows the error of this result (Fig. 9). Since the data are synthetic, the ground truth is known and the estimation error can be evaluated. The error is the angle between the ground-truth surface normal and the estimated surface normal. Figure 10(a) shows the error of conventional photometric stereo, which can only handle white objects, and Fig. 10(b) shows the error of the proposed method, which can be applied to multicolored objects. The average error of conventional color photometric stereo was 0.0638 rad, while that of our method was 0.0501 rad. This result indicates that our approach is effective for the color photometric stereo problem.
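The error measure above, the angle between estimated and ground-truth normals averaged over the image, can be computed as follows. The optional `mask` argument (to restrict the average to object pixels) is our own addition; the paper does not state which pixels were averaged.

```python
import numpy as np


def mean_angular_error(n_est, n_true, mask=None):
    """Mean angle in radians between estimated and ground-truth normals."""
    a = np.asarray(n_est, dtype=float)
    b = np.asarray(n_true, dtype=float)
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    cos = np.clip(np.sum(a * b, axis=-1), -1.0, 1.0)  # keep arccos in its domain
    err = np.arccos(cos)
    if mask is not None:
        err = err[np.asarray(mask, dtype=bool)]  # hypothetical object mask
    return float(err.mean())


same = mean_angular_error([[0.0, 0.0, 1.0]], [[0.0, 0.0, 1.0]])
right_angle = mean_angular_error([[1.0, 0.0, 0.0]], [[0.0, 0.0, 1.0]])
```

Clipping the cosine guards against values slightly above 1 caused by rounding, which would otherwise make `np.arccos` return NaN.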

Next, we applied our method to real objects. First, a spherical object (Fig. 11(a)) was measured. After edge detection (Fig. 11(b)), the intrinsic image was calculated (Fig. 11(c)). The estimated shape is shown in Fig. 11(e). The surface normal estimated by our method (Figs. 11(d) and 12(c)) is closer to the true surface normal (Fig. 12(a)) than the surface normal calculated by conventional photometric stereo (Fig. 12(b)) is. The result shows that conventional color photometric stereo suffers from the albedo differences, while our method successfully connects regions of different albedo thanks to intrinsic image decomposition.

The bird object shown in Fig. 13(a) has only diffuse reflection, while the doll object shown in Fig. 14(a) has strong specular reflection. The edge images, intrinsic images, surface normals, and shapes are shown in Figs. 13(b) and 14(b), Figs. 13(c) and 14(c), Figs. 13(d) and 14(d), and Figs. 13(e) and 14(e), respectively. The shape is successfully estimated for most parts, which empirically demonstrates the usefulness of our method. However, parts with strong specular reflection resulted in erroneous shapes. Photometric linearization (Sect. 4.4) usually requires tens to hundreds of images, while our method has only seven. Building hardware with more than seven colored lights and a multispectral camera with more than seven channels is future work.

6 Conclusion

In this study, the surface normals of multicolored objects were estimated by the multispectral color photometric stereo method using intrinsic image decomposition. Conventional color photometric stereo is an ill-posed problem for multicolored objects; intrinsic image decomposition solved this problem. We employed measurement hardware that illuminates the object with seven different spectra and captures the image with a seven-band multispectral camera.

The disadvantage of our method is that the algorithm is divided into several steps, so errors in an earlier step propagate to the succeeding steps. Future work will be to construct a unified framework that does not consist of multiple separate processes.

References

[1] Anderson, R., Stenger, B., Cipolla, R.: Color photometric stereo for multicolored surfaces. In: International Conference on Computer Vision, pp. 2182–2189 (2011)

[2] Bell, M., Freeman, W.T.: Learning local evidence for shading and reflectance. In: IEEE International Conference on Computer Vision (2001)

[3] Brostow, G.J., Stenger, B., Vogiatzis, G., Hernández, C., Cipolla, R.: Video normals from colored lights. IEEE Trans. Pattern Anal. Mach. Intell. 33(10), 2104–2114 (2011)

[4] Chakrabarti, A., Sunkavalli, K.: Single-image RGB photometric stereo with spatially-varying albedo. In: International Conference on 3D Vision, pp. 258–266 (2016)

[5] Drew, M.S.: Reduction of rank-reduced orientation-from-color problem with many unknown lights to two-image known-illuminant photometric stereo. In: Proceedings of International Symposium on Computer Vision, pp. 419–424 (1995)

[6] Drew, M.S.: Direct solution of orientation-from-color problem using a modification of Pentland’s light source direction estimator. Comput. Vis. Image Underst. 64(2), 286–299 (1996)

[7] Drew, M.S., Brill, M.H.: Color from shape from color: a simple formalism with known light sources. J. Opt. Soc. Am. A 17(8), 1371–1381 (2000)

[8] Drew, M., Kontsevich, L.: Closed-form attitude determination under spectrally varying illumination. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 985–990 (1994)

[9] Funt, B.V., Drew, M.S., Brockington, M.: Recovering shading from color images. In: Sandini, G. (ed.) ECCV 1992. LNCS, vol. 588, pp. 124–132. Springer, Heidelberg (1992). https://doi.org/10.1007/3-540-55426-2_15

[10] Fyffe, G., Yu, X., Debevec, P.: Single-shot photometric stereo by spectral multiplexing. In: IEEE International Conference on Computational Photography, pp. 1–6 (2011)

[11] Gonzalez, R.C., Woods, R.E.: Digital Image Processing, p. 716. Addison Wesley, Reading (1993)

[12] Gotardo, P.F.U., Simon, T., Sheikh, Y., Matthews, I.: Photogeometric scene flow for high-detail dynamic 3D reconstruction. In: IEEE International Conference on Computer Vision, pp. 846–854 (2015)

[13] Hernandez, C., Vogiatzis, G., Brostow, G.J., Stenger, B., Cipolla, R.: Non-rigid photometric stereo with colored lights. In: IEEE International Conference on Computer Vision, p. 8 (2007)

[14] Hernández, C., Vogiatzis, G., Cipolla, R.: Shadows in three-source photometric stereo. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5302, pp. 290–303. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88682-2_23

[15] Horn, B.K.P., Brooks, M.J.: The variational approach to shape from shading. Comput. Vis. Graph. Image Process. 33(2), 174–208 (1986)

[16] Ikehata, S., Wipf, D., Matsushita, Y., Aizawa, K.: Photometric stereo using sparse Bayesian regression for general diffuse surfaces. IEEE Trans. Pattern Anal. Mach. Intell. 36(9), 1816–1831 (2014)

[17] Ikeuchi, K., Horn, B.K.P.: Numerical shape from shading and occluding boundaries. Artif. Intell. 17(1–3), 141–184 (1981)

[18] Jiao, H., Luo, Y., Wang, N., Qi, L., Dong, J., Lei, H.: Underwater multi-spectral photometric stereo reconstruction from a single RGBD image. In: Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, pp. 1–4 (2016)

[19] Kim, H., Wilburn, B., Ben-Ezra, M.: Photometric stereo for dynamic surface orientations. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6311, pp. 59–72. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15549-9_5

[20] Kontsevich, L., Petrov, A., Vergelskaya, I.: Reconstruction of shape from shading in color images. J. Opt. Soc. Am. A 11, 1047–1052 (1994)

[21] Landstrom, A., Thurley, M.J., Jonsson, H.: Sub-millimeter crack detection in casted steel using color photometric stereo. In: International Conference on Digital Image Computing: Techniques and Applications, pp. 1–7 (2013)

[22] Levin, A., Weiss, Y.: User assisted separation of reflections from a single image using a sparsity prior. IEEE Trans. Pattern Anal. Mach. Intell. 29(9), 1647–1654 (2007)

[23] Matsushita, Y., Nishino, K., Ikeuchi, K., Sakauchi, M.: Illumination normalization with time-dependent intrinsic images for video surveillance. IEEE Trans. Pattern Anal. Mach. Intell. 26(10), 1336–1347 (2004)

[24] Miyazaki, D., Ikeuchi, K.: Photometric stereo under unknown light sources using robust SVD with missing data. In: Proceedings of IEEE International Conference on Image Processing, pp. 4057–4060 (2010)

[25] Miyazaki, D., Onishi, Y., Hiura, S.: Color photometric stereo using multi-band camera constrained by median filter and occluding boundary. J. Imaging 5(7), 29 (2019). Article no. 64

[26] Mori, T., Taketa, R., Hiura, S., Sato, K.: Photometric linearization by robust PCA for shadow and specular removal. In: Csurka, G., Kraus, M., Laramee, R.S., Richard, P., Braz, J. (eds.) VISIGRAPP 2012. CCIS, vol. 359, pp. 211–224. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-38241-3_14

[27] Mukaigawa, Y., Ishii, Y., Shakunaga, T.: Analysis of photometric factors based on photometric linearization. J. Opt. Soc. Am. A 24(10), 3326–3334 (2007)

[28] Nicodemus, F.E., Richmond, J.C., Hsia, J.J., Ginsberg, I.W., Limperis, T.: Geometrical considerations and nomenclature of reflectance. In: Wolff, L.B., Shafer, S.A., Healey, G. (eds.) Radiometry, pp. 94–145. Jones and Bartlett Publishers Inc. (1992)

[29] Petrov, A.P., Kontsevich, L.L.: Properties of color images of surfaces under multiple illuminants. J. Opt. Soc. Am. A 11(10), 2745–2749 (1994)

[30] Quéau, Y., Mecca, R., Durou, J.-D.: Unbiased photometric stereo for colored surfaces: a variational approach. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 4359–4368 (2016)

[31] Quéau, Y., Durou, J.-D., Aujol, J.-F.: Normal integration: a survey. J. Math. Imaging Vis. 60(4), 576–593 (2018). https://doi.org/10.1007/s10851-017-0773-x

[32] Rahman, S., Lam, A., Sato, I., Robles-Kelly, A.: Color photometric stereo using a rainbow light for non-lambertian multicolored surfaces. In: Cremers, D., Reid, I., Saito, H., Yang, M.-H. (eds.) ACCV 2014. LNCS, vol. 9003, pp. 335–350. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16865-4_22

[33] Roubtsova, N., Guillemaut, J.Y.: Colour Helmholtz Stereopsis for reconstruction of complex dynamic scenes. In: International Conference on 3D Vision, pp. 251–258 (2014)

[34] Shum, H.-Y., Ikeuchi, K., Reddy, R.: Principal component analysis with missing data and its application to polyhedral object modeling. IEEE Trans. Pattern Anal. Mach. Intell. 17(9), 854–867 (1995)

[35] Silver, W.M.: Determining shape and reflectance using multiple images. Master’s thesis, Massachusetts Institute of Technology (1980)

[36] Tan, R.T., Ikeuchi, K.: Separating reflection components of textured surfaces using a single image. IEEE Trans. Pattern Anal. Mach. Intell. 27(2), 178–193 (2005)

[37] Tappen, M.F., Freeman, W.T., Adelson, E.H.: Recovering intrinsic images from a single image. IEEE Trans. Pattern Anal. Mach. Intell. 27(9), 1459–1472 (2005)

[38] Tibshirani, R.: Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc. B 58, 267–288 (1996)

[39] Tomasi, C., Kanade, T.: Shape and motion from image streams under orthography: a factorization method. Int. J. Comput. Vis. 9(2), 137–154 (1992). https://doi.org/10.1007/BF00129684

[40] Vogiatzis, G., Hernández, C.: Practical 3D reconstruction based on photometric stereo. In: Cipolla, R., Battiato, S., Farinella, G.M. (eds.) Computer Vision: Detection, Recognition and Reconstruction. SCI, vol. 285, pp. 313–345. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-12848-6_12

[41] Vogiatzis, G., Hernandez, C.: Self-calibrated, multi-spectral photometric stereo for 3D face capture. Int. J. Comput. Vis. 97, 91–103 (2012). https://doi.org/10.1007/s11263-011-0482-7

[42] Weiss, Y.: Deriving intrinsic images from image sequences. In: IEEE International Conference on Computer Vision (2001)

[43] Woodham, R.J.: Photometric method for determining surface orientation from multiple images. Opt. Eng. 19(1), 139–144 (1980)

[44] Woodham, R.J.: Gradient and curvature from photometric stereo including local confidence estimation. J. Opt. Soc. Am. A 11, 3050–3068 (1994)

[45] Wu, L., Ganesh, A., Shi, B., Matsushita, Y., Wang, Y., Ma, Y.: Robust photometric stereo via low-rank matrix completion and recovery. In: Kimmel, R., Klette, R., Sugimoto, A. (eds.) ACCV 2010. LNCS, vol. 6494, pp. 703–717. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-19318-7_55

[46] Xie, X., Zheng, W., Lai, J., Yuen, P.C.: Face illumination normalization on large and small scale features. In: IEEE Conference on Computer Vision and Pattern Recognition (2008)

Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Hamaen, K., Miyazaki, D., Hiura, S. (2020). Multispectral Photometric Stereo Using Intrinsic Image Decomposition. In: Ohyama, W., Jung, S. (eds) Frontiers of Computer Vision. IW-FCV 2020. Communications in Computer and Information Science, vol 1212. Springer, Singapore. https://doi.org/10.1007/978-981-15-4818-5_22

DOI: https://doi.org/10.1007/978-981-15-4818-5_22

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-4817-8

Online ISBN: 978-981-15-4818-5
