Abstract

The computational requirements of Artificial Neural Networks (ANNs) differ so much from those of conventional architectures that the exploration of new computing paradigms, hardware architectures, and their optimization has gained momentum. ANNs use a large number of parallel operations, which makes their implementation on conventional computer hardware inefficient. This paper presents a new design methodology for multi-operand adders. These adders require multi-bit carries, which makes their design unique. A theoretical upper bound on the size of the sum and carry in a multi-operand addition, for any base and any number of operands, is presented. This result is used to design a modular 4-operand, 4-bit adder. The module computes the partial sums using a look-up table. Such modules can be connected in a hierarchical structure to implement larger adders. A method to build a 16-bit, 16-operand adder from this basic 4-bit, 4-operand block is presented. Verilog simulation results are presented for both the 4 × 4 and 16 × 16 adders. The design strategy used for the 16 × 16 adder may be extended to more bits or operands using the guidelines discussed in the paper.

Keywords

- Artificial Intelligence

- Deep Learning

- Hardware accelerators

- Hardware optimization

- Massive parallelism

- Multi-operand addition

- Neural computing

- Neural network processor

1 Introduction

Accelerating developments in Artificial Intelligence (AI) present many new design challenges. Processor architectures for Deep Learning (DL) are characterized by a large number of low-precision parallel operations using distributed memory. Artificial Neural Networks (ANNs) are designed to behave like biological neuronal bundles. Each neuron in the bundle performs a non-linear transformation on input data and communicates the results to hundreds to thousands of other neurons. The number of synapses on a typical neuron in the human brain is about thirty-five thousand, while that of mice, another mammal, is said to be around fifteen thousand [1]. Each neuron performs scaling, summation and a non-linear transformation on the input data supplied by the synapses to the cell. Therefore, architectures supporting such operations are expected to be very different from the Princeton or Harvard architectures generally used in contemporary processors. The design goals for neural processors will be influenced by the type and size of data being processed as well as the end application. Modern deep neural networks use several types of neural networks arranged in a hierarchy. For example, a Convolutional Neural Network (CNN) has layers of convolution neurons, non-linear activation layers, pooling layers and fully connected layers in several iterations. Traditional processors are designed to spend more time on computation than on transfer of data. However, in modern deep learning networks, most of the time is spent in data communication between layers. As neural hardware becomes more capable, the number of synapses is bound to increase, directly increasing the time required to communicate among various neurons over limited transfer lines. Accessing a central memory to read and write from compute nodes (representing neurons), distributing the output to nodes on the next layer, and so on can present several bottlenecks and hence design challenges.
The problem becomes more severe as several layers of neural hardware compete to get data from a common pool of memory. Needless to say, design of communication channels to meet such demand poses several design issues. Neural processors tend to use segmented memory or compute-in-memory architectures to overcome these bottlenecks.

Neurons in a new type of neural network called the Auto Resonance Network (ARN), referred to as nodes, register the input and respond to inputs in the neighborhood of the registered input. Hierarchical Auto Resonance Networks use resonators in multiple layers [2, 3]. Implementing ARN or other neural networks in hardware requires identification of the basic sets of operations in popular neural computations. For example, Theano is a Python library in which mathematical computations can be carried out on multi-dimensional arrays. Some of the instructions available in Theano support machine learning operations such as activation functions (softmax, ReLU, tanh and sigmoid), multi-class hinge loss and tensor operations such as mean, sum, min, max, reshape, flatten and many more. It is reported that Theano implementations of machine learning operations are 1.8 times faster than the implementations in NumPy [4], suggesting that efficiency concerns in machine learning are critical to the success of a neural network processor. Auto Resonance Networks suggested by our working group require resonators, multi-operand adders and path search networks in several layers as required by the end application [2].

Most of the companies working on the design of custom neural processors have specific architectures in mind. For example, Cambricon [5] supports simple vector and matrix operations at the hardware level, while the latest Google TPUs [6] are designed for the TensorFlow framework. TensorFlow is largely focused on Convolutional Neural Network and Long Short-Term Memory architectures. TrueNorth from IBM [7] is designed with a perceptron as its basic unit, suggesting its suitability for Multilayer Perceptron (MLP) implementations. Many new types of neural structures are being proposed, e.g., Generative Adversarial Networks [8] and Capsule Networks [9], and therefore identification and design of the modules required for neural hardware becomes necessary.

The number of parallel computations to be performed can be estimated by looking at how typical CNN systems are designed. For example, AlexNet [10] uses more than 650 thousand neurons. Each neuron in the first layer of convolutions uses 363 input values (11 × 11 × 3), followed by a layer of filter neurons with 1200 inputs (5 × 5 × 48). The speed advantage of Graphics Processing Units (GPUs) has been reported to be 20–60 times over a single CPU. A more modest MNIST digit classifier using two layers of ARN uses neurons with 49 inputs in the first layer to achieve an accuracy of 93% with as few as 50 × 10 training samples [3]. Currently, most AI cloud services run on GPUs and TPUs configured as accelerators. As a rough estimate, a typical MLP network requires 10–20M multiplications. Processing demands of contemporary CNNs are at least an order of magnitude higher [11, 12]. In general, the industry is moving towards compute-intensive SIMD and MIMD streaming architectures that reconfigure the hardware to suit the configuring instruction set or end application. This is clearly different from the issues addressed by the RISC and CISC computers in everyday use today, and implies that processor designs have to evolve beyond traditional design strategies and architectures such as RISC/CISC.

This paper is divided into 5 sections. Discussion on the impact of massive parallelism of neural networks on hardware implementation and the need for multi-operand addition is presented in Sect. 2. Section 3 presents theoretic considerations for implementation of multi-operand adder. Implementation of a modular 4 bit 4 operand adder, its use in building a 16 bit 16 operand adder is discussed in Sect. 4. Results and conclusions are presented at the end in Sect. 5.

2 Impact of Massive Parallelism on Hardware Design

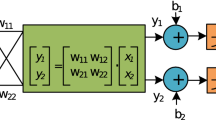

Each neuron in a neural network performs a weighted sum followed by a non-linear activation function. For a neuron with N inputs and one output, typically N multiplications and about N additions have to be performed to compute the output. In Deep Neural Networks (DNNs), the number of inputs is large. For example, in our implementation of an ARN-based MNIST image classifier, the input size N is 49 [3]. In AlexNet, the first convolution layer has 363 inputs [10]. Hence, computing the output involves many additions and multiplications. The total number of neurons in such networks is also very large. In AlexNet, the total number of neurons is in excess of 650,000. Implementing such networks on conventional hardware would yield a very slow network. Therefore, GPU implementations use pipelined sequential multiply-and-add operations to speed up such processing.

Linear memory organization can also severely impede the transfer of data between a large number of neurons and their compute cores. Working with a single bus or a few busses to transfer large data sets would make the bus a bottleneck in the computation. On the other hand, it is possible to implement a large number of high-speed serial data transfer lines. This will improve the data transfer rate compared to conventional bus-oriented architectures. In-core memory organization will allow local storage of values, reducing the overall need to move data between memory and compute cores. There could be several other memory and core organizations that become advantageous in massively parallel architectures. These need to be explored, studied and implemented.

Neural networks perform non-linear operations on the applied data. Vanishing or exploding values are common in such operations. For example, common activation functions such as the sigmoid \( 1/\left( 1 + \exp\left( -x \right) \right) \), hyperbolic tangent \( \tanh\left( x \right) \), and softmax \( (x \mid \forall y: f\left( y \right) \le f\left( x \right)) \) have a limited range of inputs over which the output changes significantly. Therefore, limited accuracy is often sufficient in neural networks, and many neural network implementations use low-precision rather than high-precision arithmetic. One such implementation is reported in [14].

Massive parallelism also has an impact on the silicon area required to implement the necessary hardware while maintaining throughput. As mentioned earlier, high-speed serial transfer is preferred over multiple parallel busses. In one of our earlier works, we demonstrated that using a large number of serial multipliers is more area-time efficient than using parallel multipliers [13]. This is because the area required to implement a serial multiplier is much smaller than that of a parallel multiplier. So, while a parallel multiplier performs a single computation, several serial multipliers can operate at the same time. The ratio flips in favor of serial multipliers after a particular limit. That limit is fairly small compared to the number of resources required for deep learning neural networks.

Further, in conventional processor architectures, math and logic operations are performed on two operands. As the number of operations increases, the operands have to be continuously moved between registers and cache, which reduces the overall speed. Therefore, it is necessary to explore multi-operand math operations. Some of the challenges in the design of multi-operand adders for Convolutional Neural Networks have been discussed in [15]. Multi-operand operations were simply an overhead in traditional computations, except in some scientific computing such as multi-parameter simulations. The other requirement was in graphics, which was already addressed by powerful MIMD GPUs. Therefore, most designers delegated such operations to software, following the RISC guidelines. However, the computational scenario has changed so dramatically in the last couple of years that immediate attention needs to be given to the design of such operations.

Some of the issues related to design of multi-operand systems are (a) selection of number of operands, (b) modularity in design, (c) computation of carry, (d) area optimization, etc. Apart from these, complexity of computation in implementing multi-operand operation is also a factor. Systolic arrays for performing multiple operations over several steps have been described in literature. However, not enough attention has been given to multi-operand operations even in modern processor designs like that of Google TPU, possibly because of a technological bias in favor of two operand operations. In this paper, a modular multi-operand adder that can add 16 numbers as one integrated operation is discussed. The design is modular and uses 4-bit 4-operand addition as a basic operational unit. Hardware design and simulation results are also presented.

3 Multi-operand Addition

The basic design of a multi-operand adder follows a procedure similar to paper-pencil approach. Add the column and carry the overflow to left-side columns. In case of two operands, the overflow is limited to a single column (bit or digit). However, in case of multi-operand adder, carry can extend to more than one column on the left side. Designing a multi-operand adder therefore needs an estimate of how many columns will be affected by addition of numbers in a column. To illustrate the effect, consider 3-operand base-10 addition. The maximum number a column can generate is 3 × 9 = 27, with 2 as carry. However, if there are 15 operands, the maximum value is 15 × 9 = 135, with 13 as carry. Now, this means, the carry extends to two columns. The question now is how many operands will make the carry extend to 3 columns. This by induction can be continued to any number of columns and operands (digits and operands). In essence, it is necessary to know the upper bound on the size of sum and carry columns in a multi-operand addition. Such an upper bound on the size of carry is given by the following theorem.

Theorem:

An upper bound on value of the carry is numerically equal to the number of operands minus one, irrespective of the number of digits or the number system used. i.e., if there are N operands, the upper bound on the value of carry is \( N - 1 \).

Proof:

For \( N = 2 \), the result is obvious. We have been using a 1 bit carry in all two-operand adders. For any \( N \), the maximum sum is sum of the maximum value of operands, i.e., for \( N = 3 \), maximum sum is 9 + 9 + 9 = 27, with 2 as carry, and 7 as sum. Notice that the value of carry is independent of the number of digits. For 3 digits, the sum is 2997. This is because the LSB sum is less by the same amount as the value required to make higher digits equal to 9, i.e., \( \left( {N - 1} \right) \). Therefore the sum of most significant digit will yield a sum equal to \( N \) times 9 plus the \( \left( {N - 1} \right) \) from carry.

The logic holds for any number of digits as well as any number base used to calculate the sum. Figure 1 shows the results for N = 3, 5, 8, 9 and 16, with the upper bound on the carry given by N − 1, i.e., 2, 4, 7, 8 and 15. In Fig. 1, the carry for N = 3 is \( 10_2 \), \( 2_{10} \), \( 2_{16} \). Similarly, for N = 5 the bound is 4, i.e., \( 100_2 \), \( 4_{10} \), \( 4_{16} \), and for N = 16 the upper bound is 15, i.e., \( 1111_2 \), \( 15_{10} \) and \( 15_{16} \) (the single hexadecimal digit F). Notice that the carry for N = 16 in binary is \( 1110_2 \); as this is a reduction in the size of the carry, the upper bound still holds. This occurs every time the sum columns add to full zero, because the next addition will not produce a carry. This depends on the number of digits and N.
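The bound can also be checked numerically. The sketch below is not part of the original design; it assumes the worst case in which every operand has all of its digits equal to the largest digit of the base, and the helper name `max_carry` is hypothetical.

```python
def max_carry(n_operands: int, base: int, n_digits: int) -> int:
    """Carry out of the top column when n_operands maximal numbers are added.

    Assumption: each operand is the largest n_digits-digit number in `base`.
    """
    largest = base ** n_digits - 1       # e.g. 999 for base 10 and 3 digits
    total = n_operands * largest         # worst-case sum of all operands
    return total // base ** n_digits     # part of the sum above the sum columns


if __name__ == "__main__":
    for n in (3, 5, 8, 9, 16):
        for base in (2, 10, 16):
            carries = [max_carry(n, base, d) for d in range(1, 9)]
            assert max(carries) <= n - 1  # the bound stated by the theorem
            print(f"N={n:2d} base={base:2d} carry for 1..8 digits: {carries}")
```

For example, `max_carry(3, 10, 3)` evaluates 3 × 999 = 2997 and returns 2, while `max_carry(16, 2, 3)` returns 14, i.e., 1110 in binary, one less than the bound of 15.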

In general, for a base of k and N rows, the maximum value of the column sum without carry is \( N\left( {k - 1} \right) mod\, k \), or \( \left( {k - N} \right) mod\, k \). The generated carry is \( \left\lfloor N\left( {k - 1} \right)/k \right\rfloor = N - \left\lceil N/k \right\rceil \).

This value is equal to \( \left( {N - 1} \right) \) when \( N \le k \) and strictly less than \( \left( {N - 1} \right) \) when \( N > k \). Therefore, the upper bound is \( \left( {N - 1} \right) \).

In case we include the column sum with carry (full adder), maximum value in the column is \( \left( {k - 1} \right) \). Therefore, we can consider the column sum as corresponding to \( N + 1 \) rows, giving an upper bound on the carry as less than \( N \) or as \( N - 1 \), when \( N > k \). This proof is sufficient for our work now. A more rigorous proof using modular arithmetic will be given elsewhere. Knowing this upper bound on carry is useful in making sufficient resources available for implementation of multi-operand adder.
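The step from one column to the next can be written out explicitly. The following short derivation is a sketch, not the rigorous proof mentioned above; it assumes the carry entering a column is itself at most \( N - 1 \) (which holds for the least significant column, where it is zero), and the symbols \( S \), \( c_{\text{in}} \) and \( c_{\text{out}} \) are introduced here only for illustration.

```latex
\begin{aligned}
S &\le N(k-1) + c_{\text{in}} \le N(k-1) + (N-1) = Nk - 1, \\
c_{\text{out}} &= \left\lfloor \frac{S}{k} \right\rfloor
  \le \left\lfloor \frac{Nk - 1}{k} \right\rfloor = N - 1 .
\end{aligned}
```

Under that assumption the bound reproduces itself from column to column, so the carry never exceeds \( N - 1 \) regardless of the number of digits.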

As an example, consider a simple 4-bit 4-operand (4 × 4) adder. For four operands, the above theorem gives a maximum carry value of 3, which can be represented by two bits in the binary system, i.e., two columns to the left. Therefore, for a 4 × 4 adder, the number of output bits is 2 + 4 = 6. If we consider a single-bit addition, there will be 3 output bits. The algorithm uses this observation to construct the 4 × 4 adder.

If we consider a single column of 4 operands, the maximum number of 1’s in the partial sum is 4 (\( 100_2 \)). From the above theorem, a 2-bit carry is sufficient. So, the carry will be of the form \( xx_2 \), which we can write as \( 0xx_2 \). Actual values of the carry bits will depend on the data bits. There are two observations to be made here. The first observation is that three bits are sufficient to represent a 4-operand column sum. The second observation comes from the fact that an upper bound on the sum of these two numbers is \( 100_2 + 011_2 = 111_2 \). This is an important result, which shows that 3 bits are always sufficient to perform any 4-operand single-column addition.

As there are only 4 operands, i.e., 4 bits per column, a 4-input 3-output look-up table (LUT) can be constructed to implement column addition quickly. Table 1 gives the details of this LUT. Essentially, the algorithm performs addition of the bits in one column of multiple operands at a time and repeats the process for all the columns. The algorithm is simple, modular and straightforward. A 4 × 3 look-up table is used as the basic block of the multi-operand adder. This LUT is used to count the number of ones in the input. A 3-bit 2-operand adder is used to compute the carry to the next column.
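A software stand-in for such a table can be sketched as follows. This is a minimal illustration that assumes the LUT simply stores the number of ones for each of the 16 input patterns, as the text describes; the names `COLUMN_LUT` and `column_count` and the bit ordering of the index are hypothetical.

```python
# Hypothetical 4-input, 3-output look-up table.
# Index: the four column bits packed into an integer 0..15.
# Value: the number of ones among those bits (0..4, which fits in 3 bits).
COLUMN_LUT = [bin(index).count("1") for index in range(16)]


def column_count(b3: int, b2: int, b1: int, b0: int) -> int:
    """Return the 3-bit count of ones among the four bits of one column."""
    index = (b3 << 3) | (b2 << 2) | (b1 << 1) | b0
    return COLUMN_LUT[index]
```

The largest table entry is 4, so adding a pending carry of at most 3 gives at most 7, which still fits in the 3-bit adder.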

4 Implementation of Multi-operand Adder

4.1 4 × 4 Adder Operation

The implementation details of the 4 × 4 adder are shown in Fig. 2. The algorithm of the 4 × 4 adder is illustrated in Fig. 2(a). From the earlier discussion it is clear that the sum of the column data is computed separately and added to the pending carry. This generates a 1-bit column sum and a 2-bit carry. The carry is used to calculate the next column sum. The process is repeated for four columns.

Figure 2(b) shows the structure of one-column adder for 4 operands. The two carry bits and the sum bit are shown as output. On initialization, the carry buffer is cleared and data from first column is applied to the LUT. The three bit output as given in Table 1 is available at one of the inputs to the 3-bit adder. The other input comes from the carry buffer. The MSB of this operand to 3-bit adder is always zero. Two MSB bits of the 3-bit adder are copied to carry buffer and LSB is shifted to sum register. The iterations can be implemented in software or in hardware. A hardware implementation of a 4 × 4 adder is shown in Fig. 3. Organization of the column adder and percolation of carry bits is illustrated in Fig. 3(a). The control of data flow in the 4 × 4 adder is shown in Fig. 3(b).

A Verilog simulation model was developed to test the correctness of the concept. The simulation results are shown in Fig. 4. The timing diagram shows the loading of four numbers and the output. The numbers are 4'hA + 4'hF + 4'h3 + 4'h9 = 6'h25, corresponding to 10 + 15 + 3 + 9 = 37, which is seen in the simulation output. The computation takes 5 clocks. The critical delay is due to the propagation of carry between columns.
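The column-by-column procedure can be mimicked in software to cross-check the arithmetic of this example. The sketch below is a behavioral model in Python, not the Verilog model used for the simulation; the function name `add_4x4` is hypothetical, and the LUT is replaced by a direct count of ones.

```python
def add_4x4(operands, width=4):
    """Add four `width`-bit unsigned integers column by column, LSB first."""
    assert len(operands) == 4
    carry = 0    # 2-bit carry buffer, cleared on initialization
    result = 0   # sum register
    for col in range(width):
        # Number of ones in this column (what the LUT would output, 0..4).
        count = sum((x >> col) & 1 for x in operands)
        total = count + carry           # 3-bit adder, at most 4 + 3 = 7
        result |= (total & 1) << col    # LSB goes to the sum register
        carry = total >> 1              # two MSBs go back to the carry buffer
    return result | (carry << width)    # final carry forms the top two bits


if __name__ == "__main__":
    print(hex(add_4x4([0xA, 0xF, 0x3, 0x9])))  # prints 0x25
```

The model takes one loop iteration per column; it says nothing about clock counts or critical delay, which depend on the hardware implementation.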

4.2 Optimized Logic Implementation of LUT

Generally, a LUT is implemented as a 4-input, 3-output memory. In such a design, the address decoder and the 16 × 3 bit static RAM occupy a lot of space. A direct, optimized logic implementation can easily replace such a LUT. This implementation is much faster and occupies less space. One such circuit is given in Fig. 5.
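One possible gate-level formulation of the ones counter is sketched below. It is not necessarily the circuit of Fig. 5; it assumes a construction from two half adders followed by a small combining stage, and the function and signal names are hypothetical.

```python
def count_ones_logic(a: int, b: int, c: int, d: int):
    """Count the ones in four bits using only XOR and AND; returns (s2, s1, s0)."""
    h1, c1 = a ^ b, a & b    # half adder on the first pair
    h2, c2 = c ^ d, c & d    # half adder on the second pair
    s0 = h1 ^ h2             # LSB of the count
    c3 = h1 & h2             # carry out of the LSB position
    s1 = c1 ^ c2 ^ c3        # middle bit: set for counts of 2 and 3
    s2 = c1 & c2             # MSB: set only when all four inputs are 1
    return s2, s1, s0
```

The outputs can be compared against a plain count for all 16 input patterns, which is a quick way to confirm that a logic implementation is equivalent to the table it replaces.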

4.3 Implementation of 16 Bit 16 Operand Adder

Jumping back to the neural network implementation, the number of operands for addition depends on the type of the network. However, implementing 16 operand adder may be considered to speed up the addition process at the output of the neuronal cell. In this section, we will discuss the implementation of a 16 bit 16 operand adder, suitable for the number format described in [14].

4.4 16-Bit 16-Operand Adder

The idea of the 4-operand, 4-bit adder explained in the earlier section can easily be extended to any number of bits and any number of operands. We will explain the implementation of the 16 × 16 adder. The 4 × 4 module is extended to 4 × 16 (4-operand, 16-bit) by increasing the number of iterations to 16 (see Fig. 2(a)). The 16 × 16 input is divided into four groups of 4 × 16 each. Each of the 4 × 16 modules produces a 16-bit sum and a 2-bit carry. The sums and carries of all four modules are again grouped into two 4 × 16 modules as shown in Fig. 6. The thicker lines indicate the carry, whereas the thinner lines indicate the sum. The simulation result of the 16 × 16 adder is shown in Fig. 7. It may be observed from the simulation result that it takes about 37 clock cycles to complete the 16 × 16 addition.
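The grouping described above can be modeled in software as follows. This is a behavioral sketch under stated assumptions: the second level is taken to be one 4 × 16 module for the four sums and one for the four 2-bit carries, and the final merge of their outputs is an assumption, since the exact wiring of Fig. 6 is not reproduced here. The names `add4` and `add_16x16` are hypothetical.

```python
def add4(operands, width=16):
    """Column-serial 4-operand adder; returns (width-bit sum, 2-bit carry)."""
    carry, total_sum = 0, 0
    for col in range(width):
        total = sum((x >> col) & 1 for x in operands) + carry
        total_sum |= (total & 1) << col
        carry = total >> 1
    return total_sum, carry


def add_16x16(operands):
    """Add sixteen 16-bit unsigned integers using two levels of add4."""
    assert len(operands) == 16
    # Level 1: four groups of four operands each.
    level1 = [add4(operands[i:i + 4]) for i in range(0, 16, 4)]
    sums = [s for s, _ in level1]
    carries = [c for _, c in level1]
    # Level 2: one module adds the four sums, another the four 2-bit carries.
    low, low_carry = add4(sums)
    high, _ = add4(carries)   # at most 4 * 3 = 12, so no carry out
    # Assumed final step: place the carry-weighted part above bit 16.
    return low | ((high + low_carry) << 16)
```

The largest possible result is 16 × 65535 = 1048560, which needs 20 bits: 16 sum bits plus 4 bits for a carry of at most 15, in agreement with the \( N - 1 \) bound.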

5 Results and Conclusion

This paper presents the design and implementation of multi-operand adders for use in neural processors and accelerators. A theorem to calculate an upper bound on the size of the carry, together with its proof, is presented in Sect. 3. The results can be applied to multi-operand addition in any base system for any number of rows and columns of data. The results of this theorem have been used to implement a 4 × 4 adder. This basic design can be arranged in a hierarchy to implement larger adders. As an illustration, the module has been used to implement a 16 × 16 adder. The basic column adder has been implemented as a LUT. An optimized version of this LUT, implemented as a combinational counting circuit, is also presented. Column additions are implemented in a loop. The FSM required to implement the loop is also presented in the paper. All these designs have been implemented in Verilog and the results have been verified.

The algorithm presented here works in two separate stages: The first stage consists of column sum of applied data. The second stage consists of adding the carry bits generated in the first stage to get the final result. It may be noted that the first stage can be implemented in parallel and the second stage can be pipelined to improve the overall performance of the adder. Other optimization possibilities also exist.

Further work on optimization of the multi-operand adder is in progress.

References

1. Defelipe, J., Alonso-Nanclares, L., Arellano, J.: Microstructure of the neocortex: comparative aspects. J. Neurocytol. 31, 299–316 (2002). https://doi.org/10.1023/A:1024130211265

2. Aparanji, V.M., Wali, U.V., Aparna, R.: Pathnet: a neuronal model for robotic motion planning. In: Nagabhushan, T.N., Aradhya, V.N.M., Jagadeesh, P., Shukla, S., Chayadevi, M.L. (eds.) CCIP 2017. CCIS, vol. 801, pp. 386–394. Springer, Singapore (2018). https://doi.org/10.1007/978-981-10-9059-2_34

3. Mayannavar, S., Wali, U.: A noise tolerant auto resonance network for image recognition. In: Gani, A.B., et al. (eds.) ICICCT 2019. CCIS, vol. 1025, pp. XX–YY. Springer, Cham (2019)

4. Bergstra, J., et al.: Theano: a CPU and GPU math compiler in Python. In: Proceedings of the 9th Python in Science Conference (SCIPY) (2010)

5. Liu, S., et al.: Cambricon: an instruction set architecture for neural networks. In: ACM/IEEE 43rd Annual International Symposium on Computer Architecture (2016)

6. Jouppi, N.P., et al.: In-datacenter performance analysis of a tensor processing unit. In: 44th International Symposium on Computer Architecture (ISCA), Toronto, Canada, June 2017

7. Akopyan, F., et al.: TrueNorth: design and tool flow of a 65mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput. Aided Des. Integr. Circ. Syst. (2015). https://doi.org/10.1109/TCAD.2015.2474396

8. Goodfellow, I., Pouget-Abadie, J., Mirza, M., et al.: Generative Adversarial Nets, Archives, Cornell University Library (2014). https://arxiv.org/pdf/1406.2661.pdf

9. Hinton, G.E., et al.: Dynamic routing between capsules. In: 31st Conference on Neural Information Processing Systems, NIPS 2017, Long Beach, CA, USA (2017)

10. Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Pereira, F., et al. (eds.) Advances in Neural Information Processing Systems, NIPS 25, pp. 1097–1105. Curran Associates, Inc. (2012)

11. Hijazi, S., Kumar, R., Rowen, C.: Using Convolutional Neural Networks for Image Recognition. IP Group, Cadence, San Jose (2015)

12. Farabet, C., Poulet, C., Han, J.Y., LeCun, Y.: CNP: an FPGA based processor for convolutional networks. IEEE (2009)

13. Mayannavar, S., Wali, U.: Performance comparison of serial and parallel multipliers in massively parallel environment. IEEE Xplore Digital Library, December 2018, in press

14. Mayannavar, S., Wali, U.: Hardware implementation of activation function for neural network processor. IEEE Xplore Digital Library, January 2018, in press

15. Abdelouahab, K., Pelcat, M., Berry, F.: The challenge of multi-operand adders in CNNs on FPGAs; how not to solve it. In: SAMOS XVIII, Pythagorion, Samos Island, Greece, 15–19 July 2018. Association for Computing Machinery (2018)

Acknowledgements

The authors would like to thank C-Quad Research, Belagavi for all the support.

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Mayannavar, S., Wali, U. (2019). Design of Hardware Accelerator for Artificial Neural Networks Using Multi-operand Adder. In: Gani, A., Das, P., Kharb, L., Chahal, D. (eds) Information, Communication and Computing Technology. ICICCT 2019. Communications in Computer and Information Science, vol 1025. Springer, Singapore. https://doi.org/10.1007/978-981-15-1384-8_14

DOI: https://doi.org/10.1007/978-981-15-1384-8_14

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-1383-1

Online ISBN: 978-981-15-1384-8
