Abstract

In this study, servers are equipped with terminal baffles to optimize the thermal environment within a data center and to conserve energy. The data center model is built with the Airpak 3.0 package on the basis of an actual operating data center. In the original model, the rack with the highest heat load (16.98 kW) is selected as the research object, and its exhaust airflow distribution is obtained. To optimize this exhaust airflow distribution, models with 8-cm baffles at six different baffle angles (0°, 15°, 30°, 45°, 60°, and 75°) are established on the basis of the original model. The results show that, although 0° server baffles deteriorate the thermal environment, airflow distribution and cooling efficiency improve to varying degrees as the inclination angle of the 8-cm baffles increases from 15° to 75°. The optimum thermal environment is achieved at a baffle angle of 45°. Under this condition, the maximum drop in rack hotspot temperature reaches 2.5 °C.


1 Introduction

The increasing energy consumption of data centers (DCs), with its associated costs and environmental impacts, emphasizes the importance of alternative energy conservation strategies, and airflow management is regarded as one of the effective approaches to improving the thermal environment and cooling efficiency in DCs [1]. Lu et al. [2] define airflow management as the approach of keeping the operating parameters of IT equipment within the recommended ranges with minimum energy consumption. Many researchers have studied airflow management as a means of optimizing the thermal environment and improving cooling efficiency in DCs.

Schmidt and Iyengar [3] numerically analyzed the suitability of raised-floor and overhead air supply designs for very high-density data centers. Their results showed that the under-floor design was appropriate for cases with higher chilled-air supply, producing cooler rack intake air, while the overhead design was suitable for cases with lower chilled-air supply, yielding cooler rack intake temperatures. Yuan et al. [4] investigated and improved the airflow performance of the air distribution system in a DC by applying under-floor flexible baffles (UFBs); the UFBs efficiently reduced rack hotspots and made the exhaust air temperature distribution more uniform. Zhang et al. [5] investigated the optimization of airflow organization in a small-scale data room with cold aisle closure and found that cold aisle closure not only eliminates bypass air but also optimizes the thermal environment. Ni et al. [6] numerically analyzed the thermal environment and airflow organization in a small DC and identified the optimum airflow distribution conditions, under which the thermal environment and cooling efficiency improved and energy consumption decreased. Yuan et al. [1] experimentally and numerically investigated how flexible baffles attached to the front doors of racks affect the thermal environment and concluded that such baffles could effectively improve the thermal environment and weaken rack hotspots.

All of these studies used airflow management to optimize the thermal environment and save energy in DCs. The novelty of this paper lies in optimizing airflow by installing baffles at the lower-side terminal of the servers. The aim of this research is to compare the effects of different baffle angles on airflow distribution and energy saving.

2 Methods

2.1 Data Center Description

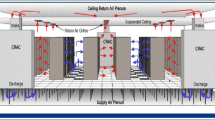

The studied DC is located in Nanjing, China, and operates 24 hours a day, 365 days a year, providing network services for a campus. Figure 1 shows a 3-D view of the studied DC. The DC measures 11 m (L) × 8 m (W) × 4 m (H), with an under-floor plenum height of 0.45 m. The computer room air conditioners (CRACs) use under-floor air supply with direct air return, and the aisles are configured with cold aisle containment (CAC) and an open hot aisle. The CAC measures 5.4 m (L) × 1.8 m (W) × 2.2 m (H). The 18 racks are arranged evenly in two rows, placed face to face on either side of the CAC. All racks have the same dimensions of 0.6 m (L) × 1.2 m (W) × 2.2 m (H). The CRAC supply air temperature is 22 °C, and the supply air speed is 5.33 m/s.
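The geometry quoted above can be cross-checked with simple arithmetic. The sketch below assumes the racks stand side by side along their 0.6 m (L) dimension with no gaps (an assumption, not stated in the text); under that assumption, 18 racks in two rows give 9 racks per row and a 5.4 m row, which equals the CAC length. The envelope and plenum volumes are gross values only: the text does not say whether the 4 m height includes the plenum, so the two are not combined.

```python
"""Consistency check of the DC geometry described in Sect. 2.1 (metres)."""

ROOM_L, ROOM_W, ROOM_H = 11.0, 8.0, 4.0  # data center envelope
PLENUM_H = 0.45                          # under-floor plenum height
CAC_L = 5.4                              # cold aisle containment length
RACK_L = 0.6                             # rack length along the row
N_RACKS, N_ROWS = 18, 2


def racks_per_row(n_racks: int, n_rows: int) -> int:
    """Racks per row when they are split evenly between rows."""
    if n_racks % n_rows:
        raise ValueError("racks do not split evenly between rows")
    return n_racks // n_rows


def row_length(n_per_row: int, rack_len: float) -> float:
    # Assumption: racks are placed side by side with no gaps between them.
    return n_per_row * rack_len


per_row = racks_per_row(N_RACKS, N_ROWS)
length = row_length(per_row, RACK_L)
room_volume = ROOM_L * ROOM_W * ROOM_H      # gross envelope volume, m^3
plenum_volume = ROOM_L * ROOM_W * PLENUM_H  # under-floor plenum volume, m^3

print(per_row, round(length, 2), round(room_volume, 1), round(plenum_volume, 2))
```

The matching row and CAC lengths support reading "evenly placed" as nine racks per row.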

2.2 Numerical Description

In this study, the Airpak 3.0 package is used to build the DC model on the basis of an actual operating DC on a campus in Nanjing, China. All geometric sizes and facility parameters are set to match the actual DC. The specifications and parameters are summarized in Table 1. As shown in Table 2, there are seven cases in total. The baffle angle is defined as the angle between the baffle and the horizontal plane; in case 2, the baffles are parallel to the horizontal plane, so the baffle angle is 0°. For each case, the simulation converged in about 2 h on an Intel Core i7 processor.
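Because Table 2 is not reproduced here, the case list can be sketched from the text alone: case 1 is the original model, case 2 uses 0° baffles, case 5 uses 45°, and case 7 uses 75°. Assigning 15°, 30°, and 60° to cases 3, 4, and 6 follows from the ordering and is an assumption. The helper `baffle_projection` (a hypothetical name) simply resolves an 8-cm flat baffle into its horizontal and vertical extents, which illustrates what the angle definition means geometrically.

```python
import math

BAFFLE_LENGTH_CM = 8.0  # baffle length used in cases 2-7

# Case list inferred from the text: case 1 is the original model without
# baffles; cases 2-7 use 8-cm baffles at 0, 15, 30, 45, 60 and 75 degrees.
# Cases 3, 4 and 6 are assigned by ordering, which is an assumption.
CASES = {1: None}
CASES.update({n: 15 * (n - 2) for n in range(2, 8)})


def baffle_projection(angle_deg, length_cm=BAFFLE_LENGTH_CM):
    """Return the (horizontal, vertical) extent in cm of a flat baffle
    inclined angle_deg above the horizontal plane."""
    theta = math.radians(angle_deg)
    return length_cm * math.cos(theta), length_cm * math.sin(theta)


for case, angle in CASES.items():
    if angle is None:
        print(f"case {case}: original model, no baffles")
    else:
        horizontal, vertical = baffle_projection(angle)
        print(f"case {case}: {angle:>2} deg, horizontal {horizontal:.2f} cm, "
              f"vertical {vertical:.2f} cm")
```

At 0° the whole 8 cm lies in the horizontal plane; as the angle grows, more of the baffle projects vertically.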

Given the scale of the DC, racks, servers, and other conditions, the standard k–ε model is applied in this paper. This model has been widely adopted and has produced good simulation results [7,8,9]. Four assumptions are made to simplify the problem: (i) air leakage is ignored, (ii) radiation effects are neglected, (iii) air is treated as an incompressible fluid, and (iv) the flow is steady and turbulent. The convergence criteria are 10⁻³ for flow and continuity and 10⁻⁶ for energy. An unstructured hexahedral mesh was used, and the meshes around the servers and vents were generated with per-object meshing parameters. Enhanced wall treatment is used to resolve the near-wall region.
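The convergence criteria above amount to a per-equation threshold test. The sketch below is illustrative only: mapping "flow" to the three velocity residuals is an assumption, the residual names are not Airpak's labels, and the residual snapshot is made up.

```python
from __future__ import annotations

# Thresholds from the text: 1e-3 for flow and continuity, 1e-6 for energy.
# Mapping "flow" to the three velocity residuals is an assumption, and the
# residual names are illustrative rather than Airpak's own labels.
CRITERIA = {
    "continuity": 1e-3,
    "x-velocity": 1e-3,
    "y-velocity": 1e-3,
    "z-velocity": 1e-3,
    "energy": 1e-6,
}


def failing_equations(residuals: dict[str, float],
                      criteria: dict[str, float] = CRITERIA) -> list[str]:
    """Names of equations whose scaled residual is still above its limit."""
    missing = criteria.keys() - residuals.keys()
    if missing:
        raise KeyError(f"no residual reported for {sorted(missing)}")
    return sorted(name for name, limit in criteria.items()
                  if residuals[name] > limit)


def is_converged(residuals: dict[str, float],
                 criteria: dict[str, float] = CRITERIA) -> bool:
    """True when every monitored residual meets its criterion."""
    return not failing_equations(residuals, criteria)


# Made-up residual snapshot: flow has converged, energy has not yet.
snapshot = {"continuity": 8e-4, "x-velocity": 2e-4, "y-velocity": 3e-4,
            "z-velocity": 1e-4, "energy": 4e-6}
print(is_converged(snapshot), failing_equations(snapshot))
```

The much tighter energy limit means the temperature field is typically the last to satisfy the test, as in this hypothetical snapshot.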

A second-order upwind scheme was used to discretize the convective terms, and the SIMPLE (semi-implicit method for pressure-linked equations) algorithm was used for pressure–velocity coupling. The data center was treated as a confined space with solid-wall boundaries. The CRACs supply cooling air through the perforated tiles, so the perforated tiles were modeled as velocity inlets. The raised floor, walls, and ceiling were treated as adiabatic boundaries, and the servers were modeled as five partitions and four uniform heat sources. The back doors of the racks were treated as velocity outlets. The mesh count exceeded 1,800,000 cells in all seven cases. Airpak checks mesh quality automatically; more than 95% of the cells had a quality of 1, so the mesh quality was considered satisfactory.
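To illustrate what "second-order upwind" means for the convective terms, the sketch below computes face values on a uniform 1-D grid using the classic linear-upwind form: the face value is extrapolated from the two upstream cells. This is a textbook simplification, not Airpak's multidimensional gradient-based implementation, and the function name is hypothetical.

```python
from __future__ import annotations


def second_order_upwind_faces(phi: list[float],
                              positive_flow: bool = True) -> list[float]:
    """Face values phi_{i+1/2} between neighbouring cells of a uniform 1-D grid.

    Linear (second-order) upwind: with flow in +x the face value is
    extrapolated from the two upstream cells, 1.5*phi[i] - 0.5*phi[i-1];
    with flow in -x it is 1.5*phi[i+1] - 0.5*phi[i+2]. Where the second
    upstream cell does not exist, this sketch falls back to first-order upwind.
    """
    n = len(phi)
    faces = []
    for i in range(n - 1):  # face between cell i and cell i + 1
        up, far = (i, i - 1) if positive_flow else (i + 1, i + 2)
        if 0 <= far < n:
            faces.append(1.5 * phi[up] - 0.5 * phi[far])
        else:
            faces.append(float(phi[up]))  # first-order upwind at the boundary
    return faces


print(second_order_upwind_faces([0.0, 1.0, 2.0, 3.0, 4.0]))
print(second_order_upwind_faces([0.0, 1.0, 2.0, 3.0, 4.0], positive_flow=False))
```

For a linear profile the interior faces come out exact (1.5, 2.5, 3.5), whereas first-order upwind would return the upstream cell values (1, 2, 3); this reduced numerical diffusion is the usual reason for preferring the second-order scheme.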

Equations (1)–(4) are the governing equations for an incompressible fluid, expressing continuity, momentum, and energy conservation, as follows.

Continuity equation:

Momentum equation:

where p is the static pressure, T is the stress tensor, and \(\vec{g}\) is the gravitational body force.

The stress tensor T is given by

Energy conservation equation:

where \(v_{\text{eff}}\) and \(k_{\text{eff}}\) are the effective fluid viscosity and thermal conductivity, respectively; \(\vec{g}\) is the gravitational acceleration vector, and S represents the volumetric heat generation.
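For orientation, one standard steady-state form of these four equations under assumptions (iii) and (iv) is written out below. It is a textbook form, not necessarily the exact form implemented in Airpak. The constant density ρ, the specific heat c_p, the temperature θ (written θ to avoid a clash with the stress tensor T), and the reading of \(v_{\text{eff}}\) as an effective kinematic viscosity are assumptions. Because \(\nabla\cdot\vec{v} = 0\), the usual \(-\tfrac{2}{3}(\nabla\cdot\vec{v})\mathbf{I}\) term drops out of the stress tensor, and the convective terms can be written in non-conservative form.

```latex
\begin{aligned}
&\nabla\cdot\vec{v} = 0 \\
&\rho\,(\vec{v}\cdot\nabla)\vec{v} = -\nabla p + \nabla\cdot\mathbf{T} + \rho\,\vec{g} \\
&\mathbf{T} = \rho\,v_{\text{eff}}\left[\nabla\vec{v} + (\nabla\vec{v})^{\mathsf{T}}\right] \\
&\rho\,c_p\,(\vec{v}\cdot\nabla)\theta = \nabla\cdot\left(k_{\text{eff}}\,\nabla\theta\right) + S
\end{aligned}
```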

3 Results and Discussion

Figure 2 shows the exhaust air temperature distribution of rack B4 in the seven cases. There was obvious heat accumulation in the lower part of the rack. When baffles parallel to the servers were installed at the lower-side terminal of the servers, the heat accumulation became worse. As the baffle angle increased from 0° to 45°, however, the heat accumulation was gradually alleviated. When the angle increased further from 45° to 75°, the thermal environment deteriorated again. Thus, the optimum thermal distribution occurred with 8-cm baffles at 45°. Under this condition, the temperature distribution between the upper and lower parts of the server was more uniform, and the heat accumulation was eliminated.

Figure 3 shows the rack hotspot temperature of rack B4 in the seven cases. Following Yuan et al. [1], the rack hotspot is defined as the point where the maximum exhaust temperature of each rack is located. As shown in the figure, the rack hotspot temperature was 32.6 °C in case 1, rose sharply to a peak of 34.5 °C in case 2 (8-cm baffles at 0°), and then declined to a minimum of 30.7 °C in case 5 (8-cm baffles at 45°). When the baffle angle increased further from 45° to 75°, the hotspot temperature of rack B4 rose again to 31.5 °C in case 7. Thus, case 5 performed best in terms of rack hotspot temperature: the hotspot temperature dropped by 1.9 °C relative to case 1, which reduces the risk of damage to the servers.
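The hotspot comparison above is simple arithmetic on the quoted values. The sketch below uses only the four hotspot temperatures stated in the text; cases 3, 4, and 6 are not given numerically, so they are omitted rather than guessed. The function names are hypothetical, and `rack_hotspot` just encodes the definition "maximum exhaust temperature of the rack".

```python
# Rack B4 hotspot temperatures (deg C) quoted in the text. Cases 3, 4 and 6
# are not given numerically, so they are left out rather than guessed.
HOTSPOT_C = {1: 32.6, 2: 34.5, 5: 30.7, 7: 31.5}
BASELINE_CASE = 1  # original model without baffles


def rack_hotspot(exhaust_temps_c):
    """Rack hotspot as defined in the text: the maximum exhaust temperature."""
    return max(exhaust_temps_c)


def hotspot_drop(case, data=HOTSPOT_C, baseline=BASELINE_CASE):
    """Hotspot temperature drop relative to the baseline, in deg C.

    Positive values mean the hotspot is cooler than in the baseline case.
    """
    return round(data[baseline] - data[case], 2)


def coolest_case(data=HOTSPOT_C):
    """Case with the lowest hotspot temperature among those listed."""
    return min(data, key=data.get)


for case in sorted(HOTSPOT_C):
    print(f"case {case}: hotspot {HOTSPOT_C[case]:.1f} C, "
          f"drop {hotspot_drop(case):+.1f} C")
print("coolest:", coolest_case())
```

With these values, case 2 comes out 1.9 °C hotter than the baseline, case 5 is 1.9 °C cooler, and case 7 is 1.1 °C cooler.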

Figure 4 shows the temperatures at six different heights of rack B4 in the seven cases. In cases 2 and 3, the temperatures at all six heights were higher than those in case 1. In cases 4, 6, and 7, the temperature at one or more heights was higher than in case 1. Thus, none of these cases met the requirement of a temperature drop at every height. Only in case 5 were the temperatures at all six heights lower than those in case 1. Therefore, the 8-cm, 45° baffles in case 5 achieved a temperature drop at all six heights of rack B4 simultaneously.
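The selection rule used here (a case qualifies only if it is cooler than case 1 at every one of the six heights) can be written as a short check. The temperature profiles below are made-up numbers for illustration, not values read from Figure 4, and the function names are hypothetical.

```python
def drops_at_all_heights(case_temps, baseline_temps):
    """True only if the case is cooler than the baseline at every height."""
    if len(case_temps) != len(baseline_temps):
        raise ValueError("need one temperature per height in both profiles")
    return all(c < b for c, b in zip(case_temps, baseline_temps))


def heights_not_cooled(case_temps, baseline_temps):
    """1-based indices of heights where the case is not cooler than baseline."""
    return [h for h, (c, b) in enumerate(zip(case_temps, baseline_temps), start=1)
            if c >= b]


# Made-up six-height profiles (deg C), NOT data from Figure 4.
baseline = [25.0, 26.0, 27.0, 28.0, 29.0, 30.0]
candidate_a = [24.5, 25.8, 26.9, 27.5, 28.7, 29.6]
candidate_b = [24.5, 26.2, 26.9, 28.3, 28.7, 29.6]

print(drops_at_all_heights(candidate_a, baseline))
print(drops_at_all_heights(candidate_b, baseline),
      heights_not_cooled(candidate_b, baseline))
```

A single warmer height is enough to disqualify a case, which is why cases 4, 6, and 7 fail even though they lower the hotspot temperature.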

4 Conclusions

The studied data center is an actual operating facility located in Nanjing, China, and the numerical model is based entirely on its geometric sizes and operating parameters. The conclusions of this study are as follows:

- Baffles installed at the lower-side terminal of servers can improve the airflow distribution to varying degrees when the baffle angle is greater than 0°.
- The optimum thermal environment is achieved with 8-cm baffles at 45°: the temperature distribution between the upper and lower parts of the server becomes more uniform, and the heat accumulation is eliminated.
- Under the optimum condition, the temperatures at all six heights of the rack drop, and the rack hotspot temperature falls by 1.9 °C, reducing the risk of damage to the servers.

References

1. Yuan, X.L., Wang, Y., Liu, J.X., Xu, X.J., Yuan, X.H.: Experimental and numerical study of airflow distribution optimisation in high-density data centre with flexible baffles. Build. Environ. 140, 128–139 (2018)

2. Lu, T., Lü, X., Remes, M., Viljanen, M.: Investigation of air management and energy performance in a data center in Finland: Case study. Energy Build. 43, 3360–3372 (2011)

3. Schmidt, R., Iyengar, M.: Comparison between underfloor supply and overhead supply ventilation designs for data center high-density clusters. Build. Eng. 13, 115–125 (2007)

4. Yuan, X.L., Liu, J.X., Yang, Y.J., Wang, Y., Yuan, X.H.: Investigation and improvement of air distribution system’s airflow performance in data centers. Procedia Eng. 205, 2895–2902 (2017)

5. Zhang, M.R., An, Q.S., Long, Z.W., Pan, W.X., Zhang, H., Cheng, X.L.: Optimization of airflow organization for a small-scale datacenter based on the cold aisle closure. Procedia Eng. 201, 1893–1900 (2017)

6. Ni, J., Jin, B.W., Zhang, B., Wang, X.W.: Simulation of thermal distribution and airflow for efficient energy consumption in a Small Data Centers. Sustainability 9, 664 (2017)

7. Cruz, E., Joshi, Y.: Coupled inviscid-viscous solution method for bounded domains: application to data-center thermal management. Int. J. Heat Mass Transf. 85, 181–194 (2015)

8. Song, Z.H., Murray, B.T., Sammakia, B.: Long-term transient thermal analysis using compact models for data center applications. Int. J. Heat Mass Transf. 71, 69–78 (2014)

9. Lu, H.J., Zhang, Z.B., Yang, L.: A review on airflow distribution and management in data center. Energy Build. 179, 264–277 (2018)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 51806096) and the Natural Science Foundation of the Jiangsu Higher Education Institutions of China (Grant No. 18KJB560007).


© 2020 Springer Nature Singapore Pte Ltd.


Cite this paper

Yuan, X., Zhou, X., Wang, Y., Liu, J., Xu, X., Tao, Y. (2020). Numerical Analysis of Airflow Distribution in Data Center with Server Baffles. In: Wang, Z., Zhu, Y., Wang, F., Wang, P., Shen, C., Liu, J. (eds) Proceedings of the 11th International Symposium on Heating, Ventilation and Air Conditioning (ISHVAC 2019). ISHVAC 2019. Environmental Science and Engineering(). Springer, Singapore. https://doi.org/10.1007/978-981-13-9528-4_4


DOI: https://doi.org/10.1007/978-981-13-9528-4_4


Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-9527-7

Online ISBN: 978-981-13-9528-4

eBook Packages: Earth and Environmental Science (R0)