Abstract

Neuroeconomics combines classical economics and neuroscience to deepen our understanding of the role played by the human brain in economic decision-making. Neuroeconomics provides a mechanistic, mathematical and behavioural framework for understanding choice behaviour. The aim of this chapter is to organise and synthesise current research on the neural correlates of decision-making, identify major themes and trends in the literature, and present potential areas for future research. Based on an extensive literature review, this chapter presents the primary areas of research related to the neurobiology of economic decision-making. The themes are organised around the role of reinforcement learning systems in valuation and choice, value-based decision-making, decision-making under risk and ambiguity, and intertemporal discounting. In addition, social preferences and context-dependencies in decision-making are discussed. Finally, fallacies in interpretation, methodological issues in neuroeconomics research, current concerns and future directions are summarised.

1 Introduction

Neuroeconomics combines classical economics and neuroscience to deepen our understanding of the role played by the human brain in economic decision-making (Fehr et al. 2005; Goetz and James III 2008). Classical economics is the “science of choice, constrained by scarce resources and institutional structure” (Camerer 2013, p. 426), and neuroeconomics provides a mechanistic, mathematical and behavioural framework for understanding these choices (Glimcher and Rustichini 2004).

The revealed preference approach (Samuelson 1938), which used observable choices to infer unobservable preferences (subject to certain axiomatic constraints), fundamentally changed the field of economics. Human behaviour was attributed to the maximisation of some utility function, a hypothesis that could be rigorously tested through empirical means. However, psychological and neurobiological accounts of preference were ignored for several decades.

Economic theorists were faced with a proliferation of axioms aimed at explaining the same arrays of choices. A powerful way to adjudicate among theories was to insist that theorists articulate specific predictions about the underlying neural correlates (Glimcher and Fehr 2014). Herbert Simon’s assertion that humans are boundedly rational (Simon 1955; 1997), Kahneman and Tversky’s (1979) modelling of real-life choices, and Gigerenzer and Todd’s (1999) findings on the trade-off between efficient choice, computational complexity and the use of simple heuristics in decision-making highlighted that humans often fail to take optimal courses of action in their daily lives. In parallel, advances in neuroscience and cognitive psychology revealed the constraints on neural activity (in effect defining the boundaries of boundedly rational decision-making) and thus supported the development of neuroeconomics.

The interest of economists in psychology and neurobiology dates back to the late nineteenth century, when Thorstein Veblen (1898) posited in his essay “Why is Economics Not an Evolutionary Science?” that economic behaviour can be understood by studying the underlying mechanisms that create it. Early economists, including Edgeworth, Fisher and Ramsey, also entertained fantastic ideas about developing “hedonimeters” to link biology and choices directly (see Colander 2007). However, significant growth in the study of neuroeconomics came only in the late 1990s and early 2000s.

Evolutionary theory posits that the singular goal of any behaviour of natural organisms is the maximisation of inclusive fitness, which maximises the survival of the organism’s genetic code. Accordingly, the primary function of the nervous system, which mediates behaviour, is to produce motor responses, under conditions of uncertainty, that yield the highest possible inclusive fitness for the organism. Economic theory identifies the optimal decision for a given context through a rational computational route. Combining this economic optimum with the nervous system’s goal of making decisions aligned with inclusive fitness could help us understand the spectrum of human behaviour (Glimcher 2003).

Neuroeconomics today informs research in a range of management disciplines including finance (Efremidze et al. 2017; Frydman et al. 2014), consumer behaviour (Berns and Moore 2012; Egidi et al. 2008), organisational behaviour (Beugré 2009; Lori 2017), strategy (Hodgkinson and Healey 2011), and others.

1.1 Anatomy and Essential Features of the Brain: Significance for Neuroeconomics

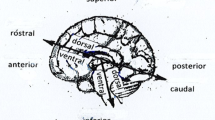

Detailed examination of brain anatomy from a neuroeconomic perspective has centred on the basal ganglia and the cerebral cortex, which form part of the telencephalon, or forebrain. While the cerebral cortex is a much more recently evolved structure, the basal ganglia are evolutionarily more ancient. Sub-regions of the basal ganglia such as the striatum, which consists of the caudate and putamen (Footnote 1), the globus pallidus and the substantia nigra pars reticulata (Footnote 2), together with the dopaminergic system (Footnote 3), figure prominently in neuroeconomic studies. The amygdala (Footnote 4), part of the telencephalon, and the hypothalamus (Footnote 5), part of the diencephalon, receive inputs from many sensory systems and in turn initiate and regulate activity in several frontal cortical areas (Glimcher 2014a). A general anatomy of the brain, with relevant regions, is presented in Fig. 1.

Certain features of the brain stand out from a neuroeconomic perspective, namely modularity of the brain, neuronal stochasticity, synaptic plasticity and asymmetric information flow.

The behavioural and cognitive abilities of the brain are deemed to be the outcome of a complex, multi-tiered processing system involving independent sub-processes that interconnect with other systems through well-defined inputs and outputs. Fodor (1983; see Footnote 6) highlighted the presence of such independent modules, which appear to handle a particular class of information, perform specific computations and pass their outputs on to other modules for further processing (Glimcher 2014a).

The strong limits on the firing rates of cortical neurons set upper bounds on the amount of information that a neuron can transmit. The stochastic nature of neuronal firing connects the analysis of neuronal activity to the random utility, or discrete choice, approach. Synaptic plasticity is the ability of synapses to strengthen or weaken over time; synaptic modification is the biochemical mechanism by which information is stored in the nervous system over periods of days or longer (Glimcher 2014a).

Selective or asymmetric flow of information can lead to time lags between the various parts of the brain that receive the same information. Evolutionary processes favour the conservation of energy, and this imposes certain limitations on the brain, which is the most energy-intensive organ in the human body. These limitations take the form of limited neural connectivity, which constrains the free flow of information in the brain (Alonso et al. 2014).

1.2 Tools and Methods in Neuroeconomics

Techniques used by neuroeconomists to study brain activity can be broadly classified into two categories: measurement techniques, which measure changes in brain function while a subject engages in an experimental task, and manipulation techniques, which examine how disruptions of neural processing in specific regions change choices and related behaviour. Measurement techniques are “correlational”, while manipulation techniques are deemed “causal” in their approach (Ruff and Huettel 2014).

Neuroscientists typically select a research method based on the requisite temporal resolution, spatial resolution and invasiveness (Ruff and Huettel 2014). The methods employed to identify the neural foundations of economic behaviour span a wide range of techniques, such as neurophysiological measures, neuroimaging studies, neuromodulation (including neurostimulation) and brain lesion analysis. The commonly used tools are fMRI, fNIRS, EEG, MEG, PET, TMS, tDCS, single-unit recording, pharmacological interventions and brain lesion studies (see Crockett and Fehr 2014; Efremidze et al. 2017; Houser and McCabe 2014; Kable 2011; Lin et al. 2010; Ruff and Huettel 2014; Vercoe and Zak 2010; Volk and Köhler 2012; Zhao and Siau 2016). A brief summary of the primary tools and methods used in neuroeconomics is presented in Table 1.

Other methods such as eye tracking (fixations and saccades), scanpaths, pupil size, blink rate, skin conductance rate, pulse rate and blood pressure have also been used to detect biological and emotional responses to stimuli in decision-making contexts (Zhao and Siau 2016).

Besides these, in non-human primates and rats, invasive methods such as microstimulation and optogenetics are used to detect and manipulate electrical activity in neurons with the help of inserted microelectrodes, intracranial light application or genetic engineering (Ruff and Huettel 2014).

Since different methods have unique strengths and weaknesses, current research in the quest to understand the neurological underpinnings of human behaviour attempts to combine techniques, either sequentially or in parallel, so that its findings receive complementary support (Zhao and Siau 2016).

In this chapter, the primary research themes in neuroeconomic decision-making are identified and organised around the role of reinforcement learning systems in valuation and choice, value-based decision-making, decision-making under risk and ambiguity, and intertemporal discounting. In addition, social preferences and context-dependencies in decision-making are discussed. Finally, fallacies in interpretation, methodological issues in neuroeconomics research, current concerns and future directions are summarised.

2 Role of Reinforcement Learning Systems in Valuation and Choice: Evidence from Neuroeconomics

Natural organisms are constantly challenged to optimise their behaviour in various environments. Reinforcement learning (RL) provides a computational framework for how they rely on prior experience to make predictions about the current context and choose behaviours that lead to rewards or to the avoidance of punishments. Since the computational algorithms of RL appear to have distinct neural correlates, such as the phasic activity of dopamine neurons (Dayan and Niv 2008), neuroeconomists believe that an understanding of neural systems can contribute to the comprehension of choice behaviour. These neurally distinct learning systems are detailed below.

2.1 Reinforcement Learning Systems

2.1.1 Pavlovian Learning

Pavlov (1927) discovered that when a stimulus is repeatedly paired with a reward or punishment, the stimulus by itself can, on subsequent trials, trigger behaviour associated with the reward or elicit aversion associated with the punishment. For example, if the reward is food, the stimulus can elicit salivation; if the punishment is pain, the stimulus will elicit a withdrawal reflex. Pavlovian conditioning thus enables the organism to predict the likely occurrence of significant events from the stimuli that precede them. Brain imaging studies have strongly implicated several brain structures in this learning process, particularly the amygdala, the ventral striatum and the orbitofrontal cortex (Gottfried et al. 2003; O’Doherty et al. 2002).
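The chapter does not commit to a particular learning rule, but a standard way to formalise this kind of Pavlovian prediction learning is the Rescorla–Wagner rule, in which the cue’s associative strength V moves towards the delivered outcome in proportion to the prediction error. The sketch below is illustrative only; the learning rate `alpha` and the trial schedule are hypothetical choices, not values from the studies cited.

```python
def rescorla_wagner(outcomes, alpha=0.3, v0=0.0):
    """Rescorla-Wagner learning for a single cue.

    outcomes: reward delivered on each trial (1.0 = food, 0.0 = nothing).
    Returns the prediction V held *before* each trial and the prediction
    error (outcome - V) experienced on that trial.
    """
    v = v0
    predictions, errors = [], []
    for r in outcomes:
        delta = r - v              # prediction error
        predictions.append(v)
        errors.append(delta)
        v += alpha * delta         # learning is proportional to the error
    return predictions, errors


# Hypothetical schedule: 10 cue-food pairings (acquisition), then 5 cue-alone trials (extinction)
predictions, errors = rescorla_wagner([1.0] * 10 + [0.0] * 5)
for trial, (v, d) in enumerate(zip(predictions, errors), start=1):
    print(f"trial {trial:2d}  prediction={v:.2f}  error={d:+.2f}")
```

During acquisition the prediction climbs towards 1 while the error shrinks; once food is withheld, the error turns negative and the prediction decays.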

2.1.2 Instrumental Conditioning

Instrumental conditioning associates actions with outcomes. These associations are shaped by reinforcement and strengthen or weaken depending on the desirability of the outcome; the purpose is to choose actions that optimise goal achievement (Dayan and Niv 2008). Learning in instrumental systems is correlated with activity in the ventral striatum (Daw and O’Doherty 2014).
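As a hedged illustration of instrumental learning, the sketch below applies a delta-rule action-value update (the single-state case of Q-learning) to a hypothetical two-action task: each chosen action’s value is strengthened or weakened by its outcome, and choices follow a softmax rule. The reward probabilities, the learning rate `alpha` and the inverse temperature `beta` are assumptions for illustration, not parameters from the studies cited.

```python
import math
import random


def softmax_choice(q, beta, rng):
    """Pick an action with probability proportional to exp(beta * Q)."""
    m = max(q)
    weights = [math.exp(beta * (v - m)) for v in q]  # subtract max for numerical stability
    return rng.choices(range(len(q)), weights=weights)[0]


def instrumental_learning(reward_fn, n_actions=2, n_trials=200, alpha=0.2, beta=5.0, seed=0):
    """Learn action values from outcomes; reward_fn(action, rng) returns the outcome."""
    rng = random.Random(seed)
    q = [0.0] * n_actions            # learned action -> outcome values
    choices = []
    for _ in range(n_trials):
        a = softmax_choice(q, beta, rng)
        r = reward_fn(a, rng)        # outcome of the chosen action
        q[a] += alpha * (r - q[a])   # strengthen or weaken only the chosen action's value
        choices.append(a)
    return q, choices


# Hypothetical task: action 0 is rewarded on 80% of trials, action 1 on 20%
q, choices = instrumental_learning(lambda a, rng: float(rng.random() < (0.8 if a == 0 else 0.2)))
print("learned values:", [round(v, 2) for v in q])
print("share of choices for action 0:", choices.count(0) / len(choices))
```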

Habitual Learning. Habitual learning is a special case of instrumental learning in which the learner repeats actions that have previously resulted in “satisfaction” without evaluating each new instance of the action. This implicit neural autopilot is activated for repeated, low-value decisions but is incapable of analysing new contexts or re-evaluating previously experienced ones. Habitual actions are also insensitive to short-term value changes and hence display short-run choice elasticities close to zero (Camerer 2013).

It has been demonstrated that increasing activity in the right posterolateral striatum over the course of training relates to the emergence of habitual control (Tricomi et al. 2009). Diffusion tensor imaging has also shown that the strength of connectivity between the right posterolateral striatum and the premotor cortex correlates significantly with a preference for habitual over goal-directed responding (de Wit et al. 2012).

The above three systems can be categorised as model-free RL where experiences are used to learn about values directly in order to arrive at optimal solutions without resorting to estimations or reference to a world model.

2.1.3 Goal-Directed or Model-Directed Learning

Goal-directed learning is classified as a model-based learning system, in which experience is used to construct a model that best approximates the transitions and outcomes in the environment. Unlike model-free values, which must be learned directly from experience, goal values can also be inferred indirectly, for example through deliberation and communication; this capacity appears to be unique to humans (Daw and O’Doherty 2014). In a model-based system, the goal values associated with different choices are integrated with the help of abstract information to arrive at an inclusive choice. The ventromedial prefrontal cortex (vmPFC), which has been of keen interest in neuroeconomics owing to numerous reports of its correlation with expected value/utility, is associated with goal-directed learning (Balleine and O’Doherty 2010). Studies have also observed that expected value correlates in the vmPFC reflect model-based more than model-free values (Daw et al. 2011). Further, diffusion tensor imaging has shown that goal-directed choice behaviour is correlated with the strength of the connections between the ventromedial prefrontal cortex and the dorsomedial striatum (de Wit et al. 2012).
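A classic behavioural way to tell goal-directed from habitual (model-free) control apart is outcome devaluation. The sketch below contrasts the two under simplifying assumptions: a hypothetical world in which each action deterministically yields one outcome, a model-free learner that caches action values from experienced rewards, and a model-based learner that recomputes action values from its model of transitions and current outcome values. All names and numbers are illustrative.

```python
# Hypothetical world: each action deterministically leads to one outcome.
transitions = {"press_lever": "food", "pull_chain": "water"}   # learned world model
outcome_value = {"food": 1.0, "water": 0.6}                    # current worth of each outcome


def model_based_values(transitions, outcome_value):
    """Goal-directed: derive action values on demand by consulting the model."""
    return {a: outcome_value[o] for a, o in transitions.items()}


def train_model_free(transitions, outcome_value, n_trials=50, alpha=0.2):
    """Model-free: cache action values from experienced rewards; no model is kept."""
    cached = {a: 0.0 for a in transitions}
    for _ in range(n_trials):
        for a, o in transitions.items():
            cached[a] += alpha * (outcome_value[o] - cached[a])
    return cached


cached = train_model_free(transitions, outcome_value)

# Devalue food (e.g. by satiety) WITHOUT any further experience of pressing the lever.
outcome_value["food"] = 0.0
mb = model_based_values(transitions, outcome_value)

best_mf = max(cached, key=cached.get)   # still "press_lever": the cached value is stale
best_mb = max(mb, key=mb.get)           # "pull_chain": the model reflects the devaluation
print("model-free picks:", best_mf, "| model-based picks:", best_mb)
```

The cached values can only change through new experience, which is why habitual responding persists after devaluation while goal-directed choice adapts immediately.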

The above findings associate specific, segregated neural systems with each type of learning. However, these systems also interact, in a facilitatory or adversarial manner, to mediate the control of actions. For instance, human studies have implicated the amygdala and ventral striatum in Pavlovian-instrumental transfer (PIT), a facilitatory interaction in which a Pavlovian association between a cue and a reward can trigger an instrumental association between the reward and an action (Prevost et al. 2012; Talmi et al. 2008). The ventral striatum has also been highlighted in scenarios where instrumental choice is weakened by aversive Pavlovian cues (Chib et al. 2012). Further, lesion studies indicate that the basolateral amygdala and ventral striatum are involved in the conditioned reinforcement effect (Cador et al. 1989). Similarly, neuropsychological models of addiction and compulsive behaviour point to a relationship between habitual and goal-directed systems, with over-active habitual systems overwhelming goal-directed choice and behaviour (Everitt and Robbins 2005).

2.2 Dopamine Reward Prediction Error

Prediction error, the difference between the predicted and the actual reward, is a key variable in learning systems: a large enough prediction error triggers further learning to update predictions. Recent rewards are weighted more heavily than earlier ones, and the weight of past rewards declines exponentially with lag (Daw and Tobler 2014). According to Camerer (2013, p. 429), such reward values closely resemble an “economic construct of a stable utility for a choice”.
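The exponential decline in the weight of past rewards follows from the simple delta-rule update `V(t) = V(t-1) + alpha * (r(t) - V(t-1))`, a common formalisation that is assumed here. Unrolling it from `V(0) = 0` gives a weighted sum in which the reward `k` trials back carries weight `alpha * (1 - alpha) ** k`. The sketch checks this equivalence numerically on a hypothetical reward history.

```python
def delta_rule(rewards, alpha, v0=0.0):
    """Incrementally update a value estimate from a reward history (oldest first)."""
    v = v0
    for r in rewards:
        v += alpha * (r - v)          # move towards the reward by a fraction alpha of the error
    return v


def lag_weights(n, alpha):
    """Implied weight on the reward k trials back (k = 0 is the most recent)."""
    return [alpha * (1 - alpha) ** k for k in range(n)]


alpha = 0.3
rewards = [1, 0, 0, 1, 1, 0, 1]       # hypothetical history, oldest first
weights = lag_weights(len(rewards), alpha)
explicit = sum(w * r for w, r in zip(weights, reversed(rewards)))
incremental = delta_rule(rewards, alpha)
print([round(w, 3) for w in weights])  # weights shrink geometrically with lag
print(incremental, explicit)           # the two computations agree
```

The learning rate therefore sets both how fast predictions adapt and how quickly older rewards are forgotten.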

Studies have posited that dopamine signals the reward prediction error of a particular event, that is, changes in the anticipated value of rewards. Dopamine neurons adjust their firing rates in line with the reward prediction error: a positive surprise raises the firing rate, a negative surprise lowers it, and a reward that arrives exactly as predicted leaves it unchanged (Bayer and Glimcher 2005; Nakahara et al. 2004). This implies that dopamine neurons encode the reward prediction error. Studies have also identified neurons in dopaminergic target areas that code the values of specific actions, keep track of which actions have just been produced, and even pass on expectations about the rewards that can be anticipated in the immediate future (Lau and Glimcher 2008). Further experiments have revealed that interventions that stimulate the dopaminergic system can influence the rate of learning (Pessiglione et al. 2006). These studies suggest that the dopaminergic system is used to imprint into the brain the subjective values of goods and actions learned from experience.
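The prediction-error account of dopamine is often formalised with temporal-difference (TD) learning. The sketch below is a minimal TD(0) model under stated assumptions: a trial is a hypothetical chain of three time steps from cue to reward, the cue itself arrives unpredictably, and the learning rate and discount factor are illustrative. It reproduces the pattern described above: before learning the error occurs at reward delivery, after learning it moves to the cue and is zero for a fully predicted reward, and omitting the reward produces a negative error.

```python
def run_trial(V, reward, alpha=0.2, gamma=1.0, learn=True):
    """Run one cue -> reward trial through states V[0..T-1] with TD(0).

    The cue appears at state 0; the reward arrives on leaving the last state.
    Returns the TD errors: first at cue onset, then at each time step.
    """
    T = len(V)
    # Cue onset: the baseline before an unpredictable cue is taken to predict 0,
    # so the error equals the discounted value that the cue now announces.
    errors = [gamma * V[0] - 0.0]
    for t in range(T):
        r = reward if t == T - 1 else 0.0
        v_next = V[t + 1] if t + 1 < T else 0.0   # the trial ends after the last state
        delta = r + gamma * v_next - V[t]         # TD (reward prediction) error
        if learn:
            V[t] += alpha * delta
        errors.append(delta)
    return errors


V = [0.0, 0.0, 0.0]                              # hypothetical time steps between cue and reward
naive = run_trial(V, reward=1.0)                 # surprise at reward delivery
for _ in range(300):
    run_trial(V, reward=1.0)                     # repeated cue-reward pairings
trained = run_trial(V, reward=1.0, learn=False)  # error now at cue onset, none at reward
omitted = run_trial(V, reward=0.0, learn=False)  # negative error when the expected reward is omitted
print("naive:  ", [round(e, 2) for e in naive])
print("trained:", [round(e, 2) for e in trained])
print("omitted:", [round(e, 2) for e in omitted])
```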

2.3 Future Directions

Learning systems play an important role in valuation and choice. However, how and why a particular system comes to dominate behaviour at a given moment is still largely unanswered, and understanding is also limited about how neural regions interconnect to compete or collaborate. More clarity is needed on the relationship between model-based and model-free reinforcement learning systems. While some hypotheses have been proposed to explain the competition between goal-directed and habitual behaviours, these have yet to be mapped to neural mechanisms. Similar associations need to be elucidated for Pavlovian versus instrumental competition (Daw and O’Doherty 2014).

Studies have shown that when reward contingencies change, organisms do not “unlearn” previously learned predictive associations; rather, they acquire a new association that may suppress the earlier one. It is unclear how this process fits within the RL framework.

Current research in neuroeconomics has considered simple cases of classical and instrumental conditioning. How the dopaminergic system operates in more complicated scenarios, such as when multiple actions contribute to one or more outcomes, or in hierarchical tasks, remains unanswered and offers interesting avenues for future research.

3 Value-Based Decision-Making

A biological framework for understanding choice behaviour, based on how millions of neurons interact across different brain systems, is a major quest in neuroeconomic research. Value-based decision-making refers to a process in which choices are guided by the subjective valuation of available options (Camerer 2013; Platt and Glimcher 1999; Rangel and Hare 2010). Two separate academic streams have emerged in decision-making research. Sensory neuroscientists (see Newsome, Britten, and Movshon 1989) study perceptual decision-making through the lens of sensation, while motor neuroscientists (see Glimcher 2003) approach the problem from the perspective of subjective valuation, that is, the idiosyncratic preferences or internal representations that guide choice. In this section, we follow the subjective valuation path and explore the basic principles and neural correlates of value-based decision-making. We also consider associated streams of research, such as cost-based decision-making and debates around utility measurement.

3.1 Valuation and Choice

Sequentially arranged neural mechanisms of valuation and choice together make up the value-based decision-making framework. The valuation mechanism learns, stores and retrieves the values of the actions under consideration, and the choice mechanism uses the output of the valuation circuit to generate an actual choice from the available options (Glimcher 2014b). The valuation mechanism incorporates idiosyncratic preferences, such as those over risk and time, through a variety of mechanisms including reinforcement learning processes. Choice mechanisms focus on selecting, from the available choice set, the element with the highest value.

The Choice Circuit and its Neural Correlates. Understanding the biological mechanisms through which the brain implements the argmax operation of neoclassical choice theory, that is, identifying the highest-valued option in the current choice set, has been a principal goal of neuroeconomic research over the last two decades. The current consensus holds that subjective values are encoded through the topographical features of brain maps, with their scalar magnitudes encoded in neural firing rates. The stochasticity of neural firing rates could be responsible for the kind of behavioural variability described by the random utility models of economics (see McFadden 2005).
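The link between noisy value signals and random utility models can be illustrated with the textbook logit case: if each option’s value is perturbed by independent Gumbel (extreme-value) noise before an argmax, the resulting choice frequencies follow the logit (softmax) formula. The sketch below checks this by simulation. The option values, noise scale and sample size are hypothetical, and Gumbel noise is a modelling assumption, not a claim about the actual distribution of neural noise.

```python
import math
import random


def logit_probs(values, scale=1.0):
    """Closed-form logit choice probabilities for a random utility model."""
    m = max(values)
    exps = [math.exp((v - m) / scale) for v in values]   # shift by max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def noisy_argmax(values, scale, rng):
    """Add i.i.d. Gumbel noise to each value and pick the largest (stochastic argmax)."""
    # If E ~ Exponential(1), then -log(E) follows a standard Gumbel distribution.
    noisy = [v - scale * math.log(rng.expovariate(1.0)) for v in values]
    return max(range(len(values)), key=lambda i: noisy[i])


rng = random.Random(42)
values = [1.0, 0.5, 0.0]        # hypothetical subjective values of three options
n = 20000
counts = [0] * len(values)
for _ in range(n):
    counts[noisy_argmax(values, 1.0, rng)] += 1

empirical = [c / n for c in counts]
predicted = logit_probs(values)
print("simulated:", [round(p, 3) for p in empirical])
print("logit:    ", [round(p, 3) for p in predicted])
```

The highest-valued option is chosen most often but not always, which is the signature of random utility choice.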

Research has shown that signals closely related to subjective value can be detected in neurons in three interconnected topographic maps, namely the superior colliculus, the frontal eye fields and the lateral intraparietal area (Ding and Hikosaka 2006; Platt and Glimcher 1999). Further research has found that, under simulated conditions, neurons encode expected utility (combining reward magnitude and probability) rather than choice probability, and do so stochastically, which could account for unpredictability in behaviour. This lends support to both neuroscientific and economic explanations of decision variables (Dorris and Glimcher 2004). In addition, firing rates that encode subjective value while organisms consider their choice set reconfigure to encode the choice once a decision has been made (Louie and Glimcher 2010).

The Valuation Circuit and its Neural Correlates. Studies of dopamine neurons and fMRI signals have found that activity in the striatum and the ventromedial prefrontal cortex consistently predicts people’s preferences (Chib et al. 2009; Kable and Glimcher 2007; Levy and Glimcher 2011; Sanfey et al. 2003). Human preferences for various types of rewards are encoded in a single common neural currency, and the subjective value signals generated by these areas reliably correlate with and predict choice behaviour. A complex network of brain areas contributes to the subjective value signals generated in the medial prefrontal cortex and the striatum. For instance, the dorsolateral prefrontal cortex provides critical inputs for valuing social cooperation and goods that require or invoke self-control, while the orbitofrontal cortex contributes to the valuation of many consumable rewards. Neurons in the amygdala play a critical role in the emotional regulation of value by generating the contextual effects of fear and stress.

Many researchers support a multi-stage model of how subjective valuation signals determine decisions (Levy and Glimcher 2012; Platt and Plassmann 2014). In this model, the varied features of the options are first combined into subjective valuation signals, which are then transformed into action valuation signals. Finally, the valuation signals are compared, and stored assessments are updated to improve future choices. These stages need not operate in strict sequence, nor are they fully separable.

3.2 Cost-Based Decision-Making

Neural mechanisms have been shown to play an active part in discriminating between the costs associated with a choice. These costs can be broadly divided into energy costs (that is, the effort expended in pursuing a choice) and costs arising from delay in rewards. The anterior cingulate cortex, the orbitofrontal cortex and the striatum are the key brain areas associated with cost-based decision-making, and there is evidence that effort and delay costs are handled by different neural mechanisms (Denk et al. 2005; Prevost et al. 2010; Wallis and Rushworth 2014). Model-based fMRI and computational modelling studies have also demonstrated that individuals are differentially sensitive to cognitive and physical effort, with the amygdala playing an important role in valuing rewards associated with cognitive effort.
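To make differential effort sensitivity concrete, the sketch below assumes a parabolic effort-discounting function, SV = R − k·E², which is one of several functional forms used in the effort-discounting literature (linear and hyperbolic forms are also common). The two sensitivity parameters and the option values are hypothetical; the point is only that a person with separate sensitivities for physical and cognitive effort can prefer the high-effort option in one domain and the low-effort option in the other.

```python
def effort_discounted_value(reward, effort, k):
    """Subjective value under an assumed parabolic effort cost: R - k * E**2."""
    return reward - k * effort ** 2


def choose(options, k):
    """Return the name of the option with the highest effort-discounted value."""
    return max(options, key=lambda name: effort_discounted_value(*options[name], k))


# Hypothetical options: (reward, effort level)
options = {"easy": (5.0, 1.0), "hard": (10.0, 3.0)}

# Hypothetical individual who finds cognitive effort costlier than physical effort
k_physical, k_cognitive = 0.2, 0.8

print("physical effort: ", choose(options, k_physical))    # easy 4.8 vs hard 8.2
print("cognitive effort:", choose(options, k_cognitive))   # easy 4.2 vs hard 2.8
```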

3.3 Debates Around Utility Measurement

Measuring and comparing reward utility for economic decision-making is complicated because utility takes several forms, including experienced utility, decision utility, anticipated or predicted utility, and remembered utility (see Berridge and Aldridge 2008 for a taxonomy of reward utilities). Some clarity has emerged about the neural correlates of a few types of utility, while confusion remains around others. For instance, research shows that the orbitofrontal cortex is robustly associated with experienced utility, but debate continues about whether dopamine mediates a pure form of decision utility or remembered utility as a prediction error mechanism of reward learning (Bayer and Glimcher 2005; Berridge 2012; Glimcher 2011; Niv et al. 2012). The entanglement and conflation of distinct utility measures also leads to misrepresentations. For instance, decision utility, which alludes to “wanting”, and experienced utility, which is synonymous with “liking”, are frequently treated as equivalent, although they are associated with distinct neural systems and occur at different points in time. Integrating the diverse forms of utility remains a challenge given the multidimensionality of the problem (Witt and Binder 2013).

3.4 Future Directions

Theoretical models and empirical studies have begun to link the traditionally divergent models of perceptual and valuation-based decision-making. While findings on valuation circuits have been generalised to discrete and simple choices, it is not yet clear whether these circuits can explain more abstract and complex choices. Further, even though these ubiquitous circuits have been generalised to the study of decision-making, their general boundary conditions remain unknown. Glimcher (2014b) recommends further investigation of models of the valuation circuit and its interactions with the choice circuit. Valuation circuits are riddled with redundancies, and it would be interesting to investigate whether these contribute to different stages of the decision-making process and thus represent different mental states (Platt and Plassmann 2014). Another interesting line of research would be to integrate pharmacological results with those from neurophysiology to determine how neuromodulators such as dopamine affect neuronal encoding (Wallis and Rushworth 2014).

4 Intertemporal Choice

Intertemporal choice, that is, choosing between outcomes occurring at different points in time, is associated with complex neural mechanisms that encode proximate rewards and predict and evaluate distal rewards (Camerer 2013).

4.1 Intertemporal Discounting

Across the species tested, future rewards are consistently discounted relative to immediate rewards. The vast majority of studies have found that the discounting of future outcomes tends to follow hyperbolic and quasi-hyperbolic functions rather than exponential functions (Ainslie and Haendel 1983; Soman et al. 2005). Similar results have been obtained in research on impulsive behaviour among addicts (Ainslie 1975).
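The practical difference between these functional forms shows up as preference reversals. The sketch below uses the standard exponential (δ^D), hyperbolic (1/(1 + kD)) and quasi-hyperbolic (β-δ) discount functions with hypothetical parameter values, and asks whether a larger, later reward (100 at delay D + 5) is preferred to a smaller, sooner one (50 at delay D) when the pair is viewed now (D = 0) and from a distance (D = 20). The exponential discounter is time-consistent, while the hyperbolic and quasi-hyperbolic discounters switch from the smaller-sooner to the larger-later option as both rewards recede into the future.

```python
def exponential(delay, delta=0.9):
    """Exponential discounting: constant per-period discount factor."""
    return delta ** delay


def hyperbolic(delay, k=0.5):
    """Hyperbolic discounting: 1 / (1 + k * delay)."""
    return 1.0 / (1.0 + k * delay)


def quasi_hyperbolic(delay, beta=0.5, delta=0.95):
    """Quasi-hyperbolic (beta-delta) discounting: extra penalty on anything not immediate."""
    return 1.0 if delay == 0 else beta * delta ** delay


def prefers_larger_later(discount, front_delay, small=50.0, large=100.0, gap=5):
    """True if `large` at front_delay + gap beats `small` at front_delay."""
    return large * discount(front_delay + gap) > small * discount(front_delay)


for name, fn in [("exponential", exponential), ("hyperbolic", hyperbolic),
                 ("quasi-hyperbolic", quasi_hyperbolic)]:
    now = prefers_larger_later(fn, front_delay=0)
    later = prefers_larger_later(fn, front_delay=20)
    print(f"{name:17s} choose larger-later?  now: {now}   at a distance: {later}")
```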

fMRI experiments based on the dual-systems hypothesis have observed that different sets of brain areas are activated during intertemporal choice. The ventral striatum (VS), posterior cingulate cortex (PCC) and ventromedial prefrontal cortex (vmPFC) show higher activation for choices involving an immediate reward than for choices involving only delayed rewards, whereas the posterior parietal cortex (PPC) and lateral prefrontal cortex (LPFC) are activated to a greater extent than other regions across the full spectrum of choices. Kable and Glimcher (2010) disputed the dual-system interpretation, contending that these regions might be responding to the larger subjective value of immediate rewards rather than to their immediacy. Blood-oxygen-level-dependent (BOLD) activity in the VS, PCC and vmPFC seems to support this contention, as these regions correlate more closely with subjective value than with objective reward dimensions (Kable and Glimcher 2007). While the role of the LPFC and PPC is still disputed, multiple studies have affirmed that the VS, PCC and vmPFC play a significant role in intertemporal choice (Ballard and Knutson 2009; Kable and Glimcher 2010; Pine et al. 2009). Peters and Büchel (2010) have suggested that a relationship exists between working memory, time preferences and patience, which adds salience to future rewards.

4.2 Self-control

Dual-system accounts of intertemporal choice are closely linked to notions of self-control. Thaler and Shefrin (1981) postulated that a battle for internal control rages between a myopic “doer” and a farsighted “planner”. The “doer” can be associated with the limbic system and the “planner” with the prefrontal cortex. Hare et al. (2009) examined the neural circuitry associated with the planner-doer and self-control dimensions and found a strong correlation between dorsolateral prefrontal cortex (DLPFC) activity and self-control choices. DLPFC’s significance for self-control has been corroborated by experiments in which temporary disruption of the region by transcranial magnetic stimulation (TMS) made subjects more impatient. The left DLPFC has also been associated with a range of task-related functions involving working memory and inhibitory control (Camerer 2013; Peters and Büchel 2010).

4.3 Future Directions

Questions remain about the neural correlates of intertemporal discounting. It is possible that the brain regions activated while appraising immediate rewards differ from those engaged during discounting calculations (Harrison 2008). Considerable research effort is being directed towards resolving the debate between dual-system and unified-system accounts of discounting. While individual neural units could be indifferent to subjective value, the summed activity across these units could still produce prominent BOLD activity across a wider brain region; this hypothesis remains open for examination. Further, different subregions within the same brain region show different rates of discounting (Tanaka et al. 2004), which calls for further examination.

Harrison (2008) also emphasises the need for more precise operationalisation of the variables studied in intertemporal discounting, such as the front-end delay on earlier options, because confounds of this kind lead to competing explanations for apparently huge discount rates.
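A small worked example shows one way a missing front-end delay can inflate estimated discount rates. Suppose, hypothetically, that a participant discounts hyperbolically with k = 0.1 per week. If their indifference point between an earlier amount and a later amount one week further on is converted into an annual rate under an exponential model, the implied rate depends heavily on how far in the future the earlier option sits. The parameter values are assumptions for illustration only.

```python
def hyperbolic_indifference(early, front_end_weeks, gap_weeks, k):
    """Later amount that a hyperbolic discounter (SV = A / (1 + k * D), D in weeks)
    values equally to `early` received after `front_end_weeks`."""
    return early * (1 + k * (front_end_weeks + gap_weeks)) / (1 + k * front_end_weeks)


def implied_annual_rate(early, late, gap_weeks):
    """Annual rate r implied by indifference under exponential discounting:
    early = late / (1 + r) ** (gap_weeks / 52)."""
    return (late / early) ** (52 / gap_weeks) - 1


k = 0.1   # hypothetical weekly hyperbolic discount parameter
for front_end in (0, 4, 26):
    late = hyperbolic_indifference(100.0, front_end, gap_weeks=1, k=k)
    rate = implied_annual_rate(100.0, late, gap_weeks=1)
    print(f"front-end delay {front_end:2d} weeks: indifferent to {late:.2f} one week later"
          f" -> implied annual rate {rate:.0%}")
```

The same underlying preferences yield very different "annual discount rates", so estimates from designs without a front-end delay cannot be compared directly with those that include one.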

Demographic and socio-economic factors also seem to play a role in intertemporal choice, with Westerners displaying larger time-discount rates than Easterners. Future studies could investigate the interactions between biological, cultural and socio-economic factors in intertemporal choice (see Read and Read 2004; Takahashi et al. 2010).

5 Risky Choice

Choice under risk and ambiguity constitutes a distinctive form of decision-making because each option can yield multiple outcomes with varying probabilities (Camerer 2013). This section examines the neural processes and mechanisms involved in choice under risk and ambiguity.

Whatever the form of uncertainty, options have to be assigned values for comparison and selection. Values can be assigned either from outcomes and their probabilities or from standard statistical measures of the outcome distribution, such as mean, variance and skewness. Neural structures engaged in value encoding, such as dopamine neurons, the striatum, the orbitofrontal cortex and the medial prefrontal cortex, have been associated with processing probability and outcome information (Tobler and Weber 2014).
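The second way of assigning value can be made concrete on simple gambles. The sketch below computes the mean, variance and skewness of discrete gambles, the summary statistics a moments-based (for example mean-variance) account would use, for three hypothetical options with the same expected value of 50 but different risk profiles.

```python
def moments(outcomes, probs):
    """Mean, variance and skewness of a discrete gamble."""
    mean = sum(p * x for x, p in zip(outcomes, probs))
    var = sum(p * (x - mean) ** 2 for x, p in zip(outcomes, probs))
    third = sum(p * (x - mean) ** 3 for x, p in zip(outcomes, probs))
    skew = third / var ** 1.5 if var > 0 else 0.0   # standardised third central moment
    return mean, var, skew


gambles = {
    "sure thing": ([50], [1.0]),
    "coin flip": ([0, 100], [0.5, 0.5]),
    "long shot": ([0, 1000], [0.95, 0.05]),
}
for name, (outcomes, probs) in gambles.items():
    m, v, s = moments(outcomes, probs)
    print(f"{name:10s} mean={m:6.1f}  variance={v:8.1f}  skewness={s:5.2f}")
```

An expected-value comparison treats the three gambles as equivalent, whereas a moments-based account can distinguish them by variance (coin flip versus sure thing) and by skewness (long shot versus coin flip).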

5.1 Choice Under Risk, Ambiguity, Gains and Losses

Ambiguity is characterised by a lack of knowledge of the outcome distribution: a gamble whose outcome distribution is unknown is ambiguous, whereas a gamble with well-defined pay-offs and probabilities is termed risky (Smith et al. 2002). Numerous experiments have shown that people avoid ambiguity for both gains and losses. Under risk, by contrast, people tend to prefer riskier gambles only when facing losses and to avoid them when facing gains (Tversky and Kahneman 1992; Smith et al. 2002).
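
The gain/loss asymmetry under risk can be reproduced with a prospect-theory style value function that is concave for gains and convex for losses. The sketch below uses the median parameter estimates reported by Tversky and Kahneman (1992), α = 0.88 and λ = 2.25, but deliberately omits probability weighting, so it is a simplification of cumulative prospect theory rather than a full implementation.

```python
def value(x, alpha=0.88, lam=2.25):
    """Value function: concave for gains, convex and steeper (by lam) for losses.
    Probability weighting is ignored in this simplified sketch."""
    return x ** alpha if x >= 0 else -lam * (-x) ** alpha


def prefers_sure_thing(sure, prize, p=0.5):
    """True if a sure amount is valued above a gamble that pays `prize`
    with probability p and 0 otherwise."""
    return value(sure) > p * value(prize) + (1 - p) * value(0)


print(prefers_sure_thing(50, 100))    # gain frame: the sure 50 is preferred
print(prefers_sure_thing(-50, -100))  # loss frame: the gamble is preferred
```

Because both options in the loss frame are scaled by the same λ, the reversal here comes from the curvature α alone; λ matters only when gains and losses are mixed within one option.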

In economics and decision theory, the pay-off structure (gain/loss) is treated as separable, if not independent, from the belief structure (ambiguity/risk). Smith et al. (2002) found activity in a neocortical dorsomedial system when participants processed losses in risky gambles, and in a ventromedial system in the other conditions (risky gains, ambiguous gains and ambiguous losses). From these findings, the researchers postulated that the brain areas underlying belief and pay-off structures, while dissociable, are functionally integrated and interact with each other.

Breiter et al. (2001) reported a set of structures associated with decision-making and expectancy that differs from that of Smith et al. (2002): the amygdala, hippocampus, nucleus accumbens, orbitofrontal cortex, ventral tegmentum and sublenticular extended amygdala. Compared with risk, ambiguity elicits increased BOLD activity in the orbitofrontal cortex (Hsu et al. 2005; Levy et al. 2010), the amygdala (Hsu et al. 2005) and, in some studies, the parietal cortex (Bach et al. 2011); this higher activity could signal that information is missing (Tobler and Weber 2014).

Experiments have shown that the subjective value of both risky and ambiguous options is coded in common regions: the medial prefrontal cortex, the posterior cingulate cortex and the striatum (Levy et al. 2010). It is also possible that individual regions represent the decomposed components of risky-choice models while interacting with these common regions to value the options (Tobler and Weber 2014). Individual differences in choice behaviour can also be predicted from the relative activation of the brain areas identified above (Huettel et al. 2006).

5.1.1 Loss Aversion

Tom et al. (2007) showed a strong association between differences in value-related neural activity and the degree of loss aversion inferred from behaviour. Yacubian et al. (2006) found gain-related activity in the ventral striatum and loss-related activity in the amygdala and in temporal lobe regions lateral to the striatum. De Martino et al. (2010) demonstrated that patients with bilateral amygdala lesions did not exhibit any loss aversion (see also Camerer 2013). Other researchers have examined these associations with physiological measures. For instance, Hochman and Yechiam (2011) showed that increases in heart rate and pupil dilation were significantly greater for losses than for comparable gains, while Sokol-Hessner et al. (2009), using skin conductance responses, showed that “perspective-taking” diminishes loss-averse behaviour.
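
Loss aversion “inferred behaviourally” is commonly quantified from accept/reject decisions on mixed 50/50 gambles: a logistic regression of acceptance on the potential gain and loss gives one weight for each, and λ is taken as the ratio of the absolute loss weight to the gain weight. The sketch below illustrates that idea rather than reproducing any cited analysis. The gamble grid and the “true” weights are hypothetical, and the model is fitted to exact acceptance probabilities (soft labels) instead of simulated binary choices, so it should recover λ = 2.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, n_iter=25):
    """Newton-Raphson (IRLS) fit of logistic-regression weights.
    y may hold acceptance probabilities in [0, 1] instead of 0/1 choices."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ w)
        grad = X.T @ (y - p)                          # gradient of the log-likelihood
        hess = X.T @ (X * (p * (1.0 - p))[:, None])   # negative Hessian
        w = w + np.linalg.solve(hess, grad)
    return w


# Hypothetical 50/50 mixed gambles: potential gain G and potential loss L.
G, L = np.meshgrid(np.arange(10.0, 41.0, 2.0), np.arange(5.0, 21.0, 1.0))
G, L = G.ravel(), L.ravel()
X = np.column_stack([np.ones_like(G), G, L])  # intercept, gain, loss

true_w = np.array([0.0, 0.2, -0.4])           # hypothetical weights, lambda = 2
p_accept = sigmoid(X @ true_w)                # probability of accepting each gamble

w = fit_logistic(X, p_accept)
lam = -w[2] / w[1]                            # |loss weight| / gain weight
print(round(lam, 3))
```

With real binary choices the estimate would be noisy, and the neural analogue compares how steeply activity falls with potential losses against how steeply it rises with potential gains.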

5.1.2 Endowment Effect

Neuroeconomic research on the endowment effect (Note 7) is recent and still focuses primarily on identifying basic neural correlates. Weber et al.’s (2007) subjects showed greater amygdala activation when selling digital copies of songs than when buying them. To adjust for the different wealth positions of sellers and buyers in an endowment-effect scenario, Kahneman et al. (1990) introduced a “choice price” option (Note 8). In an fMRI study using high-value consumer goods, Knutson et al. (2008) found that selling prices exceeded choice prices, which in turn exceeded buying prices. Medial prefrontal cortex activation, which is known to correlate positively with gains and negatively with losses (see Knutson et al. 2003), correlated negatively with buying and choice prices and positively with selling prices in this experiment, and the negative correlation was much stronger for buying prices than for choice prices.

Knutson et al. (2008) also found that activation in the right insula was positively and significantly correlated with endowment-effect estimates. Since insula activation is closely identified with distress (Masten et al. 2009; Sanfey et al. 2003), these results support the hypothesis that the amount of distress participants experience is positively related to the size of the endowment effect. However, Tom et al. (2007) found no evidence of activity within the insula or amygdala, regions associated with negative emotions. Instead, they found activity in the ventromedial prefrontal cortex and the dorsal and ventral striatum that increased with potential gains and decreased with potential losses. Moreover, activity decreased more steeply for losses than it increased for equivalent gains, an asymmetry consistent with behavioural loss aversion. Some of these results have been questioned given the limitations of fMRI (Knutson and Greer 2008). A strictly behavioural study comparing the loss aversion of participants with amygdala lesions, who could not process fear, with that of healthy participants offered further conflicting evidence (De Martino et al. 2010): while non-lesioned subjects exhibited standard loss-averse behaviour, subjects with amygdala lesions did not exhibit any loss aversion. A supplemental study found that these differences were confined to loss situations and did not appear in gain situations.

5.2 Statistical Moments and Value Assignments

Studies suggest that different brain regions code statistical measures such as the mean and variance of rewards (Platt and Huettel 2008). The statistical moments of reward distributions can be weighted and integrated to create choice values, against which choice behaviour can be examined (Camerer 2013; Tobler and Weber 2014). However, direct tests that decompose values into several statistical measures at once remain scarce because of limitations in experimental design. Nevertheless, single-cell electrophysiology and neuroimaging studies using specialised designs suggest that mean-variance decompositions are implemented in the orbitofrontal cortex, while the insula appears to decompose risk processing into separate representations of variance and skewness risk (Tobler and Weber 2014).
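
The idea of weighting and integrating statistical moments can be sketched for discrete gambles. The expected value computed from outcomes and probabilities is simply the mean; variance and standardised skewness are added with weights b and c. The linear form V = mean − b·variance + c·skewness and the weights b = 0.01 and c = 1 are hypothetical choices for illustration, not values estimated in the studies cited.

```python
def moments(outcomes, probs):
    """Mean, variance and standardised skewness of a discrete gamble."""
    mean = sum(p * x for x, p in zip(outcomes, probs))
    var = sum(p * (x - mean) ** 2 for x, p in zip(outcomes, probs))
    skew = sum(p * (x - mean) ** 3 for x, p in zip(outcomes, probs)) / var ** 1.5
    return mean, var, skew


def moment_value(outcomes, probs, b=0.01, c=1.0):
    """Hypothetical mean-variance-skewness value: mean - b*variance + c*skewness."""
    mean, var, skew = moments(outcomes, probs)
    return mean - b * var + c * skew


# Two hypothetical gambles with the same mean (10) and variance (900) but opposite
# skew: a small chance of a large gain vs. a small chance of a large loss.
pos_skew = ([100, 0], [0.1, 0.9])
neg_skew = ([-80, 20], [0.1, 0.9])

for name, gamble in (("positive skew", pos_skew), ("negative skew", neg_skew)):
    print(name, moments(*gamble), round(moment_value(*gamble), 3))
```

A pure mean-variance valuation (c = 0) would treat these two gambles as identical; only a separate skewness term, of the kind attributed to the insula above, distinguishes them.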

5.3 Future Directions

Our understanding of the neural mechanisms underlying choice under risk and ambiguity is still rudimentary. It remains unclear whether a single neural system or several systems are engaged simultaneously, and whether and how measures of probability and magnitude are decomposed at the neural level. Dissociations reported in fMRI studies still await confirmation from other methods.

6 Context Effects

Standard deterministic models of normative choice, such as expected utility theory, assume that choices are largely independent of context. However, numerous studies have shown that contextual factors such as the size of the choice set, the framing of the decision problem and temporal history play a critical role in decision-making (Louie and De Martino 2014). Decision-making is a dynamic process, subject to the spatial and temporal context of the choice itself.

Contextual effects span a wide spectrum, including emotional states, motivational states and environmental situations (Buehler et al. 2007; Witt and Binder 2013). Relevant mental states include cognitive overload and attention levels, physiological states such as hunger, exhaustion, pain and discomfort, and emotions such as fear or anxiety. These states can act as constraints or as sources of helpful information: fear, for instance, can be a useful signal of immediate danger, or merely noise. Fear and anxiety can also create a bias towards quick resolution of uncertainty (Camerer 2013; Caplin and Leahy 2001). Contextual factors also include deprivation states such as sleep deprivation, which leads to slow, noisy decisions (Camerer 2013; Menz et al. 2012). Severe deprivation can produce “hot” visceral states in which physiological factors have an overriding influence on estimates of the future. In such states, future visceral states are not properly accounted for, leading to discrepancies between different kinds of utility (Witt and Binder 2013).

6.1 Neural Correlates of Context-Dependent Valuation

Neurobiological constraints that bound neural activity between minimum and maximum levels could be the underlying source of contextual effects (Louie and De Martino 2014). The influence of contextual factors has been observed in the ventromedial prefrontal cortex, a region also associated with the computation of goal values (Plassmann et al. 2008) and the neural coding of prediction error signals (Nieuwenhuis et al. 2005).
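
One mechanism often proposed for bounded, context-dependent value coding is divisive normalisation, in which each option's response is scaled by the summed value of all options in the choice set. The sketch below assumes that form, R_i = R_max · V_i / (σ + Σ_j V_j); the maximum rate R_max, the semi-saturation constant σ and the option values are hypothetical.

```python
def divisive_normalization(values, r_max=100.0, sigma=1.0):
    """Hypothetical normalised firing rates R_i = r_max * V_i / (sigma + sum_j V_j).
    r_max caps the response range; sigma is a semi-saturation constant."""
    total = sum(values)
    return [r_max * v / (sigma + total) for v in values]


two = divisive_normalization([10.0, 8.0])          # two-option choice set
three = divisive_normalization([10.0, 8.0, 6.0])   # same options plus a third

print("gap between the two best options:", two[0] - two[1], "->", three[0] - three[1])
```

Adding a third option compresses the difference between the responses to the two best options. If neural noise stays fixed, a smaller difference would make choices between them less consistent, which is one way a choice-set-size effect could arise from bounded coding.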

De Martino et al. (2006) postulated that framing effects in decision-making are moderated by the emotional system through an affect heuristic. This was based on their finding of significant bilateral amygdala activation when participants made choices consistent with the framing effect. However, it was unclear whether the amygdala activity reflected an input to the choice, emotional modulation during the choice process, or a post-choice response. They also found a strong correlation between framing effects and ventromedial prefrontal cortex (vmPFC) activation, and concluded that the vmPFC plays a prominent role in evaluating and integrating emotional and cognitive information during choice.

An experiment by Sokol-Hessner et al. (2013) using the reverse contrast yielded more clarity, showing heightened activity in the insula, anterior cingulate cortex and dorsolateral prefrontal cortex, areas also implicated in conflict resolution, emotion regulation and response inhibition. The insula is actively involved in encoding bodily sensations (especially discomfort), which is consistent with the discomfort experienced while making risky decisions.

Recent studies suggest that framing evokes different kinds of affect and that overriding this affect limits the framing effect (Miu and Crişan 2011). Individuals vary considerably in their susceptibility to framing. Evidence suggests that the medial orbitofrontal cortex (mOFC) plays a key role in controlling the framing effect by modulating the amygdala's approach–avoidance signal, leading to more consistent, context-independent decisions (De Martino et al. 2006), and mOFC lesions in macaques result in strong framing effects in choice behaviour (Noonan et al. 2010). The amygdala's susceptibility to framing has also been shown to be modulated by a specific polymorphism of the serotonin transporter gene (SERT). This has been linked to reduced functional connectivity between the mOFC and the amygdala, suggesting that participants carrying the SS genotype have a reduced ability to counteract the biasing influence of the frame (Hariri et al. 2002; Louie and De Martino 2014).

Stress, even when artificially induced, can increase risk aversion (see Porcelli and Delgado 2009), and viewing negative, emotion-laden images before choosing influences risk aversion (Kuhnen and Knutson 2011). Stimulating the dorsolateral prefrontal cortex increases risk aversion (Fecteau et al. 2007), while disrupting it decreases risk aversion (Knoch et al. 2006a).

However, context-dependency also introduces a fundamental ambiguity: there is no fixed correspondence between value and firing rate, because the neural response to a given value can be altered by a variety of contextual factors. In fact, it is still not clear whether and how context-dependent neural coding underlies context-dependency at the behavioural level (Louie and De Martino 2014).

6.2 Do Emotions Play a Role in Decision-Making? Neural Evidence

Emotions play a significant role in decision-making and are integral to successful decisions. The emotional content of heuristic decision-making increases with the complexity of decisions (Forgas 1995; Lo and Repin 2002). This has been substantiated neurologically by lesion studies of patients with damage to the ventromedial cortex: the impairment “degrades the speed of deliberation and also degrades the adequacy of choice” (Bechara and Damasio 2005, p. 339). Emotions such as anticipated disappointment and regret act as learning signals that influence future decisions (Coricelli et al. 2007; Steiner and Redish 2014). Dread of an anticipated negative experience has also been linked to neural activity related to physical pain (Dayan and Seymour 2009).

Even though the influence of emotions on decision-making is undisputed, relatively few studies have measured or manipulated emotional variables during decision-making (Lempert and Phelps 2014). Some of the difficulties in studying emotions stem from neuroanatomy. The ventromedial cortex can be divided into caudal/posterior and rostral/anterior areas; the former are directly connected with regions that moderate emotions, whereas the latter are connected only indirectly (Ongur and Price 2000). The caudal/posterior areas process high-probability events and the rostral/anterior areas process less probable outcomes. Given the indirect connections, lower-probability outcomes would need to be more emotionally intense to produce a significant emotional reaction (Bechara and Damasio 2005; Goetz and James III 2008). Outcome probability and emotional intensity are therefore confounded.

Emotion modulates decision-making through two paths: as incidental affect, or through inclusion in the value computation itself. Research has identified distinct affective processes that incidentally influence decisions (Lempert and Phelps 2014). For instance, stress impairs prefrontal cortex function, making the decision process more habitual and automatic. As mentioned earlier, in the affect-as-information model (Schwarz and Clore 1983), affect acts as additional information, even when it is irrelevant, that influences the judgement or decision. Consequently, even subliminal manipulations of emotion affect the valuation of the choice set (Lempert and Phelps 2014).

6.3 Future Directions

Reference points, against which gains and losses are evaluated, are widely used to explain contextual effects, but little is known about the neurobiology underlying their computation. The flexibility of emotions, adaptive in one circumstance and maladaptive in another, and the resulting flexibility in decision-making are also relatively unexplored in neuroeconomic research. Very little is known about how neural circuits and emotions are explicitly linked to value representations (Lempert and Phelps 2014). The impact of other factors that modulate emotions and thereby moderate behavioural loss aversion, such as drugs and cognitive overload, is also underexplored (Paulus et al. 2005).

7 Examining Social Preferences: Game Theory, Empathy and Theory of Mind

Game theory examines how individual players make decisions in a multi-player environment where one player's decisions can affect the opportunities and pay-offs of other players. It is an interesting research area for neuroeconomics because game theory links individual decisions to group-level outcomes through precise structures (Houser and McCabe 2014). Neuroeconomic studies can help answer key questions in social decision-making, such as what incentives drive individual decisions, how emotions influence decisions and how behaviour reflects the bounded rationality of participants (Fehr et al. 2005).

7.1 Social Preferences in Decision-Making: Neural Evidence

7.1.1 Competition and Cooperation

Studies have shown that humans prefer cooperation to defection in social dilemmas even when monetary gains are equivalent, indicating a desire for benefits beyond money (Fehr et al. 2005; Fehr and Camerer 2007). Neuroimaging studies have revealed that the dorsal striatum is activated in cooperative scenarios (Rilling et al. 2002), while the ventromedial prefrontal cortex and ventral striatum are activated in competitive scenarios (Cikara et al. 2011; Dvash et al. 2010). Interestingly, the latter regions also feature prominently in reward-related neural networks.

Votinov et al. (2015) examined neural activity in strictly competitive games, in which one player’s outcome is negatively correlated with the opponent’s. They found distinct activation patterns for two types of winning: winning by directly increasing one’s pay-off (in the ventromedial prefrontal cortex, typically associated with rewards) and winning by avoiding loss (in the precuneus and temporoparietal junction, typically associated with mentalising and empathy). Both types of winning also activated the striatum.

Reputation plays an important role in repeated games with private information. Bhatt et al. (2012) found that amygdala activity (associated with threat and risk perception) correlated with doubts about the other player's credibility. They also found evidence that beliefs were updated continuously using the other player's recent behaviour. Separate but interconnected neural structures appear to mediate uncertainty about the other player's reputation. Delgado et al. (2005) observed differential activation in the caudate nucleus and several other areas in response to cooperative and competitive behaviour by the other players. Interestingly, this difference was not observed when the other player had a good reputation to begin with. This resonates with the view that subsequent “bad” behaviour is excused when a player has initially been “good” (Camerer and Hare 2014).

Lambert et al. (2017) investigated the role of the neuromodulator oxytocin in improving the accuracy of social decision-making in complex situations. In an assurance game, which simulates a win–win environment, oxytocin increased nucleus accumbens activity and seemed to facilitate cooperation. In a chicken game, which simulates a win–lose environment where aggression is desirable but could be fatal if the partner also responds aggressively, oxytocin down-regulated the amygdala and increased the valence of the cues used to decide between aggression and retreat.
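
The strategic difference between the two games can be made explicit by listing their pure-strategy Nash equilibria. The payoff numbers below are hypothetical, chosen only to have the usual ordering of each game; they are not the payoffs used by Lambert et al. (2017).

```python
from itertools import product


def pure_nash(payoffs):
    """Pure-strategy Nash equilibria of a two-player game.
    payoffs maps (row_action, col_action) -> (row_payoff, col_payoff)."""
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        # Neither player can gain by deviating unilaterally.
        row_best = all(payoffs[(r, c)][0] >= payoffs[(r2, c)][0] for r2 in rows)
        col_best = all(payoffs[(r, c)][1] >= payoffs[(r, c2)][1] for c2 in cols)
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


# Hypothetical payoffs. Assurance: mutual cooperation is best for both (win-win).
assurance = {("coop", "coop"): (4, 4), ("coop", "defect"): (0, 3),
             ("defect", "coop"): (3, 0), ("defect", "defect"): (2, 2)}
# Chicken: aggression pays against a retreating partner, mutual aggression is worst.
chicken = {("retreat", "retreat"): (3, 3), ("retreat", "aggress"): (1, 4),
           ("aggress", "retreat"): (4, 1), ("aggress", "aggress"): (0, 0)}

print(pure_nash(assurance))  # two symmetric equilibria
print(pure_nash(chicken))    # two asymmetric equilibria
```

In the assurance game the problem is coordinating on the better symmetric equilibrium, whereas in the chicken game each equilibrium favours one player, so reading the partner's cues about aggression or retreat is what matters.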

7.1.2 Inequity Aversion and Reciprocity in Social Dilemmas

Sanfey et al.’s (2003) fMRI study demonstrated that insula activity is positively correlated with the propensity to reject unfair offers in the Ultimatum Game. Because insula activation is associated with negative affect, this suggests that negative emotions drive rejection decisions in the Ultimatum Game. Knoch et al. (2006b) found evidence for a crucial role of the right dorsolateral prefrontal cortex (DLPFC) in mediating between self-interest and fairness impulses. In this study, the right DLPFC, implicated in overriding or weakening self-interested impulses, was inhibited with repetitive transcranial magnetic stimulation (rTMS), and subjects became more willing to act selfishly. Moreover, when the opponent was a computer, which weakens the reciprocity motive, right DLPFC disruption had no behavioural effect.
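
Rejecting unfair offers is costly for a purely self-interested responder, since rejection leaves both players with nothing. A standard formalisation of inequity aversion (a utility of the Fehr–Schmidt form, not a model taken from the studies above) penalises disadvantageous inequity with weight α and advantageous inequity with weight β. The sketch assumes a pie of 10 and hypothetical weights α = 1 and β = 0.25.

```python
def responder_utility(own, other, alpha=1.0, beta=0.25):
    """Inequity-averse utility: own payoff minus penalties for disadvantageous
    (weight alpha) and advantageous (weight beta) inequity."""
    return own - alpha * max(other - own, 0) - beta * max(own - other, 0)


def accepts(offer, pie=10.0, alpha=1.0, beta=0.25):
    """The responder accepts if accepting is at least as good as rejecting,
    which gives both players 0 (utility 0)."""
    return responder_utility(offer, pie - offer, alpha, beta) >= 0.0


print([offer for offer in range(0, 11) if accepts(offer)])
```

With α = 1, offers below one third of the pie are rejected (here 3 or less out of 10), even though accepting would leave the responder with more money. A larger α raises the rejection threshold towards, but never beyond, half the pie.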

De Quervain et al. (2004) found evidence that people have a preference for punishing norm violations. Using positron emission tomography (PET), they observed neural activity in a two-player sequential social dilemma game in which players could punish their partner for abusing trust. Subjects appeared to experience punishing as satisfying or rewarding, and the anticipated satisfaction was positively correlated with the level of punishment (Fehr et al. 2005).

7.1.3 Trust

While oxytocin is associated with trust levels, it does not seem to change the evaluation of the other player’s trustworthiness. Instead, it seems to operate on the subject’s preferences, making the subject more optimistic and inhibiting aversion to being exploited (betrayal aversion), so that subjects become more willing to accept the risk of exploitation (Bohnet and Zeckhauser 2004; Fehr et al. 2005; Kosfeld et al. 2005).

Krueger et al. (2007) found that two different trust mechanisms are in play in a repeated two-player trust game. The initial mechanism, involving the anterior paracingulate cortex, gives way to septal-area activation in the later stages. This suggests that a conditional trust system operates in the later stages of the game, whereas trust is withheld in the early stages, when temptations to defect are high.

7.1.4 Attitude Towards Strategic Uncertainty

Uncertainty about another person’s actions in a cooperation task is referred to as strategic uncertainty (Ekins et al. 2013). Chark and Chew (2015) combined behavioural and neuroimaging methods to compare responses to strategic and non-strategic uncertainty. Their findings indicate that subjects were averse to strategic ambiguity in competitive environments and ambiguity-seeking in cooperative environments. Subjects also exhibited source preference (self-regarding) rather than social preference (other-regarding) in their valuation of expected utility.

7.2 Association with Theory of Mind and Empathy

Decision-making in game-theoretic situations presupposes that players build a theory of mind, or mentalise about other players, which helps them understand others’ motivations and preferences and thereby predict their actions (McCabe and Singer 2008; Singer and Fehr 2005). While theory of mind is a cognitive understanding of another person’s mental state, empathy refers to the “ability to share the feelings and affective states of others” (Singer and Tusche 2014, p. 514). Studies comparing brain activity when humans play against each other and when they play against computers have shown that the medial prefrontal cortex is involved in mentalising. Empathy-related activation has been observed in the anterior cingulate cortex and anterior insula. Empathy studies have shown that people tend to value the other player’s pay-off positively when that player has played fairly and negatively when they have played unfairly. This indicates that people prefer to punish unfair opponents and to cooperate with fair ones (Fehr and Gächter 2000).

7.3 Pharmacological Manipulations of Social Preferences

Pharmacological manipulations have highlighted some causal effects. For instance, increased testosterone leads to fairer offers (Eisenegger et al. 2010), and increased serotonin and benzodiazepine administration lead to lower rejection rates (Crockett and Fehr 2014; Gospic et al. 2011). Oxytocin administration enhances prosocial behaviour and the accuracy of higher-order decision-making (Kosfeld et al. 2005; Lambert et al. 2017).

7.4 Future Directions

Studies by Bhatt and Camerer (2005) and Mohr et al. (2010) link differential insula activity to first- and second-order beliefs. This association can support future research on self-referential bias in second-order beliefs and in risk-related decision-making.

In-depth research is required to understand the neurological factors that modulate empathic brain responses, and thus the conditions under which prosocial and antisocial behaviour is fostered (Singer and Tusche 2014). More detailed examination of theory of mind and its neural correlates could link strategic thinking to neural activity (Camerer and Hare 2014). Understanding the neural basis of the exceptional bargaining skills that contribute to success in games could help build valuable management skills and competencies such as strategic thinking and negotiation. Exploring the links between different brain regions can bring more specificity and depth to our understanding of the relationship between the relevant neural circuitry and social decision-making.

8 Discussion and Future Research Directions

Neuroeconomics started gaining traction with the rise of the “neuroessentialism” view (Racine et al. 2005), which holds that the definitive way to explain human psychological experience is through understanding the brain and its activity. This has been fostered by the growing capability and accessibility of neuroimaging techniques such as fMRI. With well-developed experimental designs, researchers can explore the neural substrates of behaviour and understand the mental processes that lead to it. This helps both in developing better theories and in testing theories in multiple ways (Becker et al. 2011; Volk and Köhler 2012).

In this section, we discuss the fallacies researchers need to guard against, methodological issues in neuroeconomics research, concerns and future directions.

8.1 Frequent Fallacies

“Forward inference” involves manipulating a specific psychological function and identifying its localised effects on brain activity (Huettel and Payne 2009). However, some researchers engage in “reverse inference” when interpreting experimental results: they start from the brain regions that were activated and infer the mental processes associated with them. This reasoning is fallacious and has low predictive power, because a given brain region can be involved in multiple cognitive processes (Poldrack 2006). While reverse inference may be used to generate novel hypotheses about potential neural correlates (Poldrack 2011), researchers need to be circumspect in its use and set appropriate boundaries to enhance the specificity of neural studies (Hutzler 2014; Volk and Köhler 2012). The other common fallacy to guard against is the mereological fallacy, which ascribes psychological processes such as memory, perception, thinking, imagery, belief and consciousness to the brain, when they might emerge from the interaction of multiple parts of the body (Bennett and Hacker 2003; Powell 2011).
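
Poldrack (2006) frames reverse inference in Bayesian terms: the strength of the inference from an activation to a mental process depends on how selectively the region responds to that process. The sketch applies Bayes' rule with hypothetical probabilities, since real values would have to be estimated from large databases of imaging studies.

```python
def reverse_inference_posterior(p_act_given_proc, p_act_given_not, prior):
    """Bayes' rule: P(process | activation) from the probability of activation
    when the process is engaged, when it is not, and the prior for the process."""
    numerator = p_act_given_proc * prior
    evidence = numerator + p_act_given_not * (1.0 - prior)
    return numerator / evidence


# Hypothetical region, active in 80% of tasks that engage the process.
print(reverse_inference_posterior(0.8, 0.4, 0.5))  # also active in 40% of other tasks
print(reverse_inference_posterior(0.8, 0.1, 0.5))  # also active in only 10% of other tasks
```

With a non-selective region the posterior rises only from 0.5 to about 0.67, so observing activation says little about which process was engaged; the inference becomes stronger (about 0.89 here) only as the region's activation in other tasks becomes rarer.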

8.2 Methodological Issues

Researchers have highlighted some methodological concerns regarding the design, execution and statistical analysis of neuroeconomics experiments, which are briefly summarised here.

To begin with, statistical analysis of neural data is often challenging because of very small sample sizes (Fumagalli 2014; Ortmann 2008), which result in “data sets in which a few brains contribute many observations at each point in time, and in a time-series” (Harrison 2008, p. 312). Fumagalli (2014) further argues that neuroeconomic predictions are valid only over very short time intervals (spanning a few hundred milliseconds), rendering them irrelevant both for real-world use and for other economists.
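
The problem of “a few brains” contributing many observations can be quantified with the standard design effect for clustered data. Assuming equal numbers of trials per subject and a single intraclass correlation (ICC) among trials from the same subject, the effective number of independent observations for estimating a mean is N / (1 + (m − 1)·ICC), where N is the total number of observations and m the number per subject. The subject and trial counts and the ICC below are hypothetical.

```python
def effective_sample_size(n_subjects, obs_per_subject, icc):
    """Kish design effect for clustered data: n_eff = N / (1 + (m - 1) * icc)."""
    n_total = n_subjects * obs_per_subject
    design_effect = 1.0 + (obs_per_subject - 1) * icc
    return n_total / design_effect


# Hypothetical study: 20 subjects x 200 trials, modest within-subject correlation.
print(round(effective_sample_size(20, 200, 0.1), 1))
```

Here 4,000 trials carry roughly the information of 191 independent observations, and as the ICC approaches 1 the effective sample size collapses to the 20 subjects themselves.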

Experimental results can be contaminated by experimenter-expectancy effects or demand characteristics, because in neuroeconomics laboratories the researchers who instruct subjects may not be blind to the research questions and hypotheses (Ortmann 2008). Other factors that reduce the signal-to-noise ratio of research findings include the artificiality of laboratory settings (Ortmann 2008) and the use of deception in experiments (see Coricelli et al. 2005; Knoch et al. 2006a; Rilling et al. 2002; Sanfey et al. 2003). Concerns about the representativeness of stimuli raised in behavioural economics apply to current neuroeconomics experiments as well (Ortmann 2008).

The resolution of available imaging techniques cannot capture the physiological heterogeneity of many neural structures and circuits, which limits our understanding of their complex functions (Fox and Poldrack 2009). Further, interventional data-collection techniques such as repetitive transcranial magnetic stimulation may cause unknown and possibly irreversible damage in experimental subjects (Ortmann 2008).

Samples often lack socio-demographic diversity, which makes it challenging to reach consensus on whether results apply across contexts (Harrison 2008). Further, social, cognitive and affective capacities change over the course of life because the underlying neural structures follow different developmental trajectories. Initial studies have shown the potential benefits of investigating the developmental aspects of neuroeconomics (Steinbeis et al. 2012; Singer and Tusche 2014).

Concerns have been raised that researchers are driven more by the availability of new technology than by a deeper understanding of the underlying brain function or of what they are recording. As a result, errors in data collection or analysis may go undetected, leading to inaccurate results, and findings may be overstated, implausible or reinterpreted to fit a previously held view (Cabeza and Nyberg 2000; Harrison 2008; Ortmann 2008; Ruff and Huettel 2014).

Triangulation through multiple research methods like pharmacological interventions, cross-cultural designs and genetic-imaging approaches could be helpful in arriving at a holistic comprehension of neural interconnections and can lead to a better understanding of the underlying affective, motivational and cognitive processes in decision-making (Singer and Tusche 2014; Volk and Köhler 2012).

8.3 Neuroeconomic Research: Concerns and Future Directions

Technology available currently imposes limitations on our understanding of the brain’s neurobiological complexity. For example, we are not able to capture the structural features of many brain regions at a granular level nor are we able to grasp the extensive heterogeneity which resides at the cellular level (Fox and Poldrack 2009).

Neuroeconomics has identified the neurological underpinnings of many behaviours examined through prospect theory and provides strong evidence that these “anomalies” are real. Prospect theory attributes risk attitudes to several distinct factors, such as loss aversion and diminishing sensitivity to money and to probability, and empirical neuroeconomic research runs the risk of conflating them. We also need to establish whether a given neural activity is causal and necessary for a phenomenon, rather than merely an effect of these factors. This calls for an approach that combines identifying neural correlates with establishing their necessity for the phenomenon (Fox and Poldrack 2009).

Some researchers have questioned the appropriateness and relevance of game-theoretic settings for understanding social preferences as they occur in reality (see Binmore 2007; Levitt and List 2007). Using subjective labels such as “trust” and “trustworthiness” to describe participants’ behaviour in laboratory experiments can create misperceptions about their motives and lead researchers to confuse labelling with explanation. Precise operationalisation of variables is needed to control for possible confounds (Harrison 2008; Rubinstein 2006).

Context-dependencies are difficult to untangle given their multiplicity and simultaneity. Huettel and Payne (2009) urge researchers to be cautious in their interpretations, given the multidimensionality of context effects, and to be circumspect in providing overgeneralised explanations of results.

One key concern about neuroeconomic research is whether results obtained from simple games and choices remain valid in more complicated contexts (Ortmann 2008). While it is commonly believed that laboratory results may not carry over to the field, Volk and Köhler (2012) point to strong empirical evidence that the majority of laboratory results generalise to the “real world”.

9 Conclusion

Neuroeconomics has moved beyond the initial scepticism about whether it would add any value beyond correcting the “errors” believed to pervade economics, and whether it would provide better answers to traditional questions. The real-world behaviour of economic agents in decision-making situations is often suboptimal and deviates from rational, optimal behaviour. Studying neural circuitry helps us understand what computations an agent makes and how those computations are made, which might help us understand deviations from optimal behaviour in a more fundamental way. Paradoxically, as Glimcher (2003) states, the value of neuroeconomic research might be enhanced by studying precisely those situations in which economic agents do not behave in the optimal manner predicted by theory.

Notes

1. The ventral striatum plays an important role in encoding option values during choice tasks.

2. The core circuit of the basal ganglia receives input from the frontal cortex (through the caudate and putamen) and, after processing, sends it back to the frontal cortex (through the globus pallidus and substantia nigra pars reticulata, relayed via the thalamus).

3. There is substantial evidence that dopamine-releasing neurons of the midbrain dopaminergic system encode a reward prediction error signal that supports reinforcement learning.

4. The amygdala has been implicated in a host of studies of psychological states.

5. The hippocampus figures repeatedly in neuroscientific studies of learning and memory.

6. Fodor insisted that the modularity of the brain should be considered only in the context of psychological studies. Nevertheless, his propositions attracted significant attention in the neurobiological community.

7. The endowment effect refers to the difference between the minimum amount a person is prepared to accept in order to give up something she or he owns (the selling price) and the maximum amount a person is prepared to pay to acquire the same item (the buying price).

8. Choice price refers to the indifference point at which subjects are neutral between receiving a good and receiving an equivalent amount of money.
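The reward prediction error mentioned in Note 3 can be illustrated with the simplest delta-rule (Rescorla–Wagner style) learner. This is a minimal sketch under stated assumptions, not the model used in any study cited here: a single cue, a learning rate `alpha` of 0.1 and an initial expectation `v0` of 0 are illustrative choices, and the function name `learn_values` is hypothetical. On each trial the prediction error is the received reward minus the current expectation, and the expectation moves a fraction `alpha` of the way towards the outcome.

```python
def learn_values(rewards, alpha=0.1, v0=0.0):
    """Delta-rule value learning for a single cue (illustrative sketch).

    alpha (learning rate) and v0 (initial expectation) are assumed values,
    not parameters taken from the literature reviewed in this chapter.
    Returns the prediction error on every trial and the final value estimate.
    """
    value = v0
    errors = []
    for r in rewards:
        delta = r - value       # reward prediction error: outcome minus expectation
        value += alpha * delta  # shift the expectation a fraction alpha towards the outcome
        errors.append(delta)
    return errors, value


if __name__ == "__main__":
    # 50 trials in which the cue is always followed by a reward of 1.0,
    # then one trial in which the expected reward is omitted.
    errors, value = learn_values([1.0] * 50 + [0.0])
    print(f"first error = {errors[0]:.3f}")
    print(f"error on trial 50 = {errors[49]:.3f}")
    print(f"error when reward is omitted = {errors[-1]:.3f}")
```

In this sketch, the prediction error is large when the reward is unexpected, shrinks towards zero as the expectation approaches the delivered reward, and turns negative when an expected reward is omitted.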

References

Ainslie, G. (1975). Specious reward: A behavioral theory of impulsiveness and impulse control. Psychological Bulletin, 82(4), 463–496.

Ainslie, G., & Haendel, V. (1983). The motives of the will. In E. Gottheil, K. A. Druley, T. E. Skoloda, & H. M. Waxman (Eds.), Etiologic aspects of alcohol and drug abuse (pp. 119–140). Springfield, IL: Thomas.

Alonso, R., Brocas, I., & Carrillo, J. D. (2014). Resource allocation in the brain. Review of Economic Studies, 81, 501–534.

Bach, D. R., Hulme, O., Penny, W. D., & Dolan, R. J. (2011). The known unknowns: neural representation of second-order uncertainty, and ambiguity. Journal of Neuroscience, 31, 4811–4820.

Ballard, K., & Knutson, B. (2009). Dissociable neural representations of future reward magnitude and delay during temporal discounting. NeuroImage, 45(1), 143–150.

Balleine, B. W., & O’Doherty, J. P. (2010). Human and rodent homologies in action control: cortico-striatal determinants of goal-directed and habitual action. Neuropsychopharmacology, 35, 48–69.

Bayer, H. M., & Glimcher, P. W. (2005). Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron, 47(1), 129–141.

Bechara, A., & Damasio, A. R. (2005). The somatic marker hypothesis: A neural theory of economic decision. Games and Economic Behavior, 52, 336–372.

Becker, W. J., Cropanzano, R., & Sanfey, A. G. (2011). Organizational neuroscience: Taking organizational theory inside the neural black box. Journal of Management, 37, 933–961.

Bennett, M. R., & Hacker, P. M. S. (2003). Philosophical foundations of neuroscience. Malden, MA: Blackwell Publishing.

Berns, G. S., & Moore, S. E. (2012). A neural predictor of cultural popularity. Journal of Consumer Psychology, 22, 154–160.

Berridge, K. C. (2012). From prediction error to incentive salience: mesolimbic computation of reward motivation. European Journal of Neuroscience, 35(7), 1124–1143.

Berridge, K. C., & Aldridge, J. W. (2008). Decision utility, the brain and pursuit of hedonic goals. Social Cognition, 26(5), 621–646.

Beugré, C. D. (2009). Exploring the neural basis of fairness: A model of neuro-organizational justice. Organizational Behavior and Human Decision Processes, 110(2), 129–139.

Bhatt, M., & Camerer, C. F. (2005). Self-referential thinking and equilibrium as states of mind in games: fMRI evidence. Games and Economic Behavior, 52(2), 424–459.

Bhatt, M. A., Lohrenz, T., Camerer, C. F., & Montague, P. R. (2012). Distinct contributions of the amygdala and parahippocampal gyrus to suspicion in a repeated bargaining game. Proceedings of the National Academy of Sciences of the United States of America, 109, 8728–8733.

Binmore, K. (2007). Does game theory work? The bargaining challenge. Cambridge, MA: MIT Press.

Bohnet, I., & Zeckhauser, R. (2004). Trust, risk and betrayal. Journal of Economic Behavior & Organization, 55(4), 467–484.

Breiter, H. C., Aharon, I., Kahneman, D., Dale, A., & Shizgal, P. (2001). Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron, 30, 619–639.

Buehler, R., McFarland, C., Spyropoulos, V., & Lam, K. C. (2007). Motivated prediction of future feelings: effects of negative mood and mood orientation on affective forecasts. Personality and Social Psychology Bulletin, 33(9), 1265–1278.

Cabeza, R., & Nyberg, L. (2000). Imaging cognition II: An empirical review of 275 PET and fMRI studies. Journal of Cognitive Neuroscience, 12, 1–47.

Cador, M., Robbins, T., & Everitt, B. (1989). Involvement of the amygdala in stimulus reward associations: interaction with the ventral striatum. Neuroscience, 30(1), 77–86.

Camerer, C. F. (2013). Goals, methods, and progress in neuroeconomics. Annual Review of Economics, 5, 425–455.

Camerer, C. F., & Hare, T. A. (2014). The neural basis of strategic choice. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 479–492). New York: Academic Press.

Caplin, A., & Leahy, J. (2001). Psychological expected utility theory and anticipatory feelings. The Quarterly Journal of Economics, 116(1), 55–79.

Chark, R., & Chew, S. H. (2015). A neuroimaging study of preference for strategic uncertainty. Journal of Risk and Uncertainty, 50(3), 209–227.

Chib, V. S., Rangel, A., Shimojo, S., & O’Doherty, J. P. (2009). Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. Journal of Neuroscience, 29, 12315–12320.

Chib, V. S., De Martino, B., Shimojo, S., & O’Doherty, J. P. (2012). Neural mechanisms underlying paradoxical performance for monetary incentives are driven by loss aversion. Neuron, 74(3), 582–594.

Cikara, M., Botvinick, M. M., & Fiske, S. T. (2011). Us versus them: Social identity shapes neural responses to intergroup competition and harm. Psychological Science, 22, 306–313.

Colander, D. (2007). Retrospectives: Edgeworth’s hedonimeter and the quest to measure utility. Journal of Economic Perspectives, 21(2), 215–225.

Coricelli, G., Critchley, H. D., Joffily, M., O’Doherty, J. P., Sirigu, A., & Dolan, R. J. (2005). Regret and its avoidance: A neuroimaging study of choice behavior. Nature Neuroscience, 8, 1253–1262.

Coricelli, G., Dolan, R. J., & Sirigu, A. (2007). Brain, emotion and decision making: The paradigmatic example of regret. Trends in Cognitive Sciences, 11(6), 258–265.

Crockett, M. J., & Fehr, E. (2014). Pharmacology of economic and social decision making. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 259–279). New York: Academic Press.

Daw, N. D., & O’Doherty, J. P. (2014). Multiple systems for value learning. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 393–410). New York: Academic Press.

Daw, N. D., & Tobler, P. N. (2014). Value learning through reinforcement: the basics of dopamine and reinforcement learning. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 283–298). New York: Academic Press.

Daw, N. D., Gershman, S. J., Seymour, B., Dayan, P., & Dolan, R. J. (2011). Model-based influences on humans’ choices and striatal prediction errors. Neuron, 69(6), 1204–1215.

Dayan, P., & Niv, Y. (2008). Reinforcement learning: The good, the bad and the ugly. Current Opinion in Neurobiology, 18, 1–12.

Dayan, P., & Seymour, B. (2009). Values and actions in aversion. In P. W. Glimcher, C. F. Camerer, E. Fehr, & R. A. Poldrack (Eds.), Neuroeconomics: Decision making and the brain. New York: Academic Press.

Delgado, M. R., Miller, M. M., Inati, S., & Phelps, E. A. (2005). An fMRI study of reward-related probability learning. NeuroImage, 24(3), 862–873.

De Martino, B., Camerer, C., & Adolphs, R. (2010). Amygdala damage eliminates monetary loss aversion. Proceedings of the National Academy of Sciences of the United States of America, 107(8), 3788–3792.

De Martino, B., Kumaran, D., Seymour, B., & Dolan, R. J. (2006). Frames, biases, and rational decision-making in the human brain. Science, 313, 684–687.

Denk, F., Walton, M. E., Jennings, K. A., Sharp, T., Rushworth, M. F., & Bannerman, D. M. (2005). Differential involvement of serotonin and dopamine systems in cost-benefit decisions about delay or effort. Psychopharmacology (Berl), 179, 587–596.

DeQuervain, D., Fischbacher, U., Treyer, V., Schellhammer, M., Schnyder, U., Buck, A., et al. (2004). The neural basis of altruistic punishment. Science, 305, 1254–1258.

Ding, L., & Hikosaka, O. (2006). Comparison of reward modulation in the frontal eye field and caudate of the macaque. Journal of Neuroscience, 26, 6695–6703.

Dorris, M. C., & Glimcher, P. W. (2004). Activity in posterior parietal cortex is correlated with the subjective desirability of an action. Neuron, 44, 365–378.

Dvash, J., Gilam, G., Ben-Ze’ev, A., Hendler, T., & Shamay-Tsoory, S. G. (2010). The envious brain: The neural basis of social comparison. Human Brain Mapping, 31, 1741–1750.

de Wit, S., Watson, P., Harsay, H. A., Cohen, M. X., van de Vijver, I., & Ridderinkhof, K. R. (2012). Corticostriatal connectivity underlies individual differences in the balance between habitual and goal-directed action control. Journal of Neuroscience, 32, 12066–12075.

Efremidze, L., Sarraf, G., Miotto, K., & Zak, P. J. (2017). The neural inhibition of learning increases asset market bubbles: Experimental evidence. Journal of Behavioral Finance, 18(1), 114–124.

Egidi, G., Nusbaum, H. C., & Cacioppo, J. T. (2008). Neuroeconomics: Foundational issues and consumer relevance. In C. P. Haugtvedt, P. M. Herr, & F. R. Kardes (Eds.), Handbook of Consumer Psychology (pp. 1177–1214). New York: Psychology Press.

Eisenegger, C., Knoch, D., Ebstein, R. P., Gianotti, L. R., Sándor, P. S., & Fehr, E. (2010). Dopamine receptor D4 polymorphism predicts the effect of L-DOPA on gambling behavior. Biological Psychiatry, 67(8), 702–706.

Ekins, W. G., Caceda, R., Capra, C. M., & Berns, G. S. (2013). You cannot gamble on others: Dissociable systems for strategic uncertainty and risk in the brain. Journal of Economic Behavior & Organization, 94, 222–233.

Everitt, B. J., & Robbins, T. W. (2005). Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nature Neuroscience, 8(11), 1481–1489.

Fecteau, S., Knoch, D., Fregni, F., Sultani, N., Boggio, P., & Pascual-Leone, A. (2007). Diminishing risk-taking behavior by modulating activity in the prefrontal cortex: A direct current stimulation study. Journal of Neuroscience, 27, 12500–12505.

Fehr, E., & Camerer, C. F. (2007). Social neuroeconomics: The neural circuitry of social preferences. Trends in Cognitive Sciences, 11, 419–427.

Fehr, E., Fischbacher, U., & Kosfeld, M. (2005). Neuroeconomic foundations of trust and social preferences: Initial evidence. American Economic Review, 95(2), 346–351.

Fehr, E., & Gächter, S. (2000). Fairness and retaliation: The economics of reciprocity. Journal of Economic Perspectives, 14, 159–181.

Fodor, J. A. (1983). The Modularity of Mind. Cambridge, MA: MIT Press.

Forgas, J. (1995). Mood and judgment: The affect infusion model (AIM). Psychological Bulletin, 117, 39–66.

Fox, C. R., & Poldrack, R. A. (2009). Prospect theory and the brain. In P. W. Glimcher, C. F. Camerer, E. Fehr, & R. A. Poldrack (Eds.), Neuroeconomics: Decision making and the brain. New York: Academic Press.

Frydman, C., Barberis, N., Camerer, C. F., Bossaerts, P., & Rangel, A. (2014). Testing theories of investor behavior using neural data. Journal of Finance, 69(2), 907–946.

Fumagalli, R. (2014). Neural findings and economic models: Why brains have limited relevance for economics. Philosophy of the Social Sciences, 44(5), 606–629.

Gigerenzer, G., Todd, P. M., & ABC Research Group. (1999). Simple heuristics that make us smart. New York: Oxford University Press Inc.

Glimcher, P. W. (2003). Decisions, uncertainty, and the brain: The science of neuroeconomics. Cambridge, MA: MIT Press.

Glimcher, P. W. (2011). Foundations of Neuroeconomic Analysis. New York: Oxford University Press.

Glimcher, P. W. (2014a). Introduction to neuroscience. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 63–75). New York: Academic Press.

Glimcher, P. W. (2014b). Value-based decision making. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. 373–391). New York: Academic Press.

Glimcher, P. W., & Fehr, E. (2014). Introduction: A brief history of neuroeconomics. In P. W. Glimcher & E. Fehr (Eds.), Neuroeconomics: Decision making and the brain (2nd ed., pp. xvii–xxviii). New York: Academic Press.

Glimcher, P. W., & Rustichini, A. (2004). Neuroeconomics: The consilience of brain and decision. Science, 306, 447–452.

Goetz, J., & James, R. N., III. (2008). Human choice and the emerging field of neuroeconomics: A review of brain science for the financial planner. Journal of Personal Finance, 6(4), 13–36.

Gospic, K., Mohlin, E., Fransson, P., Petrovic, P., Johannesson, M., & Ingvar, M. (2011). Limbic justice: Amygdala involvement in immediate rejection in the ultimatum game. PLoS Biology, 9(5), 1–8.

Gottfried, J. A., O’Doherty, J., & Dolan, R. J. (2003). Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science, 301(5636), 1104–1107.

Hare, T. A., Camerer, C. F., & Rangel, A. (2009). Self-control in decision-making involves modulation of the vmPFC valuation system. Science, 324(5927), 646–648.