Abstract

The purpose of this chapter is to provide comprehensive and useful guidelines on the methods of functional connectivity analysis (FCA) for the electroencephalogram (EEG) and their application. After presenting the detailed procedure for the FCA, we describe various methods for quantifying functional connectivity. The problem of volume conduction and the means to diminish its confounding effects on the FCA are thoroughly reviewed. As useful preprocessing steps for the FCA, spatial filtering of the time-series measured on the scalp and transformation to current densities on the cortical surface are described. We also review ongoing efforts toward developing FC measures that are inherently robust to the volume conduction problem. Finally, we illustrate procedures for determining the significance of the FC between specific pairs of regions, which exploit surrogate data generation or the characteristics of event-related data.



1 Introduction

Cognition and behavior are enabled by the coordinated and integrated activity of neuronal populations in relevant brain regions. Beyond spatial and temporal patterns of brain activation, investigating the interactions between those neuronal populations, i.e., functional connectivity analysis (FCA), is essential for a proper understanding of human brain function [6, 17, 49, 56]. The FCA is now regarded as one of the major tools of functional brain imaging.

In functional neuroimaging studies, mainly using functional magnetic resonance imaging (fMRI), intrinsic cortical networks such as the default mode and salience networks have been identified by the FCA during both the resting state and task performance [7, 17, 55]. The functional brain network is inherently dynamic, although most fMRI-based FCA studies have so far implicitly assumed a static functional connectivity pattern. Dynamic FCA of fMRI blood oxygen level-dependent (BOLD) signals is currently under active investigation [26].

Given the intrinsically limited temporal resolution of fMRI, electrophysiological recordings of neural activity are better suited for dynamic FCA, especially for investigating short-term neural phenomena at millisecond temporal resolution. Both invasive and noninvasive recording techniques can be used for the FCA. However, noninvasive methods, i.e., the electroencephalogram (EEG) and magnetoencephalogram (MEG), are the practical choice for human behavioral/cognitive neuroscience studies, whether during experimental tasks or in the task-free resting state.

EEG/MEG signals are obtained from an array of sensors placed over the scalp, so the spread of electromagnetic fields prevents direct interpretation of the spatial origin of the signal recorded by a single channel. Cortical current sources can be localized by solving an electromagnetic inverse problem [4, 33, 41], and this may be done prior to the FCA to investigate the connectivity between specific brain regions [20, 46]. This step may even be crucial for valid interpretation of the FCA results, because it allows functional connections between specific cortical regions to be identified. Source imaging techniques using distributed source models have been combined with various FC measures, yielding significant findings in cognitive, behavioral, and clinical studies [1, 2, 11, 25, 29, 39]. The high temporal resolution of EEG/MEG can also be used to investigate coupling between rhythms in different frequency bands of neural activity.

FC measures should reflect the association between neural activities in different brain regions. Hence, they should quantify the correlation and/or causality between the time-series of neural activity in multiple brain areas [6, 15, 42, 47]. The linear correlation coefficient is still one of the most commonly used FC measures for fMRI. Other measures originate from disciplines such as statistical signal processing, nonlinear dynamics, and information theory, and have been adopted for the FCA to deal with complex interactions between neuronal populations [6, 15, 42, 47].

Oscillatory neural activity is recognized as reflecting the formation of local neuronal populations [9] and as underlying the dynamic coordination of brain function and synaptic plasticity [6, 50, 60]. Therefore, interactions between oscillatory rhythms should provide valuable insight into inter-regional communication among neuronal populations, and MEG and EEG are the most suitable modalities for this purpose. Novel measures for analyzing the coupling between rhythmic activities are under active research and are being applied to the FCA of EEG/MEG [5, 20, 24], exploiting the high temporal resolution of these electrophysiological signals. Beyond coupling of rhythms within a single frequency band, cross-frequency coupling has been explored by quantifying either phase-phase or phase-amplitude coupling [10, 45, 59].

The purpose of this review article is to provide comprehensive and useful guidelines on FCA methods for EEG and to illustrate their application. Although the focus is on EEG, the contents may be useful for the FCA of MEG as well. The emphasis is on how the FCA can be properly applied in cognitive neuroscience studies and clinical investigations. The merits and pitfalls of each FC measure are illustrated so that readers can select the most suitable method among the many options available.

2 Procedure for the FCA

Figure 6.1 illustrates the detailed procedure for the FCA of EEG. Multichannel signals are preprocessed, primarily to remove artifacts including eye blinks and movements, muscle activity, and skin potentials. Bandpass filtering is often applied to extract the oscillatory rhythms within the frequency bands of interest. For the FCA between cortical regions, the time-series in sensor space are projected onto the cortical source space using distributed source imaging techniques [4, 33, 40]. FC measures are then calculated from the multiple time-series between channels or cortical regions, yielding a functional connectivity matrix in which each element quantifies the connectivity between two specific regions.

Sometimes the FC matrix is binarized: each element is set to 1 (significant connection) or 0 (insignificant connection) by comparing it with a threshold level determined from surrogate data [15, 30, 54]. Statistical comparisons are then applied to determine significant differences among experimental conditions or subject groups. Multivariate pattern analysis based on machine learning can also be applied so that information about conditions or groups can be decoded from the connectivity matrix [31, 35]. The binarized (adjacency) matrix can be regarded as a graph with nodes and edges [8, 53], and thus the connectivity pattern can be further characterized by graph theory [13, 14, 51, 57].
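
The binarization step and one simple graph measure can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions: the FC matrix is symmetric, a fixed threshold is used here for brevity (in practice it would come from surrogate data, e.g., a high percentile of surrogate FC values), and the function names `binarize_fc` and `node_degree` are hypothetical.

```python
import numpy as np

def binarize_fc(fc, threshold):
    """Turn a weighted FC matrix into a binary adjacency matrix.

    fc: (n, n) symmetric FC matrix.
    threshold: scalar or (n, n) array of significance thresholds
    (hypothetical here; normally derived from surrogate data).
    """
    adj = (fc > threshold).astype(int)
    np.fill_diagonal(adj, 0)  # no self-connections
    return adj

def node_degree(adj):
    """Number of significant connections of each node (a basic graph measure)."""
    return adj.sum(axis=1)

fc = np.array([[1.0, 0.8, 0.1],
               [0.8, 1.0, 0.6],
               [0.1, 0.6, 1.0]])
adj = binarize_fc(fc, threshold=0.5)
print(adj)
print(node_degree(adj))  # -> [1 2 1]
```

Richer graph measures (clustering, path length, modularity) are usually computed with a dedicated graph library once the adjacency matrix is available.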

3 FC Metrics

There are many FC metrics with different theoretical backgrounds such as statistical signal processing, time-series forecasting, information theory, and nonlinear dynamics [6, 15, 42, 44]. It is often unclear which method should be used. They can be categorized by their features including theoretical basis, directionality, and signal domains. Table 6.1 summarizes various FC metrics to be described in depth in this review article, in terms of these features.

3.1 Cross-Correlation Function (CCF)

CCF is defined as the linear correlation between two signals represented as a function of the time delay between them. The CCF between two signals, x(t) and y(t), is calculated as follows:
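
\[ \mathrm{CCF}_{x,y}(\tau) = \frac{1}{N}\sum_{t=1}^{N-\tau} \frac{\left(x(t)-\bar{x}\right)\left(y(t+\tau)-\bar{y}\right)}{\sigma_{x}\,\sigma_{y}} \]
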
Here, N is the total number of samples of the signals, and τ is the time delay between the two signals. \(\bar{x}\) and \(\sigma_{x}\) denote mean and standard deviation of the signal x, respectively. The CCF ranges between −1 (perfect inverse correlation) and 1 (perfect correlation), and equals zero for the case of no correlation at the time delay τ. The CCF at time delay of 0 is the Pearson’s correlation coefficient.
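
The CCF definition can be checked numerically with a short NumPy sketch. The function `ccf` is a hypothetical helper that evaluates only non-negative lags and normalizes by N and the population standard deviations of x and y; at τ = 0 it should reproduce Pearson's correlation coefficient.

```python
import numpy as np

def ccf(x, y, max_lag):
    """Normalized cross-correlation CCF(tau) for tau = 0..max_lag.

    Sums products of the mean-removed samples x(t) and y(t + tau) over the
    N - tau overlapping samples and divides by N * sigma_x * sigma_y.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    xc = x - x.mean()
    yc = y - y.mean()
    norm = n * x.std() * y.std()  # population standard deviations
    return np.array([np.sum(xc[:n - tau] * yc[tau:]) / norm
                     for tau in range(max_lag + 1)])

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)
y = np.roll(x, 5)  # y lags x by 5 samples (circular shift, for illustration)
c = ccf(x, y, max_lag=20)
print(np.argmax(c))  # -> 5
```

The peak location gives the delay at which the two signals are most strongly correlated; a negative peak would indicate inverse correlation.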

3.2 Coherence

Coherence represents the linear correlation between two signals x and y in the frequency domain and is calculated as follows:
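
\[ \mathrm{Coh}_{x,y}(f) = \frac{\left|\left\langle S_{x,y}(f)\right\rangle\right|^{2}}{\left\langle S_{x,x}(f)\right\rangle \left\langle S_{y,y}(f)\right\rangle} \]
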
Here, \(\left\langle \cdot \right\rangle\) indicates averaging over a predetermined time interval. \(S_{x,y}\) represents the cross-spectral density function (CSDF) of the two signals x and y, which is obtained by Fourier transform of the CCF described in Sect. 6.3.1. The definition of coherence normalizes the CSDF \(S_{x,y}\) by the auto-spectral density functions \(S_{x,x}\) and \(S_{y,y}\), so that coherence ranges between 0 and 1.

It should be noted that coherence remains sensitive to spectral power even though its definition includes normalization by the spectral powers of the two signals. Thus, it is often unclear whether the coherence at a specific frequency is dominated by the powers of the signals, by the phase relationship between them, or by both [30].
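
A minimal NumPy sketch of coherence estimation follows. It assumes that the averages ⟨·⟩ are taken over non-overlapping, Hann-windowed segments (one common choice, similar in spirit to Welch's method) and uses the magnitude-squared form of coherence. The helper `coherence` is hypothetical; `scipy.signal.coherence` is a full-featured alternative.

```python
import numpy as np

def coherence(x, y, fs, nperseg):
    """Magnitude-squared coherence averaged over non-overlapping segments.

    Returns (freqs, coh). Cross- and auto-spectra are summed over segments;
    the common 1/K factor of the averages cancels in the ratio.
    """
    n_seg = len(x) // nperseg
    win = np.hanning(nperseg)  # reduces spectral leakage
    sxy = sxx = syy = 0
    for k in range(n_seg):
        seg = slice(k * nperseg, (k + 1) * nperseg)
        X = np.fft.rfft(win * x[seg])
        Y = np.fft.rfft(win * y[seg])
        sxy = sxy + X * np.conj(Y)   # cross-spectrum
        sxx = sxx + np.abs(X) ** 2   # auto-spectrum of x
        syy = syy + np.abs(Y) ** 2   # auto-spectrum of y
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.abs(sxy) ** 2 / (sxx * syy)

rng = np.random.default_rng(0)
fs = 250.0
t = np.arange(5000) / fs
s = np.sin(2 * np.pi * 10 * t)          # shared 10 Hz rhythm
x = s + rng.standard_normal(t.size)     # independent noise in each channel
y = s + rng.standard_normal(t.size)
freqs, coh = coherence(x, y, fs, nperseg=250)  # 1 Hz resolution
print(coh[10], coh[40])  # high at 10 Hz, low at 40 Hz
```

Increasing the number of segments lowers the coherence values obtained by chance at frequencies without a shared component.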

3.3 Phase Locking Value (PLV)

PLV measures the degree of phase locking between two signals over time, i.e., whether the phase difference between them remains relatively constant within a temporal interval. Before calculating the PLV, the signals are transformed into narrowband signals in the frequency band of interest (e.g., theta or gamma band) by bandpass filtering. The instantaneous phase angle \(\phi (t)\) is calculated from the narrowband signal \(x(t)\) and its Hilbert transform \(\tilde{x}(t)\) as follows [23, 30]:

\[ \phi(t) = \arctan\frac{\tilde{x}(t)}{x(t)} \]

The PLV between two signals x and y is calculated by averaging the unit phasors of the phase difference, \(e^{i(\phi_{x}(t)-\phi_{y}(t))}\), over N time points and taking the magnitude of the average [30]:
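
\[ \mathrm{PLV}_{x,y} = \frac{1}{N}\left|\sum_{t=1}^{N} e^{i\left(\phi_{x}(t)-\phi_{y}(t)\right)}\right| \]
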
Here, \(\phi_{x} (t)\) and \(\phi_{y} (t)\) represent the instantaneous phase angles for each time point t for two signals, x and y, respectively. PLV ranges between 0 (no synchronization) and 1 (perfect synchronization).
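
The PLV computation can be sketched with NumPy alone. The analytic signal is built with the FFT, following the same construction as `scipy.signal.hilbert`, and the inputs are assumed to be already bandpass filtered; the helper names are hypothetical and the test signals are synthetic.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal x(t) + i*x~(t) via the FFT (as in scipy.signal.hilbert)."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0       # double the positive frequencies
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)  # negative frequencies are zeroed

def instantaneous_phase(x):
    # np.angle is the four-quadrant arctangent of x~(t) / x(t)
    return np.angle(analytic_signal(x))

def plv(x, y):
    """Phase locking value of two narrowband (already filtered) signals."""
    dphi = instantaneous_phase(x) - instantaneous_phase(y)
    return np.abs(np.mean(np.exp(1j * dphi)))

fs = 250.0
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 10 * t)
y = np.sin(2 * np.pi * 10 * t + 0.7)                   # constant phase offset
z = np.random.default_rng(0).standard_normal(t.size)   # unrelated noise
print(plv(x, y), plv(x, z))  # close to 1, close to 0
```

Because each time point contributes a unit vector, the PLV ignores amplitude and reflects only the consistency of the phase difference.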

3.4 Phase Lag Index (PLI)

The PLI was developed to mitigate the spurious phase synchrony resulting from common sources, due to volume conduction or active reference electrodes [52]. This will be described in detail later in Sect. 6.4. The PLI is defined to quantify the asymmetry of the distribution of phase differences between two signals (i.e. either positive or negative phase differences). This asymmetry implies the presence of non-zero phase difference (i.e., time lag) between two signals. If the phase synchrony is due to the common sources, the phase differences are expected to be symmetrically distributed around zero.

The calculation of PLI is similar to that of the PLV, and involves bandpass filtering and Hilbert transform as follows [52]:
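
\[ \mathrm{PLI}_{x,y} = \frac{1}{N}\left|\sum_{t=1}^{N} \operatorname{sign}\left(\phi_{x}(t)-\phi_{y}(t)\right)\right| \]
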
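Here, sign(·) denotes the signum function applied to the phase difference wrapped to (−π, π] (i.e., −1 for negative, 1 for positive, and 0 for zero values). The PLI ranges between 0 and 1: it equals 1 for perfect synchronization with a consistently non-zero phase lag, and 0 when there is no synchronization or when the phase differences are distributed symmetrically around zero (or ±π).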
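
The following NumPy sketch contrasts PLI and PLV on synthetic data (an illustrative assumption, not the chapter's EEG): two channels that record the same 10 Hz source at zero lag, mimicking volume conduction, and two sinusoids with a constant 45° lag. Taking sign(sin Δφ) is one way to obtain the sign of the phase difference wrapped to (−π, π]; helper names are hypothetical.

```python
import numpy as np

def analytic_signal(x):
    """FFT-based analytic signal (same construction as scipy.signal.hilbert)."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

def phase_difference(x, y):
    return np.angle(analytic_signal(x)) - np.angle(analytic_signal(y))

def pli(x, y):
    """Phase lag index: asymmetry of the phase-difference distribution."""
    # sin() has the sign of the phase difference wrapped to (-pi, pi]
    return np.abs(np.mean(np.sign(np.sin(phase_difference(x, y)))))

def plv(x, y):
    return np.abs(np.mean(np.exp(1j * phase_difference(x, y))))

fs = 250.0
t = np.arange(1000) / fs                # 4 s = 40 full cycles at 10 Hz
s = np.sin(2 * np.pi * 10 * t)          # a single common source
rng = np.random.default_rng(0)
x_vc = s + 0.3 * rng.standard_normal(t.size)   # both channels see s at
y_vc = s + 0.3 * rng.standard_normal(t.size)   # zero lag (volume conduction)
print(plv(x_vc, y_vc), pli(x_vc, y_vc))        # PLV high, PLI low

x_lag = np.sin(2 * np.pi * 10 * t)
y_lag = np.sin(2 * np.pi * 10 * t - np.pi / 4)  # consistent 45-degree lag
print(pli(x_lag, y_lag))                        # -> 1.0
```

Zero-lag mixing inflates the PLV but leaves the PLI near zero, whereas a genuinely lagged interaction is captured by both.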

3.5 Mutual Information (MI)

MI quantifies the amount of information that two signals share, based on Shannon entropy, a basic measure of information [48]. Shannon entropy is defined as the average amount of information (i.e., the average code length) needed to encode a discrete variable [42, 48]. The entropy \(H(X)\) is calculated as follows:

\[ H(X) = -\sum_{i=1}^{n} p(x_{i})\log_{2} p(x_{i}) \]

Here, \(p(x_{i} )\) is the probability that the values of signal x fall in the ith bin, and n represents the number of bins used to construct a histogram that approximates the probability density function (PDF) of x. The entropy is non-negative, is expressed in bits when base-2 logarithms are used, and does not depend on the temporal structure of the signal. Choosing an appropriate number of bins is important, since the histogram approximation of the PDF is sensitive to it [15]. Diaconis and Freedman [16] suggested a guideline for the optimal number of bins as follows:
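
\[ n = \frac{\max(x)-\min(x)}{2\,Q_{x}\,N^{-1/3}} \]

where \(Q_{x}\) is the interquartile range of x (the difference between its 75th and 25th percentiles), N is the total number of data points, and max(x) and min(x) are the maximum and minimum values of x, respectively. The denominator, \(2Q_{x}N^{-1/3}\), is the bin width, so dividing the data range by it gives the number of bins.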

From the entropies of the two signals x and y and their joint entropy, i.e., H(X), H(Y), and H(X,Y), the MI is calculated as follows:

\[ MI(X,Y) = H(X) + H(Y) - H(X,Y) \]

Here, \(H(X,Y)\) is the joint entropy of the two signals, defined as follows:

\[ H(X,Y) = -\sum_{i=1}^{n}\sum_{j=1}^{n} p(x_{i},y_{j})\log_{2} p(x_{i},y_{j}) \]

where \(p(x_{i} ,y_{j} )\) is the joint probability that the values of signal x fall in the ith bin and those of signal y fall in the jth bin. If the two signals are unrelated, X and Y are independent, and thus the joint probability \(p(x_{i} ,y_{j} )\) equals \(p(x_{i} )p(y_{j} )\). Hence, the joint entropy \(H(X,Y)\) equals \(H(X) + H(Y)\), and the MI becomes zero. Otherwise, the MI is positive, reaching its maximum when the two signals are identical.
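
A histogram-based estimate of entropy and MI can be sketched with NumPy as follows. The bin count uses the Freedman-Diaconis bin width 2·IQR·N^(−1/3) (NumPy also exposes this rule as `bins='fd'` in `np.histogram`), and the marginals are taken from the joint histogram so that all three entropies share the same bins. Such plug-in estimates are positively biased for finite samples, so independent signals still give small positive MI; the function names are hypothetical.

```python
import numpy as np

def fd_num_bins(x):
    """Number of bins = data range / Freedman-Diaconis bin width."""
    q75, q25 = np.percentile(x, [75, 25])
    width = 2.0 * (q75 - q25) * len(x) ** (-1.0 / 3.0)
    return max(1, int(np.ceil((np.max(x) - np.min(x)) / width)))

def entropy_bits(p):
    """Shannon entropy in bits of a probability vector (0 * log 0 taken as 0)."""
    p = p[p > 0]
    return -np.sum(p * np.log2(p))

def mutual_information(x, y):
    """Histogram estimate of MI(X, Y) = H(X) + H(Y) - H(X, Y), in bits."""
    counts, _, _ = np.histogram2d(x, y, bins=[fd_num_bins(x), fd_num_bins(y)])
    p_xy = counts / counts.sum()
    p_x = p_xy.sum(axis=1)  # marginal distribution of x
    p_y = p_xy.sum(axis=0)  # marginal distribution of y
    return entropy_bits(p_x) + entropy_bits(p_y) - entropy_bits(p_xy.ravel())

rng = np.random.default_rng(0)
x = rng.standard_normal(2000)
z = rng.standard_normal(2000)         # independent of x
print(mutual_information(x, x))       # equals H(X): the maximum
print(mutual_information(x, z))       # small, positive only through bias
```

In practice, the bias is often handled by comparing the observed MI with MI values from surrogate data rather than with zero.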

3.6 Granger Causality (GC)

The idea of GC is that signal x Granger-causes signal y if the prediction error of y from an autoregressive (AR) model of its own past is significantly reduced when the past of x is also included, i.e., when y is estimated by joint AR modeling of x and y [19]. This can be assessed by comparing the univariate and bivariate AR models for the two signals x and y.

The univariate AR models for each signal, x and y, are described as follows [42]:
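
\[ x(t) = \sum_{n=1}^{p} a_{x,n}\,x(t-n) + e_{x}(t), \qquad y(t) = \sum_{n=1}^{p} a_{y,n}\,y(t-n) + e_{y}(t) \]
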
Here, p denotes the number of lagged observations included in the model (i.e., the model order), \(a_{x,n}\) and \(a_{y,n}\) are the model coefficients at time lag n, and \(e_{x}\) and \(e_{y}\) are the prediction errors of each signal estimated by the model. In these models, each signal is predicted only from its own past values.

Alternatively, the joint, bivariate AR model of x and y is as follows:
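
\[ x(t) = \sum_{n=1}^{p} a_{xx,n}\,x(t-n) + \sum_{n=1}^{p} b_{xy,n}\,y(t-n) + e_{x,y}(t) \]
\[ y(t) = \sum_{n=1}^{p} a_{yy,n}\,y(t-n) + \sum_{n=1}^{p} b_{yx,n}\,x(t-n) + e_{y,x}(t) \]
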
Here, p is the model order, a and b contain the model coefficients, and \(e_{x,y}\) and \(e_{y,x}\) denote the prediction errors of x and y, respectively, estimated by the model. In these models, each signal is predicted from the past values of both signals.

The prediction performances of the univariate and bivariate models can be compared quantitatively from the variances of the prediction errors as follows:
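
\[ V_{x|x} = \operatorname{var}(e_{x}), \quad V_{y|y} = \operatorname{var}(e_{y}), \quad V_{x|x,y} = \operatorname{var}(e_{x,y}), \quad V_{y|y,x} = \operatorname{var}(e_{y,x}) \]
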
Here, \(\text{var} ( \cdot )\) denotes the variance.

The Granger causality between the two signals x and y is calculated as the log-ratio of these variances as follows:
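
\[ GC_{x \to y} = \ln\frac{V_{y|y}}{V_{y|y,x}}, \qquad GC_{y \to x} = \ln\frac{V_{x|x}}{V_{x|x,y}} \]
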
If there is no causal influence from x to y, the prediction error of y should not be reduced by including x in the model of y. In that case, the variances \(V_{y\left| y \right.}\) and \(V_{{y\left| {y,x} \right.}}\) are (nearly) equal, and thus \(GC_{x \to y}\) is close to zero. Conversely, a causal influence of x on y reduces the prediction error of y when x is included, so \(GC_{x \to y}\) becomes positive. The GC measure is directional: if the GCs in both directions are high, the connectivity can be interpreted as bidirectional [42].
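
The comparison of univariate and bivariate AR models can be sketched with ordinary least squares in NumPy. This is a simplified illustration under stated assumptions: zero-mean signals (no intercept), a fixed model order p rather than one chosen by an information criterion, and no significance test. The simulated system is hypothetical and contains an x → y influence only; the helper names are also hypothetical.

```python
import numpy as np

def lag_matrix(sig, p):
    """Columns are sig(t-1), ..., sig(t-p) for t = p, ..., N-1."""
    n = len(sig)
    return np.column_stack([sig[p - k:n - k] for k in range(1, p + 1)])

def residual_variance(target, predictors):
    """Variance of the least-squares prediction errors (zero-mean data assumed)."""
    coef, *_ = np.linalg.lstsq(predictors, target, rcond=None)
    return np.var(target - predictors @ coef)

def granger_causality(x, y, p):
    """GC_{x->y} = ln(V_{y|y} / V_{y|y,x}) using AR models of order p."""
    target = y[p:]
    v_y_given_y = residual_variance(target, lag_matrix(y, p))
    v_y_given_yx = residual_variance(
        target, np.hstack([lag_matrix(y, p), lag_matrix(x, p)]))
    return np.log(v_y_given_y / v_y_given_yx)

rng = np.random.default_rng(0)
n = 5000
x = rng.standard_normal(n)
y = np.zeros(n)
for t in range(1, n):
    # y depends on the past of x, but x does not depend on y
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + rng.standard_normal()

gc_xy = granger_causality(x, y, p=2)
gc_yx = granger_causality(y, x, p=2)
print(gc_xy, gc_yx)  # clearly positive vs. close to zero
```

Whether a positive value is significant would still need to be assessed, for example with an F-test or with surrogate data.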

3.7 Partial Directed Coherence (PDC)

PDC is a frequency-domain counterpart of the GC, based on multivariate autoregressive (MVAR) modeling of multichannel signals [3]. Assume that the simultaneously recorded m-channel signals \({\mathbf{x}}(t) = [x_{1} (t), \ldots ,x_{m} (t)]^{T}\) can be described by an MVAR model as follows:

\[ {\mathbf{x}}(t) = \sum_{n=1}^{p} {\mathbf{A}}_{n}\,{\mathbf{x}}(t-n) + {\mathbf{e}}(t) \]

Here, p is the model order, \({\mathbf{A}}_{n} = \left[ {\begin{array}{*{20}c} {a_{1,1} (n)} & \cdots & {a_{1,m} (n)} \\ \vdots & \ddots & \vdots \\ {a_{m,1} (n)} & \cdots & {a_{m,m} (n)} \\ \end{array} } \right]\) is the matrix of model coefficients at time lag n, and \({\mathbf{e}}(t) = [e_{1} (t), \ldots ,e_{m} (t)]^{T}\) is multivariate Gaussian white noise with zero mean and covariance matrix \({\boldsymbol{\Sigma}}\). The model coefficients \(a_{i,j} (n)\) indicate the influence among the signals (e.g., \(a_{1,2} (n)\) is the influence of \(x_{2} (t - n)\) on \(x_{1} (t)\)).

This time-domain representation can be transformed into the frequency domain by Fourier transform (FT). Let \({\mathbf{A}}(f) = \sum_{n=1}^{p} {\mathbf{A}}_{n} e^{-i2\pi fn}\) be the FT of the model coefficients (with f normalized by the sampling frequency), and define \({\bar{\mathbf{A}}}(f) = {\mathbf{I}} - {\mathbf{A}}(f) = [{\bar{\mathbf{a}}}_{1} (f)\;{\bar{\mathbf{a}}}_{2} (f) \ldots {\bar{\mathbf{a}}}_{m} (f)]\), whose jth column is \({\bar{\mathbf{a}}}_{j} (f)\) and whose i, jth element is \(\bar{a}_{i,j} (f)\). The PDC from signal \(x_{i}\) to signal \(x_{j}\) can be calculated as follows:
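
\[ \pi_{i \to j}(f) = \frac{\left|\bar{a}_{j,i}(f)\right|}{\sqrt{\bar{\mathbf{a}}_{i}^{H}(f)\,\bar{\mathbf{a}}_{i}(f)}} \]
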
where the superscript H denotes the conjugate (Hermitian) transpose. Thus, the PDC quantifies the strength of the influence of signal \(x_{i}\) on signal \(x_{j}\) at frequency f, relative to the total outflow from \(x_{i}\).
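
A compact sketch of MVAR fitting by least squares and PDC computation follows, using NumPy. Assumptions: zero-mean data, a fixed model order, and frequencies in Hz with sampling rate `fs`. In the hypothetical `pdc` helper, `result[k, j, i]` holds the PDC magnitude from channel i to channel j at `freqs[k]`, with each column normalized by the outflow of its source channel. The simulated two-channel system, in which channel 0 drives channel 1, is also hypothetical.

```python
import numpy as np

def fit_mvar(X, p):
    """Least-squares MVAR fit. X: (m, N) data. Returns [A_1, ..., A_p]."""
    m, N = X.shape
    # Row block n-1 of Z holds x(t - n) for t = p, ..., N-1
    Z = np.vstack([X[:, p - n:N - n] for n in range(1, p + 1)])
    T = X[:, p:]
    B = np.linalg.lstsq(Z.T, T.T, rcond=None)[0].T  # (m, m * p)
    return [B[:, (n - 1) * m:n * m] for n in range(1, p + 1)]

def pdc(A_list, freqs, fs):
    """PDC magnitudes; result[k, j, i] is the PDC from x_i to x_j at freqs[k]."""
    m = A_list[0].shape[0]
    result = np.empty((len(freqs), m, m))
    for k, f in enumerate(freqs):
        A_f = sum(A * np.exp(-2j * np.pi * f * n / fs)
                  for n, A in enumerate(A_list, start=1))
        A_bar = np.eye(m) - A_f
        # Normalize each column (source i) by its Euclidean norm
        result[k] = np.abs(A_bar) / np.linalg.norm(A_bar, axis=0)
    return result

rng = np.random.default_rng(1)
n = 5000
X = np.zeros((2, n))
for t in range(1, n):
    X[0, t] = 0.6 * X[0, t - 1] + rng.standard_normal()
    X[1, t] = 0.5 * X[1, t - 1] + 0.7 * X[0, t - 1] + rng.standard_normal()

fs = 100.0
freqs = np.linspace(0, fs / 2, 51)
P = pdc(fit_mvar(X, p=2), freqs, fs)
print(P[:, 1, 0].mean())  # channel 0 -> channel 1: substantial
print(P[:, 0, 1].mean())  # channel 1 -> channel 0: near zero
```

Because of the column normalization, the squared PDC values leaving each source sum to 1 at every frequency, which is what "relative strength of the influence" means here.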

Another metric based on an MVAR model, the directed transfer function (DTF), has also been proposed [27]. The DTF is quite similar to the PDC in that it reveals causal relations between time-series based on an MVAR model. However, the DTF is calculated from the transfer function matrix H(f) instead of \(\bar{A}(f)\), which is used for the PDC calculation; the two matrices are related as \(H(f) = \bar{A}^{ - 1} (f)\). Because of the matrix inversion, the DTF demands a higher computational load and may suffer from numerical imprecision due to potential ill-conditioning of \(\bar{A}(f)\) [3]. If the structure of the matrix \(H(f)\) is preserved upon inversion, the DTF and PDC lead to identical results for the effective connectivity [3].

4 Volume Conduction Problem

As explained above, there are numerous methods for the FCA of EEG (and MEG), originating from various theoretical backgrounds. Given the many possible combinations of preprocessing, FC measures, and postprocessing methods, the choice of an appropriate FCA method is far from obvious in most applications; each method has its own pros and cons, which can render the interpretation of the results ambiguous.

Several issues deserve caution when interpreting FCA results. For example, the estimated FC may or may not reflect true neuronal interactions, because EEG (and MEG) signals contain both relevant and irrelevant signals and noise. Moreover, it is not possible to determine whether an observed connection is direct or mediated indirectly through an unobserved pathway. Besides, the common reference problem and a low signal-to-noise ratio cause substantial errors. In addition to noise and artifacts, FCA results may be affected by differences in signal-to-noise ratio between channels, which has a particularly large effect on the estimated direction of information flow. A recent review paper provides a detailed discussion of these issues, focusing on oscillatory coupling [6].

Methods have been developed to overcome the aforementioned issues. For example, in FCA based on coupling between rhythmic oscillatory neural activities, the volume conduction problem may be alleviated by exploiting the fact that volume conduction and field spread can be regarded as instantaneous [36]: a rhythm spread by volume conduction appears at any pair of locations with a phase difference of either 0° or 180°. Measures of oscillatory coupling that take this into account have been developed, e.g., the PLI [52], imaginary coherence [36], and the phase slope index (Nolte et al. [37]). Converting scalp EEG to current densities on the cortical surface may also be very helpful, since common factors in the signals are substantially reduced. Several studies have investigated which combinations of cortical source localization and FC methods provide the best FCA results [20, 22]. In this section, we provide guidelines for reducing the confounding effects of volume conduction in the FCA.

4.1 FCA Between the Signals from Surface Electrodes

An EEG electrode placed on the scalp captures the electric potential at a specific location. Multiple cortical sources distributed over a wide area of the cortical surface contribute to the voltage at a point on the scalp. Conversely, the electric current caused by a localized cortical current source propagates to a wide area of the scalp. This field spread, or volume conduction, problem prevents a rigorous FCA using scalp EEG and spuriously inflates the functional connections between nearby regions. Figure 6.2 shows examples of the volume conduction effect. A single current source (A) affects more than one electrode (1 and 2). Also, the electromagnetic field originating from a single source (B) spreads to multiple adjacent electrodes (2 and 3) through head tissues such as the cerebrospinal fluid, dura, skull, and scalp. These common sources lead to spurious connectivity between scalp EEG channels even when all the cortical current sources are independent [6, 15, 38, 52]. Hence, caution should be exercised when calculating and interpreting FC metrics.

Spurious phenomena may occur due to the volume conduction effect, as illustrated in Fig. 6.3, which was generated from actual 64-channel EEG recordings during an auditory oddball task [12]. First, the phase differences between the EEGs from two nearby electrodes, Fpz and Fp1, were found to be concentrated around zero degrees (Fig. 6.3a). Second, the strength of connectivity was inversely correlated with the distance between the two electrodes (Fig. 6.3b). In particular, the PLV between the two closest neighbors showed almost perfect locking (i.e., the PLV was close to 1). Third, the connectivity strength was significantly correlated with the spectral power (Fig. 6.3c). Although these are only a few of the potential problems of FC analysis using surface EEG, they should at least be checked to verify that the conclusions are not driven by spurious effects of volume conduction.
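
The distance check described above can be automated with a short NumPy sketch. The helper `fc_distance_correlation` is hypothetical; it assumes a symmetric FC matrix and 3-D electrode coordinates, and the toy FC values, which simply decay with distance, are a synthetic stand-in for what pure volume conduction would produce.

```python
import numpy as np

def fc_distance_correlation(fc, positions):
    """Pearson correlation between pairwise FC values and electrode distances.

    fc: (n, n) symmetric FC matrix; positions: (n, 3) electrode coordinates.
    A strongly negative value hints that volume conduction may be
    inflating FC between nearby electrodes.
    """
    i, j = np.triu_indices(len(fc), k=1)  # each channel pair once
    dist = np.linalg.norm(positions[i] - positions[j], axis=1)
    return np.corrcoef(fc[i, j], dist)[0, 1]

rng = np.random.default_rng(0)
positions = rng.uniform(-1, 1, size=(16, 3))   # hypothetical electrode layout
d = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
fc = np.exp(-d)                                # FC that only reflects proximity
r = fc_distance_correlation(fc, positions)
print(r)  # strongly negative
```

The same pattern (correlating pairwise FC with a pairwise covariate) can be reused for the spectral power check, e.g., with the mean power of each channel pair.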

Examples of spurious connectivity (PLV) due to volume conduction. a Distributions of phase differences between two EEG channels in the gamma band (30–50 Hz); solid lines denote the phase difference between the two channels at a single time point, and the length of the black arrow corresponds to the average PLV. b Correlation between PLV and inter-electrode distance in the gamma band. c Correlation between PLV and spectral power in the gamma band

4.2 Spatial Filtering

The surface Laplacian is a method to estimate the current source density (CSD) at the scalp, and it behaves as a spatial highpass filter [43]. The potential distribution of EEG on the scalp usually contains strong low spatial frequency components due to volume conduction. When the surface Laplacian is properly applied, these spurious low spatial frequency components may be reduced, and so may their confounding effect on FC (for a review of surface Laplacian algorithms, see [28, 43]).
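
The surface Laplacian is usually computed with spherical splines [43]. As a simpler stand-in, the nearest-neighbor (Hjorth) approximation subtracts from each electrode the mean of its neighbors. The NumPy sketch below uses that simplification, with a hypothetical four-electrode neighbor layout and a hypothetical helper name, to show the highpass behavior: a spatially uniform component is removed while a local one survives.

```python
import numpy as np

def hjorth_laplacian(eeg, neighbors):
    """Nearest-neighbor (Hjorth) approximation of the surface Laplacian.

    eeg: (n_channels, n_times) potentials.
    neighbors: dict mapping every channel index to its neighbor indices.
    Each channel minus the mean of its neighbors removes components shared
    by adjacent electrodes (low spatial frequencies).
    """
    eeg = np.asarray(eeg, dtype=float)
    out = np.zeros_like(eeg)
    for ch, nbrs in neighbors.items():
        out[ch] = eeg[ch] - eeg[list(nbrs)].mean(axis=0)
    return out

# Hypothetical ring of four electrodes, each with two neighbors
neighbors = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [0, 2]}
t = np.arange(500) / 250.0
common = np.sin(2 * np.pi * 10 * t)   # spatially uniform (volume-conducted) part
eeg = np.tile(common, (4, 1))
eeg[0, 100] += 1.0                    # local activity at electrode 0 only
lap = hjorth_laplacian(eeg, neighbors)
print(np.abs(lap[2]).max())  # common component removed at electrode 2
print(lap[0, 100])           # local event preserved at electrode 0
```

Spherical-spline methods give smoother, more accurate CSD estimates, particularly at edge electrodes where the nearest-neighbor approximation is crude.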

We found that the spurious effects of volume conduction illustrated in Fig. 6.3 were greatly reduced or eliminated by applying the surface Laplacian before the FC analysis (Fig. 6.4). After applying the surface Laplacian, the phase differences between the two nearby channels (Fpz and Fp1) were much more widely distributed (Fig. 6.4a), in contrast to the raw EEG, where the phase differences were concentrated around zero degrees (Fig. 6.3a). The PLV decreased from 0.86 to 0.55 after applying the surface Laplacian. The correlation between FC strength and inter-electrode distance was mitigated (from −0.787 to −0.467; Fig. 6.4b), and the correlation between spectral power and FC in the frequency band became insignificant as well (Fig. 6.4c). In conclusion, the surface Laplacian can partially reduce the confounding effects of volume conduction in FC analysis of surface EEG.

Spatial filtering (surface Laplacian) can reduce the spurious effects of volume conduction. a The phase differences between two nearby channels became widely distributed after applying the surface Laplacian. The correlations between PLV and inter-electrode distance b and between PLV and spectral power c were reduced compared with Fig. 6.3

4.3 FC Measures Robust to Volume Conduction Effect

Another solution is to use FC metrics that are inherently robust to volume conduction [34, 36, 38, 52, 58]. Nunez et al. [38] proposed a modified version of coherence, called reduced coherence, which is calculated by subtracting the "random coherence" (the coherence expected from volume conduction of uncorrelated sources) from the measured coherence. Alternatively, partial coherence was introduced, which removes the linear effect of a third time-series (considered as a common source) from the coherence calculated for a pair of time-series [34].

Nolte et al. [36] proposed imaginary coherence (ImC). It is based on the premise that volume conduction, being instantaneous, cannot contribute to the imaginary part of coherency, which reflects consistently non-zero phase differences [36]. In the same vein, Stam et al. [52] proposed the PLI, which quantifies how asymmetrically the phase differences are distributed below (phase lag) or above (phase lead) the real axis of the complex plane [52]. More recently, an extended version of the PLI, the weighted PLI (WPLI), was proposed to take into account the magnitude of the imaginary component of the cross-spectrum as well as the sign of the phase differences [58].

Figure 6.5 illustrates the advantage of the PLI over the PLV in mitigating volume conduction effects. The phase differences between the EEGs of the two nearby channels Fpz and Fp1 are distributed symmetrically around zero degrees, which results in a much lower PLI than PLV (0.09 vs. 0.86). In addition, the correlation between FC and inter-electrode distance was drastically reduced, from −0.787 for the PLV to −0.174 (Fig. 6.5b), and the correlation with spectral power became insignificant as well (Fig. 6.5c). All the results in Fig. 6.5 show that spurious FC due to volume conduction can be alleviated by using the PLI. However, Vinck et al. [58] pointed out problems with the PLI. First, the PLI is discontinuous: when the phase difference is close to zero, small perturbations can turn phase lags into phase leads (and vice versa) between two time-series. Second, the PLI estimator is statistically biased, so its calculation may suffer when the sample size is small [52, 58]. Modified versions of the PLI (the weighted PLI and the debiased weighted PLI) have been proposed to overcome these limitations [58].
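
Imaginary coherency and the weighted PLI can both be computed from segment-wise cross-spectra, as sketched below in NumPy. Assumptions: non-overlapping Hann-windowed segments, wPLI taken as |mean Im(XY*)| / mean |Im(XY*)| over segments (the definition of Vinck et al. [58]), and synthetic data in which one pair of channels shares a source at zero lag while the other shares it with a 3-sample delay. The helper names and the simulated signals are hypothetical.

```python
import numpy as np

def segment_spectra(x, y, nperseg):
    """Hann-windowed FFTs of non-overlapping segments (rows = segments)."""
    n_seg = len(x) // nperseg
    win = np.hanning(nperseg)
    X = np.fft.rfft(x[:n_seg * nperseg].reshape(n_seg, nperseg) * win, axis=1)
    Y = np.fft.rfft(y[:n_seg * nperseg].reshape(n_seg, nperseg) * win, axis=1)
    return X, Y

def imaginary_coherency(x, y, nperseg):
    X, Y = segment_spectra(x, y, nperseg)
    sxy = np.mean(X * np.conj(Y), axis=0)
    sxx = np.mean(np.abs(X) ** 2, axis=0)
    syy = np.mean(np.abs(Y) ** 2, axis=0)
    return np.imag(sxy / np.sqrt(sxx * syy))

def wpli(x, y, nperseg):
    X, Y = segment_spectra(x, y, nperseg)
    im = np.imag(X * np.conj(Y))
    num = np.abs(np.mean(im, axis=0))
    den = np.mean(np.abs(im), axis=0)
    # bins whose cross-spectrum is purely real (e.g., DC) get wPLI = 0
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

rng = np.random.default_rng(2)
n, nperseg = 25000, 250
s = rng.standard_normal(n)                                 # common source
x = s + 0.5 * rng.standard_normal(n)
y_mixed = 0.8 * s + 0.5 * rng.standard_normal(n)           # zero-lag copy
y_lagged = np.roll(s, 3) + 0.5 * rng.standard_normal(n)    # 3-sample delay
k = 10  # frequency bin 10 (10 Hz if fs = 250 Hz)
ic_mix = imaginary_coherency(x, y_mixed, nperseg)[k]
ic_lag = imaginary_coherency(x, y_lagged, nperseg)[k]
w_mix, w_lag = wpli(x, y_mixed, nperseg)[k], wpli(x, y_lagged, nperseg)[k]
print(ic_mix, ic_lag)  # near zero vs. clearly non-zero
print(w_mix, w_lag)    # low vs. high
```

Both measures discard the zero-lag contribution, so they can miss genuine interactions that happen to occur at (near-)zero lag.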

The spurious effects of volume conduction can be reduced by using phase lag index (PLI). a Distribution of phase difference between two time-series. The red and blue colors indicate phase lead and phase lag, respectively. b Correlation between PLI and inter-electrode distance. c Correlation between PLI and spectral power

5 EEG FC Analysis on Source Space

Spatial filters such as the surface Laplacian can be applied before the FCA to lessen the effect of volume conduction, as shown above. More recently, cortical current sources have been reconstructed prior to the FCA by solving an inverse problem [4, 33, 40]. This provides time-series of cortical current densities at numerous vertices on the cortical surface, which enables the calculation of FC measures among cortical regions. The FCA in cortical source space also facilitates interpretation of the results, since each connection has an anatomical meaning [46].

Figure 6.6 illustrates the detailed procedure of FCA in cortical source space, where solutions of an electromagnetic inverse problem are used to estimate the cortical sources and reconstruct their temporal dynamics. Among the approaches proposed so far, methods based on a distributed cortical source model are appropriate, since they aim to provide a current time-series at every cortical location (Fig. 6.6b). The most popular ones include the minimum norm estimate (MNE) and its variants (e.g., weighted MNE, wMNE), low resolution brain electromagnetic tomography (LORETA), and standardized LORETA (sLORETA). Beamforming methods are also applicable. Alternatively, cortical sources mixed by volume conduction can be "demixed" by blind source separation [21].
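
A minimal NumPy sketch of the (unweighted) MNE inverse operator, W = Lᵀ(LLᵀ + λI)⁻¹, is shown below. Assumptions: identity noise and source covariances, a fixed regularization parameter λ, and a random lead field standing in for one computed from a realistic head model; the helper name is hypothetical. wMNE and sLORETA modify this operator with depth weighting or noise-normalized standardization.

```python
import numpy as np

def mne_inverse_operator(leadfield, lam):
    """W = L^T (L L^T + lam * I)^(-1), identity noise/source covariances assumed.

    leadfield: (n_sensors, n_sources) forward matrix L.
    """
    n_sensors = leadfield.shape[0]
    gram = leadfield @ leadfield.T + lam * np.eye(n_sensors)
    # gram is symmetric, so L^T gram^(-1) equals (gram^(-1) L)^T
    return np.linalg.solve(gram, leadfield).T

rng = np.random.default_rng(5)
leadfield = rng.standard_normal((32, 500))  # hypothetical: 32 electrodes, 500 vertices
eeg = rng.standard_normal((32, 1000))       # hypothetical sensor data (channels x samples)
W = mne_inverse_operator(leadfield, lam=1.0)
sources = W @ eeg                           # cortical current time-series
print(sources.shape)  # -> (500, 1000)
```

The resulting vertex time-series are the input to the FC calculations described next; λ trades data fit against the norm of the solution.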

The estimated time-series represent current densities on the cortical surface and are used to calculate FC measures. Spatial sampling is commonly used to reduce the number of time-series, or regions of interest (ROIs) are selected before the FCA. ROI selection is of crucial importance and is based either on prior knowledge (Fig. 6.6c, image source: http://freesurfer.net) or on the results of functional neuroimaging (Fig. 6.6d). Often, the most important ROIs are determined first, and cortical maps are then computed that show the regions functionally connected to those ROIs.
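
Reducing vertex-level source time-series to one time-series per ROI can be sketched as below. This assumes one integer atlas label per vertex and uses a plain average, which is only one option; averaging can partly cancel activity from vertices with opposite current orientations. The helper name and example data are hypothetical.

```python
import numpy as np

def roi_time_series(source_ts, labels):
    """Average source time-series within each ROI.

    source_ts: (n_vertices, n_times); labels: (n_vertices,) integer ROI labels.
    Returns (n_rois, n_times) array and the sorted ROI labels.
    """
    rois = np.unique(labels)
    roi_ts = np.stack([source_ts[labels == r].mean(axis=0) for r in rois])
    return roi_ts, rois

rng = np.random.default_rng(3)
source_ts = rng.standard_normal((6, 100))   # hypothetical: 6 vertices, 100 samples
labels = np.array([0, 0, 1, 1, 2, 2])       # hypothetical atlas: 3 ROIs
roi_ts, rois = roi_time_series(source_ts, labels)
print(roi_ts.shape)  # -> (3, 100)
```

Any of the FC measures above can then be applied to the rows of `roi_ts` to form an ROI-by-ROI connectivity matrix.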

Hassan et al. [20] reported a comparative study of processing methods for the FCA in cortical source space. They showed that the results depend strongly on the selected processing methods as well as on the number of electrodes. The combination of wMNE and PLV was found to yield the most relevant results. Their findings imply that an optimal combination of source estimation and FC measure is essential to correctly identify functional cortical networks, and thus the processing pipeline for EEG source-space FCA should be chosen carefully.

It should be noted that spurious results are unavoidable in some cases. For example, when the FCA is performed on preselected ROIs, inappropriate ROI selection can lead to incorrect conclusions. Likewise, the signal-to-noise ratio affects the FCA and may vary systematically across experimental conditions, in which case spurious significant differences among conditions may be unavoidable.

The two-step approach described above, consisting of source estimation followed by FC measure calculation, may yield incorrect results for several reasons, as has been shown by simulation studies [21]. The unmixing of scalp EEG signals is far from perfect, regardless of the source estimation method. Schoffelen and Gross [46] provide a review of methods for the FCA in cortical source space, focusing on the selection of FC measures and regions of interest (ROIs). It should be kept in mind that the field spread effect is not completely removed in source space, so FCA results should be interpreted carefully. The use of FC measures that are inherently insensitive to instantaneous mixing, such as the imaginary part of coherence (imagcoh), can be recommended to alleviate this problem [46].

Marzetti et al. [32] developed a method that decorrelates the reconstructed sources using principal component analysis (PCA). Assuming orthogonality between the estimated sources, further demixing is performed using an algorithm called minimum overlap component analysis: after the spatial topography of the interacting sources is identified from the sensor-space cross-spectral density, the locations of the interacting sources are estimated under a minimum overlap constraint. Gomez-Herrero et al. [18] presented a method for effective connectivity estimation based on independent component decomposition of the residuals of the MVAR model, whose instantaneous mixing is presumably due to field spread. The spatial topography of the interacting sources is obtained from the ICA mixing matrix. More recently, Haufe [21] proposed a novel measure of effective connectivity based on a physiologically motivated model of interacting sources and a sparse connectivity graph. It performs blind source separation and inverse source reconstruction in a single step, yielding the source time series, their spatial distribution, and the connectivity structure.

6 Determination of Significance

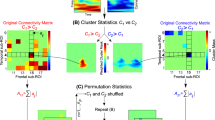

The calculated FC metrics may include false positives due to several confounding effects, such as residual artifacts, volume conduction, and the common reference. Hence, it is important to determine statistical significance. As shown in Fig. 6.7a, a null distribution of the FC can be generated from surrogate data obtained by random shuffling and used to determine significance; an FC value is often considered significant if it falls within the upper 5% or 1% of the null distribution.

Several methods can be used to generate surrogate data from the experimental data [15, 30, 54]. Randomly shuffling the time samples of one of the two time-series destroys its temporal structure. If all time samples are shuffled so that the temporal structure is completely destroyed, the resulting null distribution may lead to an excessively high false positive rate, i.e., inflated statistical significance. This is because FC measures calculated from temporally structured experimental data tend to be much higher than those calculated from surrogate data whose temporal structure has been completely destroyed, even in the absence of true coupling. An alternative, called the "time-shift" method [15], is illustrated in Fig. 6.7b: one time-series is split into two segments at a randomly chosen time point, and a new surrogate time-series is generated by exchanging the temporal positions of the two segments.
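
The time-shift surrogate and a percentile-based significance threshold can be sketched in NumPy as follows. Assumptions: absolute Pearson correlation serves as the example FC measure (any measure taking two time-series could be passed instead), 500 surrogates, and a one-sided test at α = 0.05; the function names and the simulated signals are hypothetical.

```python
import numpy as np

def time_shift_surrogate(y, rng):
    """Split y at a random time point and exchange the two segments."""
    k = rng.integers(1, len(y))  # split point in 1..N-1
    return np.concatenate([y[k:], y[:k]])

def surrogate_test(x, y, fc_measure, n_surr=500, alpha=0.05, seed=0):
    """Return (observed FC, threshold, p-value) from a time-shift null distribution."""
    rng = np.random.default_rng(seed)
    observed = fc_measure(x, y)
    null = np.array([fc_measure(x, time_shift_surrogate(y, rng))
                     for _ in range(n_surr)])
    threshold = np.percentile(null, 100 * (1 - alpha))  # e.g., 95th percentile
    p_value = (np.sum(null >= observed) + 1) / (n_surr + 1)
    return observed, threshold, p_value

def abs_corr(a, b):
    # Example FC measure (an assumption): absolute Pearson correlation
    return abs(np.corrcoef(a, b)[0, 1])

rng = np.random.default_rng(4)
x = rng.standard_normal(2000)
y = x + rng.standard_normal(2000)   # coupled signals
obs, thr, p = surrogate_test(x, y, abs_corr)
print(obs > thr, p)
```

For strongly autocorrelated signals, split points very close to either end leave the surrogate nearly unshifted, so a minimum shift is often enforced in practice.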

Instead of shuffling in the time domain, surrogates can also be generated in the frequency domain, as shown in Fig. 6.7c [54]. Briefly, the procedure consists of a fast Fourier transform (FFT), randomization of the phases of the signal in the frequency domain, and an inverse FFT. The amplitude spectrum (and hence the autocorrelation function) is preserved, whereas any nonlinear structure, as well as any phase relationship with the other time-series, is destroyed [54].
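A minimal sketch of the frequency-domain procedure above, assuming NumPy and a real-valued time-series. It adds independent uniform random phases to every positive-frequency bin, the classic phase-randomization recipe [54]; permuting the existing phases across frequency bins would be another reading of "shuffling the phase" and is not shown. The function name `phase_randomized_surrogate` is hypothetical.

```python
import numpy as np

def phase_randomized_surrogate(y, rng):
    """Fourier phase-randomized surrogate of a real-valued time-series.

    Steps: FFT -> add independent random phases in [0, 2*pi) -> inverse FFT.
    The amplitude spectrum (hence the autocorrelation) is preserved; the
    phase information, including any phase coupling with another signal and
    any nonlinear structure, is destroyed.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    spectrum = np.fft.rfft(y)                     # non-negative frequencies only
    phases = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.size)
    phases[0] = 0.0                 # the DC bin must stay real (keeps the mean)
    if n % 2 == 0:
        phases[-1] = 0.0            # for even n the Nyquist bin must also stay real
    # Multiplying by unit-modulus factors changes phases but not amplitudes
    return np.fft.irfft(spectrum * np.exp(1j * phases), n=n)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = np.cumsum(rng.standard_normal(1024))      # strongly autocorrelated test signal
    s = phase_randomized_surrogate(y, rng)
    print(np.allclose(np.abs(np.fft.rfft(s)), np.abs(np.fft.rfft(y))))
```

Using `rfft`/`irfft` keeps the Hermitian symmetry of the spectrum implicit, so the surrogate is guaranteed to be real. To test an FC value, one would apply this to one of the two time-series only and recompute the FC measure for each surrogate.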

For event-related data with plenty of trials, shuffling the trial order of the second time-series provides an alternative way to generate surrogate data [30]. Figure 6.8 shows an example for phase-based FC metrics. In experimental recordings in which phase synchrony is expected, the phase differences between the two time-series are narrowly distributed across trials. In contrast, the phase differences computed from trial-shuffled surrogate data are widely and randomly distributed, and thus provide a null distribution of FC values. This method does not require prior assumptions about the time-series, such as linearity and stationarity. However, when the trial-to-trial variability of the phases is relatively low (e.g., when both time-series are strongly phase-locked to the event), the shuffled trials preserve much of the phase relationship, and the test can become so conservative that many genuine FC values are judged non-significant, resulting in a high false negative rate.
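The trial-shuffling procedure above can be sketched for the phase-locking value (PLV), assuming NumPy and that instantaneous phases have already been extracted (e.g., from band-pass filtered, Hilbert-transformed data, which is not shown). The function names and the simulated phases, which are independent across time points and therefore not realistic EEG, are illustrative assumptions.

```python
import numpy as np

def plv_over_trials(phase_x, phase_y):
    """Phase-locking value across trials at each time point.

    phase_x, phase_y: arrays of shape (n_trials, n_times) with instantaneous
    phases in radians. Returns |mean over trials of exp(i*(phase_x - phase_y))|,
    an array of shape (n_times,).
    """
    return np.abs(np.mean(np.exp(1j * (phase_x - phase_y)), axis=0))

def trial_shuffled_plv_null(phase_x, phase_y, n_surrogates, rng):
    """Null PLV distribution obtained by shuffling the trial order of the second signal."""
    n_trials, n_times = phase_x.shape
    null = np.empty((n_surrogates, n_times))
    for i in range(n_surrogates):
        order = rng.permutation(n_trials)   # pair trial j of x with trial order[j] of y
        null[i] = plv_over_trials(phase_x, phase_y[order])
    return null

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_trials, n_times = 100, 50
    # Simulated phases: x has random phases in every trial; y follows x with a
    # fixed lag of pi/4 plus jitter, so the two signals are phase-synchronized.
    phase_x = rng.uniform(0, 2 * np.pi, (n_trials, n_times))
    phase_y = phase_x - np.pi / 4 + 0.3 * rng.standard_normal((n_trials, n_times))
    observed = plv_over_trials(phase_x, phase_y)
    null = trial_shuffled_plv_null(phase_x, phase_y, 200, rng)
    threshold = np.quantile(null, 0.95, axis=0)   # upper 5% of the null, per time point
    print("fraction of significant time points:", np.mean(observed > threshold))
```

If the phases of both signals are instead nearly identical across trials (strong event-related phase locking), the same code yields a null distribution with high PLV values, which reproduces the conservativeness discussed above.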

Fig. 6.8 Generation of surrogate data by shuffling the trials of event-related data. Each thin grey line on a circle represents the phase difference between the two time-series at a given time point in a single trial. The thick black lines on the circles represent the vector sum of the phase differences over trials; their lengths indicate the phase synchronization strength.

7 Conclusions

In this review, we described the essential factors for successful FCA using multichannel EEG (and MEG) time-series. After illustrating the detailed procedure for the FCA, we presented various methods for quantifying functional connectivity. In particular, FC measures based on oscillatory interactions among neuronal populations were described comprehensively, owing to their importance for elucidating the coordinated activities of brain networks and synaptic plasticity. The problem of volume conduction and the means of diminishing its confounding effects on the FCA were thoroughly reviewed. Spatial filtering of the time-series measured on the scalp, or transformation to current densities on the cortical surface, both performed as preprocessing for the FCA, were described. We also reviewed ongoing efforts to develop FC measures that are inherently robust to the volume conduction problem. Finally, we illustrated procedures for determining the significance of FC between specific pairs of regions, which exploit surrogate data generation or the characteristics of event-related data. We hope that this review will provide guidelines for better application of the FCA and for the development of novel methods.

References

A.R. Anwar, M. Muthalib, S. Perrey et al., Effective connectivity of cortical sensorimotor networks during finger movement tasks: a simultaneous fNIRS, fMRI, EEG study. Brain Topogr. 29, 645–660 (2016)

F. Babiloni, F. Cincotti, C. Babiloni et al., Estimation of the cortical functional connectivity with the multimodal integration of high-resolution EEG and fMRI data by directed transfer function. Neuroimage 24, 118–131 (2004)

L.A. Baccalá, K. Sameshima, Partial directed coherence: a new concept in neural structure determination. Biol. Cybern. (2001). https://doi.org/10.1007/PL00007990

S. Baillet, J.C. Mosher, R.M. Leahy, Electromagnetic brain mapping. IEEE Signal Process. Mag. 18, 14–30 (2001). https://doi.org/10.1109/79.962275

E. Barzegaran, M.G. Knyazeva, Functional connectivity analysis in EEG source space: the choice of method. PLoS ONE 12, e0181105 (2017). https://doi.org/10.1371/journal.pone.0181105

A.M. Bastos, J.-M. Schoffelen, A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Front. Syst. Neurosci. 9, 175 (2016). https://doi.org/10.3389/fnsys.2015.00175

R.L. Buckner, F.M. Krienen, B.T.T. Yeo, Opportunities and limitations of intrinsic functional connectivity MRI. Nat. Neurosci. 16, 832–837 (2013). https://doi.org/10.1038/nn.3423

E. Bullmore, O. Sporns, Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198 (2009). https://doi.org/10.1038/nrn2575

G. Buzsáki, A. Draguhn, Neuronal oscillations in cortical networks. Science 304, 1926–1929 (2004)

R.T. Canolty, R.T. Knight, The functional role of cross-frequency coupling. Trends Cogn. Sci. 14, 506–515 (2010). https://doi.org/10.1016/j.tics.2010.09.001

L. Canuet, R. Ishii, R.D. Pascual-Marqui et al., Resting-state EEG source localization and functional connectivity in schizophrenia-like psychosis of epilepsy. PLoS ONE 6, e27863 (2011). https://doi.org/10.1371/journal.pone.0027863

J.W. Choi, K.S. Cha, J.D. Choi et al., Difficulty-related changes in inter-regional neural synchrony are dissociated between target and non-target processing. Brain Res. 1603, 114–123 (2015). https://doi.org/10.1016/j.brainres.2015.01.031

J.W. Choi, K.M. Jang, K.Y. Jung et al., Reduced theta-band power and phase synchrony during explicit verbal memory tasks in female, non-clinical individuals with schizotypal traits. PLoS ONE 11, 1–18 (2016). https://doi.org/10.1371/journal.pone.0148272

J.W. Choi, D. Ko, G.T. Lee et al., Reduced neural synchrony in patients with restless legs syndrome during a visual oddball task. PLoS ONE 7, 1–9 (2012). https://doi.org/10.1371/journal.pone.0042312

M.X. Cohen, Analyzing Neural Time Series Data: Theory and Practice (The MIT Press, Cambridge, Massachusetts, 2014)

P.W. Diaconis, D. Freedman, Consistency of Bayes estimates for nonparametric regression: normal theory. Bernoulli 4, 411–444 (1998)

K.J. Friston, Functional and effective connectivity: a review. Brain Connect. 1, 13–36 (2011). https://doi.org/10.1089/brain.2011.0008

G. Gómez-Herrero, M. Atienza, K. Egiazarian, J.L. Cantero, Measuring directional coupling between EEG sources. Neuroimage 43, 497–508 (2008). https://doi.org/10.1016/j.neuroimage.2008.07.032

C.W.J. Granger, Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438 (1969)

M. Hassan, O. Dufor, I. Merlet et al., EEG source connectivity analysis: from dense array recordings to brain networks. PLoS ONE 9, (2014). https://doi.org/10.5281/zenodo.10498

S. Haufe, Towards EEG Source Connectivity Analysis (Berlin Institute of Technology, Berlin, Germany, 2012)

S. Haufe, V.V. Nikulin, K.-R. Müller, G. Nolte, A critical assessment of connectivity measures for EEG data: a simulation study. Neuroimage 64, 120–133 (2013). https://doi.org/10.1016/j.neuroimage.2012.09.036

C.S. Herrmann, M.H.J. Munk, A.K. Engel, Cognitive functions of gamma-band activity: memory match and utilization. Trends Cogn. Sci. 8, 347–355 (2004). https://doi.org/10.1016/j.tics.2004.06.006

A.-S. Hincapié, J. Kujala, J. Mattout et al., The impact of MEG source reconstruction method on source-space connectivity estimation: a comparison between minimum-norm solution and beamforming. Neuroimage 156, 29–42 (2017). https://doi.org/10.1016/j.neuroimage.2017.04.038

J.F. Hipp, D.J. Hawellek, M. Corbetta et al., Large-scale cortical correlation structure of spontaneous oscillatory activity. Nat. Neurosci. (2012). https://doi.org/10.1038/nn.3101

R.M. Hutchison, T. Womelsdorf, E.A. Allen et al., Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage 80, 360–378 (2013). https://doi.org/10.1016/j.neuroimage.2013.05.079

M.J. Kaminski, K.J. Blinowska, A new method of the description of the information flow in the brain structures. Biol. Cybern. 65, 203–210 (1991)

J. Kayser, C.E. Tenke, Principal components analysis of Laplacian waveforms as a generic method for identifying ERP generator patterns: II. Adequacy of low-density estimates. Clin. Neurophysiol. 117, 369–380 (2006). https://doi.org/10.1016/j.clinph.2005.08.033

S. Khan, A. Gramfort, N.R. Shetty et al., Local and long-range functional connectivity is reduced in concert in autism spectrum disorders. Proc. Natl. Acad. Sci. U S A. 110, 3107–3112 (2013). https://doi.org/10.1073/pnas.1214533110

J.-P. Lachaux, E. Rodriguez, J. Martinerie, F.J. Varela, Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8, 194–208 (1999)

Y.-Y. Lee, S. Hsieh, Classifying different emotional states by means of EEG-based functional connectivity patterns. PLoS ONE 9, e95415 (2014). https://doi.org/10.1371/journal.pone.0095415

L. Marzetti, C. Del Gratta, G. Nolte, Understanding brain connectivity from EEG data by identifying systems composed of interacting sources. Neuroimage 42, 87–98 (2008). https://doi.org/10.1016/j.neuroimage.2008.04.250

C.M. Michel, M.M. Murray, G. Lantz et al., EEG source imaging. Clin. Neurophysiol. 115, 2195–2222 (2004). https://doi.org/10.1016/j.clinph.2004.06.001

T. Mima, T. Matsuoka, M. Hallett, Functional coupling of human right and left cortical motor areas demonstrated with partial coherence analysis. Neurosci. Lett. 287, 93–96 (2000)

W. Mumtaz, S. Saad, A. Ali et al., A machine learning framework involving EEG-based functional connectivity to diagnose major depressive disorder (MDD). Med. Biol. Eng. Comput. (2017). https://doi.org/10.1007/s11517-017-1685-z

G. Nolte, O. Bai, L. Wheaton et al., Identifying true brain interaction from EEG data using the imaginary part of coherency. Clin. Neurophysiol. 115, 2292–2307 (2004). https://doi.org/10.1016/j.clinph.2004.04.029

G. Nolte, A. Ziehe, V.V. Nikulin et al., Robustly estimating the flow direction of information in complex physical systems. Phys. Rev. Lett. 100, 234101 (2008). https://doi.org/10.1103/PhysRevLett.100.234101

P.L. Nunez, R. Srinivasan, A.F. Westdorp et al., EEG coherency I: statistics, reference electrode, volume conduction, Laplacians, cortical imaging, and interpretation at multiple scales. Electroencephalogr. Clin. Neurophysiol. 103, 499–515 (1997)

S. Palva, J.M. Palva, The role of local and large-scale neuronal synchronization in human cognition, in Multimodal Oscillation-Based Connectivity Theory, ed. by S. Palva (Springer International Publishing Switzerland, 2016), pp. 51–67

R.D. Pascual-Marqui, Review of methods for solving the EEG inverse problem. Int. J. Bioelectromagn. 1, 75–86 (1999)

R.D. Pascual-Marqui, C.M. Michel, D. Lehmann, Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 18, 49–65 (1994)

E. Pereda, R. Quian Quiroga, J. Bhattacharya, Nonlinear multivariate analysis of neurophysiological signals. Prog. Neurobiol. 77, 1–37 (2005). https://doi.org/10.1016/j.pneurobio.2005.10.003

F. Perrin, J. Pernier, O. Bertrand, J.F. Echallier, Spherical splines for scalp potential and current density mapping. Electroencephalogr. Clin. Neurophysiol. 72, 184–187 (1989). https://doi.org/10.1016/0013-4694(89)90180-6

V. Sakkalis, Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 41, 1110–1117 (2011). https://doi.org/10.1016/j.compbiomed.2011.06.020

P. Sauseng, W. Klimesch, W.R. Gruber, N. Birbaumer, Cross-frequency phase synchronization: a brain mechanism of memory matching and attention. Neuroimage 40, 308–317 (2008). https://doi.org/10.1016/j.neuroimage.2007.11.032

J.M. Schoffelen, J. Gross, Source connectivity analysis with MEG and EEG. Hum. Brain Mapp. 30, 1857–1865 (2009). https://doi.org/10.1002/hbm.20745

A.K. Seth, A.B. Barrett, L. Barnett, Granger causality analysis in neuroscience and neuroimaging. J. Neurosci. 35, 3293–3297 (2015). https://doi.org/10.1523/JNEUROSCI.4399-14.2015

C.E. Shannon, A mathematical theory of communication. Bell Syst. Tech. J. 27, 379–423 (1948). https://doi.org/10.1002/j.1538-7305.1948.tb01338.x

M. Siegel, T.H. Donner, A.K. Engel, Spectral fingerprints of large-scale neuronal interactions. Nat. Rev. Neurosci. 13, 121–134 (2012). https://doi.org/10.1038/nrn3137

W. Singer, Synchronization of cortical activity and its putative role in information processing and learning. Annu. Rev. Physiol. 55, 349–374 (1993)

C.J. Stam, W. De Haan, A. Daffertshofer et al., Graph theoretical analysis of magnetoencephalographic functional connectivity in Alzheimer’s disease. Brain 132, 213–224 (2009). https://doi.org/10.1093/brain/awn262

C.J. Stam, G. Nolte, A. Daffertshofer, Phase lag index: assessment of functional connectivity from multi channel EEG and MEG with diminished bias from common sources. Hum. Brain Mapp. 28, 1178–1193 (2007). https://doi.org/10.1002/hbm.20346

C.J. Stam, J.C. Reijneveld, Graph theoretical analysis of complex networks in the brain. Nonlinear Biomed. Phys. 1, 1–19 (2007). https://doi.org/10.1186/1753-4631-1-3

J. Theiler, S. Eubank, A. Longtin et al., Testing for nonlinearity in time series: the method of surrogate data. Phys. D 58, 77–94 (1992)

M.P. Van Den Heuvel, H.E. Hulshoff Pol, Exploring the brain network: a review on resting-state fMRI functional connectivity. Eur. Neuropsychopharmacol. 20, 519–534 (2010). https://doi.org/10.1016/j.euroneuro.2010.03.008

F. Varela, J.-P. Lachaux, E. Rodriguez, J. Martinerie, The brainweb: phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2, 229–239 (2001)

F. Vecchio, F. Miraglia, C. Marra et al., Human brain networks in cognitive decline: a graph theoretical analysis of cortical connectivity from EEG data. J. Alzheimer’s Dis. 41, 113–127 (2014). https://doi.org/10.3233/JAD-132087

M. Vinck, R. Oostenveld, M. Van Wingerden et al., An improved index of phase-synchronization for electrophysiological data in the presence of volume-conduction, noise and sample-size bias. Neuroimage 55, 1548–1565 (2011). https://doi.org/10.1016/j.neuroimage.2011.01.055

B. Voytek, M. D’Esposito, N. Crone, R.T. Knight, A method for event-related phase/amplitude coupling. Neuroimage 64, 416–424 (2013). https://doi.org/10.1016/j.neuroimage.2012.09.023

L.M. Ward, Synchronous neural oscillations and cognitive processes. Trends Cogn. Sci. 7, 553–559 (2003). https://doi.org/10.1016/j.tics.2003.10.012


© 2018 Springer Nature Singapore Pte Ltd.

Cite this chapter

Choi, J.W., Kim, K.H. (2018). Methods for Functional Connectivity Analysis. In: Im, CH. (eds) Computational EEG Analysis. Biological and Medical Physics, Biomedical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-13-0908-3_6

DOI: https://doi.org/10.1007/978-981-13-0908-3_6

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-0907-6

Online ISBN: 978-981-13-0908-3

eBook Packages: Physics and Astronomy (R0)