Abstract

Tax administrations increasingly use algorithms and automated decision-making systems for taxpayer profiling and risk assessment. While these tools offer better resource allocation and cost-efficiency, their use raises legal concerns under EU data protection and human rights legislation. Since 2017, the Polish National Revenue Administration has been using STIR, a data analytics tool that detects VAT fraud in near real time. STIR calculates a risk indicator for every entrepreneur on the basis of the entrepreneur's financial data. If an entrepreneur is considered at high risk of being involved in VAT fraud, the National Revenue Administration may impose severe administrative measures. From the taxpayer's perspective, STIR operates as a black box because the way in which the risk indicators are calculated is not disclosed to the general public. Although STIR’s effectiveness in fighting VAT fraud would be reduced if its algorithms were publicly known, it is questionable whether STIR complies with the principle of proportionality and the right to explanation, both of which are mandated under EU data protection regulation and human rights legislation.

A shorter version of this chapter was first published by Tax Analysts in Tax Notes International, volume 95, number 12 (16 September 2019).

Notes

- 1.

Algorithmic decisions may produce discriminatory results. As algorithms learn from observation data, if this data is biased, this will be picked up by the algorithm. For example, a recruiting tool developed by one large company tended to discriminate against women for technical jobs. The company’s hiring tool used artificial intelligence to give job candidates scores ranging from one to five. As most resumes came from men, the system taught itself that male candidates were preferable. It penalized resumes that included the word “women’s”. The discussion of biased algorithmic decisions is outside the scope of this chapter.

- 2.

In linear models, the outcome is calculated by multiplying the value of each factor by its relevant weight and then summing up all the results. Examples of linear models are logistic and linear regressions.
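The weighted sum described in this note can be sketched in a few lines of Python. This is a minimal illustration only: the factor names, weights, and the `linear_score` and `logistic` helpers are hypothetical, chosen for the example, and do not reflect how STIR calculates its risk indicator, which is not publicly disclosed.

```python
import math


def linear_score(factors, weights, intercept=0.0):
    """Multiply each factor value by its weight and sum the results."""
    return intercept + sum(weights[name] * value for name, value in factors.items())


def logistic(score):
    """Map a linear score to a value between 0 and 1 (logistic regression)."""
    return 1.0 / (1.0 + math.exp(-score))


# Hypothetical weights and factor values, invented for illustration.
weights = {"new_company": 1.5, "monthly_turnover_mln": 0.25, "years_active": -0.5}
factors = {"new_company": 1.0, "monthly_turnover_mln": 2.0, "years_active": 4.0}

score = linear_score(factors, weights)  # 1.5 + 0.5 - 2.0
probability = logistic(score)           # a score of zero maps to 0.5
print(score, probability)
```

Because every factor contributes through a single visible weight, the effect of each input on the outcome can be read directly from the model, which is why linear models are usually regarded as interpretable.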

- 3.

A decision tree is created by recursively segmenting a population into smaller and smaller groups.

- 4.

Neural networks are a set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns in data. Deep learning involves feeding a lot of data through multi-layered neural networks that classify the data based on the outputs from each successive layer.

- 5.

Ensemble models are large collections of individual models, each of which has been developed using a different set of data or algorithm. Each model makes predictions in a slightly different way. The ensemble combines all the individual predictions to arrive at one final prediction.
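As a rough sketch of how an ensemble combines individual predictions, the example below averages the outputs of three toy models. The three models, their thresholds, and the input fields are assumptions made up for illustration; real ensembles typically contain many more models, each trained on data rather than written by hand.

```python
from statistics import mean


# Three hypothetical individual models; each returns a risk score in [0, 1].
def growth_model(x):
    return 1.0 if x["turnover_growth"] > 3.0 else 0.0


def age_model(x):
    return 0.8 if x["years_active"] < 1 else 0.2


def refund_model(x):
    return min(1.0, x["refund_ratio"])


def ensemble_predict(models, x):
    """Combine the individual predictions into one final prediction by averaging."""
    return mean(model(x) for model in models)


models = [growth_model, age_model, refund_model]
taxpayer = {"turnover_growth": 4.0, "years_active": 0.5, "refund_ratio": 0.6}

final_score = ensemble_predict(models, taxpayer)  # average of 1.0, 0.8 and 0.6
print(final_score)
```

Averaging is only one way to combine predictions; majority voting or weighted schemes are also common. In each case the final score no longer traces back to a single rule, which is part of what makes ensembles harder to explain.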

- 6.

Google's DeepMind developed AlphaGo, a computer system powered by deep learning, to play the board game Go. Although AlphaGo made several moves that were evidently successful, its reasoning for making certain moves has been described as “inhuman” since no human could comprehend the decision-making rationale.

- 7.

Overfitting refers to a modeling error that occurs when a function corresponds too closely to a particular set of training data and does not generalize well on new unseen data.

- 8.

The VAT gap is the difference between expected VAT revenues and the VAT actually collected. It provides an estimate of revenue lost to tax fraud, tax evasion and tax avoidance, but also to bankruptcies, financial insolvencies and miscalculations.
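The definition above amounts to a simple subtraction, sketched below. The revenue figures are invented for illustration, and expressing the gap as a share of expected revenue is a common reporting convention assumed here rather than taken from this chapter.

```python
def vat_gap(expected_revenue, collected_revenue):
    """Return the VAT gap in absolute terms and as a share of expected revenue."""
    gap = expected_revenue - collected_revenue
    share = gap / expected_revenue
    return gap, share


# Hypothetical figures in billions of a currency unit.
gap, share = vat_gap(expected_revenue=200.0, collected_revenue=180.0)
print(f"gap = {gap:.1f}, share of expected revenue = {share:.1%}")
```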

- 9.

SAF-T (Standard Audit File for Tax) is a standardized electronic file that the OECD developed in 2005 to facilitate the electronic exchange of tax and accounting information between taxpayers and tax authorities. As the trend toward digitalizing tax compliance progressed, the OECD’s SAF-T idea received increasing attention across the globe. Many EU countries have implemented their own versions of the SAF-T file, which deviate significantly from the OECD standard.

- 10.

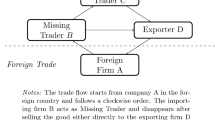

Carousel fraud is a common form of missing trader fraud where fraudsters exploit the VAT rules providing that the movement of goods between Member States is VAT-free. In carousel fraud, VAT and goods are passed around between companies and jurisdictions, similar to how a carousel revolves.

- 11.

Act of 24 November 2017 on Preventing the Use of the Financial Sector for VAT fraud (Ustawa z dnia 24 listopada 2017 r. o zmianie niektórych ustaw w celu przeciwdziałania wykorzystywaniu sektora finansowego do wyłudzeń skarbowych).

- 12.

The Clearing House is an entity of the Polish payment system infrastructure. It is responsible for ensuring complete and reliable interbank clearing in PLN and EUR, providing services supporting cashless payments, providing common services and R&D for the Polish banking sector, and providing services supporting participation of the banking sector in the programs for public administration.

- 13.

In 2019, the authorization to impose administrative measures was extended to the heads of tax and customs offices and directors of the regional chambers of the revenue administration.

- 14.

National Revenue Administration 2019.

- 15.

National Revenue Administration 2020.

- 16.

Other circumstances in which personal data processing is lawful without an individual’s consent include, for example, (1) processing that is necessary for the performance of a contract to which the individual is party or in order to take steps at the request of the individual prior to entering into a contract; or (2) processing that is necessary for the performance of a task carried out in the public interest.

- 17.

Wallace 2017.

- 18.

Article 29 Data Protection Working Party 2018.

- 19.

ECHR 2019.

- 20.

ECHR, 24 April 2007, Matyjek v. Poland [2007] ECHR 317; ECHR, 9 October 2008, Moiseyev v. Russia, [2008] ECHR 1031.

- 21.

ECHR, 22 June 2000, Coëme and Others v. Belgium, [2000] ECHR 250.

- 22.

ECHR, 25 July 2000, Mattoccia v. Italy, [2000] ECHR 383.

- 23.

CJEU, 17 December 2015, WebMindLicenses Kft. v. Nemzeti Adó- és Vámhivatal Kiemelt Adó- és Vám Főigazgatóság, C-419/14.

References

Article 29 Data Protection Working Party (2018) Guidelines on Automated Individual Decision-Making and Profiling for the Purposes of Regulation 2016/679. Adopted on 3 October 2017. As last Revised and Adopted on 6 February 2018. https://ec.europa.eu/newsroom/article29/items/612053 Accessed 28 June 2021.

ECHR (2019) Guide on Article 6 of the European Convention on Human Rights, Right to a fair trial (criminal limb). https://www.echr.coe.int/documents/guide_art_6_criminal_eng.pdf Accessed 28 June 2021.

National Revenue Administration (2019) 2018 Annual Report on Preventing the Use of Banks and Credit Unions for VAT fraud purposes (Sprawozdanie za 2018 rok w zakresie przeciwdziałania wykorzystywaniu działalności banków i spółdzielczych kas oszczędnościowo-kredytowych do celów mających związek z wyłudzeniami).

National Revenue Administration (2020) 2019 Annual Report on Preventing the Use of Banks and Credit Unions for VAT fraud purposes (Sprawozdanie za 2019 rok w zakresie przeciwdziałania wykorzystywaniu działalności banków i spółdzielczych kas oszczędnościowo-kredytowych do celów mających związek z wyłudzeniami).

Wallace N (2017) EU's Right to Explanation: A Harmful Restriction on Artificial Intelligence. https://www.techzone360.com/topics/techzone/articles/2017/01/25/429101-eus-right-explanation-harmful-restriction-artificial-intelligence.htm Accessed 28 June 2021.

Copyright information

© 2022 T.M.C. Asser Press and the authors

About this chapter

Cite this chapter

Bal, A. (2022). Black-Box Models as a Tool to Fight VAT Fraud. In: Custers, B., Fosch-Villaronga, E. (eds) Law and Artificial Intelligence. Information Technology and Law Series, vol 35. T.M.C. Asser Press, The Hague. https://doi.org/10.1007/978-94-6265-523-2_12

DOI: https://doi.org/10.1007/978-94-6265-523-2_12

Publisher Name: T.M.C. Asser Press, The Hague

Print ISBN: 978-94-6265-522-5

Online ISBN: 978-94-6265-523-2

eBook Packages: Law and Criminology (R0)