Abstract

In his logic of action, Krister Segerberg has provided many insights about how to formalize actions. In this chapter I consider these insights critically, concluding that any formalization of action needs to be thoroughly connected to the relevant reasoning, and in particular to temporal reasoning and planning in realistic contexts. This consideration reveals that Segerberg’s ideas are limited in several fundamental ways. To a large extent, these limitations have been overcome by research that has been carried out for many years in Artificial Intelligence.

1 Introduction

The philosophy of action is an active area of philosophy, in its modern form largely inspired by [1]. It considers issues such as (1) the ontology of agents, agency, and actions, (2) the difference (if any) between actions and bodily movements, (3) whether there are such things as basic actions, (4) the nature of intention, and (5) whether intentions are causes. Many of these issues have antecedents in earlier philosophical work, and for the most part the recent literature treats them using traditional philosophical methods.

These questions have been pretty thoroughly thrashed out over the last fifty years. Whether or not the discussion has reached a point of diminishing returns, it might be helpful at this point to have a source of new ideas. It would be especially useful to have an independent theory of actions that informs and structures the issues in much the same way that logical theories based on possible worlds have influenced philosophical work in metaphysics and philosophy of language. In other words, it might be useful to have a logic of action.

But where to find it? A logic of action is not readily available, for instance, in the logical toolkit of possible worlds semantics. Although sentences expressing the performance of actions, like Sam crossed the street, correspond to propositions and can be modeled as sets of possible worlds, and verb phrases like cook a turkey can be modeled as functions from individuals to propositions, this does not take us very far. Even if it provides a compositional semantics for a language capable of talking about actions, it doesn’t distinguish between Sam crossed the street and Judy is intelligent, or between cook a turkey and ought to go home. As far as possible worlds semantics goes, both sentences correspond to propositions, and both verb phrases to functions from individuals to propositions. But only one of the sentences involves an action and only one of the verb phrases expresses an action.

Krister Segerberg’s approach to action differs from contemporary work in the philosophy of action in seeking to begin with logical foundations. And it differs from much contemporary work in philosophical logic by drawing on dynamic logic as a source of ideas.Footnote 1 The computational turn makes a lot of sense, for at least two reasons. First, as we noted, traditional philosophical logic doesn’t seem to provide tools that are well adapted to modeling action. Theoretical computer science represents a relatively recent branch of logic, inspired by a new application area, and we can look to it for innovations that may be of philosophical value. Second, computer programs are instructions, and so are directed towards action; rather than being true or false, a computer program is executed. A logical theory of programs could well serve to provide conceptual rigor as well as new insights to the philosophy of action.

2 Ingredients of Segerberg’s Approach

There are two sides to Segerberg’s approach to action: logical and informal. The logical side borrows ideas from dynamic logic.Footnote 2 The informal side centers around the concept of a routine, a way of accomplishing something. Routines figure in computer science, where they are also known as procedures or subroutines, but Segerberg understands them in a more everyday setting; in [23], he illustrates them with culinary examples, in [17] with the options available to his washing machine. Cookbook recipes are routines, as are techniques for processing food. Just as computer routines can be strung together to make complex programs, everyday routines can be combined to make complex plans.

It is natural to invoke routines in characterizing other concepts that are important in practical reasoning: consider agency, ends, intentions, and ability. Agency is the capacity to perform routines. Ends are desired ways for the future to be (propositions about the future) that can be realized by the performance of a routine. An agent may choose to perform a routine in order to achieve an end; such a determination constitutes an intention. When circumstances allow the execution of a routine by an agent, the agent is able to perform the routine. These connections drive home the theoretical centrality of routines.

Dynamic logic provides a logical theory of program executions, using this to interpret programming languages. It delivers methods of interpreting languages with complex imperative constructions as well as models of routines, and so is a very natural place to look for ideas that might be useful in theorizing about action.

3 Computation and Action

To evaluate the potentiality of adapting dynamic logic to this broader application, it’s useful to begin with the computational setting that motivated the logic in the first place.

3.1 The Logic of Computation

Digital computers can be mathematically modeled as Turing machines. These machines manipulate the symbols on an infinite tape. We can assume that there are only two symbols on a tape, ‘0’ and ‘1’, and we insist that at each stage of a computation, the tape displays only a finite number of ‘1’s. The machine can change the tape, but these changes are local, and in fact can only occur at a single position of the tape. (This position can be moved as the program is executed, allowing the machine to scan its tape in a series of sequential operations.)

Associated with each Turing machine is a finite set of “internal states:” you can think of these as dispositions to behave in certain ways. Instructions in primitive “Turing machine language” prescribe simple operations, conditional on the symbol that is currently read and the internal state. Each instruction tells the machine which internal state to assume, and whether (1) to move the designated read-write position to the right, (2) to move it to the left, or (3) to rewrite the current symbol, without moving left or right.

This model provides a clear picture of the computing agent’s computational states: such a state is determined by three things: (1) the internal state, (2) the symbols written on the tape, and (3) the read-write position. If we wish to generalize this picture, we could allow the machine to flip a coin at each step and to consult the result of the coin flip in deciding what to do. In this nondeterministic case, the outcome of a step would in general depend on the observed result of the randomizing device.

In any case, although the Turing machine model can be applied to the performance of physically realized computers, Turing machine states are not the same as the physical states of a working computer. For one thing, the memory of every realized computer is bounded. For another, a working computer can fail for physical reasons.

A Turing machine computation will traverse a series of states, producing a path beginning with the initial state and continuing: either infinitely, in case the computation doesn’t halt, or to a final halting state. For deterministic machines, the computation is linear. Nondeterministic computations may branch, and can be represented by trees rooted in the initial state.
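The picture of a computation as a path of states can be made concrete with a small simulator. The following Python sketch is only illustrative: the encoding of a configuration as a triple (internal state, set of positions holding ‘1’, read-write position), the operation codes "L", "R", "0", "1", and the sample program (which appends a ‘1’ to a block of ‘1’s) are all assumptions introduced here, not part of the original discussion.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

# A configuration: (internal state, positions holding '1', read-write position).
# Every tape position not listed holds '0', so only finitely many '1's appear.
Config = Tuple[str, FrozenSet[int], int]
# (internal state, symbol read) -> (new internal state, operation)
Program = Dict[Tuple[str, str], Tuple[str, str]]


def step(config: Config, program: Program) -> Optional[Config]:
    """Apply one instruction; return None when no instruction applies (halt)."""
    state, ones, head = config
    symbol = "1" if head in ones else "0"
    if (state, symbol) not in program:
        return None
    new_state, op = program[(state, symbol)]
    if op == "R":                      # move the read-write position right
        return (new_state, ones, head + 1)
    if op == "L":                      # move it left
        return (new_state, ones, head - 1)
    if op == "1":                      # rewrite the current symbol as '1'
        return (new_state, ones | {head}, head)
    return (new_state, ones - {head}, head)  # rewrite it as '0'


def run(config: Config, program: Program, max_steps: int = 100) -> List[Config]:
    """Return the path of configurations, cut off after max_steps."""
    path = [config]
    for _ in range(max_steps):
        nxt = step(path[-1], program)
        if nxt is None:
            break
        path.append(nxt)
    return path


# Hypothetical sample program: scan right over '1's, write '1' on the first '0'.
APPEND_ONE: Program = {
    ("scan", "1"): ("scan", "R"),
    ("scan", "0"): ("done", "1"),
}

path = run(("scan", frozenset({0, 1, 2}), 0), APPEND_ONE)
for config in path:
    print(config)
```

Because the sample machine is deterministic, the result is a single linear path; a nondeterministic variant would have `step` return a list of successor configurations, yielding a tree.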

In dynamic models, computation trees are used to model programs. For instance, consider the program consisting of the two lines in Fig. 1.Footnote 3

The first instruction binds the variable \(x\) to 1. The second nondeterministically binds \(y\) either to 0 or to 1. Let the initial state be \(\sigma \), and let \(\tau [x:n]\) be the result of changing the value of \(x\) in \(\tau \) to \(n\). Then the program is represented by the computation tree shown in Fig. 2.
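The branching described here can be enumerated mechanically. The sketch below is a minimal Python illustration under stated assumptions: states are dictionaries from variable names to values, the initial values chosen for \(\sigma\) are arbitrary, and the function names (`rebind`, `bind_x`, `choose_y`, `execution_paths`) are hypothetical.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
# An instruction maps a state to the list of its possible successor states.
Instruction = Callable[[State], List[State]]


def rebind(state: State, var: str, value: int) -> State:
    """Return tau[var:value]: a copy of the state with var rebound to value."""
    new_state = dict(state)
    new_state[var] = value
    return new_state


def bind_x(state: State) -> List[State]:
    """Deterministic instruction: bind x to 1."""
    return [rebind(state, "x", 1)]


def choose_y(state: State) -> List[State]:
    """Nondeterministic instruction: bind y to 0 or to 1."""
    return [rebind(state, "y", 0), rebind(state, "y", 1)]


def execution_paths(initial: State, program: List[Instruction]) -> List[List[State]]:
    """Enumerate every root-to-leaf path of the computation tree."""
    paths = [[initial]]
    for instruction in program:
        paths = [path + [nxt] for path in paths for nxt in instruction(path[-1])]
    return paths


sigma: State = {"x": 0, "y": 0}   # assumed initial state
paths = execution_paths(sigma, [bind_x, choose_y])
for path in paths:
    print(path)
```

Both paths share the prefix \(\sigma ,\sigma [x:1]\) and differ only in the final state, which is the branching that the computation tree records.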

3.2 Agents, Routines, and Actions

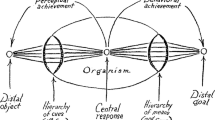

Transferring this model of programs from a computational setting to the broader arena of humanlike agents and their actions, Segerberg’s idea is to use such trees to model routines, as executed by deliberating agents acting in the world. The states that figure in the “execution trees” will now be global states, consisting not only of the cognitive state of the agent, but also of relevant parts of the environment in which the routine is performed. And rather than computation trees, Segerberg uses a slightly more general representation: sets of sequences of states.Footnote 4 (See [21], p. 77.)

For Segerberg, routines are closely connected to actions: in acting, an agent executes a chosen routine. Some of Segerberg’s works discuss actions with this background in mind but without explicitly modeling them. The works that provide explicit models of action differ, although the variations reflect differences in the theoretical context and purposes, and aren’t important for our purposes. Here, I’ll concentrate on the account in [31].

If a routine is nondeterministic, the outcome when it is executed will depend on other concurrent events, and perhaps on chance as well. But—since the paths in a set \(S\) representing a routine include all the transitions that are compatible with running the routine—the outcome, whatever it is, must be a member of \(S\). Therefore, a realized action would consist of a pair \(\langle S,p \rangle \) where \(S\) is a routine, i.e. a set of state-paths, and \(p\in S\). In settings where there is more than one agent, we also need to designate the agent. This leads us to the idea of ([31], p. 176), where an individual action is defined as a triple \(\langle i,S,p \rangle \), where \(i\) is an agent, \(S\) is a set of state-paths, and \(p\in S\). I’ll confine myself in what follows to the single-agent case, identifying a realized action with a pair \(\langle S,p \rangle \).

Consider one of Segerberg’s favorite examples of an action: someone throws a dart and hits the bullseye. An ordinary darts player does this by executing his current, learned routine for aiming and throwing a dart. When the action is executed, chance and nature combine with the routine to determine the outcome. Thus, execution of the same routine (which could be called “trying to hit the bullseye with dart \(d\)”) might or might not constitute a realized action of hitting the bullseye.
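The identification of a realized action with a pair \(\langle S,p \rangle \), \(p\in S\), can be sketched in a few lines of Python. This is an illustration only: states are reduced to string labels, and the three paths in the darts routine, like the names `RealizedAction` and `hits_bullseye`, are invented for the example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Path = Tuple[str, ...]  # a state-path; states are reduced to labels here


@dataclass(frozen=True)
class RealizedAction:
    """A pair <S, p>: a routine S (a set of state-paths) and a path p in S."""
    routine: FrozenSet[Path]
    path: Path

    def __post_init__(self) -> None:
        if self.path not in self.routine:
            raise ValueError("the realized path must belong to the routine")


# Hypothetical routine "trying to hit the bullseye": all outcomes compatible
# with running it, whatever chance and nature contribute.
TRY_BULLSEYE: FrozenSet[Path] = frozenset({
    ("aiming", "released", "bullseye"),
    ("aiming", "released", "outer_ring"),
    ("aiming", "released", "missed_board"),
})


def hits_bullseye(action: RealizedAction) -> bool:
    """The realized action counts as hitting the bullseye if its path ends there."""
    return action.path[-1] == "bullseye"


hit = RealizedAction(TRY_BULLSEYE, ("aiming", "released", "bullseye"))
miss = RealizedAction(TRY_BULLSEYE, ("aiming", "released", "outer_ring"))
print(hits_bullseye(hit), hits_bullseye(miss))
```

The two realized actions share the same routine and differ only in the path, which is how the model separates trying to achieve an outcome from achieving it.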

This idea has ontological advantages. It certainly tells us everything that can be said about what would happen if a routine is run. It clarifies in a useful way the distinction between trying to achieve an outcome and achieving it. It can be helpful in thinking about certain philosophical questions—for instance, questions about how actions are individuated. (More about this below, in Sect. 9.) And it makes available the theoretical apparatus of dynamic logic. But it is not entirely unproblematic, and I don’t think that Segerberg has provided a solution to the problem of modeling action that is entirely definitive. I will mention three considerations.

Epistemology. The idea has problems on the epistemological side; it is not so clear how an agent can learn or compute routines, if routines are constituted by global states. Whether my hall light will turn on, for instance, when I flip the switch will depend (among other things) on details concerning the power supply, the switch, the wiring to the bulb, and the bulb itself. Causal laws involving electronics, and initial conditions concerning the house wiring, among other things, will determine what paths belong to the routine for turning on the light.

While I may need causal knowledge of some of these things to diagnose the problem if something goes wrong when I flip the switch, knowing how to do something and how to recover from a failure are different things. I don’t have to know about circuit breakers, for instance, in order to know how to turn on the light.

Perhaps such epistemological problems are similar to those to which possible worlds models in general fall prey, and are not peculiar to Segerberg’s theory of action.

Fit to common sense. Our intuitions about what counts as an action arise in common sense and are, to some extent, encoded in how we talk about actions. And it seems that we don’t think and talk about inadvertence in the way that Segerberg’s theory would lead us to expect. Suppose, for instance, that in the course of executing a routine for getting off a bus I step on a woman’s foot. In cases like this, common sense tells us that I stepped on her foot. I have done something here that I’m responsible for, and that at the very least I should apologize for. If I need to excuse the action, I’d say that I didn’t mean to do it, not that I didn’t do it. But Segerberg’s account doesn’t fit this sort of action; I have not in any sense executed a routine for stepping on the woman’s foot.Footnote 5

Logical flexibility and simplicity. A contemporary logic of action, to be at all successful, must go beyond illuminating selected philosophical examples. Logics of action are used nowadays to formalize realistic planning domains, to provide knowledge representation support for automated planning systems.

Formalizing these domains requires an axiomatization of what Segerberg called the change function, which tells us what to expect when an action is performed. In general, these axiomatizations require quantifying over actions. See, for instance, [3, 14, 34]. Most of the existing formalisms treat actions as individuals. Segerberg’s languages don’t provide for quantification over actions, and his reification of actions suggests a second-order formalization.

As far as I can see, a second-order logic of actions is not ruled out by the practical constraints imposed by knowledge representation, but it might well be more difficult to work with than a first-order logic. In any case, first-order theories of action have been worked out in detail and used successfully for knowledge representation purposes. Second-order theories haven’t.

For philosophical and linguistic purposes, I myself would prefer to think of actions, and other eventualities, as individuals. Attributes can then be associated with actions as needed. In a language (for instance, a high-level control language for robot effectors) that accommodates motor routines, nothing would prevent us from explicitly associating such routines with certain actions. But for many planning purposes, this doesn’t turn out to be important. We remain more flexible if we don’t insist on reifying actions as routines.

3.3 From Computation to Agency

Segerberg’s approach is problematic in more foundational respects. The assumption that computational states can be generalized to causally embedded agents who must execute their routines in the natural world is controversial, and needs to be examined critically.

It is not as if the thesis that humans undergo cognitive states can be disproved; the question is whether the separation of cognitive from physical states is appropriate or useful in accounting for action. This specific problem in logical modeling, by the way, is related to the much broader and more vague mind-body problem in philosophy.

In (nondeterministic) Turing machines, an exogenous variable—the “oracle”—represents the environment. This variable could in principle dominate the computation, but in cases where dynamic logic is at all useful, its role has to be severely limited. Dynamic logic is primarily used for program verification, providing a method of proving that a program will not lead to undesirable results.Footnote 6 For this to be possible, the execution of the program has to be reasonably predictable. This is why software designed to interact strongly with an unpredictable environment has to be tested empirically. Dynamic logic is useful because many programs are designed either not to interact at all with the environment or to interact with it in very limited and predictable ways. For this reason, program verification is not very helpful in robotics, and we have to wonder whether the sorts of models associated with dynamic logic will be appropriate.

Humans (and robots) are causally entangled in their environments, interacting with them through a chain of subsystems which, at the motor and sensory interfaces, is thoroughly intertwined. When I am carving a piece of wood, it may be most useful to regard the hand (including its motor control), the knife, and the wood as a single system, and difficult to do useful causal modeling with any of its subsystems. In such cases, the notion of a cognitive state may not be helpful. Indeed, global states in these cases (whether they are cognitive, physical, or combinations of the two) will be too complex to be workable in dealing even with relatively simple realistic applications.

Take Segerberg’s darts example, for instance. Finding a plausible level of analysis of the agent at which there is a series of states corresponding to the routine of trying to hit the bullseye is problematic. Perhaps each time I throw a dart I think to myself “I am trying to hit the bullseye,” but the part of the activity that I can put in words is negligible. I let my body take over.

Doubtless, each time I throw a dart there are measurable differences in the pressure of my fingers, the angle of rotation of my arm, and many other properties of the physical system that is manipulating the dart. Almost certainly, these correspond to differences in the neural motor systems that control these movements. At some level, I may be going through the same cognitive operations, but few of these are conscious and it is futile to describe them. To say that there is a routine here, in the sense that Segerberg intends, is a matter of faith, and postulating a routine may be of little value in accounting for the human enterprise of dart-throwing.Footnote 7 The idea might be more helpful in the case of an expert piano player and a well-rehearsed phrase from a sonata, because the instrument is, at least in part, discrete. It is undoubtedly helpful in the case of a chess master and a standard chess opening.

Of course, human-like agents often find offline, reflective planning to be indispensable. Imagine, for instance, preparing for a trip. Without advance planning, the experience is likely to be a disaster. It is still uncertain how useful reflective planning will prove to be in robotics, but a significant part of the robotics community firmly believes in cognitive robotics; see [8, 14].

Reflective planning is possible in the travel example because at an appropriate level of abstraction many features of a contemplated trip are predictable and the number of relevant variables is manageable. For instance, a traveler can be reasonably confident that if she shows up on time at an airport with a ticket and appropriate identification, then she will get to her destination. The states in such plans will represent selected aspects of stages of the contemplated trip (such as location, items of luggage, hotel and travel reservations). It is in these opportunistic, ad hoc, scaled-down planning domains that the notion of a state can be useful, and here the sort of models that are found in dynamic logic make good sense.

But the example of planning a trip illustrates an important difference between the states that are important in practical deliberation and the states that are typically used in dynamic logic. In dynamic logic, execution is computation. The model in view is that of a Turing machine with no or very limited interaction with an exogenous environment, and the programs that one wants to verify will stick, for the most part, to information processing. In this case, executions can be viewed as successions of cognitive states. In planning applications, however, the agent is hypothetically manipulating the external environment. Therefore, states are stages of the external world, including the initial state and ones that can be reached from it by performing a series of actions. I want to emphasize again that these states will be created ad hoc for specific planning purposes and typically will involve only a small number of variables.

With the changes in the notion of a state that are imposed by these considerations, I believe that Segerberg’s ideas have great value in clarifying issues in practical reasoning. As I will indicate later, when the ideas are framed in this way, they are very similar to themes that have been independently developed by Artificial Intelligence researchers concerned with planning and rational agency.

Segerberg’s approach illuminates at least one area that is left obscure by the usual formalizations of deliberation found in AI. It provides a way of thinking about attempting to achieve a goal. For Segerberg, attempts—cases where an agent runs a routine which aims at achieving a goal—are the basic performances. The theories usually adopted in AI take successful performances as basic and try to build conditions for success into the causal axioms for actions. These methods support reasoning about the performance of actions, but not reasoning about the performance of attempts, and so they don’t provide very natural ways to formalize the diagnosis of failed attempts. (However, see [11].)

3.4 Psychological Considerations

Routines have a psychological dimension, and it isn’t surprising that they are important, under various guises, in cognitive psychology. It is well known, for instance, that massive knowledge of routines, and of the conditions for exploiting them, characterizes human expertise across a wide range of domains. See, for instance, the discussion of chess expertise in [35].

And routines are central components of cognitive architectures, that is, systematic, principled simulations of general human intelligence. In the Soar architecture,Footnote 8 for instance, where they are known as productions or chunks, they are the fundamental mechanism of cognition. They are the units that are learned and remembered, and cognition consists of their activation in working memory.

Of course, as realized in a cognitive architecture like Soar, routines are not modeled as transformations on states, but are symbolic structures that are remembered and activated by the thinking agent. That is, they are more like programs than like dynamic models of programs.

This suggests a solution to the epistemological problems alluded to above, in Sect. 3.2. Humans don’t learn or remember routines directly, but learn and remember representations of them.

4 Deliberation

Logic is supposed to have something to do with reasoning, and hopefully a logic of action would illuminate practical reasoning. In [23], Segerberg turns to this topic. This is one of the very few places in the literature concerning action and practical reasoning where a philosopher actually examines a multiple-step example of practical reasoning, with attention to the reasoning that is involved. That he does so is very much to Segerberg’s credit, and to the credit of the tradition, due to von Wright, in which he works.

Segerberg’s example is inspired by Aristotle. He imagines an ancient Greek doctor deliberating about a patient. The reasoning goes like this.

1. My goal is to make this patient healthy.
2. The only way to do that is to balance his humors.
3. There are only two ways to balance the patient’s humors: (1) to warm him, and (2) to administer a potion.
4. I can think of only two ways to warm the patient: (1.1) a hot bath, and (1.2) rubbing.

The example is a specimen of means-end reasoning, leading from a top-level goal (Step 1), through an examination of means to an eventual intention. (This last step is not explicit in the reasoning presented here.) For Segerberg, goals are propositions; the overall aim of the planning exercise in this example is a state of the environment in which the proposition This patient is healthy is true.

This reasoning consists of iterated subgoaling—of provisionally adopting goals, which, if achieved, will realize higher-level goals. A reasoning path terminates either when the agent has an immediate routine to realize the current goal, or can think of no such routine.Footnote 9 As alternative subgoals branch from the top-level goal, a tree is formed. The paths of the tree terminating in subgoals that the agent can achieve with a known routine provide the alternative candidates for practical adoption by the agent. To arrive at an intention, the agent will need to have preferences over these alternatives.

According to this model of deliberation, an agent is equipped at the outset with a fixed set of routines, along with an achievement relation between routines and propositions, and with knowledge of a realization relation between propositions. Deliberation towards a goal proposition \({\mathbf{p}_0}\) produces a set of sequences of the form \(\mathbf{p}_0,\ldots ,\mathbf{p}_n\), such that \(\mathbf{p}_{i+1}\) realizes \(\mathbf{p}_i\) for all \(i\), \(0\le i <n\), and the agent has a routine that achieves \(\mathbf{p}_n\).
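This model of deliberation can be sketched as a simple enumeration of subgoal chains. The Python below is a minimal illustration using the doctor example. The dictionary `REALIZED_BY` and the set `HAS_ROUTINE` are hypothetical encodings of the realization and achievement relations, and the assumption that the doctor has routines for the bath, the rubbing, and the potion is made here only for the sake of the example. The sketch also assumes that the realization relation has no cycles.

```python
from typing import Dict, List, Set

# REALIZED_BY[p] lists the propositions that would realize p (assumed encoding).
REALIZED_BY: Dict[str, List[str]] = {
    "patient is healthy": ["humors are balanced"],
    "humors are balanced": ["patient is warmed", "potion is administered"],
    "patient is warmed": ["patient has a hot bath", "patient is rubbed"],
}

# Propositions the agent can achieve with a known routine (assumed for illustration).
HAS_ROUTINE: Set[str] = {
    "potion is administered",
    "patient has a hot bath",
    "patient is rubbed",
}


def deliberate(goal: str) -> List[List[str]]:
    """Return all chains p_0, ..., p_n with p_{i+1} realizing p_i and a routine for p_n."""
    chains: List[List[str]] = []

    def extend(chain: List[str]) -> None:
        current = chain[-1]
        if current in HAS_ROUTINE:
            chains.append(chain)      # the path ends: a routine is at hand
            return
        for subgoal in REALIZED_BY.get(current, []):
            extend(chain + [subgoal])  # dead ends simply yield no chain

    extend([goal])
    return chains


chains = deliberate("patient is healthy")
for chain in chains:
    print(" <- ".join(chain))
```

Note that the set of routines is fixed before `deliberate` is called and nothing in the procedure can add to it.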

This model doesn’t seem to fit many planning problems. The difficulty is that agents often are faced with practical problems calling for routines, and deliberate in order to solve these problems. We can’t assume, then, that the routines available to an agent remain fixed through the course of a deliberation. This leads to my first critical point about Segerberg’s model of practical reasoning.

4.1 The Need to Plan Routines

The difficulty can be illustrated with simple blocks-world planning problems of the sort that are often used to motivate AI theories of planning. In the blocks-world domain, states consist of configurations of a finite set of blocks, arranged in stacks on a table. A block is clear in state \(s\) if there is no block on it in \(s\). Associated with this domain are certain primitive actions.Footnote 10 If \(b\) is a block, then moving \(b\) to the table is a primitive action, and if \(b_1\) and \(b_2\) are blocks, then putting \(b_1\) on \(b_2\) is a primitive action. Moving \(b\) to the table can be performed in a state \(s\) if and only if \(b\) is clear in \(s\), and results in a state in which \(b\) is on the table. Putting \(b_1\) on \(b_2\) can be performed in a state \(s\) if and only if \(b_1\not =b_2\) and both \(b_1\) and \(b_2\) are clear in \(s\), and results in a state in which \(b_1\) is on \(b_2\).Footnote 11
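The preconditions and effects of the two primitive actions can be written down directly. The following Python sketch is illustrative only: the function names `to_table` and `put_on` are hypothetical labels for the two actions, and the representation of a state as a mapping from each block to what it rests on is an assumption made here.

```python
from typing import Dict, Optional

TABLE = "table"
State = Dict[str, str]  # block -> what it rests on (another block or TABLE)


def is_clear(block: str, state: State) -> bool:
    """A block is clear if no block rests on it."""
    return all(support != block for support in state.values())


def to_table(block: str, state: State) -> Optional[State]:
    """Move a clear block to the table; return None if the action cannot be performed."""
    if not is_clear(block, state):
        return None
    return {**state, block: TABLE}


def put_on(b1: str, b2: str, state: State) -> Optional[State]:
    """Put b1 on b2; requires b1 != b2 and both blocks clear."""
    if b1 == b2 or not is_clear(b1, state) or not is_clear(b2, state):
        return None
    return {**state, b1: b2}


# Example state: A sits on B; B and C are on the table.
s: State = {"A": "B", "B": TABLE, "C": TABLE}
print(to_table("A", s))     # A is clear, so it can be moved to the table
print(to_table("B", s))     # B is not clear, so the action is not performable
print(put_on("C", "A", s))  # both C and A are clear
```

Each function returns a new state rather than modifying its argument, so earlier states remain available for comparison.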

Crucially, a solution to the planning problem in this domain has to deliver a sequence of actions, and (unless the agent has a ready-made multi-step routine to achieve its blocks-world goal) it will be necessary to deal with temporal sequences of actions in the course of deliberation. Segerberg’s model doesn’t provide for this. According to that model, routines might involve sequences of actions, but deliberation assumes a fixed repertoire of known routines; it doesn’t create new routines. And the realization relation between propositions is not explicitly temporal.

Of course, an intelligent blocks-world agent might well know a large number of complex routines. For any given blocks-world problem, we can imagine an agent with a ready-made routine to solve the problem immediately. But it will always be possible to imagine planning problems that don’t match the routines of a given agent, and I think we will lose no generality in our blocks-world example by assuming that the routines the agent knows are simply the primitive actions of the domain.

Nodes in Segerberg’s trees represent propositions: subordinate ends that will achieve the ultimate end. In the more general, temporal context that is needed to solve many planning problems, this will not do. A plan needs to transform the current state in which the planning agent is located into a state satisfying the goal. To do this, we must represent the intermediate states, not just the subgoals that they satisfy.

Suppose, for instance, that I am outside my locked office. My computer is inside the office. I want to be outside the office, with the computer and with the office locked. To get the computer, I need to enter the office. To enter, I need to unlock the door. I have two methods of unlocking the door: unlocking it with a key and breaking the lock. If all I remember about the effects of these two actions is the goal that they achieve, they are both equally good, since they both will satisfy the subgoal of unlocking the door. But, of course, breaking the lock will frustrate my ultimate purpose. So Segerberg’s account of practical reasoning doesn’t allow me to make a distinction that is crucial in this and many other cases.

The moral is that the side-effects of actions can be important in planning. We can do justice to this by keeping track not just of subgoals, but of the states that result from achieving them. Deliberation trees need to track states rather than propositions expressing subgoals.Footnote 12
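The office example can be sketched in Python to show why tracking states matters. Everything in the sketch is an assumption made for illustration: the four state variables, the action names, and the preconditions attached to each action.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, bool]
Action = Callable[[State], Optional[State]]  # None means "cannot be performed"

START: State = {"inside": False, "has_computer": False,
                "door_locked": True, "lock_broken": False}


def unlock_with_key(s: State) -> Optional[State]:
    return {**s, "door_locked": False}


def break_lock(s: State) -> Optional[State]:
    # Achieves the same subgoal, with a side effect on the lock.
    return {**s, "door_locked": False, "lock_broken": True}


def enter(s: State) -> Optional[State]:
    return None if s["door_locked"] else {**s, "inside": True}


def take_computer(s: State) -> Optional[State]:
    return {**s, "has_computer": True} if s["inside"] else None


def leave(s: State) -> Optional[State]:
    return None if s["door_locked"] else {**s, "inside": False}


def lock_door(s: State) -> Optional[State]:
    if s["lock_broken"] or s["inside"]:
        return None
    return {**s, "door_locked": True}


def run(plan: List[Action], state: State) -> Optional[State]:
    """Execute the plan step by step; return None if some step is not performable."""
    current: Optional[State] = state
    for action in plan:
        current = action(current)
        if current is None:
            return None
    return current


def goal(s: Optional[State]) -> bool:
    return s is not None and not s["inside"] and s["has_computer"] and s["door_locked"]


with_key = [unlock_with_key, enter, take_computer, leave, lock_door]
with_force = [break_lock, enter, take_computer, leave, lock_door]
print(goal(run(with_key, START)), goal(run(with_force, START)))
```

Both first steps satisfy the subgoal of unlocking the door, but only the states they produce reveal that the second plan cannot be completed.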

Consider, now, the deliberation problem posed by Figs. 3 and 4. The solution will be a series of actions transforming the initial state in Fig. 3 into the goal state in Fig. 4.

It is possible to solve this problem by reasoning backwards from the goal state, using means-end reasoning. But any solution has to involve a series of states beginning with the initial state, and at some point this will have to be taken into account, if only in the termination rule for branches.

This problem can be attacked using Segerberg’s deliberation trees. We begin the tree with the goal state pictured in Fig. 4. We build the tree by taking a node that has not yet been processed, associated, say, with state \(s\), and creating daughters for this node for each nontrivial actionFootnote 13 that could produce \(s\), associating these daughters with the appropriate states.Footnote 14 A branch terminates when its associated state is the initial state.

This produces the (infinite) tree pictured in Fig. 5. States are indicated in the figure by little pictures in the ellipses. Along the rightmost branch, arcs are labeled by the action that effects the change.

The rightmost branch corresponds to the optimal solution to this planning problem: move all the blocks to the table, then build the desired stack. And other, less efficient solutions can be found elsewhere in the tree.

In general, this top-down, exhaustive method of searching for a plan would be hopelessly inefficient, even with an improved termination rule that would guarantee a finite tree. Perhaps it would be best to distinguish between the search space, or the total space of possible solutions, and search methods, which are designed to find an acceptable solution quickly, and may visit only part of the total space. Segerberg seems to recommend top-down, exhaustive search; but making this distinction, he could easily avoid this recommendation.
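The distinction between a search space and a search method can be illustrated with a different method applied to the same space. The Python sketch below uses forward breadth-first search with a record of visited states, which is not the top-down tree construction described above; it is swapped in here only because it is short and guaranteed to terminate. The action labels, the state encoding, and the three-block problem are assumptions made for illustration, and the problem is not the one pictured in the figures.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

TABLE = "table"
Frozen = Tuple[Tuple[str, str], ...]  # hashable state: sorted (block, support) pairs


def freeze(on: Dict[str, str]) -> Frozen:
    return tuple(sorted(on.items()))


def successors(state: Frozen) -> List[Tuple[str, Frozen]]:
    """All nontrivial primitive actions performable in a state, with their results."""
    on = dict(state)
    clear = [b for b in on if b not in on.values()]
    result = []
    for b in clear:
        if on[b] != TABLE:  # moving a block already on the table is trivial
            result.append((f"to_table({b})", freeze({**on, b: TABLE})))
        for target in clear:
            if target != b:
                result.append((f"put_on({b},{target})", freeze({**on, b: target})))
    return result


def plan(initial: Dict[str, str], goal: Dict[str, str]) -> Optional[List[str]]:
    """Breadth-first search from the initial state; returns a shortest action sequence."""
    start, target = freeze(initial), freeze(goal)
    frontier = deque([(start, [])])
    visited = {start}  # never revisit a state, so the search is finite
    while frontier:
        state, actions = frontier.popleft()
        if state == target:
            return actions
        for name, nxt in successors(state):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [name]))
    return None


# Hypothetical problem: reverse the stack C-on-B-on-A into A-on-B-on-C.
initial = {"A": TABLE, "B": "A", "C": "B"}
goal = {"C": TABLE, "B": "C", "A": "B"}
solution = plan(initial, goal)
print(solution)
```

The search visits each reachable state at most once, so it explores only part of the infinite tree of action sequences while still finding a shortest solution.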

We have modified Segerberg’s model of deliberation to bring temporality into the picture, in a limited and qualitative way, and to make states central to deliberation, rather than propositions. This brings me to my second critical point: how can a deliberator know what is needed for deliberation?

5 Knowledge Representation Issues

To be explanatory and useful, a logical account of deliberation has to deliver a theory of the reasoning. Human agents engage in practical reasoning, and have intuitions about the correctness of this reasoning; the logic should, of course, explain these intuitions. But AI planning and robotic systems also deliberate, and a logic of practical reasoning should be helpful in designing such systems. This means, among other things, that the theory should be scalable to realistic, complex planning problems of the sort that these systems need to deal with.

In this section, we discuss the impact of these considerations on the logic of practical reasoning.

5.1 Axiomatization

In the logic of theoretical reasoning, we expect a formal language that allows us to represent illustrative examples of reasoning, and to present theories as formalized objects. In the case of mathematical reasoning, for instance, we hope to formalize the axioms and theorems of mathematical theories, and the steps of proofs. But we also want a consequence relation that can explain the correctness of proofs in these theories.

What should we expect of a formalization of practical reasoning, and in particular, of a formalization of the sort that Segerberg discusses in [23]?

Segerberg’s medical example, and the blocks-world case discussed in Sect. 4.1, show that at least we can formalize the steps of some practical reasoning specimens. But this is a relatively shallow use of logic. How can we bring logical consequence relations to bear on the reasoning?

In cases with no uncertainty about the initial state and the consequences of actions, we can at least hope for a logical consequence relation to deliver plan verification. That is, we would hope for an axiomatization of planning that would allow us to verify a correct plan. Given an initial state, a goal state, and a plan that achieves the goal state, it should be a logical consequence of the axiomatization that it indeed does this. This could be done in a version of dynamic logic where the states are states of the environment that can be changed by performing actions, but it could also be done in a static logic with explicit variables over states. In either case, we would need to provide appropriate axioms.

What sort of axioms would we need, for instance, to achieve adequate plan verification for the blocks-world domain? In particular, what axioms would we need to prove something like

Here, \({ Achieves}(a,\phi ,\psi )\) is true if performing the action denoted by \(a\) in any state satisfying \(\phi \) produces a state that satisfies \(\psi \). And \(a;b\) denotes the action of performing first the action denoted by \(a\) and then the action denoted by \(b\). The provability of this formula would guarantee the success of the blocks-world plan discussed in Sect. 4.1.
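For a finite domain, the content of \({ Achieves}(a,\phi ,\psi )\) can be checked semantically by enumerating states, which gives a rough picture of what a proof of such a formula has to guarantee. The following is a minimal sketch, not an axiomatization: the two-block world, the encoding of states as sets of (block, support) pairs, and the helper names `put_on`, `seq`, `achieves` and `on` are assumptions introduced here. An action that cannot be performed in a state is treated as failing to achieve anything, which is one possible reading of the definition.

```python
TABLE = "Table"

def clear(state, x):
    """x is clear if no block rests on it; a state is a set of (block, support) pairs."""
    return all(support != x for _, support in state)

def put_on(x, y):
    """Hypothetical primitive action: move clear block x onto clear block y or the table."""
    def run(state):
        feasible = x != y and clear(state, x) and (y == TABLE or clear(state, y))
        if not feasible:
            return None                      # the action cannot be performed here
        return frozenset({(b, s) for b, s in state if b != x} | {(x, y)})
    return run

def seq(a, b):
    """The complex action a;b: perform a, then b."""
    def run(state):
        mid = a(state)
        return None if mid is None else b(mid)
    return run

def achieves(action, phi, psi, states):
    """Achieves(action, phi, psi): from every phi-state the action yields a psi-state."""
    for s in states:
        if phi(s):
            result = action(s)
            if result is None or not psi(result):
                return False
    return True

def on(x, y):
    """The state predicate 'x is on y'."""
    return lambda state: (x, y) in state

# All states of a two-block world.
STATES = [
    frozenset({("A", TABLE), ("B", TABLE)}),
    frozenset({("A", "B"), ("B", TABLE)}),
    frozenset({("B", "A"), ("A", TABLE)}),
]

good_plan = seq(put_on("A", TABLE), put_on("B", "A"))
bad_plan = seq(put_on("B", "A"), put_on("A", TABLE))
print(achieves(good_plan, on("A", "B"), on("B", "A"), STATES))  # True
print(achieves(bad_plan, on("A", "B"), on("B", "A"), STATES))   # False
```

Enumeration of this kind does not scale, which is one reason to want axioms from which the same fact follows as a logical consequence.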

Providing axioms that would enable us to prove formulas like (1) divides into three parts: (i) supplying a general theory of action-based causality, (ii) supplying specific causal axioms for each primitive action in the domain, and (iii) supplying axioms deriving the causal properties of complex actions from the properties of their components.

All of these things can be done; in fact they are part of the logical theories of reasoning about actions and plans in logical AI.Footnote 15
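To illustrate task (iii), here is one natural candidate for a principle relating a sequence to its components. It follows directly from the reading of \({ Achieves}\) given above, and its dynamic-logic counterpart is the usual axiom for sequential composition; the intermediate condition \(\chi \) is introduced here for the illustration.

\[
{ Achieves}(a,\phi ,\chi )\wedge { Achieves}(b,\chi ,\psi )\;\rightarrow \;{ Achieves}(a;b,\phi ,\psi )
\qquad\qquad
[a;b]\psi \;\leftrightarrow \;[a][b]\psi
\]

With a principle of this kind, the verification of a multi-step plan reduces to finding suitable intermediate conditions and verifying each primitive step separately.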

As for task (ii), it’s generally agreed that the causal axioms for an action need to provide the conditions under which an action can be performed, and the direct effects of performing the action. We could do this for the blocks-world action  , for instance, with the following two axioms.Footnote 16

Task (i) is more complicated, leading to a number of logical problems, including the Frame Problem. In the blocks-world example, however, the solution is fairly simple, involving change-of-state axioms like (A3), which provides the satisfaction conditions for a predicate after a performance of PutOn\((x,y)\).

Axiomatizations along these lines succeed well even for complex planning domains. For examples, see [14].
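As a rough illustration of what causal and change-of-state axioms for a block-stacking action can look like, here is a sketch in the \([a]\) notation, in the general style of the action theories in [14]. It is a stand-in built on assumptions, not the chapter's own axioms: the predicates \(Clear\) and \(On\), the feasibility predicate \(Poss\), and the restriction to moving one block onto another block are all introduced here.

\[
\begin{aligned}
&Poss(\text{PutOn}(x,y))\;\leftrightarrow \;Clear(x)\wedge Clear(y)\wedge x\ne y\\
&Poss(\text{PutOn}(x,y))\;\rightarrow \;[\text{PutOn}(x,y)]\,On(x,y)\\
&Poss(\text{PutOn}(x,y))\;\rightarrow \;\bigl([\text{PutOn}(x,y)]\,On(u,v)\;\leftrightarrow \;(u=x\wedge v=y)\vee (On(u,v)\wedge u\ne x)\bigr)
\end{aligned}
\]

The first line gives the conditions under which the action can be performed, the second its direct effect, and the third does the work of a frame axiom: every \(On\) fact that does not involve the moved block persists through the action.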

5.2 Knowledge for Planning

The axiomatization techniques sketched in Sect. 5.1 are centered around actions and predicates; each primitive action will have its causal axioms, and each predicate will have its associated change-of-state axioms. This organization of knowledge is quite different from the one suggested by Segerberg’s theory of deliberation in [23], which centers around the relation between a goal proposition and the propositions that represent ways of achieving it.

Several difficulties stand in the way of axiomatizing the knowledge used for planning on the basis of this idea.

The enablement relation between propositions may be too inchoate, as it stands, to support an axiomatization. For one thing, the relation is state-dependent. Pushing a door will open it if the door isn’t latched, but (ordinarily) won’t open it if it is latched. Also, the relation between enablement and temporality needs to be worked out. Segerberg’s example is atemporal, but many examples of enablement involve change of state. It would be necessary to decide whether we have one or many enablement relations here. Finally, as in the blocks-world example and many other planning cases, ways of doing things need to be computed, so that we can’t assume that all these ways are known at the outset of deliberation. This problem could be addressed by distinguishing basic or built-in ways of doing things from derived ways of doing things. I have not thought through the details of this, but I suspect that it would lead us to something very like the distinction between primitive actions and plans that is used in AI models of planning.

6 Direction of Reasoning

If Segerberg’s model of practical reasoning is linked to top-down, exhaustive search—starting with the goal, creating subgoals consisting of all the known ways of achieving it, and proceeding recursively—it doesn’t match many typical cases of human deliberation, and would commit us to a horribly inefficient search method.

For example, take the deliberation problem created by chess. If we start by asking “How can I achieve checkmate?” we can imagine a very large number of mating positions. But no one plans an opening move by finding a path from one of these positions to the opening position. Nevertheless reasoning of the sort we find in chess is fairly common, and has to count as deliberation.

As I said in Sect. 4.1, it might be best to think of Segerberg’s deliberation trees as a way of defining the entire search space, rather than as a recommendation about how to explore this space in the process of deliberation. But then, of course, the account of deliberation is very incomplete.

7 Methodology and the Study of Practical Reasoning

The traditional methodology relates philosophical logic to reasoning either by producing semantic definitions of validity that are intuitively well motivated, or by formalizing a few well-chosen examples that illustrate how the logical theory might apply to relatively simple cases of human reasoning.

Artificial Intelligence offers the opportunity of testing logical theories by relating them to computerized reasoning, and places new demands on logical methodology. Just as the needs of philosophical logic—and, in particular, of explaining reasoning in domains other than mathematics—inspired the development of nonclassical logics and of various extensions of classical logic, the needs of intelligent automated reasoning have led to logical innovations, such as nonmonotonic logics.

The axiom sets that are needed to deal with many realistic cases of reasoning, such as the planning problems that are routinely encountered by large organizations, are too large for checking by hand; they must be stored on a computer and tested by the usual techniques that are used to validate the components of AI programs. For instance, the axiom sets can be used as knowledge sources for planning algorithms, and the performance of these algorithms can be tested experimentally.Footnote 17

This methodology is problematic in some ways, but if logic is to be applied to realistic reasoning problems, and especially to practical reasoning, there really is no alternative. Traditional logical methods, adopted for mathematical reasoning, are simply not adequate for the complexity and messiness of practical reasoning.

My final comment on Segerberg’s logic of deliberation is that, if logic is to be successfully applied to practical reasoning, the logical theories will need to be tested by embedding them in implemented reasoning systems and evaluating the performance of these systems.

8 Intention

Intentions connect deliberation with action. Deliberation attempts to prioritize and practicalize desires, converting them to plans. The adoption of one of these plans is the formation of an intention. In some cases, there may be no gap between forming an intention and acting. In other cases, there may be quite long delays. In fact, the relation between intention and dispositions to act is problematic; this is probably due, in part at least, to the fact that the scheduling and activation of intentions, in humans, is not entirely conscious or rational.

Since the publication of Anscombe’s 1958 book [1], entitled Intention, philosophers of action have debated the nature of intention and its relation to action. For a survey of the literature on this topic, see [33]. Illustrating the general pattern in the philosophy of action that I mentioned in Sect. 1, this debate is uninformed by a logical theory of action; nor do the philosophers engaging in it seem to feel the need of such a theory.

In many of his articles on the logic of action,Footnote 18 Segerberg considers how to include intention in his languages and models. The most recent presentation of the theory, in [31], begins with the reification of actions as sets of state paths that was discussed above in Sect. 3.2. The theory is then complicated by layering on top of this a temporal modeling according to which a “history” is a series of actions. This introduces a puzzling duality in the treatment of time, since actions themselves involve series of states; I am not sure how this duality is to be reconciled, or how to associate states with points along a history.

I don’t believe anything that I want to say will be affected by thinking of Segerberg’s histories as series of states; I invite the reader to do this.

The intentions of an agent with a given past \(h\), within a background set \(H\) of possible histories, are modeled by a subset \(\text {int}_H(h)\) of the continuations of \(h\) in \(H\) (i.e., a subset of \(\{g\,:\; hg\in H\}\)). This set is used in the same way sets of possible worlds are used to model propositional attitudes—the histories in \(\text {int}_H(h)\) represent the outcomes that are compatible with the agent’s intentions. For instance, if I intend at 9am to stay in bed until noon (and only intend this), my intention set will be the set of future histories in which I don’t leave my bed until the afternoon.

Segerberg considers a language with two intention operators, \(\mathbf{int}^{\circ }\) and \(\mathbf{int}\), both of which apply to terms denoting actions. (This is the reason for incorporating actions in histories.) The gloss of \(\mathbf{int}^{\circ }\) is “intends in the narrow sense” and the gloss of \(\mathbf{int}\) is simply “intends.” But there is no explanation of the two operators and I, for one, am skeptical about whether the word ‘intends’ has these two senses. There seems to be an error in the satisfaction conditions for \(\mathbf{int}\) on ([31], p. 181), but the aim seems to be a definition incorporating a persistent commitment to perform an action. I’ll confine my comments to \(\mathbf{int}^{\circ }\); I think they would also apply to most variants of this definition.

\(\mathbf{int}^{\circ }(\alpha )\) is satisfied relative to a past history \(h\) in case the action \(\alpha \) is performed in every possible future \(g\) in the intention set \(\text {int}_H(h)\). This produces a logic for \(\mathbf{int}^{\circ }\) analogous to a modal necessity operator.

The main problem with this idea has to do with unintended consequences of intended actions. Let \(\text {fut}_{h,\alpha }\) be the set of continuations of \(h\) in which the action denoted by \(\alpha \) is performed. If \(\text {fut}_{h,\alpha }\subseteq \text {fut}_{h,\beta }\), that is, if \(\beta \) is performed in every continuation in which \(\alpha \) is performed, then \(\mathbf{int}^{\circ }(\alpha )\rightarrow \mathbf{int}^{\circ }(\beta )\) must be true at \(h\). But this isn’t in fact how intention acts. To adapt an example from ([4], p. 41), I can intend to turn on my computer without intending to increase the temperature of the computer, even if the computer is heated in every possible future in which I turn the computer on.
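The closure of \(\mathbf{int}^{\circ }\) under such consequences can be seen in a toy model. The sketch below makes several simplifying assumptions that are not Segerberg's: histories are tuples of action labels, the background set `H` is a hypothetical three-element set, and the heating of the computer is treated as an action label (`"heat"`) occurring in a history. The satisfaction condition for `intends_narrow` is the one stated above: the action is performed in every continuation in the intention set.

```python
def continuations(H, h):
    """The set {g : hg in H}; histories are tuples of action labels."""
    n = len(h)
    return {hist[n:] for hist in H if hist[:n] == h}

def fut(H, h, action):
    """Continuations of h in which `action` is performed."""
    return {g for g in continuations(H, h) if action in g}

def intends_narrow(int_set, action):
    """int°(action): the action is performed in every intended continuation."""
    return all(action in g for g in int_set)

# Hypothetical background set H of histories sharing the past ("wake",).
H = {
    ("wake", "turn_on", "heat"),
    ("wake", "turn_on", "heat", "type"),
    ("wake", "read"),
}
h = ("wake",)

# The agent intends (only) to turn the computer on.
int_set = fut(H, h, "turn_on")

print(intends_narrow(int_set, "turn_on"))           # True
print(fut(H, h, "turn_on") <= fut(H, h, "heat"))    # True: heating always accompanies turning on
print(intends_narrow(int_set, "heat"))              # True: the unwanted consequence
print(intends_narrow(int_set, "type"))              # False: typing is not forced
```

Because the operator behaves like a necessity operator over the intention set, anything that holds throughout that set counts as intended, whether or not the agent had it in view.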

Segerberg recognizes this problem, and proposes ([31], p. 184) to solve it by insisting that the set of future histories to which \(\text {int}_H(h)\) is sensitive consist of all the logically possible continuations of \(h\). This blocks the unwanted inference, since it is logically possible that turning on the computer won’t make it warmer. But, if the role of belief in forming intentions is taken into account, I don’t think this will yield a satisfactory solution to the problem of unintended consequences.

Rational intention, at any rate, has to be governed by belief. It is pointless, for instance, to deliberate about how to be the first person to climb Mt. McKinley if you believe that someone else has climbed Mt. McKinley, and irrational to intend to be the first person to climb this mountain if you have this belief.Footnote 19

This constraint on rational intention would be enforced, in models of the sort that Segerberg is considering, by adding a subset \(\text {bel}_H(h)\) of the continuations of \(h\), representing the futures compatible with the agent’s beliefs, and requiring that \(\text {int}_H(h)\subseteq \text {bel}_H(h)\): every intended continuation is compatible with the agent’s beliefs. Perhaps something short of this requirement would do what is needed to relate rational intention to belief, but it is hard to see what that would be.

But now the problem of unintended consequences reappears. I can believe that turning on my computer will warm it and intend to turn the computer on, without intending to warm it.Footnote 20 I conclude that Segerberg’s treatment of intention can’t solve the problem of unintended consequences, if belief is taken into account.Footnote 21
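The step from the belief constraint to the unwanted intention can be written out in the notation used above. Let \(\alpha \) be turning on the computer and \(\beta \) warming it, and suppose, as one way of modeling the belief that turning the computer on will warm it, that \(\text {bel}_H(h)\cap \text {fut}_{h,\alpha }\subseteq \text {fut}_{h,\beta }\). This reading of the belief is an assumption made for the illustration. Then

\[
\text {int}_H(h)\subseteq \text {bel}_H(h)
\;\text { and }\;
\text {int}_H(h)\subseteq \text {fut}_{h,\alpha }
\quad \Longrightarrow \quad
\text {int}_H(h)\subseteq \text {bel}_H(h)\cap \text {fut}_{h,\alpha }\subseteq \text {fut}_{h,\beta },
\]

so \(\mathbf{int}^{\circ }(\beta )\) is satisfied at \(h\) whenever \(\mathbf{int}^{\circ }(\alpha )\) is. Widening the set of continuations to all the logically possible ones does not help, because the belief set has already excluded the continuations in which the computer is turned on and stays cool.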

Unlike modal operators and some propositional attitudes, but like many propositional attitudes, intention does not seem to have interesting, nontrivial logical properties of its own, and I doubt that a satisfaction condition of any sort is likely to prove very illuminating, if we are interested in the role of intention in reasoning.

We can do better by considering the interactions of intention with other attitudes—intention-belief inconsistency is an example. But I suspect that the traditional methods of logical analysis are limited in what they can achieve here. Bratman’s work, as well as work in AI on agent architectures, suggests that the important properties of intention will only emerge in the context of a planning agent that is also involved in interacting in the world. I’d be the last to deny that logic has an important part to play in the theory of such agents, but it is only part of the story.

9 The Logic of Action and Philosophy

I will be brief here.

In [22, 27] Segerberg discusses ways in which his reification of actions might be useful in issues that have arisen in the philosophical literature on action having to do with how actions are to be individuated. I agree that the logical theory is helpful in thinking about these issues. But philosophers need philosophical arguments, and for an application of a logical model to philosophy to be convincing, the model needs to be motivated philosophically. I believe that Segerberg’s models need more thorough motivation of this sort, and particularly motivation that critically compares the theory to other alternatives.

On the other hand, most philosophers of action claim to be interested in practical reasoning, but for them the term seems to indicate a cluster of familiar philosophical issues, and to have little or nothing to do with reasoning. Here there is a gap that philosophical logicians may be able to fill, by providing a serious theory of deliberative reasoning, analyzing realistic examples, and identifying problems of philosophical interest that arise from this enterprise. Segerberg deserves a great deal of credit for seeking to begin this process, but we will need a better developed, more robust logical theory of practical reasoning if the interactions with philosophy are to flourish.

10 The Problem of Discreteness

I want to return briefly to the problem of discreteness. Recall that Segerberg’s theory of agency is based on discrete models of change-of-state. Discrete theories are also used in the theory of computation and in formalisms that are used to model human cognition, in game theory, and in AI planning formalisms. Computational theories can justify this assumption by appealing to the discrete architecture of digital computers. It is more difficult to do something similar for human cognition, although in [38] Alan Turing argues that digital computers can simulate continuous computation devices well enough to be practically indistinguishable from them.

Perhaps the most powerful argument for discrete theories of control and cognitive mechanisms is that we don’t seem to be able to do without them. Moreover, these systems seem to function perfectly well even when they are designed to interact with a continuous environment. Discrete control systems for robot motion illustrate this point.

But if we are interested in agents like humans and robots that are embedded in the real world, we may need to reconsider whether nature should be regarded, as it is in game theory and as Segerberg regards it, as an agent. Our best theory of how nature operates might well be continuous. Consider, for instance, an agent dribbling a basketball. We might want to treat the agent and its interventions in the physical process using discrete models, but use continuous variables and physical laws of motion to track the basketball.Footnote 22
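A mixed model of the dribbling example can be sketched as follows. Everything numerical here is an assumption chosen for illustration (the restitution coefficient, the heights, the push speed, the decision period), as are the names `simulate`, `REACH` and `PUSH_SPEED`. The agent is discrete: it observes and acts only at decision ticks. The ball obeys continuous laws of motion, which the program can only approximate by small time steps, so the sketch also illustrates the point attributed above to Turing, that discrete machinery can track a continuous process closely enough for practical purposes.

```python
G = 9.81             # gravitational acceleration, m/s^2
RESTITUTION = 0.75   # fraction of speed kept at each bounce (assumed value)
HAND_HEIGHT = 1.0    # starting height of the ball, m (assumed value)
REACH = 0.9          # lowest height at which the agent can touch the ball, m (assumed)
PUSH_SPEED = 5.0     # downward speed imparted by a push, m/s (assumed value)

def simulate(dribble, duration=10.0, dt=0.001, decision_period=0.05):
    """Return the ball height after each physics step."""
    y, v = HAND_HEIGHT, 0.0
    heights = []
    steps_per_decision = round(decision_period / dt)
    for step in range(round(duration / dt)):
        # Discrete part: the agent observes and acts only at decision ticks.
        if dribble and step % steps_per_decision == 0:
            if y >= REACH and v > -1.0:
                v = -PUSH_SPEED
        # Continuous part, approximated by small Euler steps of the laws of motion.
        v -= G * dt
        y += v * dt
        if y < 0.0:              # impact with the floor
            y = 0.0
            v = -v * RESTITUTION
        heights.append(y)
    return heights

with_agent = simulate(dribble=True)
without_agent = simulate(dribble=False)
# Peak height during the final second of each run.
print(max(with_agent[-1000:]) > REACH)     # True: the ball still comes up to the hand
print(max(without_agent[-1000:]) < 0.1)    # True: without the agent the ball has died down
```

The agent's rule mentions only a few thresholds, while the trajectory between decisions is left entirely to the physical model, which is the division of labor suggested in the text.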

11 Conclusion

I agree with Segerberg that we need a logic of action, and that it is good to look to computer science for ideas about how to develop such a logic. But, if we want a logic that will apply to realistic examples, we need to look further than theoretical computer science. Artificial Intelligence is the area of computer science that seeks to deal with the problems of deliberation, and logicians in the AI community have been working for many years on these problems.

I have tried to indicate how to motivate improvements in the theory of deliberation that is sketched in some of Segerberg’s works, and how these improvements lead in the direction of action theories that have emerged in AI. I urge any philosopher interested in the logic of deliberation to take these theories into account.

Notes

- 1.

Segerberg’s work on action is presented in articles dating from around 1980. See the articles by Segerberg cited in the bibliography of this chapter.

- 2.

See [9].

- 3.

Now we are working with Turing machines that run pseudocode, and whose states consist of assignments of values to an infinite set of variables. This assumption is legitimate, and loses no generality.

- 4.

Corresponding to any state-tree, there is the set of its branches. Conversely, however, not every set of sequences can be pieced together into a tree.

- 5.

Further examples along these lines, and a classification of the cases, can be found in [2]. I believe that an adequate theory of action must do justice to Austin’s distinctions.

- 6.

See, for instance, [7].

- 7.

Such routines might be helpful in designing a robot that could learn to throw darts, but issues like this are controversial in robotics itself. See [6] and other references on “situated robotics.”

- 8.

See [13].

- 9.

There are problems with such a termination rule in cases where the agent can exercise knowledge acquisition routines—routines that can expand the routines available to the agent. But this problem is secondary, and we need not worry about it.

- 10.

In more complex cases, and to do justice to the way humans often plan, we might want to associate various levels of abstraction with a domain, and allow the primitive actions at higher levels of abstraction to be decomposed into complex lower-level actions. This idea has been explored in the AI literature; see, for instance, [15, 37]. One way to look at what Segerberg seems to be doing is this: he is confining means-end reasoning to realization and ignoring causality. He discusses cases in which the reasoning moves from more abstract goals to more concrete goals that realize them. But he ignores cases where, at the same level of abstraction, the reasoning moves from a temporal goal to an action that will bring the goal about if performed.

- 11.

In this section, we use italics for predicates and SmallCaps for actions.

- 12.

We can, of course, think of states as propositions of a special, very informative sort.

- 13.

An action is trivial in \(s\) if it leaves \(s\) unchanged.

- 14.

Reasoning in this direction is cumbersome; it is easier to find opportunities to act in a state \(s\) than to find ways in which \(s\) might have come about. Evidently, Segerberg’s method is not the most natural way to approach this planning problem.

- 15.

- 16.

I hope the notation is clear. \([a]\) is a modal operator indicating what holds after performing the action denoted by \(a\).

- 17.

- 18.

- 19.

For more about intention-belief inconsistency, see ([5], pp. 37–38).

- 20.

If this example fails to convince you, consider the following one. I’m a terrible typist. When I began to prepare this chapter, I believed I would make many typographical errors in writing it. But when I intended to write the chapter, I didn’t intend to make typographical errors.

- 21.

- 22.

For mixed models of this kind, see, for instance, [16].

References

Anscombe, G. (1958). Intention. Oxford: Blackwell Publishers.

Austin, J. L. (1956–57). A plea for excuses. Proceedings of the Aristotelian Society, 57, 1–30.

Brachman, R. J., & Levesque, H. (2004). Knowledge representation and reasoning. Amsterdam: Elsevier.

Bratman, M. E. (1987). Intentions, plans and practical reason. Cambridge: Harvard University Press.

Bratman, M. E. (1990). What is intention? In P. R. Cohen, J. Morgan, & M. Pollack (Eds.), Intentions in communication (pp. 15–32). Cambridge, MA: MIT Press.

Brooks, R. A. (1990). Elephants don’t play chess. Robotics and Autonomous Systems, 6(1–2), 3–15.

Clarke, E. M., Grumberg, O., & Peled, D. A. (1999). Model checking. Cambridge, MA: MIT Press.

Fritz, C., & McIlraith, S. A. (2008). Planning in the face of frequent exogenous events. In Online Poster Proceedings of the 18th International Conference on Automated Planning and Scheduling (ICAPS), Sydney, Australia. http://www.cs.toronto.edu/kr/publications/fri-mci-icaps08.pdf.

Harel, D., Kozen, D., & Tiuryn, J. (2000). Dynamic logic. Cambridge, MA: MIT Press.

Lifschitz, V. (1987). Formal theories of action. In M. L. Ginsberg (Ed.), Readings in nonmonotonic reasoning (pp. 410–432). Los Altos, CA: Morgan Kaufmann.

Lorini, E., & Herzig, A. (2008). A logic of intention and attempt. Synthese, 163(1), 45–77.

McGuinness, D. L., Fikes, R., Rice, J., & Wilder, S. (2000). An environment for merging and testing large ontologies. In A. G. Cohn, F. Giunchiglia, & B. Selman (Eds.), KR2000: Principles of knowledge representation and reasoning (pp. 483–493). San Francisco: Morgan Kaufmann.

Newell, A. (1992). Unified theories of cognition. Cambridge, MA: Harvard University Press.

Reiter, R. (2001). Knowledge in action: Logical foundations for specifying and implementing dynamical systems. Cambridge, MA: MIT Press.

Sacerdoti, E. D. (1974). Planning in a hierarchy of abstraction spaces. Artificial Intelligence, 5(2), 115–135.

Sandewall, E. (1989). Combining logic and differential equations for describing real-world systems. In R. J. Brachman, H. J. Levesque, & R. Reiter (Eds.), KR’89: Principles of knowledge representation and reasoning (pp. 412–420). San Mateo, CA: Morgan Kaufmann.

Segerberg, K. (1980). Applying modal logic. Studia Logica, 39(2–3), 275–295.

Segerberg, K. (1981). Action-games. Acta Philosophica Fennica, 32, 220–231.

Segerberg, K. (1982a). Getting started: Beginnings in the logic of action. Studia Logica, 51(3–4), 437–478.

Segerberg, K. (1982b). The logic of deliberate action. Journal of Philosophical Logic, 11(2), 233–254.

Segerberg, K. (1984). Towards an exact philosophy of action. Topoi, 3(1), 75–83.

Segerberg, K. (1985a). Models for action. In B. K. Matilal & J. L. Shaw (Eds.), Analytical philosophy in contemporary perspective (pp. 161–171). Dordrecht: D. Reidel Publishing Co.

Segerberg, K. (1985b). Routines. Synthese, 65(2), 185–210.

Segerberg, K. (1988). Talking about actions. Studia Logica, 47(4), 347–352.

Segerberg, K. (1989). Bringing it about. Journal of Philosophical Logic, 18(4), 327–347.

Segerberg, K. (1992). Representing facts. In C. Bicchieri & M. L. D. Chiara (Eds.), Knowledge, belief, and strategic interaction (pp. 239–256). Cambridge, UK: Cambridge University Press.

Segerberg, K. (1994). A festival of facts. Logic and Logical Philosophy, 2, 7–22.

Segerberg, K. (1995). Conditional action. In G. Crocco, L. Fariñas del Cerro, & A. Herzig (Eds.), Conditionals: From philosophy to computer science (pp. 241–265). Oxford: Oxford University Press.

Segerberg, K. (1996). To do and not to do. In J. Copeland (Ed.), Logic and reality: Essays on the legacy of Arthur Prior (pp. 301–313). Oxford: Oxford University Press.

Segerberg, K. (1999). Results, consequences, intentions. In G. Meggle (Ed.), Actions, norms, values: Discussion with Georg Henrik von Wright (pp. 147–157). Berlin: Walter de Gruyter.

Segerberg, K. (2005). Intension, intention. In R. Kahle (Ed.), Intensionality (pp. 174–186). Wellesley, MA: A.K. Peters, Ltd.

Segerberg, K., Meyer, J. J., & Kracht, M. (2009). The logic of action. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Summer 2009 ed.). Stanford: Stanford University.

Setiya, K. (2011). Intention. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Spring 2011 ed.). Stanford: Stanford University.

Shanahan, M. (1997). Solving the frame problem. Cambridge, MA: MIT Press.

Simon, H. A., & Schaeffer, J. (1992). The game of chess. In R. J. Aumann & S. Hart (Eds.), Handbook of game theory with economic applications (Vol. 1, pp. 1–17). Amsterdam: North-Holland.

Stefik, M. J. (1995). An introduction to knowledge systems. San Francisco: Morgan Kaufmann.

Sutton, R. S., Precup, D., & Singh, S. (1999). Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1–2), 181–211.

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.

Turner, H. (1999). A logic of universal causation. Artificial Intelligence, 113(1–2), 87–123.

Wooldridge, M. J. (2000). Reasoning about Rational Agents. Cambridge, UK: Cambridge University Press.

© 2014 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

Thomason, R.H. (2014). Krister Segerberg’s Philosophy of Action. In: Trypuz, R. (eds) Krister Segerberg on Logic of Actions. Outstanding Contributions to Logic, vol 1. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-7046-1_1

DOI: https://doi.org/10.1007/978-94-007-7046-1_1

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-7045-4

Online ISBN: 978-94-007-7046-1

eBook Packages: Humanities, Social Sciences and Law; Philosophy and Religion (R0)