Abstract

It has long been argued that information communication technologies (ICT) have a role to play in school science classrooms. The edited collections by Barton (Teaching secondary science with ICT. Open University Press, Cambridge, 2004) and Rodrigues (Multiple literacy and science education: ICTS in formal and informal learning environments, IGI Global, Hershey, 2010) provide an informative overview of the field, illustrating options, opportunities and challenges. In this chapter, I focus on one option, the use of multimedia-based simulations, and I describe the opportunities and challenges that can be seen when these simulations are used to support chemistry education. I based the chapter on data collected over the last ten years and will use findings from a variety of projects involving simulations in chemistry classrooms to illustrate opportunities and challenges.


14.1 Introduction

Understanding chemistry, with or without information communication technologies (ICT), poses several distinctive challenges. For example, the learner has to engage with the abstract, often microscopic nature of the subject, in order to interpret concrete reactions, often depicted through macroscopic everyday situations, and then represent these interactions in the form of symbolic notation. Not surprisingly, teachers have relied on teaching aids to help them address these challenges. Over the last few decades, one of these teaching aids has come to include ICT incorporating various multimedia.

Mayer et al. (2003) define multimedia learning as the use of at least two different types of media (graphics, audio, video, text) in presenting information. In my view, multimedia forms of ICT have gained a foothold in chemistry education primarily, it could be argued, because multimedia technology affords an opportunity to better visualize the relationships between the microscopic, macroscopic and symbolic levels of chemistry. However, it can also be argued that the use of multimedia enables a more dynamic approach to chemistry classroom teaching and learning. So, whereas in the past teachers of chemistry may have relied on ball-and-stick models, now three-dimensional animated visualization tools for subatomic matter are used to illustrate particular facets. For example, animated visualization tools are used to illustrate chemical reaction mechanisms in a more dynamic way (Ng 2010).

The dynamic potential of three-dimensional visualization tools is not the sole reason for promoting animations and simulations. Technology use in science lessons advances work production by relieving students from laborious manual processes while generating more accurate and reliable data (Rogers and Finlayson 2003). Where in the past students may have had to repeat time-consuming and sometimes tedious wet lab experiments, it is now argued that ICT speeds up the process and results in the generation of reliable data. Indeed, Wardle (2004) suggests that the technology provides repeatable interaction and instant visual feedback. Trindade et al. (2002) suggest that three-dimensional virtual environments help students with high spatial aptitude to acquire a better understanding of particular concepts. Eilks et al. (2010) posit that informed software that allows for seamless interchange between table, chart, graph and model displays has the potential to support conceptual linking between these representations.

In addition to the body of literature identifying and documenting the dynamic potential of animations and simulations in chemistry lessons, the literature also reports on studies that identified the potential of ICT to motivate students. There is plenty of evidence to suggest that technology use in classrooms improves motivation and engagement, resulting in ongoing participation (Deaney et al. 2003; Koeber 2005). Given this body of evidence (see Barton 2002, 2004; Rodrigues 2010), it is easy to understand why ICT has found its way into mainstream chemistry classroom use. Furthermore, the potential of simulation-based technology to support the development of an ability to make informed connections between the macroscopic, microscopic and symbolic levels of chemistry has probably also resulted in increasing simulation deployment in chemistry classroom practices.

However, in tandem with the literature identifying potential opportunities and strengths, other literature identifies issues and challenges. For example, some have suggested that the requirement for a high transfer rate may result in a limited attention span (Ploetzner et al. 2008). Another issue was raised by Testa et al. (2002), who suggested that real-time graphs produce ‘background noise’ and are not ‘cleaned’ of superfluous details/irregularities, which may make these graphs difficult to interpret. It has been suggested that picture use in multimedia learning processes may not be beneficial in every case (Schnotz and Bannert 2003), and according to Schwartz et al. (2004) and Azevedo (2004), the use of non-linear learning environments may result in inadequate meta-cognitive competencies. Eilks et al. (2010) state that illustrations must be scientifically reliable and should not encourage the development of incorrect or conflicting explanations. Most of us assume these two criteria (being scientifically reliable and not encouraging the development of alternative explanations) are obvious, and yet research (see Hill 1988) has shown that even static illustrations, often found in school textbooks, fail to meet them.

Obviously, therefore, it is important for education software designers to consider research findings relating to learning and pedagogy when designing software intended to be used within classrooms. Designers’ theories and views of learners and the assumptions designers make mean that content and pedagogy are intertwined well before they get into a classroom (Segall 2004). In essence, the manner in which a simulation has been designed and packaged is influenced by that design team’s views of learning theories, processes and practices. The assumptions that design teams made with regard to how their software would or could be used may not be realized if the simulation is used by teachers and students with different views of learning theories, processes and practices, and in environments that do not support the design team’s views of those elements.

The influence of ICT on student motivation has also been a strong driver in educational circles, but the literature also signals a difference in student interest levels in formal ICT-influenced environments in comparison with interest levels in informal ICT-influenced environments. Unfortunately, although learning through doing simulations or games has scope to provide powerful learning tools, the attempts to replicate levels of engagement and challenge found in games’ design have met with limited success in the classroom.

Many pupils consider games developed for school use to be pseudo games perhaps because they are repetitive, simplistic and poorly designed or because the range of activity is limited (Kirriemuir and McFarlane 2004). Not surprisingly, discerning students, with experience of computer games, view educational games as limited. Kirriemuir and McFarlane (2004) suggest that instead of trying to replicate computer games for the education context, more should be done to understand the game experience and that should be used to design environments that support learning.

Not surprisingly, over the last couple of decades, research has reported on the influence of particular design elements on engagement and learning. For example, Clarke and Mayer (2003) reported a modality principle and posited that graphical information explained by onscreen text and audio narration led to cognitive overload and was therefore detrimental to learning. Others, like Ginns (2005) and Moreno (2006), substantiated this modality principle. But in more recent times, studies are emerging that suggest there is no difference in performance based on the presence or absence of audio narration (see Sanchez and Garcia-Rodicio 2008). The discrepancy in evidence (i.e. audio narrative augmenting or diminishing performance) has often been explained by two theories.

The premise of Paivio’s dual coding theory (2006) is that multiple references to information, with connections between verbal and non-verbal (imagery) processing, result in an improvement in the learning process. In contrast, Chandler and Sweller’s (1991, 1992) ideas regarding what has often been called the ‘split attention’ effect (the learner addressing multiple information sources before trying to integrate the segments to make them intelligible) and what is termed ‘redundancy’ suggest that disparate sources may generate cognitive overload. Though the explanations provided by Paivio (2006) and by Chandler and Sweller (1991, 1992) may appear to be contradictory, they both make sense. As a result, the jury is still out as to which explanation has more currency.

So, informed by these conflicting views and the ongoing debate regarding simulation design issues, years ago in collaboration with others, I began investigating facets of simulation design. I analysed subject matter representations commonly found in simulations, and I considered the impact of these representations on user patterns of behaviour.

In this chapter, I present findings from a series of related but independent studies within this ongoing venture investigating the relationship between patterns of student behaviour and the design aspects of some chemistry simulations designed to support learning of school/college-based chemistry. I present these studies as stand-alone snapshots of the projects because the projects involved different teams, used unrelated samples, employed different methods and procedures and adopted a variety of new analytical frameworks. The studies are presented in terms of case study methodology and findings before I go on to present conclusions based on a collective of findings drawn from these various case studies. To maintain anonymity, names that appear in the chapter are pseudonyms, and sometimes I simply refer to their identification number or initials. At the end of the transcripts, there are codes that allow me to identify the source of the transcript.

14.2 Study A: Periodic Table CD-ROM and Student Engagement

Digital literacy includes the ability to use application software tools in a fashion that enables the user to perform and accomplish specific tasks (Ng 2010). In study A, together with some colleagues, I explored factors likely to influence the development of this notion of digital literacy. Study A was conducted in Australia and involved one girls-only class. We introduced a European multimedia award-winning CD-ROM on the periodic table to this class. We asked the teacher to make this CD-ROM available on an individual basis for at least 20 min, at least twice a week. The CD-ROM began with an introductory screen, which presented the girls with three options. They could click on a button entitled periodic table, a button entitled elements or a button entitled quiz. The periodic table button took the girls to a screenshot of the standard periodic table, which was interactive in the sense that they could then select elements to review or consider patterns within the table. The elements button allowed them to key in the name of an element, and a screen containing data pertaining to that element would then appear. The quiz button provided a screen with a further three options: 5-min quiz, 90-s quiz or sudden death quiz.

Data collection in study A was fairly traditional in the sense that it included surveys, interviews and observation. The girls’ engagement with the CD-ROM was videotaped and analysed, and they completed pre- and post-activity questionnaires, which included basic questions about the periodic table. The analysis of the data was also fairly traditional in that it used a grounded theory approach. Fuller project details can be found in Rodrigues (2003).

The analysis showed that the extent of commitment and purpose appeared to be determined by the students’ perception of the required outcomes. The students ignored the ‘periodic table’ and ‘elements’ buttons and opted for the ‘quiz’. Once on the quiz screen, they ignored the ‘5-min’ and ‘90-s’ quiz options and chose the ‘sudden death’ quiz. At the end of their access period, they were keen to engage in dialogue with their peers in order to compare their scores. There was no notable change in their understanding of the chemistry of the periodic table. Students navigated a safe and repeatable route and did not have a favourable disposition towards risk taking and inquiry. In terms of findings, what we learnt from this project was that when given free access, there was a fairly standard pattern of behaviour. Given these findings, I worked with four software designers to produce another CD-ROM on the periodic table, and the issues, challenges and outcomes regarding that development process can be read in Rodrigues (2000). But in essence, the process highlighted the fact that while a conversation appeared to be shared between the developers and researcher, in reality the interpretation of particular language differed significantly. Hence, for example, constructivist terms were interpreted in terms of hands-on construction. While the Rodrigues (2000) project adds to the literature reporting on issues to do with system design, Barker (2008) describes models calculated to assist designers when they design multimedia products for school use.

14.3 Study B: Comparing Video and Simulations With and Without Text Explanations

Study B involved collaboration between Mary Ainley, a psychologist who was interested in the concept of ‘interest’ in science education, and me (a science educator interested in simulation design features). The project we developed was also the start of a change in the type of research methodology I would use to explore simulation use in chemistry. Up until this point, my projects had relied on interviewing simulation users after observing them or asking them to complete surveys after they used simulations.

In study B, we used a mechanism that allowed us to track the user pathways and generate records for their choices when given options. When users logged on, they were presented with a screen welcoming them and familiarizing them with the process. They supplied information about gender, age, experience and interests. They completed another screen that asked them some basic chemistry questions about the states of matter. They were then presented with preview screens before they selected a particular option, and they were asked to indicate their perceived level of interest in the given preview. In essence, they had four options: option 1 – a short video clip; option 2 – a video clip and text that explained the video clip; option 3 – a simulation; and option 4 – a simulation and the text explanation.

The user could opt out at any time. Then they completed an online post-survey and an online post-chemistry test. They could also review all four options or view one or any combination before proceeding to the next cohort of four options. In reality, the video clips showed mundane events (ice cubes melting or water boiling), and the simulations depicted these events on a microscopic level. Their choices were logged by the computer. Hence, while we did not observe them (in an intrusive manner by sitting within the vicinity), we were in effect observing their behaviour by tracking their choice patterns.

The use of this custom-designed package allowed us to track the engagement of 11 Australian male and 11 Australian female students as they explored and used animation and video clips, with or without accompanying text, on the topics of dissolving, melting and boiling (fuller project details can be found in Rodrigues et al. 2001). Students said they selected their own route through the programme. However, the tracks showed that 16 of the 22 students followed the prescribed sequential presentation route. Given the ordinariness of the video footage, it was notable that these 11- to 13-year-old students preferred viewing video clips rather than animations. The number of students viewing animations declined between selecting topic 1 and topic 2, and seven students never selected the microscopic level animations. Fifteen students cited ‘video and text’ as the most helpful presentation type to understand the science concepts. There was a marked increase in the use of text explanations between students selecting their first topic and their second topic. Most students selected topics because they were interested in them, but they selected presentation styles because of their perceived functionality and utility.
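To make the idea of tracking choice patterns concrete, the following is a minimal sketch of how logged choices of this kind might be summarized. It is not the package used in study B: the log layout, the function names and the sample entries are all hypothetical, and the sketch assumes that the ‘prescribed sequential presentation route’ means viewing options 1 to 4 in the order presented.

```python
from collections import defaultdict

# The four presentation options described for study B.
OPTIONS = {1: "video", 2: "video+text", 3: "simulation", 4: "simulation+text"}


def viewers_per_option(log):
    """Count distinct students who viewed each option within each topic."""
    seen = defaultdict(set)
    for student, topic, option in log:
        seen[(topic, OPTIONS[option])].add(student)
    return {key: len(students) for key, students in seen.items()}


def followed_presented_order(log, student, topic):
    """True if the student's views for a topic were options 1, 2, 3, 4 in order.

    Assumption: this is what 'sequential presentation route' means here.
    """
    choices = [opt for s, t, opt in log if s == student and t == topic]
    return choices == [1, 2, 3, 4]


# Made-up log entries (student code, topic, option); not data from the study.
log = [
    ("s01", "melting", 1), ("s01", "melting", 2),
    ("s01", "melting", 3), ("s01", "melting", 4),
    ("s02", "melting", 2), ("s02", "melting", 1),
    ("s02", "boiling", 2), ("s01", "boiling", 1),
]

counts = viewers_per_option(log)
print(counts[("melting", "video")])
print(followed_presented_order(log, "s01", "melting"))
```

Counting distinct students rather than raw views is a design choice: it matches statements such as ‘seven students never selected the microscopic level animations’, which are about students, not clicks.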

14.4 Study C: Observing Student Engagement with Simulations

In study C, student volunteers aged between 14 and 15 years from two schools in Scotland were digitally video recorded while using various online chemistry-based simulations. The students were given 5 min to use two randomly allocated simulations, each of which in most cases lasted less than a minute (restarting, repeating or reviewing a simulation was possible). As they used the simulation, a camera located just behind their shoulder recorded the screen activity and any talk between the students. These digital records were replayed to them, and their retrospective accounts were sought via a semi-structured retrospective interview technique.

There were two cohorts involved in this study. In one cohort, the digital records were obtained when individual students worked with the simulation. In the second cohort, the students worked in pairs. There were two reasons for the pair or individual option. Convenience was an instrumental factor (in terms of access to hardware), and this influenced whether students worked in pairs or individually. In addition, we were interested in seeing whether pupils would discuss aloud their potential actions when working in pairs. We felt this might help and provide a means to access pupil thinking at the time. Almost as soon as the students finished using two randomly allocated simulations, the digital records were played back to them. The students were asked to view the digital records and were then asked to explain their actions. These explanations were also recorded and transcribed later. Further details for this project can be found in Rodrigues (2007).

Unlike the previous two studies, in this case I used conversation/discourse analysis to guide data analysis, as the focus was on interactions and procedures as they emerged. This project was really an attempt to find out why particular decisions were made (rather than only what decisions were made). A best-fit heuristic method (Hutchby and Wooffitt 1998) was possible because of the sample size of 21 volunteers. This allowed for a review of all transcripts.

An initial analysis of Study C identified several design factors that influenced student engagement. These were prior knowledge, distraction (redundant segments) and vividness (items that stand out), logic and instructions. Secondary analysis of the transcripts also showed what I have chosen to describe as:

- Selective amnesia (seen but quickly forgotten).
- Attention capture (a function of redundancy, vividness, prior knowledge and instruction design).
- Inattentional blindness (missing items when engrossed). Inattentional blindness refers to an experience where someone is engrossed in an attention-demanding task to the extent that they fail to notice what may often be considered more than obvious (Pizzighello and Bressan 2008).

The transcripts show that some instructions are ‘viewed’ or read by the student, but they are very quickly forgotten (selective amnesia), and that other instructions that include cues to support informed use of the simulation, provided by the designers to guide the students, are missed (inattentional blindness) by the students.

- Researcher: Did you notice that it had instructions, like the instructions had numbers on them?
- H: No.
- S: No.
- Researcher: No. So, like on here there was a number one, a two,
- H: I saw that bit, saw that bit down there.
- Researcher: What bit?
- H: That number four down there.
- Researcher: What about number three then?
- H: Where is number three?

(SMA HS)

- A: I didn’t really notice the number sequence no.
- Researcher: So the order that you were doing it in was…
- A: Was largely by the spacing, by where they were positioned.

(DHS 1)

The simulations were allocated randomly, and five pairs used a simulation illustrating a molecular level depiction of a reaction between an acid (HCl) and an alkali (NaOH). In the simulation, coloured spheres represent the ions/molecules in sodium hydroxide solution and hydrochloric acid. The following transcript excerpt is taken from a retrospective conversation between the researcher and two students as their digital record was replayed to them. It is representative of comments made by all five pairs/triad who used this simulation.

- Researcher: Ok. Oh ok. So what do you think was happening?
- Student 1: They were joining together. Or something.
- Student 2: Becoming neutral.
- Student 1: Neutrons.
- Researcher: What was becoming neutral?
- Student 1: The protons.
- Student 2: And the minus ones.
- Student 1: Electrons.

(SMA SJ)

As the transcript shows, these two students forgot that this simulation depicted an acid–base reaction. Instead, they are of the opinion that it represented atomic structure. Their attention was captured by the positive and negative symbols that remained on screen for the duration of the simulation, which they associated with simple protons and electrons rather than representing the charge on the ions. It could be argued that the students in using the phrase ‘becoming neutral’ were implying that they understood this to be an acid–base neutralization reaction. However, their follow-up comments indicate this is not the case; instead, they used the phrase neutral to refer to protons or electrons forming neutral subatomic particles, a completely different concept to that intended by the simulation. The text at the start of the sequence specifically stated that this simulation represented an acid base neutralization reaction. So, while the students confirmed they had read the information drawing attention to the fact that this was a neutralization reaction, they promptly forgot it as is evidenced when they described their interpretation of the reaction.

14.5 Study D: Tracking Student Engagement with Online Simulations

In order to explore the aspects identified in study C further, two of the simulations (titration and metals) were modified to take into account two of the three aspects: (1) distraction (redundant segments) and vividness (items that stand out) and (2) logic and instructions. The third aspect, prior knowledge, I felt was beyond my control in this particular study, though I felt I could collect information about their prior chemistry and ICT experience.

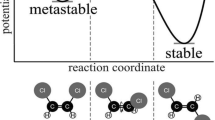

Study D was a collaboration with Eugene Gvodzenko, a mathematics educator. For study D, instead of generating a front-of-screen record of the screen activity, I wanted to develop a behind-the-scenes online tracking system for three versions of the same simulation. The simulations were the creation of Professor Thomas Greenbowe, who very kindly agreed to give us access to the code. I asked Eugene to modify the code to create three versions of each simulation and to allow us to track activity as the user used the simulation. The system Eugene created allowed us to randomly allocate one version of one of the two simulations and to record student activity as the allocated simulation was used. We opted for a behind-the-scenes recording of activity for three reasons. First, we believed that a behind-the-scenes track may be less intrusively gained and may generate more reliable data. Second, in many nations, collecting images of school children is being discouraged, and logging activity through behind-the-scenes tracks instead of filming the screen helped us address this issue. Third, we could make a private Internet URL available to a cohort who may choose not to use the simulation within a classroom, and this would make filming them impractical. Two simulations, a metal reactivity simulation (metals immersed in a metal compound solution and metals immersed in an acid) and an acid–base titration simulation, were used in this study. Three versions of each of the two simulations were created (Fig. 14.1).

So, for example, a user would have to complete the pre-questionnaire before being randomly allocated to either a titration or metal simulation. They would then complete a pre-simulation questionnaire (specific to the chemistry topic for that simulation). They would then be randomly allocated to a version of the simulation. The computer would keep a track of the time they spent on each stage and go on to track the time they spent on particular elements of the simulation while they were using the simulation. When they opted out, they would be asked to complete a post-simulation test before exiting the programme.
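The flow just described (anonymous code, random allocation, time logged per stage) can be sketched as follows. This is an illustration under stated assumptions, not the system built for study D: the class, function and stage names are hypothetical, timestamps are supplied by hand rather than read from a clock, and the two allocation steps (simulation, then version) are collapsed into one call for brevity.

```python
import random
from dataclasses import dataclass, field

# Labels are hypothetical; the chapter specifies two simulations and three versions.
SIMULATIONS = ("titration", "metals")
VERSIONS = (1, 2, 3)


@dataclass
class SessionTrack:
    """Anonymous record of one volunteer's pathway (no personal identifiers)."""
    code: str
    simulation: str
    version: int
    events: list = field(default_factory=list)  # (timestamp in seconds, stage)

    def log(self, timestamp, stage):
        """Record the moment a stage begins."""
        self.events.append((timestamp, stage))

    def time_per_stage(self):
        """Seconds between the start of each stage and the start of the next."""
        totals = {}
        for (start, stage), (end, _next) in zip(self.events, self.events[1:]):
            totals[stage] = totals.get(stage, 0.0) + (end - start)
        return totals


def allocate(code, rng):
    """Randomly pick a simulation and a version for an anonymous code."""
    return SessionTrack(code, rng.choice(SIMULATIONS), rng.choice(VERSIONS))


# Made-up session; the final 'exit' event only closes the preceding stage.
track = allocate("V001", random.Random(0))
track.log(0.0, "pre_questionnaire")
track.log(42.0, "pre_simulation_test")
track.log(90.0, "simulation")
track.log(210.0, "post_simulation_test")
track.log(260.0, "exit")

print(track.simulation, track.version, track.time_per_stage())
```

Passing the random number generator in as an argument keeps the allocation reproducible for checking, while a deployed tracker would presumably seed it differently for each visitor.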

In this chapter, we present findings for the acid–base titration simulation. There were three versions: the original version; version 2, which had a pre-text to encourage students to pay attention to particular aspects; and version 3, which simply altered the position of elements on the screen. The following additional instructions appeared on the web page before version 2 of the titration simulation was loaded:

When you click on the button below you will see a simulation that represents a titration. To make the simulation work you must follow the numbered instructions in sequence. So start with instruction 1, then 2, then 3, etc. Some instructions have tabs. You must place the mouse on the tab and drag it open.

In version 3 of the titration simulation, the instruction for control −3 ‘Select the Acid and Base’ was converted from a ‘pull-out tab’ menu to a fixed-position, open menu. Version 3 also presented the instructions in a horizontal, left-to-right reading sequence.

Our convenience sample of volunteers was drawn from four schools and one tertiary institution in Scotland. We did not collect any data to identify the volunteers on a personal level, but they were automatically anonymously given an individual code, and the different institutions were recognized by the logging system. In this chapter, we only provide findings from those who submitted a questionnaire and went on to interact with a simulation. The volunteers provided their age, gender, science subject (science, chemistry, physics, biology) and class/tertiary level and indicated their previous ICT experience. They completed five multiple-choice chemistry questions before and after the simulation use.

There were 19 volunteers aged from 13 to 15 years (second year of secondary school) and 15 participants aged 16 and over, who were randomly allocated to a titration simulation. There were roughly equal numbers of male and female volunteers (17 females and 16 males) using this simulation. Two volunteers did not supply details about their age or gender.

The actual number randomly allocated to version 1 was very small, so in this chapter, we concentrate on the differences observed in the tracks for volunteers using version 2 and version 3. What we found was that there were notable differences in activity between these two versions.

Our findings show that five first-year science undergraduates used version 2, and only one of them reached step 4 in the simulation. In contrast, 12 of the 16 volunteers who used version 3 followed the designer sequence. The other four chose button 3, but their approach did not follow the sequence. Therefore, all participants using version 3 found instruction 3, regardless of age. Unfortunately, ten of the version 2 volunteers had tracks with chaotic patterns. Furthermore, 10 of the 14 volunteers who were randomly allocated to version 2 had tracks showing that they missed instruction 3, the tab pull-out menu. (This supports the study C findings, which also suggested that students missed instruction 3.) Instruction 3 was fundamental to ensuring progress. In fact, without it the simulation simply could not proceed.

In contrast, all but 3 of the 16 volunteers had track data that showed that they followed the designer-intended sequence when they used version 3 of the simulation. This would suggest that having something as simple as a fixed screen menu makes a difference to progress through a simulation. Furthermore, the tracks for all volunteers who had been randomly allocated to version 3 of the titration show that they found (and used) the instruction 3. In contrast, several volunteers who were allocated to version 2 failed to find the instruction 3 and some of those who did took over 2–3 min to find the instruction 3, despite the fact that version 2 gave advance organizers and specific notice indicating the sequence to follow and the tab menu. Further details for this project can be found in Rodrigues (2011).
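The two measures discussed here, whether a track follows the designer-intended sequence and how long a user takes to find instruction 3, could be computed from logged tracks along the following lines. This is a hedged sketch rather than the analysis actually used: the track format, the function names and the four-step sequence are assumptions made for illustration, and the sample tracks are invented.

```python
# Hypothetical designer-intended order of the numbered instructions.
DESIGNER_SEQUENCE = [1, 2, 3, 4]


def follows_designer_sequence(clicks, sequence=DESIGNER_SEQUENCE):
    """True if the order in which controls were FIRST used matches the
    start of the designer sequence (repeat uses are ignored)."""
    first_uses = []
    for _timestamp, control in clicks:
        if control not in first_uses:
            first_uses.append(control)
    return first_uses == list(sequence[:len(first_uses)])


def time_to_first_use(clicks, control):
    """Seconds from the first logged event to the first use of a control,
    or None if the control was never used."""
    if not clicks:
        return None
    start = clicks[0][0]
    for timestamp, used in clicks:
        if used == control:
            return timestamp - start
    return None


# Invented tracks as (timestamp in seconds, control number) pairs.
track_a = [(0.0, 1), (5.0, 2), (12.0, 3), (20.0, 4)]   # in sequence
track_b = [(0.0, 1), (4.0, 4), (9.0, 2), (150.0, 3)]   # finds 3 late, out of order
track_c = [(0.0, 1), (3.0, 2), (8.0, 4), (11.0, 2)]    # never finds 3

for track in (track_a, track_b, track_c):
    print(follows_designer_sequence(track), time_to_first_use(track, 3))
```

Judging the sequence on first uses only is one possible definition; a stricter rule that also penalizes returning to earlier controls would classify more tracks as off-sequence.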

14.6 Discussion

All four studies (A, B, C and D) highlight the need to consider how the simulation product appears to students. Obviously, making it a pleasurable and enjoyable experience would more than likely make it more engaging. The level, type, quality and quantity of accessible feedback will influence the scope of the product in supporting interaction, and so these aspects of feedback need careful consideration when designing simulations. As the findings for students involved in study B showed, many students cited ‘video and text’ as the most helpful presentation type to understand the science concepts. Given the ordinariness of the video footage, it was notable that these 11- to 13-year-old students preferred viewing video clips rather than animations. This may have been due to what some students reported as the difficulty they faced in attempting to read the screen information and observe the screen action at the same time. The ordinariness of the video footage may have provided an opportunity for them to simply focus on reading the text. Indeed, students’ aversion to ‘risk taking’ was also seen in the fact that students navigated a safe and repeatable route when they used the periodic table in study A. The students in study A and study B did not appear to have a favourable disposition towards inquiry. In our future research, we hope to explore whether students in formal learning environments balance or consider engaging and motivating digital software against the need to access information to support their learning. Students’ behaviour in the periodic table project (study A) suggests the students opted for engagement (often more interested in their score on the quiz than in what they learnt about the periodic table by doing the quiz). In contrast, students’ behaviour in the physical change project (study B) suggests the students opted for information gathering, preferring to view water boiling or ice melting rather than watching animated atoms and molecules when reading text about the process.

The appearance of material also has a vital part to play in terms of engagement and progress. All four studies showed that students were keen to make progress through the simulation. However, studies C and D showed that vivid items caught the eye and tended to result in students failing to see the designer-intended instruction sequence. In addition, studies C and D in particular highlighted the impact of inattentional blindness.

The most famous example of inattentional blindness is the Chabris and Simons (2010) gorilla and basketball team study (the study has been replicated and is easily accessed via YouTube video clips). In the original study, observers were asked to view a digital recording and count the number of ball passes made by one of the teams. During the recording, a participant wearing a gorilla suit saunters through the basketball teams. The study showed that a significant number of people viewing the recording failed to see the gorilla. While our projects were not so dramatic, our findings are similar. In studies C and D, our findings showed that users engaging with these attention-demanding simulation tasks failed to notice what may have been considered obvious by the software designers.

In addition, our findings suggest that some simulation users demonstrate selective amnesia. In study D, the volunteers who were randomly allocated to version 2 of the titration software received hints about following the sequence and accessing the tab menu. Yet many simply forgot to heed this instruction. In study C, students clearly stated that they read specific information that appeared on the screen regarding what the simulation was depicting (a neutralization reaction). And yet when they were asked to describe what the simulation was depicting, many failed to relate the movement of the spheres to neutralization and instead identified the simulation as an atomic-level depiction (protons, neutrons and electrons). This may have been compounded by the fact that the simulation depicted the spheres with charges, which remained on screen for the duration of the simulation. This finding also suggests that vividness is important. The users noticed the charges just as users of the titration simulation noticed the icons or symbols that appeared in red, as indicated in study C.

Much of the e-learning rhetoric in chemistry education has for many years alluded to notions of learner control, proactive learning or increased student engagement and motivation. There have been discussions on how e-learning tools have the potential to promote learning. Further, we have seen literature signalling the role and influence of e-learning design functions, such as the intuitive signals provided by icons or the scope for user initiative and self-pace through user-friendly navigation. Perhaps what we also now need to consider in more detail is the fundamental design of interactive, simulation-based, learning systems that are intended for use in chemistry classrooms. There is literature reporting on issues designers need to consider. For example, Barker (2008) suggests that at present there are ten factors that designers are encouraged to consider. These factors include (1) learning theory mix, (2) instructional position mix, (3) machine character mix, (4) environmental factors, (5) mode of use, (6) locus of control, (7) extent of intervention, (8) aesthetic features, (9) content and (10) the role of technology. Barker (2008) acknowledges that his list of factors is fairly general and applies to the development of interactive learning resources in a fairly generic way.

The findings from research presented in this chapter are an attempt to provide more detail with regard to the factors that need to be considered when developing interactive resources for chemistry education.

14.7 Conclusion

While I acknowledge that not all learning can be engineered, and some learning is often serendipitous, I think it is important that we ensure that the learning environments that are deployed in order to help engineer learning (in our case, learning chemistry) do not inadvertently defeat the object of the exercise. The findings from the first-phase project suggested that e-assessment involving the use of multimedia or iconic or symbolic representation in chemistry education will have to take great care if it is to ensure that what it is assessing is the students’ chemistry capability and not their (lack of) information processing skills that rely on shared symbol identification or on the ability to follow the designers’ logic of instructions.

Our research findings suggest that designers developing materials for use in chemistry education should ensure a balance that addresses the following four aspects:

1. Use words/symbols and images, but in doing so ensure that the design does not support inattentional blindness or build a subconscious value system into the words/symbols by keeping some of them on screen for longer durations or permanently. The research findings showed, for example, that text informing students about the nature of the simulation (neutralization) was soon forgotten or missed when the students engaged with a simulation that kept charge symbols on screen for its duration, which led them to believe the simulation represented atomic structure.

2. Ensure that images and narrative are close in timing or location so as to minimize selective amnesia. The research findings showed that some users who, before they commenced using the simulation, were given instructions directing them to follow particular sequences failed to take heed of the instruction, and the majority were therefore unable to complete a titration simulation.

3. Present oral rather than on-screen text when depicting unfamiliar symbolic content. Research findings showed that students did not pursue simulations they perceived to be of interest when they were unable to digest the written information that accompanied screen action. Instead, they reverted to viewing screens with familiar macroscopic events, which allowed them to read the text confident that they would not miss any screen action.

4. Consider the aesthetics but also consider the value system afforded to elements within the design that draw attention due to their size, colour or location. Research findings showed that vivid items (prominent red buttons) and location (tab menus) affected progress when using an acid and base titration simulation.

Our findings and the literature in general suggest that cognitive load may be a problem in classrooms, but that in recreational computer use (mainly games) cognitive load is not an issue. This conflict of views may be due to students’ beliefs, their assessment of the purpose of the task and the resulting mindful or mindless engagement. Their perception of the type of goal set for them and their perception of the purpose of the task in the e-science classroom may lead them to focus on performance goals (as was seen in our studies A and B). In some of the literature relating to the area of prior knowledge, there is a suggestion that students have to use higher-order information processing skills in science classrooms where ICT is used (as was seen in our studies C and D). However, given that reports (see, e.g. Cuban 2001) continue to signal that practice involving the use of various technologies has not changed significantly, the development of digital literacy in science education environments is most likely incidental. Digital literacy needs to be taught more explicitly in science classrooms if the available technology is to do more than simply provide increased amounts of better-presented data in chemistry classrooms. The student must make connections between coming to know and understand (cognition), make decisions about how they feel about the task (affect) and decide how to translate cognition and affect into intentional behaviour (conation). In a science lesson, these elements may not be triggered or used automatically by simply immersing the student in an e-learning environment. So, while multimedia technology has the potential to support learning in chemistry classrooms, that potential is only likely to be realized if classroom practice, expectation and behaviour change.

References

Azevedo, R. (2004). Using hypermedia as a metacognitive tool for enhancing student learning? The role of self-regulated learning. Educational Psychologist, 40(4), 199–209.

Barker, P. (2008). Re-evaluating a model of learning design. Innovations in Education and Teaching International, 45(2), 127–141.

Barton, R. (2002). Teaching secondary science with ICT. Cambridge: Open University Press.

Barton, R. (2004). Teaching secondary science with ICT. Cambridge: Open University Press.

Chabris, C., & Simons, D. (2010). The Invisible Gorilla: And other ways our intuitions deceive us. New York: Crown Publishers.

Chandler, P., & Sweller, J. (1991). Cognitive load theory and the format of instruction. Cognition & Instruction, 8(4), 293–332.

Chandler, P., & Sweller, J. (1992). The split-attention effect as a factor in the design of instruction. British Journal of Educational Psychology, 62(2), 233–246.

Clarke, R., & Mayer, R. (2003). E-Learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. California: Pfeiffer.

Cuban, L. (2001). Oversold and underused: Computers in schools 1980–2000. Cambridge, MA: Harvard University Press.

Deaney, R., Ruthven, K., & Hennessy, S. (2003). Pupil perspectives on the contribution of information and communication technology to teaching and learning in the secondary school. Research Papers in Education, 18(2), 141–165.

Eilks, I., Witteck, T., & Pietzner, V. (2010). Using multimedia learning aids from the Internet for teaching chemistry – Not as easy as it seems? In S. Rodrigues (Ed.), Multiple literacy and science education: ICTS in formal and informal learning environments (pp. 49–69). Hershey: IGI Global.

Ginns, P. (2005). Meta-analysis of the modality effect. Learning and Instruction, 15, 313–331.

Hill, D. (1988). Misleading illustrations. Research in Science Education, 18(1), 290–297.

Hutchby, I., & Wooffitt, R. (1998). Conversation analysis: Principles, practices and applications. Cambridge: Polity Press.

Kirriemuir, J., & McFarlane, A. (2004). Report 8: Literature review in games learning. A report for NESTA Futurelab. http://www.nestafuturelab.org/research/reviews/08_01.htm

Koeber, C. (2005). Introducing multimedia presentations and a course website to an introductory sociology course: How technology affects student perceptions of teaching effectiveness. Teaching Sociology, 33(3), 285–300.

Mayer, R. E., Sobko, K., & Mautone, P. D. (2003). Social cues in multimedia learning: Role of speaker’s voice. Journal of Educational Psychology, 95, 419–425.

Moreno, R. (2006). Does the modality principle hold for different media? A test of the methods-affects-learning hypothesis. Journal of Computer Assisted Learning, 22, 149–158.

Ng, W. (2010). Empowering students to be scientifically literate through digital literacy. In S. Rodrigues (Ed.), Multiple literacy and science education: ICTS in formal and informal learning environments (pp. 11–31). Hershey: IGI Global.

Paivio, A. (2006). Mind and its evolution; a dual coding theoretical interpretation. Mahwah: Lawrence Erlbaum Associates, Inc.

Pizzighello, S., & Bressan, P. (2008). Auditory attention causes visual inattentional blindness. Perception, 37(6), 859–866.

Ploetzner, R., Bodemer, D., & Neudert, S. (2008). Successful and less successful use of dynamic visualizations. In R. Lowe & W. Schnotz (Eds.), Learning with animation – Research implications for design (pp. 71–91). New York: Cambridge University Press.

Rodrigues, S. (2000). The interpretive zone between software designers and a science educator: grounding instructional multimedia design in learning theory. Journal of Research on Computing in Education, 33, 1–15.

Rodrigues, S. (2003). Conditioned pupil disposition, autonomy, and effective use of ICT in science classrooms. Kappa Delta Pi: The Educational Forum, 67(3), 266–275.

Rodrigues, S. (2007). Factors that influence pupil engagement with science simulations: The role of distraction, vividness, logic, instruction and prior knowledge. Chemistry Education Research and Practice, 8, 1–12.

Rodrigues, S. (Ed.). (2010). Multiple literacy and science education: ICTS in formal and informal learning environments. Hershey: IGI Global.

Rodrigues, S. (2011). Using chemistry simulations: Attention capture, selective amnesia and inattentional blindness. Chemistry Education Research and Practice, 12(1), 40–46.

Rodrigues, S., Smith, A., & Ainley, M. (2001). Video clips and animation in chemistry CD-ROMS: Student interest and preference. Australian Science Teachers Journal, 47, 9–16.

Rogers, L., & Finlayson, H. (2003). Does ICT in science really work in the classroom? Part 1, the individual teacher experience. School Science Review, 84(309), 105–111.

Sanchez, E., & Garcia-Rodicio, H. (2008). The use of modality in the design of verbal aids in computer based learning environments. Interacting with Computers, 20, 545–561.

Schnotz, W., & Bannert, M. (2003). Construction and interference in learning from multiple representations. Learning and Instruction, 13(2), 117–123.

Schwartz, N., Andersen, C., Hong, N., Howard, B., & McGee, S. (2004). The influence of metacognitive skills on learners’ memory of information in a hypermedia environment. Journal of Educational Computing Research, 31(1), 77–93.

Segall, A. (2004). Revisiting pedagogical content knowledge: The pedagogy of content/the content of pedagogy. Teaching and Teacher Education, 20(5), 489–504.

Testa, I., Monroy, G., & Sassi, E. (2002). Students’ reading images in kinematics: The case of real-time graphs. International Journal of Science Education, 24, 235–256.

Trindade, J., Fiolhais, C., & Almeida, L. (2002). Science learning in virtual environments: A descriptive study. British Journal of Educational Technology, 33(4), 471–488.

Wardle, J. (2004). Handling and interpreting data in school science. In R. Barton (Ed.), Teaching secondary science with ICT (pp. 107–126). Cambridge: Open University Press.

© 2012 Springer Science+Business Media B.V.

Cite this chapter

Rodrigues, S. (2012). Using Simulations in Science: An Exploration of Pupil Behaviour. In: Tan, K., Kim, M. (eds) Issues and Challenges in Science Education Research. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-3980-2_14

DOI: https://doi.org/10.1007/978-94-007-3980-2_14

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-3979-6

Online ISBN: 978-94-007-3980-2

eBook Packages: Humanities, Social Sciences and Law; Education (R0)