Abstract

This work presents a new method for invariant object representation based on the Inverse Pyramidal Decomposition (IPD) and the modified Mellin-Fourier Transform (MFT). The resulting object representation is invariant to 2D rotation, scaling, and translation (RST), and is additionally made invariant to significant contrast and illumination changes. The method is aimed at content-based object retrieval in large databases. Experiments with a software implementation of the method confirmed its efficiency. The method is suitable for various applications, such as detection of child sexual abuse in multimedia files, and search of handwritten and printed documents or of 3D objects represented by multi-view 2D images.


Introduction

One of the most important tasks that the creators of contemporary computer vision systems have to solve is object description that ensures exact and reliable classification. An object description should satisfy contradictory requirements [1, 2]: invariance to object rotation, translation, and scaling, to lighting (respectively, contrast) changes, and to noise in the image; low intra-class and high inter-class dispersion; and maximum compactness of the description together with low computational complexity of the operations performed.

Object descriptions can be divided into two basic groups: local and global. The first group represents the local features of the objects, related to the structure of the visible part of their surface (salient points, contours, edges, texture, etc.) [3, 4]. These descriptions are not sufficiently robust to noise and have high dimensionality and computational complexity. The second group comprises characteristics that represent the general properties of the visible surface, related, for example, to the object's geometrical parameters (invariant moments), coefficients of the Fourier decomposition of the contour lines, global brightness histograms, coefficients of the generalized Hough transform, coefficients of the Mellin-Fourier transform, etc. [5–8]. The advantages of these descriptions are their insensitivity to noise and their high compactness, but their computational complexity is relatively high.

The basic methods for invariant object representation with respect to 2D rigid transforms (combinations of rotation, scaling, and translation, RST) are described in a significant number of scientific publications. Accordingly, 2D objects in a still grayscale image are depicted by descriptors of two basic kinds: “shape boundary” and “region”. The first kind (shape boundary) includes chain codes, Fourier descriptors, the Generalized Hough Transform, and the Active Shape Model [7]. The skeleton of a shape can be derived by the Medial Axis Transform. The second kind (region) includes geometric characteristics such as area, perimeter, compactness, dispersion, and eccentricity; zero- and first-order statistical moments; centre of gravity; normalized central moments; the seven rotation-invariant moments; rotation- and scale-invariant Zernike polynomials; affine-transform invariants with respect to position, rotation, and different scales along the coordinate axes; co-occurrence texture descriptors, etc.

The histogram descriptor has proved to be robust to changes of the object's rotation and scale and to partial changes in the viewing direction. The structural information, however, is lost in the histogram. To solve this problem, the combination of the Discrete Wavelet Transform (DWT) or the Discrete Fourier Transform (DFT) with the feature extraction method has been proposed. For the extraction of rotation-scale-translation (RST) invariant features, descriptors have been developed based on the log-polar transform (LPT), used to convert rotation and scaling into translation [8], and on the 2D Mellin-Fourier Transform (2D-MFT) [9]. As is known, the moduli of the spectrum coefficients obtained using the 2D-MFT are invariant with respect to RST transforms of the 2D objects in the image. The basic problem in creating RST-invariant descriptors in this case is the large number of spectrum coefficients that have to be calculated [10, 11]. To reduce their number, and respectively the calculation time, without decreasing the accuracy of the object description, a number of problems must be solved regarding the choice of the most informative MFT coefficients and the way the corresponding vector descriptor is built.

This work offers a new algorithm for global description of pre-segmented objects in a halftone image. It differs from the known algorithms in the same group in that it requires fewer computations than the well-known MFT and permits multi-layer access, which enhances search by content in indexed image databases. The new algorithm combines the inverse pyramid decomposition [12] with the truncated modified discrete MFT [13, 14].

This work is arranged as follows: section “Invariant Object Representation with Pyramid of Coefficients Based on the Truncated Modified Mellin-Fourier Transform” describes the method for invariant object representation based on a pyramid of coefficients calculated using the truncated Mellin-Fourier transform; section “Search by Content of Closest Objects in Image Databases” gives the new approach for search by content of the closest object in an image database; section “Fast Search of Closest Vector in the Image Database” presents the fast search of the closest vector in the image database; section “Experimental Results” shows some experimental results; and section “Conclusions” gives the conclusions.

Invariant Object Representation with Pyramid of Coefficients Based on the Truncated Modified Mellin-Fourier Transform

The algorithm for 2D object representation is aimed at the preparation of the vector description of the segmented object, framed by a square window.

The description should be invariant to 2D rotation (R), scaling (S), translation (T), and contrast (C) changes. The RSTC description is based on the discrete 2D Modified MFT (2D-MMFT). As is known, the MFT comprises a DFT, a log-polar transform (LPT), and a second DFT. In order to provide multi-layer search by content in the image database, the 2D-MMFT coefficients are arranged in a pyramid, called the Inverse Pyramid Decomposition (IPD).

The algorithm, presented below, is aimed at digital halftone images, and comprises the following stages:

For the initial (lowest) IPD level:

- Step 1.:

The pixels B(k,l) of the original halftone image of size M × N are transformed into bipolar form:

$$\displaystyle{ L(k,l) = B(k,l) - (B_{\max } + 1)/2 }$$ (4.1)

for \(k = 0,1,\ldots,M - 1\) and \(l = 0,1,\ldots,N - 1\), where \(B_{\max } = 255\) is the maximum value in the pixel quantization scale.
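As an illustration of Eq. 4.1, the following minimal sketch shifts an 8-bit image to the bipolar range. The function name `to_bipolar` and the nested-list image layout are assumptions made for this example only.

```python
B_MAX = 255  # maximum value of the 8-bit quantization scale


def to_bipolar(image):
    """Apply Eq. 4.1, L(k,l) = B(k,l) - (B_max + 1)/2, to every pixel."""
    offset = (B_MAX + 1) / 2  # 128 for 8-bit images
    return [[pixel - offset for pixel in row] for row in image]


example = [[0, 128], [255, 64]]
bipolar = to_bipolar(example)
```

For 8-bit data the result lies in the range from -128 to 127.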

- Step 2.:

The image is processed with the 2D Discrete Fourier Transform (2D-DFT). The Fourier matrix is of size n × n (\(n = 2^{m}\)). The value of n defines the size of the window used to select the object image. The invariant object representation uses the complex 2D-DFT coefficients, calculated in accordance with the relation:

$$\displaystyle{ F(a,b) =\sum \limits _{k=0}^{n-1}\sum \limits _{l=0}^{n-1}L(k,l)\exp \{ -j[(2\pi /n)(ka + lb)]\} }$$ (4.2)

for \(a = 0,1,\ldots,n - 1\) and \(b = 0,1,\ldots,n - 1\).

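The transform comprises two consecutive operations: a one-dimensional transform of the pixels L(k,l), first for the rows and after that for the columns of the object image. Since the exponential kernel of the 2D-DFT factorizes as

$$\displaystyle{ \exp \{ -j[(2\pi /n)(ka + lb)]\} =\exp \{ -j[2\pi (ka/n)]\} \exp \{ -j[2\pi (lb/n)]\}, }$$

the 2D-DFT is performed as two consecutive one-dimensional DFTs: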

- For each fixed value of \(k = 0,1,\ldots,n - 1\), the intermediate spectrum coefficients are calculated using the 1D Fast Fourier Transform (1D-FFT):

$$\displaystyle{ \begin{array}{l} F(k,b) =\sum \limits _{l=0}^{n-1}L(k,l)\exp \{ - j[2\pi (lb/n)]\} = \\ =\sum \limits _{l=0}^{n-1}L(k,l)\cos [2\pi (lb/n)] - j\sum \limits _{l=0}^{n-1}L(k,l)\sin [2\pi (lb/n)]\end{array} }$$ (4.3)

- For \(b = 0,1,\ldots,n - 1\), the final Fourier coefficients are calculated using the 1D-FFT again:

$$\displaystyle{ \begin{array}{l} F(a,b) =\sum \limits _{k=0}^{n-1}F(k,b)\exp \{ - j[2\pi (ka/n)]\} =\sum \limits _{k=0}^{n-1}F(k,b)\cos [2\pi (ka/n)]- \\ -j\sum \limits _{k=0}^{n-1}F(k,b)\sin [2\pi (ka/n)] = A_{F}(a,b) - jB_{F}(a,b)\end{array} }$$ (4.4)

where \(A_{F}(a,b)\) and \(B_{F}(a,b)\) are the real and the imaginary components of F(a,b), respectively.
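The row-column scheme of Eqs. 4.3 and 4.4 can be checked numerically against the double sum of Eq. 4.2. The sketch below uses a direct O(n²) 1D DFT in place of an FFT to stay self-contained; the function names and the 4 × 4 test matrix are illustrative assumptions.

```python
import cmath


def dft_1d(seq):
    """Direct 1D DFT (O(n^2)); an FFT would be used in practice."""
    n = len(seq)
    return [sum(seq[t] * cmath.exp(-2j * cmath.pi * t * f / n) for t in range(n))
            for f in range(n)]


def dft_2d_separable(L):
    """2D DFT as 1D DFTs over the rows (Eq. 4.3), then over the columns (Eq. 4.4)."""
    n = len(L)
    rows = [dft_1d(row) for row in L]                                  # rows[k][b] = F(k, b)
    cols = [dft_1d([rows[k][b] for k in range(n)]) for b in range(n)]  # cols[b][a] = F(a, b)
    return [[cols[b][a] for b in range(n)] for a in range(n)]


def dft_2d_direct(L):
    """2D DFT computed directly from the double sum of Eq. 4.2."""
    n = len(L)
    return [[sum(L[k][l] * cmath.exp(-2j * cmath.pi * (k * a + l * b) / n)
                 for k in range(n) for l in range(n))
             for b in range(n)] for a in range(n)]


L = [[1.0, 2.0, 0.0, -1.0],
     [0.5, -2.0, 3.0, 1.0],
     [4.0, 0.0, -1.5, 2.0],
     [1.0, 1.0, 1.0, -3.0]]
F_sep = dft_2d_separable(L)
F_dir = dft_2d_direct(L)
max_diff = max(abs(F_sep[a][b] - F_dir[a][b]) for a in range(4) for b in range(4))
```

Both routes give the same coefficients up to rounding, while the separable form needs 2n one-dimensional transforms instead of an n²-term sum per coefficient.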

- Step 3.:

The Fourier coefficients are then centered in accordance with the relation:

$$\displaystyle{ F_{0}(a,b) = F\left (a -\tfrac{n} {2},\,b -\tfrac{n} {2} \right )\ \mathrm{for}\ a,b = 0,1,\ldots,n - 1. }$$ (4.5)

- Step 4.:

For the next operations some of the Fourier coefficients are retained in accordance with the rule:

$$\displaystyle{ F_{0R}(a,b) = \left \{\begin{array}{ll} F_{0}(a,b), & \mathrm{if}\ (a,b) \in \mathrm{retained\ region;} \\ 0, & \mathrm{in\ all\ other\ cases.}\end{array} \right. }$$ (4.6)

The area of the retained coefficients is a square with side H ≤ n (H is an even number), which envelops the centre (0,0) of the spectrum plane. For H < n and \(a,b = -(H/2),\,-(H/2) + 1,\ldots,-1,0,1,\ldots,(H/2) - 1\), this square contains low-frequency coefficients only.
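Steps 3 and 4 can be sketched as follows, assuming that the index shift of Eq. 4.5 is taken modulo n (the spectrum is periodic) and that the retained region is the H × H square around the shifted centre. The function names and the stand-in values are assumptions for illustration.

```python
def center_spectrum(F):
    """Eq. 4.5 with periodic indexing: F0(a,b) = F(a - n/2, b - n/2)."""
    n = len(F)
    h = n // 2
    return [[F[(a - h) % n][(b - h) % n] for b in range(n)] for a in range(n)]


def retain_low_frequencies(F0, H):
    """Eq. 4.6: keep the H x H square around the spectrum centre, zero the rest."""
    n = len(F0)
    lo, hi = n // 2 - H // 2, n // 2 + H // 2  # hi is exclusive
    return [[F0[a][b] if lo <= a < hi and lo <= b < hi else 0
             for b in range(n)] for a in range(n)]


n, H = 8, 4
F = [[10 * a + b + 1 for b in range(n)] for a in range(n)]  # stand-in values, F[0][0] is the DC term
F0 = center_spectrum(F)
F0R = retain_low_frequencies(F0, H)
kept = sum(1 for row in F0R for value in row if value != 0)
```

After centering, the DC term sits at index (n/2, n/2), and exactly H² coefficients survive the truncation.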

- Step 5.:

The moduli and phases of the coefficients \(F_{0R}(a,b) = D_{F_{0R}}(a,b)\,e^{-j\varphi _{F_{0R}}(a,b)}\) are calculated:

$$\displaystyle{ D_{F_{0R}}(a,b) = \sqrt{{[A_{F_{0R}}(a,b)]}^{2} + {[B_{F_{0R}}(a,b)]}^{2}} }$$ (4.7)

$$\displaystyle{ \varphi _{F_{0R}}(a,b) = \mathrm{arctg}[B_{F_{0R}}(a,b)/A_{F_{0R}}(a,b)] }$$ (4.8)

- Step 6.:

The moduli \(D_{F_{0R}}(a,b)\) of the Fourier coefficients \(F_{0R}(a,b)\) are normalized in accordance with the relation:

$$\displaystyle{ D(a,b) = p\,\ln D_{F_{0R}}(a,b) }$$ (4.9)

where p = 64 is the normalization coefficient.
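A minimal sketch of Eqs. 4.7–4.9 for a single coefficient, together with the inverse normalization of Eq. 4.23, is given below. It follows the sign convention F = A − jB of Eq. 4.4. Two choices are assumptions of this example and not part of the method as stated: `math.atan2` is used instead of a plain arctangent so that the quadrant is preserved, and a small floor guards against the logarithm of a zero modulus.

```python
import math

P = 64  # normalization coefficient p from Eq. 4.9


def modulus_and_phase(coeff):
    """Eqs. 4.7-4.8 for a coefficient written as A - jB."""
    A, B = coeff.real, -coeff.imag
    return math.hypot(A, B), math.atan2(B, A)


def log_normalize(modulus, floor=1e-12):
    """Eq. 4.9: D = p ln(D_F). The floor that avoids ln(0) is an assumption."""
    return P * math.log(max(modulus, floor))


def log_denormalize(D):
    """Eq. 4.23: inverse of the logarithmic normalization."""
    return math.exp(D / P)


coeff = complex(3.0, -4.0)  # A = 3, B = 4
mod, phase = modulus_and_phase(coeff)
D = log_normalize(mod)
# Rebuild the coefficient as D_F * exp(-j * phi), as in Eq. 4.24.
restored = log_denormalize(D) * complex(math.cos(phase), -math.sin(phase))
```

The round trip recovers the original coefficient, which is what the inverse transform relies on when the approximation of the image is built.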

- Step 7.:

The coefficients D(a,b) are processed with the Log-Polar Transform (LPT). The centre (0,0) of the polar coordinate system (ρ, θ) coincides with the centre of the image of the moduli D(a,b) of the Fourier coefficients (in the rectangular coordinate system). The transformation of the coefficients D(a,b) from the rectangular (a,b) into the polar (ρ, θ) coordinate system is performed by changing the variables in accordance with the relations:

$$\displaystyle{ \rho =\log \sqrt{{a}^{2} + {b}^{2}},\;\;\theta =\mathrm{arctg}(b/a) }$$ (4.10)

The coordinate change from rectangular into polar is straightforward in the continuous domain, but in the discrete domain the values of ρ and θ must be discrete as well.

Since a and b can only take discrete values in the range \(a,b = -(H/2),\ldots,-1,0,1,\ldots,(H/2) - 1\), some of the coefficients D(ρ, θ) will be missing. At the end of the transform, the missing coefficients \(D(\rho _{i},\theta _{i})\) are interpolated using the closest neighbors D(a,b) in the rectangular coordinate system (a,b) in the horizontal or vertical direction (zero-order interpolation).

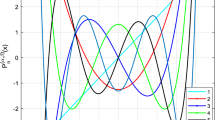

The number of discrete circles in the polar system with radius \(\rho _{i}\) is equal to the number of discrete angles \(\theta _{i}\), for i = 1, 2, …, H. The side H (in rectangular coordinates) of the square inscribed in the LPT matrix is calculated so as to ensure that the maximum part of the coefficients is transferred without change. For this, the LP transform is modified in accordance with Fig. 4.1, calculating the radius of the circumscribed circle in correspondence with the relation:

The smallest step Δρ between two concentric circles (the innermost ones) is calculated as follows:

Here H is the side of the square that defines the retained coefficients' area. As a result, for the discrete radius \(\rho _{i}\) and angle \(\theta _{i}\) of each circle the following relations are obtained:

Thus, instead of the logarithmic relation used in the well-known LP transform to set the values of the magnitude bins (radii), Step 7 here uses the operation “raising to a power”. We call the LP transform modified in this way the Exponential Polar Transform (EPT).

After the EPT and the interpolation of the D(a,b) coefficients, a new, second matrix is obtained, which contains the coefficients D(x,y) for \(x,y = 0,1,2,\ldots,H - 1\).
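The polar resampling can be illustrated with a minimal nearest-neighbour sketch of the classical log-polar mapping of Eq. 4.10. It is not the EPT itself: the radius spacing below is logarithmic, and the grid sizes `n_rho` and `n_theta`, the maximum radius H/2 − 1, and the function name are all assumptions made for this example.

```python
import math


def log_polar_sample(D, n_rho, n_theta):
    """Resample a centred H x H matrix on a log-polar grid (n_rho >= 2).

    Nearest-neighbour lookup stands in for zero-order interpolation.
    """
    H = len(D)
    c = H // 2                     # array index of the centre (0,0)
    rho_max = math.log(H / 2 - 1)  # keeps every sample inside the square
    out = []
    for i in range(n_rho):
        r = math.exp(rho_max * i / (n_rho - 1))  # radius grows from 1 to H/2 - 1
        row = []
        for j in range(n_theta):
            theta = 2 * math.pi * j / n_theta
            a = round(r * math.cos(theta))  # nearest rectangular coordinates
            b = round(r * math.sin(theta))
            row.append(D[c + a][c + b])
        out.append(row)
    return out


# Each cell stores its own centred coordinates, so the lookup is easy to inspect.
D = [[(x - 8, y - 8) for y in range(16)] for x in range(16)]
out = log_polar_sample(D, n_rho=4, n_theta=4)
```

In this layout each row of `out` corresponds to one circle and each column to one angle, so a rotation of the input moves values along the columns.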

- Step 8.:

The second 2D-DFT is performed for the matrix with coefficients D(x,y), in accordance with the relation:

$$\displaystyle{ S(a,b) = \frac{1} {{H}^{2}}\sum \limits _{x=0}^{H-1}\sum \limits _{y=0}^{H-1}D(x,y)\exp \{ -j[(2\pi /H)(xa + yb)]\} }$$ (4.15)

for \(a = 0,\ldots,H - 1\) and \(b = 0,\ldots,H - 1\).

The second 2D-DFT is performed in correspondence with Eqs. 4.3 and 4.4, applying the 1D-DFT first on the rows of the matrix [D] and then on the columns of the intermediate matrix obtained.

- Step 9.:

The moduli of the complex coefficients S(a,b) are then calculated:

$$\displaystyle{ D_{S}(a,b) = \sqrt{{[A_{S}(a,b)]}^{2} + {[B_{S}(a,b)]}^{2}} }$$ (4.16)

where \(A_{S}(a,b)\) and \(B_{S}(a,b)\) are the real and the imaginary components of S(a,b), respectively.

With this operation the Modified MFT is finished.

The processing then continues in the next step with one more operation, aimed at achieving invariance to contrast changes. The result is the RSTC-invariant object representation.
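The role of the modulus in Step 9 rests on a standard property of the DFT: a cyclic shift of the input changes only the phases of the coefficients. After the polar mapping, a rotation of the object appears as a cyclic shift along the angular axis, while scaling gives a shift along the radial axis that is only approximately cyclic. A one-dimensional check with an arbitrary test signal (the names and values are illustrative assumptions):

```python
import cmath


def dft_modulus(seq):
    """Moduli of the direct 1D DFT of a sequence."""
    n = len(seq)
    return [abs(sum(seq[t] * cmath.exp(-2j * cmath.pi * t * f / n) for t in range(n)))
            for f in range(n)]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, 0.0, -1.0, 0.5]
shifted = signal[3:] + signal[:3]  # cyclic shift by three samples
max_diff = max(abs(x - y) for x, y in zip(dft_modulus(signal), dft_modulus(shifted)))
```

The two modulus spectra agree up to rounding error.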

- Step 10.:

The moduli \(D_{S}(a,b)\) of the Fourier coefficients S(a,b) are normalized:

$$\displaystyle{ D_{S_{0}}(a,b) = B_{\max }\,(D_{S}(a,b)/D_{S\max }(a,b)), }$$ (4.17)

where \(D_{S\max }(a,b)\) is the maximum coefficient in the matrix \([D_{S}(a,b)]\).
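The normalization of Eq. 4.17 removes any common multiplicative factor from the moduli, which the following sketch checks on a small matrix. The function name and the sample values are assumptions, and the code presumes that the maximum modulus is non-zero.

```python
B_MAX = 255


def normalize_to_bmax(D_S):
    """Eq. 4.17: scale the moduli so that the largest one equals B_max."""
    d_max = max(max(row) for row in D_S)  # assumed non-zero
    return [[B_MAX * value / d_max for value in row] for row in D_S]


D_S = [[4.0, 1.0], [2.0, 0.5]]
scaled = [[3.0 * value for value in row] for row in D_S]  # same spectrum, different gain
N1 = normalize_to_bmax(D_S)
N2 = normalize_to_bmax(scaled)
max_diff = max(abs(x - y) for r1, r2 in zip(N1, N2) for x, y in zip(r1, r2))
```

Both inputs lead to the same normalized matrix, with the largest entry equal to 255.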

- Step 11.:

The vector for the RSTC-invariant object representation is built from the coefficients \(D_{S_{0}}(a,b)\) of highest energy in the 2D-MFT amplitude spectrum of size H × H. An example test image (from the Caltech “Faces” database [15]) and its corresponding 2D-MFT spectrum, obtained with the software implementation of the method, are shown in Fig. 4.2a and b, respectively. The retained coefficients are extracted using the mask shown in Fig. 4.2c (the part colored in yellow corresponds to the area of the complex-conjugate coefficients). The shape of the mask approximates the area where the energy of the mean 2D-MFT spectrum is concentrated.

The parameter α of the mask defines the number of retained coefficients in correspondence with the relation:

The components \(v_{0m}\) for m = 1, 2, …, R of the corresponding RSTC-invariant vector \(\mathbf{V }_{0} = {[v_{01},v_{02},\ldots,v_{0R}]}^{T}\) are defined by the coefficients \(D_{S_{0R}}(a,b)\), arranged as a one-dimensional array after lexicographic scanning of the 2D-MFT spectrum in the mask area, colored in blue.
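The lexicographic scan of the masked spectrum can be sketched as below. The 3 × 3 matrix and the Boolean mask are hypothetical and much smaller than the real H × H spectrum; the actual mask shape is the one defined by the parameter α and is not reproduced here.

```python
def extract_vector(D_S0, mask):
    """Scan the matrix row by row (lexicographic order) and keep the masked entries."""
    return [D_S0[a][b]
            for a in range(len(D_S0))
            for b in range(len(D_S0[a]))
            if mask[a][b]]


D_S0 = [[255.0, 90.0, 12.0],
        [80.0, 40.0, 5.0],
        [10.0, 4.0, 1.0]]
mask = [[True, True, False],   # hypothetical mask marking the high-energy area
        [True, True, False],
        [False, False, False]]
V0 = extract_vector(D_S0, mask)
```

The length of the resulting vector equals the number of cells selected by the mask.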

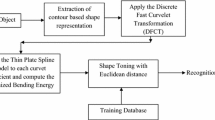

The vector \(\mathbf{V }_{0}\) is the RST-invariant description of the processed image for the initial (zero) IPD level. The block diagram of the algorithm for vector calculation in a square window of size n × n is shown in Fig. 4.3.

To calculate the vector \(\mathbf{V }_{1}\) for the next IPD level (level one) of the processed image, the inverse Modified Mellin-Fourier transform is applied to the coefficients \(D_{S}(a,b)\). The result is the approximation \(\hat{L}(k,l)\) of the processed image. This is done as follows:

- Step 1.:

The denormalized coefficients are calculated:

$$\displaystyle{ D'_{S}(a,b) = [D_{S_{0}}(a,b)\,D_{S\max }(a,b)]/B_{\max }. }$$ (4.19)

- Step 2.:

The complex coefficients are then calculated:

$$\displaystyle{ S'(a,b) = D'_{S}(a,b)\,e^{-j\varphi _{S}(a,b)} }$$ (4.20)

- Step 3.:

The coefficients calculated in this way are processed with the inverse 2D-DFT:

$$\displaystyle{ D'(x,y) =\sum \limits _{a=0}^{H-1}\sum \limits _{b=0}^{H-1}S'(a,b)\exp \left \{j\left [ \frac{2\pi } {H}(xa + yb)\right ]\right \} }$$ (4.21)

for \(x,y = 0,1,2,\ldots,H - 1\).

- Step 4.:

The coefficients D′(x,y) are then processed with the inverse EPT, after replacing the variables (x,y) by (a,b) correspondingly:

$$\displaystyle{ \begin{array}{l} a_{i}(\rho _{i},\theta _{i}) = [x(\rho _{i},\theta _{i})]\cos [y(\rho _{i},\theta _{i})], \\ b_{i}(\rho _{i},\theta _{i}) = [x(\rho _{i},\theta _{i})]\sin [y(\rho _{i},\theta _{i})].\end{array} }$$ (4.22)

As a result, the values of the coefficients \(D'(a_{i},b_{i})\) are obtained, from which the missing coefficients in the rectangular coordinate system (a, b) are restored by interpolation. Zero-order interpolation is used: each missing coefficient D′(a,b) is replaced by the closest existing coefficient in the horizontal or vertical direction in the system \((a_{i},b_{i})\).

- Step 5.:

The values of the interpolated coefficients D′(a,b) are denormalized in correspondence with the relation:

$$\displaystyle{ \hat{D}_{F_{0R}}(a,b) =\exp [D'(a,b)/p]. }$$ (4.23)

- Step 6.:

The retained complex coefficients are calculated:

$$\displaystyle{ \hat{F}_{0R}(a,b) =\hat{D}_{F_{0R}}(a,b)\,e^{-j\varphi _{F_{0R}}(a,b)} }$$ (4.24)

- Step 7.:

The coefficients \(\hat{F}_{0R}(a,b)\) are supplemented with zeros in accordance with the rule:

$$\displaystyle{ \hat{F}_{0}(a,b) = \left \{\begin{array}{ll} \hat{F}_{0R}(a,b), & \mathrm{if}\ (a,b) \in \mathrm{retained\ region;} \\ 0, & \mathrm{in\ all\ other\ cases.}\end{array} \right. }$$ (4.25)

- Step 8.:

The second inverse 2D-DFT is performed on the coefficients \(\hat{F}_{0}(a,b)\):

$$\displaystyle{ \hat{L}(k,l) =\sum \limits _{a=-\tfrac{n} {2} }^{\tfrac{n} {2} -1}\sum \limits _{b=-\tfrac{n} {2} }^{\tfrac{n} {2} -1}\hat{F}_{0}(a,b)\exp \{j[(2\pi /n)(ka + lb)]\} }$$ (4.26)

for \(k = 0,1,\ldots,n - 1\) and \(l = 0,1,\ldots,n - 1\).

As a result, the approximated image with pixels \(\hat{L}(k,l)\) is obtained within the window of size n × n that contains the object.

For the first IPD level the following is performed:

- The difference image \(E_{0}(k,l)\) is calculated:

$$\displaystyle{ E_{0}(k,l) = L(k,l) -\hat{ L}(k,l)\ \mathrm{for}\ k,l \in \left (-\frac{n} {2},\ldots,0,\ldots, \frac{n} {2} - 1\right ). }$$ (4.27)

- The difference image obtained in this way is divided into four equal sub-images, and each is then processed with the direct Mellin-Fourier transform already described, following steps 2–8. The only difference is that in this case each sub-image is a square of size n/2.

- In a way similar to Step 11, the corresponding RSTC vectors for the next (second) IPD level are calculated. The length of these vectors is four times smaller. They are used to compose the general vector for the processed pyramid level, \(\mathbf{V }_{1}\), whose length is equal to that of the vector \(\mathbf{V }_{0}\) calculated for the zero pyramid level.
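The first two operations of this level, the residual of Eq. 4.27 and the split into four sub-images, can be sketched as follows. The function names, the 4 × 4 test image, and the constant stand-in for the approximation are assumptions for illustration.

```python
def difference_image(L, L_hat):
    """Eq. 4.27: E0(k,l) = L(k,l) - L_hat(k,l)."""
    return [[x - y for x, y in zip(row, row_hat)] for row, row_hat in zip(L, L_hat)]


def split_into_quadrants(E):
    """Split an n x n image into four (n/2) x (n/2) sub-images, row-major order."""
    h = len(E) // 2
    return [[row[c:c + h] for row in E[r:r + h]] for r in (0, h) for c in (0, h)]


L = [[k * 4 + l for l in range(4)] for k in range(4)]
L_hat = [[5] * 4 for _ in range(4)]  # stand-in for the level-zero approximation
E0 = difference_image(L, L_hat)
quads = split_into_quadrants(E0)
```

Each of the four sub-images would then go through the same transform chain with the window size halved.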

With this, the building of the two-level pyramid decomposition is finished. The resulting vectors \(\mathbf{V }_{0}\) and \(\mathbf{V }_{1}\) are then used for the search of the closest object in the image database (DB). Each image in the DB is represented by vectors calculated in a similar way for each of the pyramid levels, following the algorithm presented above. For each IPD level a corresponding vector is calculated, which carries the information about the fine details that represent the form of the object in the image.

The algorithm for calculation of RSTC-invariant vectors presented above can be generalized for an IPD of \(m =\log _{2}n\) levels. The block diagram of the IPD-MMFT for \(p = 0,1,\ldots,m - 1\) is shown in Fig. 4.4. At each consecutive decomposition level the corresponding vector \(\mathbf{V }_{p}\) is obtained, which carries information about smaller and smaller details that represent the form of the object in the image L of size n × n. It is assumed that the object is cropped from a larger image after corresponding preprocessing based on segmentation by color, form, etc.

Search by Content of Closest Objects in Image Databases

The search of the closest objects in image databases (DB) for the image request is based on detecting the minimum squared Euclidean distance (EUD) d between their RSTC vectors. For two R-dimensional vectors \(\mathbf{V }_{i}\) and \(\mathbf{V }_{j}\) this distance is defined by the relation:

$$\displaystyle{ d(\mathbf{V }_{i},\mathbf{V }_{j}) =\sum \limits _{m=0}^{R-1}{[v_{i}(m) - v_{j}(m)]}^{2}, }$$

where \(v_{i}(m),v_{j}(m)\) are the mth components of the vectors \(\mathbf{V }_{i},\mathbf{V }_{j}\) for i ≠ j.
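The squared Euclidean distance is the sum of the squared differences between corresponding components. A minimal sketch, with an assumed function name and a length check added for safety:

```python
def squared_euclidean(v_i, v_j):
    """Squared Euclidean distance between two R-dimensional vectors."""
    if len(v_i) != len(v_j):
        raise ValueError("vectors must have the same length R")
    return sum((x - y) ** 2 for x, y in zip(v_i, v_j))


d = squared_euclidean([1, 2, 3], [1, 0, 5])  # 0 + 4 + 4
```

Omitting the square root does not change which vector is closest, because the square root is monotonic.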

The decision for the classification of the image request, represented by the R-dimensional RSTC vector \(\mathbf{V }\), is taken on the basis of the image classes in the DB and their RSTC-invariant vectors:

- \(P_{\beta }\) is the number of vectors of class \(C_{\beta }\) in the DB;

- Q is the number of image classes.

After that, a classification rule is applied, based on the K-nearest neighbors (k-NN) and “majority vote” algorithms [5]:

where K is an odd number; \(\mathrm{d_{k}}\left (\mathbf{V },\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\right )\) is the squared Euclidean distance between the vectors \(\mathbf{V }\) and \(\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\) for \(k = 1, 2, \ldots, K\); \(\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\) is the kth vector, with index \(\alpha _{\beta _{k}}\), from the class \(C_{\beta _{k}}\), which is at minimum distance from the query \(\mathbf{V }\) (the indices are in the ranges \([1,P_{\beta _{k}}]\) for \(\alpha _{\beta _{k}}\), and [1, Q] for \(\beta _{k}\) and \(\beta _{0}\)). The class \(C_{\beta _{0}}\) of the vector \(\mathbf{V }\) in Eq. 4.30 is defined by the most frequent value \(\beta _{0}\) of the indices \(\beta _{k}\) of the vectors \(\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\):

Here \(h(\beta _{k})\) is the histogram of the indices \(\beta _{k}\) for the relations in Eq. 4.31.
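The k-NN rule with majority vote can be sketched as follows: the K database vectors closest to the query are selected, and the class that occurs most often among them is returned. The data layout (a list of vector and label pairs), the class labels, and the two-dimensional vectors are assumptions made for this example.

```python
from collections import Counter


def knn_classify(query, database, K):
    """database: list of (vector, class_label) pairs; K should be odd."""
    def sq_dist(u, v):
        return sum((x - y) ** 2 for x, y in zip(u, v))

    nearest = sorted(database, key=lambda item: sq_dist(query, item[0]))[:K]
    votes = Counter(label for _, label in nearest)  # histogram of class indices
    return votes.most_common(1)[0][0]


db = [([0.0, 0.0], "text"), ([0.1, 0.2], "text"), ([0.2, 0.0], "text"),
      ([1.0, 1.0], "signature"), ([0.9, 1.1], "signature")]
label = knn_classify([0.8, 0.9], db, K=3)
```

Here two of the three nearest vectors belong to the class "signature", so the query is assigned to it. With two classes, an odd K rules out ties.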

To speed up the object search, the distances from the image request are first calculated using the vectors defined for the zero level of the corresponding pyramid decompositions, and a group of closest images is selected. After that the smallest distance is calculated within the selected group only, using the vectors for the next (first) level of the corresponding pyramids, and so on.
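A minimal sketch of such a coarse-to-fine search is given below. The shortlist size, the data layout (one list of per-level vectors for each image), and the names are assumptions of this example. The source does not state how many candidates are kept at each level.

```python
def sq_dist(u, v):
    return sum((x - y) ** 2 for x, y in zip(u, v))


def coarse_to_fine_search(query_levels, database, shortlist_size):
    """query_levels: [V0, V1, ...]; database: list of (name, [V0, V1, ...])."""
    candidates = database
    for level, q in enumerate(query_levels):
        ranked = sorted(candidates, key=lambda item: sq_dist(q, item[1][level]))
        is_last = level == len(query_levels) - 1
        # Keep a shortlist for the next level, or the single best match at the end.
        candidates = ranked[:1] if is_last else ranked[:shortlist_size]
    return candidates[0][0]


db = [("a", [[0.0, 0.0], [5.0, 5.0]]),
      ("b", [[0.1, 0.0], [1.0, 1.0]]),
      ("c", [[9.0, 9.0], [1.0, 1.1]])]
best = coarse_to_fine_search([[0.0, 0.1], [1.0, 1.2]], db, shortlist_size=2)
```

Image "c" is the closest at level one but is never compared there, because it was discarded at level zero. This is the intended saving: the finer vectors are compared only within the shortlist.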

Fast Search of Closest Vector in the Image Database

The search of the closest vector \(\mathbf{V }_{j}\ (j = 1,2,\ldots,J)\) to the vector request \(\mathbf{V }\) in a database (DB) with \(J =\sum _{\beta =1}^{Q}P_{\beta }\) vectors can use the approach in [16], based on the EUD between R-dimensional vectors:

$$\displaystyle{ d_{E}(\mathbf{V },\mathbf{V }_{j}) =\sum \limits _{m=0}^{R-1}{[v(m) - v_{j}(m)]}^{2} ={ \left\|\mathbf{V }\right\|}^{2} +{ \left\|\mathbf{V }_{j}\right\|}^{2} - 2\sum \limits _{m=0}^{R-1}v(m)\,v_{j}(m), }$$

where \({\left\|\mathbf{V }\right\|}^{2} =\sum \limits _{m=0}^{R-1}{[v(m)]}^{2},\;{\left\|\mathbf{V }_{j}\right\|}^{2} =\sum \limits _{m=0}^{R-1}{[v_{j}(m)]}^{2}\).

The moduli of the vectors \(\mathbf{V }\) and \(\mathbf{V }_{j}\) in the equation above are not related to \(d_{E}(\mathbf{V },\mathbf{V }_{j})\), but their scalar product is related as follows:

The vectors \(\mathbf{V }_{j}\ (j = 1,2,\ldots,J)\) are calculated in advance for the images used as a training set for each image class and are stored as additional information (metadata) in the DB. If the function f(j) has a maximum for some value \(j = j_{0}\) of the variable j, the corresponding distance \(d_{E}(\mathbf{V },\mathbf{V }_{j_{0}})\) is minimum. If the maximum is reached for several values of j, the smallest of them is accepted as \(j_{0}\). The closest vector \(\mathbf{V }_{j_{0}}\) in the DB to the vector request \(\mathbf{V }\) can then be defined in accordance with the rule:
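The link between the distance and the scalar product can be checked numerically. Expanding the squared Euclidean distance gives ‖V‖² + ‖V_j‖² − 2 Σ v(m) v_j(m); since ‖V‖² is the same for every j, minimizing the distance is equivalent to maximizing Σ v(m) v_j(m) − ‖V_j‖²/2, where the second term can be stored in advance. The score used below is this textbook form and is an assumption of the example; it is not necessarily the exact function f(j) of the chapter.

```python
def precompute_half_norms(db_vectors):
    """Half of the squared norm of each DB vector, computed once and stored as metadata."""
    return [0.5 * sum(x * x for x in v) for v in db_vectors]


def closest_by_dot_product(query, db_vectors, half_norms):
    scores = [sum(q * x for q, x in zip(query, v)) - h
              for v, h in zip(db_vectors, half_norms)]
    return max(range(len(scores)), key=scores.__getitem__)  # smallest index on ties


def closest_by_distance(query, db_vectors):
    dists = [sum((q - x) ** 2 for q, x in zip(query, v)) for v in db_vectors]
    return min(range(len(dists)), key=dists.__getitem__)


db = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [2.0, 2.0, 2.0]]
half = precompute_half_norms(db)
query = [1.8, 2.0, 2.1]
j_dot = closest_by_dot_product(query, db, half)
j_dist = closest_by_distance(query, db)
```

Both functions return the same index, while the first needs no subtractions per component at query time.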

Additional acceleration of the calculations in Eq. 4.34 is achieved when the following considerations are taken into account:

- If \(v(m) \geq 0\) and \(v_{j}(m) \geq 0\), the following relation is satisfied:

$$\displaystyle{ \sum \limits _{m=0}^{R-1}v(m)\,v_{j}(m) \leq v_{\max }\sum \limits _{m=0}^{R-1}v_{j}(m), }$$ (4.35)

where:

$$\displaystyle{ v_{\max } =\max \{ v(m)\}\ \mathrm{for}\ m = 0,1,\ldots,R - 1. }$$ (4.36)

Then, for the so-called “modified” squared Euclidean distance \(D_{E}(\mathbf{V }_{i},\mathbf{V }_{j})\) (MEUD) follows:

Then Eq. 4.34 can be transformed as follows:

- If the components v(m) and \(v_{j}(m)\) have both positive and negative values, they should be transformed so that they have positive values only:

$$\displaystyle{ v'(m) = v(m) +\min \{ v(m),v_{j}(m)\}, }$$ (4.39)

$$\displaystyle{ v'_{j}(m) = v_{j}(m) +\min \{ v(m),v_{j}(m)\}\;\mathrm{for}\;m = 0,1,\ldots,R - 1. }$$ (4.40)

In this case, for the modified distance \(D_{E}(\mathbf{V },\mathbf{V }_{j})\) is obtained:

and the rule represented by Eq. 4.38 is changed accordingly:

where \(v'_{\max } =\max \{ v'(m)\}\) for \(m = 0,1,\ldots,R - 1\).
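The bound of Eq. 4.35 replaces the R multiplications of the scalar product by a single multiplication with a sum that can be stored in advance. The sketch below only checks the bound for non-negative components; the function names and the sample vectors are assumptions.

```python
def dot(u, v):
    """Exact scalar product: R multiplications."""
    return sum(x * y for x, y in zip(u, v))


def upper_bound(query, v_j_sum):
    """Right-hand side of Eq. 4.35: v_max times the precomputed sum of v_j(m)."""
    return max(query) * v_j_sum


query = [3.0, 1.0, 2.0]
v_j = [0.5, 4.0, 1.0]
exact = dot(query, v_j)               # 1.5 + 4.0 + 2.0
bound = upper_bound(query, sum(v_j))  # 3.0 * 5.5
```

The bound is never smaller than the exact product when all components are non-negative, and the two coincide when every component of the query equals its maximum.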

The rule for the classification of the vector \(\mathbf{V }\) (Eq. 4.30), based on the k-NN and the MEUD, is transformed as follows:

where

Comparing Eqs. 4.30, 4.32, and 4.33 with Eqs. 4.43, 4.44, and 4.45, respectively, it follows that the use of the similarity criterion \(\mathrm{S_{k}}\left (\mathbf{V },\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\right )\) instead of \(\mathrm{d_{k}}\left (\mathbf{V },\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\right )\) does not influence the classification results for the vector \(\mathbf{V }\), but the number of multiplications is reduced \((n \times J)\) times, while the number of additions is retained. Besides, the corresponding sum \(\sum \limits _{m=0}^{R-1}\mathrm{v}_{\alpha _{\beta _{\mathrm{ k}}}}^{{^\prime}\beta _{\mathrm{k}}}\mathrm{(m)}\) for each vector \(\mathbf{V }_{\alpha _{\beta _{ k}}}^{\beta _{k}}\) can be calculated in advance and saved in the DB metadata. As a result, the computational complexity is significantly reduced and the classification of the vector request \(\mathbf{V }\) is accelerated.

In order to improve the similarity ranking of the first K nearest vectors to the vector request, the criterion “Cosine similarity” (CSim) [10, 17] can be used in Eq. 4.43 instead of \(\mathrm{S}_{k}\)(.). In accordance with this criterion, the nearness between the two vectors \(\mathbf{V }\) and \(\mathbf{V }_{j}\) can be evaluated using the relation:

In order to avoid “square root” operations, Eq. 4.46 can be transformed into the Squared Cosine Similarity, SCSim = (CSim)². Further reduction of the needed calculations is achieved using the Modified Squared Cosine Similarity (MSCSim), defined as follows:

Then, in Eq. 4.43 the term \(S_{k}\)(.) is substituted by MSCSim(.). As a result, the number of multiplications needed for the calculation of MSCSim is reduced n times, and the search of the closest vector to the vector request in a DB containing J vectors is accelerated (n·J) times. The efficiency of the presented approach for enhanced search of the closest vector grows with the number of vectors in the DB.
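For reference, the standard cosine similarity and its squared form can be sketched as follows. The definitions below are the textbook ones, assumed here for illustration; the modified criterion MSCSim is not reproduced, and zero vectors are not handled.

```python
import math


def cosine_similarity(u, v):
    """Scalar product divided by the product of the two norms."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def squared_cosine_similarity(u, v):
    """(CSim)^2 computed without square roots; squaring discards the sign."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot * dot / (sum(x * x for x in u) * sum(y * y for y in v))


u, v = [1.0, 0.0], [1.0, 1.0]
csim = cosine_similarity(u, v)           # cosine of 45 degrees
scsim = squared_cosine_similarity(u, v)  # 0.5
```

For vectors with non-negative components, as produced by the modulus-based description, the cosine is non-negative, so ranking by the squared value gives the same order as ranking by the cosine itself.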

Experimental Results

The experiments used a software implementation of the method in C++ in a Windows environment. A significant part of the experiments aimed to prove the efficiency of the Modified Mellin-Fourier Transform. For these experiments the well-known test image “Lena” (256 × 256 pixels, 8 bpp) was used. The experiments were performed for various values of the main parameters: the size of the circumscribed circle, the number of discrete radii, etc.

Figure 4.5a shows the test image “Lena” with the points that participate in the EPT indicated (the black points are not retained, and the image is restored after corresponding interpolation). Figure 4.5b shows the discretization grid of the LPT, and Fig. 4.5c shows the points that participate in the LPT (the points used here are marked in black). This experiment confirms the efficiency of the new approach, because in the well-known LPT the retained central part of the processed image is smaller.

Some of the experimental results obtained with the same test image “Lena” are given in Figs. 4.6 and 4.7.

The next part of the experiments was aimed at content-based object retrieval. For this, three specially developed image databases of the Technical University of Sofia were used: the first contained 180 faces of adults, the second contained 200 faces of adults and children, and the third contained more than 200 scanned documents. Most of the faces in the databases are cropped from larger images. The photos were taken in various lighting conditions, with many shadows, different views, etc. Very good results were obtained for the search of similar faces in the databases. The test database of adults also included the image “Lena” rotated by 90° and 270°, and scaled-up cropped parts of the same original test image.

The image request (one of the cropped images from the test image “Lena”) was the upper-left one in Fig. 4.8. The experiments demonstrated the efficiency of the method. The results obtained confirm the RST invariance of the representation: the first five images are of the test image “Lena”; the first two are scaled up and cropped, and the next three are the original and the same image rotated by 180° and 90°, respectively.

The experiments aimed at the detection of child sexual abuse in multimedia files need additional pre-processing. For this, color image segmentation was first performed in order to detect naked parts of human bodies; these parts were then extracted from the images and defined as individual objects. After that, the object search in the corresponding database was initiated. Special attention was paid to the recognition of children's and adults' faces. The experiments confirm that this recognition is successful enough.

Figure 4.9 shows some of the results obtained for the search of a child's face in a mixed database containing 200 faces of children and adults. Each image in the database was classified as belonging to one of these two classes. The experiments were performed under the following conditions: \(B_{\max } = 255\), K = 11, and H = 128. In accordance with Eq. 4.18, the size of the RSTC vector for α = 20 is R = 888.

The images in Fig. 4.9 are the closest to the image request (upper-left) in the test database. The experiment confirmed the reliability of the method when the searched face is of the same person: images 1, 2, and 4 in the first row are of the same child as the image request. The same holds for images 6, 7, and 10, in spite of the fact that the database comprised photos of more than 40 children. The only error in this search result is one face, image 8. In some cases a large number of wrong images may appear in the selection. In order to resolve possible uncertainties, the final decision is taken in correspondence with Eq. 4.28, i.e., with a longer vector.

Significant attention was paid to the detection of 3D objects represented by several multi-view 2D images. For this, a special database of the Technical University of Sofia was used, containing more than 200 multi-view 2D images of various 3D objects. All images were of size 256 × 256 pixels, 24 bpp. Each object was represented by four views, placed at equal distances in a sector of 20°. Another set of four images was taken from positions placed 5° higher. For illustration, a small database of four objects (Fig. 4.10), represented by 30 images, is used here. Each object had four views. More views (4.8) were used in the database for the object “Cup” only. Each image represented the object at a different scale.

The experiments showed that 3D object recognition is reliable within a view angle of 20°. Figure 4.11 shows the results obtained for one of the test images. The image request (“Mug”) is in the upper-left corner of Fig. 4.11; it is followed on the same row by the closest images detected in the database (the names of the images correspond to their numbers in the database). The remaining closest images are arranged in the next two rows.

Figure 4.12 shows a plot of the distances calculated for the vectors of the objects represented by the 2D images in the database. It is easy to see that the three closest images (No. 1–3, corresponding to images 12–14 in Fig. 4.11) are of the same object. Moreover, they are the only images of this object in the test database. The vectors of the next closest images lie at a much larger distance, which indicates that they belong to another object. This example is given for illustration only. In real applications, special decision rules should be defined in accordance with the features of the objects.
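One simple way to turn the observation about a "much larger distance" into a rule is to cut the sorted distance list at its largest jump. The sketch below is only an illustration of that idea, not a rule taken from the chapter; the function name `split_at_largest_gap` is hypothetical and the distance values are made up, not read from Fig. 4.12.

```python
def split_at_largest_gap(sorted_distances):
    """Return how many leading entries precede the largest jump
    between consecutive distances (input must be sorted ascending)."""
    if len(sorted_distances) < 2:
        return len(sorted_distances)
    gaps = [b - a for a, b in zip(sorted_distances, sorted_distances[1:])]
    return gaps.index(max(gaps)) + 1


# Made-up distances: three close matches, then a clear jump.
distances = [0.8, 1.1, 1.3, 6.4, 6.9, 7.5]
same_object = split_at_largest_gap(distances)
print(same_object)
```

A rule of this kind only works when the jump is pronounced, as in the example described above. For databases in which the distances grow smoothly, a threshold tuned to the object class would probably be more appropriate.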

Another group of experiments was aimed at the analysis of scanned documents. The database comprised images of scanned texts and signatures (Q = 2) and contained more than 100 samples (P > 100) of each class; the texts included examples in the Latin and Cyrillic alphabets, both printed and handwritten. All images were grayscale, of size 256 × 256 pixels (8 bpp, n = 256), with \(\mathrm{B}_{\max } = 255\). The experiments were performed for two versions of vector generation: in version 1 the size of the square of retained coefficients was H = 96; in version 2 it was H = 128. Figures 4.13 and 4.14 show the results obtained for one of the test images (the image request is the upper-left one) together with the closest K = 11 images. In most cases (90 %) the information provided for H = 96 was sufficient for correct classification, but for some test images there was a small number of mistakes. Figure 4.13 gives the result for version 1. There are three mistakes: the last three images (signatures) were classified as text instead of as signatures. As seen in Fig. 4.14, version 2 (H = 128) ensured the right decision, with no mistakes at all. One of the images in the database (the second in Figs. 4.13 and 4.14) was the same as the image request but with changed contrast. In both experiments it was ranked as the closest, which confirms the invariance of the method to contrast changes.

Conclusions

This paper presents a method for invariant object representation with a Modified MFT (MMFT) based on the IPD. The main differences from the well-known MFT are: (a) the first DFT is performed for a limited number of coefficients only, which yields an approximated image representation suitable for object description; (b) instead of the Log-Polar Transform, the Exponential-Polar Transform (EPT) is used, as described in Step 7 of the algorithm. As a result, a larger share of the points from the matrix of the Fourier coefficients’ moduli participates (i.e., a bigger central part of the image takes part in the EPT and, correspondingly, in the object description). The number of coefficients used for the object representation is further limited by the choice of vector length. In addition, the new transform is invariant to contrast changes because of the normalization performed in Step 10.
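Two of the invariance properties mentioned here can be checked numerically on a tiny example. The sketch below is an illustration under stated assumptions, not the MMFT itself: it uses a naive 2D DFT on a 4 × 4 array, models translation as a circular shift, models a contrast change as a multiplicative gain, and normalizes by the largest modulus, which is an assumed stand-in for the normalization of Step 10. It does not cover rotation or scaling, which require the EPT and the second DFT. All function names are hypothetical.

```python
import cmath


def dft2_modulus(image):
    """Moduli of the naive 2D DFT of a small square image (list of rows)."""
    n = len(image)
    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            acc = 0j
            for x in range(n):
                for y in range(n):
                    angle = -2 * cmath.pi * (u * x + v * y) / n
                    acc += image[x][y] * cmath.exp(1j * angle)
            out[u][v] = abs(acc)
    return out


def normalize(matrix):
    """Divide by the largest entry (assumed normalization, not Step 10)."""
    peak = max(max(row) for row in matrix)
    return [[value / peak for value in row] for row in matrix]


def circular_shift(image, dx, dy):
    """Shift the image cyclically by dx rows and dy columns."""
    n = len(image)
    return [[image[(x - dx) % n][(y - dy) % n] for y in range(n)]
            for x in range(n)]


def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two matrices."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


image = [[1, 2, 0, 0], [3, 4, 0, 0], [0, 0, 0, 0], [0, 0, 0, 1]]
reference = normalize(dft2_modulus(image))
shifted = normalize(dft2_modulus(circular_shift(image, 1, 2)))
brighter = normalize(dft2_modulus([[3 * p for p in row] for row in image]))

print(max_abs_diff(reference, shifted) < 1e-9)   # shift leaves moduli unchanged
print(max_abs_diff(reference, brighter) < 1e-9)  # gain is removed by normalization
```

The shift changes only the phases of the DFT coefficients, so the moduli agree up to rounding error. The gain multiplies every modulus by the same factor, which the normalization cancels.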

As a result, the MMFT described above has the following advantages over the MFT:

- The number of transform coefficients used for the object representation is significantly reduced, which lowers the computational complexity of the method and permits real-time applications.

- The choice of coefficients used for the vector calculation is flexible: a large number of parameters can be set, each over a relatively wide range, which permits the use of the method in various applications, such as content- and context-based object retrieval in image databases, face recognition, etc.

- A new, simplified algorithm for fast search of the closest vector in large image databases is developed.

- The method permits detection of the similarity of 3D objects represented by multiple 2D views within a view angle of 20°. Additional experiments show that the view angle could be wider for 3D objects of high symmetry.

The new approach presented in this work permits reliable object detection and identification in various positions, lighting conditions, and viewpoints.

References

1. Nixon M, Aguado A (2002) Feature extraction and image processing. Newnes, Oxford

2. Costa L, Cesar R (2001) Shape analysis and classification: theory and practice. CRC, LLC, Boca Raton

3. Lowe D (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

4. Carneiro G, Jepson A (2004) Flexible spatial models for grouping local image features. CVPR 2:747–754

5. Theodoridis S, Koutroumbas K (2009) Pattern recognition, 4th edn. Academic, Amsterdam/Boston/Heidelberg/London/New York/Oxford/Paris/San Diego/San Francisco/Singapore/Sydney/Tokyo

6. Schmid C, Dorko G, Lazebnik S, Mikolajczyk K, Ponce J (2005) Pattern recognition with local invariant features. In: Chen CH, Wang PS-P (eds) Handbook of pattern recognition and computer vision. World Scientific, Singapore/Hackensack, pp 71–92

7. Cootes T, Taylor C, Cooper D (1995) Active shape models: their training and application. CVIU 61(1):38–59

8. Lu Z, Li D, Burkhardt H (2006) Image retrieval based on RST-invariant features. IJCSNS 6(2A):169–174

9. Reddy B, Chatterji B (1996) An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans Image Process 5(8):1266–1271

10. Javidi B (ed) (2002) Image recognition and classification: algorithms, systems and applications. Marcel Dekker, New York

11. Derrode S, Ghorbel F (2001) Robust and efficient Fourier-Mellin transform approximations for gray-level image reconstruction and complete invariant description. Comput Vis Image Underst 83(1):57–78

12. Kountchev R, Rubin S, Milanova M, Todorov Vl, Kountcheva R (2009) Non-linear image representation based on IDP with NN. WSEAS Trans Signal Process 9(5):315–325

13. Kountchev R, Todorov Vl, Kountcheva R (2010) RSTC-invariant object representation with modified Mellin-Fourier transform. WSEAS Trans Signal Process 6(4):196–207

14. Kountchev R, Rubin S, Milanova M, Kountcheva R (2011) Invariant object description with inverse pyramid based on the truncated modified discrete Mellin-Fourier transform. In: The 2011 IEEE international conference on information reuse and integration (IEEE IRI’11), Las Vegas, pp 360–365

15. Caltech database “Faces”. http://www.vision.caltech.edu/Image_Datasets/faces/. Accessed 26 June 2013

16. Wu K, Lin J (2000) Fast VQ encoding by an efficient kick-out condition. IEEE Trans Circuits Syst Video Technol 10(1):59–62

17. Qian G, Sural S, Gu Y, Pramanik S (2004) Similarity between Euclidean and cosine angle distance for nearest neighbor queries. In: Proceedings of the 2004 ACM symposium on applied computing, Nicosia, pp 1232–1237

Acknowledgements

This paper was supported by the System Research and Application (SRA) Contract No. 0619069. This work was also supported in part by the Joint Research Project Bulgaria-Romania (2010–2012): “Electronic Health Records for the Next Generation Medical Decision Support in Bulgarian and Romanian National Healthcare Systems”.

Copyright information

© 2013 Springer-Verlag Wien

About this chapter

Cite this chapter

Kountchev, R., Rubin, S., Milanova, M., Kountcheva, R. (2013). Invariant Object Representation Based on Inverse Pyramidal Decomposition and Modified Mellin-Fourier Transform. In: Özyer, T., Kianmehr, K., Tan, M., Zeng, J. (eds) Information Reuse and Integration in Academia and Industry. Springer, Vienna. https://doi.org/10.1007/978-3-7091-1538-1_4

DOI: https://doi.org/10.1007/978-3-7091-1538-1_4

Publisher Name: Springer, Vienna

Print ISBN: 978-3-7091-1537-4

Online ISBN: 978-3-7091-1538-1

eBook Packages: Computer Science (R0)