Abstract

The aim of this paper is to present the use of methods supporting group decision making for the construction of air quality measurement networks. The article presents a case study of making group decisions related to the construction of a hybrid network for measuring air quality in Gdańsk. Two different methods of data processing were used in the decision making process. The first uses fuzzy modeling for quantitative data processing to assess the quality of PM10 measurement data. The other uses trust metrics for the IoT nodes of four different measurement networks. The presented example shows the complexity of the decision making process itself as well as of the choice of the method. The authors deliberately used both quantitative and qualitative methods in the decision making process to show the need for decision-makers to search for the right method.

1 Introduction

Collecting environmental data, such as the level of air pollution, through civic IoT networks poses a challenge when it comes to data quality. Data is often collected on a voluntary basis, from measuring units of various quality, unevenly distributed throughout the area. The authors experienced this problem first-hand while constructing the air quality monitoring network in the area of Gdańsk, Poland.

This problem may be approached through a combination of technological and procedural methods, such as over-sampling, self-cleaning, certification or regular service visits. Those methods, however, introduce a high cost that was not acceptable within the scope of the project.

Instead, the authors explored the ability to distinguish between data of various quality through data post-processing, where data collected from various measuring units are cross-verified before being released for use. This ability was achieved within the project through a series of decisions. The focus of this paper is on the way group decision making was used throughout the project to reach the objective of improving the data quality.

The initial approach presented in this paper is based on a group of experts developing a model of data analysis that is based on fuzzy logic. This approach naturally invokes the notion of group decision making, as a group of experts had to agree on the details of the method (such as the fuzzy function).

The second approach applied methods derived from the trust management area, such as reputation-based systems or consensus-based systems, to the problem at hand. Here, decision-making was invoked twice: once to agree on the approach and details, and then again to delegate the actual decision-making to the automated system.

The paper is structured as follows. It starts with the background information and the formulation of the research problem in the context of measurement of air quality. The brief introduction to social group decision-making is followed by the detailed presentation of the project used to collect data about air quality. Next, the solution based on expert cooperation and the use of fuzzy logic is presented and results are discussed. This is followed by the discussion of the possible use of other methods inspired by trust management. Conclusions close the paper.

2 Group Decision-Making

Group decision-making is a process where decisions are made by a group of people. However, some of its elements can also be used to facilitate the operation of an automated process, where group decision-making is used as a metaphor.

The group decision-making process is a complex one [16] in which at least two experts representing knowledge in a given field participate and determine their preference for making a joint decision [25, 26]. To assess this complexity, the challenge of group decision making has to be approached early in the project.

During the project described in this paper, the problem of group decision making became important. Decisions had to be made regarding the assessment of confidence in PM10 monitoring networks and their nodes, on the basis of a number of measurements, different in terms of value and quality of data, derived from different networks. For that, a technical solution had to be found. Hence experts were called to select the most appropriate solution.

As the question regarding the quality of data has been put forward to experts, two decision-making processes became visible: the human one, within the group of experts on what is the best approach to the problem and an automated one related to the processing itself. While the project started with the first approach, it eventually sought the automated solution, potentially supported by some human involvement, that mimics human group decision-making.

Due to the fact that a much larger group of experts took part in complex decision-making situations, the decision-making process requires the following steps:

- Selection of experts
- Evaluation of the complexity of the system which is assessed by experts
- Developing decisions using methods used by experts

Within the scope of this project, the project management sought a mixed group of experts from universities and the industry to work collaboratively on the problem.

In addition to expert knowledge, it is necessary to assure access to data relevant to the decision-making process. In the current world, in which Big Data is widely available, one should consider how much of the knowledge of experts involved in the decision process, and of its effects, is actually derived from knowledge of the data. It should also be considered to what extent the decision-making process depends on access to data and on their size.

For this project, preliminary data gathered at the early stages of the network has been used by experts to ascertain the applicability of various methods.

Existing research highlights the importance of decision-making problems as well as the complexity of group decisions related to the uncertainty and knowledge of experts. Available studies on group decision problems are also the basis for the analysis of uncertainty in the case of monitoring nodes.

The following methods are used in group decision making: fuzzy modeling, preference analysis and classical semantic analysis. During the analysis, the decision making process in measurement networks was considered using classical semantic analysis [16], both for the analysis of decision-making processes and for decision modeling. For example, Wang and Hao [27] proposed using proportional language. A linguistic approach to making decisions is also presented in [5, 9].

It seems that the use of linguistic analysis is still the preferred path to the analysis of group decision-making processes. Therefore, classical methods of knowledge representation are used, such as instructions, rules and association rules, as well as methods of data mining [9, 27, 29]. These also include Bayes classification and the grouping of data. For this reason, group decision making is a research area in which the suitability of linguistic processing methods can also be determined [22]. It is also the direction of research whose results are presented in this work.

The use of case studies is important for decision-making, as it both informs the experts and indicates available solutions. For this reason, this article presents a case study of the construction of a measurement network and then shows examples of methods that can be (and were) used in the decision-making process. It should therefore be treated as a case study showing the decision-making environment, the methods used and the solutions adopted by the decision-makers. The next part of the work presents the decision making environment of the project implemented in Gdańsk related to the construction of a hybrid quality measurement network.

After the installation of four monitoring networks in Gdańsk, the problem arose of how to assess the quality of the obtained data. The project had to deal with three low cost civic monitoring networks, generating low quality measurements from an opportunistically yet densely distributed set of units, and one automatic network with a small number of monitoring stations generating data of high quality.

The situation was further complicated by the fact that civic units were often left unattended for a prolonged period of time, leading to further degradation of the quality of measurements. However, the objectives of the project could be satisfied only with data from all the networks, so low quality nodes could not be excluded.

In this situation, data mining methods had to be applied to data streams from monitoring units before releasing processed data for use. The challenge of the decision-making process was thus to select the appropriate method or methods.

The project made certain assumptions about the approach to data mining that can be described as follows.

- The quality of data is a function of the operation of the monitoring unit. That is, at any point in time, a given unit produces data of a certain quality. Such quality may change in time (both degrade and improve), but the unit itself submits no malicious results.

- The location of monitoring units can be both planned and unplanned, but the network itself is dense enough to provide more than one measurement from different units, at least for certain areas.

Therefore it should be possible to determine the function that processes data from several sensors into a value that, with a given degree of accuracy, represents the actual value.
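One simple candidate for such a function can be sketched as follows. This is only an illustration under stated assumptions, not the method adopted in the project: the function name `fuse_readings` is hypothetical, the median is assumed as the combining rule, and the median absolute deviation is assumed as a rough indicator of the degree of accuracy.

```python
from statistics import median


def fuse_readings(readings):
    """Combine PM10 readings from several nearby units into one estimate.

    Returns (estimate, spread). The estimate is the median of the readings;
    the spread is the median absolute deviation from that estimate.
    Both choices are assumptions made for illustration only.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    estimate = median(readings)
    spread = median([abs(r - estimate) for r in readings])
    return estimate, spread


# Four units covering the same area; one of them reports an implausible value.
estimate, spread = fuse_readings([20.0, 22.0, 21.0, 95.0])
print(estimate, spread)
```

The median is used here because a single faulty unit cannot move it far, which matches the assumption that units may be of low quality but are not malicious.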

3 Motivation – the ‘City Breathes’ Project

The project that motivated this work was conducted in the IBM Advanced Research Center (IBM CAS) in Gdańsk, Poland. IBM CAS is a research environment located in the university. It integrates both specialists from IBM as well as university employees. In this environment, research projects are carried out for business partners as well as for the benefit of the host city.

The Center is able both to implement web-based solutions based on IoT nodes and to produce software that supports the implementation of these works. For the city, CAS implements projects for the development of city management systems, according to the Smart Cities strategy. CAS employees also deal with the construction of mobile applications for IBM Rational products, and with the construction of reference models supporting the software development cycle.

As a part of the research conducted by CAS, a project was launched with a public benefit organization, the NGO FRAG (Gdańsk Agglomeration Development Forum) in Gdańsk, under the name “Miasto Oddycha” (City Breathes) [7]. The aim of this project was to build a network of civic IoT nodes with the main purpose of local monitoring of the PM10 dust concentration. A hybrid monitoring network consisting of several IoT networks and nodes was created, larger and more varied than the existing Armaag public station network.

The assumption of the project is that civic IoT nodes are to complement the existing monitoring network, allowing for detailed measurement in selected locations. As a result, the total number of IoT nodes, including all networks, has doubled. During the network construction process, IoT nodes were made available to residents to encourage them to participate in the project.

The research project with the NGO included the installation and implementation of four monitoring networks for the measurement of air pollution in Gdańsk by creating a Civic Measurement Network. The development of the network as well as the time of installation of IoT nodes depended on the level of involvement of residents in the process of installing measuring nodes on their properties. It was also contingent on the decision of FRAG.

In the first stage, measuring nodes purchased by one of the early business partners were used. Next, nodes manufactured as part of the student project at the School of Banking in Gdańsk were included in the monitoring network. Subsequently, existing measuring nodes of the City Hall were included. The next stage was the invitation to the Luftdaten project from Germany.

The selection of the Internet of Things nodes as well as the network development was coordinated by NGOs. While making decisions, the expansion of existing networks, adding new networks, improving the operation of individual IoT nodes as well as the expansion of automatic networks were taken into account. NGO representatives, city representatives and representatives of the university were all involved in the decision making process.

Two different methods for evaluating the existing measurement network nodes and the possibilities for extension were considered in the decision-making process, taking into account the quality of the measurements obtained. It was found that further development of the network would be possible based on the analysis of the quality of measurements. Next, the decision makers were given a solution for assessing trust in the measurement stations, which they could apply to the automatic stations as well as to the IoT nodes and measurement networks provided by individual partners.

4 The Civic Measurement Network

The Civic Measurement Network merges four monitoring networks: Armaag, WSB, Luftdaten and Airly. While sharing the same area of the city of Gdańsk, they focus on different aspects of monitoring, leading to nodes that are often incomparable in terms of cost, reliability, measurement process or quality of data.

4.1 The Armaag Network

The Armaag network [4] uses nine measurement stations for automatic measurement of PM10 dust and other substances: sulfur dioxide, nitric oxide as well as ammonia and benzene. The quality of data is considered high in terms of reliability, veracity and accuracy. An example of the Armaag measurement node is shown in Fig. 1. The figure also shows the location of measurement stations and sample PM10 measurement results obtained in hourly cycles.

Analysis of the distribution of these measurement stations indicates that their number is inadequate to the needs of the area. Specifically, the question often arises about the level of PM10 dust at any place in the city, not just near the installed stations.

The significant cost of an Armaag measurement station limits the number of such stations that can be installed in Gdańsk. However, from the point of view of the quality of the civic network, the use of data from this small number of Armaag automatic stations can be crucial for the assessment of the quality of measurements at selected locations, as it can serve as a reference network, specifically if combined with a pollution spreading model. This model can be used to locally estimate the concentration of dust in the air.

4.2 The WSB Network

Another monitoring network has been created as a part of a student project at WSB University. This network is built using IoT nodes based on Arduino Uno and Raspberry Pi boards, selected for their ability to handle the sensors used to measure PM10 concentration. The process of building the node, selecting the software and calibrating the IoT nodes created the conditions for evaluating the possibility of using this type of measurement station in the construction of low-cost networks using low-cost measuring sensors.

The construction process (Fig. 2) also showed how complex it is to develop such a node. However, once the design exists, the process of constructing IoT nodes to measure PM10 is relatively simple and repeatable, allowing nodes to be produced relatively fast.

4.3 The Luftdaten Network

The Luftdaten project was created by the Open Knowledge Lab Stuttgart, a group of researchers, journalists and programmers in Stuttgart [17]. Its implementation resulted from the analysis of significant air pollution in this city and the need to conduct continuous monitoring. The starting point for the construction of this network was the assumption that the sensor with the microcontroller should not cost more than 30 €. Eventually a network consisting of 300 independent IoT nodes measuring PM10 and PM2.5 pollutants in Stuttgart was created. Then the idea was transferred to other cities in Germany and Europe.

Currently, there are over 5,600 such IoT nodes in Europe, of which 4,000 are installed in Germany. In Gdańsk, the installation of PM10 and PM2.5 IoT nodes from Luftdaten was conducted through self-installation. Meetings with residents organized by the Civic Hub showed how the measurement of PM10 dust is carried out, as well as how to install these IoT nodes. It turned out that, due to the simplicity of the node, each resident is able to install their own IoT node to measure PM10 after a single meeting. Residents were also informed about the limitations associated with such a measurement method, such as low accuracy of measurement. Figure 3 shows the IoT node from Luftdaten and the site with measurement data for Gdańsk.

4.4 The Airly Network

The final monitoring network is Airly [1]. It uses small IoT nodes similar to those of Luftdaten, aimed at local measurements and at a dense network. The installation of these measurement stations and their use was preceded by a thorough analysis of the need to install such measuring nodes in the city.

There are several reasons for those units to be installed. Because of their number, they allowed for the use of mathematical modelling that enabled predictions of air quality up to 24 h in advance. They also made it possible to study the impact of various activities on the level of pollution. The network also increased residents’ awareness of the pollution. The problem of air pollution in Poland is particularly important, as World Health Organization (WHO) standards are exceeded many times. Another reason is the issue of safety, i.e. indicating places where the level of air pollution is low, as well as indicating, through the education of parents, what should be done to ensure the safety of children.

It was also important that a dense measuring network could inform all those who are interested in outdoor activities, such as runners, cyclists and athletes, so that they can plan their activities for times when the level of air pollution is relatively low. Further, the network allows for dynamic response to changes, so that proper planning can take place. Figure 4 shows Airly stations and a map of pollution spreading.

5 Preliminary Data Analysis

Experts require data to make decisions. In this project, they used measurements taken from the network and applied simple forms of analysis to determine the nature of the problem. Their primary intention was to provisionally validate the assumption that only some stations degrade the overall quality of data. Experts worked with data similar to that presented below.

Currently, the network consists of 23 stations of four different types. Some stations generate data at regular yet infrequent intervals of 30 or 60 min, some generate it less regularly, in about 5 min intervals. There is no guarantee that all readings always reach the server, so there are some missing readings. In total, the network generated more than 2,000 readings in any 24 h period.

The scatterplot of recent readings of the PM10 level over a relatively typical 24 h period is shown in Fig. 5. It is visible from the plot that, despite being geographically distributed, the majority of readings follow a similar pattern of gradual increases and decreases, defined by the general geography of the Gdańsk area. However, there are some definitive outliers that cannot be easily explained.

If data cleansing [21] is applied to this kind of data set, it is likely to eliminate definitive outlier readings, i.e. probably those reporting the excessively high level of PM10. However, there may be problems with readings that gradually increase, specifically those towards the right side of the scatterplot, where it may be hard to discern between true outliers and local trends.

Closer examination of selected stations (Fig. 6) shows that, against the typical backdrop demonstrated by station “1”, only a few stations such as “51” and “59” show unusual patterns of readings. That is, the majority of outliers and suspicious-looking trends can be attributed to a small number of stations, thus validating one of the assumptions. If these stations are eliminated, or at least reduced in their impact on the final outcome, the remaining readings will provide information of higher quality. Thus the problem of data quality seems to lie with particular stations, not with individual readings.
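The observation that the problem lies with stations rather than with individual readings can be illustrated with a short sketch. It is not the analysis performed by the experts: the tuple layout, the function names, the use of the hourly network median as a baseline and the threshold value are all assumptions introduced here for illustration.

```python
from collections import defaultdict
from statistics import mean, median


def station_deviation(readings):
    """Mean absolute deviation of each station from the hourly network median.

    `readings` is a list of (station_id, hour, pm10) tuples (assumed layout).
    """
    by_hour = defaultdict(list)
    for _station, hour, value in readings:
        by_hour[hour].append(value)
    hourly_median = {hour: median(values) for hour, values in by_hour.items()}

    deviations = defaultdict(list)
    for station, hour, value in readings:
        deviations[station].append(abs(value - hourly_median[hour]))
    return {station: mean(devs) for station, devs in deviations.items()}


def suspicious_stations(readings, threshold):
    """Stations whose average deviation exceeds a (hypothetical) threshold."""
    scores = station_deviation(readings)
    return sorted(s for s, score in scores.items() if score > threshold)


# Invented sample values; station identifiers follow those mentioned in the text.
sample = [
    ("1", 0, 20.0), ("2", 0, 22.0), ("51", 0, 80.0),
    ("1", 1, 24.0), ("2", 1, 25.0), ("51", 1, 90.0),
]
print(suspicious_stations(sample, threshold=10.0))
```

Scoring whole stations over a period, rather than filtering single readings, avoids discarding genuine local peaks reported by otherwise well-behaved stations.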

6 Fuzzy Logic Analysis

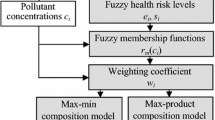

In the decision-making process, a quantitative approach was initially used. The second assumption, as indicated earlier, was about the coverage. That is, the network must have a sufficient number of nodes to compensate for the unreliability of some. Experts determined that fuzzy modeling can be used to determine the number of nodes necessary to ensure higher quality of measurements. Only then can trust-based methods be used.

The initial group decision was to use fuzzy modelling, and the process was supported by the expert knowledge of the group. Measurements from July and August 2018 were collected and used. This data set consisted of hourly data from the Armaag automatic stations and two measurement networks (Airly, Luftdaten). Minute data aggregated into hourly data from measurement stations built by students of the College of Banking was also included in the data set. These data made it possible to construct a fuzzy model whose aim was to estimate the accuracy of measurement data obtained by individual IoT nodes.

To achieve this, the most accurate PM10 concentration was determined with the fuzzy model. Then, these values were compared to the PM10 values obtained from the measurement stations. This was possible because an initial processing procedure based on clustering mechanisms was applied. The application of these mechanisms created the conditions for estimating the data distribution and for standardizing and normalizing data from the IoT nodes.

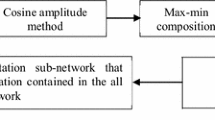

The principle was adopted that the choice of the final Data Mining method is a consequence of the preliminary data analysis. This preliminary data analysis presented in Fig. 7 shows the grouping at low, medium and high values from all networks.

The outcome of the preliminary data analysis indicated that the use of the fuzzy modelling can improve quality of the results from the network, thus satisfying one of the objectives of this step. Further, the use of fuzzy modelling was also considered to support the assessment of trust using data pre-processing based on clustering and evaluation of the quality of this data.

Such grouping of data creates the conditions for building the membership functions and for locating their vertices at the centres of gravity of the pre-processed data. The centre of gravity method was used to place the apex of each membership function. Next, the fuzzy modelling procedure was applied, in which small, average and high values were taken into account based on expert knowledge. The rules built into the model were developed using the complete model construction procedure, in which the number of rules depends on the number of input variables as well as on the granulation of these variables.
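The placement of membership-function apexes at cluster centres can be sketched as follows. The source does not give the shape of the membership functions, so triangular functions with open shoulders at both ends are assumed here; the function names and the numeric cluster values are hypothetical.

```python
def centre_of_gravity(cluster):
    """Centre of gravity of a one-dimensional cluster (its arithmetic mean)."""
    return sum(cluster) / len(cluster)


def memberships(x, centres):
    """Degrees of membership of x in fuzzy sets whose apexes are `centres`.

    `centres` must be sorted and distinct. Triangular functions are assumed:
    each set peaks at its own centre and falls to zero at the neighbouring
    centres; the first and last sets stay at 1 beyond their apex.
    """
    degrees = []
    last = len(centres) - 1
    for i, apex in enumerate(centres):
        if x == apex:
            degree = 1.0
        elif x < apex:
            # Left slope, or left shoulder for the first set.
            degree = 1.0 if i == 0 else max(0.0, (x - centres[i - 1]) / (apex - centres[i - 1]))
        else:
            # Right slope, or right shoulder for the last set.
            degree = 1.0 if i == last else max(0.0, (centres[i + 1] - x) / (centres[i + 1] - apex))
        degrees.append(degree)
    return degrees


# Invented clusters of low, medium and high PM10 values.
clusters = [[8.0, 10.0, 12.0], [28.0, 30.0, 32.0], [55.0, 60.0, 65.0]]
centres = [centre_of_gravity(c) for c in clusters]
print(memberships(20.0, centres))
```

With this layout a reading halfway between two centres belongs equally to both neighbouring sets, which is the behaviour the rule base then has to resolve.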

It was assumed that the number of input variables depends on the number of data obtained from four measurement stations. It was assumed that the value of the output variable is the exact value of the measurement. It was assumed that the station created with the use of a fuzzy model would make it possible to estimate the most accurate value. Figure 8 shows the results of measurements obtained with a fuzzy measurement station. It turns out that measurement errors are high both for sensor stations and for automatic ones.

The analysis of measurement data coming from four measurement stations as well as automatic data stations obtained by means of a fuzzy model was the basis for defining trust in various elements of monitoring networks.

As a result, group decisions were made by the representatives of the Gdańsk Agglomeration Development Forum regarding the possibility of expanding the existing monitoring network with additional monitoring stations. This decision was guided by the understanding of the quality of the data obtained. It stated that IoT nodes with a lower quality of measurement should form the basis for the further development of the network. The need to install additional monitoring stations was indicated, creating an even more extensive measurement network. Group decisions were also made in the construction of the fuzzy model, in which the mechanism of inference was based on expert knowledge.

Further, with regard to the accuracy of measurements, decisions were also made when constructing and evaluating hourly data. Hourly data was obtained on the basis of minute data, and hence could be computed as the average, the maximum, the minimum or the median of the minute measurements. The choice of the average was based on the analysis of other hourly data and required expert assessment. This expert assessment also drew on other assessments of average values that were not published anywhere. It was assumed that all measuring networks with cheap measuring sensors acquire minute data and present the hourly value. However, none of the PM10 data providers described the method by which the hourly value was determined from minute values.
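The effect of this choice can be seen in a small sketch that computes an hourly value from minute readings with each of the four candidate aggregates. The function name, the dictionary of aggregators and the sample values are illustrative assumptions; only the four aggregates themselves come from the text.

```python
from statistics import mean, median

# The four candidate aggregates named in the text.
AGGREGATORS = {"mean": mean, "median": median, "min": min, "max": max}


def hourly_value(minute_readings, method="mean"):
    """Reduce the minute readings of one hour to a single hourly value."""
    if not minute_readings:
        raise ValueError("no readings available for this hour")
    return AGGREGATORS[method](minute_readings)


# Invented minute readings containing one short spike.
minute_readings = [20, 22, 21, 95]
for name in AGGREGATORS:
    print(name, hourly_value(minute_readings, name))
```

A single spike shifts the average considerably while leaving the median almost unchanged, which is why the undocumented aggregation method of each data provider matters when hourly values from different networks are compared.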

The fuzzy model used in this work made it possible to estimate the accuracy of measurements of individual stations in the Gdańsk measurement networks. It also identified those stations whose measurement quality is the lowest and indicated the necessity of extending the measurement networks with stations whose measurement accuracy is high. Finally, it supported the decision-making process regarding the expansion of the network with new IoT nodes.

7 Trust-Based Systems

As the preconditions had been met, it was possible to introduce the notion of trust-based analysis to reduce the impact of ill-behaving nodes (stations). This was an important step from the perspective of the decision-making process. It is worth noting that up to now, the focus of the decision-making process was on a group of humans, whether experts or decision-makers. Data itself was the subject of decisions, but parameters guiding those decisions were set by humans. The trust-based approach required experts to delegate at least some of their knowledge to automated processing agents, something that was not apparent from the start [11].

Trust has been introduced here as a metaphor. That is, the experts did not assume that nodes are somehow ‘trustworthy’ in a human sense of this word, but merely observed that applying the simplified notion of trustworthiness to nodes can be beneficial for the overall network.

The trust-based approach required a certain re-positioning of the problem. First, measurement data is no longer treated as a fact (whether true or not), but merely as an opinion of the node. Second, trustworthiness of the node must be automatically derived from data delivered by the node, with no human intervention [14].

The research area of computational trust management is concerned with the collection, processing and dissemination of trustworthiness and trust in its computational form (see [7] for an overview). It is inspired by social sciences that view trust as one of the major social enablers [10], but it also has its roots in information security, where trust is seen as a foundation of applicable security measures [2]. Research such as the Semantic Web [24] combined trust and provenance to determine the quality of information on the Web.

Trust and trustworthiness have several domain-specific definitions [18, 19], sometimes conflicting. If applied to the problem of data quality in monitoring networks, one can define trust as the extent of rational reliance on monitoring data received from the unit. That is, the user of the data stream can trust data within the stream up to a certain level, determined by the trustworthiness of data. For example, more trustworthy data may be met with more trust, in the form of important decisions being made, while less trustworthy data will be met with less trust, for example by ignoring such data.

Note that trust management techniques can be incorporated into the processing of monitoring data in more than one way. While it may be expected to attribute trustworthiness to monitoring units, and use the provenance of data to determine trustworthiness of data, it is also possible to combine data of various levels of trustworthiness into a fuzzy set, or to post-process data with different levels of trustworthiness into more coherent (and more trustworthy) data, in a manner known e.g. from reputation-based systems.

Trust-based schemes can be classified depending on the source of trust that they can handle and it is useful to briefly describe such classification to determine which scheme can be applied to improve data quality, as discussed below.

7.1 Authority-Based Schemes

These are very popular schemes, known primarily from information security [2]. Trustworthiness of a particular unit is determined here by an authority external to the scheme. For example, an administrator of a computer system may determine the trustworthiness of its users and grant them different levels of access. Changes in trustworthiness must again be monitored by an external procedure (e.g. vetting) and corrections must be made manually.

If applied to the monitoring network, an authority-based scheme implies that trustworthiness of units should be determined by e.g. a group of experts. There is certain potential in such an approach, as the experts already noticed that e.g. the Armaag network is expected to deliver more trustworthy results. However, an authority-based scheme alone cannot provide flexibility and responsiveness when it comes to other networks.

7.2 Reputation-Based Schemes

The class of reputation-based schemes is both wide and popular [23], especially if one considers such schemes as Facebook’s ‘likes’, eBay’s reputation or Spotify recommendations. They all operate by automatically collating information about the extent of perceived trustworthiness from observers/consumers, centrally processing it into a reputation metric of producers/providers and distributing such reputation to interested parties, where it is accepted as the approximation of a trustworthiness of producers.

It is characteristic to those schemes that the trustworthiness is determined automatically by the system (without human intervention), automatically responds to changes in the behavior of producers (as reported by observers) and delivers an objective (or at least intra-subjective) understanding regarding the reputation.

Schemes do not value all opinions to the same extent, as not all opinions are always objective, well-intentioned and well expressed. Thus schemes have to assess not only the trustworthiness of producers but also the trustworthiness of consumers, decreasing the impact of ill-behaving consumers on the overall trustworthiness. Schemes may also implement certain ageing of opinions, so that older opinions weigh less towards the overall reputation. This allows long-term changes in the behavior of the producer to be captured.
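The two mechanisms described above, weighting by the trustworthiness of the observer and ageing of opinions, can be combined in a short sketch. The exponential decay with a half-life, the tuple layout and the function name are assumptions made for illustration; actual reputation schemes differ in how they weight and age opinions.

```python
def reputation(opinions, now, half_life=24.0):
    """Weighted reputation of one producer.

    `opinions` is a list of (score, observer_trust, timestamp) tuples with
    score and observer_trust in [0, 1] (assumed layout). Each opinion is
    weighted by the trustworthiness of its observer and by an exponential
    ageing factor that halves every `half_life` time units.
    Returns None when no opinion carries any weight.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for score, observer_trust, timestamp in opinions:
        age = now - timestamp
        weight = observer_trust * 0.5 ** (age / half_life)
        weighted_sum += weight * score
        total_weight += weight
    return weighted_sum / total_weight if total_weight > 0 else None


# A fresh positive opinion outweighs a negative one that is 24 hours old.
print(reputation([(1.0, 1.0, 24.0), (0.0, 1.0, 0.0)], now=24.0))
```

Because opinions from observers with zero trustworthiness receive zero weight, an ill-behaving observer cannot change the result on its own.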

Reputation-based schemes, while inspired by social systems, have found several applications in technology as well. All technological applications are underpinned by the same notion of providers delivering some services with varying degrees of trustworthiness, and consumers generating statements about individual experiences with providers, where consumers also have a varying degree of trustworthiness when it comes to the quality of their opinions. In some applications, the same technical component can play both roles: provider and consumer.

Such an approach is used e.g. in trust-based routing [15], where routers deliver service of different quality, while components that want to have their traffic routed can select the most trustworthy providers and gossip about their experience. Similarly, trust-based service composition [6] allows consumers to choose the service provider that they can trust on the basis of the experience of others. Cooperation among technical agents [28] uses trustworthiness to select the most trustworthy agents in open systems.

In the case of monitoring networks, such a scheme should be able to calculate the reputation of a monitoring unit on the basis of information submitted by other units. The main challenge lies in the fact that, for the system to operate, there is a need to recreate the stream of opinions from what is currently only a stream of measurements. Let us consider a cluster of two monitoring units that should produce similar results, but that deliver different ones. Assuming that no other information is available, it is unlikely to be possible to automatically determine which data is more trustworthy (i.e. which data more closely reflects the physical reality). Either unit may be trustworthy or not.

7.3 Evidence-Based Schemes

Evidence-based schemes operate by focusing on acquiring and retaining evidence about one’s trustworthiness while deferring the determination of the level of such trustworthiness to interested parties (consumers). Each consumer can then make their own decision regarding the trustworthiness of others on the basis of the available evidence. In contrast to reputation-based schemes, there is no commonly shared notion of trustworthiness and there are no globally available reputations, so decisions can be subjective.

The decision-making process, while left to consumers, can be quite complex (see e.g. [12]), generally mimicking rational human reasoning. The scheme itself is concerned only with the preservation of evidence. To that end, it uses technologies that increase confidence in data.

Blockchain technology (such as Bitcoin [20]) is a current example of such a scheme at work. Nodes within the blockchain network manage a distributed database of evidence that is ever-growing, public and immutable. Individual nodes, however, have a duty to parse this database and to resolve by themselves whether individual transactions are valid.
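The idea of an append-only store of evidence that every consumer can check independently can be sketched with a simple hash chain. This is a deliberately simplified illustration and not the Bitcoin protocol: there is no network, no consensus and no proof-of-work, and the helper names `append` and `verify` as well as the record layout are hypothetical.

```python
import hashlib
import json


def _digest(record, previous_hash):
    """SHA-256 over the record together with the hash of the previous entry."""
    payload = json.dumps({"record": record, "previous": previous_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, record):
    """Append a record, linking it to the hash of the previous entry."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    chain.append({
        "record": record,
        "previous": previous_hash,
        "hash": _digest(record, previous_hash),
    })


def verify(chain):
    """Check that no stored entry has been altered or reordered."""
    previous_hash = "0" * 64
    for entry in chain:
        if entry["previous"] != previous_hash:
            return False
        if entry["hash"] != _digest(entry["record"], previous_hash):
            return False
        previous_hash = entry["hash"]
    return True
```

Any later change to a stored record changes its hash and breaks the link to all subsequent entries, which is what lets each party inspect the evidence without relying on a central authority.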

Considering the challenge of data quality in the monitoring network, it is unlikely that an evidence-based scheme can be directly applied, as it is the objective of the project to achieve a common understanding of trustworthiness. However, the fact that all measurement data (hence ‘the evidence’) will be stored in a database creates an opportunity to explore various algorithms that determine trustworthiness from the common pool of evidence.

7.4 Introducing Trust into the Analysis of Data Quality

As trust is a social and psychological construct, it can be applied to data quality in monitoring networks only by analogy. As already mentioned, the key premise of the introduction of trust and trust-based reasoning is the ability to automatically grade monitoring units, and the data they produce, with a certain level of trustworthiness. This does not imply that units have any kind of volition, but, in a manner known from other trust-based solutions (e.g. [6]), trustworthiness will be attributed to nodes as a convenient metric. Such trustworthiness should be understood as the ability of the node to produce correct data.

Of the three schemes presented earlier (authority-based, reputation-based and evidence-based), the architecture of the monitoring network lends itself to the reputation-based one. However, reputation-based systems flourish only if they are provided with an abundance of opinions that have comparable semantics, come from a large and reasonably varied base of responders (preferably separated from those who provide the service) and are easy to process. Thus the main challenge for monitoring networks lies in extending the base of responders and introducing variety. For that, some elements of the authority-based scheme can be used.

It is therefore proposed here that some initial variety is introduced into the level of trustworthiness of nodes in an arbitrary way. Initially, this can be done manually (in the form of a bootstrap process), attributing higher initial trustworthiness to those nodes that are technically able to produce more correct results. For example, it has already been mentioned that one of the networks used in the experiment has nodes that produce results of higher quality.

This can be complemented by an (again, arbitrary and possibly expert-based) rule-based process that decreases the trustworthiness of those nodes that produce apparently incorrect measurements, such as technically impossible values, physically impossible changes, unlikely drift etc. All those situations tend to indicate technical problems with the node, so it is only fitting to use them to indicate the loss of trust in data produced by the node.
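A rule-based process of this kind might look as follows. This is only a sketch under stated assumptions: the function name `penalise`, the thresholds `max_value` and `max_step` and the fixed `penalty` per violation are hypothetical and would have to be set by experts. Only two of the rules mentioned above (impossible values and implausible changes) are covered; drift detection is omitted.

```python
def penalise(trust, readings, max_value=1000.0, max_step=200.0, penalty=0.1):
    """Lower a node's trust once per rule violation in a series of PM10 readings.

    All thresholds are illustrative assumptions, not values used in the project.
    """
    violations = 0
    previous = None  # last reading that passed the range check
    for value in readings:
        if value < 0.0 or value > max_value:
            violations += 1  # technically impossible value
            continue         # do not use it as a reference for the next reading
        if previous is not None and abs(value - previous) > max_step:
            violations += 1  # physically implausible change between readings
        previous = value
    return max(0.0, trust - penalty * violations)
```

The result is clamped at zero, so a node that keeps misbehaving ends up with no trust rather than with a negative value.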

Apart from this bootstrapping activity and a certain weeding out of misbehaving nodes, nodes should be left to themselves to figure out which one is more trustworthy. Nodes do not produce separate opinions about other nodes, only a stream of measurement data. Therefore it is necessary to generate the stream of opinions about other nodes from that data stream.

Such generation may e.g. lead to opinions in a form of expectations that one node may have about measurements from other nodes, both past and future. Thus every node will not only produce results, but - indirectly - will also produce expectations regarding measurements that will be (or were) obtained by other nodes.

This task can be achieved only because nodes measure certain physical phenomena, such as the concentration of PM10 in the air. Those phenomena follow physical rules that make certain combinations of measurements less believable than others. The already developed pollution spreading model incorporates this knowledge and makes it possible to statistically interpolate the concentration of various pollutants from available measurements.

Due to the nature of both the phenomenon and the model, the confidence in such opinions decreases both in time and in space. Thus the confidence is higher when it comes to short-term predictions near the sensor, and becomes lower when the model has to predict the concentration further ahead in time or further from the sensor.
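How a measurement could be turned into an opinion with a confidence that falls off in space and time can be sketched as below. The pollution spreading model itself is not reproduced here: the expected value is simply passed in as a parameter. The exponential form of the decay, the scale parameters and the `tolerance` used to score agreement are all assumptions made for illustration.

```python
import math


def confidence(distance_km, lag_hours, distance_scale=2.0, time_scale=3.0):
    """Confidence in a prediction, decaying with distance and with time lag.

    The exponential decay and both scale parameters are illustrative assumptions.
    """
    return math.exp(-distance_km / distance_scale) * math.exp(-lag_hours / time_scale)


def opinion(expected, reported, distance_km, lag_hours, tolerance=20.0):
    """Opinion of one node about another node's reading.

    expected is the value the observing node would predict for the other node
    (e.g. from an interpolation model); reported is what the other node measured.
    Returns (agreement, confidence), both in [0, 1].
    """
    agreement = max(0.0, 1.0 - abs(expected - reported) / tolerance)
    return agreement, confidence(distance_km, lag_hours)
```

For instance, `opinion(40.0, 50.0, 0.0, 0.0)` yields an agreement of 0.5 with full confidence, while the same disagreement reported for a distant node or a long time lag carries much less weight.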

Thus it is possible to determine two different aspects of the trustworthiness of a node: one that is related to its ability to report and another that is related to the quality of its data. The former is the synthesis of the outcome of authority-based activities and the ability to make correct predictions, as dictated by the model. Note that, in contrast to social reputation-based systems, this trustworthiness is determined per relationship and per measurement. That is, the same node can have a different level of trustworthiness as a reporter in relation to different nodes, as those nodes can be at different distances. Further, the trustworthiness may vary depending on the temporal distance between the current and the predicted measurement.

Once the node can provide opinions about the trustworthiness of other nodes, it will be possible to introduce known algorithms to determine the trustworthiness of nodes when it comes to data quality.

7.5 The Use of Fuzzy Logic in Trust-Based Systems

As discussed earlier, the project has already used fuzzy modelling as a first approach, with good results. It is therefore useful to consider extending the use of fuzzy logic to the trust-based approach.

The use of fuzzy logic to determine the extent of trustworthiness has already been a subject of research (e.g. [3, 13]). The key advantage is that fuzzy reasoning handles uncertainty and imprecision effectively, in a manner that can be easily comprehended by humans.

Fuzzy logic can be effectively used in recommendation-based systems to deliver the final value of trustworthiness, specifically when pieces of evidence of trustworthiness are incomparable or fuzzy by themselves. Further, it can be used to express a wide range of trust-related situations such as the lack of information or distrust (e.g. in nodes that seem to have been taken over by an adversary) [8].

Several aspects of the proposition presented here lend themselves to fuzzy values and fuzzy processing. For example, the level of confidence in the predictions provided by the model can be expressed in the form of fuzzy values and can then be processed according to the rules of fuzzy logic. In a similar manner, the reasoning about trustworthiness can be presented as fuzzy reasoning.
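As an illustration of how such fuzzy processing might be organised, the sketch below fuzzifies two crisp inputs (agreement with the prediction and confidence in it) into the linguistic terms ‘low’, ‘medium’ and ‘high’ and combines four rules by a weighted average of crisp rule outputs. The membership functions, the rule base and the names `fuzzify` and `fuzzy_trust` are assumptions chosen for brevity; they are not the fuzzy rules built within the project.

```python
def fuzzify(x):
    """Map x in [0, 1] to membership degrees in 'low', 'medium' and 'high'."""
    x = min(1.0, max(0.0, x))
    return {
        "low": max(0.0, 1.0 - 2.0 * x),      # 1 at x = 0, falls to 0 at x = 0.5
        "medium": 1.0 - abs(2.0 * x - 1.0),  # triangle peaking at x = 0.5
        "high": max(0.0, 2.0 * x - 1.0),     # 0 up to x = 0.5, rises to 1 at x = 1
    }


def fuzzy_trust(agreement, confidence):
    """Combine agreement and confidence into a trust value in [0, 1]."""
    a, c = fuzzify(agreement), fuzzify(confidence)
    # (rule strength, crisp output) pairs; min is fuzzy AND, max is fuzzy OR.
    rules = [
        (min(a["high"], c["high"]), 1.0),  # agrees, confident       -> trust
        (min(a["low"], c["high"]), 0.0),   # disagrees, confident    -> distrust
        (a["medium"], 0.5),                # mixed agreement         -> neutral
        (max(c["low"], c["medium"]), 0.5), # limited information     -> neutral
    ]
    # At least one rule always fires, because the three memberships sum to 1.
    total = sum(strength for strength, _ in rules)
    return sum(strength * output for strength, output in rules) / total
```

With this rule base, lack of information (low confidence) pulls the result towards the neutral value 0.5 rather than towards distrust, which keeps the two situations distinguishable.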

Consequently, it may be beneficial to implement fuzzy reasoning as a computational foundation of the trust-based approach.

8 Conclusion

This paper presents a case study of a process of decision-making that was used to resolve the challenge of data quality in heterogeneous monitoring networks that deal with air quality. Networks consist of a limited number of automatic stations with high measurement accuracy and a large number of low-cost measuring nodes with low measurement quality.

The approach assumed that data quality is a function of a monitoring unit that can be expressed as the trustworthiness of this unit. Following expert-based group decision making, initial results were obtained through fuzzy modelling of both minute and hourly data, to determine the trustworthiness of units.

Analysis of minute data indicated the need to use the median operator as the one that best corresponds to the value obtained from other measuring nodes. Group decisions were also made in the assessment of fuzzy rules built for the purpose of determining interpolated values. On the basis of this assessment, the trustworthiness of nodes was proposed as a solution to the problem of data quality. The accuracy of automatic station measurements was taken into account.

It seems that in the future the application of fuzzy modelling should take place both for the assessment of the location of measurement stations and for the assessment of measurement accuracy.

The solution could be complemented by the use of reputation-based schemes that are based on computational forms of trust. Such schemes were presented and discussed in this paper. If applied, the quality of data can still be expressed as the trustworthiness of a monitoring node, verified by measurements taken by other nodes, and consolidated using the physical model of the phenomena that are measured.

The authors expect that the combination of human-based group decision making and the automated processing controlled by computational trust algorithms may deliver significantly improved results.

References

1. Airly. https://airly.eu/pl/

2. Anderson, R.: Security Engineering: A Guide to Building Dependable Distributed Systems. Wiley, Hoboken (2001)

3. Aref, A., Tran, T.: A hybrid trust model using reinforcement learning and fuzzy logic. Comput. Intell. 34, 515–541 (2018). https://doi.org/10.1111/coin.12155

4. Armaag. https://armaag.gda.pl/

5. Chabik, J., Orłowski, C., Sitek, T.: Intelligent knowledge-based model for IT support organization evolution. In: Szczerbicki, E., Nguyen, N.T. (eds.) Smart Information and Knowledge Management, pp. 177–196. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-04584-4_8

6. Chang, E., Dillon, T., Hussain, F.: Trust and Reputation for Service-Oriented Environments: Technologies for Building Business Intelligence and Consumer Confidence. Wiley, Hoboken (2006). ISBN: 978-0470015476

7. City Breathes. https://frag.org.pl/portfolio/trojmiastooddycha/

8. Cofta, P.: Trust, Complexity and Control: Confidence in a Convergent World. Wiley (2007). https://doi.org/10.1002/9780470517857. ISBN: 9780470061305

9. Dong, Y.C., Xu, Y., Yu, S.: Linguistic multiperson decision making based on the use of multiple preference relations. Fuzzy Sets Syst. 160, 603–623 (2009)

10. Fukuyama, F.: Trust: The Social Virtues and the Creation of Prosperity. Touchstone Books (1996). ISBN: 0684825252

11. Golbeck, J.: Computing with Social Trust. Springer, London (2009). https://doi.org/10.1007/978-1-84800-356-9. ISBN: 978-1-84996-793-8

12. Górski, J., Cyra, Ł., Jarzębowicz, A., Miler, J.: Representing and appraising Toulmin model arguments in trust cases. In: Grasso, F. (et al.) The 8th International Workshop on Computational Models of Natural Argument (CMNA 8), Patras, Greece, 21 July 2008, 18th European Conference on Artificial Intelligence, 1 1, pp. 26–30 (2008)

13. Griffiths, N.: A fuzzy approach to reasoning with trust, distrust and insufficient trust. In: Klusch, M., Rovatsos, M., Payne, T.R. (eds.) CIA 2006. LNCS (LNAI), vol. 4149, pp. 360–374. Springer, Heidelberg (2006). https://doi.org/10.1007/11839354_26

14. Hewitt, E., Carpenter, J.: Cassandra: The Definitive Guide, 2nd edn. O’Reilly Media, Sebastopol (2016)

15. Jensen, C.D., Connell, P.O.: Trust-based route selection in dynamic source routing. In: Stølen, K., Winsborough, W.H., Martinelli, F., Massacci, F. (eds.) iTrust 2006. LNCS, vol. 3986, pp. 150–163. Springer, Heidelberg (2006). https://doi.org/10.1007/11755593_12

16. Kacprzyk, J.: Group decision making with a fuzzy linguistic majority. Fuzzy Sets Syst. 18, 105–118 (1986)

17. Luftdaten homepage. https://luftdaten.info/

18. McKnight, D.H., Chervany, N.L.: The Meanings of Trust. University of Minnesota (1996). http://www.misrc.umn.edu/wpaper/wp96-04.htm

19. Nafi, K.W., Shekha Kar, T., Hossain, A., Hashem, M.M.A.: An advanced certain trust model using fuzzy logic and probabilistic logic theory. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 3(12) (2012)

20. Nakamoto, S.: Bitcoin: A Peer-to-Peer Electronic Cash System (2008). https://bitcoin.org/bitcoin.pdf. Accessed 18 Dec 2018

21. Osborne, J.W.: Best Practices in Data Cleaning: A Complete Guide to Everything You Need to Do Before and After Collecting Your Data. Sage Publications (2012). ISBN: 978-1412988018

22. Pastuszak, J., Orłowski, C.: Model of rules for IT organization evolution. In: Nguyen, N.T. (ed.) Transactions on Computational Collective Intelligence IX. LNCS, vol. 7770, pp. 55–78. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-36815-8_3

23. Resnick, P., Zeckhauser, R., Friedman, R., Kuwabara, K.: Reputation systems. Commun. ACM 43(12), 45 (2000)

24. Richardson, M., Agrawal, R., Domingos, P.: Trust management for the semantic web. In: Fensel, D., Sycara, K., Mylopoulos, J. (eds.) ISWC 2003. LNCS, vol. 2870, pp. 351–368. Springer, Heidelberg (2003). https://doi.org/10.1007/978-3-540-39718-2_23

25. Rodríguez, R., Martínez, S., Herrera, F.: A group decision making model dealing with comparative linguistic expressions based on hesitant fuzzy linguistic term sets. Inf. Sci. 241, 28–42 (2013)

26. Rodríguez, R.M., Martínez, L., Herrera, F.: Hesitant fuzzy linguistic term sets for decision making. IEEE Trans. Fuzzy Syst. 20, 109–119 (2012)

27. Wang, J.H., Hao, J.: A new version of 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans. Fuzzy Syst. 14(3), 435–445 (2006)

28. Wierzbicki, A.: Trust and Fairness in Open, Distributed Systems. Studies in Computational Intelligence, vol. 298. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-13451-7. ISBN: 978-3-642-13450-0

29. Wu, Z.B., Xu, J.P.: Possibility distribution-based approach for MAGDM with hesitant fuzzy linguistic information. IEEE Trans. Cybern. 46, 694–705 (2016)

Copyright information

© 2019 Springer-Verlag GmbH Germany, part of Springer Nature

About this chapter

Cite this chapter

Orłowski, C., Cofta, P., Wąsik, M., Welfler, P., Pastuszka, J. (2019). The Use of Group Decision-Making to Improve the Monitoring of Air Quality. In: Nguyen, N., Kowalczyk, R., Mercik, J., Motylska-Kuźma, A. (eds) Transactions on Computational Collective Intelligence XXXIV. Lecture Notes in Computer Science, vol 11890. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-60555-4_9

DOI: https://doi.org/10.1007/978-3-662-60555-4_9

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-60554-7

Online ISBN: 978-3-662-60555-4

eBook Packages: Computer Science, Computer Science (R0)