Abstract

Microphysicalism seems the undisputed paradigm not only in solid-state physics and condensed matter physics, but also within many other branches of science that use computer simulations: the reduced description on the micro level seems epistemically favorable. This chapter investigates whether this view can be defended even in those cases where an ontological reduction is not under dispute. It will be argued that the reduced description on the level of the constituents is a fruitful approach only when the mathematical models exhibit scale separation.

Keywords

- Lyapunov Exponent

- Direct Numerical Simulation

- Scale Separation

- Explanatory Reduction

- Adiabatic Elimination

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In many areas of philosophy, microphysicalism seems an undisputed dogma. As Kim (1984, p. 100) puts it: “ultimately the world—at least, the physical world—is the way it is because the micro-world is the way it is […].” But not only on the ontological, but also on the epistemic level, there is a clear preference for a micro-level description over a macro-level one. At least within the physical sciences, the governing laws on the micro-level are often seen as somewhat ‘simpler’ than the macro-level behavior: here universal laws can be applied to the movement of individual atoms or molecules, while the macro-level seems to behave in a rather intricate way.

Condensed matter physics, and particularly solid state physics as its largest branch, provides ready examples: equilibrium and non-equilibrium statistical mechanics together with electromagnetism and quantum mechanics are used to model the interactions between individual atoms or molecules in order to derive properties of macro-phenomena like phase transitions, e.g. the melting of ice or the onset of laser activity. More generally, the whole study of complex systems (for example, within complexity theory, various theories of self-organization such as Haken’s synergetics, or Prigogine’s non-equilibrium thermodynamics) builds on the reductionist paradigm.

In all these cases, the ontological reduction is not disputed. A solid body is made up of atoms; a complex system is even defined by its constituents: it is made up of multiple interconnected parts. As indicated in the above citation by Kim, this often leads philosophers to claim an ontological priority for the micro level. This priority has recently been called into question by Hüttemann (2004). But what does this imply about a possible explanatory or epistemic priority of the micro over the macro description?

This paper leaves questions regarding the ontological priority aside and focusses on scientific models in which the ontological micro-reduction is not under dispute. I address whether and how an ontological reduction may entail an explanatory reduction. Hence, this paper is not about explanatory or epistemic reduction in its most general sense, but zooms in on a specific type of reductionist explanation, or rather prediction, in which quantitative information about macro-level quantities such as temperature or pressure is derived from the micro-level description. It will be shown that such a derivation succeeds only for certain types of micro-reductionist models, namely those exhibiting scale separation. A separation of scales is present in the mathematical micro-model when the relevant scales differ by an order of magnitude.Footnote 1 To illustrate what scale separation amounts to, consider as a very simple example, discussed in more detail later in the paper, the motion of the Earth around the Sun. This can be treated as a two-body problem as the forces exerted on the Earth by all other celestial bodies are smaller by orders of magnitude than the force exerted by the Sun. We have a separation of scales here where the relevant scale is force or energy. Relevant scales may also be time, length, or others.

The epistemic pluralism I aim to defend on the basis of this analysis is not just another example of a non-reductionist physicalism. Rather it suggests how to draw a line between emergence and successful micro-reduction in terms of criteria the micro-formulation has to fulfill. It is argued that one should consider scale separation as a specific class of reduction. It will be shown that the identified criterion, scale separation, is of much broader relevance than simply the analysis of condensed matter systems. Hence in this sense, these fields do not occupy a special position concerning their methodology. Scale separation as a criterion for epistemically successful micro-reduction has important implications, particularly for the computational sciences where micro-reductionist models are commonly used.

The following Sect. 5.2 introduces the type of reductionist models this paper is concerned with, i.e. mathematically formulated micro-reductionist models in which the ontological reduction is not under dispute. The criteria of successful reduction will be detailed in terms of what is referred to as ‘generalized state variables’ of the macro system. Section 5.3 discusses two similar mathematical models in non-equilibrium physics that are formulated on a micro level to predict certain macro quantities, namely: the semi-classical laser theory in solid-state physics and current models of turbulent flows in fluid dynamic turbulence. It is argued that the relevant difference in the mathematical models is that while the former exhibits scale separation, the latter does not. The absence of scale-separation, as will be shown, renders the contemporary micro-reductionist approaches to turbulence unsuccessful, while the semi-classical laser theory allows for quantitative predictions of certain macro variables. The laser is chosen as a case study because despite being a system far from equilibrium it allows for quantitative forecasts. Section 5.4 puts the debate in a broader context, showing that a separation of scales is not only relevant for complex systems, but also for fundamental science. Section 5.5 generalizes the concept to local scale separation, i.e. cases where more than one scale dominates. Section 5.6 discusses the importance and implications the special role of scale-separation in micro-reduction has for various questions within the philosophy of science, such as emergent properties and the use of computer simulations in the sciences.

2 Explanation and Reduction

Solid state physics derives the macro-properties of solids from the material’s properties on the scale of its atoms and molecules and thus seems a paradigm example of an (interlevel or synchronic) reductionist approach within the sciences. Within the philosophy of science, this claim is, however, controversial: as pointed out by Stöckler (1991), well-established tools within the field, such as the so-called ‘slaving principle’ of Haken’s theory of synergetics, are seen by proponents of reductionist views as clear-cut examples of reduction, while anti-reductionists use the very same examples to support their views.

In order to make sense of those contradictory views, we need to analyze in more detail what reduction refers to: speaking of a part-whole relation between the atoms and the solid body is an ontological statement, while deriving the macroscopic description of the solid body from the evolution equations of its atoms and molecules concerns the epistemic description. In Sect. 5.2.1 I want to follow Hoyningen-Huene (1989, 1992, 2007) and distinguish methodological, ontological, epistemic, and explanatory reduction.Footnote 2 The criteria of a ‘successful’ micro reduction will be spelled out in Sect. 5.2.2: I focus on the prediction of certain macro-features of the system in quantitative terms. Throughout this paper the focus is on micro-reductions as a part-whole relation between reduced \(r\) (macro) and reducing level \(R\) (micro), \(r \to R\); however, the reduced and reducing descriptions do not need to correspond to micro and macroscopic descriptions.

2.1 Types of Reduction

Methodological reduction concerns the methodologies used to study the reduced and reducing phenomena. For example, a methodological reductionism within biology assumes that biological phenomena can only be adequately studied with the methods of chemistry or physics.

Ontological reduction is concerned with questions about whether the phenomena examined on the reduced \(r\) and the reducing level \(R\) differ as regards their substantiality. The classical example, the reduction of the phenomena of thermodynamics to those of statistical mechanics, is clearly a case of ontological reduction: a thermodynamic system such as a gas in a macroscopic container is made up of its molecules or atoms. Exactly the same is true for a solid body that reduces ontologically to its constituents.

Such an ontological reduction can be distinguished from epistemic reduction. The latter considers the question as to whether knowledge of the phenomena on the reduced level \(r\) can be traced back to knowledge of the \(R\)-phenomena. As in the thermodynamic example, the knowledge of the \(R\)-phenomena is typically formulated in terms of (natural) laws. Together with certain other specifications, like bridge rules that connect the vocabulary of the reduced and reducing description, the \(R\)-laws can be used to derive the laws of \(r\). Along these lines it is often said that the laws of thermodynamics (\(r\)) reduce to those of statistical mechanics (\(R\)). Note, however, that although the ontological reduction is undisputed, even this example of statistical mechanics and thermodynamics is not an undisputed example of epistemic or explanatory reduction.

Beyond this epistemic reduction, we may also ask whether the \(r\)-phenomena can be explained with the help of the resources of the reducing level \(R\). This is referred to as explanatory reduction. Note that when we assume, like Nagel (1961) did in his seminal writing on reduction, a deductive-nomological model of explanation, then explanation coincides with deduction from natural laws, and hence explanatory reduction coincides with epistemic reduction. However, not all types of explanations are of the deductive-nomological type (see Wimsatt 1976).

Note that even in cases where law-like explanations exist on the level of the reducing theory, the correspondence rules may be in need of explanation. Hence, despite a successful epistemic reduction, we may not accept it as an explanation and hence as an explanatorily successful reduction. Hoyningen-Huene mentions the example of epistemic reduction of psychological states to neuronal phenomena. The correspondence rule relates certain psychological sensations like the sensation of red to certain neural states. Why this sensation relates to a certain neural state remains in need of an explanation.Footnote 3

As Hoyningen-Huene points out, a generic explication of explanatory reduction needs to remain vague because it needs to leave room for various types of explanations. For the remainder of this paper, the focus is on a specific type of explanatory reduction that is detailed in the following Sect. 5.2.2. Though the analysis remains in the realm of mathematical modeling, I want to refer to the reduction as ‘explanatory’ instead of ‘epistemic’, because in many areas of science it is not so much laws of nature or theories that play a central role, but models (see Morgan and Morrison 1999). Nonetheless, theories may play a central role in deriving the models. I will argue that the micro-level \(R\)-description needs to entail certain features in order for an undisputed ontological reduction to lead to a successful explanatory reduction.

2.2 Quantitative Predictions and Generalized State Variables

Complexity theory provides an excellent example of a methodological reduction: chaos theory or synergetics, first developed in the context of physical systems, are now also applied to biological or economic systems (e.g. Haken 2004). Ontological reduction is not under dispute here; my concern is with uncontested examples of ontological micro-reduction. For these I will investigate in more detail the epistemic or explanatory aspects of the reduction. My main thesis is that ontological reduction does not suffice for explanatory reduction, not even in those cases where a mathematical formulation of the micro-level constituents can be given. In this Sect. 5.2.2 I explicate in more detail what I characterise as a successful explanatory reduction, thereby distinguishing an important subclass of reduction that has not yet received attention within the philosophy of science.

A scientific model commonly does not represent all aspects of the phenomena under consideration. Within the literature on models and representation, often the term ‘target system’ is used to denote that it is specific aspects that our scientific modeling focuses on. Consider a simple example of a concrete model, namely a scale model of a car in a wind tunnel. This model does not aim to represent all aspects of the car on the road. Rather the target system consists of a narrow set of some of the car’s aspects, namely the fluid dynamical characteristics of the car, such as its drag. The model does not adequately represent and does not aim to adequately represent other features of the car, such as its driving characteristics. This also holds true for abstract models and more generally for any theoretical description. This seemingly obvious feature of scientific investigation has important consequences. For our discussion of explanatory reduction, it implies that we need to take a closer look at which aspects of the \(r\)-phenomena we aim to predict from the micro description \(R\).

In what follows I want to focus on one specific aspect that is important in a large number of the (applied) sciences, namely the prediction of certain quantitative aspects of the macro system such as the temperature and pressure at which a solid body melts. This information is quite distinct from that sought in many investigations within complexity theory. There, the analysis on the microlevel often gives us information on the stability of the system as a whole. Chaos theory allows us to predict whether a system is in a chaotic regime, i.e. whether the system reacts sensitively to only minute changes in certain parameters or variables. Here, for example, the Lyapunov exponents provide us with insights into the stability of the macro system. The first Lyapunov exponent characterizes the rate at which two infinitesimally close trajectories in phase space separate. The conditions under which this information can be derived are well understood and detailed in Oseledets’ multiplicative ergodic theorem (Oseledets 1968).
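To make the notion of a Lyapunov exponent concrete, the following minimal sketch estimates it for the logistic map \(x \to r x(1 - x)\). The logistic map is my own choice of illustration and does not appear in the cases discussed in this chapter; the function name and the parameter values are likewise assumptions. The exponent is approximated as the long-run average of \(\log |f'(x)|\) along a trajectory.

```python
import math

def logistic_lyapunov(r, x0=0.3, n_transient=1000, n_steps=100_000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) by averaging log|f'(x)|."""
    x = x0
    for _ in range(n_transient):              # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_steps):
        slope = abs(r * (1.0 - 2.0 * x))      # |f'(x)|: local stretching rate
        total += math.log(max(slope, 1e-300)) # guard against log(0)
        x = r * x * (1.0 - x)
    return total / n_steps

if __name__ == "__main__":
    for r in (3.2, 3.9):
        lam = logistic_lyapunov(r)
        regime = "chaotic" if lam > 0 else "regular"
        print(f"r = {r}: Lyapunov exponent ~ {lam:.3f} ({regime})")
```

The sign of the result classifies the regime (negative for the stable cycle at r = 3.2, positive in the chaotic regime at r = 3.9), but the number itself says nothing about where the system actually is at a given time.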

The information about the Lyapunov exponent is quantitative in nature insofar as the exponents have a certain numerical value. However, this quantitative information on the reduced level only translates into a qualitative statement about the macro system’s stability, not quantitative information about its actual state. My concern here is much more specific: it focuses on when a micro-reductionist model can be expected to yield information about the system’s macro properties that are described in quantitative terms. I refer to those quantities that are related to the actual state of the system as ‘generalized state variables’. The term ‘state variable’ is borrowed from thermodynamics and denotes variables that have a unique value in a well-defined macro state. The qualifier ‘generalized’ indicates that, unlike in thermodynamics, the value may depend on the history of the system.Footnote 4 So the question to be addressed in the following is when a micro-reductionist model can yield quantitative information about the generalized state variables of the macro system.

3 Predicting Complex Systems

I will contend in the following that a separation of scales is the requirement for successful micro-reduction for complex systems with a large number of interacting degrees of freedom on the microlevel. Therefore I argue that one should distinguish a further class of micro models, namely those that exhibit scale separation. Depending on the phenomenon under consideration and on the chosen description, these scales can be time, length, energy, etc.

A very simple example, the movement of the Earth around the Sun modeled as a two-body problem, is used to illustrate the concept of scale separation in Sect. 5.3.1. Arguments for scale separation as a condition for quantitative predictions on the generalized state variables are developed through two case studies: I compare two similar, highly complex, non-equilibrium dynamical systems, namely the laser (Sect. 5.3.2) and fluid dynamic turbulence (Sect. 5.3.3). I claim that while the former exhibits scale separation, the latter does not, and this will be identified as the reason for a lack of quantitative information regarding the macro level quantities in the latter case.

3.1 Scale Separation in a Nutshell

Before beginning my analysis, let me expand a little on a common answer to the question of when mathematical models lend themselves to quantitative forecasts. Recall the oft-cited derivation of the model of the Earth’s motion around the Sun from Newton’s theory of gravitation and the canonical formulation of classical mechanics in terms of Newton’s laws. This may be seen as a micro-reductionist approach in the sense introduced above: the goal is to derive information on the macro level, e.g. on the length of the day-night cycle, from the micro level that consists of planetary motions. The Earth-Sun motion clearly is a model allowing for quantitative predictions. The common answer as to why this is so is that the evolution equations are integrable. As early as 1893 Poincaré noted that the evolution equations of more than two bodies that interact gravitationally are no longer integrable and might yield chaotic motion (Poincaré 1893, pp. 23–61). On this common answer, whether or not quantitative forecasts of the state variables are feasible thus seems to depend on the complexity of the system: complex systems, i.e. systems with a large number of degrees of freedom (in this case larger than two) that are coupled to each other via feedbacks, and hence exhibit nonlinear evolution equations of their variables, resist quantitative predictions.

To deal with these complex systems, we have to rely on complexity theory or chaos theory in order to determine information about the stability of the systems and other dynamical properties. But as noted above, we are often not interested in this information on the system’s stability alone, but want quantitative information on generalized state variables. Although, strictly speaking, the motion of the Earth around the Sun is a multi-body problem, it is possible to reduce its motion to an effective two-body problem. So the important question is when the two-body approximation is adequate. And this is not addressed by Poincaré’s answer. Note that the model of planetary motion goes beyond Newton’s theory insofar as it entails additional assumptions, such as neglecting the internal structure of the planets, specific values for the bodies’ masses, or neglecting other bodies such as other planets or meteorites. With the model comes a tacit knowledge as to why these approximations are good approximations: the motion of the Earth can well be described by its motion around the Sun only because the forces exerted on the Earth by other bodies, even by the large planets like Saturn and Jupiter, are smaller by orders of magnitude than the force exerted by the Sun. If there were large-scale objects, say, of the size of Jupiter or Saturn, that orbit around some far away center but come very close to the orbit of the Earth from time to time, then the reduced description in terms of two bodies would break down.

Thus the model of the Earth-Sun motion works because the forces exerted on the Earth by all other bodies (planets, asteroids, etc.) are smaller by orders of magnitude than the force exerted on the Earth by the Sun. With information about the distance between the relevant bodies, the forces can be directly translated in terms of work or energy. In other words, the micro model exhibits a separation of the requisite energy scales. Generally speaking, scale separation may refer to any scale like energy, time, length, or others, depending on the formulation of the micro model.
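The size of this separation can be checked with a back-of-the-envelope calculation. The sketch below compares the Sun’s gravitational pull on the Earth with that of Jupiter and Saturn at roughly their closest approach. The masses and distances are rounded textbook values that I supply for illustration; they are not taken from this chapter. Newton’s constant and the Earth’s mass cancel in the ratio, so only \(M/r^{2}\) enters.

```python
# Rounded textbook values, assumed for illustration only (not precise ephemeris data).
AU = 1.496e11        # astronomical unit in metres
M_SUN = 1.989e30     # solar mass in kg
BODIES = {           # name: (mass in kg, approximate closest distance to Earth in AU)
    "Jupiter": (1.898e27, 4.0),
    "Saturn": (5.683e26, 8.0),
}

def force_ratio(mass_kg, distance_au):
    """Sun's pull on the Earth divided by the pull of another body.

    G and the Earth's mass cancel, so the ratio depends only on M / r^2.
    """
    sun = M_SUN / (1.0 * AU) ** 2
    other = mass_kg / (distance_au * AU) ** 2
    return sun / other

if __name__ == "__main__":
    for name, (mass, dist) in BODIES.items():
        print(f"Sun / {name}: {force_ratio(mass, dist):.1e}")
```

Under these assumed values the Sun’s pull exceeds Jupiter’s by roughly four orders of magnitude and Saturn’s by roughly five, which is the sense in which the force scales separate.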

3.2 Lasers

The so-called “semi-classical laser theory”, originally developed by H. Haken in 1962, is a very successful application of the micro reductionist paradigm detailed in Sect. 5.2. The properties of the macro-level laser are derived from a quantum mechanical description of the matter in the laser cavity and its interaction with light which is treated classically; the propagation of light is derived from classical electromagnetism. As Haken (2004, p. 229) puts it “[t]he laser is nowadays one of the best understood many-body problems.” As I will show in this section, the key feature of the micro description that allows for a successful reduction is the display of scale separation.

A laser is a specific lamp capable of emitting coherent light. The acronym ‘laser’, light amplification by stimulated emission of radiation, explicates the laser’s basic principle:

-

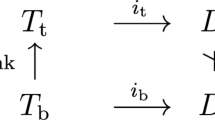

The atoms in the laser cavity (these may be a solid, liquid, or gas) are excited by an external energy source. This process is called pumping. It is schematically depicted in Fig. 5.1 for the most simple case of a two-mode laser: atoms in an excited state. On relaxing back to their ground state, the atoms emit light in an incoherent way, just like any ordinary lamp.Footnote 5

-

When the number of excited atoms is above a critical value, laser activity begins: an incident light beam no longer becomes damped exponentially due to the interaction with the atoms, rather, the atoms organize their relaxation to the ground state in such a way that they emit the very same light in terms of frequency and direction as the incident light beam. This is depicted in Fig. 5.1: the initial light beam gets multiply amplified as the atoms de-excite, thereby emitting light that in phase, polarization, wavelength, and direction of propagation is the same as the incident light wave—the characteristic laser light. One speaks of this process also as self-organization of the atoms in the laser medium.

The laser is discussed here because it is a fairly generic system: first, it is a system that features a large number of interacting degrees of freedom. The incoming light beam interacts with the excited atoms of the laser medium in the cavity; the number of atoms involved—all the atoms in the laser cavity—is of the order of the Avogadro number, i.e. of the order of \(10^{23}\). Secondly, it is a system far from equilibrium in that the ‘pumping’ energy must be supplied to keep the atoms in the excited state. Thirdly, the system is dissipative as it constantly emits light.

The light is described classically in terms of the electric field \(\varvec{E}(\varvec{x},t)\) which obeys Maxwell’s equations, and decomposed into its modes \(E_{k} (t)\), where \(k = 1,2, \ldots\) labels the respective cavity mode. The semi-classical laser equations describe the propagation of each mode in the cavity as an exponentially damped wave propagation through the cavity, superimposed by a coupling of the light field to the dipole moments \(\alpha_{i} (t)\) of the atoms and stochastic forces that incorporate the unavoidable fluctuations when dissipation is present. The dipole moment of atom \(i\) is itself not static, but changes with the incident light and the number of electrons in an excited state. To be more precise, the evolution equation of \(\alpha_{i} (t)\) couples to the inversion \(\sigma_{i} (t)\) which is itself not static, but dependent on the light field E. Here the index \(i\) labels the atoms and runs from 1 to \(O(10^{23} )\).

This leaves us with the following evolution equations:

where \(F_{\lambda } (t)\), \(\Gamma _{\lambda } (t)\) and \(\Gamma _{\sigma ,\lambda } (t)\) denote stochastic forces.

The details and a mathematical treatment can be found in Haken (2004, pp. 230–240). For our purposes it suffices to note that as it stands, the microscopic description of the matter-light interaction is too complex to be solved even with the help of the largest computers available today or in the foreseeable future: for every mode \(k\) of the electric field, we have in Eq. (5.1) a system of the order of \(10^{23}\) evolution equations, namely three coupled and thus nonlinear evolution equations for each atom of the cavity.

The methodology known as the ‘slaving principle’ reflects the physical process at the onset of the laser activity and allows us to drastically simplify the micro-reductionist description. Generally we will expect the amplitude of the electric field E to perform an exponentially damped oscillation. However, when the inversion \(\sigma_{i}\) and thus the number of atoms in excited states increases, the system suddenly becomes unstable and laser activity begins, i.e. at some point coherent laser light will be emitted. This means that the light amplitude becomes virtually undamped and thus the internal relaxation time of the field is very long. In fact, when this happens the amplitude of the electric field is by far the slowest motion of the system. Hence the atoms, which move very fast compared to the electric field, follow the motion of the light beam almost immediately. One says that the electric field enslaves all other degrees of freedom, i.e. the inversion and the dipole moment of all the involved atoms. Hence, the term ‘slaving principle’ describes a method for reducing the number of effective degrees of freedom. This allows elimination of the variables describing the atoms, i.e. the dipole moments \(\alpha_{i}\) and the inversion \(\sigma_{i}\). From the set of laser equations above, one then obtains a closed evolution equation for the electric field E that can be solved.

The crucial feature of the model that enables the elimination of the atomic variables is that the relaxation time of the atomic dipole moment is smaller by far than the relaxation time of the electric field. Expressed differently: the relevant time scales in this model separate. Consequently, the onset of laser activity at a fixed energy level can be predicted from the micro-reductionist model that describes the atoms and the light with the help of quantum mechanics and electromagnetism.
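The logic of the slaving principle can be illustrated with a toy system of the kind often used to introduce adiabatic elimination. The sketch below is not the laser system of Eq. (5.1): the two-variable model, the variable names `q1` (slow, unstable mode) and `q2` (fast, strongly damped mode), and all parameter values are assumptions chosen for illustration. Because `q2` relaxes a hundred times faster than `q1` grows, one may set its time derivative to zero, solve for `q2` in terms of `q1`, and obtain a closed equation for `q1` alone. The code integrates both the full and the reduced system with a simple Euler scheme and records how far they drift apart.

```python
def simulate(lam=1.0, gamma=100.0, a=1.0, b=1.0, dt=1e-3, t_end=15.0):
    """Compare a slow-fast toy system with its adiabatically reduced version.

    Full system:     dq1/dt = lam*q1 - a*q1*q2
                     dq2/dt = -gamma*q2 + b*q1**2      (gamma >> lam)
    Reduced system:  q2 ~ (b/gamma)*q1**2, hence
                     dr/dt  = lam*r - (a*b/gamma)*r**3
    Returns the final q1 of the full system, the final value of the reduced
    variable r, and the largest |q1 - r| seen along the way.
    """
    q1, q2 = 0.1, 0.0   # full system: small initial amplitude, fast mode at rest
    r = 0.1             # reduced system: same initial amplitude
    max_diff = 0.0
    for _ in range(int(round(t_end / dt))):
        dq1 = lam * q1 - a * q1 * q2
        dq2 = -gamma * q2 + b * q1 * q1
        dr = lam * r - (a * b / gamma) * r ** 3
        q1 += dt * dq1          # explicit Euler step; stable since gamma*dt = 0.1
        q2 += dt * dq2
        r += dt * dr
        max_diff = max(max_diff, abs(q1 - r))
    return q1, r, max_diff

if __name__ == "__main__":
    q1_full, q1_reduced, max_diff = simulate()
    print(f"full: {q1_full:.4f}  reduced: {q1_reduced:.4f}  max diff: {max_diff:.4f}")
```

With these assumed parameters both descriptions settle at the same amplitude, \(\sqrt{\lambda \gamma /(ab)} = 10\), and the reduced equation tracks the full one closely throughout. Lowering `gamma` towards `lam` shrinks the gap between the time scales, and the elimination of `q2` is then no longer justified.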

3.3 Fluid Dynamic Turbulence

In order to stress that indeed the separation of (time) scales is the relevant feature that allows for a successful micro-reduction in the sense defined in Sect. 5.2, I contrast the semi-classical laser theory with the description of fluid dynamic turbulence. The two case studies are similar in many respects: both are systems far from equilibrium, both are distinguished by a large number of degrees of freedom, and the interacting degrees of freedom give rise to nonlinear evolution equations. Moreover, in the current description, neither system displays an obviously small parameter that allows for a perturbative treatment.

The equivalent equations for fluid motion are the Navier-Stokes equations which, in the absence of external forcing, are:

\[ \frac{DU_{i}}{Dt} = - \frac{\partial p}{\partial x_{i}} + \frac{1}{Re}\frac{\partial^{2} U_{i}}{\partial x_{j} \partial x_{j}} \tag{5.2} \]

where \(U_{i}\) denotes the velocity field, \(p\) the pressure, Re is a dimensionless number that characterizes the state of the flow (high Re corresponds to turbulent flows), and \(D/Dt = \partial /\partial t + U_{i} \partial /\partial x_{i}\) the substantial derivative. Unlike the laser Eq. (5.1), these are not direct descriptions on the molecular level, but mesoscopic equations that describe the movement of a hypothetical mesoscopic fluid particle, maybe best understood as a small parcel of fluid traveling within the main flow. The Navier-Stokes equations are the micro-reductionist descriptions I want to focus on and from which one attempts to derive the macro-phenomena of turbulence by the evolution equations governing its constituents.Footnote 6

For a fully developed turbulent flow, the Reynolds number Re is too large for these equations to be solved analytically or numerically.Footnote 7 This is similar to the semi-classical laser theory. In the latter case the slaving principle provided us with a method for reducing the number of degrees of freedom, something that is not yet possible for fully developed turbulent flows.

While there exist fruitful theoretical approaches to turbulence that can successfully model various aspects of it, these remain largely unconnected to the underlying equations of motion (5.2) that express the micro-reductionist description. Theoretical approaches are based on scaling arguments, i.e. on dimensional analysis (Frisch 1995), or approach the issue with the help of toy models: recognizing certain properties of Eq. (5.2) as pivotal in the behavior of turbulence, one identifies simpler models that share those properties and that can be solved analytically or numerically (Pope 2000). Various models of turbulence in the applied and engineering sciences make use of simplified heuristics (Pope 2000). In Sect. 5.5, where local scale separation is discussed as a weaker requirement than scale separation, I will come back to one of these approaches, the so-called ‘large eddy simulations’ that underlie many numerical simulations of turbulence.

Despite the success of these theoretical approaches to turbulence, it remains an unsolved puzzle as to how to connect the toy models or the scaling laws to the underlying laws of fluid motion (Eq. 5.2). Common to all these approaches to turbulence is that they are models on the macro-level and are not derived from the micro-level model expressed by the Navier-Stokes equations. The reductive approach in the case of turbulence has not yet been successful, which leaves turbulence, at least for the time being, as “the last unsolved problem in classical physics” (Falkovich and Sreenivasan 2006). To analyse why this is so, let us take a closer look at the physics of a turbulent flow.

Turbulence is characterized by a large number of active length or time scales which can be heuristically associated with eddies of various sizes and their turnover time, respectively (see Fig. 5.2). The simplest heuristic picture of turbulence is that eddies of the size of the flow geometry are generated: these eddies are then transported along by the mean flow, thereby breaking up into smaller and smaller eddies. This so-called ‘eddy cascade’ ends in the smallest eddies in which the energy of the turbulent motion is again dissipated into energy of the mean flow. It is the existence of such a range of active scales that spoils the success of the micro-reductionistic approach. When we focus on the eddies’ turn-over time, the analogy, or rather the discrepancy, with the laser becomes most striking: for the laser, at the point of the phase transition there is one time-scale that slaves all other degrees of freedom (as these are smaller by orders of magnitude), whereas in a turbulent flow there exists a large number of active time-scales.

Fig. 5.2 Fluid dynamic turbulence: large eddies of the size of the flow geometry are created and transported by the mean flow (here from right to left), thereby breaking up into smaller eddies. Within the smallest eddies (left), the turbulent energy is dissipated into mean energy of the flow. Note that within the so-called ‘inertial range’, eddies of various sizes coexist.
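How wide the range of active scales becomes can be estimated from standard scaling arguments of the kind referred to above (see e.g. Frisch 1995; Pope 2000). In Kolmogorov’s phenomenology, which I bring in here as an outside assumption rather than a result of this chapter, the ratio between the largest and the smallest eddy size grows as \(Re^{3/4}\), so that resolving every scale in three dimensions requires of the order of \(Re^{9/4}\) grid points. The function names in this minimal sketch are hypothetical.

```python
def scale_ratio(reynolds):
    """Largest over smallest eddy size, L/eta ~ Re^(3/4) (Kolmogorov estimate)."""
    return reynolds ** 0.75

def grid_points_3d(reynolds):
    """Order-of-magnitude number of grid points to resolve all scales, ~ Re^(9/4)."""
    return scale_ratio(reynolds) ** 3

if __name__ == "__main__":
    for re in (1e4, 1e6, 1e8):
        print(f"Re = {re:.0e}: L/eta ~ {scale_ratio(re):.1e}, "
              f"grid points ~ {grid_points_3d(re):.1e}")
```

The estimate makes the contrast with the laser tangible: no single scale dominates, and the number of scales that must be tracked grows rapidly with Re instead of collapsing onto one slow variable.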

To summarize, two complex, non-equilibrium systems were addressed: the laser and fluid dynamic turbulence. The mathematical formalization in both descriptions is fairly similar; both are problems within complex systems theory. However, while the first problem allows one to derive macroscopic quantities of interest from the micro-model, for turbulence this is not possible. The reason for this is a lack of separation of time and length scales because in turbulent flows eddies of various sizes and various turn-over times are active.

4 Scale Separation, Methodological Unification, and Micro-Reduction

A separation of scales is featured in many complex systems. Indeed, the term ‘slaving principle’ was originally coined within the semiclassical description of the laser, but is now widely applied in various areas of complex systems, chaos or catastrophe theory (e.g. Zaikin and Zhabotinsky 1970). The methodology coincides with what is sometimes referred to as ‘adiabatic elimination’. The slaving principle has also been applied successfully to mathematical models within biology and the social sciences (Haken 2004). We may see its use as an example of a successful methodological reduction. However, in order to argue that micro-reduction with scale separation constitutes an important class of explanatory reduction, one must ask whether this is a useful concept only within the analysis of complex systems. After all, it is often argued that condensed matter physics is special within the physical sciences in that it relates to statistical mechanics or thermodynamics and cannot be fully reduced to the theories of the four fundamental forces, i.e. electromagnetic, gravitational, strong, and weak interaction. In the following Sect. 5.4.1 I therefore want to show that scale separation is also an essential characteristic of successful micro-reductions in other areas of physics. Section 5.4.2 then shows how the idea of a separation of scales can also accommodate scale-free systems like critical systems within equilibrium statistical mechanics.
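How adiabatic elimination exploits a separation of time scales can be sketched with a toy system of one slow variable \(u\) and one fast, strongly damped variable \(s\). The equations, parameter values, and the function name `simulate` below are assumptions chosen for illustration in the spirit of textbook presentations of synergetics; they are not the laser equations discussed earlier. With a damping rate \(\gamma\) much larger than the growth rate \(\lambda\), setting \(\dot{s} \approx 0\) gives \(s \approx u^{2}/\gamma\), which turns the two-variable problem into a closed equation for \(u\) alone.

```python
import math


def simulate(lam=0.1, gamma=50.0, u0=0.05, s0=0.0, dt=1.0e-3, t_end=100.0):
    """Integrate a full slow-fast system and its reduced version (explicit Euler).

    Full system (illustrative toy model):
        du/dt = lam * u - u * s        (slow variable)
        ds/dt = -gamma * s + u**2      (fast variable, gamma >> lam)
    Reduced system after adiabatic elimination, s ~ u**2 / gamma:
        du/dt = lam * u - u**3 / gamma
    Returns the final values (u, s, u_reduced).
    """
    u, s, u_red = u0, s0, u0
    for _ in range(int(t_end / dt)):
        du = lam * u - u * s
        ds = -gamma * s + u * u
        du_red = lam * u_red - u_red ** 3 / gamma
        u += dt * du
        s += dt * ds
        u_red += dt * du_red
    return u, s, u_red


if __name__ == "__main__":
    u, s, u_red = simulate()
    print("full model     u =", u)
    print("reduced model  u =", u_red)
    print("slaved value   u^2/gamma =", u * u / 50.0, " actual s =", s)
    print("predicted steady state sqrt(lam*gamma) =", math.sqrt(0.1 * 50.0))
```

In this sketch the reduced one-variable model reproduces the long-time behaviour of the full model because the fast variable relaxes on a time \(1/\gamma\) that is far shorter than the time \(1/\lambda\) on which the slow variable evolves. If the two rates are made comparable, the elimination step is no longer justified.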

4.1 Fundamental Laws: Field Theories and Scale Separation

We can show how scale separation is a key issue for deriving quantitative predictions about macro phenomena from a micro-reductionistic approach even in areas with an elaborate theoretical framework such as quantum chromodynamics (QCD) and quantum electrodynamics (QED). Despite their differences, these two theories are technically on a similarly advanced level: both are gauge theories, both are known to be renormalizable, we understand how a non-Abelian gauge theory like QCD differs from the Abelian theory of QED, and so forth. For QED we can derive good quantitative predictions. Indeed, perturbative models deriving from quantum field theory constitute the basis for large parts of quantum chemistry. However, for QCD, this is only possible at small distances or, equivalently, in the high energy regime. This is due to the fact that, while the coupling constant α, i.e. a scalar-valued quantity determining the strength of the interaction, is small for electromagnetic interactions, in fact \(\alpha_{\text{QED}} \approx 1/137 \ll 1\), the coupling constant \(\alpha_{\text{QCD}}\) for strong interactions is large, except for short distances or high energies. For example, it is of the order of 1 for distances of the order of the nucleon size. Hence, speaking somewhat loosely, since field theories do not contain the term ‘force’, for strongly interacting objects, the different forces can no longer be separated from each other.

It is the smallness of the coupling constant in QED that allows a non-field theoretical approach to, for example, the hydrogen atom: fine and hyperfine structure are nicely visible in higher order perturbation theory. The heuristic picture behind the physics of strong interactions, as suggested by our account of scale separation, is that here the (chromo-electric) fine structure and hyperfine structure corrections are of the order of the unperturbed system, i.e. the system lacks a separation of the relevant scales. More generally as regards modern field theories, one may see the success of so-called ‘effective field theories’ in contemporary theoretical particle physics as an application of the principle of scale separation (Wells 2013).
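The separation of scales in the hydrogen atom can be made explicit with the standard order-of-magnitude estimates for its energy levels. The expressions below are textbook results quoted as an illustration, with \(m_e\) and \(m_p\) the electron and proton masses and \(c\) the speed of light; they are not derived in this chapter.

\[
E_{\text{gross}} \sim \alpha_{\text{QED}}^{2}\, m_e c^{2}, \qquad
E_{\text{fine}} \sim \alpha_{\text{QED}}^{4}\, m_e c^{2}, \qquad
E_{\text{hyperfine}} \sim \frac{m_e}{m_p}\, \alpha_{\text{QED}}^{4}\, m_e c^{2},
\]

so that

\[
\frac{E_{\text{fine}}}{E_{\text{gross}}} \sim \alpha_{\text{QED}}^{2} \approx 5 \times 10^{-5}, \qquad
\frac{E_{\text{hyperfine}}}{E_{\text{fine}}} \sim \frac{m_e}{m_p} \approx 5 \times 10^{-4}.
\]

Each correction is smaller than the preceding level by several orders of magnitude, which is what makes the perturbative hierarchy usable. If the same counting is applied with a coupling of order 1, as the heuristic picture for strong interactions suggests, the corresponding ratios are of order 1 and no such hierarchy survives.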

4.2 Critical Phenomena

Section 5.3 introduced the concept of scale separation by means of the laser which involves a non-equilibrium phase transition. Also, equilibrium phase transitions as described by the theory of critical phenomena can be understood in terms of a separation of scales, in this case length scales. I focus on this below because it shows how scale-free systems may originate from scale separation.

A typical example of a continuous equilibrium phase transition is a ferromagnet that passes from non-magnetic behavior at high temperatures to magnetic behavior when the temperature decreases below the critical Curie temperature. While the description of such equilibrium statistical mechanical systems is formulated on a micro scale of the order of the atomic distance within the ferromagnet, one is actually interested in the macroscopic transition from a nonmagnetic to a magnetic phase, for which many of the microscopic details are irrelevant.Footnote 8 Within critical theory, one speaks here of ‘universality classes’ when different microscopic realizations yield the same macroscopic properties.

For a long time such phase transitions remained an unsolved problem due to their remarkable features, in particular the universal behaviour of many systems at the critical point, i.e. at the phase transition. Classical statistical mechanics simply fails to account for these phenomena due to density fluctuations. Let us take a closer look at the physics at the point of the phase transition.

With the help of the renormalization group we can integrate out the microscopic degrees of freedom. This procedure yields a coarse-grained description similar to that encountered in deriving the Navier-Stokes equations. The central point in the Ising model, a crude model of a magnet, is an invariance at the critical point, namely: the free energy is invariant under coarse-graining. This is so because the system is self-similar at the point where the phase transition occurs: no characteristic length scale exists, as the correlation length, i.e. the typical distance over which the spins in the magnet are correlated, diverges. The diverging correlation length is indeed necessary for the renormalization procedure to work. The renormalization approach is similar to a mean field description; however, self-similarity implies that the renormalization procedure is exact: we study not the original system at some length scale \(l_{1}\) with a large number of degrees of freedom, but a rescaled one at length \(l_{2}\) which gives us exactly the same physics.
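The role of the correlation length under coarse-graining can be illustrated with a minimal sketch. It uses the one-dimensional nearest-neighbour Ising chain, for which summing over every second spin is exact and maps the dimensionless coupling \(K\) to \(K'\) with \(\tanh K' = \tanh^{2} K\). This model is an assumption chosen for simplicity: unlike the ferromagnet discussed above, the one-dimensional chain has no phase transition at finite temperature, so the sketch only shows how the correlation length behaves under rescaling. The function names are hypothetical.

```python
import math


def decimate(coupling):
    """One exact decimation step for the 1D Ising chain: tanh K' = tanh(K)**2."""
    return math.atanh(math.tanh(coupling) ** 2)


def correlation_length(coupling):
    """Correlation length in units of the lattice spacing: xi = -1 / ln(tanh K)."""
    return -1.0 / math.log(math.tanh(coupling))


def flow(coupling, steps):
    """Return the sequence of couplings obtained by repeated decimation."""
    couplings = [coupling]
    for _ in range(steps):
        couplings.append(decimate(couplings[-1]))
    return couplings


if __name__ == "__main__":
    for k in flow(1.5, 5):
        print(f"K = {k:8.5f}   xi = {correlation_length(k):8.4f}")
```

Each step doubles the lattice spacing and halves the correlation length measured in lattice units. A finite correlation length therefore changes under rescaling, and only a vanishing or a diverging one is left invariant, which is the sense in which the rescaled system can give exactly the same physics only when the correlation length diverges.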

This invariance that is based on a diverging correlation length is what distinguishes the Ising model from the Navier-Stokes case and makes it similar to the semi-classical laser theory insofar as we can reformulate the diverging correlation length as an extreme case of a separation of scales. A diverging scale can be understood as a limiting case of scale separation: one scale goes to infinity and the behavior of the whole system is determined by only this scale as the other degrees of freedom are, in the language of non-equilibrium phase transitions, slaved by the diverging scale.Footnote 9

5 Perturbative Methods and Local Scale Separation

Discussions of various branches of physics have shown how scale separation is a universal feature of an explanatorily successful micro-reduction. In this section I briefly sketch how to generalize the concept of scale separation to the weaker requirement of local separation of scales. The universal behavior we encountered in the preceding section in critical theory, for example, is then lost. But as will be shown, non-standard perturbative accounts as well as some standard techniques in turbulence-modeling actually build on local scale separation.

As the introductory example of the movement of the Earth around the Sun showed, more traditional methodologies which may not be connected with reduction, such as perturbative accounts, may also be cast in the terms of scale separation. Such perturbation methods work when the perturbation is small or, in other words, the impacts of the higher perturbative expansions are smaller by orders of magnitude than the leading term.

In singular perturbation theory, as applied, for example, to so-called ‘layer-type problems’ within fluid dynamics, the condition that the perturbation is ‘small’ is violated within one or several layers at the boundary of the flow or in the interior of the spatio-temporal domain. The standard perturbative account that naively sets the perturbation to zero in the whole domain fails as this would change the very nature of the problem. The key to solving such problems—which is lucidly illustrated in Prandtl’s (1905) boundary layer theory—is to represent the solution to the full problem as the sum of two expansions: loosely speaking, the solution in one domain is determined by the naive perturbative account, while the solution in the other domain is dominated by the highest derivative. I want to refer to this and the following examples as ‘local scale separation’ as it is still the separation of the relevant scales that allows for this approach; however, the scales do not separate on the entire domain.Footnote 10
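The construction from two expansions can be sketched on a standard textbook layer-type problem, \(\varepsilon y'' + y' + y = 0\) with \(y(0) = 0\) and \(y(1) = 1\). This model problem, the parameter value, and the function names are assumptions added for illustration; they are not taken from Prandtl's boundary layer theory itself. Setting \(\varepsilon = 0\) gives the naive (outer) solution, which cannot satisfy the condition at \(x = 0\); inside a thin layer of width \(\varepsilon\) the highest derivative dominates and gives the inner solution; the composite adds both and subtracts their common limit.

```python
import math


def exact(x, eps):
    """Exact solution of eps*y'' + y' + y = 0, y(0) = 0, y(1) = 1 (eps < 1/4)."""
    root = math.sqrt(1.0 - 4.0 * eps)
    r_slow = (-1.0 + root) / (2.0 * eps)
    r_fast = (-1.0 - root) / (2.0 * eps)
    return (math.exp(r_slow * x) - math.exp(r_fast * x)) / (
        math.exp(r_slow) - math.exp(r_fast))


def outer(x):
    """Naive perturbative solution: eps set to zero, keeping y(1) = 1."""
    return math.exp(1.0 - x)


def inner(x, eps):
    """Layer solution near x = 0, where the highest derivative dominates."""
    return math.e * (1.0 - math.exp(-x / eps))


def composite(x, eps):
    """Outer plus inner solution minus their common limit (the constant e)."""
    return outer(x) + inner(x, eps) - math.e


def max_error(approx, eps, n=1000):
    """Largest deviation from the exact solution on a uniform grid over [0, 1]."""
    return max(abs(exact(i / n, eps) - approx(i / n)) for i in range(n + 1))


if __name__ == "__main__":
    eps = 0.01
    print("naive outer solution:", max_error(outer, eps))
    print("composite solution:  ", max_error(lambda x: composite(x, eps), eps))
```

In this sketch the naive solution is wrong by an amount of order 1 at the boundary, while the composite stays close to the exact solution over the whole interval. The scales separate only locally: the layer has width \(\varepsilon\), whereas the rest of the domain varies on a length of order 1.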

Regular perturbation theory can also fail when the small perturbations sum up; this happens when the domain is unbounded. The techniques used here, multiple scale expansion and the method of averaging, again draw on local scale separation. For a technical account of the non-standard perturbative methods discussed here see Kevorkian and Cole (1996).
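A standard textbook illustration of such a failure, added here as an example and not taken from the chapter, is the weakly nonlinear oscillator \(\ddot{x} + x + \varepsilon x^{3} = 0\) with \(x(0) = 1\), \(\dot{x}(0) = 0\) and \(0 < \varepsilon \ll 1\). The regular expansion to first order reads

\[
x(t) = \cos t + \varepsilon \left[ -\tfrac{3}{8}\, t \sin t + \tfrac{1}{32} \left( \cos 3t - \cos t \right) \right] + O(\varepsilon^{2}).
\]

The term proportional to \(t \sin t\) grows without bound, so the nominally small correction becomes as large as the leading term once \(t \sim 1/\varepsilon\). A multiple scale expansion treats the slow time \(T = \varepsilon t\) as a separate variable and absorbs this growth into a frequency shift,

\[
x(t) \approx \cos\!\left[ \left( 1 + \tfrac{3}{8}\, \varepsilon \right) t \right],
\]

which remains bounded and accurate for times of order \(1/\varepsilon\). The approach works because the oscillation period and the drift time \(1/\varepsilon\) are widely separated.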

But local scale separation is not just the decisive feature that makes non-standard perturbative approaches work, it is also used more broadly in scientific modeling. An example involves ‘large-eddy simulations’, an approach to fluid dynamic turbulence that is very popular within the engineering sciences. Here the large-scale unsteady turbulent motion is represented by direct numerical integration of the Navier-Stokes equations that are believed to describe the fluid motion. Direct numerical simulation of all scales is computationally too demanding for most problems; thus within large-eddy simulations the impact of the small-scale motion of the eddies is estimated via some heuristic model. This separate treatment of the large and the small scale motion is only possible when the so-called ‘inertial range’ (see Fig. 5.1) is large enough so that the motion of the smallest eddies inside the dissipation range, which are expected to have universal character in the sense that they are not influenced by the geometry of the flow, and the motion of the largest eddies inside the energy-containing range can indeed be separated. Cast in terms of scale separation, large-eddy simulations thus work when the typical scales of the dissipation range are much smaller than those of the energy-containing range (cf. Fig. 5.1; Pope 2000, p. 594f.).
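Why resolving all scales directly is so demanding can be estimated in a few lines. The sketch below assumes the standard Kolmogorov estimate \(L/\eta \sim Re^{3/4}\) for the ratio of the largest to the smallest eddy size and counts one grid point per smallest eddy in each of three dimensions; prefactors are ignored, and the function names are hypothetical.

```python
import math


def scale_ratio(reynolds):
    """Largest-to-smallest eddy size, L/eta ~ Re**(3/4) (Kolmogorov estimate)."""
    return reynolds ** 0.75


def dns_grid_points(reynolds):
    """Rough number of grid points needed to resolve all scales in 3D."""
    return scale_ratio(reynolds) ** 3


def decades_between_scales(reynolds):
    """Number of decades between the largest and the smallest eddy size."""
    return math.log10(scale_ratio(reynolds))


if __name__ == "__main__":
    for re in (1.0e3, 1.0e4, 1.0e6):
        print(f"Re = {re:.0e}: L/eta = {scale_ratio(re):.1e}, "
              f"grid points = {dns_grid_points(re):.1e}")
```

Under these assumptions the number of grid points grows as \(Re^{9/4}\), so a Reynolds number of \(10^{4}\) already calls for roughly \(10^{9}\) points. The same estimate shows when a large-eddy simulation is plausible: only at high Reynolds numbers are the largest and smallest scales several decades apart.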

6 Reduction, Emergence and Unification

The case studies in this paper exemplify how scale separation is the underlying feature of successful micro-reductionist approaches in different branches of physics, and how scale separation provides a unified view of various different methods used in diverse branches: the slaving principle, adiabatic elimination, standard and non-standard perturbative accounts, and critical theory. Success was thereby defined in Sect. 5.2 in epistemic terms: it amounts to deriving quantitative information of generalized state variables of the macro system from the micro-description. Also, in other areas of science where micro-reductionist models are employed and these models are formulated in mathematical terms, scale separation plays a crucial role.Footnote 11 In the remainder I want to address some of the implications the central role of scale separation has for the philosophical debates in condensed matter physics and complex systems theories, as well as the debates on reduction and modeling in the sciences more generally.

Consider first the question of whether condensed matter physics, and particularly solid state physics, as well as complex systems analysis, occupy a special position within the physical sciences. It was shown that scale separation is the underlying feature not only when applying the slaving principle or adiabatic elimination but also in perturbative accounts or in QED. This clarifies why the often articulated idea that condensed matter physics or complexity theories play a special methodological role cannot be defended as a general claim (see Kuhlmann 2007; Hooker et al. 2011).

What results is a kind of scepticism regarding a reorganization of the sciences according to the various methods used by each (e.g. Schmidt 2001). To some extent this may not be a fruitful line to pursue because for many methods the underlying idea, namely scale separation, is the same in different areas: seemingly different methodologies like adiabatic elimination or the slaving principle are just different ways of expressing the fundamental fact that the system under consideration exhibits scale separation.

As mentioned before, well-established analytic tools like the slaving principle in Haken’s theory of synergetics are seen by proponents of a reductionistic view as clear-cut examples of reduction, while anti-reductionists use the very same examples to support the contrary point of view. Identifying scale separation as the characteristic feature of an explanatorily successful micro-reduction adds more nuance to the picture: the analysis of the semi-classical laser theory was able to reveal how the slaving principle, adiabatic elimination, and others are good examples of methodological reduction. Moreover, it showed that the application of the scaling principle turns an undisputed ontological micro-reduction into a successful explanatory one. Micro-reduction with scale separation thus provides not only the basis for a unified methodology used over various branches of the mathematical sciences, but also an important specific class of epistemic or explanatory reduction. It also showed that questions of ontological reduction must be clearly separated from epistemic or explanatory aspects. Despite uncontested micro-reduction on an ontological level, complex systems without scale separation may provide examples of emergent epistemic features. Fluid dynamic turbulence provides a case in point. I hence want to adopt a pluralistic stance concerning the question of epistemic priority of either the macro or micro level.Footnote 12

This epistemic pluralism has important implications for contemporary computational sciences. It was the improvement of numerical methods and the sharp increase in computational power over the last decades that boosted the power of micro-reductionist approaches: computers made it possible to calculate micro-evolution equations that are more accessible than the equations that describe the macro behaviour. A field like solid-state physics owes large parts of its success to the employment of computers. The same is true for many other fields. For example, contemporary climate models try to resolve the various feedback mechanisms between the independent components of the overall climate system in order to predict the macro quantity ‘global mean atmospheric temperature’. For models with such practical implications it becomes particularly clear why information about the stability of the system does not suffice; rather, what is needed are generalized state variables at the macro level.

Hence, the type of information many computer simulations aim for is quantitative predictions of generalized state variables. The use of these models, however, often does not distinguish between an epistemically or explanatorily successful reduction and an ontological reduction. As my analysis has shown, even for cases where the ontological reduction is undisputed, as in condensed matter physics or climatology, there are serious doubts whether a micro-reductionist explanation is successful if the scales in the micro-model do not separate. This is the case for certain fluid dynamic problems and hence for climatology. The problem casts doubt on the often unquestioned success of numerically implemented micro-models.

This doubt also seems to be shared by scientists who have begun to question the usefulness of what appears to be the excessive use of computer simulations. In some fields where the reductionist paradigm was dominant one can see the first renunciations (e.g. Grossmann and Lohse 2000) of this approach. Just as it is not helpful to describe the motion of a pendulum by the individual motion of its \(10^{23}\) atoms, micro-reductionist models that are numerically implemented are not a panacea for every scientific problem, despite the undisputed ontological reduction to the micro-description.

Notes

- 1.

The term ‘scale separation’ is borrowed from the theory of critical phenomena, but applied more generally in this paper.

- 2.

Hoyningen-Huene distinguishes further types of reduction which for the purpose of this paper that focuses on mathematically formulated models and theories are not of relevance.

- 3.

It should be noted that when paradigm cases of reduction like thermodynamics are contested it is often the bridge rules that are problematic.

- 4.

This feature will only become relevant in the later sections of this paper, when I briefly touch on the applicability of my approach beyond many-body systems.

- 5.

Note that this phase transition is not adequately described within the semiclassical approach as the emission of normal light due to spontaneous emission necessitates a quantum mechanical treatment of light.

- 6.

The Navier-Stokes equations can be derived from the micro-level description given by Liouville’s equation that gives the time evolution of the phase space distribution function. The derivation of the Navier-Stokes equation from even more fundamental laws, is, however, not the concern of this paper.

- 7.

There have been, however, recent advances in direct numerical simulations of the Navier-Stokes equations in the turbulent regime. Still, the Reynolds numbers investigated today are below those investigated in laboratory experiments, and the simulations take weeks or months of CPU time. Boundaries also pose a severe challenge to the direct numerical simulations of the Navier-Stokes equations (see Pope 2000).

- 8.

Note that here I only claim that this reduction is successful in so far as it allows quantitative predictions of certain macro-variables. I do not address the question as to whether the micro-model offers an encompassing explanation, as discussed, for example, in Batterman (2001).

- 9.

This is the reason why, strictly speaking, the renormalization procedure works only at the critical point. The more remote the system is from the critical point, the more dominant become finite fluctuations of the correlation length that spoil the self-similarity.

- 10.

Thanks to Chris Pincock for pointing out this aspect.

- 11.

- 12.

Compare Hüttemann (2004) for a critical discussion on the ontological priority of the micro level.

References

Batterman, R.W.: The Devil in the Details: Asymptotic Reasoning in Explanation, Reduction and Emergence. Oxford University Press, New York (2001)

Black, F., Scholes, M.: The pricing of options and corporate liabilities. J. Polit. Econ. 81, 637–654 (1973)

Drossel, B., Schwabl, F.: Self-organized critical forest-fire model. Phys. Rev. Lett. 69, 1629–1632 (1992)

Falkovich, G., Sreenivasan, K.R.: Lessons from hydrodynamic turbulence. Phys. Today 59(4), 43–49 (2006)

Frisch, U.: The Legacy of A. N. Kolmogorov. Cambridge University Press, Cambridge (1995)

Grossmann, S., Lohse, D.: Scaling in thermal convection: a unifying theory. J. Fluid Mech. 407, 27–56 (2000)

Haken, H.: Synergetics. Introduction and Advanced Topics. Springer, Berlin, Heidelberg (2004)

Hooker, C.A., Gabbay, D., Thagard, P., Woods, J.: Philosophy of Complex Systems, vol. 10. Handbook of the Philosophy of Science. Elsevier, Amsterdam (2011)

Hoyningen-Huene, P.: Epistemological reduction in biology: intuitions, explications and objections. In: Hoyningen-Huene, P., Wuketits, F.M. (eds.) Reductionism and Systems Theory in the Life Sciences, pp. 29–44. Kluwer, Dordrecht (1989)

Hoyningen-Huene, P.: On the way to a theory of antireductionist arguments. In: Beckermann, A., Flohr, H., Kim, J. (eds.) Emergence or Reduction? Essays on the Prospects of Nonreductive Physicalism, pp. 289–301. de Gruyter, Berlin (1992)

Hoyningen-Huene, P.: Reduktion und Emergenz. In: Bartels, A., Stöckler, M. (eds.) Wissenschaftstheorie, pp. 177–197. Mentis, Paderborn (2007)

Hüttemann, A.: What’s Wrong With Microphysicalism? Routledge, London (2004)

Kuhlmann, M.: Theorien komplexer Systeme: Nicht-fundamental und doch unverzichtbar. In: Bartels, A., Stöckler, M. (eds.) Wissenschaftstheorie, pp. 307–328. Mentis, Paderborn (2007)

Kevorkian, J., Cole, J.D.: Multiple Scale and Singular Perturbation Methods. Springer, New York (1996)

Kim, J.: Epiphenomenal and supervenient causation. Midwest Stud. Philos. 9, 257–270 (1984); reprinted in: Kim, J.: Supervenience and Mind, pp. 92–108. Cambridge University Press, Cambridge

Morgan, M., Morrison, M.C. (eds.): Models as Mediators: Perspectives on Natural and Social Science. Cambridge University Press, Cambridge (1999)

Nagel, E.: The Structure of Science. Routledge and Kegan Paul, London (1961)

Oseledets, V.: A multiplicative ergodic theorem. Characteristic Lyapunov exponents of dynamical systems. Trudy Moskov. Mat. Ob 19, 179 (1968)

Poincaré, J.H.: Leçons de mécanique céleste : professées à la Sorbonne, Tome I: Théorie générale des perturbations planétaires. http://gallica.bnf.fr/ark:/12148/bpt6k95010b.table (1893)

Pope, S.B.: Turbulent Flows. Cambridge University Press, Cambridge (2000)

Prandtl, L.: Über Flüssigkeitsbewegung bei sehr kleiner Reibung. In: Verhandlungen des dritten Internationalen Mathematiker-Kongresses, pp. 484–491. Heidelberg, Teubner, Leipzig (1905)

Schmidt, J.C.: Was umfasst heute Physik?—Aspekte einer nachmodernen Physik. Philosophia naturalis 38, 271–297 (2001)

Stöckler, M.: Reductionism and the new theories of self-organization. In: Schurz, G., Dorn, G. (eds.) Advances of Scientific Philosophy, pp. 233–254. Rodopi, Amsterdam (1991)

Wells, J.: Effective Theories in Physics. Springer, Dordrecht (2013)

Wimsatt, W.C.: Reductive explanation: A functional account. In: Cohen, R.S., Hooker, C.A., Michalos, A.C., van Evra, J.W. (eds.) PSA 1974, Proceedings of the 1974 Biennial Meeting, Philosophy of Science Association, pp. 671–710. Reidel, Dordrecht, Boston (1976)

Zaikin, N., Zhabotinsky, A.: Concentration wave propagation in two-dimensional liquid phase self oscillating system. Nature 225, 535–537 (1970)

© 2015 Springer-Verlag Berlin Heidelberg

Cite this chapter

Hillerbrand, R. (2015). Explanation Via Micro-reduction: On the Role of Scale Separation for Quantitative Modelling. In: Falkenburg, B., Morrison, M. (eds) Why More Is Different. The Frontiers Collection. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-43911-1_5

DOI: https://doi.org/10.1007/978-3-662-43911-1_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43910-4

Online ISBN: 978-3-662-43911-1

eBook Packages: Physics and Astronomy, Physics and Astronomy (R0)