Abstract

Often we can describe the macroscopic behaviour of systems without knowing much about the nature of the constituents of the systems let alone the states the constituents are in. Thus, we can describe the behaviour of real or ideal gases without knowing the exact velocities or places of the constituents. It suffices to know certain macroscopic quantities in order to determine other macroscopic quantities. Furthermore, the macroscopic regularities are often quite simple. Macroscopic quantities are often determined by only a few other macroscopic quantities.

1 Introduction

Often we can describe the macroscopic behaviour of systems without knowing much about the nature of the constituents of the systems let alone the states the constituents are in. Thus, we can describe the behaviour of real or ideal gases without knowing the exact velocities or places of the constituents. It suffices to know certain macroscopic quantities in order to determine other macroscopic quantities. Furthermore, the macroscopic regularities are often quite simple. Macroscopic quantities are often determined by only a few other macroscopic quantities. This fact is quite remarkable, as Jerry Fodor noted:

Damn near everything we know about the world suggests that unimaginably complicated to-ings and fro-ings of bits and pieces at the extreme micro-level manage somehow to converge on stable macro-level properties. […] [T]he ‘somehow’, really is entirely mysterious […] why there should be (how there could be) macro level regularities at all in a world where, by common consent, macro level stabilities have to supervene on a buzzing, blooming confusion of micro level interactions (Fodor 1997, p. 161).

The puzzle is that on the one hand macroscopic behaviour supervenes on the behaviour of the constituents, i.e. there is no change in the macroscopic behaviour without some change at the micro-level. On the other hand, not every change in the states of the constituents leads to a change on the macro-level. To some extent the macro-behaviour is independent of what is going on at the micro-level. The questions we will address in this paper are whether there is an explanation for the fact that, as Fodor put it, the micro-level “converges on stable macro-level properties”, and whether there are lessons from this explanation for other issues in the vicinity.

Various metaphors have been used to describe this peculiar relation of the micro-level to the macro-level. The macro-behaviour has been said to be “autonomous” (Fodor 1997), it has been characterized as “insensitive to microscopics” or due to “higher organizing principles in nature” (Laughlin and Pines 2000, p. 261) and above all macroscopic behaviour is said to be “emergent”, where emergence implies novelty, unexplainability and irreducibility. There is no consensus about how best to define the concept of emergence, partly because there is no uncontroversial set of paradigmatic examples and partly because the concept of emergence plays a role in a variety of philosophical and scientific contexts (for a discussion see Humphreys and Bedau 2008, Introduction).

As a consequence of these conceptual difficulties we will in the first part of the paper focus on the notion of the stability of macro-phenomena. One obvious advantage of this terminological choice consists in the fact that stability as opposed to emergence allows for degrees. Even though there is no precise concept of emergence it seems uncontroversial that whether or not some behaviour falls under this concept should be an all or nothing affair. The very word “stability” by contrast allows for behaviour to be more or less stable. However, in the second part of the paper we will also take up some issues that play a role in debates about emergence.

Even though the concept of stability has recently been used in various different senses within the philosophy of science, there is a clear conceptual core: Stability is a property we attribute to entities (things, properties, behaviour, sets, laws etc.) if the entity in question does not change even though other entities, that are specified, do change. This definition captures the notions of stability that have recently been discussed in various contexts. Woodward and Mitchell call laws or causal generalizations “stable” relative to background conditions if they continue to hold, even though the background conditions change (Mitchell 2003, p. 140; Woodward 2007, p. 77). Lange calls a set of statements stable if their truth-value would remain the same under certain counterfactual changes (Lange 2009, p. 29). What interests us in this paper is the stability of the behaviour of macro-systems vis-à-vis changes with respect to the micro-structure of the system. So the changes we consider concern not external or background conditions but rather system-internal features.

An explanation for the stability of macro-phenomena is not only an interesting project in itself. We do think that our discussion of stability throws some light on some issues that play a role in debates about emergence, as we will explain from Sect. 10.5 onwards. Furthermore, our account of stability is of wider significance for other areas of philosophy. It is apparent that Fodor’s seminal papers on the autonomy of the special sciences are largely motivated by the observation that macro-behaviour is stable under some changes on the micro-level. This in turn motivated the position of ‘non-reductive’ physicalism in the philosophy of mind. Even though what we will finally present is not a non-reductive but rather a reductive account of stability, this account nevertheless vindicates what we take to be two of the core-intuitions that motivate non-reductive physicalism: (1) There are macro-level properties that are distinct from micro-level properties. (2) Macro-level properties are dependent on micro-level properties (see Rudder-Baker 2009, p. 110 for a similar characterisation of non-reductive physicalism that does not refer explicitly to the failure of reduction). Even though we will not discuss this point in any detail we would like to suggest that it is the stability of macro-behaviour that makes distinct macro-properties possible.

Fodor’s question, as to how it is possible that extremely complex behaviour at an atomistic level could (for many macroscopic systems we know of) give rise to stable macroscopic behaviour has in recent years been transformed into a wider and more ambitious question, viz. how it is possible that even microscopically very different systems manage to exhibit the same type of stable macroscopic behaviour. In a still wider context it has been discussed by Batterman, most notably in his paper “Multi-realizablity and Universality” (2000) as well as Batterman (2002). His central claim is that the notion of universality used to describe the surprising degree of insensitivity of critical phenomena in the vicinity of continuous phase transition points can also be used to explain how special science properties can be realized by quite heterogeneous systems. Indeed, the renormalization group (RNG) explanation of the universality of critical phenomena was advocated as a paradigm that could account for the multi-realizability of macro-behaviour in general. While we agree that RNG provides an explanation of a certain kind of stability of macro-behaviour, we wish to point out that the case of critical phenomena is very special, in that it is restricted to phenomena that obtain in the vicinity of 2nd order phase transitions, but not elsewhere. Batterman himself is aware of this problem (see Batterman 2011). A similar remark pertains to Morrison’s recent paper on emergence (Morrison 2012). Her account of emergence and stability in terms of symmetry breaking pertains only to a restricted class of those systems that exhibit stable behaviour.

For a more general explanation of the stability of macro-phenomena of individual systems, but also across classes of similar systems, other principles must therefore be invoked. In this paper we propose to rationalise the stability of macro-behaviour by pointing out that observations of macro-behaviour are usually observations on anthropomorphic scales, meaning that they are results of coarse-grained observations in both space and time. That is, they involve averages, both over individual behaviours of huge numbers of atomistic components constituting the system under study, and averages of their behaviour over time scales, which are very large compared to characteristic time-scales associated with motion at the micro-level. In fact we shall point out that large numbers of constituent atomistic components, beyond their role in observations on anthropomorphic scales, are also a prerequisite for the very existence and stability of ordered states of matter, such as crystals or magnetic materials.

Just to give an impression of orders of magnitude involved in descriptions of macroscopic amounts of matter, consider a cubic millimetre of a gas at ambient temperature and pressure. It already contains approximately \( 2.7 \times 10^{16} \) gas molecules, and the distance between constituent particles is so small that the typical time between two successive scattering events with other molecules would for each of the molecules be of the order of \( 10^{-10} \) s, entailing that equilibration times in such systems are very short.
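The order of magnitude of the molecule count can be checked with the ideal gas law \( pV = N k_{B} T \). The following is a minimal sketch, assuming "ambient" means a pressure of 1 atm and a temperature of 0 °C; these two values and the function name are our assumptions for illustration, not taken from the text.

```python
# Ideal-gas estimate of the number of molecules in one cubic millimetre.
K_B = 1.380649e-23      # Boltzmann constant in J/K (exact SI value)
PRESSURE = 101_325.0    # assumed ambient pressure in Pa (1 atm)
TEMPERATURE = 273.15    # assumed temperature in K (0 degrees Celsius)


def molecules_per_cubic_mm(pressure: float, temperature: float) -> float:
    """Return N/V from p V = N k_B T, converted from m^-3 to mm^-3."""
    per_cubic_metre = pressure / (K_B * temperature)
    return per_cubic_metre * 1e-9   # 1 mm^3 = 1e-9 m^3


n_mm3 = molecules_per_cubic_mm(PRESSURE, TEMPERATURE)
print(f"{n_mm3:.2e} molecules per cubic millimetre")
```

Under these assumptions the estimate comes out close to the figure of roughly \( 2.7 \times 10^{16} \) quoted above; at room temperature it would be somewhat smaller, but of the same order of magnitude.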

Indeed, the traditional way of taking the time-averaging aspect associated with observations of macro-behaviour into account has been to consider results of such observations to be properly captured by values characteristic of thermodynamic equilibrium, or, taking a microscopic point of view, by equilibrium statistical mechanics. It is this microscopic point of view, which holds that a probabilistic description of macroscopic systems using methods of Boltzmann-Gibbs statistical mechanics is essentially correct, that is going to form a cornerstone of our reasoning. Indeed, within a description of macroscopic systems in terms of equilibrium statistical mechanics it is essential that systems consist of a vast number of constituents in order to exhibit stable, non-fluctuating macroscopic properties and to react in predictable ways to external forces and fields. In order for stable ordered states of matter such as crystals, magnetic materials, or super-conductors to exist, numbers must (in a sense we will specify below) in fact be so large as to be “indistinguishable from infinity”.

Renormalization group ideas will still feature prominently in our reasoning, though somewhat unusually in this context with an emphasis on the description of behaviour away from criticality.

We will begin by briefly illustrating our main points with a small simulation study of a magnetic system. The simulation is meant to serve as a reminder of the fact that an increase of the system size leads to reduced fluctuations in macroscopic properties, and thus exhibits a clear trend towards increasing stability of macroscopic (magnetic) order and, as a consequence, the appearance of ergodicity breaking, i.e. the absence of transitions between phases with distinct macroscopic properties in finite time (Sect. 10.2). We then go on to describe the mathematical foundation of the observed regularities in the form of limit theorems of mathematical statistics for independent variables, using a line of reasoning originally due to Jona-Lasinio (1975), which relates limit theorems with key features of large-scale descriptions of these systems (Sect. 10.3). Generalizing to coarse-grained descriptions of systems of interacting particles, we are led to consider RNG ideas of the form used in statistical mechanics to analyse critical phenomena. However, for the purpose of the present discussion we shall be mainly interested in conclusions the RNG approach allows us to draw about system behaviour away from criticality (Sect. 10.4). We will briefly discuss the issue of how the finite size of actual systems affects our argument (Sect. 10.5). We will furthermore discuss to what extent an explanation of stability is a reductive explanation (Sect. 10.6) and will finally draw attention to some interesting conclusions about the very project of modelling condensed matter systems (Sect. 10.7).

2 Evidence from Simulation: Large Numbers and Stability

Let us then begin with a brief look at evidence from a simulation, suggesting that macroscopic systems—which according to our premise are adequately characterised as stochastic systems—need to contain large numbers of constituent particles to exhibit stable macroscopic properties.

This requirement, here formulated in colloquial terms, has a precise meaning in the context of a description in terms of Boltzmann-Gibbs statistical mechanics. Finite systems are, according to such a description, ergodic. They would therefore attain all possible micro-states with probabilities given by their Boltzmann-Gibbs equilibrium distribution and would therefore in general also exhibit fluctuating macroscopic properties as long as the number of constituent particles remains finite. Moreover, ordered phases of matter, such as phases with non-zero magnetization or phases with crystalline order, would not be absolutely stable if system sizes were finite: for finite systems, ordered phases will always have a finite life-time (entailing that ordered phases in finite systems are not stable in a strict sense). However, life-times of ordered states of matter can diverge in the limit of infinite system size.

Although real world systems are clearly finite, the numbers of constituent particles they contain are surely unimaginably large (recall numbers for a small volume of gas mentioned above), and it is the fact that they are so very large which is responsible for the fact that fluctuations of their macroscopic properties are virtually undetectable. Moreover, in the case of systems with coexisting phases showing different forms of macroscopic order (such as crystals or magnetic systems with different possible orientations of their macroscopic magnetisation), large numbers of constituent particles are also responsible for the fact that transitions between different manifestations of macroscopic order are sufficiently rare to ensure the stability of the various different forms of order.

We are going to illustrate what we have just described using a small simulation of a magnetic model-system. The system we shall be looking at is a so-called Ising ferro-magnet on a 3D cubic lattice. Macroscopic magnetic properties in such a system appear as averages over microscopic magnetic moments attached to ‘elementary magnets’ called spins, each of them capable of two opposite orientations in space. These orientations can be thought of as parallel or anti-parallel to one of the crystalline axes. Model-systems of this kind have been demonstrated to capture magnetic properties of certain anisotropic crystals extremely well.

Denoting by \( s_{i} (t) = \pm 1 \) the two possible states of the i-th spin at time t, and by \( \varvec{s}(t) \) the configuration of all \( s_{i} (t) \), one finds the macroscopic magnetisation of a system consisting of \( N \) such spins to be given by the average

\[ S_{N} (\varvec{s}(t)) = \frac{1}{N}\sum\limits_{i = 1}^{N} s_{i} (t). \tag{10.1} \]

In the model system considered here, a stochastic dynamics at the microscopic level is realised via a probabilistic time-evolution (Glauber Dynamics), which is known to converge to thermodynamic equilibrium, described by a Gibbs-Boltzmann equilibrium distribution of micro-states

\[ P(\varvec{s}) = \frac{1}{Z_{N}} \exp \left[ - \beta H_{N} (\varvec{s}) \right], \tag{10.2} \]

corresponding to the “energy function”

\[ H_{N} (\varvec{s}) = - \sum\limits_{(i,j)} J_{ij} s_{i} s_{j}. \tag{10.3} \]

The double sum in this expression is over all possible pairs \( (i,\,j) \) of spins. In general, one expects the coupling strengths \( J_{ij} \) to decrease as a function of the distance between spins \( i \) and \( j \), and that they will tend to be negligibly small (possibly zero) at distances larger than a maximum range of the magnetic interaction. Here we assume that interactions are non-zero only for spins on neighbouring lattice sites. One can easily convince oneself that positive interaction constants, \( J_{ij} > 0 \), encourage parallel orientation of spins, i.e. a tendency towards macroscopic ferromagnetic order. In (10.2) the parameter \( \beta \) is a measure of the degree of stochasticity of the microscopic dynamics, and is inversely proportional to the absolute temperature \( T \) of the system; units can be chosen such that \( \beta = 1/T \).
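A simulation of this kind can be sketched in a few lines. The code below is an illustrative sketch only, not the program used for the figures: it assumes nearest-neighbour couplings \( J_{ij} = J = 1 \), periodic boundary conditions, random selection of the spin to be updated, and one "sweep" of \( N \) single-spin updates as the time unit. The function name `glauber_magnetisation` and its parameters are hypothetical. A selected spin is flipped with the Glauber probability \( 1/(1 + {\text{e}}^{\beta \Delta E}) \), where \( \Delta E \) is the energy change the flip would cause.

```python
import numpy as np


def glauber_magnetisation(L, T, sweeps, J=1.0, seed=0):
    """Simulate an L^3 Ising ferromagnet with Glauber dynamics.

    Returns the magnetisation after each sweep (N single-spin updates).
    Periodic boundaries and a uniform coupling J are assumptions of this sketch.
    """
    rng = np.random.default_rng(seed)
    spins = np.ones((L, L, L), dtype=int)   # start from the fully ordered state
    N = L ** 3
    beta = 1.0 / T
    trace = np.empty(sweeps)
    for sweep in range(sweeps):
        sites = rng.integers(0, L, size=(N, 3))
        uniforms = rng.random(N)
        for (x, y, z), u in zip(sites, uniforms):
            s = spins[x, y, z]
            # Sum over the six nearest neighbours (periodic wrap-around).
            nn = (spins[(x + 1) % L, y, z] + spins[(x - 1) % L, y, z]
                  + spins[x, (y + 1) % L, z] + spins[x, (y - 1) % L, z]
                  + spins[x, y, (z + 1) % L] + spins[x, y, (z - 1) % L])
            delta_e = 2.0 * J * s * nn      # energy change if s were flipped
            # Glauber rule: flip with probability 1 / (1 + exp(beta * dE)).
            if u < 1.0 / (1.0 + np.exp(beta * delta_e)):
                spins[x, y, z] = -s
        trace[sweep] = spins.mean()         # magnetisation: average over all spins
    return trace


if __name__ == "__main__":
    for L in (3, 5):
        m = glauber_magnetisation(L, T=3.75, sweeps=200)
        print(f"N = {L**3:4d}: mean |S_N| = {np.abs(m).mean():.3f}, "
              f"std of S_N = {m.std():.3f}")
```

Plotting the returned trace for increasing `L` gives a qualitative impression of the trend discussed in the text; quantitative agreement with the figures should not be expected from such a short run.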

Figure 10.1 shows the magnetisation (10.1) as a function of time for various small systems. For the purposes of this figure, the magnetisation shown is already averaged over a time unit.Footnote 1

Fig. 10.1 1st row: magnetisation of a system of \( N = 3^{3} \) spins (left) and \( N = 4^{3} \) spins (right); 2nd row: magnetisation of a system of \( N = 5^{3} \) spins (left) and \( N = 5^{3} \) spins (right), but now monitored for \( 10^{5} \) time-steps. The temperature \( T \) in these simulations is chosen as \( T = 3.75 \), leading to an equilibrium magnetisation of approximately \( \pm 0.83 \) in the thermodynamic limit

The first panel of the figure demonstrates that a system consisting of \( N = 3^{3} = 27 \) spins does not exhibit a stable value of its magnetisation. Increasing the number of spins to \( N = 4^{3} = 64 \), one finds that the system appears to prefer values of its magnetisation around \( S_{N} (\varvec{s}(t)) \simeq \pm 0.83 \). However, transitions between opposite orientations of the magnetization are still very rapid. Increasing the number of spins further to \( N = 5^{3} = 125 \), one notes that transitions between states of opposite magnetization become rarer, and that fluctuations close to the two values \( S_{N} (\varvec{s}(t)) \simeq \pm 0.83 \) decrease with increasing system size. Only one transition is observed for the larger system during the first \( 10^{4} \) time-steps. However, following the time-evolution of the magnetisation of the \( N = 5^{3} = 125 \) system over a longer time span, which in real-time would correspond to approximately \( 10^{-7} \) s, as shown in the bottom right panel, one still observes several transitions between the two orientations of the magnetization, on average about \( 10^{-8} \) s apart.

The simulation may be repeated for larger and larger system sizes; results for the magnetization (10.1) are shown in the left panel of Fig. 10.2 for systems containing \( N = 16^{3} \) and \( 64^{3} \) spins. The figure shows that fluctuations of the magnetisation become smaller as the system size is increased. A second effect is that the time spans over which a stable (in the sense of non-switching) magnetisation is observed increase with increasing system size; this is shown for the smaller systems in Fig. 10.1. Indeed, for a system containing \( N = 64^{3} = 262{,}144 \) spins, transitions between states of opposite magnetization are already so rareFootnote 2 that they are out of reach of computational resources available to us, though fluctuations of the magnetization about its average value are still discernible. Note in passing that fluctuations of average properties of a small subsystem do not decrease if the total system size is increased, and that for the system under consideration they are much larger than those of the entire system, as shown in the right panel of Fig. 10.2.

Fig. 10.2 Magnetisation of systems containing \( N = 16^{3} \) and \( N = 64^{3} \) spins, showing that fluctuations of the average magnetization of the system decrease with system size (left panel), and of a system containing \( N = 64^{3} \) spins, shown together with the magnetization of a smaller subsystem of this system, containing only \( N_{s} = 3^{3} \) spins (right panel)

The present example system was set up in a way that states with magnetisations \( S_{N} \simeq \pm 0.83 \) would be its only (two) distinct macroscopic manifestations. The simulation shows that transitions between them are observed at irregular time intervals for small finite systems. This is the macroscopic manifestation of ergodicity. The time span over which a given macroscopic manifestation is stable is seen to increase with system size. It will diverge, so that ergodicity can be broken, only in the infinitely large system. However, the times over which magnetic properties are stable increase so rapidly with system size that they will far exceed the age of the universe for systems consisting of realistically large (though certainly finite) numbers of particles, i.e. for \( N = {\mathcal{O}}(10^{23} ) \).

Only in the infinite system limit would a system exhibit a strictly constant non-fluctuating magnetisation, and only in this limit would one therefore, strictly speaking, be permitted to talk of a system with a given value of its magnetisation. Moreover, only in this limit would transitions between different macroscopic phases be entirely suppressed and only in this limit could the system therefore, strictly speaking, be regarded as macroscopically stable.

However our simulation already indicates that both properties can be effectively attained in finite (albeit large) systems. The systems just need to be large enough for fluctuations of their macroscopic properties to become sufficiently small as to be practically undetectable at the precision with which these are normally measured, and life-times of different macroscopic phases (if any) must become sufficiently large to exceed all anthropomorphic time scales by sufficiently many orders of magnitude. With respect to this latter aspect of macroscopic stability, reaching times which exceed the age of the universe could certainly be regarded as sufficient for all purposes; these are easily attained in systems of anthropomorphic dimension.

So, there is no explanation of why a finite system would exhibit a strictly constant non-fluctuating magnetisation. Thermodynamics, however, works with such strictly constant, non-fluctuating properties. This might appear to be a failure of reduction: The properties thermodynamics assumes finite macroscopic systems to have cannot be explained in terms of the properties of the parts and their interactions. This, however, would be the wrong conclusion to draw. It is essential to note that we do understand two things and these suffice for the behaviour of the compound being reductively explainable: Firstly, we can explain on the basis of the properties of the parts and their interactions why finite systems have a (fairly) stable magnetisation, such that no fluctuations will occur for times exceeding the age of the universe if the systems are sufficiently large. Thus we can explain the observed macro-behaviour reductively. Secondly, we can explain why thermodynamics works even though it uses quantities defined in the thermodynamic limit only: Even though the strictly non-fluctuating properties that thermodynamics works with do not exist in real, i.e. finite systems, (i) they are observationally indistinguishable from the properties of finite systems and (ii) we theoretically understand how in the limit \( N \to \infty \) fluctuations disappear, i.e. the non-fluctuating properties arise. We would like to argue that this suffices for a reductive explanation of a phenomenon.

In what follows we describe how the suppression of fluctuations of macroscopic quantities in large systems can be understood in terms of statistical limit theorems. We follow a reasoning originally due to Jona-Lasinio (1975) that links these to the coarse grained descriptions and renormalization group ideas, starting in the following section with systems of independent identically distributed random variables, and generalizing in Sect. 10.4 thereafter to systems of interacting degrees of freedom.

3 Limit Theorems and Description on Large Scales

Large numbers are, according to our reasoning, a prerequisite for stability of macroscopic material properties, and only in the limit of large numbers may we expect that macroscopic properties of matter are also non-fluctuating. Early formulations of equations of state of macroscopic systems which postulate deterministic functional relations, e.g. between temperature, density and pressure of a gas, or temperature, magnetisation and magnetic field in magnetic systems, can therefore claim strict validity only in the infinite system limit. They are thus seen to presuppose this limit, though in many cases, it seems, implicitly.

From a mathematical perspective, there are—as already stressed by Khinchin in his classical treatise on the mathematical foundations of statistical mechanics (Khinchin 1949)—two limit theorems of probability theory, which are of particular relevance for the phenomena just described: (i) the law of large numbers, according to which a normalized sum of N identically distributed random variables of the form (10.1) will in the limit \( N \to \infty \) converge to the expectation \( \mu = \langle s_{k} \rangle \) of the \( s_{k} \), assuming that the latter is finite; (ii) the central limit theorem according to which the distribution of deviations from the expectation, i.e., the distribution of \( S_{N} - \mu \) for independent identically distributed random variables will in the limit of large \( N \) converge to a Gaussian of variance \( \sigma^{2} /N \), where \( \sigma^{2} = \langle s_{k}^{2} \rangle - \langle s_{k} \rangle^{2} \) denotes the variance of the \( s_{k} \).Footnote 3

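The central limit theorem in particular implies that fluctuations of macroscopic quantities of the form (10.1) will in the limit of large \( N \) typically decrease as \( 1/\sqrt N \) with system size \( N \). This result, initially formulated for independent random variables, may be extended to so-called weakly dependent variables. Considering the squared deviation \( \left( {S_{N} - \langle S_{N} \rangle } \right)^{2} \), one obtains its expectation value

\[ \left\langle \left( S_{N} - \langle S_{N} \rangle \right)^{2} \right\rangle = \frac{1}{N^{2}} \sum\limits_{k,\ell = 1}^{N} C_{k,\ell }, \qquad C_{k,\ell } = \langle s_{k} s_{\ell } \rangle - \langle s_{k} \rangle \langle s_{\ell } \rangle, \tag{10.4} \]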

and the desired extension would hold for all systems, for which the correlations \( C_{k,\ell } \) are decreasing sufficiently rapidly with “distance” \( |k - \ell | \) to ensure that the sums \( \sum\nolimits_{\ell = 1}^{\infty } |C_{k,\ell } | \) are finite for all \( k \).
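The claim for weakly dependent variables can be checked numerically. The sketch below is our own illustration under stated assumptions: it uses a chain of \( \pm 1 \) variables generated by a simple Markov rule, for which the correlations \( C_{k,\ell } = \langle s_{k} s_{\ell } \rangle - \langle s_{k} \rangle \langle s_{\ell } \rangle \) decay as \( \rho^{|k - \ell |} \), and compares the sampled variance of the average \( S_{N} \) with \( N^{-2} \sum_{k,\ell } C_{k,\ell } \). The function names and the choice \( \rho = 0.5 \) are hypothetical.

```python
import numpy as np


def correlated_spin_chains(N, rho, samples, rng):
    """Draw `samples` stationary +/-1 Markov chains with <s_k s_l> = rho**|k-l|."""
    first = rng.choice([-1, 1], size=(samples, 1))
    # Each step keeps the previous spin with probability (1 + rho) / 2.
    keep = rng.random((samples, N - 1)) < (1.0 + rho) / 2.0
    steps = np.where(keep, 1, -1)
    return np.hstack([first, first * np.cumprod(steps, axis=1)])


def predicted_variance(N, rho):
    """(1/N^2) * sum_{k,l} C_{k,l} with C_{k,l} = rho**|k-l| (zero-mean spins)."""
    k = np.arange(N)
    C = rho ** np.abs(k[:, None] - k[None, :])
    return C.sum() / N ** 2


rng = np.random.default_rng(0)
for N in (50, 200, 800):
    S = correlated_spin_chains(N, rho=0.5, samples=4000, rng=rng).mean(axis=1)
    print(f"N = {N:4d}: sampled var = {S.var():.5f}, "
          f"predicted = {predicted_variance(N, 0.5):.5f}")
```

Because the correlations are summable, the variance still falls off as \( 1/N \), only with a larger prefactor than for independent variables, so fluctuations of \( S_{N} \) again decrease as \( 1/\sqrt N \).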

The relation between the above-mentioned limit theorems and the description of stochastic systems at large scales is of particular interest for our investigation; this connection was first pointed out by Jona-Lasinio (1975).Footnote 4 The concept of large-scale description has been particularly influential in the context of the renormalization group approach, which has led to our current and generally accepted understanding of critical phenomena.Footnote 5

To discuss this relation, let us return to independent random variables and, generalising Eq. (10.1), consider sums of random variables of the form

\[ S_{N} (\varvec{s}) = \frac{1}{N^{1/\alpha }} \sum\limits_{k = 1}^{N} s_{k}. \tag{10.5} \]

The parameter \( \alpha \) fixes the power of system size N by which the sum must be rescaled in order to achieve interesting, i.e., non-trivial results. Clearly, if the power of N appearing as the normalization constant in Eq. (10.5) is too large for the type of random variables considered (i.e. if \( \alpha \) is too small), then the normalized sum (10.5) will almost surely vanish, \( S_{N} \to 0 \), in the large N limit. Conversely, if the power of N in Eq. (10.5) is too small (\( \alpha \) too large), the normalized sum (10.5) will almost surely diverge, \( S_{N} \to \pm \infty \), as \( N \) becomes large. We shall in what follows restrict our attention to the two important cases \( \alpha = 1 \), appropriate for sums of random variables of non-zero mean, and \( \alpha = 2 \), relevant for sums of random variables of zero mean and finite variance. For these two cases, we shall recover the propositions of the law of large numbers (\( \alpha = 1 \)) and of the central limit theorem \( \left( {\alpha = 2} \right) \) as properties of large-scale descriptions of (10.5).
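The role of \( \alpha \) can be made concrete with a small numerical experiment. The following sketch assumes that the rescaled sum has the form \( N^{-1/\alpha } \sum_{k} s_{k} \) and uses independent \( \pm 1 \) variables of zero mean and unit variance; the function name and sample sizes are our own choices for illustration.

```python
import numpy as np


def scaled_sum_std(N, alpha, samples=2000, seed=1):
    """Standard deviation of N**(-1/alpha) * sum_k s_k for independent +/-1 spins."""
    rng = np.random.default_rng(seed)
    # The sum of N independent +/-1 spins equals 2 * Binomial(N, 1/2) - N.
    sums = 2.0 * rng.binomial(N, 0.5, size=samples) - N
    return (sums / N ** (1.0 / alpha)).std()


for alpha in (1.0, 2.0, 4.0):
    row = ", ".join(f"N={N}: {scaled_sum_std(N, alpha):8.4f}"
                    for N in (100, 10_000, 1_000_000))
    print(f"alpha = {alpha}: {row}")
```

For these zero-mean variables the spread of the rescaled sum behaves as \( N^{1/2 - 1/\alpha } \): it shrinks towards zero for \( \alpha = 1 \), stays of order one for \( \alpha = 2 \), and grows without bound for \( \alpha = 4 \), in line with the three regimes described above.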

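To this end we imagine the \( s_{k} \) to be arranged on a linear chain. The sum (10.5) may now be reorganised by (i) combining neighbouring pairs of the original variables and computing their averages \( \bar{s}_{k} \), yielding \( N^{\prime } = N/2 \) such local averages, (ii) appropriately rescaling these averages so as to obtain renormalised random variables \( s_{k}^{\prime } = 2^{\mu } \bar{s}_{k} \), and (iii) expressing the original sum (10.5) in terms of a corresponding sum of the renormalised variables, formally

\[ \bar{s}_{k} = \frac{1}{2}\left( s_{2k - 1} + s_{2k} \right), \qquad s_{k}^{\prime } = 2^{\mu } \bar{s}_{k}, \qquad k = 1, \ldots, N^{\prime }, \]

so that

\[ S_{N} (\varvec{s}) = \frac{1}{N^{1/\alpha }} \sum\limits_{k = 1}^{N} s_{k} = \frac{2^{1 - \mu - 1/\alpha }}{(N^{\prime })^{1/\alpha }} \sum\limits_{k = 1}^{N^{\prime }} s_{k}^{\prime } = 2^{1 - \mu - 1/\alpha } S_{N^{\prime }} (\varvec{s}^{\prime }). \]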

One may compare this form of “renormalization”—the combination of local averaging and rescaling—to the effect achieved by combining an initial reduction of the magnification of a microscope (to the effect that only locally averaged features can be resolved) with an ensuing change of the contrast of the image it produces.

By choosing the rescaling parameter \( \mu \) such that \( \mu = 1 - 1/\alpha \), one ensures that the sum (10.5) remains invariant under renormalization,

\[ S_{N} (\varvec{s}) = S_{N^{\prime }} (\varvec{s}^{\prime }). \]

The renormalization procedure may therefore be iterated, as depicted in Fig. 10.3: \( s_{k} \to s_{k}^{\prime } \to s_{k}^{\prime \prime } \to \ldots \), and one would obtain the corresponding identity of sums expressed in terms of repeatedly renormalised variables,

\[ S_{N} (\varvec{s}) = S_{N^{\prime }} (\varvec{s}^{\prime }) = S_{N^{\prime \prime }} (\varvec{s}^{\prime \prime }) = \ldots \]

Fig. 10.3 Repeated enlargement of scale: the present example begins with a system of 24 random variables symbolised by the dots in the first row. These are combined in pairs, as indicated by frames surrounding two neighbouring dots. Each such pair generates a renormalised variable, indicated by 12 dots of lighter shade in the second row of the figure. Two of those are combined in the next step as indicated by frames around pairs of renormalised variables, thereby starting the iteration of the renormalization procedure

The statistical properties of the renormalised variables \( s_{k}^{\prime } \) will, in general be different from (though, of course, dependent on) those of the original variables \( s_{k} \), and by the same token will the statistical properties of the doubly renormalised variables \( s_{k}^{\prime \prime } \) be different from those of the \( s_{k}^{\prime } \), and so on. However, one expects that statistical properties of variables will after sufficiently many renormalization steps, i.e., at large scale, eventually become independent of the microscopic details and of the scale considered, thereby becoming largely independent of the statistical properties of the original variables \( s_{k} \), and invariant under further renormalization. This is indeed what happens under fairly general conditions.

It turns out that for sums of random variables \( s_{k} \) with non-zero average \( \mu = \langle s_{k} \rangle \) the statement of the law of large numbers is recovered. To achieve asymptotic invariance under repeated renormalization, one has to choose \( \alpha = 1 \) in (10.5), in which case one finds that the repeatedly renormalised variables \( s_{k}^{\prime \prime \prime \prime \ldots } \) converge under repeated renormalization to the average of the \( s_{k} \), which is thereby seen to coincide with the large \( N \) limit of the \( S_{N} \).

If sums of random variables of zero mean (but finite variance) are considered, the adequate scaling is given by \( \alpha = 2 \). In this case the repeatedly renormalised variables \( s_{k}^{\prime \prime \prime \prime \ldots } \), and thereby the \( S_{N} \), are asymptotically normally distributed with variance \( \sigma^{2} \) of the original variables \( s_{k} \), even if these were not themselves normally distributed. The interested reader will find details of the mathematical reasoning underlying these results in Appendix 10.8 below.
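The pair-averaging and rescaling procedure lends itself to a direct numerical illustration. The sketch below is an assumption-laden toy, not the analysis of the appendix: it takes centred exponential variables (zero mean, unit variance, strongly skewed) as the starting distribution, applies the step \( s_{k}^{\prime } = 2^{\mu } \bar{s}_{k} \) with \( \mu = 1 - 1/\alpha \), and monitors the rescaled sum, the variance and the excess kurtosis, which vanishes for a Gaussian. All function names are hypothetical.

```python
import numpy as np


def renormalise(s, alpha):
    """One step: average neighbouring pairs, then rescale by 2**mu, mu = 1 - 1/alpha."""
    mu = 1.0 - 1.0 / alpha
    pair_means = 0.5 * (s[0::2] + s[1::2])
    return 2.0 ** mu * pair_means


def rescaled_sum(s, alpha):
    """The sum of the variables divided by (number of variables)**(1/alpha)."""
    return s.sum() / len(s) ** (1.0 / alpha)


def excess_kurtosis(x):
    """Fourth standardised moment minus 3; zero for a Gaussian."""
    x = x - x.mean()
    return (x ** 4).mean() / (x ** 2).mean() ** 2 - 3.0


rng = np.random.default_rng(0)
s = rng.exponential(1.0, size=2 ** 20) - 1.0   # zero mean, unit variance, skewed
alpha = 2.0
for step in range(6):
    print(f"step {step}: n = {len(s):7d}, sum = {rescaled_sum(s, alpha):+.6f}, "
          f"var = {s.var():.4f}, excess kurtosis = {excess_kurtosis(s):+.3f}")
    s = renormalise(s, alpha)
```

In such a run the rescaled sum is unchanged from step to step up to rounding, the variance stays close to that of the original variables, and the excess kurtosis shrinks by roughly a factor of two per step, which is the approach to a Gaussian described in the text. With `alpha = 1.0` the rescaling factor is one and the step reduces to plain pair averaging.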

Let us not fail to mention that other stable distributions of the repeatedly renormalised variables \( s_{k}^{\prime \prime \prime \prime \ldots } \), and thus of the \( S_{N} \), namely the so-called Lévy \( \alpha \)-stable distributions (Feller 1968), may be obtained by considering sums of random variables of infinite variance. Although such distributions have recently attracted some attention in connection with the description of complex dynamical systems, such as turbulence or financial markets, they are of lesser importance for the description of thermodynamic systems in equilibrium, and we shall therefore not consider these any further in what follows.

Interestingly, the asymptotics of the convergence to the stable distributions under repeated renormalization described above can be analyzed in full analytic detail for the presently considered case of sums of independent random variables. As demonstrated in Appendix 10.9, this allows one to quantify the finite size corrections to the limiting distributions in terms of the scaling of high-order cumulants with inverse powers of system size \( N \).

4 Interacting Systems and the Renormalization Group

For the purpose of describing macroscopic systems the concept of large-scale descriptions of a system, used above to elucidate the two main limit theorems of mathematical statistics, needs to be generalised to interacting, and thus correlated or dependent, random variables. Such a generalisation was formulated at the beginning of the 1970s as the renormalization group approach to interacting systems.

The starting point of this approach is the Boltzmann-Gibbs equilibrium distribution of microscopic degrees of freedom taking the form (10.2). The idea of the renormalization group approach to condensed matter systems is perhaps best explained in terms of the normalisation constant \( Z_{N} \) appearing in (10.2), the so-called partition function. It is related to the dimensionless free energy \( \overline{f}_{N} \) of the system via \( Z_{N} = {\text{e}}^{{ - N\overline{f}_{N} }} \) and thereby to its thermodynamic functions and properties.Footnote 6 To this end the partition function in (10.2) is written in the form

in which \( \overline{H}_{N} (\varvec{s};\varvec{K}) \) denotes the dimensionless energy function of the system, i.e., the conventional energy function multiplied by the inverse temperature \( \beta \), while \( \varvec{K} \) stands for the collection of all coupling constants in \( H_{N} \) (multiplied by \( \beta \)). These may include two-particle couplings as in (10.3), but also single-particle couplings as well as a diverse collection of many-particle couplings. Renormalization investigates how the formal representation of the partition function changes when it is no longer interpreted as a sum over all micro-states of the original variables, but as a sum over micro-states of renormalised variables, the latter defined as suitably rescaled local averages of the original variables in complete analogy to the case of independent random variables.
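For definiteness, and assuming discrete micro-states so that the trace is an ordinary sum (an integral would replace it for continuous degrees of freedom), the representation of the partition function described here takes the standard Boltzmann-Gibbs form

\[ Z_{N} = Z_{N} (\varvec{K}) = \sum\limits_{\varvec{s}} {\text{e}}^{{ - \overline{H}_{N} (\varvec{s};\varvec{K})}} , \]

which is consistent with the relation \( Z_{N} = {\text{e}}^{{ - N\overline{f}_{N} }} \) quoted above, i.e. \( \overline{f}_{N} = - N^{ - 1} \ln Z_{N} \).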

In contrast to the case of independent variables, geometric neighbourhood relations play a crucial role for interacting systems, and are determined by the physics of the problem. E.g., for degrees of freedom arranged on a \( d \)-dimensional (hyper)-cubic lattice, one could average over the \( b^{d} \) degrees of freedom contained in a (hyper)-cube \( B_{k} \) of side-length \( b \) to define locally averaged variables, as illustrated in Fig. 10.4 for \( d = 2 \) and \( b = 2 \), which are then rescaled by a suitable factor \( b^{\mu } \) in complete analogy to the case of independent random variables discussed above,

The partition sum on the coarser scale is then evaluated by first summing over all micro-states of the renormalised variables \( \varvec{s}^{\prime } \) and for each of them over all configurations \( \varvec{s} \) compatible with the given \( \varvec{s}^{\prime } \), formally

where \( P(\varvec{s}^{\prime } ,\,\varvec{s}) \ge 0 \) is a projection operator constructed in such a way that \( P(\varvec{s}^{\prime } ,\,\varvec{s}) = 0 \) if \( \varvec{s} \) is incompatible with \( \varvec{s}^{\prime } \), whereas \( P(\varvec{s}^{\prime } ,\,\varvec{s}) > 0 \) if \( \varvec{s} \) is compatible with \( \varvec{s}^{\prime } \), and normalised such that \( \sum\nolimits_{{\varvec{s}^{\prime } }} P(\varvec{s}^{\prime } ,\,\varvec{s}) = 1 \) for all \( \varvec{s} \). The result is interpreted as the partition function of a system of \( N^{\prime } = b^{ - d} N \) renormalised variables, with a dimensionless energy function \( \overline{H}_{{N^{\prime } }} \) of the same format as the original one, albeit with renormalised coupling constants \( \varvec{K} \to \varvec{K}^{\prime } \), as expressed in (10.12). The distance between neighbouring renormalised degrees of freedom is larger by a factor \( b \) than that of the original variables. Through an ensuing rescaling of all lengths \( \ell \to \ell /b \) one restores the original distance between the degrees of freedom, and completes the renormalization group transformation as a mapping between systems of the same format.
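The two steps just described can be sketched in formulas. The normalisation of the block variable below (block average times \( b^{\mu } \)) is an assumption made for illustration, as is the use of discrete sums; only the properties of \( P \) stated above are used:

\[ s_{k}^{\prime } = b^{\mu } \, b^{ - d} \sum\limits_{{i \in B_{k} }} s_{i} , \]

\[ Z_{N} (\varvec{K}) = \sum\limits_{{\varvec{s}^{\prime } }} \sum\limits_{\varvec{s}} P(\varvec{s}^{\prime } ,\,\varvec{s})\,{\text{e}}^{{ - \overline{H}_{N} (\varvec{s};\varvec{K})}} \equiv \sum\limits_{{\varvec{s}^{\prime } }} {\text{e}}^{{ - \overline{H}_{{N^{\prime } }} (\varvec{s}^{\prime } ;\varvec{K}^{\prime } )}} = Z_{{N^{\prime } }} (\varvec{K}^{\prime } ). \]

The first equality holds because the normalisation \( \sum\nolimits_{{\varvec{s}^{\prime } }} P(\varvec{s}^{\prime } ,\,\varvec{s}) = 1 \) returns the original sum over \( \varvec{s} \) once the sum over \( \varvec{s}^{\prime } \) is carried out first; the identity in the middle defines the renormalised energy function and thereby the renormalised couplings \( \varvec{K}^{\prime } \).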

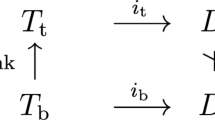

As in the previously discussed case of independent random variables, the renormalization group transformation may be iterated and thus creates not only a sequence of repeatedly renormalised variables, but also a corresponding sequence of repeatedly renormalised couplings

As indicated in Fig. 10.5, this sequence may be visualised as a renormalization group ‘flow’ in the space of couplings.

The renormalization transformation entails a transformation of (dimensionless) free energies \( \overline{f}_{N} (\varvec{K}) = - N^{ - 1} \ln Z_{N} \) of the form

For the present discussion, however, the corresponding transformation of the so-called correlation length \( \xi \), which describes the distance over which the degrees of freedom in the system are statistically correlated, is of even greater interest. As a consequence of the rescaling of all lengths, \( \ell \to \ell /b \), in the final step of the renormalization transformation, one obtains

Repeated renormalization amounts to a description of the system on larger and larger length scales. The expectation that such a description eventually becomes independent of the scale chosen, once that scale is sufficiently large, corresponds to the finding that the renormalization group flow typically approaches a fixed point: \( \varvec{K} \to \varvec{K}^{\prime } \to \varvec{K}^{\prime \prime } \to \ldots \to \varvec{K}^{*} \). As in the case of the renormalization of sums of independent random variables, the exponent \( \mu \) in the rescaling operation in Eq. (10.11) must be judiciously chosen to allow approach to a fixed point describing non-trivial large scale behaviour.
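The transformation laws for the free energy and the correlation length referred to above follow in one line each. The sketch assumes that the transformation leaves the partition function unchanged, \( Z_{N} (\varvec{K}) = Z_{{N^{\prime } }} (\varvec{K}^{\prime } ) \), with any configuration-independent constant generated along the way absorbed into the couplings \( \varvec{K}^{\prime } \). With \( N^{\prime } = b^{ - d} N \) and \( \overline{f}_{N} = - N^{ - 1} \ln Z_{N} \),

\[ \overline{f}_{{N^{\prime } }} (\varvec{K}^{\prime } ) = - \frac{1}{{N^{\prime } }}\ln Z_{{N^{\prime } }} (\varvec{K}^{\prime } ) = - \frac{{b^{d} }}{N}\ln Z_{N} (\varvec{K}) = b^{d} \,\overline{f}_{N} (\varvec{K}), \]

while the rescaling \( \ell \to \ell /b \), applied to the correlation length as to every other length, gives

\[ \xi_{{N^{\prime } }} (\varvec{K}^{\prime } ) = b^{ - 1} \,\xi_{N} (\varvec{K}). \]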

The existence of fixed points is of particular significance in the limit of infinitely large system size \( N = N^{\prime } = \infty \), as in this limit Eq. (10.15), \( \xi_{\infty } (\varvec{K}^{*} ) = b\xi_{\infty } (\varvec{K}^{*} ) \), will for \( b \ne 1 \) only allow for the two possibilities

The first corresponds either to a so-called high-temperature fixed point or to a low-temperature fixed point. The second possibility with infinite correlation length corresponds to a so-called critical point describing a continuous, or second order phase transition. In order to realise the second possibility, the initial couplings \( \varvec{K} \) of the system must be adjusted in such a way that they come to lie precisely on the critical manifold in the space of parameters, defined as the basin of attraction of the fixed point \( \varvec{K}^{*} \) for the renormalization group flow. If the system’s initial couplings \( \varvec{K} \) are not exactly on the critical manifold, but close to it, repeated renormalization will result in a flow that visits the vicinity of the fixed point \( \varvec{K}^{*} \), but is eventually driven away from it (see Fig. 10.5). Within an analysis of the RG transformation that is linearized in the vicinity of \( \varvec{K}^{*} \) this feature can be exploited to analyse critical behaviour of systems in the vicinity of their respective critical points and quantify it in terms of critical exponents, and scaling relations satisfied by them (Fisher 1974, 1983).
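The two possibilities follow directly from the fixed-point relation. Rearranged for a finite correlation length it reads \( (b - 1)\,\xi_{\infty } (\varvec{K}^{*} ) = 0 \), which for \( b \ne 1 \) forces the correlation length to vanish; the only alternative is that it is not finite at all:

\[ \xi_{\infty } (\varvec{K}^{*} ) = 0\quad {\text{or}}\quad \xi_{\infty } (\varvec{K}^{*} ) = \infty . \]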

The distance from the critical manifold is parameterized by the so-called “relevant couplings”.Footnote 7 Experience shows that relevant couplings typically form a low-dimensional manifold within the high-dimensional space of system parameters \( \varvec{K} \). For conventional continuous phase transitions it is normally two-dimensional, parameterized by the deviation of temperature and pressure from their critical values in the case of gases, by corresponding deviations of temperature and magnetic field in magnetic systems, and so forth.Footnote 8 The fact that all systems in the vicinity of a given critical manifold are controlled by the same fixed point does in itself have the remarkable consequence that there exist large classes of microscopically very diverse systems, the so-called universality classes, which exhibit essentially the same behaviour in the vicinity of their respective critical points (Fisher 1974, 1983).
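The notion of a relevant coupling can be made explicit by the standard textbook linearization of the flow near the fixed point. The symbols \( \mathsf{R} \), \( u_{i} \), \( y_{i} \) and \( t \) below are notational assumptions introduced for this sketch and do not appear in the text. Writing \( \delta \varvec{K} = \varvec{K} - \varvec{K}^{*} \),

\[ \delta \varvec{K}^{\prime } \approx \mathsf{R}\,\delta \varvec{K},\quad \mathsf{R}_{ij} = \left. {\frac{{\partial K_{i}^{\prime } }}{{\partial K_{j} }}} \right|_{{\varvec{K}^{*} }} ,\quad u_{i}^{\prime } = b^{{y_{i} }} u_{i} , \]

where the \( u_{i} \) are the components of \( \delta \varvec{K} \) along the eigenvectors of \( \mathsf{R} \). Components with \( y_{i} > 0 \) grow under renormalization and are relevant; those with \( y_{i} < 0 \) shrink and are irrelevant. For a single relevant thermal variable \( t \) with exponent \( y_{t} \), and with irrelevant variables neglected, the infinite-system relation \( \xi (t) = b\,\xi (b^{{y_{t} }} t) \) can be evaluated at \( b = |t|^{{ - 1/y_{t} }} \), giving

\[ \xi (t) = |t|^{{ - 1/y_{t} }} \,\xi ( \pm 1), \]

so that the exponent governing the divergence of the correlation length is fixed by the linearized transformation alone, irrespective of where in the basin of attraction the flow started.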

For the purpose of the present discussion, however, the phenomenology close to off-critical high and low-temperature fixed points is of even greater importance. Indeed, all non-critical systems will eventually be driven towards one of these under repeated renormalization, implying that degrees of freedom are virtually uncorrelated on large scales, and that the description of non-critical systems within the framework of the two limit theorems for independent variables discussed earlier is therefore entirely adequate, despite the correlations over small distances created by interactions between the original microscopic degrees of freedom.
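The approach of a non-critical system to a high-temperature fixed point can be followed explicitly in a textbook case that is not discussed in the text: the nearest-neighbour Ising chain in zero field, for which summing over every second spin (\( b = 2 \)) gives the exact recursion \( \tanh K^{\prime } = \tanh^{2} K \). The sketch below is an illustration under that assumption; the function names and the starting coupling are arbitrary choices.

```python
import math

def decimate(k):
    """Exact b = 2 decimation of the zero-field 1D Ising chain."""
    return math.atanh(math.tanh(k) ** 2)

def correlation_length(k):
    """Correlation length of the chain in units of the lattice spacing."""
    return -1.0 / math.log(math.tanh(k))

def flow(k, steps):
    """Return the sequence K, K', K'', ... of renormalised couplings."""
    trajectory = [k]
    for _ in range(steps):
        k = decimate(k)
        trajectory.append(k)
    return trajectory

couplings = flow(1.5, 8)
# Correlation lengths for the first few steps only; the coupling
# becomes extremely small further along the flow.
lengths = [correlation_length(k) for k in couplings[:4]]

for step, k in enumerate(couplings):
    print(step, k)
print(lengths)
```

Every finite starting coupling flows to \( K^{*} = 0 \), where the spins are uncorrelated, and the correlation length is halved at each step, as the rescaling of lengths by \( b = 2 \) requires.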

5 The Thermodynamic Limit of Infinite System Size

We are ready for a first summary: only in the thermodynamic limit of infinite system size \( N \to \infty \) will macroscopic systems exhibit non-fluctuating thermodynamic properties; only in this limit can we expect that deterministic equations of state exist which describe relations between different thermodynamic properties as well as the manner in which these depend on external parameters such as pressure, temperature or electromagnetic fields. Moreover, only in this limit will systems have strictly stable macroscopic properties in the sense that transitions between different macroscopic phases of matter (if there are any) will not occur in finite time. Indeed stability in this sense is a consequence of the absence of fluctuations, as (large) fluctuations would be required to induce such macroscopic transformations. We have seen that these properties can be understood in terms of coarse-grained descriptions, and the statistical limit theorems for independent or weakly dependent random variables describing the behaviour of averages and the statistics of fluctuations in the large system limit, and we have seen how RNG analyses applied to off-critical systems can provide a rationalization for the applicability of these limit theorems.

Real systems are, of course, always finite. They are, however, typically composed of huge numbers of atomic or molecular constituents, numbers so large in fact that they are for the purposes of determining macroscopic physical properties “indistinguishable from infinity”: fluctuations of macroscopic properties decrease with increasing system size, to an extent of becoming virtually undetectable in sufficiently large (yet finite) systems. Macroscopic stability (in the sense of absence of transitions between different macroscopic phases on time-scales which exceed the age of the universe) in large finite systems ensues.
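An order-of-magnitude estimate makes “indistinguishable from infinity” concrete. It assumes independent or weakly correlated constituents, so that the central limit scaling applies, and takes \( N \sim 10^{23} \) as an illustrative particle number for a macroscopic sample. The relative fluctuation of an extensive quantity \( A \) is then of order

\[ \frac{{\sqrt {\langle (A - \langle A\rangle )^{2} \rangle } }}{{\langle A\rangle }} \sim \frac{1}{{\sqrt N }} \approx 3 \times 10^{ - 12} , \]

which is far below what is resolved in ordinary thermodynamic measurements.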

One might argue that this is a failure of reduction, and in a sense this is true (as mentioned before). Strictly non-fluctuating properties as postulated or assumed by thermodynamics exist only in the thermodynamic limit of infinite system size \( N \to \infty \). On the basis of finitely many parts of actual systems it cannot be explained why systems have such properties. We suggest that one should bite the bullet and conclude that strictly non-fluctuating properties as postulated by thermodynamics do not exist. But as already mentioned this is less of a problem than it might appear, for two reasons. First: We can explain on the basis of the properties of the parts of actual finite systems and their interactions why these systems have stable properties, in the sense that fluctuations of macroscopic observables will be arbitrarily small, and that ergodicity can be broken, with life-times of macroscopically different coexisting phases exceeding the age of the universe. Thus the observed behaviour of the system can be reductively explained. We do have a micro-reduction of the observed behaviour. Second: We furthermore understand how the relevant theories, thermodynamics and statistical mechanics, are related in this case. They are related by the idealization of the thermodynamic limit of infinite system size \( N \to \infty \) and we have an account of how de-idealization leads to the behaviour statistical mechanics attributes to finite systems. The stability we observe, i.e. the phenomenon to be explained, is compatible both with the idealization of infinite system size and with the finite, but very large, size of real systems. The fact that strict stability requires the infinite limit poses no problem because we are in a region where the difference between the finite size model and the infinite size model cannot be observed.

The role of the thermodynamic limit and the issue of stability of macroscopic system properties are more subtle, and more interesting, in the case of phase transitions and critical phenomena. To discuss them, it will be helpful to start with a distinction.

What we have discussed so far is the stability of macroscopic physical properties vis-à-vis incessant changes of the system’s dynamical state on the micro-level. Let us call this “actual stability” and contrast it with “counterfactual stability”. Counterfactual stability is the stability of the macro-behaviour with respect to non-actual counterfactual changes a system’s composition at the micro-level might undergo: e.g., in a ferro-magnetic system one might add next-nearest neighbour interactions to a system originally having only nearest neighbour interactions, and scale down the strength of the original interaction in such a manner that would leave the macroscopic magnetization of a system invariant.

The notion of counterfactual stability applies in particular to the phenomenon of universality of critical phenomena. Critical phenomena comprise a set of anomalies (algebraic singularities) of thermodynamic functions that are observed at second order phase transitions in a large variety of systems. Critical phenomena, and specifically the critical exponents introduced to characterize the non-analyticities quantitatively, have various remarkable properties. For instance, critical exponents exhibit a high degree of universality. Large classes of systems are characterized by identical sets of critical exponents, despite the fact that interactions at the microscopic level may be vastly different. Within the RNG approach described in the previous section this is understood as a global property of a renormalization group flow: all systems with Hamiltonians described by couplings \( \varvec{K} \) in the vicinity of a given critical manifold will be attracted by the same RNG fixed point \( \varvec{K}^{*} \), and therefore exhibit identical critical exponents.

The case of critical behaviour is thus a special and particularly impressive case of (counterfactual) stability. Note, however, that even though the notion of universality provides a notion of stability (see Batterman 2002, p. 57ff), the range it applies to is fairly restricted and does not cover all the cases Fodor had in mind when he was referring to the stability of the macro-level. In particular, universality of critical phenomena as uncovered by the RNG approach only refers to asymptotic critical exponents,Footnote 9 describing critical singularities only in the immediate vicinity of critical points. The thermodynamic properties of a system not exactly at its critical point will, however, be influenced by the presence of irrelevant couplings, and thus show properties which are system-specific, and not universal within universality classes. We will discuss some further notes of caution in Sect. 10.6 below.

The case of critical phenomena requires special discussion also with respect to the infinite system limit. We have seen that the thermodynamic limit is a prerequisite for systems to exhibit non-fluctuating macroscopic physical properties, but that this type of behaviour is well approximated (in the sense of being experimentally indistinguishable from it) in finite but sufficiently large systems. Thermodynamically, phase transitions and critical phenomena are associated with non-analyticities in a system’s thermodynamic functions. Given certain uncontroversial assumptions, such non-analyticities cannot occur in finite systems (cf. Menon and Callender 2013, p. 194) in the canonical or grand-canonical ensembles of Statistical Mechanics. For phase transitions to occur, and systems to exhibit critical phenomena it thus appears that an “ineliminable appeal to the thermodynamic limit and to the singularities that emerge in that limit” (Batterman 2011, p. 1038) is required.

Is this an appeal to idealizations that differs from other cases? To discuss this question, we need to return to the finite-\( N \) versions of the RG flow Eqs. (10.14) and (10.15) for the free energy and the correlation length, respectively. It is customary to use the linear extent \( L \) of the system rather than the number of particles \( N = L^{d} \) (assuming hypercubic geometry), to indicate the finite extent of the system, and to rewrite the finite-\( L \) RG flow equations in the form

and

These reformulations already indicate that the inverse \( L^{ - 1} \) of the system’s linear dimension \( L \) is a relevant variable in the RNG sense due to the final rescaling \( \ell \to \ell /b \) of all lengths (thus \( L^{ - 1} \to bL^{ - 1} > L^{ - 1} \)) in the final RG step. The condition for the appearance of this additional relevant variable to be the only modification of the RNG transformation is that the system must be sufficiently large that the RG-flow in the space of couplings \( \varvec{K} \to \varvec{K}^{\prime } \to \varvec{K}^{\prime \prime } \to \ldots \) is itself unmodified by the finite size of the system. In a real-space picture of RG, it requires in particular all renormalized couplings to be embeddable in the system (for details, see Barber 1983). A finite value of \( L \) then implies that a finite system can never be exactly critical in the sense of exhibiting an infinite correlation length. As the relevant variable \( L^{ - 1} \) is non-zero, the system is driven away from the critical manifold under renormalization, and indeed coarse graining is impossible beyond the scale \( L \) set by the system size. If carried through, this finite-size modification of the RNG transformation gives rise to so-called finite-size-scaling theory (FSS) which describes in quantitative detail the way in which critical singularities are rounded due to finite size effects (Barber 1983). The analysis is more complicated than, but conceptually fully equivalent to the finite size scaling analysis for the statistical limit theorems described in Appendix 10.9. In particular, variables that are irrelevant in the RG sense are scaled with suitable inverse powers of \( L \) in finite systems, the powers being related to the eigenvalues of the linearized RG transformation (in complete analogy to the situation described in Appendix 10.9). 
Thus while proper singularities of thermodynamic functions disappear in systems of finite size, deviations from the behaviour described in terms of the corresponding infinite-system singular functions will become noticeable only in ever smaller parameter regions around “ideal” critical points, as system sizes grow, and will in sufficiently large systems eventually be indistinguishable from it using experiments of conceivably attainable precision. In this sense, the infinite system idealization of singular behaviour of thermodynamic functions in the vicinity of critical points is an idealization, which is controllable in large systems in a well-defined sense, which does not appear to be fundamentally different from that of non-fluctuating thermodynamic functions and absolute stability discussed earlier.
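The finite-\( L \) flow equations referred to above can be sketched as follows. The notation, with \( L^{ - 1} \) displayed as an additional argument, is an assumption; the content follows from the finite-\( N \) transformation laws with \( N = L^{d} \) and \( L^{\prime } = L/b \):

\[ \overline{f} (\varvec{K},L^{ - 1} ) = b^{ - d} \,\overline{f} (\varvec{K}^{\prime } ,bL^{ - 1} ),\quad \xi (\varvec{K},L^{ - 1} ) = b\,\xi (\varvec{K}^{\prime } ,bL^{ - 1} ). \]

Under each step \( L^{ - 1} \) is multiplied by \( b > 1 \), which is what makes it a relevant variable. For a system tuned to the fixed point \( \varvec{K}^{*} \), formally iterating until \( b = L \) gives \( \xi (\varvec{K}^{*} ,L^{ - 1} ) = L\,\xi (\varvec{K}^{*} ,1) \): the correlation length is then limited by the system size and diverges only as \( L \to \infty \), which is the simplest instance of the rounding of critical singularities described by finite-size scaling.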

As before there is no explanation of why a finite system would exhibit phase transitions in the strict sense. Phase transitions as defined by thermodynamics exist only in the thermodynamic limit of infinite system size \( N \to \infty \). On the basis of finitely many parts of actual systems it cannot be explained why systems have such properties. The same applies to the universal behaviour of systems in the same universality classes. Strictly speaking universality only obtains in the thermodynamic limit. Neither the occurrence of phase transitions nor universality can be explained in terms of the properties of the parts and their interactions. This might appear to be a failure of reduction. That would, however, be the wrong conclusion to draw. Again, in both cases (the occurrence of phase transitions and the universal behaviour at the critical point) we do understand two things and these suffice for the behaviour being reductively explainable: Firstly, in the case of phase-transitions, we can explain on the basis of the properties of the parts and their interactions why finite systems exhibit behaviour that is observationally indistinguishable from strict phase-transitions, which involve non-analyticities (for this point see also Kadanoff 2013, p. 156 or Menon and Callender 2013, pp. 212–214). Thus we can explain the observed macro-behaviour reductively. In the case of universality, finite-size-scaling theory makes available reductive explanations of the observed similarities in the macro-behaviour of different kinds of systems. Secondly, we can explain why thermodynamics works as well as it does even though it uses quantities defined in the thermodynamic limit only: Even though neither phase transitions as defined in thermodynamics nor the phenomenon of universality (in the strict sense) exist in real, i.e. finite, systems, (i) they are observationally indistinguishable from the properties and behaviour of finite systems and (ii) we theoretically understand how in the limit \( N \to \infty \) phase transitions and universal behaviour (in the strict sense) would arise. In short: We idealize, but we understand how the idealizations work (for a discussion of some of these points see also Butterfield 2011, Sect. 7 as well as Menon and Callender 2013, Sect. 3.2).

6 Supervenience, Universality and Part-Whole-Explanation

In the previous section we have argued that we do have reductive explanations of the observed behaviour that in thermodynamics is described in terms of phase transitions and universal behaviour. In this section we will deal with a possible objection. It might be argued that for a reductive explanation of the macro-behaviour the properties of the constituents have to determine the properties of the compound. However, in the cases we are discussing, no such determination relation obtains. In this section we would like to reject this claim.

When the macro behaviour of physical systems is stable, many details of the exact micro-state are irrelevant. This is particularly impressive in the case of universality.

Margaret Morrison argues that if the microphysical details are irrelevant, the phenomenon in question is not reducible to its microphysical constituents and that the supervenience relation is “inapplicable in explaining the part-whole aspects” of such phenomena (Morrison 2012, p. 156). Morrison classifies universal behaviour at phase transitions as “emergent”, characterizing emergence as follows:

…what is truly significant about emergent phenomena is that we cannot appeal to microstructures in explaining or predicting these phenomena, even though they are constituted by them (Morrison 2012, p. 143).

Even though we agree with Morrison that universal phenomena are in an interesting sense ontologically independent of the underlying microstructure, we reject her claim that this violates the “reductionist picture” (Morrison 2012, p. 142). We will focus on one line of her argument. What Morrison calls the “reductionist picture” entails the claim that the constituents’ properties and states determine the behaviour of the compound system. The reductionist picture entails (or presupposes) the supervenience of the properties of the compound on the properties (and interactions) of the parts. Only if the constituents’ properties and interactions determine the compound’s properties can we reductively explain the latter in terms of the former. One problem for the reductionist, according to Morrison, is the failure of supervenience.

Why would one suppose that supervenience fails in the case of stable macro-behaviour and universal behaviour in particular? In Morrison’s paper we find two arguments. The first has to do with the thermodynamic limit. The stable macro-behaviour to be explained presupposes an infinite number of constituents. Real systems are finite. The behaviour to be explained is not determined by the behaviour of the finite number of constituents. We have already dealt with this issue in the previous section and have indicated why we are not convinced by this line of argument. We thus move to her second argument:

If we suppose that micro properties could determine macro properties in cases of emergence, then we have no explanation of how universal phenomena are even possible. Because the latter originate from vastly different micro properties, there is no obvious ontological or explanatory link between the micro and macro levels (Morrison 2012, p. 162).

We fail to see why it should be impossible that vastly different micro-properties determine the same macro-property. To illustrate: the integer 24 may be obtained as a sum of smaller integers in many different ways \( \left( {24 = 13 + 11 = 10 + 14 = 9 + 8 + 7 \ {\text{etc.}}} \right) \). However, this is not a valid argument for the claim that the summands fail to determine the sum. Similarly, the fact that a multiplicity of micro-states gives rise to the same macro-state is no objection to the claim that the micro-state determines the macro-state.

In fact, the simulation in Sect. 10.2 and the explanations in Sects. 10.3 and 10.4 aim at explaining how this is possible. The simulation in Sect. 10.2 has illustrated that, by simply increasing the sample size, fluctuations decrease and macroscopic modifications become more and more rare, i.e. the macro-state becomes more and more stable despite changes in the micro-states. In Sect. 10.3 we discussed an explanation for this phenomenon for the case of non-interacting constituents/variables. It is in virtue of the central limit theorem that fluctuations of macroscopic observables decrease with the number \( N \) of constituents as \( \frac{1}{\sqrt N } \) for large system sizes, and that stable behaviour exists for large systems. In Sect. 10.4 we moved to the case of interacting variables/constituents. Again, what is provided is an explanation for why so many features of the micro-system are irrelevant. RNG explains how systems that are characterized by very different Hamilton operators nevertheless give rise to very similar macro-behaviour. This only works because, given a particular Hamiltonian, i.e. the micro-properties, the macro-behaviour is fixed and thus determined. If supervenience did indeed fail, it would be indeterminate how the Hamiltonian (which represents the properties of the constituents and their interaction) would behave in phase space under renormalization. The RNG-theory explains universality by showing that a whole class of Hamiltonians is attracted by the same fixed point under iterated renormalizations. If the macro-behaviour of the systems in question did not supervene on the micro-structure, the RNG-explanation would not get started.

These explanations tell us why and in which sense certain features of the constituents or their interactions become irrelevant: note that irrelevance in the RNG sense acquires a technical meaning, which coincides with the plain-English meaning of the word only as far as the determination of asymptotic critical exponents (in infinitely large systems) is concerned.

The fact that certain features of the constituents are irrelevant in the technical RNG sense does therefore not imply that the properties and states of the constituents fail to influence the macro-behaviour. Rather, it is only a small number of features of these that does the work for asymptotic critical exponents. Interestingly, these same features are also responsible for driving an off-critical system away from critical RNG fixed points and towards one of the fixed-points at which the (coarse-grained) system appears as a collection of uncorrelated degrees of freedom, for which the limit theorems for uncorrelated or weakly correlated random variables provide an appropriate description.

Whenever a system is not exactly at its critical point, there will always be a residual effect of the so-called irrelevant variables on thermodynamic behaviour. A finite system, in particular, is never exactly at a critical point, as \( 1/L \) (with \( L \) denoting the linear dimension of the system) is always a relevant variable in the renormalization group sense (increasing under renormalization/coarse graining); this leads to rounding of critical singularities, which can be quantitatively analysed (see Sect. 10.5). As a consequence, Morrison’s argument, if valid, would not apply to finite systems (nor to systems which are off-critical for other reasons), and therefore the so-called irrelevant variables will contribute to the determination of the macro-behaviour of all finite systems.

It may be worthwhile at this point to discuss explicitly the specific nature and reach of RNG analyses of macroscopic systems. To begin with it is helpful to recall that with respect to the analysis of collective behaviour in general and of phase transitions in particular RNG has a twofold explanatory role. These two roles concern two sets of questions that ought to be distinguished.

The first set of questions addressed by RNG concerns the behaviour of individual critical systems: Why does the compressibility of water diverge according to some fixed function of temperature etc.? With regard to questions like this RNG is simply a coarse-graining procedure that allows us to calculate approximately correct results. The explanation of the single system’s critical exponents starts with the description of the microstructure. In this context RNG is effectively used as a tool for micro-reductive explanation of the system’s behaviour. RNG is merely an effective tool to evaluate thermodynamic functions of interacting systems (albeit in the majority of cases only approximately), where exact evaluations are infeasible. In some sense, RNG can be regarded as a successor theory of mean field theory, which was unable to produce even approximately correct critical exponents.

There is, however, a second set of questions. Micro-reductive explanations may appear to be unable to answer these. These questions concern universality and scaling (i.e. the fact that the critical exponents obey certain system-independent relations). Why are there universality classes at all, i.e. why is it that systems with extremely different microstructure such as alloys, ferro-magnets and liquids obey exactly the same laws near their critical points, i.e. why is it that the values of the critical exponents of the members of such classes coincide? Furthermore, why is it the case that all critical systems, irrespective of their universality class, obey the scaling relations?Footnote 10

RNG appears to answer the above questions non-reductively. The essential ingredients of such an explanation are not properties of the constituents of single critical systems, one might argue, but rather properties of the renormalization-group-flow, namely topological features of the space of coupling constants (see Morrison 2012, p. 161/2). The renormalization-group-transformation induces a mapping between Hamiltonians or the coupling-constants of the Hamiltonians in question. The iteration of such a mapping defines a flow in the space of coupling constants as represented in Fig. 10.5. Fixed points come along with basins of attraction. All Hamiltonians/physical systems within such a basin flow towards the same critical point, i.e. their behaviour is identical. It is, basically, the existence of these basins of attraction that explains why physical systems with widely diverging microstructure behave identically near the critical point.

The explanation of universality and of the scaling relations does not appear to appeal to the microscopic constitution of the individual systems that show this behaviour. Instead, it appeals to topological features of the space of coupling constants/Hamiltonians.

But the reductionist is able to defend her view: why should micro-explanations be unable to explain that a variety of systems behave identically near the critical point? There is a feature on the micro-level that all of these systems have in common. And it is in virtue of this common feature that RNG works:

The starting point in the renormalization group approach is to realize that the most important fluctuations at the critical point have no characteristic length. Instead the important fluctuations have all wavelengths ranging from the atomic spacing up to the correlation length; close to the critical point the correlation length is much larger than the atomic spacing (Wilson 1976).

The absence of a characteristic length (the divergence of the correlation length) at the critical point implies invariance of a system under coarse graining, or scale transformations. An RNG transformation which describes the effect of coarse graining in terms of a transformation \( \varvec{K} \to \varvec{K}^{\prime } \) of the systems’ set of couplings will therefore identify a critical point with a fixed point \( \varvec{K}^{*} \) of that transformation. The reductionist will thus think of topological features of the transformation such as basins of attraction (i.e. universality classes) as an unavoidable consequence of this type of analysis.
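The converse direction can be made explicit in one line. As a sketch, assume that one coarse-graining step rescales lengths by a factor \( b > 1 \), and write \( \xi (\varvec{K}) \) for the correlation length measured in units of the lattice spacing; both symbols are notation introduced here for illustration. The physical correlation length is unchanged by coarse graining while the unit of length grows by \( b \), so that

\[
\xi (\varvec{K}^{\prime }) = \frac{\xi (\varvec{K})}{b}
\quad \Longrightarrow \quad
\xi (\varvec{K}^{*}) = \frac{\xi (\varvec{K}^{*})}{b}
\quad \Longrightarrow \quad
\xi (\varvec{K}^{*}) \in \{ 0, \infty \} .
\]

At a fixed point the correlation length is therefore either infinite or zero; only the divergent solution describes a critical point.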

Finally, this is the point to recall once more that there is a second way in which fluctuations of the thermodynamic properties of a system can fail to exhibit a characteristic length: the situation in which the correlation length vanishes and the system is statistically fully homogeneous (and therefore also scale invariant). This possibility is realized at the high- or low-temperature fixed points of the RNG transformations (as discussed in Sect. 10.4), which come with their own basins of attraction and associated notions of insensitivity to detail, as embodied in statistical limit theorems.
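The basins of attraction of these trivial fixed points can be made concrete with a toy recursion. The sketch below iterates the Migdal-Kadanoff approximation for the nearest-neighbour Ising model on a square lattice with rescaling factor 2, \( K^{\prime } = \tfrac{1}{2}\ln \cosh (4K) \). This recursion does not appear in the text; it is chosen here only because it is a one-line map with a single coupling. The function names and the cut-off `K_max` are illustrative assumptions.

```python
import math

def mk_step(K: float) -> float:
    """One Migdal-Kadanoff step (b = 2, d = 2): bond moving doubles K,
    decimating the middle spin then gives K' = 0.5 * ln cosh(4K)."""
    return 0.5 * math.log(math.cosh(4.0 * K))

def critical_coupling(lo: float = 0.1, hi: float = 1.0, tol: float = 1e-12) -> float:
    """Locate the non-trivial fixed point K* = mk_step(K*) by bisection."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mk_step(mid) < mid:   # coupling shrinks, so mid lies below K*
            lo = mid
        else:                    # coupling grows, so mid lies above K*
            hi = mid
    return 0.5 * (lo + hi)

def flow(K: float, steps: int = 12, K_max: float = 50.0) -> list[float]:
    """Iterate the map, stopping early once K exceeds K_max
    (runaway towards the low-temperature fixed point at infinite coupling)."""
    trajectory = [K]
    for _ in range(steps):
        if K > K_max:
            break
        K = mk_step(K)
        trajectory.append(K)
    return trajectory

if __name__ == "__main__":
    print("K* =", critical_coupling())
    for K0 in (0.20, 0.25, 0.35, 0.60):
        print(K0, "->", flow(K0)[-1])
```

Within this approximation, every starting coupling below the unstable fixed point at \( K^{*} \approx 0.305 \) is driven to \( K = 0 \) (the high-temperature fixed point), and every coupling above it runs off to ever larger values (the low-temperature fixed point), irrespective of where exactly the flow started.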

To sum up: what we claim to have shown is that the reductionist picture, according to which the constituents’ properties and states determine the behaviour of the compound system and the macro-phenomena can be explained in terms of the properties and states of the constituents, is undermined neither by stable phenomena in general nor by universal phenomena in particular.

Let us add, however, that the reductionist picture as outlined above does not imply that an explanation of the macro-behaviour of a system always has to appeal to the micro-level. The fact that it is possible to give such an explanation does not imply that it is the best explanation let alone the only available explanation. In fact, once we know that many details are irrelevant, we have a perfectly good reason to focus on those factors that are not irrelevant. This is the topic of the following section.

7 Post Facto Justification of Modelling

Let us close with the following observation: a remarkable feature of our analysis of the stability of macroscopic system properties based on a renormalization group approach is that it provides us with a justification for certain kinds of idealisation. Models that are used to describe critical (or other collective) behaviour of given physical systems are often grossly simplified, and it is a non-trivial problem to understand why such models can nevertheless be as successful as they apparently are. RNG illuminates this point as follows. It teaches us that systems described by Hamiltonians that differ only with respect to irrelevant couplings (systems within the basin of attraction of the RNG fixed point relevant for the large-scale phenomenon under study) will under renormalization be attracted to the same fixed point, and will therefore exhibit the same type of collective behaviour. The presence of irrelevant couplings can in this sense be regarded as belonging to the inessential details which do not significantly affect the problem under study, such as the critical behaviour of a given many-particle system, or its macroscopic off-critical properties. In a quantitatively well-defined sense, such irrelevant couplings can therefore be neglected when analysing collective phenomena in such systems, critical or not. A description of a system which neglects or abstracts from these details constitutes what would properly be described as an idealized description. Within the RNG setting we therefore have a well-defined and even quantifiable notion of the sense in which an idealized description of a complex system will nevertheless capture the essence of its collective behaviour.
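A minimal numerical sketch of this point, assuming a flow linearized around a fixed point with one relevant coupling \( t \) and one irrelevant coupling \( u \). The exponents \( y_{t} = 1 \) and \( y_{u} = -1 \), the rescaling factor \( b = 2 \), the initial values and all names are illustrative assumptions, not values taken from the text. Two model Hamiltonians that share \( t \) but differ in \( u \) become practically indistinguishable after a few coarse-graining steps:

```python
def renormalize(t: float, u: float, steps: int,
                b: float = 2.0, y_t: float = 1.0, y_u: float = -1.0) -> tuple[float, float]:
    """Apply the linearized RG map (t, u) -> (b**y_t * t, b**y_u * u) `steps` times.

    t is a relevant coupling (y_t > 0), u an irrelevant one (y_u < 0);
    both are measured as deviations from the fixed point.
    """
    for _ in range(steps):
        t, u = b**y_t * t, b**y_u * u
    return t, u

# A "detailed" model with a sizeable irrelevant coupling, and an
# idealized model that drops that coupling altogether (hypothetical values).
detailed = renormalize(t=1e-3, u=0.8, steps=8)
idealized = renormalize(t=1e-3, u=0.0, steps=8)

if __name__ == "__main__":
    print("detailed :", detailed)
    print("idealized:", idealized)
```

The difference between the two descriptions shrinks by a factor \( b^{y_{u}} \) per step, which is the quantifiable sense in which the neglected detail does not matter at large scales; the relevant coupling, by contrast, grows identically in both models.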

It is a remarkable fact that conventional second order phase transitions are characterized by just two relevant couplings in the RNG sense; this tells us that all but two operators within an infinite set characterizing the universe of possible Hamiltonians for a given system are irrelevant, and can therefore be neglected when analysing asymptotic critical behaviour associated with second order phase transitions. Moreover it is the presence of these same two relevant couplings which is responsible for driving the RNG flow to off-critical fixed points at which systems are statistically fully homogeneous and their macroscopic properties thus describable in terms of statistical limit theorems for uncorrelated or weakly correlated random variables.
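The fact that only two couplings are relevant also bears on the scaling relations mentioned earlier. In the standard RNG treatment, and leaving aside the exceptions mentioned in the notes, the critical exponents follow from the two relevant eigenvalue exponents, here written \( y_{t} \) (thermal) and \( y_{h} \) (field), together with the spatial dimension \( d \); these symbols and the function names below are notation introduced for illustration. Since four exponents are functions of the same two numbers, relations among them hold whatever values \( y_{t} \) and \( y_{h} \) take. The sketch checks this for the exactly known two-dimensional Ising values \( y_{t} = 1 \), \( y_{h} = 15/8 \) and for an arbitrary second pair:

```python
from fractions import Fraction

def critical_exponents(y_t: Fraction, y_h: Fraction, d: int) -> dict[str, Fraction]:
    """Standard RNG expressions for critical exponents in terms of the
    thermal (y_t) and field (y_h) eigenvalue exponents in d dimensions."""
    return {
        "alpha": 2 - Fraction(d) / y_t,
        "beta": (d - y_h) / y_t,
        "gamma": (2 * y_h - d) / y_t,
        "delta": y_h / (d - y_h),
    }

def obeys_scaling_relations(e: dict[str, Fraction]) -> bool:
    """Check the Rushbrooke and Widom relations with exact arithmetic."""
    rushbrooke = e["alpha"] + 2 * e["beta"] + e["gamma"] == 2
    widom = e["gamma"] == e["beta"] * (e["delta"] - 1)
    return rushbrooke and widom

# Exactly known values for the two-dimensional Ising universality class.
ising_2d = critical_exponents(Fraction(1), Fraction(15, 8), d=2)
# Arbitrary values, chosen only to show that the relations do not depend on them.
other = critical_exponents(Fraction(3, 2), Fraction(5, 2), d=3)

if __name__ == "__main__":
    print(ising_2d, obeys_scaling_relations(ising_2d))
    print(other, obeys_scaling_relations(other))
```

Different universality classes correspond to different values of \( y_{t} \) and \( y_{h} \), and hence to different exponents, but the relations among the exponents are the same in every class.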

It is due to this remarkable fact that simplified models allow us to capture the essence of collective behaviour at all. In this sense RNG provides a justification for the idea of modelling per se, and it gives us a glimpse of the reasons why “simple” models of condensed matter could be successful at all. Note finally that it is essential that systems are large, so as to allow levels of description which are sufficiently coarse-grained compared to atomistic scales. Stability and, as we have just discussed, simplicity arise only at this sufficiently coarse scale. It has been pointed out (Kühn 2008) that in this sense large numbers appear to be an essential prerequisite for facilitating successful theory formation for condensed matter systems.

Notes

1. The time unit in these simulations is given by the time span during which every single spin has on average once been selected for an update of its state. It is this unit of time which is comparable for systems of different sizes (Binder and Stauffer 1987, pp. 1–36); it would correspond to a time span of approximately \( 10^{-12} \) s in conventional units.

2. Measured in conventional time units, such a system will exhibit a magnetisation which remains stable for times of the order of several years.

3. For precise formulations of conditions and proofs, see Feller (1968).

4. On this, see also Batterman (1998), who referred to the relation on several occasions in the context of debates on reductionism.

5. The notion of critical phenomena refers to a set of anomalies (non-analyticities) of thermodynamic functions in the vicinity of continuous, second order phase transitions. A lucid exposition is given by Fisher (1983).

6. The dimensionless free energy is just the product of the standard free energy and the inverse temperature \( \beta \). At a formal level, the partition function is closely related to the characteristic function of a (set of) random variables, in terms of which we analysed the idea of large-scale descriptions for sums of independent random variables in Appendix 10.8.

7. These correspond to a subset of the couplings encoded in \( \varvec{K} \), whose distance from the critical manifold is increased under renormalization. The critical manifold itself is parameterized by the so-called irrelevant couplings; their distance from the critical point \( \varvec{K}^{*} \) is decreased under successive renormalizations.

8. Note that in practical RG analyses of the liquid-gas critical point, chemical potential is usually used instead of pressure. Also, proper independent coordinates of the manifold of relevant couplings are not necessarily the physical parameters themselves; they could be, and often are, constructed from suitable combinations thereof. For a detailed discussion, see e.g. Lavis and Bell (1998).

9. This includes asymptotic scaling functions and even asymptotic corrections to scaling (Wegner 1976).

10. For the sake of completeness, we note that there are well-understood exceptions (see e.g. Wegner 1976), which, however, we need not discuss in the context of the present paper.

11. In this appendix, we follow the mathematical convention to distinguish in notation between a random variable \( X \) and its realisation \( x \).

References

Barber, M.N.: Finite-size scaling. In: Domb, C., Lebowitz, J.L. (eds.) Phase Transitions and Critical Phenomena, vol. 8, pp. 146–266. Academic Press, London (1983)

Batterman, R.: Why equilibrium statistical mechanics works: universality and the renormalization group. Philos. Sci. 65, 183–208 (1998)

Batterman, R.: Multiple realizability and universality. Br. J. Philos. Sci. 51, 115–145 (2000)

Batterman, R.: The Devil in the Details. Oxford University Press, Oxford (2002)

Batterman, R.W.: Emergence, singularities, and symmetry breaking. Found. Phys. 41, 1031–1050 (2011)

Bedau, M., Humphreys, P.: Introduction. In: Bedau, M., Humphreys, P. (eds.) Emergence: Contemporary Readings in Philosophy and Science, pp. 1–6. Bradford Books, Cambridge (2008)

Binder, K., Stauffer, D.: A simple introduction to Monte Carlo simulation and some specialised topics. In: Binder, K. (ed.) Applications of the Monte Carlo Method in Statistical Physics, pp. 1–36, 2nd edn. Springer, Berlin (1987)

Butterfield, J.: Less is different: emergence and reduction reconciled. Found. Phys. 41, 1065–1135 (2011)

Feller, W.: An Introduction to Probability Theory and its Applications, vols. I and II, 3rd edn. Wiley, New York (1968)

Fisher, M.E.: The renormalization group in the theory of critical behavior. Rev. Mod. Phys. 46, 597–616 (1974)

Fisher, M.E.: Scaling, universality and renormalization group theory. In: Hahne, F.J.W. (ed.) Critical Phenomena: Proceedings of the Summer School Held at the University of Stellenbosch, South Africa, January 18–29, 1982. Springer Lecture Notes in Physics, vol. 186, pp. 1–139. Springer, Berlin (1983)

Fodor, J.: Special sciences: still autonomous after all these years. Philos. Perspect. 11, 149–163 (1997)

Jona-Lasinio, G.: The renormalization group: a probabilistic view. Il Nuovo Cimento 26B(1), 99–119 (1975)

Kadanoff, L.: Theories of matter: infinities and renormalization. In: Batterman, R. (ed.) The Oxford Handbook of Philosophy of Physics. Oxford University Press, Oxford, pp. 141–188 (2013)

Khinchin, A.I.: Mathematical Foundations of Statistical Mechanics. Dover, New York (1949)

Kühn, R.: Über die konstitutive Rolle des Unendlichen bei der Entstehung physikalischer Theorien für makroskopische Systeme. In: Brachtendorf, J., Möllenbeck, T., Nickel, G., Schaede, S. (eds.) Unendlichkeit. Mohr Siebeck, Tübingen (2008). http://www.mth.kcl.ac.uk/~kuehn/published/Infinity.pdf

Lange, M.: Laws and Lawmakers. Oxford University Press, Oxford (2009)

Laughlin, R., Pines, D.: The theory of everything. In: Proceedings of the National Academy of Sciences, vol. 97 (2000) (reprinted In: Bedau, M., Humphreys, P. (eds.) Emergence: Contemporary Readings in Philosophy and Science. Bradford Books, Cambridge, pp. 259–268 (2008))

Lavis, D.A., Bell, G.M.: Statistical Mechanics of Lattice Systems, vol. 2. Springer, Berlin (1998)

Menon, T., Callender, C.: Turn and face the strange Ch-Ch-changes: philosophical questions raised by phase transitions. In: Batterman, R. (ed.) The Oxford Handbook of Philosophy of Physics. Oxford University Press, Oxford, pp. 189–223 (2013)

Mitchell, S.: Biological Complexity and Integrative Pluralism. Cambridge University Press, Cambridge (2003)

Morrison, M.: Emergent physics and micro-ontology. Philos. Sci. 79(1), 141–166 (2012)

Rudder-Baker, L.: Non-reductive materialism. In: McLaughlin, B.P., Beckermann, A., et al. (eds.) The Oxford Handbook of Philosophy of Mind. Oxford University Press, Oxford, pp. 109–127 (2009)

Sinai, Y.: Probability Theory: an Introductory Course, Berlin (1992)

Wegner, F.: The critical state, general aspects. In: Domb, C., Green, M.S. (eds.) Phase Transitions and Critical Phenomena, vol. 6, pp. 7–124. Academic Press, London (1976)

Wilson, K.G.: The renormalization group—introduction. In: Domb, C., Green, M.S. (eds.) Phase Transitions and Critical Phenomena, vol. 6, pp. 1–5. Academic Press, London (1976)

Woodward, J.: Causation with a human face. In: Price, H., Corry, R. (eds.) Causation, Physics, and the Constitution of Reality: Russell’s Republic Revisited, pp. 66–105. Oxford University Press, Oxford (2007)

Appendices

Renormalization and Cumulant Generating Functions

The renormalization group transformation for the case of sums of independent random variables is best investigated in terms of their cumulant generating functions.

Given a random variable \( X \), its characteristic function is defined as the Fourier transform of its probability density \( p_{X} \) (see Note 11),

Characteristic functions are important tools in probability. Among other things, they can be used to express moments of a random variable in compact form via differentiation,