Abstract

Most of the earliest work in both experimental and theoretical/computational systems neuroscience focused on sensory systems and the peripheral (spinal) control of movement. However, over the last three decades, attention has turned increasingly toward higher functions related to cognition, decision making and voluntary behavior. Experimental studies have shown that specific brain structures – the prefrontal cortex, the premotor and motor cortices, and the basal ganglia – play a central role in these functions, as does the dopamine system that signals reward during reinforcement learning. Because of the complexity of the issues involved and the difficulty of direct observation in deep brain structures, computational modeling has been crucial in elucidating the neural basis of cognitive control, decision making, reinforcement learning, working memory, and motor control. The resulting computational models are also very useful in engineering domains such as robotics, intelligent agents, and adaptive control. While it is impossible to encompass the totality of such modeling work, this chapter provides an overview of significant efforts in the last 20 years. It also outlines many of the theoretical issues underlying this work, and discusses significant experimental results that motivated the computational models.


1 Overview

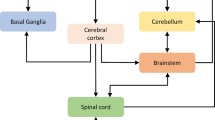

Mental function is usually divided into three parts: perception, cognition, and action – the so-called sense-think-act cycle. Though this view is no longer held dogmatically, it is useful as a structuring framework for discussing mental processes. Several decades of theory and experiment have elucidated an intricate, multiconnected functional architecture for the brain [1, 2] – a simplified version of which is shown in Fig. 35.1. While all regions and functions shown – and many not shown – are important, this figure provides a summary of the main brain regions involved in perception, cognition, and action. The highlighted blocks in Fig. 35.1 are discussed in this chapter, which focuses mainly on the higher level mechanisms for the control of behavior.

The control of action (or behavior) is, in a real sense, the primary function of the nervous system. While such actions may be voluntary or involuntary, most of the interest in modeling has understandably focused on voluntary action. This chapter will follow this precedent.

It is conventional to divide the neural substrates of behavior into higher and lower levels. The latter involves the musculoskeletal apparatus of action (muscles, joints, etc.) and the neural networks of the spinal cord and brainstem. These systems are seen as representing the actuation component of the action system, which is controlled by the higher level system comprising cortical and subcortical structures. This division between a controller (the brain) and the plant (the body and spinal networks), which parallels the models used in robotics, has been criticized as arbitrary and unhelpful [3, 4], and there has recently been a shift of interest toward more embodied views of cognition [5, 6]. However, the conventional division is useful for organizing material covered in this chapter, which focuses primarily on the higher level systems, i. e., those above the spinal cord and the brainstem.

The higher level system can be divided further into a cognitive control component involving action selection, configuration of complex actions, and the learning of appropriate behaviors through experience, and a motor control component that generates the control signals for the lower level system to execute the selected action. The latter is usually identified with the motor cortex (M1), premotor cortex (PMC), and the supplementary motor area (SMA), while the former is seen as involving the prefrontal cortex (PFC), basal ganglia (BG), the anterior cingulate cortex (ACC) and other cortical and subcortical regions [7]. With regard to the generation of actions per se, an influential viewpoint for the higher level system is summarized by Doya [8]. It proposes that higher level control of action has three major loci: the cortex, the cerebellum, and the BG. Of these, the cortex – primarily the M1 – provides a self-organized repertoire of possible actions that, when triggered, generate movement by activating muscles via spinal networks; the cerebellum implements fine motor control configured through error-based supervised learning [9]; and the BG provide the mechanisms for selecting among actions and learning appropriate ones through reinforcement learning [10, 11, 12, 13]. The motor cortex and cerebellum can be seen primarily as motor control systems (though see [14]), whereas the BG fall into the domain of cognitive control and working memory (WM). The PFC is usually regarded as the locus for higher order choice representations, plans, goals, etc. [15, 16, 17, 18], while the ACC is thought to be involved in conflict monitoring [19, 20, 21].

2 Motor Control

Given its experimental accessibility and direct relevance to robotics, motor control has been a primary area of interest for computational modeling [22, 23, 24]. Mathematical, albeit non-neural, theories of motor control were developed initially within the framework of dynamical systems. One of these directions led to models of action as an emergent phenomenon [25, 26, 27, 28, 29, 3, 30, 31, 32, 33] arising from interactions among preferred coordination modes [34]. This approach has continued to yield insights [29] and has been extended to multiactor situations as well [33, 35, 36, 37]. Another approach within the same framework is the equilibrium point hypothesis [38, 39], which explains motor control through the change in the equilibrium points of the musculoskeletal system in response to neural commands. Both these dynamical approaches have paid relatively less attention to the neural basis of motor control and focused more on the phenomenology of action in its context. Nevertheless, insights from these models are fundamental to the emerging synthesis of action as an embodied cognitive function [5, 6].
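The equilibrium point idea can be illustrated with a minimal sketch, assuming a deliberately simplified reading of the hypothesis: a single joint behaves like a damped spring whose rest angle `lam(t)` is the only signal the nervous system supplies. The function name `simulate_ep` and the inertia, stiffness, damping, and ramp values are hypothetical, and actual formulations of the hypothesis [38, 39] involve muscle and reflex properties that this sketch ignores.

```python
import numpy as np


def simulate_ep(target, ramp_time=0.5, t_end=2.0, dt=1e-3,
                inertia=0.1, stiffness=10.0, damping=2.0):
    """Single joint pulled toward a shifting equilibrium angle lam(t).

    The only 'neural command' is lam(t); the movement emerges from the
    spring-damper dynamics. All parameter values are hypothetical.
    """
    n = int(round(t_end / dt))
    t = np.arange(n) * dt
    # Equilibrium angle ramps linearly from 0 to `target`, then holds.
    lam = target * np.clip(t / ramp_time, 0.0, 1.0)
    theta = np.zeros(n)
    omega = 0.0
    for i in range(1, n):
        # Restoring torque toward lam plus viscous damping.
        torque = -stiffness * (theta[i - 1] - lam[i - 1]) - damping * omega
        omega += dt * torque / inertia      # semi-implicit Euler: velocity first
        theta[i] = theta[i - 1] + dt * omega
    return t, lam, theta


t, lam, theta = simulate_ep(target=1.0)
print(f"final angle: {theta[-1]:.4f} rad")
```

Only the equilibrium command is specified; the trajectory itself emerges from the limb dynamics, which is the aspect of the hypothesis this sketch is meant to show.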

A closely related investigative tradition developed from early studies of gaits and other rhythmic movements in cats, fish, and other animals [40, 41, 42, 43, 44, 45], leading to computational models of central pattern generators (CPGs), which are neural networks that generate characteristic periodic activity patterns autonomously or in response to control signals [46]. It has been found that rhythmic movements can be explained well in terms of CPGs – located mainly in the spinal cord – acting upon the coordination modes inherent in the musculoskeletal system. The key insight to emerge from this work is that a wide range of useful movements can be generated by modulating these CPGs with rather simple motor control signals from the brain, and that feedback from sensory receptors can shape these movements further [43]. This idea was demonstrated in recent work by Ijspeert et al. [47], showing how the same simple CPG network could produce both swimming and walking movements in a robotic salamander model using a simple scalar control signal.

While rhythmic movements are obviously important, computational models of motor control are often motivated by the desire to build humanoid or biomorphic robots, and thus need to address a broader range of actions – especially aperiodic and/or voluntary movements. Most experimental work on aperiodic movement has focused on the paradigm of manual reaching [30, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64]. However, seminal work has also been done with complex reflexes in frogs and cats [65, 66, 67, 68, 69, 70, 71, 72], isometric tasks [73, 74], ball-catching [75], drawing and writing [60, 76, 77, 78, 79, 80, 81], and postural control [71, 72, 82, 83].
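Returning to the CPG models described above, the principle that a single scalar signal can reshape a rhythmic pattern can be sketched with a chain of coupled phase oscillators, a common abstraction for CPGs. This is a simplified illustration, not the model of [47]: the function `simulate_cpg`, the drive-to-frequency and drive-to-amplitude maps, the coupling strength, and the phase lag are all hypothetical choices made for clarity.

```python
import numpy as np


def simulate_cpg(drive, n_osc=8, lag=np.pi / 4, coupling=10.0,
                 dt=1e-3, t_end=30.0, seed=0):
    """Chain of phase oscillators modulated by one scalar `drive`.

    Neighbours are coupled so that each oscillator settles `lag` radians
    behind the previous one (a head-to-tail travelling wave). Returns the
    final phases, the common frequency in Hz, and the final outputs.
    """
    freq = 0.3 + 0.2 * drive      # hypothetical drive -> frequency map (Hz)
    amp = 0.1 * drive             # hypothetical drive -> amplitude map
    rng = np.random.default_rng(seed)
    theta = rng.uniform(0.0, 2.0 * np.pi, n_osc)   # random initial phases
    for _ in range(int(round(t_end / dt))):
        dtheta = np.full(n_osc, 2.0 * np.pi * freq)
        # Zero when theta[i] - theta[i+1] == lag, i.e. when the wave is locked.
        pull = coupling * np.sin(theta[1:] - theta[:-1] + lag)
        dtheta[:-1] += pull        # upstream oscillator of each pair
        dtheta[1:] -= pull         # downstream oscillator of each pair
        theta = theta + dt * dtheta
    return theta, freq, amp * np.cos(theta)


theta, freq, output = simulate_cpg(drive=2.0)
lags = np.angle(np.exp(1j * (theta[:-1] - theta[1:])))
print(f"frequency {freq:.2f} Hz, neighbour lags {np.round(lags, 3)}")
```

Raising `drive` speeds up and enlarges the same travelling wave without changing the network. Switching between qualitatively different gaits would require additional structure (in [47], for example, limb oscillators) that this sketch leaves out.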

A central issue in understanding motor control is the degrees of freedom problem [84], which arises from the immense redundancy of the system – especially in the context of multijoint control. For any desired movement – such as reaching for an object – there is an infinite number of control signal combinations from the brain to the muscles that will accomplish the task (see [85] for an excellent discussion). From a control viewpoint, this has usually been seen as a problem because it precludes the clear specification of an objective function for the controller. To the extent that they consider the generation of specific control signals for each action, most computational models of motor control can be seen as direct or indirect ways to address the degrees of freedom problem.
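The redundancy behind the degrees of freedom problem can be made concrete with a planar arm that has three joints but only a two-dimensional task (placing the hand at a point). In the sketch below, the segment lengths `LENGTHS` and the target are hypothetical, and fixing the orientation `phi` of the last segment is just one convenient way to parameterize the redundant solutions: every `phi` that leaves the wrist within reach yields a different joint configuration with exactly the same hand position.

```python
import numpy as np

LENGTHS = (0.30, 0.25, 0.15)  # hypothetical segment lengths (m)


def hand_position(q, lengths=LENGTHS):
    """Forward kinematics of a planar 3-joint arm (angles in radians)."""
    cum = np.cumsum(q)  # absolute orientation of each segment
    return np.array([np.dot(lengths, np.cos(cum)), np.dot(lengths, np.sin(cum))])


def joint_angles(target, phi, lengths=LENGTHS):
    """One of infinitely many solutions: fix the last segment's orientation
    `phi`, then solve the remaining two-link problem for the wrist."""
    l1, l2, l3 = lengths
    wrist = np.asarray(target, dtype=float) - l3 * np.array([np.cos(phi), np.sin(phi)])
    c2 = (wrist @ wrist - l1**2 - l2**2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None  # wrist out of reach for this phi
    q2 = np.arccos(c2)
    q1 = np.arctan2(wrist[1], wrist[0]) - np.arctan2(l2 * np.sin(q2), l1 + l2 * np.cos(q2))
    q3 = phi - q1 - q2  # makes the last segment point along phi
    return np.array([q1, q2, q3])


target = (0.4, 0.2)
for phi in (-0.5, 0.0, 0.5):
    q = joint_angles(target, phi)
    print(np.round(q, 3), np.round(hand_position(q), 6))
```

Any rule for choosing `phi` (or, more generally, a point on this solution set) amounts to an extra criterion, which is exactly what the models discussed below supply in different ways.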

2.1 Cortical Representation of Movement

It has been known since the seminal work by Penfield and Boldrey [86] that the stimulation of specific locations in the M1 elicits motor responses in particular locations on the body. This has led to the notion of a motor homunculus – a map of the body on the M1. However, the issue of exactly what aspect of movement is encoded in the responses of individual neurons is far from settled. A crucial breakthrough came with the discovery of population coding by Georgopoulos et al. [49]. It was found that the activity of specific neurons in the hand area of the M1 corresponded to reaching movements in particular directions. While the tuning of individual cells was found to be rather broad (and had a sinusoidal profile), the joint activity of many such cells with different tuning directions coded the direction of movement with great precision, and could be decoded through neurally plausible estimation mechanisms. Since the initial discovery, population codes have been found in other regions of the cortex that are involved in movement [49, 53, 54, 60, 77, 78, 79, 80, 87]. Population coding is now regarded as the primary basis of directional coding in the brain, and is the basis of most brain–machine interfaces (BMI) and brain-controlled prosthetics [88, 89]. Neural network models for population coding have been developed by several researchers [90, 91, 92, 93], and population coding has come to be seen as a general neural representational strategy with applications far beyond motor control [94]. Excellent reviews are provided in [95, 96]. Mathematical and computational models for Bayesian inference with population codes are discussed in [97, 98].

An active research issue in the cortical coding of movement is whether it occurs at the level of kinematic variables, such as direction and velocity, or in terms of kinetic variables, such as muscle forces and joint torques. From a cognitive viewpoint, a kinematic representation is obviously more useful, and population codes suggest that such representations are indeed present in the motor cortex [100, 48, 53, 54, 60, 77, 78, 79, 80, 99] and PFC [101, 15]. However, movement must ultimately be constructed from the appropriate kinetic variables, i. e., by controlling the forces generated by specific muscles and the resulting joint torques. Studies have indicated that some neurons in the M1 are indeed tuned to muscle forces and joint torques [100, 102, 103, 58, 59, 73, 99]. This apparent multiplicity of cortical representations has generated significant debate among researchers [74]. One way to resolve this issue is to consider the kinetic and kinematic representations as dual representations related through the constraints of the musculoskeletal system. However, Shah et al. [104] have used a simple computational model to show that neural populations tuned to kinetic or kinematic variables can act jointly in motor control without the need for explicit coordinate transformations.

Graziano et al. [105] studied movements elicited by sustained electrical stimulation of specific sites in the motor cortex of monkeys. They found that different sites led to specific complex, multijoint movements, such as bringing the hand to the mouth or lifting the hand above the head, regardless of the initial position. This raises the intriguing possibility that individual cells or groups of cells in the M1 encode goal-directed movements that can be triggered as units. The study also indicated that this encoding is not open-loop, but can compensate – at least to some degree – for variation or extraneous perturbations. The M1 and other related regions (e. g., the supplementary motor area and the PMC) appear to encode spatially organized maps of a few canonical complex movements that can be used as basis functions to construct other actions [105, 106, 107]. A neurocomputational model using self-organized feature maps has been proposed in [108] for the representation of such canonical movements.

In addition to rhythmic and reaching movements, there has also been significant work on the neural basis of sequential movements, with the finding that neural codes for movement sequences exist in the supplementary motor area [109, 110, 111], cerebellum [112, 113], BG [112], and the PFC [101]. Coding for multiple goals in sequential reaching has been observed in the parietal cortex [114].

2.2 Synergy-based Representations

A rather different approach to studying the construction of movement uses the notion of motor primitives, often termed synergies [115, 116, 63]. Typically, these synergies are manifested in coordinated patterns of spatiotemporal activation over groups of muscles, implying a force field over posture space [117, 118]. Studies in frogs, cats, and humans have shown that a wide range of complex movements in an individual subject can be explained as the modulated superposition of a few synergies [115, 119, 120, 63, 65, 66, 67, 68, 69, 70, 71, 72]. Given a set of n muscles, the n-dimensional time-varying vector of activities for the muscles during an action q can be written as

$$\mathbf{m}^q(t) = \sum_{k=1}^{N} c_k^q \, \mathbf{w}_k\left(t - t_k^q\right),$$

where N is the number of synergies, $\mathbf{w}_k(t)$ is a time-varying synergy function that takes only nonnegative values, $c_k^q$ is the gain of the kth synergy used for action q, and $t_k^q$ is the temporal offset with which the kth synergy is triggered for action q [69]. The key point is that a broad range of actions can be constructed by choosing different gains and offsets over the same set of synergies, which represent a set of hard-coded basis functions for the construction of movements [120, 121]. Even more interestingly, it appears that the synergies found empirically across different subjects of the same species are rather consistent [67, 72], possibly reflecting the inherent constraints of musculoskeletal anatomy. Various neural loci have been suggested for synergies, including the spinal cord [107, 122, 67], the motor cortex [123], and combinations of regions [124, 85].
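The time-varying synergy decomposition can be sketched directly on a discrete time grid. This assumes synergies are stored as nonnegative arrays (muscles by samples) and that offsets are whole sample indices; the function `muscle_activity` and the synergy shapes, gains, and offsets in the example are invented for illustration and are not taken from [69].

```python
import numpy as np


def muscle_activity(synergies, gains, offsets, n_samples):
    """Discrete version of m^q(t) = sum_k c_k^q * w_k(t - t_k^q).

    synergies : list of nonnegative arrays, each (n_muscles, duration_k)
    gains     : one gain c_k^q per synergy
    offsets   : one onset t_k^q per synergy, in whole samples (>= 0)
    """
    n_muscles = synergies[0].shape[0]
    m = np.zeros((n_muscles, n_samples))
    for w, c, t0 in zip(synergies, gains, offsets):
        if t0 < 0:
            raise ValueError("offsets must be nonnegative sample indices")
        end = min(n_samples, t0 + w.shape[1])
        if end > t0:
            m[:, t0:end] += c * w[:, : end - t0]   # scaled, time-shifted w_k
    return m


# Two hypothetical synergies over three muscles sharing one bell-shaped profile.
t = np.linspace(0.0, 1.0, 50)
bump = np.exp(-((t - 0.5) ** 2) / 0.02)
w1 = np.outer([1.0, 0.5, 0.0], bump)
w2 = np.outer([0.0, 0.3, 1.0], bump)

# Two different "actions" built from the same synergies.
m_a = muscle_activity([w1, w2], gains=[1.0, 0.2], offsets=[0, 30], n_samples=120)
m_b = muscle_activity([w1, w2], gains=[0.4, 1.0], offsets=[20, 0], n_samples=120)
```

Only the gains and offsets differ between `m_a` and `m_b`; the synergies themselves are shared, which is the economy the decomposition is meant to capture.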

Though synergies are found consistently in the analysis of experimental data, their actual existence in the neural substrate remains a topic for debate [125, 126]. However, the idea of constructing complex movements from motor primitives has found ready application in robotics [127, 128, 129, 130, 131, 132], as discussed later in this chapter. A hierarchical neurocomputational model of motor synergies based on attractor networks has recently been proposed in [133, 134].

2.3 Computational Models of Motor Control

Motor control has been modeled computationally at many levels and in many ways, ranging from explicitly control-theoretic models through reinforcement-based models to models based on emergent dynamical patterns. This section provides a brief overview of these models.

As discussed above, the M1, premotor cortex (PMC), and supplementary motor area (SMA) are seen as providing self-organized codes for specific actions, including information on direction, velocity, force, low-level sequencing, etc., while the PFC provides higher level codes needed to construct more complex actions. These codes, comprising a repertoire of actions [10, 106], arise through self-organized learning of activity patterns in these cortical systems. The BG system is seen as the primary locus of selection among the actions in the cortical repertoire. The architecture of the system involving the cortex, BG, and thalamus, and in particular the internal architecture of the BG [135], makes this system ideally suited to selectively disinhibiting specific cortical regions, presumably activating codes for specific actions [10, 136, 137]. The BG system also provides an ideal substrate for learning appropriate actions through a dopamine-mediated reinforcement learning mechanism [138, 139, 140, 141].

Many of the influential early models of motor control were based on control-theoretic principles [142, 143, 144], using forward and inverse kinematic and dynamic models to generate control signals [145, 146, 147, 148, 149, 150, 55, 57] – see [146] for an excellent introduction. These models have led to more sophisticated ones, such as MOSAIC (modular selection and identification for control) [151] and AVITEWRITE (adaptive vector integration to endpoint handwriting) [81]. The MOSAIC model is a mixture of experts consisting of many parallel modules, each comprising three subsystems: a forward model relating motor commands to predicted position, a responsibility predictor that estimates the applicability of the current module, and an inverse model that learns to generate control signals for desired movements. The system generates motor commands by combining the recommendations of the inverse models of all modules, weighted by their applicability. Learning in the model is based on a variant of the expectation-maximization (EM) algorithm. The model in [57] is a comprehensive neural model with both cortical and spinal components, and builds upon the earlier VITE model in [55]. The AVITEWRITE model [81], which is a further extension of the VITE model, can generate the complex movement trajectories needed for writing by using a combination of prespecified phenomenological motor primitives (synergies). A cerebellar model for the control of timing during reaches has been presented by Barto et al. [152].

The use of neural maps in models of motor control was pioneered in [153, 154]. These models used self-organized feature maps (SOFMs) [155] to learn visuomotor coordination. Baraduc et al. [156] presented a more detailed model that used multiple maps to first integrate posture and desired movement direction and then to transform this internal representation into a motor command. The maps in this and most subsequent models were based on earlier work [90, 91, 92, 93]. An excellent review of this approach is given in [94]. A more recent and comprehensive example of the map-based approach is the SURE-REACH (sensorimotor, unsupervised, redundancy-resolving control architecture) model in [157], which focuses on exploiting the redundancy inherent in motor control [84]. Unlike many of the other models, which use neurally implausible error-based learning, SURE-REACH relies only on unsupervised and reinforcement learning. Maps are also the central feature of a general cognitive architecture called ERA (epigenetic robotics architecture) by Morse et al. [158].

Another successful approach to motor control models is based on the use of motor primitives, which are used as basis functions in the construction of diverse actions. This approach is inspired by the experimental observation of motor synergies described above. However, most models based on primitives implement them nonneurally, as in the case of AVITEWRITE [81]. The most systematic model of motor primitives has been developed by Schaal et al. [129, 130, 131, 132]. In this model, motor primitives are specified using differential equations, and are weighted and combined to produce different movements. Recently, Matsubara et al. [159] have shown how the primitives in this model can be learned systematically from demonstrations. Drew et al. [123] proposed a conceptual model for the construction of locomotion using motor primitives (synergies) and identified the characteristics of such primitives experimentally. A neural model of motor primitives based on hierarchical attractor networks has been proposed recently in [133, 134, 160], while Neilson and Neilson [124, 85] have proposed a model based on coordination among adaptive neural filters.

Motor control models based on primitives can be simpler than those based on trajectory tracking because the controller typically needs to choose only the weights (and possibly delays) for the primitives rather than specifying details of the trajectory (or forces). Among other things, this offers a potential solution to the degrees of freedom problem [84], since the coordination inherent in the definition of motor primitives reduces the effective degrees of freedom in the system. Another way to address the degrees of freedom problem is to use an optimal control approach with a specific objective function. Researchers have proposed objective functions such as minimum jerk [161], minimum torque [162], minimum acceleration [163], or minimum energy [85], but an especially interesting idea is to optimize the distribution of variability across the degrees of freedom in a task-dependent way [144, 164, 165, 166, 167]. From this perspective, motor control trades off variability in task-irrelevant dimensions for greater accuracy in task-relevant ones. Thus, rather than specifying a trajectory, the controller focuses only on correcting consequential errors.
This also explains the experimental observation that motor tasks achieve their goals with remarkable accuracy while using highly variable trajectories to achieve the same goal. Trainin et al. [168] have shown that the optimal control principle can be used to explain the observed neural coding of movements in the cortex. Biess et al. [169] have proposed a detailed computational model for controlling an arm in three-dimensional space by separating the spatial and temporal components of control. This model is based on optimizing energy usage and jerk [161], but is not implemented at the neural level.
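As one concrete instance of the objective-function approach, the minimum-jerk criterion [161] has a well-known closed-form solution for a point-to-point movement that starts and ends at rest: $x(t) = x_0 + (x_f - x_0)(10\tau^3 - 15\tau^4 + 6\tau^5)$ with $\tau = t/T$. The sketch below evaluates it for each Cartesian coordinate independently; the function name `minimum_jerk` and the start point, end point, and duration in the example are arbitrary.

```python
import numpy as np


def minimum_jerk(x0, xf, duration, n=101):
    """Minimum-jerk trajectory between two rest postures.

    Returns times, positions, and velocities; positions follow
    x(t) = x0 + (xf - x0) * (10 s^3 - 15 s^4 + 6 s^5), s = t / duration.
    """
    x0, xf = np.asarray(x0, float), np.asarray(xf, float)
    t = np.linspace(0.0, duration, n)
    s = (t / duration)[:, None]                     # normalized time, column
    shape = 10 * s**3 - 15 * s**4 + 6 * s**5        # position profile, 0 -> 1
    dshape = (30 * s**2 - 60 * s**3 + 30 * s**4) / duration  # its time derivative
    pos = x0 + (xf - x0) * shape
    vel = (xf - x0) * dshape
    return t, pos, vel


t, pos, vel = minimum_jerk([0.0, 0.0], [0.2, 0.1], duration=0.6)
speed = np.linalg.norm(vel, axis=1)
print(f"peak speed {speed.max():.3f} m/s at t = {t[speed.argmax()]:.2f} s")
```

The resulting path is straight and its speed profile is symmetric and bell-shaped, peaking at mid-movement. Note that this criterion prescribes a full trajectory, which is precisely what the variability-based view above avoids.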

An alternative to these prescriptive and constructivist approaches to motor control is provided by models based on dynamical systems [25, 26, 27, 29, 3, 31, 32, 33]. The most important way in which these models diverge from the others is in their use of emergence as the central organizational principle of control. In this formulation, control programs, structures, primitives, etc., are not preconfigured in the brain–body system, but emerge under the influence of task and environmental constraints on the affordances of the system [33]. Thus, the dynamical systems view of motor control is fundamentally ecological [170], and, like most ecological models, is specified in terms of low-dimensional state dynamics rather than high-dimensional neural processes. Interestingly, a correspondence can be made between the dynamical and optimal control models through the so-called uncontrolled manifold concept [171, 31, 33, 39]. In both models, the dimensions to be controlled and those left uncontrolled are decided by external constraints, rather than by internal prescription as in classical models.
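The uncontrolled manifold concept [171] is often applied as a variance decomposition: trial-to-trial deviations of the elemental variables are split into a component that leaves the task variable unchanged (the UCM, i.e., the null space of the task Jacobian) and an orthogonal component that changes it. The sketch below assumes a hypothetical two-finger task in which only the total force f1 + f2 matters; the function `ucm_variance` and the synthetic data, generated so that most variability lies along the UCM, are illustrative only.

```python
import numpy as np


def ucm_variance(samples, jacobian):
    """Per-dimension variance within the UCM and orthogonal to it.

    samples  : (n_trials, n_elements) elemental variables (e.g., finger forces)
    jacobian : (n_task, n_elements) linear map from elements to task variables
    """
    J = np.atleast_2d(np.asarray(jacobian, float))
    dev = samples - samples.mean(axis=0)
    # Rows of vt beyond rank(J) span the null space of J (the UCM);
    # the first rank(J) rows span the orthogonal, task-relevant subspace.
    _, sv, vt = np.linalg.svd(J)
    rank = int(np.sum(sv > 1e-12))
    ort_basis, ucm_basis = vt[:rank], vt[rank:]
    n = samples.shape[0]
    v_ucm = np.sum((dev @ ucm_basis.T) ** 2) / ((n - 1) * ucm_basis.shape[0])
    v_ort = np.sum((dev @ ort_basis.T) ** 2) / ((n - 1) * ort_basis.shape[0])
    return v_ucm, v_ort


# Synthetic trials: large compensatory variation (f1 up, f2 down) that leaves
# the total unchanged, plus small variation that changes the total force.
rng = np.random.default_rng(0)
n_trials = 200
along = rng.normal(0.0, 1.0, n_trials)
across = rng.normal(0.0, 0.1, n_trials)
forces = (np.array([5.0, 5.0])
          + np.outer(along, [1.0, -1.0]) / np.sqrt(2)
          + np.outer(across, [1.0, 1.0]) / np.sqrt(2))

v_ucm, v_ort = ucm_variance(forces, [[1.0, 1.0]])
print(f"V_UCM = {v_ucm:.3f}, V_ORT = {v_ort:.4f}")
```

With real data, V_UCM clearly exceeding V_ORT is usually read as evidence that the task variable, rather than each element individually, is being stabilized.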

3 Cognitive Control and Working Memory

Much behavior – even in primates – is automatic, or nearly so. Such actions (or internal behaviors) are so thoroughly embedded in the sensorimotor substrate that they emerge effortlessly from it. In contrast, some tasks require significant cognitive effort for one or more reasons, including:

1. An automatic behavior must be suppressed to allow the correct response to emerge, e. g., in the Stroop task [172].
2. Conflicts between incoming information and/or recalled behaviors must be resolved [19, 20].
3. More contextual information – e. g., social context – must be taken into account before acting.
4. Intermediate pieces of information need to be stored and recalled during the performance of the task, e. g., in sequential problem solving.
5. The timing of subtasks within the overall task is complex, e. g., in delayed-response tasks or other sequential tasks [173].

Roughly speaking, the first three fall under the heading of cognitive control, and the last two under working memory. However, because the functions are intimately linked, each term is often used to encompass the other.

3.1 Action Selection and Reinforcement Learning

Action selection is arguably the central component of the cognitive control process. As the name implies, it involves selectively triggering an action from a repertoire of available ones. While action selection is a complex process involving many brain regions, a consensus has emerged that the BG system plays a central role in its mechanism [10, 12, 14]. The architecture of the BG system and the organization of its projections to and from the cortex [135, 174, 175] make it ideally suited to function as a state-dependent gating system for specific functional networks in the cortex. As shown in Fig. 35.2, the hypothesis is that the striatal layer of the BG system, receiving input from the cortex, acts as a pattern recognizer for the current cognitive state. Its activity inhibits specific parts of the internal segment of the globus pallidus (GPi), leading to disinhibition of specific neural assemblies in the cortex – presumably allowing the behavior/action encoded by those assemblies to proceed [10]. The associations between cortical activity patterns and behaviors are key to the functioning of the BG as an action selection system, and the configuration and modulation of these associations are thought to lie at the core of cognitive control. The neurotransmitter dopamine (DA) plays a key role here by serving as a reward signal [138, 139, 140] and modulating reinforcement learning [176, 177] in both the BG and the cortex [141, 178, 179, 180].

3.2 Working Memory

All nontrivial behaviors require task-specific information, including relevant domain knowledge and the relative timing of subtasks. These are usually grouped under the function of WM. An influential model of WM in [181] identifies three components in WM: (1) a central executive, responsible for attention, decision making, and timing; (2) a phonological loop, responsible for processing incoming auditory information, maintaining it in short-term memory, and rehearsing utterances; and (3) a visuospatial sketchpad, responsible for processing and remembering visual information, keeping track of what and where information, etc. An episodic buffer to manage relationships between the other three components is sometimes included [182]. Though already rather abstract, this model needs even more generalized interpretation in the context of many cognitive tasks that do not directly involve visual or auditory data. Working memory function is most closely identified with the PFC [183, 184, 185].

Almost all studies of WM consider only short-term memory, typically on the scale of a few seconds [186]. Indeed, one of the most significant – though lately controversial – results in WM research is the finding that only a small number of items can be kept in mind at any one time [187, 188]. However, most cognitive tasks require context-dependent repertoires of knowledge and behaviors to be enabled collectively over longer periods. For example, a chess player must continually think of moves and strategies over the course of a match lasting several hours. The configuration of context-dependent repertoires for extended periods has been termed long-term working memory [189].

3.3 Computational Models of Cognitive Control and Working Memory

Several computational models have been proposed for cognitive control, and most of them share common features. The issues addressed by the models include action selection, reinforcement learning of appropriate actions, decision making in choice tasks, task sequencing and timing, persistence and capacity in WM, task switching, sequence learning, and the configuration of context-appropriate workspaces. Most of the models discussed below are neural, with varying degrees of biological plausibility. A few important nonneural models are also mentioned.

Fig. 35.2 The action selection and reinforcement learning substrate in the BG. Wide filled arrows indicate excitatory projections while wide unfilled arrows represent inhibitory projections. Linear arrows indicate generic excitatory and inhibitory connectivity between regions. The inverted D-shaped contacts indicate modulatory dopamine connections that are crucial to reinforcement learning. Abbreviations: SMA: supplementary motor area; SNc: substantia nigra pars compacta; VTA: ventral tegmental area; OFC: orbitofrontal cortex; GPe: globus pallidus (external nuclei); GPi: globus pallidus (internal nuclei); STN: subthalamic nucleus; D1: excitatory dopamine receptors; D2: inhibitory dopamine receptors. The primary neurons of GPi are inhibitory and active by default, thus keeping all motor plans in the motor and premotor cortices in check. The neurons of the striatum are also inhibitory but usually in an inactive down state (after [190]). Particular subgroups of striatal neurons are activated by specific patterns of cortical activity (after [136]), leading first to disinhibition of specific actions via the direct input from striatum to GPi, and then to re-inhibition via the input through STN. Thus the system gates the triggering of actions appropriate to current cognitive contexts in the cortex. The dopamine input from SNc projects a reward signal based on limbic system state, allowing desirable context-action pairs to be reinforced (after [191, 192]) – though other hypotheses also exist (after [14]). The dopamine input to PFC from the VTA also signals reward and other task-related contingencies.
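The gating-plus-reinforcement scheme summarized in Fig. 35.2 can be caricatured in a few lines. The sketch below is a deliberately abstract actor-critic learner, a standard reinforcement-learning formalism in which the dopamine reward signal is read as a reward prediction error; it is not any of the specific models cited in this section. A "striatal" weight table `W` maps cortical contexts to candidate actions, a tonically active "GPi" output is released only for the winning action (disinhibition), and the scalar `delta` plays the role of the dopamine signal. The task (`REWARDED`), the softmax competition, and all learning rates are hypothetical, and the STN re-inhibition step is omitted.

```python
import numpy as np

rng = np.random.default_rng(1)
N_CONTEXTS, N_ACTIONS = 3, 4
# Hypothetical task: in context c, only action (c + 1) % N_ACTIONS is rewarded.
REWARDED = {c: (c + 1) % N_ACTIONS for c in range(N_CONTEXTS)}

W = np.zeros((N_CONTEXTS, N_ACTIONS))  # "striatal" context -> action weights (actor)
V = np.zeros(N_CONTEXTS)               # expected reward per context (critic)


def select_action(context, beta=3.0):
    """Softmax competition among striatal units; the winner disinhibits its action."""
    z = beta * W[context]
    p = np.exp(z - z.max())
    p /= p.sum()
    action = int(rng.choice(N_ACTIONS, p=p))
    gpi = np.ones(N_ACTIONS)   # tonic inhibition on every action channel
    gpi[action] = 0.0          # selective release of the chosen channel
    return action, gpi


def learn(context, action, reward, alpha_v=0.1, alpha_w=0.2):
    """Dopamine-like prediction error updates both critic and actor."""
    delta = reward - V[context]
    V[context] += alpha_v * delta
    W[context, action] += alpha_w * delta
    return delta


for trial in range(3000):
    c = int(rng.integers(N_CONTEXTS))
    a, gpi = select_action(c)
    r = 1.0 if a == REWARDED[c] else 0.0
    learn(c, a, r)

print("preferred action per context:", np.argmax(W, axis=1))
```

Because `delta` is positive only when the outcome beats the current expectation, the rewarded context-action pairs are strengthened and the others weakened, which is the sense in which a dopamine-like signal reinforces "desirable context-action pairs".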

A comprehensive model using spiking neurons and incorporating many biological features of the BG system has been presented in [13, 193]. This model focuses only on the BG, and explicitly on the dynamics of dopamine modulation. A more abstract but broader model of cognitive control is the agents of the mind model in [14], which incorporates the cerebellum as well as the BG. In this model, the BG provide the action selection function, while the cerebellum acts to refine and amplify the choices. A series of interrelated models has been developed by O'Reilly, Frank et al. [17, 179, 194, 195, 196, 197, 198, 199]. All these models use the adaptive gating function of the BG in combination with the WM function of the prefrontal cortex to explain how executive function can arise without an explicit top-down controller, the so-called homunculus [196, 197]. A comprehensive review of these and other models of cognitive control is given in [200]. Models of goal-directed action mediated by the PFC have also been presented in [201] and [202]. Reynolds and O'Reilly [203] have proposed a model for configuring hierarchically organized representations in the PFC via reinforcement learning. Computational models of cognitive control and working memory have also been used to explain mental pathologies such as schizophrenia [204].

An important aspect of cognitive control is switching between tasks at various time-scales [205, 206]. Imamizu et al. [207] used brain imaging to compare two computational models of task switching: a mixture-of-experts (MoE) model and MOSAIC. They concluded that task switching in the PFC was more consistent with the MoE model, while task switching in the parietal cortex and cerebellum was more consistent with the MOSAIC model.

An influential abstract model of cognitive control is the interactive activation model in [208, 209]. In this model, learned behavioral schemata contend for activation based on task context and cognitive state. While this model captures many phenomenological aspects of behavior, it is not explicitly neural. Botvinick and Plaut [173] present an alternative neural model that relies on distributed neural representations and the dynamics of recurrent neural networks rather than on explicit schemata and contention. Dayan et al. [210, 211] have proposed a neural model for implementing complex rule-based decision making, in which decisions are based on sequentially unfolding contexts. A partially neural model of behavior based on the CLARION cognitive model has been developed in [212].
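Contention among schemata can be illustrated with a generic competitive-activation loop. This is a hedged sketch in the spirit of the interactive activation model, not a reimplementation of [208, 209]: the function `contend`, the assumption that task context and cognitive state have already been collapsed into one excitatory support value per schema, and the inhibition, decay, rate, and threshold values are all hypothetical. Each schema's activation grows with its support, decays passively, and is suppressed in proportion to the summed activation of its competitors; the first schema to cross threshold is selected.

```python
from typing import Dict, Optional


def contend(schema_support: Dict[str, float],
            inhibition: float = 0.6,
            decay: float = 0.2,
            rate: float = 0.1,
            threshold: float = 0.8,
            max_steps: int = 500) -> Optional[str]:
    """Let schemata compete until one crosses the selection threshold.

    schema_support maps each (hypothetical) schema name to the net
    excitatory support it receives from context and cognitive state.
    Activations are clipped to [0, 1]. Returns None if no schema wins.
    """
    act = {name: 0.0 for name in schema_support}
    for _ in range(max_steps):
        total = sum(act.values())
        new_act = {}
        for name, a in act.items():
            # Lateral inhibition from all competing schemata.
            competition = inhibition * (total - a)
            da = rate * (schema_support[name] - competition - decay * a)
            new_act[name] = min(1.0, max(0.0, a + da))
        act = new_act  # synchronous update of all schemata
        winners = [n for n, a in act.items() if a >= threshold]
        if winners:
            return max(winners, key=act.get)
    return None  # no schema reached threshold within max_steps
```

With `contend({"make_tea": 0.5, "make_coffee": 0.3})` the better-supported schema suppresses its rival and wins, whereas two equally weak schemata settle at a shared low activation and neither is selected, one way such models can express a failure to commit to an action.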

Recently, Grossberg and Pearson [213] have presented a comprehensive model of WM called LIST PARSE. In this model, the term working memory is applied narrowly to the storage of temporally ordered items, i.e., lists, rather than more broadly to all short-term memory. The model explains experimentally observed effects such as recency (better recall of late items in the list) and primacy (better recall of early items in the list) using the concept of competitive queuing for sequences. This is based on the observation [101, 214] that multiple elements of a behavioral sequence are represented in the PFC as simultaneously active codes, with activation levels representing the temporal order. Unlike models that treat WM in isolation, LIST PARSE embeds WM within a full cognitive control model with action selection, trajectory generation, etc. Many other neural models for chains of actions have also been proposed [214, 215, 216, 217, 218, 219, 220, 221, 222, 223, 224].

Higher level cognitive control is characterized by the need to fuse information from multiple sensory modalities and memory to make complex decisions. This has led to the idea of a cognitive workspace. In the global workspace theory (GWT) developed in [225], information from various sensory, episodic, semantic, and motivational sources comes together in a global workspace that forms brief, task-specific integrated representations, which are broadcast to all subsystems for use in WM. This model has been implemented computationally in the intelligent distribution agent (IDA) model by Franklin et al. [226, 227]. A neurally implemented workspace model has been developed by Dehaene et al. [172, 228, 229] to explain human subjects' performance on effortful cognitive tasks (i.e., tasks that require suppression of automatic responses) and the basis of consciousness. The construction of cognitive workspaces is closely related to the idea of long-term working memory [189]. Unlike short-term working memory, long-term working memory has few computational models. Neural models seldom cover long periods, and they implicitly assume that a chaining process through recurrent networks (e.g., [173]) can maintain internal attention. Iyer et al. [230, 231] have proposed an explicitly neurodynamical model of this function, in which a stable but modulatable pattern of activity called a graded attractor is used to selectively bias parts of the cortex in a context-dependent fashion. An earlier model was proposed in [232] to serve a similar function in the hippocampal system.

Another class of models focuses primarily on single decisions within a task and assumes an underlying stochastic process [186, 233, 234, 235]. Typically, these models address two-choice decisions made over a second or two [186]. The decision process begins at a starting point and accumulates information over time, resulting in a diffusive (random walk) process. When the diffusion reaches one of two boundaries on either side of the starting point, the corresponding decision is made. This elegant approach can model such concrete issues as decision accuracy, decision time, and the distribution of decisions without any reference to the underlying neural mechanisms, which is both its chief strength and its primary weakness. Several connectionist models have also been developed based on paradigms similar to the diffusion approach [236, 237, 238]. The neural basis of such models has been discussed in detail in [239].
A population-coding neural model that makes Bayesian decisions based on cumulative evidence has been described by Beck et al. [98].
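The diffusion account of two-choice decisions can be simulated directly. The sketch below assumes a simple Euler–Maruyama discretization, symmetric boundaries at ±`bound` around an unbiased starting point, and hypothetical function names and parameter values; it accumulates noisy evidence until one boundary is hit and reports the choice and the decision time. For this setting the standard closed-form results, P(upper boundary) = 1/(1 + exp(-2va/s²)) and mean decision time (a/v)·tanh(va/s²) for drift v, bound a, and noise s, give a convenient check on the simulation.

```python
import math
import random
from typing import List, Optional, Tuple


def diffusion_trial(drift: float, bound: float, start: float = 0.0,
                    noise: float = 1.0, dt: float = 0.001,
                    max_time: float = 5.0,
                    rng: Optional[random.Random] = None) -> Tuple[int, float]:
    """Simulate one two-choice trial of a drift-diffusion process.

    Evidence x starts at `start` and evolves as
        dx = drift * dt + noise * sqrt(dt) * N(0, 1)
    until it reaches +bound (choice 1) or -bound (choice 0).
    Returns (choice, decision_time); choice is -1 if neither boundary
    is reached before max_time.
    """
    if rng is None:
        rng = random.Random()
    x, t = start, 0.0
    step_sd = noise * math.sqrt(dt)
    while t < max_time:
        x += drift * dt + step_sd * rng.gauss(0.0, 1.0)
        t += dt
        if x >= bound:
            return 1, t
        if x <= -bound:
            return 0, t
    return -1, t


def summarize(drift: float, bound: float, n_trials: int = 1000,
              seed: int = 0) -> Tuple[float, float]:
    """Fraction of choice-1 responses and mean decision time (decided trials)."""
    rng = random.Random(seed)
    trials: List[Tuple[int, float]] = [
        diffusion_trial(drift, bound, rng=rng) for _ in range(n_trials)]
    decided = [(c, t) for c, t in trials if c != -1]
    p_upper = sum(c for c, _ in decided) / len(decided)
    mean_dt = sum(t for _, t in decided) / len(decided)
    return p_upper, mean_dt
```

Running `summarize(drift=1.0, bound=1.0)` should give a choice-1 fraction near 1/(1 + e⁻²) ≈ 0.88 and a mean decision time near tanh(1) ≈ 0.76, up to sampling noise and a small bias from the finite time step; with zero drift the two choices become equally likely.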

Reinforcement learning [176] is widely used in many engineering applications, but several models go beyond purely computational use and include details of the underlying brain regions and neurophysiology [141, 240]. Excellent reviews of such models are provided in [241, 242, 243]. Recently, models have also been proposed to show how dopamine-mediated learning could work with spiking neurons [244] and population codes [245].
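The dopamine-as-prediction-error idea behind many of these models (see, e.g., [138, 141]) can be illustrated with tabular temporal-difference learning, TD(0). In this minimal sketch, assuming a fixed trial of five post-cue time steps with reward on the last one and hypothetical values for the learning rate and discount factor, the error δ = r + γV(next) − V(current) plays the role of the phasic dopamine signal. Early in training δ is large when the reward arrives; after training it has vanished at reward time and appears instead at cue onset, the transfer reported in dopamine recordings.

```python
from typing import List, Tuple


def td_trial(values: List[float], reward: float = 1.0,
             alpha: float = 0.1, gamma: float = 0.95) -> Tuple[float, List[float]]:
    """Run one cue -> delay -> reward trial with tabular TD(0) updates.

    values[k] is the predicted discounted future reward k steps after cue
    onset and is updated in place. The reward arrives on the last step and
    the trial ends there. Returns (cue_delta, deltas): cue_delta is the
    prediction error at cue onset, and deltas are the errors at each step.
    """
    n = len(values)
    # The pre-cue baseline is assumed unpredictive (value 0), so the cue's
    # own predicted value shows up as a prediction error at cue onset.
    cue_delta = gamma * values[0]
    deltas: List[float] = []
    for k in range(n):
        r = reward if k == n - 1 else 0.0
        next_v = values[k + 1] if k + 1 < n else 0.0  # no value after trial end
        delta = r + gamma * next_v - values[k]  # dopamine-like prediction error
        values[k] += alpha * delta
        deltas.append(delta)
    return cue_delta, deltas


values = [0.0] * 5
history = [td_trial(values) for _ in range(500)]
first_cue, first = history[0]
last_cue, last = history[-1]
print(f"before learning: cue {first_cue:.2f}, reward {first[-1]:.2f}")
print(f"after learning:  cue {last_cue:.2f}, reward {last[-1]:.2f}")
```

After training, the reward-time error is close to 0 and the cue-time error approaches γ⁵ ≈ 0.77, the discounted value of the upcoming reward. Treating the pre-cue baseline as having zero value is what makes the cue itself surprising.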

Computational models that focus on working memory per se (i.e., not on the entire problem of cognitive control) have mainly considered how the requirement of selective temporal persistence can be met by biologically plausible neural networks [246, 247]. Since working memories must bridge temporal gaps (e.g., in remembering a cue over a delay period), there must be some neural mechanism that allows activity patterns to persist selectively in time. A natural candidate for this is attractor dynamics in recurrent neural networks [248, 249], where recurrence allows some activity patterns to be stabilized by reverberation [250]. The neurophysiological basis of such persistent activity has been studied in [251]. A central feature of many WM models is the role of dopamine in the PFC [252, 253, 254]. In particular, dopamine is believed to sharpen the response of PFC neurons involved in WM [255] and to allow reliable storage of timing information in the presence of distractors [246]. The model in [246, 252] includes several biophysical details, such as the effect of dopamine on different ion channels and its differential modulation of various receptors. More abstract neural models for WM have been proposed in [256] and [257].

An especially interesting type of attractor network uses so-called bump attractors: spatially localized patterns of activity stabilized by local network connectivity and global competition [258]. Such a network has been used in a biologically plausible model of WM in the PFC [259], which demonstrates that the memory is robust against distracting stimuli. A similar conclusion is drawn in [180] based on another bump attractor model of working memory, which shows that dopamine in the PFC can provide robustness against distractors, but that robustness against internal noise is achieved only when dopamine in the BG locks the state of the striatum. Recently, Mongillo et al. [260] have proposed the novel hypothesis that the persistence of neural activity in WM may be due to calcium-mediated synaptic facilitation rather than reverberation through recurrent connectivity.

4 Conclusion

This chapter has attempted to provide an overview of neurocomputational models for cognitive control, WM, and motor control. Given the vast body of both experimental and computational research in these areas, the review is necessarily incomplete, though every attempt has been made to highlight the major issues and to provide the reader with a rich array of references covering the breadth of each area.

The models described in this chapter relate to several other mental functions, including sensorimotor integration, memory, and semantic cognition, as well as to areas of engineering such as robotics and agent systems. However, these links are largely excluded from the chapter, in part for brevity, but mainly because most of them are covered elsewhere in this Handbook.

Abbreviations

- ACC: anterior cingulate cortex
- AVITEWRITE: adaptive vector integration to endpoint handwriting
- BG: basal ganglia
- BMI: brain–machine interface
- CPG: central pattern generator
- DA: dopamine
- ERA: epigenetic robotics architecture
- GPi: globus pallidus (internal nucleus)
- GWT: global workspace theory
- IDA: intelligent distribution agent
- M1: primary motor cortex
- MoE: mixture of experts
- MOSAIC: modular selection and identification for control
- PFC: prefrontal cortex
- PMC: premotor cortex
- SMA: supplementary motor area
- SOFM: self-organized feature maps
- SURE-REACH: sensorimotor, unsupervised, redundancy-resolving control architecture
- WM: working memory

References

J. Fuster: The cognit: A network model of cortical representation, Int. J. Psychophysiol. 60, 125–132 (2006)

J. Fuster: The Prefrontal Cortex (Academic, London 2008)

M.T. Turvey: Coordination, Am. Psychol. 45, 938–953 (1990)

D. Sternad, M.T. Turvey: Control parameters, equilibria, and coordination dynamics, Behav. Brain Sci. 18, 780–783 (1995)

R. Pfeifer, M. Lungarella, F. Iida: Self-organization, embodiment, and biologically inspired robotics, Science 318, 1088–1093 (2007)

A. Chemero: Radical Embodied Cognitive Science (MIT Press, Cambridge 2011)

J.C. Houk, S.P. Wise: Distributed modular architectures linking basal ganglia, cerebellum, and cerebral cortex: Their role in planning and controlling action, Cereb. Cortex 5, 95–110 (1995)

K. Doya: What are the computations of the cerebellum, the basal ganglia and the cerebral cortex?, Neural Netw. 12, 961–974 (1999)

M. Kawato, H. Gomi: A computational model of four regions of the cerebellum based on feedback-error learning, Biol. Cybern. 68, 95–103 (1992)

A.M. Graybiel: Building action repertoires: Memory and learning functions of the basal ganglia, Curr. Opin. Neurobiol. 5, 733–741 (1995)

A.M. Graybiel: The basal ganglia and cognitive pattern generators, Schizophr. Bull. 23, 459–469 (1997)

A.M. Graybiel: The basal ganglia: Learning new tricks and loving it, Curr. Opin. Neurobiol. 15, 638–644 (2005)

M.D. Humphries, R.D. Stewart, K.N. Gurney: A physiologically plausible model of action selection and oscillatory activity in the basal ganglia, J. Neurosci. 26, 12921–12942 (2006)

J.C. Houk: Agents of the mind, Biol. Cybern. 92, 427–437 (2005)

E. Hoshi, K. Shima, J. Tanji: Neuronal activity in the primate prefrontal cortex in the process of motor selection based on two behavioral rules, J. Neurophysiol. 83, 2355–2373 (2000)

E.K. Miller, J.D. Cohen: An integrative theory of prefrontal cortex function, Annu. Rev. Neurosci. 24, 167–202 (2001)

N.P. Rougier, D.C. Noelle, T.S. Braver, J.D. Cohen, R.C. O'Reilly: Prefrontal cortex and flexible cognitive control: Rules without symbols, PNAS 102, 7338–7343 (2005)

J. Tanji, E. Hoshi: Role of the lateral prefrontal cortex in executive behavioral control, Physiol. Rev. 88, 37–57 (2008)

M.M. Botvinick, J.D. Cohen, C.S. Carter: Conflict monitoring and anterior cingulate cortex: An update, Trends Cogn. Sci. 8, 539–546 (2004)

M.M. Botvinick: Conflict monitoring and decision making: Reconciling two perspectives on anterior cingulate function, Cogn. Affect. Behav. Neurosci. 7, 356–366 (2007)

J.W. Brown, T.S. Braver: Learned predictions of error likelihood in the anterior cingulate cortex, Science 307, 1118–1121 (2005)

J.S. Albus: New approach to manipulator control: The cerebellar model articulation controller (CMAC), J. Dyn. Sys. Meas. Control 97, 220–227 (1975)

D. Marr: A theory of cerebellar cortex, J. Physiol. 202, 437–470 (1969)

M.H. Dickinson, C.T. Farley, R.J. Full, M.A.R. Koehl, R. Kram, S. Lehman: How animals move: An integrative view, Science 288, 100–106 (2000)

H. Haken, J.A.S. Kelso, H. Bunz: A theoretical model of phase transitions in human hand movements, Biol. Cybern. 51, 347–356 (1985)

E. Saltzman, J.A.S. Kelso: Skilled actions: A task-dynamic approach, Psychol. Rev. 94, 84–106 (1987)

P.N. Kugler, M.T. Turvey: Information, Natural Law, and the Self-Assembly of Rhythmic Movement (Lawrence Erlbaum, Hillsdale 1987)

G. Schöner: A dynamic theory of coordination of discrete movement, Biol. Cybern. 63, 257–270 (1990)

J.A.S. Kelso: Dynamic Patterns: The Self-Organization of Brain and Behavior (MIT Press, Cambridge 1995)

P. Morasso, V. Sanguineti, G. Spada: A computational theory of targeting movements based on force fields and topology representing networks, Neurocomputing 15, 411–434 (1997)

J.P. Scholz, G. Schöner: The uncontrolled manifold concept: Identifying control variables for a functional task, Exp. Brain Res. 126, 289–306 (1999)

M.A. Riley, M.T. Turvey: Variability and determinism in motor behavior, J. Mot. Behav. 34, 99–125 (2002)

M.A. Riley, N. Kuznetsov, S. Bonnette: State-, parameter-, and graph-dynamics: Constraints and the distillation of postural control systems, Sci. Mot. 74, 5–18 (2011)

E.C. Goldfield: Emergent Forms: Origins and Early Development of Human Action and Perception (Oxford Univ. Press, Oxford 1995)

J.A.S. Kelso, G.C. de Guzman, C. Reveley, E. Tognoli: Virtual partner interaction (VPI): Exploring novel behaviors via coordination dynamics, PLoS ONE 4, e5749 (2009)

V.C. Ramenzoni, M.A. Riley, K. Shockley, A.A. Baker: Interpersonal and intrapersonal coordinative modes for joint and individual task performance, Human Mov. Sci. 31, 1253–1267 (2012)

M.A. Riley, M.J. Richardson, K. Shockley, V.C. Ramenzoni: Interpersonal synergies, Front. Psychol. 2(38), DOI 10.3389/fpsyg.2011.00038 (2011)

A.G. Feldman, M.F. Levin: The equilibrium-point hypothesis–past, present and future, Adv. Exp. Med. Biol. 629, 699–726 (2009)

M.L. Latash: Motor synergies and the equilibrium-point hypothesis, Mot. Control 14, 294–322 (2010)

C.E. Sherrington: Integrative Actions of the Nervous System (Yale Univ. Press, New Haven 1906)

C.E. Sherrington: Remarks on the reflex mechanism of the step, Brain 33, 1–25 (1910)

C.E. Sherrington: Flexor-reflex of the limb, crossed extension reflex, and reflex stepping and standing (cat and dog), J. Physiol. 40, 28–121 (1910)

S. Grillner, T. Deliagina, O. Ekeberg, A. El Manira, R.H. Hill, A. Lansner, G.N. Orlovsky, P. Wallén: Neural networks that co-ordinate locomotion and body orientation in lamprey, Trends Neurosci. 18, 270–279 (1995)

P.J. Whelan: Control of locomotion in the decerebrate cat, Prog. Neurobiol. 49, 481–515 (1996)

S. Grillner: The motor infrastructure: From ion channels to neuronal networks, Nat. Rev. Neurosci. 4, 673–686 (2003)

S. Grillner: Biological pattern generation: The cellular and computational logic of networks in motion, Neuron 52, 751–766 (2006)

A.J. Ijspeert, A. Crespi, D. Ryczko, J.M. Cabelguen: From swimming to walking with a salamander robot driven by a spinal cord model, Science 315, 1416–1420 (2007)

A.P. Georgopoulos, J.F. Kalaska, R. Caminiti, J.T. Massey: On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex, J. Neurosci. 2, 1527–1537 (1982)

A.P. Georgopoulos, R. Caminiti, J.F. Kalaska, J.T. Massey: Spatial coding of movement: A hypothesis concerning the coding of movement direction by motor cortical populations, Exp. Brain Res. Suppl. 7, 327–336 (1983)

A.P. Georgopoulos, R. Caminiti, J.F. Kalaska: Static spatial effects in motor cortex and area 5: Quantitative relations in a two-dimensional space, Exp. Brain Res. 54, 446–454 (1984)

A.P. Georgopoulos, R.E. Kettner, A.B. Schwartz: Primate motor cortex and free arm movements to visual targets in three-dimensional space. II: Coding of the direction of movement by a neuronal population, J. Neurosci. 8, 2928–2937 (1988)

A.P. Georgopoulos, J. Ashe, N. Smyrnis, M. Taira: The motor cortex and the coding of force, Science 256, 1692–1695 (1992)

J. Ashe, A.P. Georgopoulos: Movement parameters and neural activity in motor cortex and area 5, Cereb. Cortex 4(6), 590–600 (1994)

A.B. Schwartz, R.E. Kettner, A.P. Georgopoulos: Primate motor cortex and free arm movements to visual targets in 3-D space. I. Relations between single-cell discharge and direction of movement, J. Neurosci. 8, 2913–2927 (1988)

D. Bullock, S. Grossberg: Neural dynamics of planned arm movements: emergent invariants and speed–accuracy properties during trajectory formation, Psychol. Rev. 95, 49–90 (1988)

D. Bullock, S. Grossberg, F.H. Guenther: A self-organizing neural model of motor equivalent reaching and tool use by a multijoint arm, J. Cogn. Neurosci. 5, 408–435 (1993)

D. Bullock, P. Cisek, S. Grossberg: Cortical networks for control of voluntary arm movements under variable force conditions, Cereb. Cortex 8, 48–62 (1998)

S.H. Scott, J.F. Kalaska: Changes in motor cortex activity during reaching movements with similar hand paths but different arm postures, J. Neurophysiol. 73, 2563–2567 (1995)

S.H. Scott, J.F. Kalaska: Reaching movements with similar hand paths but different arm orientations. I. Activity of individual cells in motor cortex, J. Neurophysiol. 77, 826–852 (1997)

D.W. Moran, A.B. Schwartz: Motor cortical representation of speed and direction during reaching, J. Neurophysiol. 82, 2676–2692 (1999)

R. Shadmehr, S.P. Wise: The Computational Neurobiology of Reaching and Pointing: A Foundation for Motor Learning (MIT Press, Cambridge 2005)

A. d'Avella, A. Portone, L. Fernandez, F. Lacquaniti: Control of fast-reaching movements by muscle synergy combinations, J. Neurosci. 26, 7791–7810 (2006)

E. Bizzi, V.C. Cheung, A. d'Avella, P. Saltiel, M. Tresch: Combining modules for movement, Brain Res. Rev. 57, 125–133 (2008)

S. Muceli, A.T. Boye, A. d'Avella, D. Farina: Identifying representative synergy matrices for describing muscular activation patterns during multidirectional reaching in the horizontal plane, J. Neurophysiol. 103, 1532–1542 (2010)

S.F. Giszter, F.A. Mussa-Ivaldi, E. Bizzi: Convergent force fields organized in the frog's spinal cord, J. Neurosci. 13, 467–491 (1993)

F.A. Mussa-Ivaldi, S.F. Giszter: Vector field approximation: A computational paradigm for motor control and learning, Biol. Cybern. 67, 491–500 (1992)

M.C. Tresch, P. Saltiel, E. Bizzi: The construction of movement by the spinal cord, Nat. Neurosci. 2, 162–167 (1999)

W.J. Kargo, S.F. Giszter: Rapid correction of aimed movements by summation of force-field primitives, J. Neurosci. 20, 409–426 (2000)

A. d'Avella, P. Saltiel, E. Bizzi: Combinations of muscle synergies in the construction of a natural motor behavior, Nat. Neurosci. 6, 300–308 (2003)

A. d'Avella, E. Bizzi: Shared and specific muscle synergies in natural motor behaviors, Proc. Natl. Acad. Sci. USA 102, 3076–3081 (2005)

L.H. Ting, J.M. Macpherson: A limited set of muscle synergies for force control during a postural task, J. Neurophysiol. 93, 609–613 (2005)

G. Torres-Oviedo, J.M. Macpherson, L.H. Ting: Muscle synergy organization is robust across a variety of postural perturbations, J. Neurophysiol. 96, 1530–1546 (2006)

L.E. Sergio, J.F. Kalaska: Systematic changes in motor cortex cell activity with arm posture during directional isometric force generation, J. Neurophysiol. 89, 212–228 (2003)

R. Ajemian, A. Green, D. Bullock, L. Sergio, J. Kalaska, S. Grossberg: Assessing the function of motor cortex: Single-neuron models of how neural response is modulated by limb biomechanics, Neuron 58, 414–428 (2008)

B. Cesqui, A. d'Avella, A. Portone, F. Lacquaniti: Catching a ball at the right time and place: Individual factors matter, PLoS ONE 7, e31770 (2012)

P. Morasso, F.A. Mussa-Ivaldi: Trajectory formation and handwriting: A computational model, Biol. Cybern. 45, 131–142 (1982)

A.B. Schwartz: Motor cortical activity during drawing movements: Single unit activity during sinusoid tracing, J. Neurophysiol. 68, 528–541 (1992)

A.B. Schwartz: Motor cortical activity during drawing movements: Population representation during sinusoid tracing, J. Neurophysiol. 70, 28–36 (1993)

A.B. Schwartz: Direct cortical representation of drawing, Science 265, 540–542 (1994)

D.W. Moran, A.B. Schwartz: Motor cortical activity during drawing movements: Population representation during spiral tracing, J. Neurophysiol. 82, 2693–2704 (1999)

R.W. Paine, S. Grossberg, A.W.A. Van Gemmert: A quantitative evaluation of the AVITEWRITE model of handwriting learning, Human Mov. Sci. 23, 837–860 (2004)

G. Torres-Oviedo, L.H. Ting: Muscle synergies characterizing human postural responses, J. Neurophysiol. 98, 2144–2156 (2007)

L.H. Ting, J.L. McKay: Neuromechanics of muscle synergies for posture and movement, Curr. Opin. Neurobiol. 17, 622–628 (2007)

N. Bernstein: The Coordination and Regulation of Movements (Pergamon, Oxford 1967)

P.D. Neilson, M.D. Neilson: On theory of motor synergies, Human Mov. Sci. 29, 655–683 (2010)

W. Penfield, E. Boldrey: Somatic motor and sensory representation in the motor cortex of man as studied by electrical stimulation, Brain 60, 389–443 (1937)

T.D. Sanger: Theoretical considerations for the analysis of population coding in motor cortex, Neural Comput. 6, 29–37 (1994)

J.K. Chapin, R.A. Markowitz, K.A. Moxon, M.A.L. Nicolelis: Direct real-time control of a robot arm using signals derived from neuronal population recordings in motor cortex, Nat. Neurosci. 2, 664–670 (1999)

M.A. Lebedev, M.A.L. Nicolelis: Brain-machine interfaces: Past, present, and future, Trends Neurosci. 29, 536–546 (2006)

E. Salinas, L. Abbott: Transfer of coded information from sensory to motor networks, J. Neurosci. 15, 6461–6474 (1995)

E. Salinas, L. Abbott: A model of multiplicative neural responses in parietal cortex, Proc. Natl. Acad. Sci. USA 93, 11956–11961 (1996)

A. Pouget, T. Sejnowski: A neural model of the cortical representation of egocentric distance, Cereb. Cortex 4, 314–329 (1994)

A. Pouget, T. Sejnowski: Spatial transformations in the parietal cortex using basis functions, J. Cogn. Neurosci. 9, 222–237 (1997)

A. Pouget, L.H. Snyder: Computational approaches to sensorimotor transformations, Nat. Neurosci. Supp. 3, 1192–1198 (2000)

A. Pouget, P. Dayan, R.S. Zemel: Information processing with population codes, Nat. Rev. Neurosci. 1, 125–132 (2000)

A. Pouget, P. Dayan, R.S. Zemel: Inference and computation with population codes, Annu. Rev. Neurosci. 26, 381–410 (2003)

W.J. Ma, J.M. Beck, P.E. Latham, A. Pouget: Bayesian inference with probabilistic population codes, Nat. Neurosci. 9, 1432–1438 (2006)

J.M. Beck, W.J. Ma, R. Kiani, T. Hanks, A.K. Churchland, J. Roitman, M.N. Shadlen, P.E. Latham, A. Pouget: Probabilistic population codes for Bayesian decision making, Neuron 60, 1142–1152 (2008)

R. Ajemian, D. Bullock, S. Grossberg: Kinematic coordinates in which motor cortical cells encode movement direction, J. Neurophysiol. 84, 2191–2203 (2000)

R. Ajemian, D. Bullock, S. Grossberg: A model of movement coordinates in the motor cortex: Posture-dependent changes in the gain and direction of single cell tuning curves, Cereb. Cortex 11, 1124–1135 (2001)

B.B. Averbeck, M.V. Chafee, D.A. Crowe, A.P. Georgopoulos: Parallel processing of serial movements in prefrontal cortex, PNAS 99, 13172–13177 (2002)

R. Caminiti, P.B. Johnson, A. Urbano: Making arm movements within different parts of space: Dynamic aspects in the primate motor cortex, J. Neurosci. 10, 2039–2058 (1990)

K.M. Graham, K.D. Moore, D.W. Cabel, P.L. Gribble, P. Cisek, S.H. Scott: Kinematics and kinetics of multijoint reaching in nonhuman primates, J. Neurophysiol. 89, 2667–2677 (2003)

A. Shah, A.H. Fagg, A.G. Barto: Cortical involvement in the recruitment of wrist muscles, J. Neurophysiol. 91, 2445–2456 (2004)

M.S.A. Graziano, T. Aflalo, D.F. Cooke: Arm movements evoked by electrical stimulation in the motor cortex of monkeys, J. Neurophysiol. 94, 4209–4223 (2005)

M.S.A. Graziano: The organization of behavioral repertoire in motor cortex, Annu. Rev. Neurosci. 29, 105–134 (2006)

M.S.A. Graziano: The Intelligent Movement Machine (Oxford Univ. Press, Oxford 2008)

T.N. Aflalo, M.S.A. Graziano: Possible origins of the complex topographic organization of motor cortex: Reduction of a multidimensional space onto a two-dimensional array, J. Neurosci. 26, 6288–6297 (2006)

K. Shima, J. Tanji: Both supplementary and presupplementary motor areas are crucial for the temporal organization of multiple movements, J. Neurophysiol. 80, 3247–3260 (1998)

J.-W. Sohn, D. Lee: Order-dependent modulation of directional signals in the supplementary and presupplementary motor areas, J. Neurosci. 27, 13655–13666 (2007)

H. Mushiake, M. Inase, J. Tanji: Neuronal activity in the primate premotor, supplementary, and precentral motor cortex during visually guided and internally determined sequential movements, J. Neurophysiol. 66, 705–718 (1991)

H. Mushiake, P.L. Strick: Pallidal neuron activity during sequential arm movements, J. Neurophysiol. 74, 2754–2758 (1995)

H. Mushiake, P.L. Strick: Preferential activity of dentate neurons during limb movements guided by vision, J. Neurophysiol. 70, 2660–2664 (1993)

D. Baldauf, H. Cui, R.A. Andersen: The posterior parietal cortex encodes in parallel both goals for double-reach sequences, J. Neurosci. 28, 10081–10089 (2008)

T. Flash, B. Hochner: Motor primitives in vertebrates and invertebrates, Curr. Opin. Neurobiol. 15, 660–666 (2005)

J.A.S. Kelso: Synergies: Atoms of brain and behavior. In: Progress in Motor Control, ed. by D. Sternad (Springer, Berlin, Heidelberg 2009) pp. 83–91

F.A. Mussa-Ivaldi: Do neurons in the motor cortex encode movement direction? An alternate hypothesis, Neurosci. Lett. 91, 106–111 (1988)

F.A. Mussa-Ivaldi: From basis functions to basis fields: Vector field approximation from sparse data, Biol. Cybern. 67, 479–489 (1992)

A. d'Avella, D.K. Pai: Modularity for sensorimotor control: Evidence and a new prediction, J. Mot. Behav. 42, 361–369 (2010)

A. d'Avella, L. Fernandez, A. Portone, F. Lacquaniti: Modulation of phasic and tonic muscle synergies with reaching direction and speed, J. Neurophysiol. 100, 1433–1454 (2008)

G. Torres-Oviedo, L.H. Ting: Subject-specific muscle synergies in human balance control are consistent across different biomechanical contexts, J. Neurophysiol. 103, 3084–3098 (2010)

C.B. Hart: A neural basis for motor primitives in the spinal cord, J. Neurosci. 30, 1322–1336 (2010)

T. Drew, J. Kalaska, N. Krouchev: Muscle synergies during locomotion in the cat: A model for motor cortex control, J. Physiol. 586(5), 1239–1245 (2008)

P.D. Neilson, M.D. Neilson: Motor maps and synergies, Human Mov. Sci. 24, 774–797 (2005)

J.J. Kutch, A.D. Kuo, A.M. Bloch, W.Z. Rymer: Endpoint force fluctuations reveal flexible rather than synergistic patterns of muscle cooperation, J. Neurophysiol. 100, 2455–2471 (2008)

M.C. Tresch, A. Jarc: The case for and against muscle synergies, Curr. Opin. Neurobiol. 19, 601–607 (2009)

A. Ijspeert, J. Nakanishi, S. Schaal: Learning rhythmic movements by demonstration using nonlinear oscillators, IEEE Int. Conf. Intell. Rob. Syst. (IROS 2002), Lausanne (2002) pp. 958–963

A. Ijspeert, J. Nakanishi, S. Schaal: Movement imitation with nonlinear dynamical systems in humanoid robots, Int. Conf. Robotics Autom. (ICRA 2002), Washington (2002) pp. 1398–1403

A. Ijspeert, J. Nakanishi, S. Schaal: Trajectory formation for imitation with nonlinear dynamical systems, IEEE Int. Conf. Intell. Rob. Syst. (IROS 2001), Maui (2001) pp. 752–757

A. Ijspeert, J. Nakanishi, S. Schaal: Learning attractor landscapes for learning motor primitives. In: Advances in Neural Information Processing Systems 15, ed. by S. Becker, S. Thrun, K. Obermayer (MIT Press, Cambridge 2003) pp. 1547–1554

S. Schaal, J. Peters, J. Nakanishi, A. Ijspeert: Control, planning, learning, and imitation with dynamic movement primitives, Proc. Workshop Bilater. Paradig. Humans Humanoids. IEEE Int. Conf. Intell. Rob. Syst. (IROS 2003), Las Vegas (2003)

S. Schaal, P. Mohajerian, A. Ijspeert: Dynamics systems vs. optimal control – a unifying view. In: Computational Neuroscience: Theoretical Insights into Brain Function, Progress in Brain Research, Vol. 165, ed. by P. Cisek, T. Drew, J.F. Kalaska (Elsevier, Amsterdam 2007) pp. 425–445

K.V. Byadarhaly, M. Perdoor, A.A. Minai: A neural model of motor synergies, Proc. Int. Conf. Neural Netw., San Jose (2011) pp. 2961–2968

K.V. Byadarhaly, M.C. Perdoor, A.A. Minai: A modular neural model of motor synergies, Neural Netw. 32, 96–108 (2012)

G.E. Alexander, M.R. DeLong, P.L. Strick: Parallel organization of functionally segregated circuits linking basal ganglia and cortex, Annu. Rev. Neurosci. 9, 357–381 (1986)

A.W. Flaherty, A.M. Graybiel: Input–output organization of the sensorimotor striatum in the squirrel monkey, J. Neurosci. 14, 599–610 (1994)

S. Grillner, J. Hellgren, A. Ménard, K. Saitoh, M.A. Wikström: Mechanisms for selection of basic motor programs – roles for the striatum and pallidum, Trends Neurosci. 28, 364–370 (2005)

W. Schultz, P. Dayan, P.R. Montague: A neural substrate of prediction and reward, Science 275, 1593–1599 (1997)

W. Schultz, A. Dickinson: Neuronal coding of prediction errors, Annu. Rev. Neurosci. 23, 473–500 (2000)

W. Schultz: Multiple reward signals in the brain, Nat. Rev. Neurosci. 1, 199–207 (2000)

P.R. Montague, S.E. Hyman, J.D. Cohen: Computational roles for dopamine in behavioural control, Nature 431, 760–767 (2004)

D.M. Wolpert, M. Kawato: Multiple paired forward and inverse models for motor control, Neural Netw. 11, 1317–1329 (1998)

M. Kawato: Internal models for motor control and trajectory planning, Curr. Opin. Neurobiol. 9, 718–727 (1999)

D.M. Wolpert, Z. Ghahramani: Computational principles of movement neuroscience, Nat. Neurosci. Supp. 3, 1212–1217 (2000)

M. Kawato, K. Furukawa, R. Suzuki: A hierarchical neural network model for control and learning of voluntary movement, Biol. Cybern. 57, 169–185 (1987)

R. Shadmehr, F.A. Mussa-Ivaldi: Adaptive representation of dynamics during learning of a motor task, J. Neurosci. 14, 3208–3224 (1994)

D.M. Wolpert, Z. Ghahramani, M.I. Jordan: An internal model for sensorimotor integration, Science 269, 1880–1882 (1995)

A. Karniel, G.F. Inbar: A model for learning human reaching movements, Biol. Cybern. 77, 173–183 (1997)

A. Karniel, G.F. Inbar: Human motor control: Learning to control a time-varying, nonlinear, many-to-one system, IEEE Trans. Syst. Man Cybern. Part C 30, 1–11 (2000)

Y. Burnod, P. Baraduc, A. Battaglia-Mayer, E. Guigon, E. Koechlin, S. Ferraina, F. Lacquaniti, R. Caminiti: Parieto-frontal coding of reaching: An integrated framework, Exp. Brain Res. 129, 325–346 (1999)

M. Haruno, D.M. Wolpert, M. Kawato: MOSAIC model for sensorimotor learning and control, Neural Comput. 13, 2201–2220 (2001)

A.G. Barto, A.H. Fagg, N. Sitkoff, J.C. Houk: A cerebellar model of timing and prediction in the control of reaching, Neural Comput. 11, 565–594 (1999)

H. Ritter, T. Martinetz, K. Schulten: Topology-conserving maps for learning visuo-motor-coordination, Neural Netw. 2, 159–168 (1989)

T. Martinetz, H. Ritter, K. Schulten: Three-dimensional neural net for learning visuo-motor coordination of a robot arm, IEEE Trans. Neural Netw. 1, 131–136 (1990)

T. Kohonen: Self-organized formation of topologically correct feature maps, Biol. Cybern. 43, 59–69 (1982)

P. Baraduc, E. Guigon, Y. Burnod: Recoding arm position to learn visuomotor transformations, Cereb. Cortex 11, 906–917 (2001)

M.V. Butz, O. Herbort, J. Hoffmann: Exploiting redundancy for flexible behavior: Unsupervised learning in a modular sensorimotor control architecture, Psychol. Rev. 114, 1015–1046 (2007)

A.F. Morse, J. de Greeff, T. Belpaeme, A. Cangelosi: Epigenetic Robotics Architecture (ERA), IEEE Trans. Auton. Ment. Develop. 2, 325–339 (2010)

T. Matsubara, S.-H. Hyon, J. Morimoto: Learning parametric dynamic movement primitives from multiple demonstrations, Neural Netw. 24, 493–500 (2011)

K.V. Byadarhaly, A.A. Minai: A Hierarchical Model of Synergistic Motor Control, Proc. Int. Joint Conf. Neural Netw., Dallas (2013)

T. Flash, N. Hogan: The coordination of arm movements: An experimentally confirmed mathematical model, J. Neurosci. 5, 1688–1703 (1985)

Y. Uno, M. Kawato, R. Suzuki: Formation and control of optimal trajectories in human multijoint arm movements: Minimum torque-change model, Biol. Cybern. 61, 89–101 (1989)

S. Ben-Itzhak, A. Karniel: Minimum acceleration criterion with constraints implies bang-bang control as an underlying principle for optimal trajectories of arm reaching movements, Neural Comput. 20, 779–812 (2008)

C.M. Harris, D.M. Wolpert: Signal-dependent noise determines motor planning, Nature 394, 780–784 (1998)

E. Todorov, M.I. Jordan: Optimal feedback control as a theory of motor coordination, Nat. Neurosci. 5, 1226–1235 (2002)

E. Todorov: Optimality principles in sensorimotor control, Nat. Neurosci. 7, 907–915 (2004)

F.J. Valero-Cuevas, M. Venkadesan, E. Todorov: Structured variability of muscle activations supports the minimal intervention principle of motor control, J. Neurophysiol. 102, 59–68 (2009)

E. Trainin, R. Meir, A. Karniel: Explaining patterns of neural activity in the primary motor cortex using spinal cord and limb biomechanics models, J. Neurophysiol. 97, 3736–3750 (2007)

A. Biess, D.G. Liebermann, T. Flash: A computational model for redundant arm three-dimensional pointing movements: Integration of independent spatial and temporal motor plans simplifies movement dynamics, J. Neurosci. 27, 13045–13064 (2007)

J.J. Gibson: The Theory of Affordances. In: Perceiving, Acting, and Knowing: Toward an Ecological Psychology, ed. by R. Shaw, J. Bransford (Lawrence Erlbaum, Hillsdale 1977) pp. 67–82

M.L. Latash, J.P. Scholz, G. Schöner: Toward a new theory of motor synergies, Mot. Control 11, 276–308 (2007)

S. Dehaene, M. Kerszberg, J.-P. Changeux: A neuronal model of a global workspace in effortful cognitive tasks, Proc. Natl. Acad. Sci. USA 95, 14529–14534 (1998)

M. Botvinick, D.C. Plaut: Doing without schema hierarchies: A recurrent connectionist approach to normal and impaired routine sequential action, Psychol. Rev. 111, 395–429 (2004)

F.A. Middleton, P.L. Strick: Basal ganglia output and cognition: Evidence from anatomical, behavioral, and clinical studies, Brain Cogn. 42, 183–200 (2000)

F.A. Middleton, P.L. Strick: Basal ganglia 'projections' to the prefrontal cortex of the primate, Cereb. Cortex 12, 926–945 (2002)

R.S. Sutton, A.G. Barto: Reinforcement Learning (MIT Press, Cambridge 1998)

R.S. Sutton: Learning to predict by the methods of temporal differences, Mach. Learn. 3, 9–44 (1988)

N.D. Daw, Y. Niv, P. Dayan: Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control, Nat. Neurosci. 8, 1704–1711 (2005)

M.J. Frank, R.C. O'Reilly: A mechanistic account of striatal dopamine function in human cognition: Psychopharmacological studies with cabergoline and haloperidol, Behav. Neurosci. 120, 497–517 (2006)

A.J. Gruber, P. Dayan, B.S. Gutkin, S.A. Solla: Dopamine modulation in the basal ganglia locks the gate to working memory, J. Comput. Neurosci. 20, 153–166 (2006)

A. Baddeley: Human Memory (Lawrence Erlbaum, Hove, UK 1990)

A. Baddeley: The episodic buffer: A new component of working memory?, Trends Cogn. Sci. 4, 417–423 (2000)

P.S. Goldman-Rakic: Cellular basis of working memory, Neuron 14, 477–485 (1995)

P.S. Goldman-Rakic, A.R. Cools, K. Srivastava: The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive, Philos. Trans.: Biol. Sci. 351, 1445–1453 (1996)

J. Duncan: An adaptive coding model of neural function in prefrontal cortex, Nat. Rev. Neurosci. 2, 820–829 (2001)

R. Ratcliff, G. McKoon: The diffusion decision model: Theory and data for two-choice decision tasks, Neural Comput. 20, 873–922 (2008)

G. Miller: The magical number seven, plus or minus two: Some limits of our capacity for processing information, Psychol. Rev. 63, 81–97 (1956)

J.E. Lisman, M.A.P. Idiart: Storage of $7\pm 2$ short-term memories in oscillatory subcycles, Science 267, 1512–1515 (1995)

K. Ericsson, W. Kintsch: Long-term working memory, Psychol. Rev. 102, 211–245 (1995)

C.J. Wilson: The contribution of cortical neurons to the firing pattern of striatal spiny neurons. In: Models of Information Processing in the Basal Ganglia, ed. by J.C. Houk, J.L. Davis, D.G. Beiser (MIT Press, Cambridge 1995) pp. 29–50

A.M. Graybiel, T. Aosaki, A.W. Flaherty, M. Kimura: The basal ganglia and adaptive motor control, Science 265, 1826–1831 (1994)

A.M. Graybiel: The basal ganglia and chunking of action repertoires, Neurobiol. Learn. Mem. 70, 119–136 (1998)

M.D. Humphries, K. Gurney: The role of intra-thalamic and thalamocortical circuits in action selection, Network 13, 131–156 (2002)

R.C. O'Reilly, Y. Munakata: Computational explorations in cognitive neuroscience: Understanding the mind by simulating the brain (MIT Press, Cambridge 2000)

M.J. Frank, B. Loughry, R.C. O'Reilly: Interactions between frontal cortex and basal ganglia in working memory: A computational model, Cogn. Affect. Behav. Neurosci. 1, 137–160 (2001)

T.E. Hazy, M.J. Frank, R.C. O'Reilly: Banishing the homunculus: Making working memory work, Neuroscience 139, 105–118 (2006)

R.C. O'Reilly, M.J. Frank: Making working memory work: A computational model of learning in the prefrontal cortex and basal ganglia, Neural Comput. 18, 283–328 (2006)

M.J. Frank, E.D. Claus: Anatomy of a decision: Striato-orbitofrontal interactions in reinforcement learning, decision making and reversal, Psychol. Rev. 113, 300–326 (2006)

R.C. O'Reilly: Biologically based computational models of high level cognition, Science 314, 91–94 (2006)

R.C. O'Reilly, S.A. Herd, W.M. Pauli: Computational models of cognitive control, Curr. Opin. Neurobiol. 20, 257–261 (2010)

M.E. Hasselmo: A model of prefrontal cortical mechanisms for goal-directed behavior, J. Cogn. Neurosci. 17, 1–14 (2005)

M.E. Hasselmo, C.E. Stern: Mechanisms underlying working memory for novel information, Trends Cogn. Sci. 10, 487–493 (2006)

J.R. Reynolds, R.C. O'Reilly: Developing PFC representations using reinforcement learning, Cognition 113, 281–292 (2009)

T.S. Braver, D.M. Barch, J.D. Cohen: Cognition and control in schizophrenia: A computational model of dopamine and prefrontal function, Biol. Psychiatry 46, 312–328 (1999)

S. Monsell: Task switching, Trends Cogn. Sci. 7, 134–140 (2003)

T.S. Braver, J.R. Reynolds, D.I. Donaldson: Neural mechanisms of transient and sustained cognitive control during task switching, Neuron 39, 713–726 (2003)

H. Imamizu, T. Kuroda, T. Yoshioka, M. Kawato: Functional magnetic resonance imaging examination of two modular architectures for switching multiple internal models, J. Neurosci. 24, 1173–1181 (2004)

R.P. Cooper, T. Shallice: Contention scheduling and the control of routine activities, Cogn. Neuropsychol. 17, 297–338 (2000)

R.P. Cooper, T. Shallice: Hierarchical schemas and goals in the control of sequential behavior, Psychol. Rev. 113, 887–916 (2006)

P. Dayan: Images, frames, and connectionist hierarchies, Neural Comput. 18, 2293–2319 (2006)

P. Dayan: Simple substrates for complex cognition, Front. Neurosci. 2, 255–263 (2008)

S. Hélie, R. Sun: Incubation, insight, and creative problem solving: A unified theory and a connectionist model, Psychol. Rev. 117, 994–1024 (2010)

S. Grossberg, L.R. Pearson: Laminar cortical dynamics of cognitive and motor working memory, sequence learning and performance: Toward a unified theory of how the cerebral cortex works, Psychol. Rev. 115, 677–732 (2008)

B.J. Rhodes, D. Bullock, W.B. Verwey, B.B. Averbeck, M.P.A. Page: Learning and production of movement sequences: Behavioral, neurophysiological, and modeling perspectives, Human Mov. Sci. 23, 683–730 (2004)

B. Ans, Y. Coiton, J.-C. Gilhodes, J.-L. Velay: A neural network model for temporal sequence learning and motor programming, Neural Netw. 7, 1461–1476 (1994)

R.S. Bapi, D.S. Levine: Modeling the role of frontal lobes in sequential task performance. I: Basic structure and primacy effects, Neural Netw. 7, 1167–1180 (1994)

J.G. Taylor, N.R. Taylor: Analysis of recurrent cortico-basal ganglia-thalamic loops for working memory, Biol. Cybern. 82, 415–432 (2000)

R.P. Cooper: Mechanisms for the generation and regulation of sequential behaviour, Philos. Psychol. 16, 389–416 (2003)

R. Nishimoto, J. Tani: Learning to generate combinatorial action sequences utilizing the initial sensitivity of deterministic dynamical systems, Neural Netw. 17, 925–933 (2004)

P.F. Dominey: From sensorimotor sequence to grammatical construction: evidence from simulation and neurophysiology, Adapt. Behav. 13, 347–361 (2005)

E. Salinas: Rank-order-selective neurons form a temporal basis set for the generation of motor sequences, J. Neurosci. 29, 4369–4380 (2009)

S. Vasa, T. Ma, K.V. Byadarhaly, M. Perdoor, A.A. Minai: A Spiking Neural Model for the Spatial Coding of Cognitive Response Sequences, Proc. IEEE Int. Conf. Develop. Learn., Ann Arbor (2010) pp. 140–146

F. Chersi, P.F. Ferrari, L. Fogassi: Neuronal chains for actions in the parietal lobe: A computational model, PLoS ONE 6, e27652 (2011)

M.R. Silver, S. Grossberg, D. Bullock, M.H. Histed, E.K. Miller: A neural model of sequential movement planning and control of eye movements: Item-order-rank working memory and saccade selection by the supplementary eye fields, Neural Netw. 26, 29–58 (2011)

B.J. Baars: A Cognitive Theory of Consciousness (Cambridge Univ. Press, Cambridge 1988)

B.J. Baars, S. Franklin: How conscious experience and working memory interact, Trends Cogn. Sci. 7, 166–172 (2003)

S. Franklin, F.G.J. Patterson: The LIDA Architecture: Adding New Modes of Learning to an Intelligent, Autonomous, Software Agent, IDPT-2006 Proc. (Integrated Design and Process Technology) (Society for Design and Process Science, San Diego 2006)

S. Dehaene, J.-P. Changeux: The Wisconsin card sorting test: Theoretical analysis and modeling in a neuronal network, Cereb. Cortex 1, 62–79 (1991)

S. Dehaene, L. Naccache: Towards a cognitive neuroscience of consciousness: Basic evidence and a workspace framework, Cognition 79, 1–37 (2001)

L.R. Iyer, S. Doboli, A.A. Minai, V.R. Brown, D.S. Levine, P.B. Paulus: Neural dynamics of idea generation and the effects of priming, Neural Netw. 22, 674–686 (2009)

L.R. Iyer, V. Venkatesan, A.A. Minai: Neurocognitive spotlights: Configuring domains for ideation, Proc. Int. Conf. Neural Netw. (2011) pp. 2961–2968

S. Doboli, A.A. Minai, P.J. Best: Latent attractors: A model for context-dependent place representations in the hippocampus, Neural Comput. 12, 1003–1037 (2000)

R. Ratcliff: A theory of memory retrieval, Psychol. Rev. 85, 59–108 (1978)

F.G. Ashby: A biased random-walk model for two choice reaction times, J. Math. Psychol. 27, 277–297 (1983)

J.R. Busemeyer, J.T. Townsend: Decision field theory, Psychol. Rev. 100, 432–459 (1993)

J.L. McClelland, D.E. Rumelhart: An interactive activation model of context effects in letter perception. Part 1: An account of basic findings, Psychol. Rev. 88, 375–407 (1981)

D.E. Rumelhart, J.L. McClelland: An interactive activation model of context effects in letter perception: Part 2. The contextual enhancement effect and some tests and extensions of the model, Psychol. Rev. 89, 60–94 (1982)

M. Usher, J.L. McClelland: The time course of perceptual choice: The leaky, competing accumulator model, Psychol. Rev. 108, 550–592 (2001)

J.I. Gold, M.N. Shadlen: The neural basis of decision making, Annu. Rev. Neurosci. 30, 535–574 (2007)

M. Khamassi, L. Lachèze, B. Girard, A. Berthoz, A. Guillot: Actor–critic models of reinforcement learning in the basal ganglia: From natural to artificial rats, Adapt. Behav. 13, 131–148 (2005)

N.D. Daw, K. Doya: The computational neurobiology of learning and reward, Curr. Opin. Neurobiol. 16, 199–204 (2006)

P. Dayan, Y. Niv: Reinforcement learning: The Good, The Bad and The Ugly, Curr. Opin. Neurobiol. 18, 185–196 (2008)

K. Doya: Modulators of decision making, Nat. Neurosci. 11, 410–416 (2008)

E.M. Izhikevich: Solving the distal reward problem through linkage of STDP and dopamine signaling, Cereb. Cortex 17, 2443–2452 (2007)

R. Urbanczik, W. Senn: Reinforcement learning in populations of spiking neurons, Nat. Neurosci. 12, 250–252 (2009)

D. Durstewitz, J.K. Seamans, T.J. Sejnowski: Dopamine mediated stabilization of delay-period activity in a network model of prefrontal cortex, J. Neurophysiol. 83, 1733–1750 (2000)

D. Durstewitz, J.K. Seamans: The computational role of dopamine D1 receptors in working memory, Neural Netw. 15, 561–572 (2002)

J.J. Hopfield: Neural networks and physical systems with emergent collective computational abilities, Proc. Natl. Acad. Sci. USA 79, 2554–2558 (1982)

D.J. Amit, N. Brunel: Learning internal representations in an attractor neural network with analogue neurons, Netw. Comput. Neural Syst. 6, 359–388 (1995)

D.J. Amit, N. Brunel: Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex, Cereb. Cortex 7, 237–252 (1997)