Abstract

We describe a distributed system for positioning independent acoustic sources, based on the energy ratios of the frequency components (f-components) received at the sensors, using clustering and optimization methods. Simulations under realistic conditions, with the Doppler effect and Rayleigh fading, illustrate how the system solves the problem of positioning multiple sources. The results show that the system achieves high precision without requiring a high communication cost for large data sets.


1 Introduction

Previous works have estimated the relative angles between the sound sources and the receiving sensor array, known as the angles of arrival (AOA). Using AOA-based schemes, most acoustic methods so far can track only a single object; only a few address multi-target tracking [1, 2]. For multi-target tracking with a sensor array, independent component analysis (ICA) is a natural choice, since ICA is a powerful method for separating and recovering the original signals, provided that the sources are statistically independent. In practice, an acoustic signal reaches different sensors with different propagation delays, producing convolutive mixtures. Several methods have been developed to handle this problem in the time domain and in the frequency domain. However, they either carry too heavy a computational load or are too complicated, especially when finite impulse response (FIR) filter algebra is used for ICA in the complex field. In addition, existing techniques usually require centralized algorithms, which makes the communication load too large for wireless sensor networks (WSNs).

2 Proposed Method for Multi-Object Tracking

Consider M objects emitting continuous, zero-mean acoustic signals and N sensors at known locations. The signals are denoted by \( s_{j}(t), j = 1, \ldots, M \), and the data received at sensor i are denoted by \( x_{i}(t) \) and modeled as in [2]

where \( a_{ij} > 0 \) is the amplitude gain of the signal from source j measured at sensor i, and \( \tau_{ij}(t) \) is the propagation time of this signal. When the sources move, these parameters change over time and cause different shifts in different f-components at the receivers. This phenomenon is called the Doppler effect [3]:

where \( f_{j} \) is some f-component of source j, \( f_{ij} \) is the shifted version of \( f_{j} \) at sensor i, and \( \theta_{ij}(t) \) is the angle between \( \overrightarrow{ij} \) and the velocity vector \( \overrightarrow{v_{j}} \) of source j.
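The Doppler relation can be illustrated numerically. The sketch below assumes the standard moving-source form \( f_{ij} = v_{c} f_{j} / (v_{c} - v_{j} \cos\theta_{ij}) \), with \( v_{c} \) the speed of sound; this form is consistent with the shifted-frequency interval used later for frequency segmentation. The sign convention (cos θ = +1 when the source moves straight toward the sensor), the value \( v_{c} = 343 \) m/s, and the name `doppler_shift` are assumptions of this sketch.

```python
def doppler_shift(f_source, v_source, cos_theta, v_sound=343.0):
    """Frequency observed at a fixed sensor for a source moving at v_source.

    Assumed convention: cos_theta = +1 when the source moves straight toward
    the sensor (largest upward shift) and -1 when it moves straight away.
    v_sound = 343 m/s (air at about 20 degrees C) is an assumed value.
    """
    if not 0 <= v_source < v_sound:
        raise ValueError("source speed must lie in [0, v_sound)")
    return v_sound * f_source / (v_sound - v_source * cos_theta)


# A 1 kHz f-component from a source moving at 10 m/s:
print(doppler_shift(1000.0, 10.0, 1.0))   # approaching: about 1030.03 Hz
print(doppler_shift(1000.0, 10.0, -1.0))  # receding: about 971.67 Hz
```

The two extreme cases (cos θ = ±1) give the edges of the interval within which all shifted versions of one f-component must fall.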

The problem is then: given only the received data and the knowledge that the delayed versions of the sources are statistically independent of one another, estimate the source positions.
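The received data in this problem are delayed, attenuated copies of the sources summed at each sensor. As a rough sketch of that mixing, the code below simplifies the model of [2] by assuming static sources, so \( a_{ij} \) and \( \tau_{ij} \) are constants, and by rounding each delay to a whole number of samples; `mix_at_sensor` is a hypothetical helper, and any noise term is left out.

```python
import numpy as np


def mix_at_sensor(sources, gains, delays):
    """Return x_i[n] = sum_j a_ij * s_j[n - D_ij] for one sensor i.

    sources: array of shape (M, T), one row per source signal s_j[n]
    gains:   length-M amplitude gains a_ij > 0
    delays:  length-M non-negative integer delays D_ij in samples
    Samples before a source's delayed onset are treated as zero.
    """
    sources = np.asarray(sources, dtype=float)
    _, T = sources.shape
    x = np.zeros(T)
    for s, a, d in zip(sources, gains, delays):
        d = int(d)
        x[d:] += a * s[:T - d]  # shift source j by d samples and scale it
    return x


x = mix_at_sensor([[1, 2, 3, 4], [0, 1, 0, 0]], gains=[0.5, 2.0], delays=[1, 0])
print(x.tolist())  # [0.0, 2.5, 1.0, 1.5]
```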

When the short-time Fourier transform (STFT) is applied to the sampled data at sensor i, the time delay \( \tau_{ij} \) affects only the phase spectrum, not the magnitude spectrum (the so-called frequency image). Since the continuous form of the STFT is not suitable for computation and storage, the discrete Fourier transform (DFT) is used instead at the sensors. Note also that when the source speeds are nonzero, the magnitude frequency images of a source computed at different sensors do not have the same form; thus the DFT results for the recorded data are

In the above equation, \( X_{i}(\omega_{k}) \) is the DFT of \( x_{i}(t) \) and k is the discrete frequency index. Meanwhile, \( |S_{ij}(\omega_{k})| \) is the discrete frequency image of the signal emitted by source j and recorded by sensor i. Now consider a particular interval \( (\omega_{a}, \omega_{b}) \) in the frequency domain that contains all shifted versions of some f-component of source z without any interference from the shifted f-components of other sources; the frequency images in this interval are

where \( \omega_{k}^{(m)} \in (\omega_{a}, \omega_{b}) \) and m is the index of the f-component. Although this f-component has different shifted versions, its energy is unchanged, since the magnitude of the signal in the time domain is the same; that is,

Based on (14.4) and (14.5), if an f-component belongs to source z, then all the relative distance relationships are

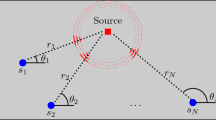

where \( d_{lz} \) and \( d_{iz} \) are the distances from source z to sensor l and to sensor i, respectively; \( \tilde{X}_{i}(\omega_{k}) \) is the result of noise filtering \( X_{i}(\omega_{k}) \); and \( \tilde{X}_{i}(\omega_{k}^{(m)}) \) is the frequency image of \( \tilde{X}_{i}(\omega_{k}) \) on the segment \( (\omega_{a}, \omega_{b}) \) (see Fig. 14.1). In other words, for each f-component m within the frequency interval, a set of constraints is calculated, from which the location of the source having these components can be estimated. Thus, two clustering steps are needed: one groups the shifted frequency components to determine the segment \( (\omega_{a}, \omega_{b}) \), and the other groups the computed f-component positions to calculate the source locations. The advantages of this system are: (a) it is more robust than our previous system even when the sources are fixed; (b) it works well with moving sources and tolerates the co-existence of the Doppler effect and Rayleigh multipath fading; (c) it can be considered a distributed method, since the computation load is shared among the sensors and the communication cost is low; and (d) it is not constrained by the condition that the number of sensors must be greater than the number of sources.
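To make the energy-ratio idea concrete, the sketch below checks two facts used above on a synthetic tone: a time delay leaves the DFT magnitude unchanged, and the band energy of one component at two sensors yields their relative distance. It assumes the amplitude gain falls off as 1/d (free-field spreading), so that \( r_{il} = \sqrt{E_{l}/E_{i}} = d_{i}/d_{l} \), the orientation that matches the \( d_{ij} - r_{ilj} d_{lj} \) terms in (14.8). The distances, delays, and band limits are illustrative values, and `band_energy` is a hypothetical helper.

```python
import numpy as np


def band_energy(x, k_lo, k_hi):
    """Energy in DFT bins k_lo..k_hi (inclusive) of the frame x."""
    X = np.fft.fft(x)
    return float(np.sum(np.abs(X[k_lo:k_hi + 1]) ** 2))


T = 1024                 # frame length in samples
k0 = 50                  # tone placed exactly on bin k0 to avoid leakage
n = np.arange(T)
s = np.cos(2 * np.pi * k0 * n / T)

# A (circular) time delay changes only the phase of the DFT, not its magnitude.
assert np.allclose(np.abs(np.fft.fft(np.roll(s, 7))), np.abs(np.fft.fft(s)))

# Illustrative distances from the source to sensors i and l; gain assumed to be 1/d.
d_i, d_l = 2.0, 5.0
x_i = np.roll(s, 7) / d_i    # delayed by 7 samples at sensor i
x_l = np.roll(s, 19) / d_l   # delayed by 19 samples at sensor l

E_i = band_energy(x_i, 45, 55)
E_l = band_energy(x_l, 45, 55)
r_il = np.sqrt(E_l / E_i)    # estimate of d_i / d_l
print(round(float(r_il), 6))  # 0.4
```

Summing the energy over the whole segment, rather than reading a single bin, is what keeps the ratio valid when the Doppler effect moves the component to different bins at different sensors.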

3 Proposed System Architecture

The architecture of the acoustic tracking system, which is based on the distance-information extraction method described in Section 2, is shown in Fig. 14.1.

In the frequency domain, the Gaussian noise level can be detected and all weak f-components can be forced to zero (see Fig. 14.2). The filtering step keeps only a few dominant f-components, so the data to be transmitted from a sensor to the base computer are reduced significantly. This is one of the key ideas for reducing the communication load so that the method can be applied to WSNs.
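A minimal sketch of this filtering step follows. It assumes the noise floor is estimated as the median DFT magnitude and that a bin is kept when it exceeds a fixed multiple of that floor; both the estimator and the factor of 4 are illustrative assumptions rather than the paper's detector, and `keep_dominant_components` is a hypothetical name. Only the surviving (index, value) pairs would need to be transmitted.

```python
import numpy as np


def keep_dominant_components(X, factor=4.0):
    """Zero out weak DFT bins and return only the dominant ones.

    The noise floor is estimated as the median bin magnitude (an assumption:
    with a few strong tones in white Gaussian noise, most bins hold noise only).
    Returns (indices, values, noise_level).
    """
    X = np.asarray(X)
    mag = np.abs(X)
    noise_level = float(np.median(mag))
    idx = np.flatnonzero(mag > factor * noise_level)
    return idx, X[idx], noise_level


rng = np.random.default_rng(0)
T = 512
n = np.arange(T)
x = np.cos(2 * np.pi * 40 * n / T) + np.cos(2 * np.pi * 100 * n / T)
x += 0.05 * rng.standard_normal(T)           # additive Gaussian noise
idx, vals, noise = keep_dominant_components(np.fft.fft(x))
print(idx)  # expected to include bins 40 and 100 and their mirrors 412 and 472
```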

Computing the DFT over long frames places a high computational load on the sensors. However, as shown in Fig. 14.2, using feedback from the base, a sensor can skip computing the DFT in frequency regions where dominant f-components are unlikely to exist. Therefore, the computational load at the sensors is reduced considerably [4].
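The saving from the base's feedback can be illustrated by evaluating the DFT only at the bins the base asks for. This sketch uses a direct DFT sum per requested bin; the list of bins stands in for the base's feedback and is hypothetical, as is the name `partial_dft`.

```python
import numpy as np


def partial_dft(x, bins):
    """DFT of the frame x evaluated only at the requested bin indices.

    Cost is O(len(x) * len(bins)) instead of producing all len(x) bins.
    """
    x = np.asarray(x, dtype=float)
    n = np.arange(len(x))
    k = np.asarray(bins).reshape(-1, 1)
    kernel = np.exp(-2j * np.pi * k * n / len(x))  # one DFT row per requested bin
    return kernel @ x


rng = np.random.default_rng(1)
frame = rng.standard_normal(256)
bins_of_interest = [3, 50, 100]  # hypothetical feedback from the base
print(np.allclose(partial_dft(frame, bins_of_interest),
                  np.fft.fft(frame)[bins_of_interest]))  # True
```

Direct evaluation costs about T operations per requested bin, so it beats a full FFT (about T log2 T) only when few bins are requested; the Goertzel algorithm is a common alternative for the same purpose.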

The compressed data received at the base are input to the “Frequency Segmentation” stage. This stage identifies the dominant f-components and, for each component index m, the segment containing it. Then the “Relative Distance Information Calculating” block calculates a set of \( r_{il}^{(m)} \) for each component. These sets are then input into the “F-component Positioning” process to estimate the position \( p^{(m)} \) of each dominant f-component. Frequency leakage, setting noise, the Doppler effect, and Rayleigh fading influence the detection result and cause f-components belonging to the same source j to be assigned different positions. Therefore, the final stage, “Source Positioning,” is necessary to cluster those \( p^{(m)} \) and estimate \( p_{j} \) by averaging [5].
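The text specifies clustering followed by averaging for the “Source Positioning” stage but not the clustering rule. As an assumed stand-in, the sketch below groups the f-component positions \( p^{(m)} \) greedily by a distance radius and returns one averaged position per cluster; the radius, the greedy rule, and the name `cluster_and_average` are hypothetical.

```python
import numpy as np


def cluster_and_average(positions, radius):
    """Greedy clustering of f-component positions p^(m) into source estimates.

    Each position joins the first cluster whose running mean lies within
    `radius`; otherwise it starts a new cluster. Each cluster mean is one
    estimated source position p_j.
    """
    centroids, members = [], []
    for p in np.asarray(positions, dtype=float):
        for k, c in enumerate(centroids):
            if np.linalg.norm(p - c) <= radius:
                members[k].append(p)
                centroids[k] = np.mean(members[k], axis=0)  # update the average
                break
        else:
            centroids.append(p.copy())
            members.append([p])
    return np.array(centroids), [len(m) for m in members]


p_m = [(0.1, 0.0), (-0.1, 0.05), (0.0, -0.1), (10.0, 10.1), (9.9, 10.0)]
sources, counts = cluster_and_average(p_m, radius=1.0)
print(counts)   # [3, 2]
print(sources)  # one row per estimated source position
```

A greedy rule depends on the order of the inputs; a more careful implementation might use a standard clustering algorithm, but the averaging idea is the same.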

(1) Frequency Segmentation: This stage identifies every frequency interval that contains all shifted versions of a dominant f-component. The shifted versions of each f-component are grouped by clustering to determine the frequency segment. The Doppler effect acts differently on different f-components: the higher the frequency, the larger the shift. From (14.2), an f-component of source j at \( f_{0} \) has shifted versions within \( \left( \frac{v_{c}}{v_{c} + v_{j}} f_{0},\; \frac{v_{c}}{v_{c} - v_{j}} f_{0} \right) \), where \( v_{c} \) is the speed of sound and \( v_{j} \) is the speed of source j. The width of this interval depends on \( f_{0} \) on the linear frequency scale; however, it is fixed on the logarithmic scale, as shown below:

$$ \Delta f\,(\text{dB}) = \log_{10}\left(\frac{v_{c}}{v_{c} - v_{j}} f_{0}\right) - \log_{10}\left(\frac{v_{c}}{v_{c} + v_{j}} f_{0}\right) = \log_{10}\left(\frac{v_{c} + v_{j}}{v_{c} - v_{j}}\right) $$ (14.7)

As a result, the clustering task should be performed on the \( \log_{10}(\cdot) \) scale of the frequency image under the following criteria: (a) the width of each segment is not larger than \( \Delta f\,(\text{dB}) \) (see (14.7)); (b) the number of nonzero f-components within the grouped interval is greater than 2, so that the number of constraints is at least 3; and (c) the average energy of an f-component received at the sensors must be larger than the detected noise level. A sliding window of width \( \Delta f\,(\text{dB}) \) is then used to detect the frequency segments that satisfy (b) and (c). As a result, the number of sources can be larger than the number of sensors. Moreover, the complete loss of some f-components due to filtering is acceptable, and redundant f-components are hardly taken into account.

(2) F-component Positioning: All constraint ratios \( r_{ilz} \) are computed in the “Relative Distance Information Calculating” process before being fed into the “F-component Positioning” process. Errors in the constraints are unavoidable because of frequency leakage and setting noise, so the position of f-component m is taken as the vector \( p^{(m)} \in \mathbb{R}^{2} \) that best reconciles the constraints. We propose the following objective function for this compromise; the solution for source j is

$$ F_{j} = \sum_{i=1}^{N} \sum_{l=1,\, l \ne i}^{N} \left( d_{ij} - r_{ilj}\, d_{lj} \right)^{2}, \quad 0 < r_{ilj} < \infty $$ (14.8)

$$ p^{(m)} = \arg\min_{p^{(m)}} F_{j} $$ (14.9)

where \( d_{ij} \) and \( d_{lj} \) are the distances from the candidate position to sensors i and l. A simple negative-gradient (steepest descent) method is used to solve this optimization problem.
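The “Frequency Segmentation” stage in (1) can be sketched as a sliding window on the \( \log_{10} \) frequency axis. This sketch assumes the filtered f-components arrive as (frequency, energy) pairs, an assumed maximum source speed \( v_{j} \), and \( v_{c} = 343 \) m/s; it advances past each accepted segment so that segments do not overlap, which is one possible reading of the sliding-window rule. The function names are hypothetical.

```python
import numpy as np


def log_segment_width(v_source, v_sound=343.0):
    """Width of a Doppler segment on the log10 frequency axis, as in (14.7)."""
    return float(np.log10((v_sound + v_source) / (v_sound - v_source)))


def find_segments(freqs, energies, noise_level, width, min_count=3):
    """Sliding-window search for frequency segments on the log10 scale.

    A window starting at a nonzero f-component is accepted when it spans at
    most `width` in log10 frequency (criterion a), holds at least `min_count`
    components (criterion b), and their mean energy exceeds the noise level
    (criterion c). Accepted windows do not overlap.
    """
    order = np.argsort(freqs)
    f = np.asarray(freqs, dtype=float)[order]
    e = np.asarray(energies, dtype=float)[order]
    logf = np.log10(f)
    segments, start = [], 0
    while start < len(f):
        # first index whose log-frequency lies beyond the window
        end = int(np.searchsorted(logf, logf[start] + width, side="right"))
        if end - start >= min_count and e[start:end].mean() > noise_level:
            segments.append((float(f[start]), float(f[end - 1])))
            start = end
        else:
            start += 1
    return segments


width = log_segment_width(10.0)  # assumed maximum source speed of 10 m/s
freqs = [1000, 1010, 1020, 2000, 2030, 2050, 5000]
energies = [5, 5, 5, 3, 3, 3, 10]
print(find_segments(freqs, energies, noise_level=1.0, width=width))
# [(1000.0, 1020.0), (2000.0, 2050.0)]
```

The isolated component at 5000 Hz is not turned into a segment because it fails criterion (b).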

4 Conclusions

The system introduced in this paper can be regarded as a design for future-generation sensor networks, because it requires sensors that can compute the DFT of long data frames. However, high computational power is not strictly necessary: thanks to feedback from the base, a sensor does not need to compute the full DFT and can compute it only in the segments that contain the leading f-components. The system is also useful beyond positioning multiple sources: since most acoustic feature extraction and recognition is performed in the frequency domain, it can output the frequency characteristics of each source for further processing once its position has been estimated.

References

1. Sasaki Y, Kagami S, Mizoguchi H (2009) Multiple sound source mapping for a mobile robot by self-motion triangulation. In: IEEE international conference on intelligent robots and systems, pp 380–385

2. Stanacevic M (2010) Micropower gradient flow acoustic localizer. IEEE Trans Circuits Syst 52:2148–2157

3. Birchfield ST, Gilmor DK (2001) A unifying framework for acoustic localization. In: IEEE international conference, vol 31. IEEE Computer Society, pp 80–87

4. Mahajana A, Walworth M (2001) 3-D position sensing using the differences in the time-of-flights from a wave source to various receivers. IEEE Trans Robotics Autom 17:91–94

5. Valin J-M, Michaud F, Rouat J, Létourneau D (1998) Robust sound source localization using a microphone array on a mobile robot, vol 20. In: Proceedings of ICASSP98, pp 1228–1233


Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Zou, W. (2013). A Distributed System for Independent Acoustic Source Positioning Using Magnitude Ratios. In: Yang, Y., Ma, M. (eds) Proceedings of the 2nd International Conference on Green Communications and Networks 2012 (GCN 2012): Volume 2. Lecture Notes in Electrical Engineering, vol 224. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-35567-7_14


DOI: https://doi.org/10.1007/978-3-642-35567-7_14


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-35566-0

Online ISBN: 978-3-642-35567-7

eBook Packages: Engineering, Engineering (R0)