Abstract

Questions concerning the meaning of probability and its applications in physics are notoriously subtle. In the philosophy of the exact sciences, the conceptual analysis of the foundations of a theory often lags behind the discovery of the mathematical results that form its basis. The theory of probability is no exception. Although Kolmogorov’s axiomatization of the theory [1] is generally considered definitive, the meaning of the notion of probability remains a matter of controversy.Footnote 1 Questions pertain both to gaps between the formalism and the intuitive notions of probability and to the inter-relationships between the intuitive notions. Further, although each of the interpretations of the notion of probability is usually intended to be adequate throughout, independently of context, the various applications of the theory of probability pull in different interpretative directions: some applications, say in decision theory, are amenable to a subjective interpretation of probability as representing an agent’s degree of belief, while others, say in genetics, call upon an objective notion of probability that characterizes certain biological phenomena. In this volume we focus on the role of probability in physics. We have the dual goal and challenge of bringing the analysis of the notion of probability to bear on the meaning of the physical theories that employ it, and of using the prism of physics to study the notion of probability.

We thank Orly Shenker for comments on an earlier draft.

The concept of probability is indispensable in contemporary physics. On the micro-level, quantum mechanics, at least in its standard interpretation, describes the behavior of elementary particles such as the decay of radioactive atoms, the interaction of light with matter and of electrons with magnetic fields, by employing probabilistic laws as its first principles. On the macro-level, statistical mechanics appeals to probabilities in its account of thermodynamic behavior, in particular the approach to equilibrium and the second law of thermodynamics. These two entries of probability into modern physics are quite distinct. In standard quantum mechanics, probabilistic laws are taken to replace classical mechanics and classical electrodynamics. Quantum probabilities are here understood as reflecting genuine stochastic behavior, ungoverned by deterministic laws.Footnote 2 By contrast, in classical statistical mechanics, probabilistic laws are supplementary to the underlying deterministic mechanics, or perhaps even reducible to it. These probabilities may therefore be understood as reflecting our ignorance about the details of the microstates of the world or as a byproduct of our coarse-grained descriptions of these states.

Despite this radical difference, the probabilistic laws in both theories pertain to the behavior of real physical systems. When quantum mechanics ascribes a certain probability to the decay of a radium atom, it must be saying something about the atom, not only about our beliefs, expectations or knowledge regarding the atom. Likewise, when classical statistical mechanics ascribes a high probability to the spreading of a gas throughout the volume accessible to it, it is purportedly saying something about the gas, not only about our subjective beliefs about the gas. In this sense the probabilistic laws in both quantum and statistical mechanics are supposed to have some genuine objective content. What this objective content is, however, and how it is related to epistemic notions such as ignorance, rational belief and the accuracy of our descriptions are open issues, hotly debated in the literature, and reverberating through this volume. Before getting into these issues in the physical context, let us briefly review some of the general problems confronting the interpretation of probability.Footnote 3

1.1 The Notion of Probability

Consider the paradigmatic example of a game of chance, a flip of a coin in which in each flip there is a fixed probability of 1/2 for getting tails and 1/2 for getting heads. How are we to understand the term ‘probability’ in this context? At least three distinct answers can be found in the literature. First, we could be referring to the objective chance for getting heads or tails in each flip. This objective chance is supposed to pertain to the coin, or the flip, or both, or, more generally, to the set up of the coin flip. On this understanding, the chance is a kind of property, a tendency (propensity) of the set up, analogous to other tendencies of physical systems. The analogy suggests that, just as fragility is a tendency to break, chance is the tendency to… The problem in completing this sentence is that fragile objects sometimes in fact break whereas a chance 1/2 coin always lands on one of its faces. In other words, a single outcome never instantiates the chance in the way a broken glass instantiates fragility.

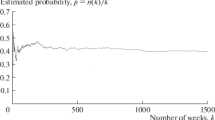

The response to this problem leads to the second notion of probability according to which when we say that the probability of tails (heads) in each game is 1/2 we refer to the relative frequency of tails (heads) in a (finite or infinite) series of repeated games. The intuition here is that it is the relative frequency that links the probability of an event to the actual occurrences. While the link cannot be demonstrated in any single event, it is manifest in the long run by a series of events. How does this response affect the notion of chance? We may either conclude that chance has been eliminated in favor of relative frequency, or hold on to the notion of chance along with the caveat that it is instantiated (and thus verified) only by relative frequency. An example of the first kind is the relative frequency analysis by von Mises [5], where probability is identified with the infinite limit (when it exists) of the relative frequency along what von Mises called random sequences. Of course, the notion of a random sequence is itself in need of a precise and non-circular characterization. Moreover, the relation between a single case chance and relative frequency is not yet as close as we would like it to be, for any objective chance, say 1/2 for tails, is compatible with any finite sequence of heads and tails, no matter how long it is.

The third notion of probability is known as subjective or epistemic probability. On this interpretation, championed by Ramsey, de Finetti and Savage, probabilities represent degrees of belief of rational agents, where rationality is defined as acting so as to maximize profit. The rationality requirement places normative constraints on subjective degrees of belief: the beliefs of rational agents, it is claimed, must obey the axioms of probability theory in the following sense. Call a series of bets each of which is acceptable to the agent a Dutch-book if they collectively yield a sure loss to the agent, regardless of the outcomes of the games. It has been proved independently by de Finetti and Ramsey that if the degrees of belief (the subjective probabilities) of the agent violate the axioms of probability theory, then a Dutch-book can be tailored against her. And conversely, if the subjective probabilities of the agent conform to the axioms of probability, then no Dutch book can be made against her. That is, obeying the axioms of probability is necessary and sufficient to guarantee Dutch-book coherence, and in this sense, rationality. Thus construed, the theory of probability – as a theory of partial belief – is actually an extension of logic!
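The Dutch-book construction can be made concrete with a small sketch. The credences below are hypothetical, and the betting scheme (the agent buys, for each outcome, a bet paying one unit at a price equal to her credence) is only one simple way of setting up the book; if her credences summed to less than one, the bookie would instead buy the same bets from her.

```python
from fractions import Fraction

def net_gain(credences, outcome):
    """Agent's net gain after buying, for each event, a bet that pays 1 unit
    if the event occurs, at a price equal to her credence in that event."""
    total_price = sum(credences.values())
    payout = 1 if outcome in credences else 0  # exactly one event occurs
    return payout - total_price

# Hypothetical incoherent credences: they sum to more than 1.
incoherent = {"heads": Fraction(3, 5), "tails": Fraction(3, 5)}
# Coherent credences, for comparison.
coherent = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}

for name, credences in [("incoherent", incoherent), ("coherent", coherent)]:
    gains = {o: net_gain(credences, o) for o in ("heads", "tails")}
    print(name, gains)
```

With the incoherent credences the agent loses the same amount whichever way the coin lands; with the coherent ones this particular book yields no sure loss.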

One of the problems that the subjective interpretation faces is that without further assumptions, the consistency of our beliefs (in the above sense of avoiding a Dutch book, or complying with the axioms of probability theory) does not guarantee their reasonableness. In order to move from consistency to reasonableness, it would seem, we need some guidance from ‘reality’, namely, we need a procedure for adjusting our subjective probabilities to objective evidence. Here Dutch book considerations are less effective – they do not even compel rational agents to update their beliefs by conditioning on the evidence (see van Fraassen [6]). To do that, one needs a so-called diachronic (rather than synchronic) Dutch book argument. More generally, the question arises of whether the evidence can be processed without recourse to objectivist notions of probability.Footnote 4 If it cannot, then subjective probability is not, after all, the entirely self-sufficient concept that extreme subjectivists have in mind. Suppose we accept this conclusion and seek to link the subjective notion of probability to objective matters of fact. Ideally, it seems that the subjective probability assigned by a rational agent to a certain outcome should converge on the objective probability of that outcome, where the objective probability is construed either in terms of chance or in terms of relative frequency. Can we appeal to the laws of large numbers of probability theory in order to justify this idea and close the gaps between the three interpretations of probability? Not quite, for the following reasons.

According to Bernoulli’s weak law of large numbers, for any ε > 0 there is a number n such that in a sequence of n independent flips, the relative frequency of tails will, with some fixed probability, be in the interval \( \tfrac{1}{2} \pm \varepsilon \). And if we increase the number of flips without bound then according to the weak law, the relative frequencies of tails and heads will approach their objective probabilities with probability that approaches one. That is, the relative frequency would probably be close to the objective probability. But this means that the convergence of relative frequency to objective chance is itself probabilistic. Threatening to lead to a regress, these second-order probabilities cast doubt on the identification of the concept of objective chance with that of relative frequency. A similar problem arises for the subjectivist: are the second order probabilities to be understood subjectively or objectively? The former reply is what we would expect from a confirmed subjectivist, but it leaves the theorem rather mysterious.
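The second-order probability at issue can be computed exactly for a fair coin. The following is a minimal sketch; the function name `prob_within` and the tolerance of 1/20 are our illustrative choices. It counts the sequences of n flips whose relative frequency of tails lies within ε of 1/2 and divides by the total number of sequences.

```python
from fractions import Fraction
from math import comb

def prob_within(n, eps):
    """Exact probability that the relative frequency of tails in n independent
    fair flips lies within eps of 1/2 (endpoints included)."""
    half = Fraction(1, 2)
    favourable = sum(
        comb(n, k) for k in range(n + 1)
        if abs(Fraction(k, n) - half) <= eps
    )
    return favourable / 2**n  # count of favourable sequences over all 2^n sequences

eps = Fraction(1, 20)
for n in (10, 100, 1000):
    print(n, prob_within(n, eps))
```

The printed values grow towards one as n increases but never reach it: for every finite n some sequences fall outside the interval, which is why the convergence claim is itself a probabilistic one.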

Moreover, consider a situation in which the objective chance is unknown and one wishes to form an opinion about the chance relying on the observed evidence: Can the law of large numbers support such an inference? Unfortunately, the answer is negative, essentially because any finite relative frequency is compatible with infinitely many objective chances. Suppose that we want to find out whether the objective chance in our coin flip is 1/3 for tails and 2/3 for heads, or whether it is 1/2 for both. Even if we assume that the flips are independent of each other (so that the law of large numbers applies), we cannot infer the chance from long run relative frequencies unless we put weights on all possible sequences of tails and heads. Placing such weights, however, amounts to presupposing the objective chances we are looking for.Footnote 5 We will encounter several variations on this question in the context of quantum mechanics and classical statistical mechanics. In both cases, the choice of the probability measure over the relevant set of sequences is designed to allow for the derivation of the chances from the relative frequencies. Let us now turn to these theories.
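One way to see the role of the weights is to recast the example in Bayesian terms. This is a sketch under our own assumptions, not the argument of the text itself: we place a prior weight on the hypothesis that the chance of tails is 1/2, give the remainder to the rival hypothesis 1/3, and update on a hypothetical record of 40 tails and 60 heads.

```python
from fractions import Fraction

def posterior_fair(prior_fair, tails, heads):
    """Posterior weight of the hypothesis 'chance of tails = 1/2' against the
    rival 'chance of tails = 1/3', given independent flips."""
    like_fair = Fraction(1, 2) ** (tails + heads)
    like_biased = Fraction(1, 3) ** tails * Fraction(2, 3) ** heads
    num = prior_fair * like_fair
    return num / (num + (1 - prior_fair) * like_biased)

# Same evidence, different (hypothetical) prior weights.
for prior in (Fraction(1, 2), Fraction(1, 1000), Fraction(0)):
    print(prior, float(posterior_fair(prior, tails=40, heads=60)))
```

The same relative frequency yields different verdicts depending on the prior weights, and a prior weight of zero can never be overturned by any finite record.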

1.2 Statistical Mechanics

Statistical mechanics, developed in the late nineteenth century by Maxwell, Boltzmann, Gibbs and others, is an attempt to understand thermodynamics in terms of classical mechanics. In particular, it aims to explain the irreversibility typical of thermodynamic phenomena on the basis of the laws of classical mechanics. Thus, while in classical thermodynamics, the irreversibility characteristic of the approach to equilibrium and the second law of thermodynamics is put forward in addition to the Newtonian laws of classical mechanics, in statistical mechanics the laws of thermodynamics are expected to be reducible to the Newtonian laws. This, at least, was the aspiration underlying the theory. One of the major difficulties in this respect is that the laws of classical mechanics (as well as those of other fundamental theories) are time-symmetric, whereas the second law of thermodynamics is time-asymmetric. How can we derive an asymmetry in time from time-symmetric laws?

In order to appreciate the full force of this question, it is instructive to consider Boltzmann’s approach in the early stages of the kinetic theory of gases.Footnote 6 In his famous H-theorem, Boltzmann attempted to prove a mechanical version of the second law of thermodynamics on the basis of the mechanical equations of motion (describing the evolution of a low density gas in terms of the distribution of the velocities of the particles that make up the gas). Roughly, according to the H-theorem, the dynamical evolution of the gas as described by Boltzmann’s equation is bound to approach the distribution that maximizes the entropy of the gas, that is, the Maxwell-Boltzmann equilibrium distribution. Essentially, what this startling result was meant to show was that an isolated low-density gas in a non-equilibrium state evolves deterministically towards equilibrium, and therefore its entropy increases with time. However, Boltzmann’s H-theorem turned out to be inconsistent with the fundamental time-symmetric principles of mechanics. This was the thrust of the reversibility objection raised by Loschmidt: given the time-symmetry of the classical equations of motion, for any trajectory passing through a sequence of thermodynamic states along which entropy increases with time, there is a corresponding trajectory which travels through the same sequence of states in the reversed direction (i.e., with reversed velocity), along which entropy therefore decreases in the course of time.Footnote 7
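The reversibility at the heart of Loschmidt's objection can be illustrated numerically. The sketch below uses a single harmonic oscillator as a stand-in for the gas and a velocity-Verlet integrator, which shares the time-reversal symmetry of the Newtonian equations; the system, step size and initial values are all our illustrative assumptions.

```python
def verlet_step(x, v, dt, force):
    """One velocity-Verlet step; the scheme is time-reversible, like the
    Newtonian dynamics it approximates."""
    a = force(x)
    x_new = x + v * dt + 0.5 * a * dt * dt
    v_new = v + 0.5 * (a + force(x_new)) * dt
    return x_new, v_new

def evolve(x, v, steps, dt=0.01, force=lambda x: -x):
    """Evolve a unit-mass particle for `steps` steps (default: harmonic force)."""
    for _ in range(steps):
        x, v = verlet_step(x, v, dt, force)
    return x, v

x0, v0 = 1.0, 0.3
x1, v1 = evolve(x0, v0, steps=500)   # forward in time
x2, v2 = evolve(x1, -v1, steps=500)  # reverse the velocity, evolve again
print(abs(x2 - x0), abs(-v2 - v0))   # deviations from the initial state
```

Reversing the velocity and evolving for the same duration retraces the trajectory back to its starting point, up to floating-point rounding.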

It is at this juncture that probability came to play an essential role in physics. In the face of the reversibility objections, Boltzmann concluded that his H-theorem must be interpreted probabilistically. The initial hope was that an analysis of the behavior of many-particles systems in probabilistic terms would reveal a straightforward linkage between probability and entropy. If one then makes the seemingly natural assumption that a system tends to move from less probable to more probable states, a direction in the evolution of thermodynamic systems towards high entropy states would emerge. In other words, what Boltzmann now took the H-theorem to prove was that although it is possible for a thermodynamic system to evolve away from equilibrium, such an anti-thermodynamic evolution is highly unlikely or improbable.

To see how this idea can be made to work, consider the following. First, thermodynamic magnitudes such as volume, pressure and temperature, are associated with regions in the phase space called macrostates, where a macrostate is conceived as an equivalence class of all the microstates that realize it. Second, the thermodynamic entropy of a macrostate is identified with the number of microstates that realize that macrostate, as measured by the Lebesgue measure (or volume) of the phase space region associated with the macrostate. Third, a dynamical hypothesis is put forward to the effect that the trajectory of a thermodynamic system in the phase space is dense in the sense that the trajectory passes arbitrarily close to every microstate in the energy hypersurface. This idea, which goes back to Boltzmann’s so-called ergodic hypothesis, was rigorously proved around 1932 by Birkhoff and von Neumann. According to their ergodic theorem, a system is ergodic if and only if the relative time its trajectory spends in a measurable region of the phase space is equal to the relative Lebesgue measure of that region (i.e., its volume) in the limit of infinite time. This feature holds for all initial conditions except for a set of Lebesgue measure zero. Now, since the probability of a macrostate (or rather the relative frequency of that macrostate) along a typical infinite trajectory can be thought of as the relative time the trajectory spends in the macrostate, it seems to follow that in the long run the probability of a macrostate is equal to its entropy. And this in turn seems to imply that an ergodic system will most probably follow trajectories that in the course of time pass from low-entropy macrostates to high-entropy macrostates. And so, if thermodynamic systems are in fact ergodic, we seem to have a probabilistic version of the second law of thermodynamics.
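The equality of time averages and measures can be seen in a toy system. The sketch below does not model a gas: it uses rotation of the unit circle by an irrational angle, a standard example of a map that is ergodic with respect to the Lebesgue measure, and compares the fraction of time the orbit spends in an interval with the interval's length. The angle, interval and starting point are our assumptions.

```python
from math import sqrt

def time_fraction(alpha, a, b, steps, x0=0.0):
    """Fraction of the first `steps` points of the orbit
    x_k = x0 + k*alpha (mod 1) that fall in the interval [a, b)."""
    hits = 0
    for k in range(steps):
        x = (x0 + k * alpha) % 1.0
        if a <= x < b:
            hits += 1
    return hits / steps

alpha = sqrt(2) - 1  # irrational rotation angle (ergodic case)
for steps in (100, 10_000, 100_000):
    print(steps, time_fraction(alpha, 0.2, 0.5, steps))  # interval length is 0.3

# Rational angle: the orbit visits only four points, so the rotation is not ergodic.
print(time_fraction(0.25, 0.2, 0.5, 10_000))
```

For the irrational angle the time fraction settles near the length of the interval; for the rational angle it does not, which shows that the equality depends on the dynamics and not on the measure alone.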

This admittedly schematic outline gives the essential idea of how the thermodynamic time-asymmetry was thought to follow from the time-symmetric laws of classical mechanics. Despite its ingenuity, the probabilistic construal of the second law still faces intriguing questions. Current research in the foundations of statistical mechanics is particularly beleaguered by the problems generated by the (alleged?) reduction of statistical mechanics to the fundamental laws of physics. Here are some of the questions that are addressed in this volume.

1. The first challenge is to give a mathematically rigorous proof of a probabilistic version of the second law of thermodynamics. Some attempts are based on specific assumptions about the initial conditions characterizing thermodynamic systems (as in Boltzmann’s H-theorem), while others appeal to general features characterizing the dynamics of thermodynamic systems (as in the ergodic approach). The extent to which these attempts are successful is still an open question. In this volume, Roman Frigg and Charlotte Werndl defend a number of variations on the ergodic approach.

A related issue is that of Maxwell’s Demon. Maxwell introduced his thought experiment – portraying a Demon who violates the second law – to argue that it is impossible to derive a universal proof of this law from the principles of mechanics. He concluded that, while the law is generally (probabilistically) valid, its violation under specific circumstances, such as those described in his thought experiment, is in fact possible. In the literature, however, the standard view is that Maxwell was wrong. That is, it is argued that the operation of the Demon in bringing about a local decrease of entropy is inevitably counterbalanced by an appropriate increase of entropy in the environment (including the Demon’s own entropy).Footnote 8 The article by Eric Fanchon, Klil Ha-Horesh Neori and Avshalom Elitzur analyses some new aspects of the Demon’s operation in defense of this view.

2. Another question concerns the status of the probabilities in statistical mechanics. Given that the classical equations of motion are completely deterministic, what exactly do probabilities denote in a classical theory? Of course, classical deterministic dynamics applies to the microstructure of physical systems whereas Boltzmann’s probabilities are assigned to macrostates, which can be realized in numerous ways by various microstates. Nonetheless, the determinism of classical mechanics implies that anything that happens in the world is fixed by the world’s actual trajectory. And so, at first sight, the probabilities in statistical mechanics can only represent the ignorance of observers with respect to the microstructure. But if so, it is not clear what could be objective about statistical mechanical probabilities and how they could be assigned to physical states and processes.Footnote 9 Since we expect these probabilities to account for thermodynamic behavior – the approach to thermodynamic equilibrium and the second law of thermodynamics – which are as physically objective as anything we can get, the epistemic construal of probability is deeply puzzling. Responding to this challenge, David Albert provides an over-arching account of the structure of probability in physics in terms of single case chances. Wayne Myrvold, in turn, proposes a synthesis of objective and subjective elements, construing probabilities in statistical mechanics as objective, albeit epistemic, chances.

3. We noted that the appeal to probability was meant to counter the reversibility objection. But does it? What we would like to get from statistical mechanics is not only a high probability for the increase of entropy towards the future but also an asymmetry in time – an ‘arrow of time’ (or rather an arrow of entropy in time) – so that the same probabilistic laws would also indicate a decrease of entropy towards the past. And once again, the time-reversal symmetry of classical mechanics stands in our way. Whatever probability implies with respect to evolutions directed forward in time must be equally true with respect to evolutions directed backward in time. In particular, whenever entropy increases towards the future, it also increases towards the past. If this is correct, it implies that the present entropy of the universe, for any present moment, must always be the minimal one. That is to say, one would be as justified to infer that the cup of coffee in front of me was at room temperature a few minutes ago and has spontaneously warmed up as to infer that it will cool off in a few minutes. To fend off this absurd implication, Richard Feynman ([25], p. 116) said, “I think it necessary to add to the physical laws the hypothesis that in the past the universe was more ordered, in the technical sense, than it is today.” Here Feynman is introducing the so-called past-hypothesis in statistical mechanics,Footnote 10 which in classical statistical mechanics is the only way to get a temporal arrow of entropy. Note that the past-hypothesis amounts to adding a distinction between past and future, as it were, by hand. It is an open question as to whether quantum mechanics may be more successful in this respect.Footnote 11 The past-hypothesis and its role in statistical mechanics are subject to a thorough examination and critique in Alon Drory’s paper.

4. In the assertion that thermodynamic behavior is highly probable, the high probability pertains to subsystems of the universe. It is generally assumed that the trajectory of the entire universe, giving rise to this high probability, is itself fixed by laws and initial conditions. Questions now arise about these initial conditions and their probabilities. Must they be highly probable in order to confer high probability on the initial conditions of subsystems? Would improbable initial conditions of the universe make its present state inexplicable? But even before we answer these questions, it is not clear that it even makes sense to consider a probability distribution over the initial conditions of the universe. A probability distribution suggests some sort of a random sampling, but with respect to the initial conditions of the universe, this random sampling seems an empirically meaningless fairy tale. An attempt to answer these questions goes today under the heading of the typicality approach. The idea is to justify the set of conditions that give rise to thermodynamic behavior in statistical mechanics (or to quantum mechanical behavior in Bohmian mechanics) by appealing to the high measure of this set in the space of all possible initial conditions, where the high measure is not understood as high probability. Two articles in this volume contribute to this topic: Sheldon Goldstein defends the non-probabilistic notion of typicality in both statistical and Bohmian mechanics; Meir Hemmo and Orly Shenker criticize the typicality approach.

5. Finally, a probability distribution over a continuous set of points (e.g. the phase space) requires a choice of measure. The standard choice in statistical mechanics (relative to which the probability distribution is uniform) is the Lebesgue measure. But in continuous spaces infinitely many other measures (including some that do not even agree with the Lebesgue measure on the measure zero and one sets) are mathematically possible. Such measures could lead to predictions that differ significantly from the standard predictions of statistical mechanics. Are there mathematical or physical grounds that justify the choice of the Lebesgue measure? This problem is intensified when combined with the previous question about the probability of the initial conditions of the universe. And here too there are attempts to use the notion of typicality to justify the choice of a particular measure. In his contribution to this volume, Itamar Pitowsky justifies the choice of the Lebesgue measure, claiming it to be the mathematically natural extension of the counting (combinatorial) measure in discrete cases to the continuous phase space of statistical mechanics.

1.3 Quantum Mechanics

The probabilistic interpretation of the Schrödinger wave equation, put forward by Max Born in 1926, has become the cornerstone of the standard interpretation of quantum mechanics. Two (possibly interconnected) features distinguish quantum mechanical probabilities from their classical counterparts. First, on the standard interpretation of quantum mechanics, quantum probabilities are irreducible, that is, the probabilistic laws in which they appear are not superimposed on an underlying deterministic theory. Second, the structure of the quantum probability space differs from that of the classical space. Let us look at each of these differences more closely.

According to the von Neumann-Dirac formulation of quantum mechanics, the ordinary evolution of the wave function is governed by the deterministic Schrödinger equation. Upon measurement, however, the wave function undergoes a genuinely stochastic, instantaneous and nonlocal ‘collapse,’ yielding a definite value – one out of a (finite or infinite) series of possible outcomes. The probabilities of these outcomes are given by the quantum mechanical algorithm (e.g. the squared modulus of the amplitude, as given by Born’s rule). Formally, this process is construed as a projection of the system into an eigenstate of the operator representing the measured observable, where the measured value represents the corresponding eigenvalue of this state. Thus, the collapse of the wave function, unlike its ordinary evolution, is said to be governed by a ‘projection postulate’. Among other implications, this ‘projection’ means that in contrast with the classical picture, where a measurement yields a result predetermined by the dynamics and the initial conditions of the system at hand, on the standard interpretation of quantum mechanics, measurement results are not predetermined. Moreover, they come about through the measurement process (although how precisely this happens is an open question) and in no way reflect the state of the system prior to measurement.
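The quantum mechanical algorithm and the projection postulate can be sketched for a finite-dimensional state written in the eigenbasis of the measured observable. The function names and the two-outcome state below are hypothetical, and the pseudo-random sampling merely stands in for the stochastic collapse; it is not a model of how collapse comes about.

```python
import random
from math import sqrt

def born_probabilities(amplitudes):
    """Squared moduli of the amplitudes, normalised to sum to one."""
    weights = [abs(c) ** 2 for c in amplitudes]
    norm = sum(weights)
    return [w / norm for w in weights]

def measure(amplitudes, rng=random):
    """Sample an outcome index with its Born probability and return it together
    with the post-measurement ('collapsed') state, an eigenstate of the observable."""
    probs = born_probabilities(amplitudes)
    k = rng.choices(range(len(probs)), weights=probs)[0]
    collapsed = [1.0 if i == k else 0.0 for i in range(len(probs))]
    return k, collapsed

state = [1 / sqrt(2), 1j / sqrt(2)]  # hypothetical two-outcome superposition
print(born_probabilities(state))
print(measure(state, random.Random(0)))
```

The complex phase of the second amplitude plays no role in the outcome probabilities for this observable, and after the measurement the state carries no trace of the original amplitudes.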

The collapse of the wave function is the Achilles heel of the standard interpretation. Is it a physical process or just a change in the state of our knowledge? Why does it occur specifically during measurement? Can it be made Lorentz invariant in a way that ensures compatibility with special relativity? Above all, can quantum mechanics be formulated in a more uniform way, one that does not single out measurement as a distinct process and makes no recourse to ‘projection’? These questions are the departure point of alternative, non-standard, interpretations of quantum mechanics that seek to solve the above problems either by altogether getting rid of the collapse or by providing a dynamical account that explains it. As it turns out, rival interpretations also provide divergent accounts of the meaning of quantum probabilities. While the standard interpretation has been combined with practically all the probabilistic notions (relative frequency, single case chance and epistemic probability), the non-standard alternatives are generally more congenial to a specific interpretation.

Bohm’s [16] theory is a deterministic theory, empirically equivalent to quantum mechanics. Here there is no collapse. Rather, the probability distribution \( |\psi(x)|^2 \), introduced in order to reproduce the empirical predictions of standard quantum mechanics, reflects ignorance about the exact positions of particles (and ultimately about the exact initial position of the entire universe). In this sense the probabilities in Bohm’s theory play a role very similar to that played by probability in statistical mechanics, and can thus be construed along similar lines. By contrast, stochastic theories, such as that proposed by Ghirardi et al. (GRW, [17]), prima facie construe quantum probabilities as single case chances. In these theories, probability-as-chance enters quantum mechanics not only through the measurement process but through a purely stochastic dynamics of spontaneous ‘jumps’ of the wave function under general dynamical conditions. Many worlds approaches, based on Everett’s relative-state theory [18], take quantum mechanics to be a deterministic theory involving neither collapse nor ignorance over additional variables. Rather, the unitary dynamics of the wave function is associated with a peculiarly quantum mechanical process of branching (fission and fusion) of worlds. However, since the branching is unrelated to the quantum probabilities, the role of probability in this theory remains obscure and is widely debated in the literature. Addressing this issue, Lev Vaidman’s article secures a place for probabilities (obeying Born’s rule) in the many worlds theory.

In addition, there are subjectivist approaches, according to which quantum probabilities are constrained only by Dutch-book coherence. Such an epistemic approach was defended by Itamar Pitowsky [19] and is advocated in this volume by Christopher Fuchs and Rüdiger Schack. A subjectivist approach inspired by de Finetti is the subject of Joseph Berkovitz’s paper. He discusses the implications of de Finetti’s verificationism for the understanding of the quantum mechanical probabilities in general and Bell’s nonlocality theorem in particular.

The second characteristic that distinguishes quantum mechanical probabilities from classical probabilities is the logical structure of the quantum probability space. This feature, first identified by Schrödinger in 1935, was the basis of Itamar Pitowsky’s interpretation of quantum mechanics. In a series of papers culminating in 2006, he urged that quantum mechanics is to be understood primarily as a non-classical theory of probability. On this approach, it is the non-classical nature of the probability space of quantum mechanics that is at the basis of other characteristic features of quantum mechanics, such as the non-locality exemplified by the violation of the Bell inequalities. Since these probabilities obey non-classical axioms, the expectation values they lead to deviate from expectations derived from classical ignorance interpretations.
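The deviation from classical expectations can be illustrated with one Bell-type inequality, the CHSH inequality. We choose it for illustration; the text does not single it out. In the sketch below the classical bound is found by enumerating all predetermined assignments of outcomes ±1, and the quantum value uses the standard singlet-state correlation, minus the cosine of the angle between the two measurement directions, with one conventional choice of angles.

```python
from itertools import product
from math import cos, pi, sqrt

def chsh(E):
    """CHSH combination of the four correlations E[(i, j)], settings i, j in {0, 1}."""
    return E[0, 0] + E[0, 1] + E[1, 0] - E[1, 1]

# Classical case: each side carries predetermined outcomes +1/-1 for both settings.
classical_max = max(
    abs(chsh({(i, j): a[i] * b[j] for i in (0, 1) for j in (0, 1)}))
    for a in product((-1, 1), repeat=2)
    for b in product((-1, 1), repeat=2)
)

# Quantum case: singlet-state correlation E = -cos(angle difference).
alice = (0.0, pi / 2)
bob = (pi / 4, -pi / 4)
quantum = abs(chsh({(i, j): -cos(alice[i] - bob[j]) for i in (0, 1) for j in (0, 1)}))

print(classical_max, quantum, 2 * sqrt(2))
```

Because the CHSH expression is linear in the correlations, no probabilistic mixture of the predetermined assignments can exceed the enumerated classical maximum, whereas the quantum correlations do.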

Pitowsky believed that the difference between quantum and classical mechanics is already manifested at the phenomenological level, that is, the level of events and their correlations. He therefore insisted that before saving the quantum phenomena by means of theoretical terms such as superposition, interference, nonlocality, probabilities over initial conditions, collapse of the wavefunction, fission of worlds, etc. (let alone the vaguer notions of duality or complementarity), we should first have the phenomena themselves in clear view. The best handle on the phenomena, he maintained, is provided by the non-classical nature of the probability space of quantum events. Note that what is at issue here is not the familiar claim that the laws of quantum mechanics, unlike those of classical mechanics, are irreducibly probabilistic, but the more radical claim that quantum probabilities deviate in significant ways from classical probabilities. Hence the project of providing an axiom system for quantum probability. The very notion of non-classical probability, that is, the idea that there are different notions of probability captured by different axiom systems, has far-reaching implications not only for the interpretation of quantum mechanics, but also for the theory of probability and the understanding of rational belief.

Pitowsky’s probabilistic interpretation brings together suggestions made by a number of earlier theorists, among them Schrödinger and Feynman, who saw quantum mechanics as a new theory of probability, and Birkhoff and von Neumann, who sought to ground quantum mechanics in a non-classical logic. However, Pitowsky goes beyond these earlier works in spelling out in detail the non-classical nature of quantum probability. In addition, his work differs from that of his predecessors in three important respects. First, Pitowsky clarified the relation between quantum probability and George Boole’s work on the foundations of classical probability. Second, Pitowsky explored in great detail the geometrical structure of the non-classical probability space of quantum mechanics, an exploration that sheds new light on central features of quantum mechanics, and non-locality in particular. Finally, unlike any of the above-mentioned theorists, Pitowsky endorsed an epistemic interpretation of probability [19, 20]. It is this epistemic component of his interpretation, arguably its most controversial component, that he took to be necessary in order to challenge rival interpretations of quantum mechanics, such as the many worlds and Bohmian interpretations. Once quantum mechanics is seen as a non-classical theory of probability, he argued, and once probability is construed subjectively, as a measure of credence, the puzzles that gave rise to these rival interpretations lose much of their bite. Specifically, Pitowsky thought we may no longer need to worry about the notorious measurement problem. For, as Pitowsky and Bub put it in their joint paper [24], we may now safely reject “the two dogmas of quantum mechanics,” namely, the concept of the quantum state as a physical state on a par with the classical state, and the concept of measurement as a physical process that must receive a dynamical account. Instead, we should view the formalism of quantum mechanics as a ‘book-keeping’ algorithm that places constraints on (rational) degrees of belief regarding the possible results of measurement.

To motivate this point of view, Pitowsky and Bub draw an analogy between quantum mechanics and the special theory of relativity: According to special relativity, effects such as Lorentz contraction and time dilation, previously thought to require dynamical explanations, are now construed as inherent to the relativistic kinematics (and therefore the very concept of motion). Theories that do provide dynamical accounts of the said phenomena may serve to establish the inner consistency of special relativity, but do not constitute an essential part of this theory. Similarly, on the probabilistic approach to quantum mechanics, the puzzling effects of quantum mechanics need no deeper ‘physical’ explanation over and above the fact that they are entailed by the non-classical probability structure. And again, theories that do provide additional structure, e.g. collapse dynamics of the measurement process, should be regarded as consistency proofs of the quantum formalism rather than an essential part thereof. As it happens, the debate over the purely kinematic understanding of special relativity has recently been renewed (see Brown [21]). Following Pitowsky’s work, a similar debate may be conducted in the context of quantum mechanics. A number of papers in this volume contribute to such a debate. While Laura Ruetsche and John Earman examine the applicability of Pitowsky’s interpretation to quantum field theories, William Demopoulos and Yemima Ben-Menahem address issues related to its philosophical underpinnings.

To illustrate the difference between classical and quantum probability, it is useful to follow Pitowsky and revisit George Boole’s pioneering work on the “conditions of possible experience.” Boole raised the following question: given a series of numbers \( p_1, p_2, \ldots, p_n \), what are the conditions for the existence of a probability space and a series of events \( E_1, E_2, \ldots, E_n \), such that the given numbers represent the probabilities of these events? (Probabilities are here understood as frequencies in finite samples, represented by numbers between 0 and 1.) The answer given by Boole was that the envisaged necessary and sufficient conditions could be expressed by a set of linear equations and inequalities in the given numbers. All, and only, numbers that satisfy these conditions, he argued, can be obtained from experience, i.e. from actual frequencies of events. In the simple case of two events \( E_1 \) and \( E_2 \), with probabilities \( p_1 \) and \( p_2 \) respectively, an intersection \( E_1 \cap E_2 \) whose probability is \( p_{12} \), and a union \( E_1 \cup E_2 \), Boole’s conditions are:

\[ p_1 \ge p_{12}, \qquad p_2 \ge p_{12}, \qquad p_{12} \ge 0, \qquad p_1 + p_2 - p_{12} \le 1 . \]

Prima facie, these conditions seem self-evident or a priori. Evidently, if we have an urn of balls containing, among others, red, wooden and red-and-wooden balls, the probability of drawing a ball that is either red or wooden (or both) cannot exceed the sum of the probabilities for drawing a red ball and a wooden ball. And yet, violations of these conditions are predicted by quantum mechanics and demonstrated by experiments. In the famous two-slit experiment, for instance, there are areas on the screen that receive more hits than allowed by the condition that the probability of the union event cannot exceed the sum of the probabilities of the individual events. We are thus on the verge of a logical contradiction. The only reason we do not actually face an outright logical contradiction is that the probabilities in question are obtained from different samples, or different experiments (in this case, one that has both slits open at once, the other that has them open separately). It is customary to account for the results of the two-slit experiment by means of the notion of superposition and the wave ontology underlying it. But as noted, Pitowsky’s point was that acknowledging the deviant phenomena should take precedence over their explanation, especially when such an explanation involves a dated ontology.
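The two-event case can be checked mechanically. The following is a minimal sketch, assuming the standard form of the conditions for two events (each of the four mutually exclusive cases, both events, only the first, only the second, neither, must receive a non-negative probability). The function name `satisfies_boole` and the urn numbers are hypothetical and chosen only for illustration.

```python
from fractions import Fraction


def satisfies_boole(p1, p2, p12):
    """Return True if p1, p2, p12 can be the probabilities of E1, E2 and
    their intersection in one classical probability space."""
    # Probabilities of the four atoms: both, E1 only, E2 only, neither.
    atoms = [p12, p1 - p12, p2 - p12, 1 - p1 - p2 + p12]
    return all(a >= 0 for a in atoms)


# Hypothetical urn with 10 balls: 4 red, 5 wooden, 2 both red and wooden.
urn = (Fraction(4, 10), Fraction(5, 10), Fraction(2, 10))
print(satisfies_boole(*urn))  # True

# Illustrative numbers that no single urn can produce: the intersection
# would be more probable than one of the events it is contained in.
print(satisfies_boole(Fraction(3, 10), Fraction(5, 10), Fraction(4, 10)))  # False
```

Exact fractions are used so that the boundary cases of the inequalities are not blurred by floating-point rounding.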

Pitowsky [22] showed further that the famous Bell inequalities (and other members of the Bell inequalities family such as the Clauser, Horne, Shimony and Holt inequality) can be derived from Boole’s conditions. Thus, violations of Bell inequalities actually amount to violations of Boole’s supposedly a priori “conditions of possible experience”! This derivation provides the major motivation for Pitowsky’s interpretation of quantum mechanics, for, if Bell’s inequalities characterize classical probability, their violation (as predicted by quantum mechanics) indicates a shift to an alternative, non-classical, theory of probability. Note that the classical assumption of ‘real’ properties, existing prior to measurement and discovered by it, underlies both Pitowsky’s derivation of the Bell inequalities via Boole’s conditions and the standard derivations of the inequalities. Consequently, in both cases, the violation of the inequalities suggests renunciation of a realist understanding of states and properties taken for granted in classical physics. In this respect, Pitowsky remained closer to the Copenhagen tradition than to its more realist rivals, the Bohmian, Everettian and GRW theories.

Examining the geometrical meaning of Boole’s classical conditions, Pitowsky showed that the probabilities satisfying Boole’s linear conditions lie within n-dimensional polytopes whose dimensions are determined by the number of the events and whose facets are determined by these conditions. The geometrical expression of the fact that the classical conditions can be violated is the existence of quantum probabilities lying outside of the corresponding classical polytope. The violation of Bell-type inequalities implies that in some experimental set-ups we get higher correlations than those permitted by classical considerations, that is, we get nonlocality. If, as Pitowsky argued, the violation of the inequalities is just a manifestation of the non-classical structure of the quantum probability space, non-locality is likewise such a manifestation, integral to this structure and requiring no further explanation.
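A small numerical sketch may make the geometric picture concrete. It assumes the Clauser-Horne-Shimony-Holt combination of four correlations, deterministic assignments of outcomes ±1 as the vertices of the classical polytope, and the textbook singlet-state correlation \( E(\alpha, \beta) = -\cos(\alpha - \beta) \) for measurement angles in a plane. The measurement angles and all names below are illustrative choices, not taken from Pitowsky’s papers.

```python
import math
from itertools import product


def chsh(E):
    """CHSH combination for a correlation function E(x, y), x, y in {0, 1}."""
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)


# Classical side: enumerate the deterministic assignments of outcomes
# +1/-1 to Alice's two settings (a) and Bob's two settings (b).
classical_max = max(
    abs(chsh(lambda x, y: a[x] * b[y]))
    for a in product([1, -1], repeat=2)
    for b in product([1, -1], repeat=2)
)

# Quantum side: singlet-state correlations for one choice of angles.
alice = [0.0, math.pi / 2]
bob = [math.pi / 4, -math.pi / 4]
quantum = abs(chsh(lambda x, y: -math.cos(alice[x] - bob[y])))

print(classical_max)  # 2
print(quantum)        # about 2.828, i.e. 2 * sqrt(2)
```

Because the CHSH combination is linear in the correlations, its maximum over the whole classical polytope is attained at a vertex, so checking the deterministic assignments suffices. The quantum value exceeds that maximum, which is the sense in which the quantum point lies outside the polytope.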

The next step was to provide an axiom system for quantum probability. The core of such a system had been worked out early on by Birkhoff and von Neumann [23], who considered it a characterization of the non-Boolean logic of quantum mechanics. Pitowsky endorsed the basic elements of the Birkhoff-von Neumann axioms, in particular the Hilbert space structure with its lattice of subspaces. He also followed Birkhoff and von Neumann in identifying the failure of the classical axiom of reducibility as the distinctive feature of quantum mechanics. By employing a number of later results, however, Pitowsky managed to strengthen the connection between the Birkhoff-von Neumann axioms and the theory of probability. To begin with, Gleason’s celebrated theorem ensures that the only non-contextual probability measures definable on an n-dimensional Hilbert space (n ≥ 3) are those given by the Born rule. On the basis of this theorem, Pitowsky was able to motivate the non-contextuality of probability, namely the claim that the probability of an event is independent of the context in which it is measured. Further, Pitowsky linked this non-contextuality to what is usually referred to as ‘no signaling,’ the relativistic limit on the transmission of information between entangled systems even though they may exhibit nonlocal correlations. In his interpretation, then, both nonlocality and no signaling are derived from formal properties of quantum probability rather than from any physical assumptions. It is the possibility of such a formal derivation that inspired the analogy between the probabilistic interpretation of quantum mechanics and the kinematic understanding of special relativity.
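For orientation, the content of Gleason’s theorem can be stated compactly. The notation below (\( \mu \), \( \rho \), \( P_i \)) is introduced here only for illustration and is an assumption rather than the notation of the works cited; the statement is a sketch of the standard formulation, not of Pitowsky’s own presentation.

```latex
% Assumed notation: H is a Hilbert space with dim H >= 3, the P_i are
% mutually orthogonal projections on H, and \mu assigns to every
% projection a number in [0, 1].
\[
  \mu\Big(\sum_i P_i\Big) = \sum_i \mu(P_i), \qquad \mu(\mathbb{1}) = 1
  \quad\Longrightarrow\quad
  \mu(P) = \operatorname{tr}(\rho P)
  \ \text{ for some density operator } \rho .
\]
% For a pure state \rho = |\psi\rangle\langle\psi| and a one-dimensional
% projection P = |\phi\rangle\langle\phi| this reduces to the Born rule:
\[
  \mu(P) = |\langle \phi | \psi \rangle|^2 .
\]
```

Non-contextuality enters through the domain of \( \mu \): the measure is a function of the projection \( P \) alone, so its value cannot depend on which family of mutually orthogonal projections \( P \) is measured with.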

Several articles in this volume further explore the probabilistic approach and the formal connections between the different characteristics of quantum mechanics. Alexander Wilce offers a derivation of the logical structure of quantum mechanical probabilities (in a quantum world of finite dimensions) from four (and a half) probabilistically motivated axioms. Daniel Rohrlich attempts to invert the logical order by taking nonlocality as an axiom and deriving standard quantum mechanics (and its probabilities) from nonlocality and no signaling. Jeffrey Bub takes up Wheeler’s famous question ‘why the quantum?’ and uses the no signaling principle to explain why, despite the fact that stronger violations of locality are logically possible, the world still obeys the quantum mechanical bound on correlations.
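The claim that stronger-than-quantum correlations are logically compatible with no signaling can be illustrated with a toy model. The sketch below assumes the construction often called a Popescu-Rohrlich box, in which binary outcomes a, b and binary settings x, y satisfy a XOR b = x AND y. The name and the construction are brought in here for illustration and are not described in the text above; all function names are hypothetical.

```python
from itertools import product


def pr_box(a, b, x, y):
    """P(a, b | x, y) for the toy box: outcomes satisfy a XOR b = x AND y,
    and the two allowed outcome pairs are equally likely."""
    return 0.5 if (a ^ b) == (x & y) else 0.0


def marginal_alice(a, x, y):
    return sum(pr_box(a, b, x, y) for b in (0, 1))


def marginal_bob(b, x, y):
    return sum(pr_box(a, b, x, y) for a in (0, 1))


def correlation(x, y):
    # Expectation of the product of outcomes mapped to +1/-1.
    return sum((-1) ** (a ^ b) * pr_box(a, b, x, y)
               for a, b in product((0, 1), repeat=2))


# No signaling: each party's marginal ignores the other party's setting.
no_signaling = all(
    marginal_alice(o, s, 0) == marginal_alice(o, s, 1)
    and marginal_bob(o, 0, s) == marginal_bob(o, 1, s)
    for o, s in product((0, 1), repeat=2)
)

chsh_value = (correlation(0, 0) + correlation(0, 1)
              + correlation(1, 0) - correlation(1, 1))

print(no_signaling)  # True
print(chsh_value)    # 4.0
```

The box reaches the algebraic maximum of 4 for the CHSH combination, above the quantum value of \( 2\sqrt{2} \), while every marginal stays at 1/2 regardless of the distant setting.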

From a philosophical point of view, Pitowsky’s approach to quantum mechanics constitutes a landmark not only in the interpretation of quantum mechanics, but also in our understanding of the notion of probability. Traditionally, the theory of probability is conceived as an extension of logic, in the sense that both logic and the theory of probability lay down rules for rational inference and belief. Like logic, the theory of probability is therefore viewed as a priori. But if Pitowsky’s conception of quantum mechanics as a non-classical theory of probability is correct, then the question of which is the right theory of probability is an empirical question, contingent on the way the world is. The shift from an a priori to an empirical construal has its precedents in the history of science: Geometry as well as logic have been claimed to be empirical rather than a priori, the former in the context of the theory of relativity, the latter in the context of quantum mechanics. Pitowsky’s approach to quantum mechanics suggests a similar reevaluation of the status of probability and rational belief.

Notes

- 1.

As far as interpretation is concerned, the drawback of the axiom system is that, in formalizing measure theory in general, it captures more than the intuitive notion of probability, including such notions as length and volume.

- 2.

This does not apply to Bohmian quantum mechanics, which is deterministic. See also Earman [2] for an analysis of determinism and for an unorthodox view about both classical and quantum mechanics regarding their accordance with determinism.

- 3.

- 4.

It has been shown that the subjective probabilities of an agent who updates her beliefs in accordance with Bayes’ theorem, converge on the observed relative frequencies no matter what her prior subjective probabilities are. But this is different from converging on the chances or the relative frequencies in the infinite limit. Similar considerations apply also to the so-called ‘logical’ approach to probability on which probabilities are quantitative expressions of the degree of support of a statement conditional on the evidence.

- 5.

See van Fraassen [7], p. 83. Note again that the condition of Dutch-book coherence is of no help here since the objective probabilities in the situation we consider are unknown.

- 6.

- 7.

In fact, there are other arguments, which show that Boltzmann’s deterministic approach in deriving the H-theorem could not be consistent with the classical dynamics, e.g. the historically famous objection by Zermelo based on the Poincaré recurrence theorem. It was later discovered that one of the premises in Boltzmann’s proof was indeed time-asymmetric.

- 8.

- 9.

See Maudlin [15] for a more detailed discussion of this problem.

- 10.

- 11.

The situation, however, is somewhat disappointing, since in quantum mechanics the question of how to account for the past is notoriously hard due to the problematic nature of retrodiction in standard quantum mechanics.

References

1. Kolmogorov, A.N.: Foundations of Probability. Chelsea, New York (1933). Chelsea 1950, Eng. Trans.

2. Earman, J.: A Primer on Determinism. University of Western Ontario Series in Philosophy of Science, vol. 32. Reidel, Dordrecht (1986)

3. Fine, T.: Theories of Probability: An Examination of Foundations. Academic, New York (1973)

4. Hajek, A.: Interpretations of probability, Stanford Encyclopedia of Philosophy. http://plato.stanford.edu/entries/probability-interpret/ (2009)

5. von Mises, R.: Probability, Statistics and Truth. Dover, New York (1957), second revised English edition. Originally published in German by J. Springer in 1928

6. van Fraassen, B.: Belief and the will. J. Philos. 81, 235–256 (1984)

7. van Fraassen, B.: Laws and Symmetry. Clarendon, Oxford (1989)

8. Ehrenfest, P., Ehrenfest, T.: The Conceptual Foundations of the Statistical Approach in Mechanics. Cornell University Press, New York (1912), 1959 (Eng. Trans.)

9. Uffink, J.: Compendium to the foundations of classical statistical physics. In: Butterfield, J., Earman, J. (eds.) Handbook for the Philosophy of Physics, Part B, pp. 923–1074. Elsevier, Amsterdam (2007)

10. Leff, H., Rex, A.: Maxwell’s Demon 2: Entropy, Classical and Quantum Information, Computing. Institute of Physics Publishing, Bristol (2003)

11. Maroney, O.: Information Processing and Thermodynamic Entropy. http://plato.stanford.edu/entries/information-entropy/ (2009)

12. Albert, D.: Time and Chance. Harvard University Press, Cambridge (2000)

13. Hemmo, M., Shenker, O.: Maxwell’s Demon. J. Philos. 107, 389–411 (2010)

14. Hemmo, M., Shenker, O.: Szilard’s perpetuum mobile. Philos. Sci. 78, 264–283 (2011)

15. Maudlin, T.: What could be objective about probabilities? Stud. Hist. Philos. Mod. Phys. 38, 275–291 (2007)

16. Bohm, D.: A suggested interpretation of the quantum theory in terms of ‘Hidden’ variables. Phys. Rev. 85, 166–179 (1952) (Part I), 180–193 (Part II)

17. Ghirardi, G.C., Rimini, A., Weber, T.: Unified dynamics for microscopic and macroscopic systems. Phys. Rev. D 34, 470–479 (1986)

18. Everett, H.: ‘Relative state’ formulation of quantum mechanics. Rev. Mod. Phys. 29, 454–462 (1957)

19. Pitowsky, I.: Betting on the outcomes of measurements: a Bayesian theory of quantum probability. Stud. Hist. Philos. Mod. Phys. 34, 395–414 (2003)

20. Pitowsky, I.: Quantum mechanics as a theory of probability. In: Demopoulos, W., Pitowsky, I. (eds.) Physical Theory and Its Interpretation: Essays in Honor of Jeffrey Bub. The Western Ontario Series in Philosophy of Science, pp. 213–239. Springer, Dordrecht (2006)

21. Brown, H.: Physical Relativity. Oxford University Press, Oxford (2005)

22. Pitowsky, I.: George Boole’s ‘Conditions of possible experience’ and the quantum puzzle. Br. J. Philos. Sci. 45, 95–125 (1994)

23. Birkhoff, G., von Neumann, J.: The logic of quantum mechanics. Ann. Math. 37(4), 823–843 (1936)

24. Bub, J., Pitowsky, I.: Two dogmas about quantum mechanics. In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory and Reality, pp. 433–459. Oxford University Press, Oxford (2010)

25. Feynman, R.: The Character of Physical Law. MIT Press, Cambridge (1965)

© 2012 Springer-Verlag Berlin Heidelberg

Cite this chapter

Ben-Menahem, Y., Hemmo, M. (2012). Introduction. In: Ben-Menahem, Y., Hemmo, M. (eds) Probability in Physics. The Frontiers Collection. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-21329-8_1

DOI: https://doi.org/10.1007/978-3-642-21329-8_1

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-21328-1

Online ISBN: 978-3-642-21329-8

eBook Packages: Physics and Astronomy (R0)