Abstract

Silicon photonics is nothing new. It has been around for decades, but in recent years it has gained traction as electronic design runs into the physical limits of scaling toward atomic dimensions. Silicon photonics has advanced significantly during this period, but many obstacles remain, as the limited involvement of the broader semiconductor community shows. Apart from a series of technological barriers, such as extreme fabrication sensitivity and inefficient on-chip light generation, there are also design challenges. In this chapter, we discuss the challenges and opportunities in photonic integrated circuit design software tools, examine existing design flows for photonics design and how they fit different design styles, and review collaboration and standardization efforts to improve design flows.


4.1 Silicon Photonics—History Repeats Itself

Integrated photonics has been around for many years and, like the electronic semiconductor industry, it continues to evolve. Its progression mirrors that of electronics: from discrete products assembled on a printed circuit board to increasingly integrated circuits.

In the late 1970s and early 1980s, the way custom integrated circuits were designed changed significantly. Designers began to use and reuse pre-characterized building blocks, or “cells”, instead of designing each transistor from scratch for each new chip. This technique later became known as “standard cells” or cell-based design, and it became the standard way to build application-specific integrated circuits (ASICs).

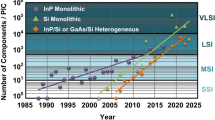

At the same time, there was a major shift in the methodology used to design and verify integrated circuits (ICs). General-purpose computers and engineering workstations were becoming available to the engineering community, along with the advent of computer-aided design (CAD) tools. This later grew into an entire industry, now known as electronic design automation (EDA). Additionally, over the next several decades, hierarchical design and test methodologies [1] were codified, taught, and used to progressively scale IC design complexity from small-scale integration (SSI) to very large-scale integration (VLSI) and to what we now know as systems on chip (SoCs).

Photonics is now in a state similar to that of the IC industry in the early 1980s. The industry wants to integrate photonic components onto a common substrate and to bridge them with their electrical counterparts, either monolithically on the same die or on a separate die within the same package. As with IC design in the early 1980s, there is now a need to codify design methodologies and to put in place the industry ecosystems needed to support the scaling, both technical and economic, of integrated photonic design, manufacturing, and testing.

The good news for the industry is that engineers can leverage much of the infrastructure and learning that has gone into the electronics IC industry over the last 30 years.

4.2 Photonic Integrated Circuit Design Methodologies and Flows

Integrated photonic circuit design follows much the same methodology and flows as traditional electrical analog circuit design. However, the photonic design process still has a weak point: the lack of a successful circuit-like, schematic-capture approach. Even today, many photonic engineering teams with considerable design expertise start from the layout. Unlike in digital electrical design, the circuit cannot easily be abstracted into simple logic gates synthesized from a high-level design language. Instead, the circuit is envisioned at the system level and usually modeled at that level with tools like MATLAB [2], C-coded models, Verilog-A [3], or scattering matrices. Once an engineer designs the high-level function, it is up to the optics designer to partition the design and map it onto photonic components realized in the integrated circuit. This mapping is typically manual and involves many trade-offs based on the material and on the fabrication facility that will manufacture the product. Eventually, the design is captured and simulated with the physical effects of the material taken into account. After the physical design is complete, the design is checked for compliance with the manufacturing design rules and then passed to the fab in the industry-standard GDSII format.

4.2.1 Front End Versus Building Blocks Methodology

As with electronic design of the late 1970s, it is not uncommon for photonic designers to bypass logic capture and go straight to physical design of individual components. In many cases, each component is laid out and simulated with mode solvers and propagation simulators to ensure that the component’s design meets the designer’s intent. These steps repeat for each component and then the designer places and routes the entire circuit together and resimulates with a circuit simulator using compact models for the components derived from the physical simulations or measurements.

As explained in the introduction, many designers continue to start from layout (front end). But as the photonic circuit becomes larger and more complex, it becomes essential to start from the circuit or logic level (using photonic building blocks to construct a photonic circuit), where system specifications dictate how to build the circuitry. Specialization occurs: some photonic designers focus on the physical properties of single components (e.g., MMIs, waveguide bends, MZIs, splitters, rings, etc.) and perform physical simulations. Others concentrate on the logic level, where circuit simulations use compact models.

Circuit simulation is possible because, unlike in RF design, most building blocks are larger than the wavelengths of interest. In most cases, this allows the building blocks to be separated logically. Optical waveguides connect the building blocks and act as a dispersive medium.

4.2.2 Process Design Kit Driven Design

It was at the end of the 1980s and in the early 1990s that integrated photonics research started to surface and become visible to a wider audience. Materials like polymers, doped glass, and dielectric thin film materials like silicon oxide and nitride were dominant at that time. This new emerging field was called integrated optics, studying lightwave devices or planar lightwave circuits (PLC). As a result of these research activities, a need emerged for proper design software focused on the simulation of mostly passive optical structures at the micrometer scale and on the mask layout for the actual fabrication of the structures and devices. This was reflected in the start of an annual conference on Optical Waveguide Theory and Numerical Modeling (OWTNM) in 1992, and in the first commercial activities for design services and simulation tools (BBV [today PhoeniX Software] in the Netherlands in 1991 and PhotonDesign in the United Kingdom in 1992).

As with electronic design moving into the 1980s, the optical design flow is now giving way to a more traditional flow like that used in electrical analog design. Since the early 1990s, photonic IC design software has developed into what is available today: a set of more or less integrated solutions from a variety of vendors covering different levels of what is called the photonics design flow (PDF) or photonics design automation (PDA). The software tools required for a full PDF or PDA include circuit simulators, mask layout tools, measurement databases, and design rule checkers. They also include physical modeling tools such as mode solvers and propagation simulators.

From the beginning, the designers in the field of integrated photonics have been working with a bottom-up approach, starting with the fabrication technology and materials and taking these as a starting point to develop integrated photonic devices. With the introduction of more standardized and generic fabrication processes since 2005 and the resulting creation of process design kits (PDKs, also called physical design kits) (see Sect. 4.5.3), a mixed design approach has evolved in which one group of designers develops the contents of the PDKs and a second group uses these PDKs in a top-down approach starting from the system or circuit level.

More fabrication facilities are becoming available for complex photonic designs. The fab-provided PDKs contain documentation, rule “decks” for verification software, process information, technology files for software, a library of basic devices validated for the process (referred to as “PDK cells”), and layout guidelines for designing custom devices (further referred to as “user cells”). The fabrication facility develops and maintains these PDKs, which include plugins for commercial CAD tools. Depending on the application and the completeness of the foundry PDK, photonic designs make heavy use (like digital IC design) or light use (like analog IC design) of PDK cells relative to user cells. Nevertheless, compared with the digital IC design flow, today's fab-provided photonic PDKs address few aspects of a complete design flow and contain a limited device library.

Once designers choose a technology platform, they must obtain the PDK for that technology, which provides everything needed for the physical design of the chip, including custom device design. For example, each technology specifies a recommended typical and a minimum bend radius for waveguides (below the minimum, the bend causes sizable waveguide loss). Similarly, it specifies process tolerances, such as the maximum deviation of the waveguide core width, which can be ±1 nm. The designer must take all these rules and guidelines into account when laying out custom cells. The library of validated cells, on the other hand, allows for top-down design: compact models let the designer connect the higher-level circuit design to the devices available in the technology of interest without resorting to electromagnetic or device simulation.
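To make these rules concrete, the following Python sketch checks the bends of a custom cell against a PDK's bend-radius rules and derives the core-width corners at which the cell could be simulated. The `PdkRules` class, its field names, and all numeric values are hypothetical; a real PDK delivers such rules as rule decks and technology files for its CAD tools, not as Python objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PdkRules:
    """Hypothetical subset of the rules a photonic PDK might publish."""

    min_bend_radius_um: float       # below this, bend loss becomes sizable
    typical_bend_radius_um: float   # recommended radius
    core_width_tolerance_nm: float  # maximum deviation of the core width


def check_custom_bends(radii_um, rules):
    """Return one message per bend that violates or undercuts the rules."""
    messages = []
    for i, r in enumerate(radii_um):
        if r < rules.min_bend_radius_um:
            messages.append(
                f"bend {i}: radius {r} um below minimum {rules.min_bend_radius_um} um"
            )
        elif r < rules.typical_bend_radius_um:
            messages.append(
                f"bend {i}: radius {r} um below recommended {rules.typical_bend_radius_um} um"
            )
    return messages


def width_corners_nm(nominal_nm, rules):
    """Minimum and maximum core widths the custom cell should be simulated at."""
    tol = rules.core_width_tolerance_nm
    return nominal_nm - tol, nominal_nm + tol


# Invented example values, not taken from any real PDK.
rules = PdkRules(min_bend_radius_um=3.0, typical_bend_radius_um=5.0,
                 core_width_tolerance_nm=1.0)
report = check_custom_bends([10.0, 4.0, 2.0], rules)
for msg in report:
    print(msg)
print(width_corners_nm(500.0, rules))  # (499.0, 501.0)
```

The split between a hard limit (error) and a recommendation (warning) mirrors the distinction between the minimum and the typical bend radius described above.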

Photonic PDKs contain various cells and templates, proven to work for a certain wavelength. Here are a few examples:

- [fixed cell] A grating coupler, used to couple light vertically between an optical fiber and the chip. Different cells may exist, each designed and tested for a different wavelength range.
- [fixed cell] A 3 dB splitter, used to split input light equally into two channels (50 %/50 %).
- [templates] Waveguide templates, which specify the cross section of a waveguide (i.e., the thickness of the core and slab regions), typically designed to offer minimal loss.
- [fixed cell] A high-speed PN junction-based MZI modulator.
- [custom cell] Typical custom cells implement filtering and processing of the optical signals. A widely used custom cell, or parameterized photonic building block, is the arrayed waveguide grating (AWG). An AWG acts as a MUX or DEMUX and can contain hundreds of waveguide sections.

The PDK-supplied rule decks for DRC and LVS enable the designer to verify the design against the design rules and to ensure consistency between schematic and layout before sending the design to the fab.

Several key items in the above description are still under active development. In particular, today’s silicon photonic PDKs most often lack compact models for devices, and LVS for photonics is still being developed, although a few early rule decks exist.

In conclusion, PDKs allow the user to carry out the physical implementation of the design, based on building blocks made available by the fab combined with custom designed cells based on given design rules.

4.2.3 Overview of Flow—Comparison to Analog Design Flow

With the advent of predefined photonic components and multiple foundries, the design flow and methodologies used to create photonic integrated circuits (PICs) have become directly analogous to electrical analog design. The foundry collects the predefined components into a PDK that it develops and maintains for each supported photonic process. Once a PDK is installed, the photonics designer can select PDK cells, instantiate and place multiple copies of them, adjust their parameters where provided, and connect them with waveguides. As designs become more complex, the PIC designer can use hierarchy, which allows blocks or “cells” of connected custom components with well-defined interfaces to be copied and reused throughout a design. These user cells can be documented and stored for reuse by other designers. In the electrical design world, such a collection of cells is typically called a library. A PDK is a distinct type of library: it is a set of programmable cells (pcells) that come directly from the foundry and are already pre-characterized.

Furthermore, designers are now working with more third-party foundries to make predefined, characterized components available that are tuned for the selected foundry process. Unlike in digital design, these components are not static; they are parameterized so that the optics designer can dial in certain parameters to meet design requirements. Once the parameters are set, the programmable device automatically generates the component layout along with a compact model used for circuit-level simulation. As in electrical analog design, these programmable components also check that the input parameters fall within the ranges for which the compact models are valid. The idea is to use as many of these predefined components as possible to reduce the risk and time required to create detailed, fab-compliant layouts and fab-qualified models. Such libraries are being developed by photonics designers or CAD tool vendors in collaboration with the fab.
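The following sketch illustrates the idea of a programmable component in Python. A single parameter drives both the generated layout and the compact model, and the cell refuses values outside its validated range. The function `directional_coupler_pcell`, the constants, and the simple sin² coupling model are assumptions made for illustration, not any foundry's actual pcell.

```python
import math
from dataclasses import dataclass

# Invented characterization data for one hypothetical foundry process.
VALID_LENGTH_UM = (1.0, 50.0)  # range over which the compact model is valid
KAPPA_PER_UM = 0.05            # assumed coupling coefficient at the fixed gap
GAP_UM = 0.2                   # fixed gap at which KAPPA_PER_UM was characterized
WIDTH_UM = 0.5                 # waveguide width


@dataclass(frozen=True)
class CouplerInstance:
    length_um: float
    polygons: list          # layout: rectangles (x0, y0, x1, y1) in um
    power_coupling: float   # compact model: fraction of power that crosses over


def directional_coupler_pcell(length_um):
    """Generate layout and compact model from the same parameter value."""
    lo, hi = VALID_LENGTH_UM
    if not lo <= length_um <= hi:
        raise ValueError(f"length_um={length_um} is outside the validated range {lo}-{hi} um")
    # Layout: two parallel straight waveguides separated by GAP_UM.
    lower = (0.0, 0.0, length_um, WIDTH_UM)
    upper = (0.0, WIDTH_UM + GAP_UM, length_um, 2 * WIDTH_UM + GAP_UM)
    # Compact model: lossless coupled-mode result, cross power = sin^2(kappa * L).
    cross = math.sin(KAPPA_PER_UM * length_um) ** 2
    return CouplerInstance(length_um, [lower, upper], cross)


# Dial in a 3 dB coupler: kappa * L = pi / 4.
half = directional_coupler_pcell(math.pi / (4 * KAPPA_PER_UM))
print(round(half.length_um, 2), round(half.power_coupling, 3))  # 15.71 0.5
```

Generating both views in one function keeps the layout and the model from drifting apart; a production pcell would likely also have to account for the gap, wavelength, and loss.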

4.2.3.1 Schematic-Driven Layout

With the increase in complexity, many designers wish to capture their circuit at the logic level before spending a considerable amount of time creating layout. This desire is directly analogous to analog design in the electrical world. Instead of jumping right to layout after system design, the designer captures the design in a schematic abstraction of the circuit. The symbols used in the schematic have a direct one-for-one relationship with the programmable components used during layout. The CAD flow automatically makes a connection between the logic symbols and the correct compact models for circuit simulation. The symbols are placed and connected together with abstractions for the waveguides, ideal parameters are set on the components and waveguides in the schematic, and then the circuit is simulated. The designer iterates on the design, changing parameters on components, adding or removing components, and resimulating until all design objectives are met.

The benefit of working at this abstracted level is that it allows the designer to focus on getting the circuit to function as desired without getting bogged down in the details of layout design rules, layout placement, and the shaping of individual waveguides, all of which can be very time-consuming. The idea is to avoid spending too much time crafting placements and waveguides only to find out that the basic circuit still does not function.

Once the PIC designer is satisfied that the design is converging, the layout process can begin. Over the years, electrical designers have learned that it is best to use a methodology known as schematic-driven layout (SDL). SDL employs a correct-by-construction (CBC) methodology in which the CAD tools do not allow the designer to lay out and connect anything that does not match the connectivity of the schematic design.
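A correct-by-construction connection check can be sketched in a few lines of Python: the layout side accepts only waveguides that correspond to a connection in the schematic, and it reports which schematic connections are still unrouted. The class name and the (instance, port) representation are hypothetical and not taken from any particular CAD tool.

```python
class SchematicDrivenLayout:
    """Sketch of a correct-by-construction (CBC) connection check.

    The schematic connectivity is a list of port pairs; each port is an
    (instance, port_name) tuple. The layout side refuses any waveguide that
    does not correspond to one of these schematic connections.
    """

    def __init__(self, schematic_connections):
        # Unordered pairs, so routing A->B and B->A are treated the same.
        self._allowed = {frozenset(pair) for pair in schematic_connections}
        self._routed = set()

    def route_waveguide(self, port_a, port_b):
        pair = frozenset((port_a, port_b))
        if pair not in self._allowed:
            raise ValueError(f"{port_a} -> {port_b} is not a schematic connection")
        self._routed.add(pair)

    def unrouted(self):
        """Schematic connections that have no waveguide in the layout yet."""
        return self._allowed - self._routed


schematic = [
    (("gc_in", "o1"), ("mmi1", "in")),
    (("mmi1", "out1"), ("mmi2", "in1")),
    (("mmi1", "out2"), ("mmi2", "in2")),
]
sdl = SchematicDrivenLayout(schematic)
sdl.route_waveguide(("mmi1", "out1"), ("mmi2", "in1"))
try:
    sdl.route_waveguide(("mmi1", "out1"), ("mmi2", "in2"))  # crossed arms
except ValueError as err:
    print("rejected:", err)
print(len(sdl.unrouted()), "connections left to route")  # 2 connections left to route
```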

SDL also ensures that all parameters used during schematic design are used to generate the programmable components, so there are no surprises caused by accidentally using the wrong parameters on the optical layout components. At this stage, we want the designer focused on the best possible placement of the components, one that allows straightforward connections with the necessary waveguides and avoids interference between neighboring components.

Because the designer works in a confined 2-D environment, some parameters or constraints forward-annotated from the schematic to the layout will most likely be physically impossible to meet; these could involve, for example, nonverified user cells. When this happens, the CAD flow must allow the designer to adjust parameters in the layout and then back-annotate these changes into a view of the schematic that can be resimulated.

4.2.3.2 Design for Test and Manufacturability

At the current stage of integrated photonics, design for test (DFT) and design for manufacturing (DFM) have not been well addressed. Most fabrication facilities have limited DFT sites to test the functionality of PDK cells for wafer qualification. As silicon photonic circuit design complexity increases, netlist-driven automated generation of DFT sites will become increasingly important; DFT development will follow once a mature SDL environment is in place. DFM in silicon photonics today consists only of basic design verification: rules for the shapes of polygons within a process layer (width, space, diameter, etc.) and rules for alignment between process layers (overlaps, enclosures, etc.). This level of maturity is sufficient for checking the manufacturability of a design but not its yield, functionality, or reliability. (In commercial manufacturing, mainly of PLC and InP devices, these capabilities are already in place.)

As in electronic design, DFT and DFM will eventually become required specialty fields for integrated photonics, especially as design complexity continues to grow. DFM will be of particular interest to photonic designers, as subtle changes in the process can have dramatic effects on the performance and functionality of photonic components. As the integrated photonics industry matures, additional work to characterize sources of yield loss will be required, along with methods that let optics designers make their designs robust to process variations.

4.2.3.3 Design Sensitivity

For interferometric devices, such as ring resonators and Mach–Zehnder interferometers, the phase relationship between two waveguide paths determines the overall behavior of the device. This is comparable to RF design, where the exact length and shape of a wire strongly affect the overall behavior.

Moreover, fabrication tolerances are very strict. A 1 nm change in waveguide width can shift the filter response by 1 nm [4]. At a wavelength of 1.55 μm, a 1 nm shift corresponds to a frequency shift of about 125 GHz, enough to span more than one channel in a typical wavelength division multiplexing (WDM) device. The same holds for temperature: a change of roughly 12 K causes the same 1 nm shift [5].
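The 125 GHz figure follows from the first-order relation Δf ≈ c·Δλ/λ². The short Python check below reproduces it. The 100 GHz channel spacing used for comparison is an assumption (a common dense-WDM grid spacing), and the per-kelvin values are simply the text's 1 nm per 12 K rescaled.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def frequency_shift_hz(wavelength_m, wavelength_shift_m):
    """First-order conversion: |delta f| = c * |delta lambda| / lambda**2."""
    return C_M_PER_S * wavelength_shift_m / wavelength_m ** 2


df = frequency_shift_hz(1.55e-6, 1e-9)
print(f"{df / 1e9:.0f} GHz")  # 125 GHz

# Assumption: a dense-WDM grid with 100 GHz channel spacing.
print(f"{df / 100e9:.2f} channel spacings")  # 1.25 channel spacings

# The text's thermal figure (about 1 nm per 12 K) rescaled per kelvin.
print(f"{1e-9 / 12 * 1e12:.0f} pm/K, {df / 12 / 1e9:.1f} GHz/K")
```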

Because these tolerances are a real challenge for photonic chips that contain interferometric devices, designs can be made that compensate for them [6]. Good control over the waveguide line shape in the fabrication process, together with clever engineering, can reduce the risk of missing the targeted specifications. For more information, see Sect. 4.3.1 on silicon photonics fabrication processes.

4.2.4 Schematic Capture

A schematic is an abstracted view of the circuit at the logic level. The objects placed in the schematic can come directly from a foundry PDK or can be symbols created by the designer to represent deeper levels of design hierarchy. Schematics serve many purposes. They capture the implemented logic as well as the design intent. Design intent can take many forms, but the most common is simple textual notes that record the designer’s assumptions. Designers also annotate their schematics with instructions for how the associated layout should handle various components. In both the electrical and photonic domains, designers annotate parameters on instances of schematic components; these parameters are used both for simulation and for generating the layout of the actual component.

More advanced schematic capture packages also allow the designer to represent repeated structures in very compact forms, like buses and “for loops” that contain circuit components. Schematics are meant to be printed, and when circuits get too large, they cannot be represented on a single sheet. Special annotations allow the designer to continue the design on additional pages of the same schematic hierarchy. Similarly, conventions can simplify the drawing so that components on opposite sides of the schematic are connected by name instead of by a routed line. The idea is to make capturing the logic easy so that the designer can get to simulation and debugging of the circuit behavior quickly: the less drawing and the more debugging, the better.

To deal with hierarchy, the CAD tools use the concept of pins and ports on components to keep track of connections between levels of hierarchy and from page to page. At a given level of the hierarchy, “ports” are defined as the interface of the cell being designed. Once the cell is complete, the CAD tools can automatically create an abstracted symbol for it. The symbol has “pins”, which are its connection points, and each pin on the symbol corresponds one-for-one to a port in the schematic. Typically, pins and ports are associated by name. Placing the new symbol in a library, instantiating it, and using it at other levels of the design hierarchy lets the designer abstract the design at any level into something meaningful to anyone else who reads the schematic.
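The pin/port bookkeeping described above can be sketched in Python as follows. A symbol is generated from a schematic's ports, reused one level up in the hierarchy, and then checked against the schematic after the schematic's interface has changed. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Symbol:
    cell: str
    pins: tuple  # connection points of the symbol


@dataclass
class Schematic:
    name: str
    ports: list                                    # interface of this cell
    instances: dict = field(default_factory=dict)  # instance name -> Symbol


def make_symbol(schematic):
    """Auto-generate a symbol with one pin per schematic port, matched by name."""
    return Symbol(cell=schematic.name, pins=tuple(schematic.ports))


def pin_port_mismatch(symbol, schematic):
    """Pins without a port and ports without a pin; both empty when in sync."""
    pins, ports = set(symbol.pins), set(schematic.ports)
    return sorted(pins - ports), sorted(ports - pins)


mzi = Schematic("mzi", ["opt_in", "opt_out", "heater"])
mzi_symbol = make_symbol(mzi)

top = Schematic("transmitter", ["laser_in", "fiber_out"])
top.instances["mzi0"] = mzi_symbol   # reuse the abstraction one level up

mzi.ports.append("monitor")          # schematic edited after the symbol was made
print(pin_port_mismatch(mzi_symbol, mzi))  # ([], ['monitor'])
```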

4.2.4.1 Interface to Simulation and Analysis

In both the electronic and photonic domains, designers employ circuit simulators to verify and debug the function of their designs. The schematic captures the circuit in a form that the simulator can use through steps known as elaboration and netlisting. A netlist is a non-graphical (and typically human-readable) representation of the connectivity of the design. Netlists can be hierarchical or flat and typically carry the property information needed by downstream tools like simulators and layout generators. Elaboration is the step that unwinds the higher levels of abstraction into the specific instances that will be simulated and generated in the layout. An example of this in electronic design is the multiplier factor, or “M factor”, parameter [7]. A transistor symbol with an M factor of 4 means that the designer will actually get four transistors for the one abstracted symbol in the schematic. These four transistors are connected in parallel, and each has the same properties as assigned to the symbol on the schematic. The elaboration step expands the one transistor into four in the netlist and makes sure that all of the appropriate properties are on each of the new instances.
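The M-factor expansion performed during elaboration can be sketched as a small Python function over a dictionary-based netlist. The netlist format and the `_0`, `_1`, ... naming of the expanded copies are assumptions for illustration; real netlisters and simulators have their own conventions, and some pass the multiplier to the device model instead of expanding it.

```python
def elaborate(netlist):
    """Expand every instance with an 'm' property into m parallel copies.

    Each copy keeps the same net connections (so the copies are in parallel)
    and all other properties; the 'm' property itself is consumed.
    """
    flat = []
    for inst in netlist:
        m = int(inst["props"].get("m", 1))
        props = {k: v for k, v in inst["props"].items() if k != "m"}
        for i in range(m):
            flat.append({
                "name": inst["name"] if m == 1 else f"{inst['name']}_{i}",
                "cell": inst["cell"],
                "nets": dict(inst["nets"]),
                "props": dict(props),
            })
    return flat


netlist = [{
    "name": "M1",
    "cell": "nmos",
    "nets": {"d": "out", "g": "in", "s": "gnd", "b": "gnd"},
    "props": {"w_um": 1.0, "l_um": 0.045, "m": 4},
}]
for inst in elaborate(netlist):
    print(inst["name"], inst["nets"]["d"], inst["props"])
```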

In addition to the netlist, simulators also need a variety of inputs from the user. These include the identification of circuit input and output terminals for the simulation, of signals that the designer wishes to monitor in a waveform viewer, and of other analysis points that the user may want the simulator to monitor and output for debug purposes. The user interface for this is typically integrated with the schematic, so that the designer can identify circuit nodes graphically instead of typing in terminal names. The CAD tools also have graphical interfaces that let designers map the symbols to different levels of simulation models, allowing for mixed-level simulations. Not all simulators are capable of this, so the CAD tools typically have dialog boxes that are unique to the chosen simulator. This user interface allows the designer to use the same schematic capture system for high-level system design, circuit design, and mixed-mode simulations in which parts of the circuit are still at the behavioral level while other areas are at the component level.

In more advanced design flows, the netlister and elaborator are also responsible for forward-annotating the constraints captured by the designer in the schematic onto the layout generation tools. The netlister and elaborator take care of all the internal connections that must be made between the different editing tools, so that the designer can focus on design rather than on tool integration complexities.

4.2.4.2 Matching Simulation to Layout

A general methodology used by electrical designers is to design the analog circuit first as an ideal circuit. Here, “ideal” means that the designer debugs the circuit without taking into account the physical effects of component placement and the parasitics due to routing. The circuit is then simpler, and it should be easier to tune the design to get the desired response. The same is true in photonics design. Wires connect the major components in the schematic, and these wires represent waveguides in the layout. The designer sets the parameters of the abstracted components and waveguides in the schematic and then uses the simulator to bring the circuit to the desired behavior.

Once this step is close to completion, the designer moves to layout. The parameters used in the ideal circuit are forward-annotated to the layout system, where a CBC layout methodology tries to meet the designer’s original assumptions. Presumably, if the layout meets those assumptions, then the post-layout simulations of the virtual layout should match the simulations of the ideal circuit. Matching the original circuit simulation and the post-layout simulation can be challenging, especially in photonics, because the phase has to be controlled precisely (e.g., in Mach–Zehnder interferometers and ring resonators). Phase is a function of the topology and materials of the components and waveguides, which the designer does not yet know at schematic capture time. The CBC methodology proposes passing the phase relationships on to the layout tools, which then try to construct the components and waveguides so that these relationships are maintained. Depending on the overall circuit, this may not be possible, in which case some of the parameters of the components and waveguides must be changed to accommodate the physical constraints of the area allowed for the layout. When this happens, the CAD tools must provide a mechanism to back-annotate the changed layout parameters and merge them with the original parameters of the ideal design. This data is stored as a revision of the netlist so that the original ideal circuit description is not lost. The revised netlist can then be run against the same simulation test benches used to debug and characterize the ideal circuit.
The designer then compares the results of the ideal circuit and the virtual layout, and any issues caused by the changes must be resolved, either by changing the layout or by making the logic circuit more robust to the physical effects, ultimately iterating on the design.
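A minimal Python sketch of back-annotation, assuming a netlist stored as a dictionary of instance parameters: the layout-derived values are merged into a deep copy, so the ideal description survives alongside the revision, and the resulting change in the phase difference between two Mach–Zehnder arms is computed from φ = 2π·n_eff·L/λ. The effective index of 2.4, the wavelength of 1.55 µm, and the 0.2 µm length change are illustrative assumptions.

```python
import copy
import math


def waveguide_phase(length_um, n_eff, wavelength_um):
    """Propagation phase in radians: 2 * pi * n_eff * L / lambda."""
    return 2 * math.pi * n_eff * length_um / wavelength_um


def back_annotate(netlist, layout_params):
    """Return a new netlist revision with layout-derived parameters merged in.

    The input netlist is deep-copied, so the ideal description is not lost.
    """
    revision = copy.deepcopy(netlist)
    for inst_name, params in layout_params.items():
        revision[inst_name].update(params)
    return revision


def arm_phase_difference(netlist, n_eff=2.4, wavelength_um=1.55):
    """Phase difference between the two MZI arms (assumed n_eff and lambda)."""
    d_len = netlist["arm2"]["length_um"] - netlist["arm1"]["length_um"]
    return waveguide_phase(d_len, n_eff, wavelength_um)


ideal = {"arm1": {"length_um": 100.0}, "arm2": {"length_um": 110.0}}
# Suppose the router could only fit arm2 with an extra 0.2 um of length.
post_layout = back_annotate(ideal, {"arm2": {"length_um": 110.2}})

error = arm_phase_difference(post_layout) - arm_phase_difference(ideal)
print(f"phase error introduced by layout: {error:.2f} rad")
```

Even a 0.2 µm change produces a phase error of nearly 2 rad in this example, which is why the revised netlist has to be resimulated rather than assumed equivalent.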

4.2.5 Photonic Circuit Modeling

Numerical modeling is an essential part of any modern circuit design, whether electrical or photonic. A designer performs simulations to optimize the circuit and ensure that it will meet its performance requirements. Also, circuit simulation can provide information on circuit yield if the effects of realistic manufacturing imperfections can be correctly taken into account, allowing the designer to optimize the circuit for manufacturability. The key requirement of circuit simulation is that it must be predictive, or in other words, that the results of the circuit simulation agree to sufficient accuracy with the actual circuit performance after manufacturing.
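As an illustration of simulation-based yield estimation, the Python sketch below runs a Monte Carlo analysis on a filter whose center wavelength shifts with waveguide-width error. It assumes normally distributed width errors and reuses the roughly 1 nm/nm width sensitivity quoted in Sect. 4.2.3.3; the width spreads and the ±0.5 nm specification window are hypothetical.

```python
import random


def estimate_yield(n_samples, width_sigma_nm, sensitivity_nm_per_nm,
                   spec_window_nm, seed=0):
    """Monte Carlo yield for a filter whose center wavelength shifts linearly
    with waveguide-width error; a sample passes if |shift| <= spec_window_nm."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    passed = 0
    for _ in range(n_samples):
        width_error_nm = rng.gauss(0.0, width_sigma_nm)
        shift_nm = sensitivity_nm_per_nm * width_error_nm
        if abs(shift_nm) <= spec_window_nm:
            passed += 1
    return passed / n_samples


# Assumed numbers: 1 nm/nm sensitivity (from the text), +/-0.5 nm spec window.
for sigma in (1.0, 0.25):
    y = estimate_yield(100_000, sigma, 1.0, 0.5)
    print(f"width sigma {sigma} nm -> yield {y:.1%}")
```

For this linear, Gaussian case the answer is also available analytically (about 38 % and 95 %), but the sampling approach extends directly to nonlinear compact models and correlated process parameters.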

4.2.5.1 Electronic Simulations Using SPICE

For electronic circuits, SPICE is the de facto standard for simulating linear electrical circuits built from resistors, inductors, and capacitors (RLC circuits). Moreover, there are many methods for modeling nonlinear devices, such as diodes and transistors, for example by linearizing them around an operating point. A SPICE tool can then simulate the small-signal frequency-domain or (transient) time-domain behavior of the circuit. At a higher level of abstraction, Verilog-A can be used to describe the input–output relationship of an arbitrary component, and at the system level, IBIS can be used to model communication links with SerDes devices [8]. Advanced simulation strategies can then interpret all this information seamlessly and perform a coherent simulation.

4.2.5.2 Electronic Versus Photonic Simulation

Similar to electronic circuit simulation, photonic circuit simulation commonly requires both transient and scattering data analysis to model signal propagation in the time and frequency domains. One approach has therefore been to reuse circuit simulation algorithms originally designed for electrical circuits, such as SPICE [9]. However, because photonic circuits are physically described by wave phenomena, it is not straightforward to map them onto a SPICE-like formalism, which assumes that the wavelength of the information carrier is much larger than the components, so that they can be treated as lumped elements. Typical photonic circuits consist of several building blocks that are themselves often larger than the signal wavelengths. The signal wavelengths range from the visible to the infrared (roughly 700 nm–10 µm, with 1.3 µm and 1.55 µm the most commonly used bands for data and telecom applications), whereas individual building blocks can occupy ~10–1000 μm², depending on the technology.

Unlike electrical circuit simulators, photonic circuit simulators must take into account the complex nature of light, including the optical signal’s polarization, phase, and wavelength. The optical simulation and analysis of PICs is particularly challenging because it involves bidirectional, multichannel, and even multimode propagation of light, and because waveguide connections between consecutive components require specialized treatment, unlike electrical traces [10]. In addition, photonic circuit components often involve both electrical and optical signals, and there is still little standardization for performing the necessary mixed-signal simulations in the time domain. Lasers, modulators, couplers, filters, and detectors are just a few of the components present in a complete PIC. Each of these components has different operating principles that depend strongly on the particular process, technology, and physical geometry. Photonic circuit simulators therefore must rely on proper compact models, calibrated for a particular foundry process, that accurately represent the optical and electrical responses of these components in the time and frequency domains.

4.2.5.3 Photonic Circuit Simulation

Photonic building blocks or components are linked using waveguides that guide optical signals. Waveguides are represented as delay lines that add delay and phase to the signal. To complicate matters, both the group delay and the phase delay can depend strongly on the signal wavelength, and multiple wavelength carriers can be used simultaneously in the same waveguide circuit.
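As a minimal sketch of such a delay-line model, assuming a single mode and polarization, an `exp(-j*phase)` sign convention, and an effective index linearized around a reference wavelength through the group index (from n_g = n_eff − λ·dn_eff/dλ), the transmission of a waveguide can be written as below. The function names and the silicon-wire values (n_eff = 2.4, n_g = 4.2, 2 dB/cm) are illustrative assumptions, not data from this chapter.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum (m/s)


def waveguide_transmission(wavelength, length, n_eff0, n_g, wavelength0, loss_db_per_cm):
    """Complex field transmission of a single-mode waveguide treated as a delay line.

    The effective index is linearized around wavelength0 using the group index,
    n_eff(lambda) ~ n_eff0 - (n_g - n_eff0) * (lambda - wavelength0) / wavelength0,
    so the phase delay depends on wavelength. Lengths and wavelengths are in meters.
    """
    n_eff = n_eff0 - (n_g - n_eff0) * (wavelength - wavelength0) / wavelength0
    phase = 2 * math.pi * n_eff * length / wavelength   # phase delay (rad)
    loss_db = loss_db_per_cm * length * 100.0            # convert length m -> cm
    amplitude = 10 ** (-loss_db / 20.0)                  # field amplitude, not power
    return amplitude * cmath.exp(-1j * phase)            # exp(-j*phase) sign convention


def group_delay(length, n_g):
    """Group delay (s) of the signal envelope: tau_g = n_g * L / c."""
    return n_g * length / C


# Hypothetical silicon-wire values: 1 mm long, 2 dB/cm propagation loss.
t = waveguide_transmission(1.55e-6, 1e-3, 2.4, 4.2, 1.55e-6, 2.0)
print(abs(t) ** 2)               # power transmission, 10**(-0.02) ~ 0.955
print(group_delay(1e-3, 4.2))    # ~1.4e-11 s (about 14 ps)
```

The phase and the group delay are deliberately separate: the phase sets interference in circuits such as interferometers, while the group delay sets how fast modulated data propagates.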

In a photonic circuit simulation, each component (including waveguides) is represented by a black-box model with input and output ports, and a linear or nonlinear model describes the relationship between the ports. The larger photonic circuit is the collection of these building blocks, connected at their ports with a given topology. These connections are purely logical (Figs. 4.1, 4.2, 4.3 and 4.4).

Designers implement the building block model description in different ways: purely linear and frequency-domain based, or a more complex description for time-domain and/or nonlinear interaction. For the linear part, a matrix formalism can be used. The two most commonly used formats are the following:

(1) Transfer-matrix methods: in this case, the assumption is made that there is a set of input ports and a set of output ports. The output ports of component 1 cascade to the input ports of component 2. This method is simple and easy to understand (and can be easily implemented, e.g., in MATLAB or Python), but it has the drawback that no reflections or feedback loops are possible.

(2) Scattering matrix methods (see Fig. 4.5): here, reflections and feedback loops can be taken into account. As most photonic circuits contain these two effects, this leads to more accurate results. For complex circuitry, it quickly becomes beneficial to adopt the scattering matrix formalism. A mathematical description of how to calculate the system response based on individual scattering matrices can be found in [11].
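The transfer-matrix approach can be sketched in a few lines of Python with NumPy. This example, which assumes lossless 50:50 couplers with the common `[[t, jk], [jk, t]]` convention and a phase shift on the top arm only, cascades coupler, arms, and coupler into a Mach–Zehnder interferometer. Light only flows forward through the chain, which is exactly why reflections and feedback cannot be represented. All function names are hypothetical.

```python
import numpy as np


def coupler(kappa=0.5):
    """Transfer matrix of a lossless 2x2 directional coupler (power coupling kappa)."""
    t, k = np.sqrt(1 - kappa), np.sqrt(kappa)
    return np.array([[t, 1j * k], [1j * k, t]])


def phase_section(phi_top, phi_bottom):
    """Two uncoupled arms; each multiplies its field by exp(-j*phi)."""
    return np.diag([np.exp(-1j * phi_top), np.exp(-1j * phi_bottom)])


def cascade(*matrices):
    """Multiply transfer matrices in the order the light traverses them."""
    total = np.eye(2, dtype=complex)
    for m in matrices:
        total = m @ total  # each later element acts on the output of the previous one
    return total


def mzi_output_powers(delta_phi):
    """Output powers of an MZI with light launched into the top input port only."""
    m = cascade(coupler(), phase_section(delta_phi, 0.0), coupler())
    return np.abs(m @ np.array([1.0, 0.0])) ** 2


print(mzi_output_powers(0.0))     # all power in the cross (bottom) port
print(mzi_output_powers(np.pi))   # all power in the bar (top) port
```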

Time domain models that take nonlinearities into account can augment both methods. The main advantage of this approach is the natural representation of variables such as temperature, carriers (e.g., the plasma dispersion effect), and resonator energy (for coupled mode theory models), making it simpler to interpret these models.

When combining photonic circuits with electronic circuits, it is not trivial to reconcile the two different simulation strategies. One approach is to reduce the photonic model to a description that a designer can implement in Verilog-A and then simulate with an electronic circuit simulator. This approach requires that photonic quantities such as optical power and phase be mapped onto native Verilog-A variables. Moreover, more elaborate aspects of the photonic model, such as multiwavelength behavior and polarization, are either ignored or must be simulated as a parameter sweep. Such a compact model can work well in a small operating region (i.e., for a fixed wavelength, temperature, and voltage). As long as the model satisfies the needs of a particular application (e.g., single-wavelength optical interconnects), this approach can be used for the co-simulation of photonics and electronics. The challenge is to find a good compact model that is valid over the whole operating region of the circuit. This simulation strategy presents the lowest barrier for electronic engineers entering the field of photonics, but it has the limitations described above.

It is worth noting that while some electrical simulators support scattering matrices (which can be mapped onto an RLC circuit), the accuracy depends on the shape of the response. Moreover, nonlinearities and reflections are not easy to model accurately.

4.2.5.4 Frequency Domain Analysis

Frequency domain analysis is performed using the same type of scattering analysis used in the high-frequency electrical domain for solving microwave circuits, enabling bidirectional signals to be accurately simulated [12]. This approach can be extended to allow for an arbitrary number of modes in the waveguide, with possible coupling between those modes occurring in any element. Consequently, the scattering matrix of a given element describes both the relationship between its input and output ports and the relationship between its input and output modes. The advantage of frequency domain analysis is that it is relatively standardized. The so-called S-matrices for each component (including possible coupling between modes on the same waveguide ports) are all that is required to perform a frequency domain simulation of the circuit. However, the frequency domain simulation, while very valuable for a broad range of design challenges, is insufficient for most circuits and systems that make use of active components, which require the simulation of both electrical and optical signals.
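To show how S-matrices capture reflections and feedback, the sketch below connects two-port S-matrices using the standard two-port form of what is often called the Redheffer star product; the `1 / (1 - a22·b11)` factor sums all round trips between two elements. A Fabry–Perot cavity built from two partial mirrors and a waveguide section, something a pure transfer-matrix cascade cannot represent, serves as the example. The mirror convention `[[r, jt], [jt, r]]`, the port ordering, and the reflectivity value are assumptions for illustration; general multiport networks need the full formalism described in [11].

```python
import numpy as np


def connect(a, b):
    """Combine two-port S-matrices a and b, with port 2 of a joined to port 1 of b.

    Convention: S[0, 0] = S11 (reflection at the left port), S[1, 0] = S21
    (left-to-right transmission). The 1 / (1 - a22 * b11) factor sums all
    round trips between the two elements, i.e. reflections and feedback.
    """
    loop = 1.0 / (1.0 - a[1, 1] * b[0, 0])
    s11 = a[0, 0] + a[0, 1] * b[0, 0] * a[1, 0] * loop
    s21 = b[1, 0] * a[1, 0] * loop
    s12 = a[0, 1] * b[0, 1] * loop
    s22 = b[1, 1] + b[1, 0] * a[1, 1] * b[0, 1] * loop
    return np.array([[s11, s12], [s21, s22]])


def mirror(r):
    """Lossless, symmetric partial reflector with real field reflection r."""
    t = np.sqrt(1 - r ** 2)
    return np.array([[r, 1j * t], [1j * t, r]])


def waveguide(phi):
    """Reflection-free waveguide section with one-way phase delay phi."""
    return np.array([[0, np.exp(-1j * phi)], [np.exp(-1j * phi), 0]])


def fabry_perot(r, phi):
    return connect(connect(mirror(r), waveguide(phi)), mirror(r))


on = fabry_perot(np.sqrt(0.9), np.pi)           # round-trip phase 2*pi: resonance
off = fabry_perot(np.sqrt(0.9), np.pi / 2)      # anti-resonance
print(abs(on[1, 0]) ** 2, abs(off[1, 0]) ** 2)  # ~1.0 and ~0.0028
```

Because every element here is lossless, the combined matrix also conserves power (|S11|² + |S21|² = 1), which is a useful sanity check on any implementation.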

4.2.5.5 Time Domain Analysis

Unlike frequency domain analysis, time domain analysis has seen little standardization. For time domain analysis, it is necessary to represent multichannel signals in multimode waveguides with bidirectional propagation. The representation of these waveguide modes must not be limited to only two polarization states, which is a clear distinction from many fiber-optic systems. The simulator must also support electrical and digital waveforms alongside optical ones since, for example, an electro-optical modulator must take both electrical and optical inputs to produce a modulated optical output.

One approach is to use a dynamic data flow simulator. When a simulation runs, data flows from the output port of one element to one or more input ports of one or more connected elements. When an element receives data, its compact model applies the element’s behavior to the data and generates the appropriate output. In a time domain simulation, data can be represented as a stream of samples, where each sample represents the value of the signal, whether optical, electrical, or even digital, at a particular point in time. This approach has the flexibility to represent different element behaviors and signal types, which enables the development of compact models that can comprehensively address the variety of active and nonlinear optoelectronic devices present in photonic integrated circuits.
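The data flow idea can be sketched as follows, assuming three hypothetical elements (a CW laser, an idealized push-pull modulator, and a noiseless photodetector) whose `run` methods act as compact models. An element fires once all of its connected inputs have produced data. For simplicity, each firing processes a whole block of samples; the element classes, port names, and connection format are illustrative assumptions, and feedback loops, which would need sample-by-sample scheduling with delays, are not handled.

```python
import numpy as np


class CWLaser:
    """Continuous-wave source: constant complex field with sqrt(P) amplitude."""
    def __init__(self, power_w):
        self.field = np.sqrt(power_w)

    def run(self, n_samples):
        return {"opt_out": np.full(n_samples, self.field, dtype=complex)}


class Modulator:
    """Idealized push-pull Mach-Zehnder: field transfer cos(pi * V / (2 * V_pi))."""
    def __init__(self, v_pi):
        self.v_pi = v_pi

    def run(self, opt_in, elec_in):
        return {"opt_out": opt_in * np.cos(np.pi * elec_in / (2 * self.v_pi))}


class Photodetector:
    """Photocurrent = responsivity * optical power (no bandwidth limit, no noise)."""
    def __init__(self, responsivity):
        self.responsivity = responsivity

    def run(self, opt_in):
        return {"elec_out": self.responsivity * np.abs(opt_in) ** 2}


def simulate(elements, connections, external):
    """connections: (dst, in_port) -> (src, out_port); external: name -> extra kwargs."""
    outputs, pending = {}, set(elements)

    def inputs_of(name):
        return {port: src for (dst, port), src in connections.items() if dst == name}

    while pending:
        ready = [n for n in sorted(pending)
                 if all(src in outputs for src, _ in inputs_of(n).values())]
        if not ready:
            raise ValueError("feedback loop or missing source: not handled in this sketch")
        for n in ready:
            kwargs = {port: outputs[src][out] for port, (src, out) in inputs_of(n).items()}
            kwargs.update(external.get(n, {}))
            outputs[n] = elements[n].run(**kwargs)   # compact model acts on the data
            pending.remove(n)
    return outputs


bits = np.array([0, 1, 1, 0, 1])
samples_per_bit = 4
drive = np.repeat((1 - bits) * 2.0, samples_per_bit)  # '1' -> 0 V (on), '0' -> V_pi (off)

elements = {"laser": CWLaser(1e-3), "mod": Modulator(v_pi=2.0), "pd": Photodetector(1.0)}
connections = {("mod", "opt_in"): ("laser", "opt_out"), ("pd", "opt_in"): ("mod", "opt_out")}
external = {"laser": {"n_samples": drive.size}, "mod": {"elec_in": drive}}

photocurrent = simulate(elements, connections, external)["pd"]["elec_out"]
```

Mixing signal types is straightforward here: the modulator consumes one optical and one electrical stream, and the photodetector turns optical samples back into electrical ones.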

The simplest way to combine this type of simulation with electrical circuit simulation methods such as SPICE is to run separate electrical and optical simulations and exchange waveforms between them; this approach has been demonstrated successfully [13]. In the future, it will likely be necessary to extend this to a full co-simulation approach in which the photonic circuit simulation runs within a larger-scale electronic simulation.

The time domain signal integrity analysis of a circuit under test is performed by analyzing the input or output signals at different ports, which may be electrical, optical, or digital. A typical result from a time domain simulation is the eye diagram, as shown in Fig. 4.6. Analysis of the eye diagram offers insight into the nature of the circuit imperfections. The time domain simulation can calculate eye diagrams, and the resulting analysis can determine key signal integrity parameters such as the bit error rate (BER), the optimum threshold and decision instant, and the extinction ratio. As more complex modulation formats, such as quadrature phase-shift keying (QPSK), become widespread, constellation diagrams become necessary for a complete signal integrity analysis. An example of the eye diagram and constellation diagram from a simulation of an optical QPSK with electrical 16-QAM (quadrature amplitude modulation) system is shown in Fig. 4.8 as part of an example circuit.
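A minimal sketch of how eye-diagram samples can be reduced to these parameters, assuming Gaussian noise on the "1" and "0" levels (a common simplification, not necessarily what any particular simulator does): the Q-factor is (μ1 − μ0)/(σ1 + σ0), the BER estimate is ½·erfc(Q/√2), the optimum threshold is (σ0·μ1 + σ1·μ0)/(σ0 + σ1), and the decision instant is the sampling phase with the highest Q. The sample values below are made up for illustration.

```python
import math
import statistics


def eye_metrics(ones, zeros):
    """Signal-integrity figures from samples taken at one decision instant.

    ones/zeros: received power samples for transmitted '1' and '0' bits.
    Assumes Gaussian noise on each level and nonzero total noise.
    """
    mu1, mu0 = statistics.mean(ones), statistics.mean(zeros)
    s1, s0 = statistics.pstdev(ones), statistics.pstdev(zeros)
    q = (mu1 - mu0) / (s1 + s0)                       # Q-factor
    ber = 0.5 * math.erfc(q / math.sqrt(2))           # Gaussian BER estimate
    threshold = (s0 * mu1 + s1 * mu0) / (s0 + s1)     # optimum decision threshold
    er_db = 10 * math.log10(mu1 / mu0)                # extinction ratio (dB)
    return {"q": q, "ber": ber, "threshold": threshold, "er_db": er_db}


def best_instant(ones_by_phase, zeros_by_phase):
    """Index of the sampling phase within the bit slot that maximizes Q."""
    qs = [eye_metrics(o, z)["q"] for o, z in zip(ones_by_phase, zeros_by_phase)]
    return max(range(len(qs)), key=lambda i: qs[i])


m = eye_metrics([1.0, 1.2, 0.8, 1.0], [0.1, 0.12, 0.08, 0.1])
print(m)  # Q ~ 5.8, ER ~ 10 dB
```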

4.2.6 Model Extraction for Compact Models

The compact models required for PIC modeling must be generated using a combination of experimental results and accurate optical and electrical physical simulations. If the optical component is passive and linear, it suffices to provide a scattering matrix (typically wavelength dependent). Devices with dynamic behavior need more complex models (e.g., an optical phase modulator requires the electrical voltage across the p(i)n diode as an additional input).

In the case of passive components, the scattering matrix can be obtained by performing a full physical simulation (e.g., finite-difference time domain [FDTD]). Alternatively, previously fabricated components can be measured to obtain a wavelength-dependent spectrum.

From the given spectrum, compact models can be made that represent the original component within a given accuracy. When a designer obtains the spectrum from a measurement, measurement noise has to be eliminated to create a useful model. The resulting model must be passive and reciprocal.
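Both properties can be checked per wavelength point. A reciprocal device has a symmetric S-matrix (S = Sᵀ), and a passive one has no singular value above 1. The sketch below also shows one simple repair, clipping singular values to 1 and then symmetrizing. Both steps keep the spectral norm at or below 1 because S and Sᵀ share singular values and the norm is convex. This is an illustrative choice under stated assumptions; production flows often enforce passivity on the fitted rational model over the whole band instead. The "measured" matrix is hypothetical.

```python
import numpy as np


def is_reciprocal(s, tol=1e-9):
    """Reciprocal devices have a symmetric scattering matrix: S == S^T."""
    return np.allclose(s, s.T, rtol=0.0, atol=tol)


def is_passive(s, tol=1e-9):
    """Passive devices never output more power than they receive: all singular values <= 1."""
    return np.linalg.svd(s, compute_uv=False).max() <= 1.0 + tol


def enforce(s):
    """Clip singular values above 1, then symmetrize (keeps the result passive)."""
    u, sv, vh = np.linalg.svd(s)
    clipped = u @ np.diag(np.minimum(sv, 1.0)) @ vh
    return 0.5 * (clipped + clipped.T)


# Hypothetical noisy 2-port measurement at one wavelength: gain > 1 is unphysical.
measured = np.array([[0.10, 1.05], [1.04, 0.10]], dtype=complex)
fixed = enforce(measured)
print(is_passive(measured), is_reciprocal(measured))  # False False
print(is_passive(fixed), is_reciprocal(fixed))        # True True
```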

One example of creating these models is the vector fitting method [14]. With this method, an arbitrary S-matrix is approximated with a set of poles and zeros. Challenges with this method include finding a good filter order and coping with the asymmetric responses of optical filters caused by dispersion.
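Vector fitting typically uses the equivalent pole-residue form, H(s) ≈ Σₖ rₖ/(s − pₖ) + d. The sketch below shows only its linear step: with the poles held fixed, the residues and the constant term follow from an ordinary least-squares solve. The full method also iteratively relocates the poles, which is omitted here. Because baseband-equivalent optical responses need not be conjugate-symmetric in frequency, the sketch allows complex residues without conjugate pairing. The poles, residues, and normalized frequency axis are hypothetical.

```python
import numpy as np


def fit_residues(s, h, poles):
    """Least-squares residues r_k and constant d for h(s) ~ sum_k r_k / (s - p_k) + d.

    Only the linear step of vector fitting: poles are held fixed here, whereas
    the full method iteratively relocates them.
    """
    basis = np.column_stack([1.0 / (s - p) for p in poles] + [np.ones_like(s)])
    coeffs, *_ = np.linalg.lstsq(basis, h, rcond=None)
    return coeffs[:-1], coeffs[-1]


def evaluate(s, residues, poles, d):
    return sum(r / (s - p) for r, p in zip(residues, poles)) + d


s = 1j * np.linspace(-10.0, 10.0, 201)           # normalized frequency axis, s = j*omega
poles = [-0.5 + 2.0j, -0.3 - 4.0j]               # hypothetical stable poles
true_res, true_d = [0.4 + 0.1j, -0.2j], 0.05
h = evaluate(s, true_res, poles, true_d)         # synthetic "measured" response
res, d = fit_residues(s, h, poles)
```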

A second example is to model scattering matrices using FIR filters, which have more degrees of freedom than IIR filters but are computationally more demanding to execute. In the case of active components, the model, characterization, and parameter extraction need to be tailored to each component. For example, a laser can be described using rate equations [15]. Optical phase modulators have a voltage-dependent transmission, which can be described either as a series of steady-state, voltage-dependent scattering matrices or with a more dynamic model in which the transmission depends on the number of free carriers in the p(i)n junction, and an ordinary differential equation (ODE) simulates the number of free carriers. All of these methods are vital when building robust PDKs (see Sect. 4.2.2).
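One simple way to obtain FIR taps from a sampled scattering parameter is frequency sampling: the taps are the inverse DFT of the complex response sampled on the simulation's frequency grid. The sketch assumes a complex baseband signal, samples ordered as `np.fft.fftfreq`, and enough samples to cover the impulse response, since otherwise it wraps around in time. Long impulse responses, such as those of high-Q resonators, need many taps, which is where the computational cost comes from. The delay-line interferometer response is an illustrative assumption.

```python
import numpy as np


def fir_from_response(h):
    """FIR taps whose DFT equals the sampled complex response h (frequency sampling).

    h must be sampled at np.fft.fftfreq(len(h)) (cycles per sample); len(h) must
    exceed the impulse-response length to avoid time aliasing.
    """
    return np.fft.ifft(h)


def apply_fir(taps, x):
    """Causal linear convolution of complex field samples x with the taps."""
    return np.convolve(x, taps)[: len(x)]


# Unbalanced delay-line interferometer with a 2-sample delay:
# H(f) = 0.5 * (1 + exp(-j*2*pi*f*2)), whose ideal taps are [0.5, 0, 0.5].
f = np.fft.fftfreq(8)
taps = fir_from_response(0.5 * (1 + np.exp(-2j * np.pi * f * 2)))
y = apply_fir(taps, np.array([1.0, 0, 0, 0, 0, 0], dtype=complex))
```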

4.2.6.1 Methods and Challenges

Waveguides are an excellent example of components that require a combination of simulated and experimental data. Given the cross-sectional geometry, a mode solver can calculate the effective index, group index, and dispersion. It is also possible to calculate the dependence of these quantities on geometric changes such as waveguide width and height, as well as on external influences such as temperature, electrical fields, or mechanical stress. However, in a well-designed geometry, the waveguide loss comes primarily from sidewall roughness. While it is possible to simulate these losses if enough is known about the root-mean-square (RMS) roughness of the surfaces and its correlation length, in practice it is much easier to use experimental waveguide loss results when creating the compact model.

4.2.6.2 Model Extraction from Physical Simulations

Similarly, the majority of passive component compact models can be calibrated using a combination of simulation results and experimental data. For example, when creating a compact model for a splitter, the insertion loss (IL) may come directly from the experimental data. However, even well-designed splitters have a small but nonnegligible reflection that is challenging to measure directly. In this case, a simulation of the design can provide the reflection and the phase of the S-parameters while confirming the experimental IL data. As with waveguides, the simulation can provide sensitivity analysis, such as the dependence of parameters on waveguide thickness, which may be challenging to obtain experimentally.

4.2.6.3 Compact Models in the Time Domain

Compact models of electro-optical modulators for time domain simulations are much more challenging. Simulating a Mach–Zehnder modulator requires, at a minimum, the waveguide effective index and loss as a function of bias voltage, calculated by a combination of electrical and optical simulations in which the electrical simulations are calibrated against experimental data such as the capacitance-versus-voltage curve. Once calibrated, the model can achieve excellent agreement with experimental results under DC bias [16], as well as with the spectral response under different bias conditions [17].
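A minimal DC sketch of such a model, assuming ideal 50:50 splitter and combiner and linear interpolation of tabulated effective-index change and loss versus voltage (in practice these tables would come from the calibrated electrical and optical simulations just described): each arm contributes a loss factor and a phase of 2π·Δn_eff·L/λ, and the output field is the average of the two arm fields. The voltage table and index values are hypothetical, and high-frequency transmission-line effects are deliberately ignored.

```python
import numpy as np


def mzm_transmission(v_top, v_bottom, volts, dn_eff, loss_db_per_cm, length, wavelength, bias_phase=0.0):
    """DC power transmission of a Mach-Zehnder modulator with ideal 50:50 couplers.

    volts, dn_eff, loss_db_per_cm: tabulated arm data versus bias voltage
    (hypothetical inputs here). length and wavelength in m; bias_phase is an
    extra static phase on the top arm.
    """
    def arm(v):
        dn = np.interp(v, volts, dn_eff)                                 # index change
        loss_db = np.interp(v, volts, loss_db_per_cm) * length * 100.0   # length m -> cm
        return 10 ** (-loss_db / 20.0) * np.exp(-2j * np.pi * dn * length / wavelength)

    field = 0.5 * (arm(v_top) * np.exp(-1j * bias_phase) + arm(v_bottom))
    return np.abs(field) ** 2


volts = [0.0, 1.0, 2.0, 3.0]
dn_eff = [0.0, 1e-4, 2e-4, 3e-4]        # hypothetical, not measured data
lossless = [0.0, 0.0, 0.0, 0.0]
L, lam = 3.875e-3, 1.55e-6              # chosen so that dn = 2e-4 gives a pi phase shift
print(mzm_transmission(0.0, 0.0, volts, dn_eff, lossless, L, lam))  # 1.0
print(mzm_transmission(2.0, 0.0, volts, dn_eff, lossless, L, lam))  # ~0.0
```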

For time domain simulations, the compact model must be able to respond to time-varying electrical and optical stimuli. When driven at higher frequencies, Mach–Zehnder modulators frequently exhibit complex effects that must be accounted for, such as impedance mismatches of the transmission line and the feeds, improper termination of the transmission line, and velocity mismatch between the transmission line and the optical waveguide. To accurately simulate a modulator driven by an electrical bit sequence that contains frequencies from DC to tens of GHz and beyond, the models must be carefully calibrated to account for these effects.

The photodetector responsivity versus bias voltage is often measured experimentally under continuous-wave (CW) illumination, and these data can be used to create the compact model. The high-frequency behavior can be recreated using a filter whose parameters are calibrated against experimental data. Similarly, the dark current is often measured experimentally. The temperature dependence of these quantities can be obtained experimentally if available, or otherwise simulated with a combination of optical and electrical solvers.
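The sketch below is a minimal photodetector model along these lines: photocurrent I = R·P + I_dark, band-limited by a first-order low-pass filter. The single pole is an assumed stand-in for a filter calibrated against measured high-frequency data. It is discretized with a backward-Euler step of τ·dI/dt = (R·P + I_dark) − I, with τ = 1/(2π·f₋₃dB). Responsivity and dark current would be looked up at the operating bias and temperature; the values used are hypothetical.

```python
import numpy as np


def photodetector(power_w, dt, responsivity, dark_current, bandwidth_hz):
    """Photocurrent I = R * P + I_dark, band-limited by a first-order low-pass filter."""
    target = responsivity * np.asarray(power_w, dtype=float) + dark_current
    tau = 1.0 / (2.0 * np.pi * bandwidth_hz)   # time constant of the -3 dB corner
    alpha = dt / (tau + dt)                    # backward-Euler step of tau*dI/dt = target - I
    current = np.empty_like(target)
    state = target[0]                          # start in steady state
    for n, x in enumerate(target):
        state += alpha * (x - state)
        current[n] = state
    return current


# 1 mW step after 100 ps, sampled every 1 ps, 20 GHz detector (hypothetical values).
power = np.where(np.arange(1000) < 100, 0.0, 1e-3)
i_out = photodetector(power, 1e-12, 1.0, 1e-8, 20e9)
```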

4.2.6.4 Photonic Circuit Modeling Examples

A typical circuit analyzed in the frequency domain is an optical switch [18, 19]. These circuits can include hundreds of components due to all the waveguide crossings that are required. An example circuit diagram is shown in Fig. 4.7, which also makes it clear that the larger the number of elements, the more necessary the circuit hierarchy becomes.

The entire circuit is displayed together with a zoomed view of a portion of the circuit, including the optical network analyzer. Also shown is the inside of a subcircuit element that includes a large number of waveguide crossings. The entire circuit contains hundreds of elements. Nevertheless, the results of the optical network analyzer can be calculated in less than a minute over a typical range of frequencies.

In the time domain, a typical circuit to simulate is a transceiver [20]. In Fig. 4.8, a 16-QAM transmitter is shown along with the resulting eye and constellation diagrams.

4.2.7 Schematic-Driven Layout

Historically, most emphasis in photonics has been on the full-custom layout of individual components and on combining these into (simple) circuits. Today, with the increasing complexity of circuits, the mask layout should ideally be generated from the schematic created with a circuit design tool.

Schematic-driven layout (SDL) is a methodology and design flow in which the layout tool continually checks the connectivity of the layout against the connectivity of the elaborated netlist. If the designer tries to make a connection in the layout that is not in the elaborated netlist, the CAD tool flags the issue. Some companies put policies in place whereby the CAD tool is set up to disallow connectivity changes within the layout tool. Any connectivity change must then be made in the schematic, which forces the designer to remember to rerun simulation verification on the new netlist. Other companies find this too restrictive and allow connectivity changes to be made directly in the layout. This practice, however, should be discouraged, as it is very difficult to back-annotate design changes from the layout to the schematic. It should also be noted that, since CAD tools can be set up to allow for different change scenarios, SDL should not be relied upon as the last check before manufacturing to ensure that the layout is correctly connected. It is the responsibility of the designer both to resimulate the design and to run physical verification tools that perform a more exhaustive check of layout versus schematic connectivity.

In addition to checking connectivity, the SDL flow is also responsible for setting the parameters of any programmable layout cells (pcells) that are forward-annotated from the schematics. It should be noted, at this point, that the hierarchy of the layout does not need to match the hierarchy of the schematic. When they differ, the CAD tool is responsible for all name and parameter mappings between the two hierarchies. CAD tools that handle this methodology typically have a hierarchy browser that lets the designer cross-probe between the schematic and layout views even when their hierarchies are different.

The benefit of an SDL-based design methodology becomes clear as design sizes increase, especially when a team of designers, rather than a single designer, is working on a project. Circuit design and layout design are usually done by different people, and SDL ensures that design connectivity is communicated accurately between the schematic and layout stages. A second benefit of an SDL-based flow is that the CAD system can track last-minute design changes to ensure that the changes made in the layout are, in fact, the same changes that are in the schematic. The CAD tool flags the differences it sees in the layout versus the newly updated schematic.

By ensuring that connectivity is correctly preserved in the layout tool, the SDL-based flow enables the use of more advanced constraint-driven layout engines such as pcells, auto-placement, and autorouting.

The SDL strategy is well developed in electronics, using semiautomated algorithms for placing “functional pieces” and routing the connecting “wires.” Electronic design is very suitable for this since, in most designs, the wires can be considered “just a connection” that does not influence the overall design, for example, through increased delay times. For many low(er)-speed applications, the electric wires on the chip are just a low-loss way to transmit signals. Therefore, placing the functional parts with nonoverlapping wires between them is a purely geometrical problem. This problem is frequently solved by autorouting the wires, typically along vertical or horizontal (Manhattan) routing patterns. Nowadays, routing at angles other than 90° is sometimes also supported, but only at a few fixed angles, such as 30°, 45°, and 60°.

For high-speed (RF) tracks, analog design, and high-speed (>10 GHz) digital designs, these assumptions are no longer valid: transmission losses can become considerable, and impedance mismatches, voltage drops along the wire, and timing delays become crucial, as they are in photonic designs. In photonics, the “wires” are in most cases not just simple connections; their physical properties play a role in, or even determine, the functionality of a component or the whole photonic circuit. Therefore, the connecting “wires” between building blocks or components are called “waveguides,” because the purpose of the connection is to guide an electromagnetic wave from one place to another. Remember that the telecom C band comprises infrared wavelengths around 1550 nm, corresponding to a frequency of about 193 THz. Quite often, the functional pieces themselves consist mainly of waveguides and/or waveguiding structures with very specific requirements for individual waveguides or combinations of waveguides. These detailed requirements range from very precise control over lengths and widths (or length and width differences) to mathematically defined widths that vary along the length of a waveguide (so-called tapering). This is also why a proper translation of the actual design intent for the waveguide structures into the final discretized mask file (GDSII) is paramount, avoiding gridding and rounding errors.
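The scale argument behind this can be checked with f = c/λ. A wire behaves as “just a connection” when it is much shorter than the signal wavelength. At 10 GHz, the free-space wavelength is about 30 mm (shorter on chip, but still far larger than most components), whereas at 1550 nm the carrier oscillates at about 193 THz and even small waveguide length differences change the phase. The helper names are illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def frequency_hz(wavelength_m):
    """Optical carrier frequency f = c / lambda."""
    return C / wavelength_m


def free_space_wavelength_m(frequency):
    """Free-space wavelength lambda = c / f."""
    return C / frequency


print(frequency_hz(1550e-9) / 1e12)          # ~193.4 THz (telecom C band)
print(free_space_wavelength_m(10e9) * 1e3)   # ~30 mm for a 10 GHz electrical signal
```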

Based on these boundary conditions, it is easily understood that a mask layout tool for photonics has some unique requirements that are not necessarily available in electronic mask layout tools. All-angle capabilities, the ability to produce very smooth curves, and connectivity are the most important ones. Since the actual shape of the waveguides plays a dominant role, designers want full control over these shapes and how they connect. In 1992, the concept of parametric design was introduced for this purpose. Instead of drawing the shape, a designer sets some parameters, and software then translates this design intent into a set of geometric shapes such as polygons.
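As an illustration of parametric design, the hypothetical function below turns the design intent for a circular bend (radius, width, sweep angle) into polygon vertices. The number of vertices is derived from a maximum allowed chord error (sagitta r·(1 − cos(θ/2)) ≤ max_error) rather than drawn by hand. It is not the API of any tool named in this chapter, and the resulting floating-point vertices would still need to be snapped to the mask grid on export.

```python
import math


def bend_polygon(radius, width, angle_deg, max_error=0.001, center=(0.0, 0.0)):
    """Polygon (list of (x, y), in um) for a circular waveguide bend.

    The number of vertices follows from max_error, the largest allowed gap
    between the outer arc and its chords: sagitta = r * (1 - cos(seg / 2)).
    Assumes width < 2 * radius.
    """
    r_out, r_in = radius + width / 2, radius - width / 2
    seg = 2 * math.acos(1 - max_error / r_out)           # largest allowed segment angle
    sweep = math.radians(angle_deg)
    n = max(1, math.ceil(sweep / seg))                   # segments per edge
    cx, cy = center

    def arc(r, indices):
        return [(cx + r * math.cos(sweep * i / n), cy + r * math.sin(sweep * i / n)) for i in indices]

    # Outer edge counter-clockwise, then inner edge back: one closed outline.
    return arc(r_out, range(n + 1)) + arc(r_in, range(n, -1, -1))


poly = bend_polygon(radius=5.0, width=0.45, angle_deg=90)
```

Changing a single parameter, such as the radius, regenerates a consistent outline, which is the practical advantage over drawing the polygon directly.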

Based on a library of predefined geometrical primitives dedicated to integrated photonics, all required waveguide structures can be designed and used in larger structures or composite building blocks, such as a Mach–Zehnder interferometer, an arrayed waveguide grating, and even full circuits. The crucial step of translating the “design intent” into the final “geometry” can be covered by manual coding in scripting languages, such as Python, as used in Luceda’s IPKISS [21], or Mentor Graphics’ AMPLE [22], as applied in the Mentor Graphics® Pyxis™ layout framework, for the formulation of parametric cells. PhoeniX Software’s OptoDesigner [23] provides domain-specific scripting capabilities in addition to built-in photonics-aware synthesizers and specific layout preparation functionality, thus removing this translation burden from the designer.

4.2.7.1 Floorplanning

Floorplanning is a stage of layout in which the layout designer partitions the layout space of the overall photonic die to accomplish several objectives. One of these goals is allocating space for die interfaces that match the packaging methodology of choice. As an example, a SiGe die with VCSEL lasers is flip-chipped onto a silicon photonic substrate. The substrate floorplan must account for the location of the VCSELs to make sure the laser-to-grating interfaces work properly. Another objective is ensuring that adequate space exists for photonic components and their associated waveguides.

A challenge unique to photonics is that the waveguides that connect components are typically created using only one physical layer, as opposed to electrical connections, which may use many layers of metal interconnect. This makes component placement more challenging, as the designer must ensure that there is adequate room to place the waveguides in a planar fashion. Care must also be taken in the placement of components so that they do not interfere with each other’s function.

Connectivity of the individual parts of the waveguide structures, and of the connections between the building blocks or components, is required to make designs that contain multiple parts without having to manually adjust positions whenever parts of the design change. A good example of this is a Mach–Zehnder interferometer composite building block constructed from several photonic primitives such as junctions, bends, and straights. Each of these waveguide parts has its own parameters, depending on the actual waveguide shape or cross section, the wavelength of interest, and the required phase difference. These individual waveguide parameters are strongly related to the composite building block parameters, often through fairly simple equations: for example, the path length of one branch of a Mach–Zehnder interferometer should be a precise amount longer than the other branch. The waveguide materials, dimensions, and required filtering characteristics determine this length difference. When designing such a composite building block, it is very beneficial that all the individual smaller pieces stay connected when changes are made to the design based on simulation results or measurement data. The need for connectivity, and for the automatic translation of the design intent into the required layout instead of drawing or programming these complex polygons by hand, is now well understood.
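One such simple relation is the standard free spectral range of an unbalanced Mach–Zehnder interferometer, FSR = λ²/(n_g·ΔL), so ΔL = λ²/(n_g·FSR). The hypothetical helpers below compute ΔL and then nudge it so that n_eff·ΔL is an integer number of wavelengths, which places a transmission extremum at the design wavelength at the cost of a slightly shifted FSR. The index values are illustrative silicon-wire assumptions. In a connected parametric layout, changing ΔL would re-route the arms automatically.

```python
def mzi_delta_length(wavelength, n_g, fsr):
    """Arm-length difference for a target free spectral range: dL = lambda^2 / (n_g * FSR)."""
    return wavelength ** 2 / (n_g * fsr)


def align_to_order(delta_length, wavelength, n_eff):
    """Nudge dL so that n_eff * dL is an integer number m of wavelengths,
    i.e. the arm phase difference is a multiple of 2*pi at `wavelength`."""
    m = round(n_eff * delta_length / wavelength)
    return m * wavelength / n_eff, m


# Hypothetical silicon-wire values: n_g = 4.2, n_eff = 2.4, target FSR = 10 nm at 1550 nm.
dl = mzi_delta_length(1.55e-6, 4.2, 10e-9)      # ~57.2 um
dl_aligned, m = align_to_order(dl, 1.55e-6, 2.4)
print(dl * 1e6, dl_aligned * 1e6, m)
```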

4.2.7.2 Routing

Waveguide routing is unique to photonics. As mentioned previously, waveguides typically run on only one physical layer. Unlike in electronic integrated circuits, however, it is possible to run many different wavelengths down the same waveguide. The concept of a bus becomes more like a highway on which multiple different types of cars can travel, as opposed to electronics, where a bus has dedicated lanes and can only be shared by multiplexing and demultiplexing different signals. With photonics, multiple wavelengths and light modes can all share the same waveguide at the same time.

Waveguides are also unlike metal traces in electronic ICs because they allow intersections between waveguides on the same layer, which is analogous to an intersection on a highway. Care must be taken, however, as some light from one direction will leak into the other waveguides of the intersection, and the circuit must be able to handle this functionally.

Waveguides also play a very active role in the function of the photonic circuit. As mentioned previously, turns in waveguides are typically made with curvilinear shapes, not the orthogonal shapes used in electronic design. The number of turns, the radius of those turns, and the width of the waveguide all affect the performance of the waveguide and the overall circuit. Once the waveguides have been routed, all of these parameters need to be back-annotated to the schematic for post-layout circuit verification using the photonic circuit simulator.

4.2.7.3 Specialty Design

Although integrated photonics design is very similar to analog IC design, no library of photonic components will meet the requirements of every individual designer’s application. Today, a typical PIC design contains more than 70% custom design. Except for the provided IO modules (fiber-chip couplers) and a photodiode or modulator, most of the design is entirely or partly customized. Moreover, even these library components are tweaked or changed to meet the wavelength requirements of a particular application. As a result, designers need flexible tools that can cope with the special requirements of photonic design. Especially at the layout level, a large variety of designs can be observed that create functions that are very similar at the circuit level. This variety results from technology constraints (material properties, waveguide types, process variations, etc.) and the need to use the chip area as efficiently as possible. Many photonic functions are created by phase relations, and therefore folding, bending, and/or rotating are widely used during layout implementation (Figs. 4.9 and 4.10).

Figure 4.11 shows how photonics designers can automatically generate layout implementations after being given the required technology information and optically or geometrically defined photonics building blocks (PhoeniX Software’s OptoDesigner).

4.2.8 Overview of Physical Verification for Silicon Photonics

As silicon photonics design migrates from research into commercial production, photonics designers must borrow some techniques from complementary metal–oxide–semiconductor (CMOS) design to fully realize the benefits of this transition. One of the key challenges is to adapt existing IC design tools into an EDA design flow that is compatible with the characteristics of silicon photonics design [24]. Physical verification (PV) is one of the key components of the EDA design flow. The role of the PV flow is to ensure that

- the design layout is appropriate for manufacturing at the target foundry or fab, and
- the design layout meets the original design intent.

A number of components are borrowed from traditional CMOS IC physical verification. All of them, however, require some modification. By leveraging the advanced capabilities of today’s leading physical verification products, existing tools can likely meet all of these requirements. However, the tools need dedicated rule files for photonic purposes, separate from the nonphotonic rule files associated with the same process.

The main tasks associated with PV and design for manufacturability (DFM) can vary slightly from process to process, but typically consist of the following: design rule checking (DRC), fill insertion, layout versus source (LVS), parasitic extraction (PEX), lithography process verification or checking (LPC or LFD), and chemical–mechanical polish analysis (CMPA). Enabling this level of verification requires both process-specific information and details of the expected behavior of the components implemented in the design layout. This information is typically provided by the foundry or fab that targets the manufacture of the design, in the form of rule files. These are typically ASCII files written in tool-proprietary syntaxes that may be left readable to the user or may be encrypted.

PV for photonics differs from that of the IC world. Rather than pushing electrons through metal wires and vias, photonic designs pass photons through waveguides. This affects the LVS and PEX aspects of the design flow, as the device and interconnect physics are now different.

4.2.8.1 Design Rule Checking

DRC ensures that the geometric layout of a design, as represented in GDSII or OASIS data formats, adheres to the foundry’s prescribed rules in order to achieve acceptable yields. An IC design must pass DRC compliance, or “sign-off,” which is the fundamental procedure for a foundry to accept a design for fabrication. DRC results obtained from an automated DRC tool from a trusted EDA provider validate that a particular design adheres to the physical constraints imposed by the technology.

Traditional integrated circuit DRC uses one-dimensional measurements of geometries and spacing to determine rule compliance. However, photonic layout designs include nonrectilinear shapes, such as curves, spikes, and tapers, which require an extended DRC methodology to ensure reliability and scalability for fabrication (Fig. 4.12). These shapes expand the complexity of the DRC task; in some cases, it may not be possible to completely describe the physical constraints with traditional one-dimensional DRC rules.

One technique is to scale up the design by a factor of 10,000× upfront so that snapping and rounding issues are alleviated. However, some conventional EDA tools snap curvilinear shapes to grid lines during layout. Such snapping renders this technique useless for conjoint photonic structures, which are formed by abutting primitive shapes, since the intersections of these shapes may not lie on a grid point.

Another approach relies on a DRC capability called equation-based DRC (eqDRC), which works well with photonic designs [25]. This facility extends traditional DRC technology with a programmable modeling engine that allows users to define multidimensional feature measurements with flexible mathematical expressions. EqDRC can be used to develop, calibrate, and optimize models for design analysis and verification.

4.2.8.1.1 False Errors Induced by Curvilinear Structures

Current EDA DRC tools support layout formats such as GDSII, in which polygons represent all geometric shapes. The vertices of these polygons snap to a grid whose size is specified by the technology or process node. Traditional DRC tools are optimized to operate on rectilinear shapes. However, photonic designs use curvilinear shapes both to create various device structures and in waveguide routing to minimize internal losses. To handle curved shapes, the design is fragmented into sets of polygons that approximate the curvilinear shape for geometrical manipulation in DRC and other processes. This results in discrepancies between the intended design and what the DRC tool measures.

While this discrepancy of a few nanometers (depending on the grid size) is negligible compared to a typical waveguide width of 450 nm, its impact on DRC is significant. The tiniest geometrical discrepancies reported by DRC can add up to an enormous number of DRC violations (hundreds or thousands of errors on a single device), which makes the design nearly impossible to debug. Figure 4.13 shows a curved waveguide design layer, with the inset showing a minimum-width DRC violation. Although the waveguide is correctly designed, there is a discrepancy in width between the design layer (off-grid) and the fragmented polygon layer (on-grid), creating a false width error.

Fig. 4.13 The green waveguide is an example of an off-grid, curved waveguide design layer, while the red polygon is an example of the on-grid, fragmented polygon layer. The inset shows an enlarged view, including the polygon layer that flags the width error of the waveguide. The polygon vertices are on-grid, which results in the discrepancy in the width measurement.
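How fragmentation and snapping produce a false width error can be reproduced with a small geometric sketch. It assumes a hypothetical quarter-circle bend (5 µm inner radius, 450 nm drawn width), a minimum-width rule equal to the drawn 450 nm, 16 straight segments per edge, and a 1 nm grid; all helper names are illustrative. The width is measured the way an edge-based check would see it: the minimum distance from each vertex of one edge to the opposite polyline.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def snap(p: Point, grid_nm: Optional[float]) -> Point:
    """Snap a vertex to the grid; grid_nm=None keeps the exact (off-grid) value."""
    if grid_nm is None:
        return p
    return (round(p[0] / grid_nm) * grid_nm, round(p[1] / grid_nm) * grid_nm)


def fragment_arc(radius: float, n_segments: int, grid_nm: Optional[float]) -> List[Point]:
    """Approximate a quarter-circle edge with n_segments straight edges."""
    points = []
    for k in range(n_segments + 1):
        t = 0.5 * math.pi * k / n_segments
        points.append(snap((radius * math.cos(t), radius * math.sin(t)), grid_nm))
    return points


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    t = 0.0 if length_sq == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def measured_widths(inner: List[Point], outer: List[Point]) -> List[float]:
    """Vertex-to-opposite-edge distances; their minimum is the polygon width
    that an edge-based width check would see."""
    widths = []
    for src, dst in ((inner, outer), (outer, inner)):
        for p in src:
            widths.append(min(point_to_segment(p, dst[i], dst[i + 1])
                              for i in range(len(dst) - 1)))
    return widths


R_INNER_NM, DRAWN_WIDTH_NM, N_SEGMENTS = 5000.0, 450.0, 16

for grid in (None, 1.0):
    inner = fragment_arc(R_INNER_NM, N_SEGMENTS, grid)
    outer = fragment_arc(R_INNER_NM + DRAWN_WIDTH_NM, N_SEGMENTS, grid)
    widths = measured_widths(inner, outer)
    flagged = sum(1 for w in widths if w < DRAWN_WIDTH_NM)  # naive rule: width >= 450 nm
    print(f"grid={grid}: min width {min(widths):.3f} nm, {flagged} width flags")
```

Even without snapping, the chords cut the corner and the measured width drops to 450·cos(π/64) ≈ 449.46 nm; snapping then perturbs every vertex by up to about 0.7 nm. A conventional width check flags this correctly drawn bend.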

Even when designers carefully follow the design rules, a significant number of false DRC errors are reported. Because curvilinear shapes are so pervasive in photonic designs, debugging or manually waiving these errors is both time-consuming and prone to human error. This is a typical scenario in which designers can take advantage of eqDRC capabilities. They can use the DRC tool to query various geometrical properties (including the properties of error layers) and manipulate them further with user-defined mathematical expressions to filter out the false DRC errors. Beyond knowing whether a shape passes or fails a DRC rule, users can also determine the magnitude of the error, apply tolerances to compensate for grid-snapping effects, perform checks on property values, and process the data with mathematical expressions.

To illustrate this approach, compare a traditional check with an eqDRC implementation. First, consider the result given by a traditional DRC format. A conventional width check can be written as

$$\mathrm{width}(wg) < w \qquad (4.1)$$

where width denotes the DRC operation (or operations) that generates the error layer under a specified width constraint (smaller than w), and wg is the name of the waveguide layer examined by the width operation.

Using eqDRC, the width check can be extended as follows:

where the conditional statement evaluates whether the waveguide polygon is non-Manhattan-like (i.e., curvilinear, according to the user’s definition), and tol is a tolerance value set to absorb any error induced by grid snapping. Combined with the Boolean expression, the rule functions as a traditional check while also incorporating the user-specified conditional and mathematical expressions needed to minimize false errors. Debugging also becomes much easier, because property values (e.g., the error width, the adjusted width, and the amount of adjustment needed) are displayed visually on the layout.
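The filtering logic described above can be paraphrased in ordinary code. This is a minimal Python sketch of the idea, not eqDRC rule syntax; `WidthResult`, `eq_width_check`, the 1.5 nm tolerance, and the "adjusted width" definition (measured width plus the tolerance allowance for curvilinear shapes) are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class WidthResult:
    """One raw result of a conventional width check (width(wg) < w)."""
    polygon_id: str
    measured_nm: float
    is_manhattan: bool  # user-defined classification; False means curvilinear


def eq_width_check(results: List[WidthResult], w_nm: float, tol_nm: float) -> List[Dict]:
    """Keep only real width errors, attaching debug properties to each."""
    errors = []
    for r in results:
        shortfall = w_nm - r.measured_nm  # amount of adjustment needed to pass
        if shortfall <= 0:
            continue  # passes the plain width rule
        if not r.is_manhattan and shortfall <= tol_nm:
            continue  # curvilinear shape within the grid-snapping tolerance: false error
        allowance = 0.0 if r.is_manhattan else tol_nm
        errors.append({
            "id": r.polygon_id,
            "error_width_nm": r.measured_nm,
            "adjusted_width_nm": r.measured_nm + allowance,
            "adjustment_needed_nm": shortfall,
        })
    return errors


raw = [
    WidthResult("bend_1", 449.2, is_manhattan=False),     # snapping artifact
    WidthResult("bend_2", 430.0, is_manhattan=False),     # genuinely too narrow
    WidthResult("straight_1", 449.5, is_manhattan=True),  # rectilinear: no tolerance
]
for e in eq_width_check(raw, w_nm=450.0, tol_nm=1.5):
    print(e)
```

Here `bend_1` is waived as a snapping artifact, while `bend_2` and `straight_1` remain as real errors, each carrying the properties a designer would inspect on the layout.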

4.2.8.1.2 Multidimensional Rule Check on Tapered Structures

Another important photonic design feature that does not exist in IC design is the taper, or spike, in which two adjacent edges of a geometrical facet are not parallel to each other (Fig. 4.14). This geometry is intentional in waveguide structures, where the optical mode profile is modified according to the cross-sectional variation: the width seen in the layout view and the depth determined by the technology. To ensure that these structures can be fabricated, DRC width checks must flag taper ends that are thinned down too far, because such ends can break off and possibly be carried to other locations on the chip, creating physical defects. The same holds for DRC spacing checks where the spacing between shapes is taper-like.

A primitive rule to describe this constraint could be stated as:

This primitive rule is a simple way of describing the width constraint for a tapered design. It differs from the conventional IC width rule in that it involves an angle parameter in addition to the width, which allows more flexibility for this kind of feature, typical of photonic designs. However, the rule cannot be implemented with traditional one-dimensional DRC, because more than one parameter must be evaluated at the same time.

In contrast, with eqDRC users can code a multidimensional rule:

where angle denotes the DRC operation that evaluates the angle of the tapered end under a width condition (smaller than w). Users can thus perform checks that traditional DRC does not allow.
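A two-parameter taper check can be sketched outside any DRC language. The rule below is a hypothetical example chosen for illustration, not the rule from the text: a tip narrower than `w_min_nm` is flagged only when its side edges converge at less than `angle_min_deg`, which is the long, thin sliver most likely to break. The coordinates (in nm), thresholds, and helper names are all assumptions.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def taper_angle_deg(base_l: Point, tip_l: Point, base_r: Point, tip_r: Point) -> float:
    """Angle between the two converging side edges (0 deg = parallel edges)."""
    u = (tip_l[0] - base_l[0], tip_l[1] - base_l[1])
    v = (tip_r[0] - base_r[0], tip_r[1] - base_r[1])
    cos_a = (u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def check_taper(base_l: Point, tip_l: Point, base_r: Point, tip_r: Point,
                w_min_nm: float, angle_min_deg: float):
    """Evaluate end width and edge angle together (a two-dimensional rule)."""
    end_width = math.dist(tip_l, tip_r)
    angle = taper_angle_deg(base_l, tip_l, base_r, tip_r)
    violation = end_width < w_min_nm and angle < angle_min_deg
    return violation, end_width, angle


# 450 nm -> 150 nm over 10 um (shallow) versus over 0.5 um (steep)
long_taper = check_taper((0, 225), (10000, 75), (0, -225), (10000, -75), 200, 5)
short_taper = check_taper((0, 225), (500, 75), (0, -225), (500, -75), 200, 5)
print("long taper: ", long_taper)
print("short taper:", short_taper)
```

Both tapers end at the same 150 nm width, so a one-dimensional width rule would treat them identically; only the joint width-and-angle test separates the shallow taper (flagged) from the steep one (accepted).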

These are just two examples of the issues involved in DRC for photonic circuits. Because photonic circuit design requires a wide variety of geometrical shapes that do not exist in CMOS IC designs, traditional DRC methods cannot provide reliable and consistent geometrical verification of such layouts. However, photonics property libraries, combined with a programmable engine that performs mathematical calculations on those properties, give PIC designers an accurate, efficient, and easy-to-debug DRC approach. Such an approach effectively filters false errors, enables multidimensional rule checks that were previously impossible, and implements user-defined models for more accurate and efficient geometrical verification that finds errors that would otherwise be missed.

In addition to the traditional EDA DRC solutions, there are tools that natively handle all-angle designs. These tools provide a relevant set of DRC capabilities specifically targeting the curvilinear structures that are so common in PIC design.

4.2.8.1.3 Density and Fill Insertion

Another part of DRC is checking adherence to density rules. Density rules check the ratio of given layers within regions across the chip to ensure that they meet the manufacturing requirements dictated by chemical–mechanical planarization (CMP), etching, and other parts of the manufacturing process. They ensure that no portion of a design has so much or so little of a given layer that it causes a problem.

In the case where a region is too dense, the only recourse is to modify the design to spread the structures out. In the case where a region is insufficiently dense, however, fill techniques can be used to help correct the problem. Fill shapes are geometric structures with one or more layers inserted into the layout, but not connected to any of the circuit components. Because these serve no function in the circuit itself, they are often referred to as “dummy” objects.

In electronics, DRC tools are used to insert these dummy fill objects into the layout. The simplest approach is to identify low-density areas and fill them as much as possible with rectangular dummies, ensuring that these structures do not interact with or come too close to existing circuit geometries. This approach, however, can overcorrect. Adding too many dummies may leave two neighboring regions with vastly different densities, causing new manufacturing problems. These dummy structures may also still affect the behavior of neighboring circuit structures.

For these reasons, new fill techniques have been introduced. Referred to as “smart fill,” these techniques are aware of circuit density at every scope, from the neighborhood of each local region up to the entire circuit. With this knowledge, the tools can automatically insert the fewest fill geometries required to meet all density requirements, without overfilling. These approaches also enable the creation and placement of more complex fill structures, including multiple layers and hierarchical cell structures.
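A windowed density check with minimal fill can be sketched as follows. This is a simplified illustration, not a production smart-fill algorithm: it assumes non-overlapping rectangular shapes, square analysis windows, hypothetical density limits, and 2 × 2 µm dummies, and it ignores placement, spacing to circuit shapes, and gradients between neighboring windows. It computes each window's layer density and the fewest dummies needed to reach the minimum.

```python
import math
from typing import Dict, List, Optional, Tuple

Rect = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in um


def overlap_area(r: Rect, win: Rect) -> float:
    """Area of the part of rectangle r that lies inside window win."""
    w = min(r[2], win[2]) - max(r[0], win[0])
    h = min(r[3], win[3]) - max(r[1], win[1])
    return w * h if w > 0 and h > 0 else 0.0


def plan_fill(shapes: List[Rect], chip_um: float, win_um: float, d_min: float,
              d_max: float, dummy_area_um2: float) -> Dict[Tuple[int, int], Optional[int]]:
    """Per window (i = x index, j = y index): dummies needed to reach d_min,
    0 if already compliant, or None if the window exceeds d_max."""
    plan: Dict[Tuple[int, int], Optional[int]] = {}
    n = int(chip_um // win_um)
    win_area = win_um * win_um
    for i in range(n):
        for j in range(n):
            win = (i * win_um, j * win_um, (i + 1) * win_um, (j + 1) * win_um)
            density = sum(overlap_area(s, win) for s in shapes) / win_area
            if density > d_max:
                plan[(i, j)] = None  # too dense: only a design change helps
            elif density >= d_min:
                plan[(i, j)] = 0  # compliant: insert nothing (avoids overfilling)
            else:
                # fewest dummies that lift the window to d_min; epsilon absorbs float noise
                plan[(i, j)] = math.ceil((d_min - density) * win_area / dummy_area_um2 - 1e-9)
    return plan


shapes = [(0.0, 10.0, 100.0, 10.5),   # a long 0.5 um wide waveguide
          (60.0, 60.0, 90.0, 90.0)]   # a large pad
print(plan_fill(shapes, chip_um=100.0, win_um=50.0, d_min=0.2, d_max=0.8, dummy_area_um2=4.0))
```

Filling each window only up to the minimum density keeps the number of added shapes, and therefore their potential influence on neighboring devices, as small as the rules allow.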

These smart fill techniques are also used in photonics layout, either directly by the layout tool or in a post-processing step with DRC tools. Separate fill rules can set the spacing required between fill shapes and circuit shapes according to device type. Reducing the number of added fill shapes significantly reduces their impact on the optical behavior of the circuit.

4.2.8.2 Layout Versus Schematic

In a traditional IC process, designers create a design based on the desired electrical behavior, typically using a schematic capture tool. Next, they simulate the circuit’s performance using foundry device models, usually in SPICE format, to confirm that it achieves the intended behavior. Finally, they build a layout that implements the schematic design. As noted, this layout must comply with the foundry’s process design rules, which is confirmed by passing the layout, typically in a format such as GDSII, to a DRC tool to ensure that the drawn layout can be manufactured. Passing DRC, however, does not guarantee that the silicon represented by the layout will behave as designed and simulated. To verify that behavior, the physical circuit design is validated using an LVS comparison flow. The LVS flow reads the physical layout and extracts a SPICE netlist that represents its electrical structure. This extracted netlist is then compared with the original netlist used in simulation. If they match, the designer has confidence that the layout is both manufacturable and correctly implements the intended circuit. When they do not match, error details help the designer debug and fix common errors such as short circuits, open circuits, or incorrect devices.
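The comparison step of LVS can be illustrated with a toy example. Real LVS tools match netlists by graph isomorphism, because layout net names generally differ from schematic names; this sketch assumes the nets are already correlated by name, and the two-transistor inverter and the `compare_netlists` helper are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Device = Tuple[str, Dict[str, str]]  # (device type, {pin: net})


def canonical(device: Device) -> Tuple[str, Tuple[Tuple[str, str], ...]]:
    """Hashable form of a device: its type plus sorted pin-to-net pairs."""
    dtype, pins = device
    return dtype, tuple(sorted(pins.items()))


def compare_netlists(schematic: List[Device], extracted: List[Device]):
    """Return devices found only in the schematic and only in the layout."""
    sch = Counter(canonical(d) for d in schematic)
    lay = Counter(canonical(d) for d in extracted)
    return list((sch - lay).elements()), list((lay - sch).elements())


schematic = [
    ("nmos", {"d": "out", "g": "in", "s": "gnd"}),
    ("pmos", {"d": "out", "g": "in", "s": "vdd"}),
]
extracted = [
    ("nmos", {"d": "out", "g": "in", "s": "gnd"}),
    ("pmos", {"d": "out", "g": "out", "s": "vdd"}),  # gate shorted to output in layout
]
missing, extra = compare_netlists(schematic, extracted)
print("in schematic only:", missing)
print("in layout only:   ", extra)
```

A mismatch reported on both sides for the same device type, as here, points the designer to a connectivity error (a short between the gate and output nets) rather than a missing device.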

4.2.8.2.1 Challenges of Silicon Photonics for LVS

However, this process flow does not work well for silicon photonics. While photonic design shares many similarities with custom analog IC design at a high level, the challenge lies in the details. Although silicon photonics design also relies heavily on early model simulations, SPICE lacks the sophistication required to simulate optical devices, as described earlier. Most notably, a large portion of a PIC design is made of custom cells or parameterized building blocks, and only a fraction is composed of pre-characterized components from the library or PDK.

Another complication in LVS for photonic circuits lies in the unusual nature of the devices. The typical LVS flow goes through three stages: recognition of the devices in the layout, characterization of the devices, and comparison of the device connectivity and parameters with those in the schematics. The first step presents a relatively small challenge because the photonic devices are formed from easily recognizable patterns. However, the complexity and curved nature of these patterns make device characterization very difficult [26]. The performance of the photonic devices depends on many details of the complex shapes that form the devices, as well as adjacent layout features (Fig. 4.15).

Figure 4.16 shows a simple ring resonator device with four pins: In1, In2, Out1, and Out2. Six parameters, all of which can vary independently, determine the behavior of this device: Rin, Rout, Gap_length1, Gap_width1, Gap_length2, and Gap_width2. If any parameter differs from its intended value, the device will not implement the intended behavior. Because Rin and Rout describe curves, it is hard to represent the design accurately in GDSII, a rectilinear format (i.e., straight lines) used to represent the shapes and their locations in the physical design. As a result, inaccuracies in the radii of a curved photonic device can occur, which may be large enough to cause a functional problem.

The traditional approach to device characterization in LVS is to collect all layout objects around the device that could possibly affect its performance and take measurements that describe the interactions between these features and the device itself, such as distances and projection lengths. These measurements are substituted into closed-form expressions based either on first-principles theory (i.e., physical equations) or on empirical curve fitting.

However, this approach fails when many features can affect the device, or when the interaction between layout objects cannot be captured with sufficient accuracy by a few simple measurements, as is the case for photonic devices. This situation closely resembles the problem faced by analog circuit designers, whose device performance sometimes depends on the mutual capacitances and inductances of thousands of layout objects in addition to the few objects that make up the device itself.

4.2.8.2.2 Adjustments to the LVS Flow

One possible solution is to forgo characterization based on precise measurements and instead recognize devices from a set of known patterns, including both the primary device features and the surrounding “halo” of layout shapes. The devices can be pre-characterized using existing silicon photonics simulators. If necessary, a few degrees of variability can be introduced into a pattern but, for the most part, the device in the layout must match one of the pre-characterized patterns exactly. When the designer places these pre-characterized devices in the layout, the LVS tool can extract each device, measure its relevant parameters, and compare them with the pre-characterized pattern. Any device not found in the pattern library is flagged as an unknown device and treated as a layout error.
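Pattern-based recognition can be sketched as a lookup against a library of pre-characterized devices. This minimal Python example reuses the ring-resonator parameter names from Fig. 4.16, but the `Pattern` class, the values (in µm), and the choice of the two gap widths as the only variable parameters are hypothetical. Every other parameter must match exactly, and anything unmatched is reported as an unknown device.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Pattern:
    """A pre-characterized device: exact parameters plus a few allowed ranges."""
    name: str
    fixed: Dict[str, float]
    variable: Dict[str, Tuple[float, float]] = field(default_factory=dict)


def recognize(params: Dict[str, float], library: List[Pattern],
              eps: float = 1e-6) -> Optional[str]:
    """Return the first matching pattern name, or None for an unknown device."""
    for pat in library:
        if set(params) != set(pat.fixed) | set(pat.variable):
            continue  # different parameter set: cannot be this pattern
        exact = all(abs(params[k] - v) <= eps for k, v in pat.fixed.items())
        in_range = all(lo <= params[k] <= hi for k, (lo, hi) in pat.variable.items())
        if exact and in_range:
            return pat.name
    return None


ring_10um = Pattern(
    name="ring_r10",
    fixed={"Rin": 9.55, "Rout": 10.0, "Gap_length1": 2.0, "Gap_length2": 2.0},
    variable={"Gap_width1": (0.18, 0.22), "Gap_width2": (0.18, 0.22)},
)
library = [ring_10um]

layout_devices = {
    "X1": {"Rin": 9.55, "Rout": 10.0, "Gap_length1": 2.0, "Gap_length2": 2.0,
           "Gap_width1": 0.20, "Gap_width2": 0.19},
    "X2": {"Rin": 9.55, "Rout": 10.05, "Gap_length1": 2.0, "Gap_length2": 2.0,
           "Gap_width1": 0.20, "Gap_width2": 0.20},
}
for inst, params in layout_devices.items():
    match = recognize(params, library)
    print(inst, match if match else "UNKNOWN DEVICE (layout error)")
```

Restricting variability to a few named parameters keeps the pre-characterized simulation results valid for every device that matches, at the cost of treating any other deviation, such as the 50 nm change in `Rout` on X2, as a layout error.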