Abstract

Introduced in this paper is a family of statistics, G, that can be used as a measure of spatial association in a number of circumstances. The basic statistic is derived, its properties are identified, and its advantages explained. Several of the G statistics make it possible to evaluate the spatial association of a variable within a specified distance of a single point. A comparison is made between a general G statistic and Moran’s I for similar hypothetical and empirical conditions. The empirical work includes studies of sudden infant death syndrome by county in North Carolina and dwelling unit prices in metropolitan San Diego by zip-code districts. Results indicate that G statistics should be used in conjunction with I in order to identify characteristics of patterns not revealed by the I statistic alone and, specifically, the G i and G i ∗ statistics enable us to detect local “pockets” of dependence that may not show up when using global statistics.



Keywords

- Spatial Autocorrelation

- Sudden Infant Death Syndrome

- Spatial Association

- Common Neighbor

- Standard Normal Variate


1 Introduction

The importance of examining spatial series for spatial correlation and autocorrelation is undeniable. Both Anselin and Griffith (1988) and Arbia (1989) have shown that failure to take necessary steps to account for or avoid spatial autocorrelation can lead to serious errors in model interpretation. In spatial modeling, researchers must not only account for dependence structure and spatial heteroskedasticity but must also assess the effects of spatial scale. In the last twenty years a number of instruments for testing for and measuring spatial autocorrelation have appeared. To geographers, the best-known statistics are Moran’s I and, to a lesser extent, Geary’s c (Cliff and Ord, 1973). To geologists and remote sensing analysts, the semi-variance is most popular (Davis, 1986). To spatial econometricians, estimating spatial autocorrelation coefficients of regression equations is the usual approach (Anselin, 1988).

A common feature of these procedures is that they are applied globally, that is, to the complete region under study. However, it is often desirable to examine pattern at a more local scale, particularly if the process is spatially nonstationary. Foster and Gorr (1986) provide an adaptive filtering method for smoothing parameter estimates, and Cressie and Head (1989) present a modeling procedure. The ideas presented in this paper are complementary to these approaches in that we also focus upon local effects, but from the viewpoint of testing rather than smoothing.

This paper introduces a family of measures of spatial association called G statistics. These statistics have a number of attributes that make them attractive for measuring association in a spatially distributed variable. When used in conjunction with a statistic such as Moran’s I, they deepen the knowledge of the processes that give rise to spatial association, in that they enable us to detect local “pockets” of dependence that may not show up when using global statistics. In this paper, we first derive the statistics G i (d) and G(d), then outline their attributes. Next, the G(d) statistic is compared with Moran’s I. Finally, there is a discussion of empirical examples. The examples are taken from two different geographic scales of analysis and two different sets of data. They include sudden infant death syndrome (SIDS) by county in North Carolina, and house prices by zip-code district in the San Diego metropolitan area.

2 The \(G_i(d)\) Statistic

This statistic measures the degree of association that results from the concentration of weighted points (or area represented by a weighted point) and all other weighted points included within a radius of distance d from the original weighted point. We are given an area subdivided into n regions, i = 1, 2, …, n, where each region is identified with a point whose Cartesian coordinates are known. Each i has associated with it a value x (a weight) taken from a variable X. The variable has a natural origin and is positive. The G i (d) statistic developed below allows for tests of hypotheses about the spatial concentration of the sum of x values associated with the j points within d of the ith point.

The statistic is

\[{G}_{i}(d) = \frac{\sum_{j=1}^{n} w_{ij}(d)\,x_{j}}{\sum_{j=1}^{n} x_{j}},\quad j \neq i, \tag{10.1}\]

where \(\{w_{ij}\}\) is a symmetric one/zero spatial weight matrix with ones for all links defined as being within distance d of a given i; all other links are zero, including the link of point i to itself. The numerator is the sum of all \(x_j\) within d of i but not including \(x_i\). The denominator is the sum of all \(x_j\) not including \(x_i\).
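As a minimal sketch of the definition just given, the following Python function computes the statistic for one point from coordinates and values. The function name, the sample data, and the choice to treat "within distance d" as "less than or equal to d" are assumptions made for illustration.

```python
import math

def g_i(coords, x, i, d):
    """G_i(d): share of the sum of x_j (j != i) that lies within distance d of point i."""
    num = 0.0
    den = 0.0
    for j, (cj, xj) in enumerate(zip(coords, x)):
        if j == i:
            continue  # the link of point i to itself is zero
        den += xj
        if math.dist(coords[i], cj) <= d:  # assumed: w_ij(d) = 1 when distance <= d
            num += xj
    return num / den

# Hypothetical data: four points on a line with positive values.
coords = [(0, 0), (1, 0), (2, 0), (5, 0)]
x = [10.0, 20.0, 30.0, 40.0]
print(g_i(coords, x, i=0, d=2.0))  # (20 + 30) / (20 + 30 + 40)
```

Because the value at i is excluded from both sums, the result depends only on how the remaining values are arranged around i.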

Adopting standard arguments (cf. Cliff and Ord, 1973, pp. 32–33), we may fix the value \(x_i\) for the ith point and consider the set of (n − 1)! random permutations of the remaining x values at the j points. Under the null hypothesis of spatial independence, these permutations are equally likely. That is, let \(X_j\) be the random variable describing the value assigned to point j, then

\[P({X}_{j} = {x}_{r}) = \frac{1}{n - 1},\quad r \neq i,\]

and \(E({X}_{j}) ={ \sum }_{r\neq i}^{}{x}_{r}/(n - 1).\) Thus

\[E({G}_{i}) = \frac{\sum_{j \neq i} w_{ij}(d)\,E({X}_{j})}{\sum_{j \neq i} x_{j}} = \frac{{W}_{i}}{n - 1},\]

where \(W_i = \sum_j w_{ij}(d)\).

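Similarly,

\[E({G}_{i}^{2}) = \frac{1}{{\left(\sum_{j} x_{j}\right)}^{2}}\left[\sum_{j} w_{ij}^{2}(d)\,E({X}_{j}^{2}) + \sum_{j}\sum_{k \neq j} w_{ij}(d)\,w_{ik}(d)\,E({X}_{j}{X}_{k})\right].\]

Since

\[E({X}_{j}^{2}) = \sum_{r \neq i} x_{r}^{2}/(n - 1)\]

and

\[E({X}_{j}{X}_{k}) = \frac{\sum_{r \neq i}\sum_{s \neq i,\,s \neq r} x_{r}x_{s}}{(n - 1)(n - 2)} = \frac{{\left(\sum_{j} x_{j}\right)}^{2} - \sum_{j} x_{j}^{2}}{(n - 1)(n - 2)},\quad j \neq k.\]

Recalling that the weights are binary,

\[\sum_{j}\sum_{k \neq j} w_{ij}w_{ik} = {W}_{i}^{2} - {W}_{i},\]

and so

\[E({G}_{i}^{2}) = \frac{1}{{\left(\sum_{j} x_{j}\right)}^{2}}\left[\frac{{W}_{i}\sum_{j} x_{j}^{2}}{n - 1} + \frac{{W}_{i}({W}_{i} - 1)}{(n - 1)(n - 2)}\left\{{\left(\sum_{j} x_{j}\right)}^{2} - \sum_{j} x_{j}^{2}\right\}\right].\]

Thus

\[\mathrm{Var}({G}_{i}) = E({G}_{i}^{2}) - {E}^{2}({G}_{i}) = \frac{1}{{\left(\sum_{j} x_{j}\right)}^{2}}\left[\frac{{W}_{i}(n - 1 - {W}_{i})\sum_{j} x_{j}^{2}}{(n - 1)(n - 2)}\right] + \frac{{W}_{i}({W}_{i} - 1)}{(n - 1)(n - 2)} - \frac{{W}_{i}^{2}}{{(n - 1)}^{2}}.\]

If we set \(\frac{{\sum }_{j}{x}_{j}} {(n-1)} = {Y }_{i1}\) and \(\frac{{\sum }_{j}{x}_{j}^{2}} {(n-1)} - {Y }_{i1}^{2} = {Y }_{ i2},\) then

\[\mathrm{Var}({G}_{i}) = \frac{{W}_{i}(n - 1 - {W}_{i})}{{(n - 1)}^{2}(n - 2)}\,\frac{{Y}_{i2}}{{Y}_{i1}^{2}}.\]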

As expected, Var(G i ) = 0 when W i = 0 (no neighbors within d), or when \({W}_{i} = n - 1\) (all n − 1 observations are within d), or when Y i2 = 0 (all n − 1 observations are equal).
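The permutation argument can be checked numerically on a small case. The sketch below assumes the closed forms \(E(G_i) = W_i/(n-1)\) and \(\mathrm{Var}(G_i) = W_i(n-1-W_i)Y_{i2}/[(n-1)^2(n-2)Y_{i1}^2]\), written in the \(W_i\), \(Y_{i1}\), \(Y_{i2}\) notation of this section, and compares them with an exhaustive enumeration of the (n − 1)! permutations. The function names and sample values are hypothetical; exact rational arithmetic is used so the comparison is an equality rather than a tolerance check.

```python
from fractions import Fraction
from itertools import permutations

def gi_moments(x_others, w_i):
    """Closed-form (E[G_i], Var[G_i]); x_others holds the n - 1 values x_j, j != i."""
    m = len(x_others)                                   # m = n - 1
    y1 = Fraction(sum(x_others), m)                     # Y_i1
    y2 = Fraction(sum(v * v for v in x_others), m) - y1 ** 2   # Y_i2
    mean = Fraction(w_i, m)
    var = Fraction(w_i * (m - w_i), m * m * (m - 1)) * y2 / y1 ** 2
    return mean, var

def gi_moments_bruteforce(x_others, w_i):
    """Enumerate all (n - 1)! permutations; the first w_i slots act as the neighbors of i."""
    total = sum(x_others)
    vals = [Fraction(sum(p[:w_i]), total) for p in permutations(x_others)]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return mean, var

x_others = [3, 1, 4, 1, 5, 9]   # hypothetical positive values, n - 1 = 6
print(gi_moments(x_others, 2) == gi_moments_bruteforce(x_others, 2))
```

Setting `w_i` to 0 or to `len(x_others)` reproduces the zero-variance cases noted above.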

Note that \(W_i\), \(Y_{i1}\), and \(Y_{i2}\) depend on i. Since \(G_i\) is a weighted sum of the variable \(X_j\), and the denominator of \(G_i\) is invariant under random permutations of \(\{x_j, j \neq i\}\), it follows, provided \({W}_{i}/(n - 1)\) is bounded away from 0 and from 1, that the permutation distribution of \(G_i\) under \(H_0\) approaches normality as n → ∞; cf. Hoeffding (1951) and Cliff and Ord (1973, p. 36). When d, and thus \(W_i\), is small, normality is lost, and when d is large enough to encompass the whole study area, and thus \((n - 1 - {W}_{i})\) is small, normality is also lost. It is important to note that the conditions must be satisfied separately for each point if its \(G_i\) is to be assessed via the normal approximation.

Table 10.1 shows the characteristic equations for G i (d) and the related statistic, G i ∗(d), which measures association in cases where the j equal to i term is included in the statistic. This implies that any concentration of the x values includes the x at i. Note that the distribution of G i ∗(d) is evaluated under the null hypothesis that all n! random permutations are equally likely.
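The moments of \(G_i^*(d)\) can be checked in the same way as those of \(G_i(d)\). The sketch below assumes that they are obtained by replacing n − 1 with n, counting point i among its own neighbors, and computing the mean and variance terms over all n values. This is an inference from the n! permutation argument rather than a transcription of Table 10.1, and all names and values are hypothetical.

```python
from fractions import Fraction
from itertools import permutations

def gi_star_moments(x, w_star):
    """Assumed closed forms for G_i*: the G_i formulas with n - 1 replaced by n."""
    n = len(x)
    y1 = Fraction(sum(x), n)
    y2 = Fraction(sum(v * v for v in x), n) - y1 ** 2
    mean = Fraction(w_star, n)
    var = Fraction(w_star * (n - w_star), n * n * (n - 1)) * y2 / y1 ** 2
    return mean, var

x = [2, 7, 1, 8, 2]      # hypothetical values, n = 5
w_star = 3               # point i itself plus two neighbors within d
total = sum(x)

# All n! permutations are equally likely; the first w_star slots are "within d of i".
draws = [Fraction(sum(p[:w_star]), total) for p in permutations(x)]
mean = sum(draws) / len(draws)
var = sum((g - mean) ** 2 for g in draws) / len(draws)
print((mean, var) == gi_star_moments(x, w_star))
```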

3 Attributes of G i Statistics

It is important to note that \(G_i\) is scale-invariant (\(Y_i = bX_i\) yields the same scores as \(X_i\)) but not location-invariant (\({Y }_{i} = a + {X}_{i}\) gives different results than \(X_i\)). The statistic is intended for use only with variables that possess a natural origin. As with all other such statistics, transformations such as \(Y_i = \log X_i\) will change the results.
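A small numerical illustration of the two invariance properties follows. The weights row, the values, and the constants 3 and 10 are hypothetical.

```python
def g_i_from_weights(x, w_row, i):
    """G_i given one row of binary weights; the value at i is excluded from both sums."""
    num = sum(w * v for j, (w, v) in enumerate(zip(w_row, x)) if j != i)
    den = sum(v for j, v in enumerate(x) if j != i)
    return num / den

x = [4.0, 8.0, 2.0, 6.0]
w_row = [0, 1, 1, 0]                       # hypothetical: points 1 and 2 are within d of point 0

base = g_i_from_weights(x, w_row, 0)                           # (8 + 2) / (8 + 2 + 6)
scaled = g_i_from_weights([3.0 * v for v in x], w_row, 0)      # Y = bX with b = 3
shifted = g_i_from_weights([v + 10.0 for v in x], w_row, 0)    # Y = a + X with a = 10

print(base, scaled, shifted)   # the first two agree, the third differs
```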

\(G_i(d)\) measures the concentration or lack of concentration of the sum of values associated with variable X in the region under study. \(G_i(d)\) is the proportion of the sum of all \(x_j\) values that are within d of i. If, for example, high-value \(x_j\)s are within d of point i, then \(G_i(d)\) is high. Whether the \(G_i(d)\) value is statistically significant depends on the statistic’s distribution.

Earlier work on a form of the G i (d) statistic is in Getis (1984), Getis and Franklin (1987), and Getis (1991). Their work is based on the second-order approach to map pattern analysis developed by Ripley (1977).

In typical circumstances, the null hypothesis is that the set of x values within d of location i is a random sample drawn without replacement from the set of all x values. The estimated \(G_i(d)\) is computed from (10.1) using the observed \(x_j\) values. Assuming that \(G_i(d)\) is approximately normally distributed, when

\[{Z}_{i} = \frac{{G}_{i}(d) - E[{G}_{i}(d)]}{\sqrt{\mathrm{Var}[{G}_{i}(d)]}}\]

exceeds, in absolute value, the critical value for some specified level of significance, then we say that positive or negative spatial association obtains, according to the sign of \(Z_i\). A large positive \(Z_i\) implies that large values of \(x_j\) (values above the mean \(x_j\)) are within d of point i. A large negative \(Z_i\) means that small values of \(x_j\) are within d of point i.
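The standardization can be sketched as follows, assuming the mean \(W_i/(n-1)\) and the variance expressed through \(Y_{i1}\) and \(Y_{i2}\) from Section 2. The function name and data are hypothetical, and with only seven points the normal approximation is not trustworthy, so the output shows only the sign and rough size of \(Z_i\).

```python
import math

def z_gi(x, w_row, i):
    """Standard normal variate for G_i(d), given one row of binary weights."""
    n = len(x)
    others = [v for j, v in enumerate(x) if j != i]
    w_i = sum(w for j, w in enumerate(w_row) if j != i)
    g = sum(w * v for j, (w, v) in enumerate(zip(w_row, x)) if j != i) / sum(others)
    y1 = sum(others) / (n - 1)
    y2 = sum(v * v for v in others) / (n - 1) - y1 ** 2
    mean = w_i / (n - 1)
    var = w_i * (n - 1 - w_i) / ((n - 1) ** 2 * (n - 2)) * y2 / y1 ** 2
    return (g - mean) / math.sqrt(var)

x = [5.0, 9.0, 8.0, 1.0, 2.0, 1.0, 2.0]      # hypothetical values
high_neighbors = [0, 1, 1, 0, 0, 0, 0]       # the two largest values lie within d of point 0
low_neighbors = [0, 0, 0, 1, 0, 1, 0]        # two of the smallest values lie within d of point 0

print(z_gi(x, high_neighbors, 0))   # positive: large values near i
print(z_gi(x, low_neighbors, 0))    # negative: small values near i
```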

A special feature of this statistic is that the pattern of data points is neutralized when the expectation is that all x values are the same. This is illustrated for the case when data point densities are high in the vicinity of point i, and d is just large enough to contain the area of the clustered points. Theoretical \(G_i(d)\) values are high because \(W_i\) is high. However, only if the observed \(x_j\) values in the vicinity of point i differ systematically from the mean is there the opportunity to identify significant spatial concentration of the sum of \(x_j\)s. That is, as data points become more clustered in the vicinity of point i, the expectation of \(G_i(d)\) rises, neutralizing the effect of the dense cluster of j values.

In addition to its above meaning, the value of d can be interpreted as a distance that incorporates specified cells in a lattice. It is to be expected that neighboring \(G_i\) will be correlated if d includes neighbors. To examine this issue, consider a regular lattice. When n is large, the denominator of each \(G_i\) is almost constant, so it follows that \(\mathrm{corr}(G_i, G_j)\) is approximately the proportion of neighbors that i and j have in common.

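Example 1. Consider the rook’s case. Cell i has no common neighbors with its four immediate neighbors, but two with its immediate diagonal neighbors. The numbers of common neighbors are as illustrated below:

\[\begin{array}{ccccc} 0 & 0 & 1 & 0 & 0\\ 0 & 2 & 0 & 2 & 0\\ 1 & 0 & i & 0 & 1\\ 0 & 2 & 0 & 2 & 0\\ 0 & 0 & 1 & 0 & 0 \end{array}\]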

All the other cells have no common neighbors with i. Thus, the G-indices for the four diagonal neighbors have correlations of about 0.5 with G i , four others have correlations of about 0.25 and the rest are virtually uncorrelated.

For more highly connected lattices (such as the queen’s case) the array of nonzero correlations stretches further, but the maximum correlation between any pair of G-indices remains about 0.5.
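The counts in Example 1 and the 0.5 ceiling for the queen's case can be reproduced by intersecting neighbor sets. This is a minimal sketch for an interior cell of an unbounded regular lattice; the function and constant names are illustrative.

```python
ROOK = [(1, 0), (-1, 0), (0, 1), (0, -1)]
QUEEN = ROOK + [(1, 1), (1, -1), (-1, 1), (-1, -1)]

def common_neighbors(offset, moves):
    """Number of cells adjacent to both the origin cell i and the cell at `offset`."""
    mine = set(moves)
    theirs = {(offset[0] + dr, offset[1] + dc) for dr, dc in moves}
    return len(mine & theirs)

# Rook's case: print the 5 x 5 block of common-neighbor counts around cell i.
for r in range(-2, 3):
    print(" ".join("i" if (r, c) == (0, 0) else str(common_neighbors((r, c), ROOK))
                   for c in range(-2, 3)))

# Queen's case: largest share of common neighbors over all other cells.
offsets = [(r, c) for r in range(-3, 4) for c in range(-3, 4) if (r, c) != (0, 0)]
print(max(common_neighbors(o, QUEEN) for o in offsets) / len(QUEEN))
```

Dividing each rook's-case count by four gives the approximate correlations of 0.5 and 0.25 quoted in the example.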

Example 2.

Set \(A + B = 2m\), therefore \(\bar{x} = m\); n = 50; A ≥ 0; B ≥ 0; put \(A = m(1 + c)\), \(B = m(1 - c)\), 0 ≤ c ≤ 1.

Using this example, the G i and G i ∗ statistics are compared in the following table.

We note that \(G_i\) and \(G_i^{\ast}\) are similar in this case; if the central A were replaced by a B, \(Z(G_i)\) would be unchanged, whereas \(Z(G_i^{\ast})\) would drop to 4.25. Thus, \(G_i\) and \(G_i^{\ast}\) typically convey much the same information.

Example 3. Consider a large regular lattice for which we seek the distribution under \(H_0\) for \(G_i^{\ast}\) with \(W_i\) neighbors. Let p = proportion of As = proportion of Bs and \(1 - 2p =\) proportion of ms.

Let \((k_1, k_2, k_3)\) denote the number of As, Bs, and ms, respectively, among the \(W_i\) cells, so that \({k}_{1} + {k}_{2} + {k}_{3} = {W}_{i}\). For large lattices, in this case, the joint distribution is approximately tri(multi-)nomial with index \(W_i\) and parameters (p, p, 1 − 2p). Since \({G}_{i}^{{\ast}} = [{W}_{i} + ({k}_{1} - {k}_{2})c]/n\), clearly \(E({G}_{i}^{{\ast}}) = {W}_{i}/n\) as expected and \(V ({G}_{i}^{{\ast}}) = 2p{W}_{i}{c}^{2}/{n}^{2}\), reflecting the large sample approximation. The distribution is symmetric and the standardized fourth moment is

\[3 + \frac{1 - 6p}{2p{W}_{i}}.\]

This is close to 3 provided \(pW_i\) is not too small.
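To see how quickly the standardized fourth moment settles near 3, the following sketch evaluates it exactly for \(k_1 - k_2\) under a trinomial distribution with parameters (p, p, 1 − 2p). Because \(G_i^*\) is a linear function of \(k_1 - k_2\), the standardized moment is the same for both. The function name and the (W, p) pairs are chosen only for illustration.

```python
from math import comb

def kurtosis_k1_minus_k2(w, p):
    """Exact standardized fourth moment of k1 - k2 for a trinomial(w; p, p, 1 - 2p)."""
    m2 = 0.0
    m4 = 0.0
    for k1 in range(w + 1):
        for k2 in range(w - k1 + 1):
            k3 = w - k1 - k2
            prob = comb(w, k1) * comb(w - k1, k2) * p ** (k1 + k2) * (1 - 2 * p) ** k3
            diff = k1 - k2            # the mean of k1 - k2 is zero by symmetry
            m2 += prob * diff ** 2
            m4 += prob * diff ** 4
    return m4 / m2 ** 2

for w, p in [(4, 0.05), (8, 0.25), (24, 0.25)]:
    print(w, p, kurtosis_k1_minus_k2(w, p))
```

With four neighbors and p = 0.05 the value is far from 3, while eight or more neighbors with p = 0.25 bring it close, which matches the suggestion that small \(pW_i\) is the problematic case.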

Since we are using \(G_i\) and \(G_i^{\ast}\) primarily in a diagnostic mode, we suggest that \(W_i \geq 8\) at least (that is, the queen’s case), although further work is clearly necessary to establish cut-off values for the statistics.

4 A General G Statistic

Following from these arguments, a general statistic, G(d), can be developed. The statistic is general in the sense that it is based on all pairs of values \((x_i, x_j)\) such that i and j are within distance d of each other. No particular location i is fixed in this case. The statistic is

\[G(d) = \frac{\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}(d)\,x_{i}x_{j}}{\sum_{i=1}^{n}\sum_{j=1}^{n} x_{i}x_{j}},\quad j \neq i. \tag{10.5}\]

The G-statistic is a member of the class of linear permutation statistics, first introduced by Pitman (1937). Such statistics were first considered in a spatial context by Mantel (1967) and Cliff and Ord (1973), and developed as a general cross-product statistic by Hubert (1977, 1979) and Hubert et al. (1981).
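Since G(d) is a permutation statistic, its reference distribution can also be obtained by brute force when n is small. The sketch below takes G(d) as the weighted sum of products \(x_i x_j\) over pairs within d, divided by the sum over all pairs with j ≠ i. It checks that the permutation mean equals W/[n(n − 1)], where W is the sum of the weights, and standardizes the observed value with the permutation standard deviation. Using enumeration in place of an analytic variance is a substitution made here for illustration, and the data and chain-shaped weights are hypothetical.

```python
from itertools import permutations
from statistics import mean, pstdev

def general_g(x, w):
    """G(d): weighted sum of x_i * x_j over pairs within d, over the sum for all pairs, j != i."""
    n = len(x)
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    num = sum(w[i][j] * x[i] * x[j] for i, j in pairs)
    den = sum(x[i] * x[j] for i, j in pairs)
    return num / den

n = 5
# Hypothetical weights: five regions on a line, adjacent regions are within d.
w = [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]
x = [9.0, 8.0, 1.0, 2.0, 1.0]

total_w = sum(w[i][j] for i in range(n) for j in range(n) if i != j)
expected = total_w / (n * (n - 1))

perm_values = [general_g(p, w) for p in permutations(x)]   # all n! arrangements
observed = general_g(x, w)
z_perm = (observed - mean(perm_values)) / pstdev(perm_values)

print(observed, expected, mean(perm_values), z_perm)
```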

For (10.5),

\[W = \sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}(d),\quad j \neq i,\]

so that

\[E[G(d)] = \frac{W}{n(n - 1)}.\]

The variance of G follows from Cliff and Ord (1973, pp. 70–71):

where \({m}_{j} ={ \sum }_{i=1}^{n}{x}_{i}^{j},\ j = 1,2,3,4\) and \({n}^{(r)} = n(n - 1)(n - 2)\cdots (n - r + 1).\) The coefficients, B, are

where \({S}_{1} = 1/2{\sum }_{i}{ \sum }_{j}{({w}_{ij} + {w}_{ji})}^{2}\), j not equal to i, and \({S}_{2} = 1/2{\sum }_{i}{({w}_{i.} + {w}_{.i})}^{2}\); \(w_{i.} = \sum_j w_{ij}\), j not equal to i; thus

\[\mathrm{Var}(G) = E({G}^{2}) - {[E(G)]}^{2}.\]

5 The G(d) Statistic and Moran’s I Compared

The G(d) statistic measures overall concentration or lack of concentration of all pairs of \((x_i, x_j)\) such that i and j are within d of each other. Following (10.5), one finds G(d) by taking the sum of the products of each \(x_i\) with all \(x_j\)s within d of all i as a proportion of the sum of all \(x_i x_j\). Moran’s I, on the other hand, is often used to measure the correlation of each \(x_i\) with all \(x_j\)s within d of i and, therefore, is based on the degree of covariance within d of all \(x_i\). Consider \(K_1\), \(K_2\) as constants invariant under random permutations. Then using summation shorthand we have

\[G(d) = {K}_{1}\sum \sum {w}_{ij}{x}_{i}{x}_{j}\]

and

\[I(d) = {K}_{2}\sum \sum {w}_{ij}({x}_{i} - \bar{x})({x}_{j} - \bar{x}) = ({K}_{2}/{K}_{1})\,G(d) - {K}_{2}\bar{x}\sum ({w}_{i.} + {w}_{.i}){x}_{i} + {K}_{2}{\bar{x}}^{2}W,\]

where \(w_{i.} = \sum_j w_{ij}\) and \(w_{.i} = \sum_j w_{ji}\).

Since both G(d) and I(d) can measure the association among the same set of weighted points or areas represented by points, they may be compared. They will differ when the weighted sums ∑w i. x i and ∑w . i x i differ from \(W\bar{x}\), that is, when the patterns of weights are unequal. The basic hypothesis is of a random pattern in each case. We may compare the performance of the two measures by using their equivalent Z values of the approximate normal distribution.
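The link between the two cross-product sums can be verified numerically. The sketch below expands the covariance-type sum \(\sum\sum w_{ij}(x_i - \bar{x})(x_j - \bar{x})\) into the product-type sum \(\sum\sum w_{ij}x_i x_j\) minus \(\bar{x}\sum(w_{i.} + w_{.i})x_i\) plus \(\bar{x}^2 W\), omitting the constants \(K_1\) and \(K_2\). The function name, the data, and the deliberately asymmetric weights are hypothetical.

```python
def cross_products(x, w):
    """Return the product sum, the covariance sum, and the covariance sum rebuilt from the product sum."""
    n = len(x)
    xbar = sum(x) / n
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    raw = sum(w[i][j] * x[i] * x[j] for i, j in pairs)                     # numerator of G(d)
    cov = sum(w[i][j] * (x[i] - xbar) * (x[j] - xbar) for i, j in pairs)   # numerator of I(d)
    row = [sum(w[i][j] for j in range(n) if j != i) for i in range(n)]     # w_i.
    col = [sum(w[j][i] for j in range(n) if j != i) for i in range(n)]     # w_.i
    total_w = sum(row)
    rebuilt = raw - xbar * sum((row[i] + col[i]) * x[i] for i in range(n)) + xbar ** 2 * total_w
    return raw, cov, rebuilt

x = [9.0, 8.0, 1.0, 2.0, 1.0]
w = [[0, 1, 0, 0, 0],
     [1, 0, 1, 0, 0],
     [0, 0, 0, 1, 1],
     [0, 0, 1, 0, 0],
     [1, 0, 0, 1, 0]]

raw, cov, rebuilt = cross_products(x, w)
print(raw, cov, rebuilt)   # cov and rebuilt agree up to rounding
```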

Example 4.

Let us use the lattice of Example 2. As before,

Set \(A + B = 2m\), therefore \(\bar{x} = m\); n = 50; A ≥ 0; B ≥ 0; put \(A = m(1 + c),B = m(1 - c),0 \leq c \leq 1\).

In addition, put

For the rook’s case, \(W = \sum \sum {w}_{ij} = 170\).

for all choices of a, m.

When \(c = 0,A = B = m\), and G is a minimum.

When \(c = 1,A = 2m,B = 0\), and G is a maximum.

G depends on the relative absolute magnitudes of the sample values. Note that I is positive for any A and B, while G values approach a maximum when the ratio of A to B or B to A becomes large.
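The total W = 170 quoted for the rook's case in Example 4 is what a 5 × 10 lattice of n = 50 cells produces. The lattice dimensions are an inference from n and W, since the lattice itself is not reproduced here. A short sketch with a hypothetical function name confirms the count:

```python
def rook_weight_total(rows, cols):
    """Sum of binary rook's-case weights w_ij over all ordered pairs of cells in a grid."""
    total = 0
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                if 0 <= r + dr < rows and 0 <= c + dc < cols:
                    total += 1   # cell (r, c) has a rook neighbor at (r + dr, c + dc)
    return total

print(rook_weight_total(5, 10))   # 170
```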

Example 5.

\(A,B,\bar{x},n,W\) as in Examples 2 and 4.

Neither statistic can differentiate between a random pattern and one with little spatial variation. Contributions to G(d) are large only when the product x i x j is large, whereas contributions to I(d) are large when \(({x}_{i} - m)({x}_{j} - m)\) is large. It should be noted that the distribution is nowhere near normal in this case.

Example 6.

\(A,B,\bar{x},n,W\) as in the above examples.

The juxtaposition of high values next to lows provides the high negative covariance needed for the strong negative spatial autocorrelation Z(I), but it is the multiplicative effect of high values near lows that has the negative effect on Z(G).

Table 10.2 gives some idea of the values of Z(G) and Z(I) under various circumstances. The differences result from each statistic’s structure. As shown in the examples above, if high values within d of other high values dominate the pattern, then the summation of the products of neighboring values is high, with resulting high positive Z(G) values. If low values within d of low values dominate, then the sum of the products of the xs is low, resulting in strong negative Z(G) values. In the Moran’s case, both when high values are within d of other high values and when low values are within d of other low values, positive covariance is high, with resulting high Z(I) values.

6 General Discussion

Any test for spatial association should use both types of statistics. Sums of products and covariances are two different aspects of pattern. Both reflect the dependence structure in spatial patterns. The I(d) statistic has its peculiar weakness in not being able to discriminate between patterns that have high values dominant within d or low values dominant. Both statistics have difficulty discerning a random pattern from one in which there is little deviation from the mean. If a study requires that I(d) or G(d) values be traced over time, there are advantages to using both statistics to explore the processes thought to be responsible for changes in association among regions. If data values increase or decrease at the same rate, that is, if they increase or decrease in proportion to their already existing size, Moran’s I changes while G(d) remains the same. On the other hand, if all x values increase or decrease by the same amount, G(d) changes but I(d) remains the same. It must be remembered that G(d) is based on a variable that is positive and has a natural origin. Thus, for example, it is inappropriate to use G(d) to study residuals from regression. Also, for both I(d) and G(d) one must recognize that transformations of the variable X result in different values for the test statistic. As has been mentioned above, conditions may arise when d is so small or large that tests based on the normal approximation are inappropriate.
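The statement that adding the same amount to every x changes G(d) but leaves I(d) unchanged can be illustrated directly, together with the scale invariance of G(d). The sketch uses the standard form of Moran's I, \((n/W)\sum\sum w_{ij}z_i z_j/\sum z_i^2\) with \(z_i = x_i - \bar{x}\). The data, the weights, and the constants 10 and 3 are hypothetical.

```python
def pair_sum(w, a, b):
    """Sum of w[i][j] * a[i] * b[j] over all ordered pairs with i != j."""
    n = len(a)
    return sum(w[i][j] * a[i] * b[j] for i in range(n) for j in range(n) if i != j)

def general_g(x, w):
    ones = [[1] * len(x) for _ in x]
    return pair_sum(w, x, x) / pair_sum(ones, x, x)

def morans_i(x, w):
    n = len(x)
    xbar = sum(x) / n
    z = [v - xbar for v in x]
    total_w = pair_sum(w, [1] * n, [1] * n)
    return (n / total_w) * pair_sum(w, z, z) / sum(v * v for v in z)

# Hypothetical example: five regions on a line, adjacent regions are within d.
w = [[1 if abs(i - j) == 1 else 0 for j in range(5)] for i in range(5)]
x = [9.0, 8.0, 1.0, 2.0, 1.0]
shifted = [v + 10.0 for v in x]     # every value increases by the same amount
scaled = [3.0 * v for v in x]       # every value increases in proportion to its size

print(general_g(x, w), general_g(shifted, w), general_g(scaled, w))
print(morans_i(x, w), morans_i(shifted, w))
```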

7 Empirical Examples

The following examples of the use of G statistics were selected based on size and type of spatial units, size of the x values, and subject matter. The first is a problem concerning the rate of SIDS by county in North Carolina, and the second is a study of the mean price of housing units sold by zip-code district in the San Diego metropolitan region. In both cases the data are explained, hypotheses made clear, and G(d) and I(d) values calculated for comparable circumstances.

7.1 Sudden Infant Death Syndrome by County in North Carolina

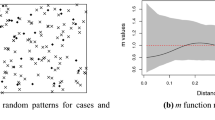

SIDS is the sudden death of an infant 1 year old or younger that is unexpected and inexplicable after a postmortem examination (Cressie and Chan, 1989). The data presented by Cressie and Chan were collected from a variety of sources cited in the article. Among other data, the authors give the number of SIDS cases by county for the period 1979–1984, the number of births for the same period, and the coordinates of the counties. We use as our data the number of SIDS cases as a proportion of births multiplied by 1,000 (see Fig. 10.1). Since no viral or other causes have been given for SIDS, one should not expect any spatial association in the data. To some extent, high or low rates may be dependent on the health care infants receive. The rates may correlate with variables such as income or the availability of physicians’ services. In this study we shall not expect any spatial association.

Table 10.3 gives the values for the standard normal variate of I and G for various distances.

Results using the G statistic verify the hypothesis that there is no discernible association among counties with regard to SIDS rates. The values of Z(G) are less than one. In addition, there seems to be no smooth pattern of Z values as d increases. The Z(I) results are somewhat contradictory, however. Although none are statistically significant at the 0.05 level, Z(I) values from 30–50 miles, about the distance from the center of each county to the center of its contiguous neighboring counties, are well over one. This represents a tendency toward positive spatial autocorrelation at those distances. Taking the two results together, one should be cautious before concluding that a spatial association exists for SIDS among counties in North Carolina. Perhaps more light can be shed on the issue by using the G i (d) and G i ∗(d) statistics.

Table 10.4 and Fig. 10.2 give the results of an analysis based on the G i (d) and G i ∗(d) statistics for a d of 33 miles. This represents the distance to the furthest first-nearest neighbor county of any county.

The \(G_i^{\ast}(d)\) statistic identifies five of the one hundred counties of North Carolina as significantly positively or negatively associated with their neighboring counties (at the 0.05 level). Four of these, clustered in the central south portion of the state, display values greater than +1.96, while one county, Washington near Albemarle Sound, has a Z value of less than −1.96 (see Fig. 10.2). Taking into account values greater than +1.15 (the 87.5th percentile), it is clear that several small clusters in addition to the main cluster are widely dispersed in the southern part of the state. The main cluster of values less than −1.15 (the 12.5th percentile) is in the eastern part of the state. It is interesting to note that many of the counties in this cluster are in the sparsely populated swamp lands surrounding the Albemarle and Pamlico Sounds. If overall error is fixed at 0.05 and a Bonferroni correction is applied, the cutoff value for each county is raised to about 3.50. However, such a figure is unduly conservative given the small numbers of neighbors.
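The cutoffs mentioned above can be recovered from the standard normal distribution. This sketch assumes a two-sided test with the overall error of 0.05 split evenly across the one hundred counties. The two-sided reading is an assumption, chosen because it gives a value close to the "about 3.50" quoted.

```python
from statistics import NormalDist

def bonferroni_cutoff(n_tests, alpha=0.05):
    """Two-sided critical |Z| when an overall error alpha is shared by n_tests tests."""
    return NormalDist().inv_cdf(1 - alpha / (2 * n_tests))

print(round(bonferroni_cutoff(1), 2))      # the usual single-test cutoff
print(round(bonferroni_cutoff(100), 2))    # one test per North Carolina county
print(round(NormalDist().cdf(1.15), 3))    # share of a standard normal below +1.15
```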

In this case it becomes clear that an overall measure of association such as G(d) or I(d) can be misleading because it prompts one to dismiss the possibility of significant spatial clustering. The G i (d) statistics, however, are able to identify the tendency for positive spatial clustering and the location of pockets of high and low spatial association. It remains for the social scientist or epidemiologist to explain the subtle patterns shown in Fig. 10.2.

7.2 Dwelling Unit Prices in San Diego County by Zip-Code Area, September 1989

Data published in the Los Angeles Times on October 29, 1989, give the adjusted average price by zip code for all new and old dwelling units sold by builders, real estate agents, and homeowners during the month of September 1989 in San Diego County (see appendix). The data are supplied by TRW Real Estate Information Services. One outlier was identified: Rancho Santa Fe, a wealthy suburb of the city of San Diego, had prices of sold dwelling units that were nearly three times higher than the next highest district (La Jolla). Since neither statistic is robust enough to be only marginally affected by such an observation, Rancho Santa Fe was not considered in the analysis.

Although the city of San Diego has a large and active downtown, San Diego County is not a monocentric region. One would not expect housing prices to trend upward from the city center to the suburbs in a uniform way. One would expect, however, that since the data are for reasonably small sections of the metropolitan area, there would be distinct spatial autocorrelation tendencies (see Fig. 10.3). High positive I values are expected. G(d) values depend on the tendency for high values or low values to group; if the low cost areas dominate, the Z(G) value is negative. In this case, G(d) is a refinement of the knowledge gained from I.

Table 10.5 shows that there are strong positive values for Z(I) for distances of 4 miles and greater. Z(G) also shows highly significant values at 4 miles and beyond, but here the association is negative, that is, low values near low values are much more influential than are the high values near high values. Moran’s I clearly indicates that there is significant spatial autocorrelation, but, without knowledge of G(d), one might conclude that at this scale of analysis, in general, high income districts are significantly associated with one another.

By looking at the results of the \(G_i(d)\) statistics analysis for d equal to 5 miles, the individual district pattern is unmistakable. The \(Z(G_i^{\ast}(5))\) values shown in Table 10.6 and Fig. 10.4 provide evidence that two coastal districts are positively associated at the 0.05 level of significance while eight central and south central districts are negatively associated at the 0.05 level. There is a strong tendency for the negative values to be higher in magnitude. It is for this reason that the Z(G) values given above are so decidedly negative. The districts with high values along the coast have fewer near neighbors with similar values than do the central city lower value districts. The cluster of districts with negative \(Z(G_i^{\ast})\) values dominates the pattern. The adjusted Bonferroni cutoff is about 3.27, but again is overly conservative.

8 Conclusions

The G statistics provide researchers with a straightforward way to assess the degree of spatial association at various levels of spatial refinement in an entire sample or in relation to a single observation. When used in conjunction with Moran’s I or some other measure of spatial autocorrelation, they enable us to deepen our understanding of spatial series. One of the G statistics’ useful features, that of neutralizing the spatial distribution of the data points, allows for the development of hypotheses where the pattern of data points will not bias results.

When G statistics are contrasted with Moran’s I, it becomes clear that the two statistics measure different things. Fortunately, both statistics are evaluated using normal theory so that a set of standard normal variates taken from tests using each type of statistic are easily compared and evaluated.

9 Appendix

References

Anselin L (1988) Spatial econometrics: methods and models. Kluwer, Dordrecht

Anselin L, Griffith DA (1988) Do spatial effects really matter in regression analysis? Pap Reg Sci 65:11–34

Arbia G (1989) Spatial data configuration in statistical analysis of regional economic and related problems. Kluwer, Dordrecht

Cliff AD, Ord JK (1973) Spatial autocorrelation. Pion, London

Cressie N, Chan N (1989) Spatial modeling of regional variables. J Am Stat Assoc 84:393–401

Cressie N, Head THC (1989) Spatial data analysis of regional counts. Biom J 31:699–719

Davis JC (1986) Statistics and data analysis in geology. Wiley, New York

Foster SA, Gorr WL (1986) An adaptive filter for estimating spatially-varying parameters: application to modeling police hours spent in response to calls for service. Manage Sci 32:878–889

Getis A (1984) Interaction modeling using second-order analysis. Environ Plan A 16:173–183

Getis A (1991) Spatial interaction and spatial autocorrelation: a cross-product approach. Environ Plan A 23:1269–1277

Getis A, Franklin J (1987) Second-order neighborhood analysis of mapped point patterns. Ecology 68:473–477

Hoeffding W (1951) A combinatorial central limit theorem. Ann Math Stat 22:558–566

Hubert L (1977) Generalized proximity function comparisons. Br J Math Stat Psychol 31:179–182

Hubert L, Golledge RG, Costanzo C (1981) Generalized procedures for evaluating spatial autocorrelation. Geogr Anal 13:224–233

Mantel N (1967) The detection of disease clustering and a generalized regression approach. Cancer Res 27:209–220

Pitman EJG (1937) The ‘closest’ estimates of statistical parameters. Biometrika 58:299–312

Ripley BD (1977) Modelling spatial patterns (with discussion). J R Stat Soc B 39:172–212


© 2010 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Getis, A., Ord, J.K. (2010). The Analysis of Spatial Association by Use of Distance Statistics. In: Anselin, L., Rey, S. (eds) Perspectives on Spatial Data Analysis. Advances in Spatial Science. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-01976-0_10


DOI: https://doi.org/10.1007/978-3-642-01976-0_10


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-01975-3

Online ISBN: 978-3-642-01976-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)