Abstract

Today, human-computer interfaces are increasingly used and have become necessary in everyday human activities. Notable applications include wireless computer control through hand movements, wheelchair guidance with finger motions, and rehabilitation. Such applications rely on the analysis of electromyographic (EMG) signals. Although several works have addressed this issue, movement classification from EMG signals remains an open, challenging problem for the scientific community, especially because controller performance depends not only on the classifier but also on other aspects, namely the features used, the movements to be classified, the feature-selection methods considered, and the collected data. In this work, we present an exploratory study of characterization and classification techniques for identifying movements from EMG signals. We compare the performance of three classifiers (KNN, Parzen-density-based classifier and ANN) using spectral (wavelet) and time-domain (statistical and morphological descriptors) features. We also propose a methodology for movement selection. The results are comparable with those reported in the literature, reaching classification errors of 5.18% (KNN), 14.74% (ANN) and 5.17% (Parzen-density-based classifier).


1 Introduction

Electromyographic (EMG) signals are recordings of the electrical activity produced by skeletal muscles during movement. EMG analysis has traditionally been used in medical diagnostic procedures; more recently, its use in the design of human-machine control interfaces (HCIs) has grown, and such interfaces have become, to some extent, indispensable in people's daily lives. Notable applications of EMG-based HCIs include wireless computer control through hand movements, wheelchair guidance with finger motions, and rehabilitation [1, 2].

Muscle-signal-based control has been made possible by advances in microprocessors, amplifiers, signal analysis, filtering and pattern recognition techniques. One of the main research lines in EMG signal recognition aims to identify features that better describe a specific movement. This identification is often difficult because EMG signals are sensitive to several artifacts and sources of variability, such as noise from electronic components, the action potentials that activate the muscles, and the patient's health, physical condition and hydration level, among others [3]. It is therefore essential to perform a careful preprocessing stage so that such artifacts are corrected or mitigated, yielding a cleaner EMG signal that is better suited to applications such as prosthesis control.

Besides adequate acquisition and preprocessing, EMG signals require a characterization procedure that extracts the most representative, informative and separable features from the original signal so that the subsequent classification performs well. Every EMG processing stage therefore plays a crucial role in automatic movement identification [4,5,6]. Although this problem can be approached in many ways, it still lacks a definitive solution and remains open and challenging.

Consequently, in this work we present an exploratory study of characterization and classification techniques to identify movements from EMG signals. In particular, we use spectral features (wavelet coefficients) together with temporal and statistical features (area under the curve, absolute mean value, effective value, standard deviation, variance, median, entropy) [1, 4, 7, 8]. Characterization yields a large feature matrix, so dimensionality reduction is necessary. It is carried out in two processes. The first is movement selection, for which we propose a comparison methodology that seeks the movements with the greatest separability and the lowest classification error. The second is feature selection, which computes the contribution of each feature to the classification using the RELIEF algorithm in the WEKA software [9, 10]. Finally, we compare the performance of three machine learning techniques: K-Nearest Neighbors (KNN), an Artificial Neural Network (ANN) and a Parzen-density-based classifier. Each stage is explained in detail in the following sections.

The rest of this paper is structured as follows: Sect. 2 describes the stages of the EMG signal classification procedure for movement identification purposes as well as the database used for experiments. Section 3 presents the proposed experimental setup. Results, Discussion and Future work are gathered in Sect. 4.

2 Materials and Methods

This section describes the proposed scheme for exploring the effectiveness of different machine learning techniques in identifying upper-limb movements. Broadly, the scheme involves preprocessing, segmentation, characterization, movement selection, feature selection and classification stages, as depicted in the block diagram of Fig. 1.

2.1 Database

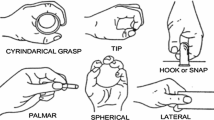

The database considered in this study is available from the Ninaweb repository of the Ninapro project [7]. It contains the upper-limb electromyographic activity of 27 healthy subjects performing 52 movements: 12 finger movements, 8 isometric and isotonic hand configurations, 9 wrist movements and 23 functional grasping movements. These movements were selected from the relevant literature and from rehabilitation guides. The Ninapro database also includes the acquisition protocol and brief descriptions of the subjects involved in the data collection. Muscle activity is recorded by 10 double-differential electrodes at a sampling rate of 100 Hz, and the hand position is registered through a Dataglove and an inclinometer. The electrodes are equipped with an amplifier, a band-pass filter and an RMS rectifier; the amplification factor is 14000. Eight electrodes are placed uniformly around the forearm with an elastic band, at a constant distance just below the elbow, and two additional electrodes are placed on the long flexor and extensor of the forearm [4].

Following the experimental protocol, each subject sits on a chair in front of a table with a large monitor. While the electrodes, Dataglove and inclinometer are recording, the subject repeats each of the 52 movements shown on the screen 10 times, as can be seen in Fig. 2. Each repetition lasts 5 s and is followed by a 3 s rest period.

Fig. 2. EMG signal acquisition protocol of the Ninapro database for movement identification [4].

2.2 System Stages

Pre-processing. The amplitude and frequency characteristics of the raw EMG signal have been shown to be highly variable and sensitive to many factors, both extrinsic (electrode position, skin preparation, among others) and intrinsic (physiological, anatomical and biochemical characteristics of the muscles, among others) [3]. A normalization procedure is therefore necessary to convert the signal to a scale relative to a known and repeatable value. Given the structure of the database, normalization is applied per electrode: the maximum value of each electrode's signal is found, and the whole signal of that electrode is divided by it.
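A minimal NumPy sketch of the per-electrode normalization described above follows; the paper's processing was done in MATLAB, so this is an illustration rather than the authors' code. It assumes each recording is stored as a samples × electrodes array (10 columns for this database) with non-negative, RMS-rectified amplitudes, so the plain maximum is the peak value. The function name `normalize_per_electrode` is hypothetical.

```python
import numpy as np

def normalize_per_electrode(emg: np.ndarray) -> np.ndarray:
    """Divide each electrode (column) by its own maximum value.

    emg: array of shape (n_samples, n_electrodes), e.g. (T, 10) for Ninapro.
    Assumes non-negative, RMS-rectified amplitudes as in the database.
    """
    emg = np.asarray(emg, dtype=float)
    peak = emg.max(axis=0)        # one maximum per electrode
    peak[peak == 0] = 1.0         # leave a flat (all-zero) channel unchanged
    return emg / peak             # broadcasting divides column by column

# Usage: two electrodes, two samples.
x = np.array([[1.0, 4.0], [2.0, 8.0]])
print(normalize_per_electrode(x))  # [[0.5 0.5] [1. 1.]]
```

After normalization every electrode peaks at 1, so amplitude differences between electrodes caused by placement or skin impedance no longer dominate the features.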

Segmentation. The database contains a label vector that facilitates trimming the signals. This vector indicates the action the subject was performing at each instant of the data collection: 0 means that the subject is at rest, and every other value is the identification number of the movement being performed. Segmentation therefore consists in taking each signal together with its label vector and removing all rest periods, keeping only the movement segments. The trimmed and normalized EMG signals are stored in a data structure.
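The segmentation step can be sketched as follows, assuming the EMG array and the label vector are aligned sample by sample. Because consecutive repetitions are separated by rest periods (label 0), each contiguous run of a non-zero label corresponds to one repetition. The function `segment_by_labels` is a hypothetical helper, not part of the Ninapro distribution.

```python
import numpy as np

def segment_by_labels(emg, labels):
    """Drop rest samples (label 0) and cut the recording into movement segments.

    emg:    (n_samples, n_electrodes) array
    labels: (n_samples,) integer tag vector; 0 = rest, k > 0 = movement k
    Returns a list of (movement_id, segment) pairs, one per contiguous run
    of a non-zero label, i.e. one per repetition.
    """
    emg = np.asarray(emg)
    labels = np.asarray(labels)
    segments = []
    start = 0
    n = len(labels)
    for i in range(1, n + 1):
        # A run ends at the last sample or where the label changes.
        if i == n or labels[i] != labels[start]:
            if labels[start] != 0:          # keep movement runs, skip rest runs
                segments.append((int(labels[start]), emg[start:i]))
            start = i
    return segments
```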

Characterization. The feature matrix is organized as follows: each row holds the data of subject n performing movement j in repetition k, and so on. For the columns, a literature review yielded 28 different features for EMG signals, each of which is applied to the 10 electrodes. The feature matrix therefore has 14040 rows (27 subjects × 52 movements × 10 repetitions) and 280 columns (28 features × 10 electrodes). The 28 features fall into two types, explained below:
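The indexing of the feature matrix can be made explicit with two small helpers. The row order (subject, then movement, then repetition) follows the text; the column order (all 28 features of the first electrode, then those of the second, and so on) is an assumption, since the paper does not state it. The helper names are hypothetical.

```python
import numpy as np

N_SUBJECTS, N_MOVEMENTS, N_REPS = 27, 52, 10   # Ninapro subjects, movements, repetitions
N_FEATURES, N_ELECTRODES = 28, 10               # features per electrode, electrodes

def row_index(subject, movement, repetition):
    """0-based row for subject n, movement j, repetition k (subject-major order)."""
    return (subject * N_MOVEMENTS + movement) * N_REPS + repetition

def col_index(electrode, feature):
    """0-based column for a feature of an electrode (electrode-major order, assumed)."""
    return electrode * N_FEATURES + feature

# Feature matrix and the movement label of every row.
X = np.zeros((N_SUBJECTS * N_MOVEMENTS * N_REPS, N_ELECTRODES * N_FEATURES))
y = np.repeat(np.tile(np.arange(N_MOVEMENTS), N_SUBJECTS), N_REPS)
print(X.shape)  # (14040, 280)
```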

- Temporal features: variables computed from the signal in the time domain and quantified every T seconds. The features used in this study are: area under the curve, absolute mean value, RMS value, standard deviation, variance, median, entropy, energy and power [1, 2, 11].

- Spectral features: the time-frequency representation of a signal describes how its energy is distributed over both domains, giving a more complete description of the physical phenomenon. The most common techniques for extracting spectral features are the short-time Fourier transform (STFT), the continuous wavelet transform (CWT), the discrete wavelet transform (DWT) and the wavelet packet transform (WPT) [5, 8, 11]. On the one hand, the Fourier transform is widely used in signal processing and analysis, and it has given satisfactory results when the signals are periodic and sufficiently regular; the results are different, however, for signals whose spectrum varies with time (non-stationary signals). On the other hand, the wavelet transform is efficient for the local analysis of non-stationary and rapidly transient signals. Like the windowed Fourier transform, it represents the signal jointly in time and in a second variable (here, scale), so the temporal aspect of the signal is preserved; the difference is that the wavelet transform provides a multi-resolution analysis with dilated windows. The wavelet transform decomposes a function f(t) onto a set of functions \(\psi _{s,\tau }\), called wavelets, that form a basis, yielding the coefficients \(W_{f}\). It is defined in Eq. (1):

$$\begin{aligned} W_{f}(s,\tau )=\int f(t)\, \psi _{s,\tau }(t)\, dt \end{aligned} \tag{1}$$

Wavelets are generated by translating and scaling a single function \(\psi (t)\), called the "mother wavelet", as detailed in Eq. (2).

$$\begin{aligned} \psi _{s,\tau }(t)=\frac{1}{\sqrt{s}}\, \psi \left(\frac{t-\tau }{s}\right) \end{aligned} \tag{2}$$

where s is the scale factor and \(\tau \) is the translation factor.

The wavelet coefficients can be computed by a discrete algorithm that recursively applies discrete high-pass and low-pass filters, as shown in Fig. 3. A Daubechies 2 (db2) wavelet is used with 3 decomposition levels to obtain the approximation and detail coefficients; features are then extracted from the wavelet coefficients as well as from the discretized signal in time.
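The sketch below, written with NumPy only, illustrates a 3-level db2 decomposition followed by the time-domain descriptors applied to the raw segment and to every sub-band. Several details are assumptions not given in the paper: periodic boundary handling, padding odd lengths by repeating the last sample, area under the curve computed with the trapezoidal rule on |x|, and entropy taken as the Shannon entropy of the normalized energy distribution. It yields 9 descriptors for each of 5 bands (45 values), so it does not reproduce the exact set of 28 features per electrode used in the paper; all function names are hypothetical.

```python
import numpy as np

SQ3 = np.sqrt(3.0)
# Daubechies-2 (db2) low-pass filter h and its quadrature-mirror high-pass g.
H = np.array([1 + SQ3, 3 + SQ3, 3 - SQ3, 1 - SQ3]) / (4 * np.sqrt(2.0))
G = np.array([H[3], -H[2], H[1], -H[0]])

def dwt_step(x):
    """One periodized DWT level: returns (approximation, detail), each half length."""
    x = np.asarray(x, dtype=float)
    if len(x) % 2:                       # pad odd lengths by repeating the last sample
        x = np.append(x, x[-1])
    n = len(x)
    idx = (2 * np.arange(n // 2)[:, None] + np.arange(4)[None, :]) % n
    windows = x[idx]                     # (n/2, 4): samples under each filter position
    return windows @ H, windows @ G      # filter, then keep every second output

def wavedec_db2(x, levels=3):
    """Multilevel db2 decomposition: [cA_levels, cD_levels, ..., cD_1]."""
    details = []
    approx = np.asarray(x, dtype=float)
    for _ in range(levels):
        approx, detail = dwt_step(approx)
        details.insert(0, detail)        # coarsest detail ends up first
    return [approx] + details

def temporal_features(x):
    """Time-domain descriptors listed in the text (exact definitions assumed)."""
    x = np.asarray(x, dtype=float)
    a = np.abs(x)
    energy = np.sum(x ** 2)
    p = x ** 2 / energy if energy > 0 else np.zeros_like(x)
    p = p[p > 0]
    return {
        "auc": np.sum((a[1:] + a[:-1]) / 2.0),   # trapezoidal area under |x|, unit spacing
        "mav": a.mean(),
        "rms": np.sqrt(np.mean(x ** 2)),
        "std": x.std(),
        "var": x.var(),
        "median": np.median(x),
        "entropy": -np.sum(p * np.log2(p)),      # Shannon entropy of the energy distribution
        "energy": energy,
        "power": energy / len(x),
    }

def segment_features(segment, levels=3):
    """Descriptors of the raw segment plus descriptors of every db2 sub-band."""
    coeffs = wavedec_db2(segment, levels)
    bands = {"signal": np.asarray(segment, dtype=float), f"cA{levels}": coeffs[0]}
    for i, d in enumerate(coeffs[1:]):
        bands[f"cD{levels - i}"] = d
    return {f"{band}_{name}": value
            for band, sig in bands.items()
            for name, value in temporal_features(sig).items()}
```

Because the periodized db2 transform is orthogonal, the total energy of the coefficients equals the energy of the segment, which is a convenient sanity check when swapping in a library implementation such as PyWavelets.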

Movement Selection. To reduce the number of movements, a comparison methodology is proposed. It consists in taking a group of 2 movements, classifying them and computing their average error, then adding one more movement and repeating the process successively until all 52 movements have been considered.

Different combinations are tested to find the movements that yield the lowest possible classification error. If adding a movement to the working group makes the error rise abruptly, that movement is immediately eliminated. As a result of this process, we obtained 10 movements with a very low classification error and few misclassified objects. The classifier used is KNN; each classification was repeated 25 times per group of movements, and all functions applied are from the PRTools toolbox.
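One possible reading of the movement-selection procedure is a greedy forward search, sketched below in NumPy. The text does not define how large an error increase counts as abrupt, so the threshold `max_jump`, the candidate `order`, the stopping size `target` and the 75/25 split inside `holdout_error` are assumptions, as is k = 5 (taken from the classification stage); the 25 repetitions follow the paper. Labels must be non-negative integers (movement identifiers), and all function names are hypothetical.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=5):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_train[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])

def holdout_error(X, y, rng, k=5, train_frac=0.75, repeats=25):
    """Mean KNN error over repeated random train/test splits."""
    errors = []
    n = len(y)
    for _ in range(repeats):
        perm = rng.permutation(n)
        cut = int(train_frac * n)
        tr, te = perm[:cut], perm[cut:]
        pred = knn_predict(X[tr], y[tr], X[te], k)
        errors.append(np.mean(pred != y[te]))
    return float(np.mean(errors))

def select_movements(X, y, order, max_jump=0.05, target=10, seed=0):
    """Greedy movement selection (hypothetical parameters max_jump, target).

    Starts from the first two movements in `order`, then tries to add each
    remaining movement; a candidate is kept only if the mean error does not
    rise by more than `max_jump` with respect to the current group.
    """
    rng = np.random.default_rng(seed)
    group = list(order[:2])
    mask = np.isin(y, group)
    current = holdout_error(X[mask], y[mask], rng)
    for m in order[2:]:
        if len(group) >= target:
            break
        trial = group + [m]
        mask = np.isin(y, trial)
        err = holdout_error(X[mask], y[mask], rng)
        if err - current <= max_jump:     # no abrupt rise: keep the movement
            group, current = trial, err
    return group, current
```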

Feature Selection. The aim of this stage is to decrease the number of variables by deleting redundant or useless information. Training with a subset of the features instead of the original data set reduces the computational cost and training time of the classifiers and improves their performance.

This stage is carried out with the RELIEF algorithm, originally designed for binary classification, which generalizes to multiclass problems by decomposing them into several binary problems and assigns a contribution weight to each feature [9, 10].

The algorithm sorts the features by their contribution, from highest to lowest; the feature matrix is reorganized accordingly, and the columns (features) that do not contribute to the classification of the movements are eliminated.
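The paper relies on WEKA's RELIEF implementation. As an illustration only, the sketch below implements a simplified ReliefF, Kononenko's multiclass extension of RELIEF, with one nearest hit and one nearest miss per other class, the misses weighted by class priors; it is not WEKA's code. Features are min-max scaled so that per-feature differences are comparable, and the hypothetical `rank_features` returns the column order from highest to lowest weight, which is how the feature matrix is reorganized.

```python
import numpy as np

def relieff_weights(X, y, n_samples=None, seed=0):
    """Simplified ReliefF feature weights (1 nearest hit, 1 nearest miss per class).

    Higher weight = the feature differs more between classes than within a class.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0
    Z = (X - X.min(axis=0)) / span               # scale every feature to [0, 1]
    classes, counts = np.unique(y, return_counts=True)
    prior = dict(zip(classes, counts / len(y)))
    rng = np.random.default_rng(seed)
    m = len(y) if n_samples is None else n_samples
    picks = rng.choice(len(y), size=m, replace=False)
    w = np.zeros(X.shape[1])
    for i in picks:
        dist = np.abs(Z - Z[i]).sum(axis=1)      # Manhattan distance to every sample
        dist[i] = np.inf                          # never pick the sample itself
        hit = np.argmin(np.where(y == y[i], dist, np.inf))
        w -= np.abs(Z[i] - Z[hit]) / m            # penalize within-class differences
        for c in classes:
            if c == y[i]:
                continue
            miss = np.argmin(np.where(y == c, dist, np.inf))
            scale = prior[c] / (1.0 - prior[y[i]])
            w += scale * np.abs(Z[i] - Z[miss]) / m   # reward between-class differences
    return w

def rank_features(X, y, **kwargs):
    """Feature indices ordered from highest to lowest ReliefF weight."""
    return np.argsort(relieff_weights(X, y, **kwargs))[::-1]
```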

To decide how many features are appropriate, tests are performed with the KNN classifier: for each number of features, the movements are classified over 25 iterations and the average error is computed at the end. The result is a vector with the average error as a function of the number of features, shown in Fig. 4.

The number of features is thus reduced to 60 columns, where the error is minimum. This new feature matrix is used in the next step, the comparison of the classifiers. Fig. 5 shows a block diagram of the methodology explained above.
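The choice of the number of features can be sketched as an error curve: keep the first n columns of the RELIEF-ordered matrix, estimate the mean KNN error over 25 random splits, and take the n with the lowest error. The candidate grid, the 75/25 split and k = 5 are assumptions for this illustration; labels must be non-negative integers, and the function names are hypothetical.

```python
import numpy as np

def knn_error(Xtr, ytr, Xte, yte, k=5):
    """Test error of a k-NN majority vote with Euclidean distance."""
    d = np.linalg.norm(Xte[:, None, :] - Xtr[None, :, :], axis=2)
    votes = ytr[np.argsort(d, axis=1)[:, :k]]
    pred = np.array([np.bincount(v).argmax() for v in votes])
    return np.mean(pred != yte)

def error_curve(X_ranked, y, n_features_grid, repeats=25, train_frac=0.75, seed=0):
    """Mean KNN error when keeping only the first n ranked columns, for each n."""
    rng = np.random.default_rng(seed)
    curve = []
    for n in n_features_grid:
        errs = []
        for _ in range(repeats):
            perm = rng.permutation(len(y))
            cut = int(train_frac * len(y))
            tr, te = perm[:cut], perm[cut:]
            errs.append(knn_error(X_ranked[tr, :n], y[tr], X_ranked[te, :n], y[te]))
        curve.append(np.mean(errs))
    return np.array(curve)

def best_n_features(X_ranked, y, n_features_grid, **kwargs):
    """Number of leading columns with the lowest mean error (first one on ties)."""
    curve = error_curve(X_ranked, y, n_features_grid, **kwargs)
    return n_features_grid[int(np.argmin(curve))], curve
```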

2.3 Classification

Classification is the final stage of the process. We carried out a literature review of the algorithms used to classify movements from EMG signals. Many studies address this topic using different algorithms with good performance; we looked for algorithms with short training time and low computational cost that have been tested on multiclass problems. With the new feature matrix, the movements are classified with the following techniques:

1. K-nearest neighbors (KNN): a non-parametric supervised classification method. A simple configuration is used, which assigns to a sample the most frequent class among its K nearest neighbors. Consider a data matrix that stores N cases, each described by n features \((X_{1} \ldots X_{n})\), and a variable C that defines the class of each sample. The N cases are denoted:

$$\begin{aligned} (\mathbf x _{1}, c_{1}), \ldots , (\mathbf x _{N}, c_{N}), \end{aligned} \tag{3}$$

where

$$\begin{aligned}&\mathbf{x }_{i} = (x_{i,1}, \ldots , x_{i,n}) \text { for all } i=1,\ldots ,N,\\&c_{i} \in \lbrace c^{1},\ldots ,c^{m}\rbrace \text { for all } i=1,\ldots ,N, \end{aligned}$$

and \(c^{1},\ldots ,c^{m}\) denote the m possible values of C. Given a new sample \(\mathbf x \) to classify, the algorithm computes the Euclidean distances from \(\mathbf x \) to the N labeled cases, selects the K nearest ones, and assigns to \(\mathbf x \) the most frequent class among them. Through different empirical tests, \(k = 5\) was established [8, 12, 13].

2. Artificial neural network (ANN): a heuristic classification technique that emulates the behavior of a biological brain through a large number of artificial neurons that are connected to each other and activated by means of functions. The model of a single neuron can be represented as in Fig. 6, where \(\mathbf x \) denotes the input values or features and each of the n inputs has an associated weight w (emulating synaptic strength). The input values are multiplied by their weights and summed, giving:

$$\begin{aligned} v = w_{1} x_{1} + w_{2} x_{2} + \cdots + w_{n}x_{n} = \sum _{i=1}^{n}{w_{i} x_{i}}. \end{aligned} \tag{4}$$

The neural network is a collection of neurons connected in three layers. The input layer is associated with the input variables. The hidden layer is not connected directly to the environment; its weights w are computed during training. The output layer is associated with the output variables and is followed by an activation function. The process of finding a set of weights w such that the network produces the desired output for a given input is called training [1, 6, 14]. In this work, a neural network with one hidden layer of 10 neurons is trained with the back-propagation algorithm. All weights are initialized to zero, and the data set is also used as the tuning set. A sigmoid activation function is used.

3. Parzen-density-based classifier: many classifiers are designed mainly for binary classification, but in practical applications the number of classes is often greater than two; in our case, ten different movements must be classified [15, 16]. The Parzen-density-based classifier, in contrast, is designed to work with multiclass problems. This probabilistic classification method requires a smoothing parameter for computing the Gaussian kernel densities, which is optimized.
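For illustration, the following NumPy sketch implements the KNN rule described above (Euclidean distance, majority vote, k = 5), a Parzen-window classifier with a Gaussian kernel, and the single neuron of Eq. (4) followed by a sigmoid. It is not the PRTools implementation used in the paper. In particular, the smoothing parameter `h` is simply passed in, whereas the paper optimizes it, and the back-propagation training of the full network is not sketched. Class and parameter names are hypothetical.

```python
import numpy as np

class KNN:
    """k-nearest-neighbour classifier with Euclidean distance and majority vote."""
    def __init__(self, k=5):
        self.k = k

    def fit(self, X, y):
        self.X, self.y = np.asarray(X, float), np.asarray(y)
        self.classes = np.unique(self.y)
        return self

    def predict(self, X):
        d = np.linalg.norm(np.asarray(X, float)[:, None, :] - self.X[None, :, :], axis=2)
        nearest = self.y[np.argsort(d, axis=1)[:, : self.k]]
        # Votes per class; ties go to the class that comes first in self.classes.
        votes = (nearest[:, :, None] == self.classes[None, None, :]).sum(axis=1)
        return self.classes[np.argmax(votes, axis=1)]

class Parzen:
    """Parzen-window classifier: one Gaussian-kernel density estimate per class."""
    def __init__(self, h=1.0):
        self.h = h                       # smoothing parameter (kernel width)

    def fit(self, X, y):
        self.X, self.y = np.asarray(X, float), np.asarray(y)
        self.classes = np.unique(self.y)
        return self

    def log_density(self, X):
        """Log of the mean Gaussian kernel value per class (shared constant dropped)."""
        X = np.asarray(X, float)
        sq = ((X[:, None, :] - self.X[None, :, :]) ** 2).sum(axis=2) / (2 * self.h ** 2)
        scores = []
        for c in self.classes:
            s = -sq[:, self.y == c]
            smax = s.max(axis=1, keepdims=True)
            # log-mean-exp computed stably to avoid underflow for small h
            scores.append(smax[:, 0] + np.log(np.exp(s - smax).mean(axis=1)))
        return np.column_stack(scores)

    def predict(self, X):
        # Bayes rule: class prior (training frequency) times estimated density.
        n_c = np.array([(self.y == c).sum() for c in self.classes])
        post = self.log_density(X) + np.log(n_c / n_c.sum())
        return self.classes[np.argmax(post, axis=1)]

def neuron(x, w):
    """Single artificial neuron: v = sum_i w_i x_i (Eq. 4), then a sigmoid."""
    v = np.dot(w, x)
    return 1.0 / (1.0 + np.exp(-v))
```

Because every class shares the same kernel width h, the Gaussian normalizing constant is identical across classes and can be dropped when comparing the class scores.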

3 Experimental Setup

For this process we use PRTools, a MATLAB toolbox that provides all the functions needed to classify the movements with the different machine learning techniques and to compute their effectiveness.

From the new feature matrix, two groups are drawn at random: a training set with 75% of the data and a test set with the remaining 25%. The movements are then classified; this step is repeated 30 times with each classifier, and the error and the misclassified movements are stored in two vectors. Finally, the mean error and its standard deviation are computed; these are the measures used to estimate the classification effectiveness of the different machine learning techniques.
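The evaluation protocol can be sketched as a repeated random hold-out. The function below accepts any factory that returns an object with `fit` and `predict` methods; here the vector of misclassified movements is assumed to hold the number of misclassified test samples per run. The name `evaluate` and this interface are assumptions, not PRTools functions.

```python
import numpy as np

def evaluate(make_classifier, X, y, repeats=30, train_frac=0.75, seed=0):
    """Repeated random 75/25 hold-out.

    make_classifier: callable returning an object with fit(X, y) and predict(X).
    Returns the mean test error, its standard deviation, and the number of
    misclassified test samples in each run.
    """
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X), np.asarray(y)
    errors, n_wrong = [], []
    for _ in range(repeats):
        perm = rng.permutation(len(y))
        cut = int(train_frac * len(y))
        tr, te = perm[:cut], perm[cut:]
        pred = make_classifier().fit(X[tr], y[tr]).predict(X[te])
        wrong = pred != y[te]
        errors.append(wrong.mean())      # error rate of this run
        n_wrong.append(int(wrong.sum()))  # misclassified samples of this run
    errors = np.array(errors)
    return errors.mean(), errors.std(), n_wrong
```

Reporting the standard deviation next to the mean, as in Fig. 7, shows how stable each classifier is across random splits, not just how accurate it is on average.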

4 Results, Discussion and Future Work

Based on the mean error and standard deviation, Fig. 7 shows that the KNN and Parzen-density-based classifiers have the best overall performance, with recognition rates of 94.82% and 94.8%, respectively. The neural network does not come close to the other two classifiers: with the 60 selected features it reaches an 85.26% recognition rate, possibly because the number of features or training samples is not sufficient to train the network, since its average number of misclassified movements does not differ much from that of KNN and Parzen (Table 1). Fig. 4 also shows that good performance can be obtained with the KNN algorithm using only twenty features.

Fig. 7 also reveals the greater uniformity of the KNN and Parzen classifiers across the tests, with standard deviations of 0.79% for KNN, 0.72% for the Parzen-density-based classifier and 4.52% for the neural network. These results are comparable to those obtained in [1], where back-propagation neural networks reached 98.21% performance but classified only 5 movements. In [13], the k-NN recognition rate was 84.9% for five wrist movements; that article highlights the difficulty of placing the electrodes on the forearm, whereas the database used here was acquired with a strict protocol that avoids this problem, which makes a difference in the results obtained. Ninapro also has more acquisition channels, which provide more information, and the most relevant information can be identified through the RELIEF algorithm.

As future work, we propose comparing more classifiers, such as SVM and LDA, and examining variations of the algorithms, such as weighted KNN and FF-ANN. Other performance measures, such as specificity, sensitivity and computational cost, must also be evaluated. We will also explore applying this knowledge to a practical application, such as a hand prosthesis or real-time human-machine interaction using forearm EMG signals, aiming at a high classification rate.

References

[1] Phinyomark, A., Phukpattaranont, P., Limsakul, C.: A review of control methods for electric power wheelchairs based on electromyography signals with special emphasis on pattern recognition. IETE Techn. Rev. 28(4), 316–326 (2011)

[2] Aguiar, L.F., Bó, A.P.: Hand gestures recognition using electromyography for bilateral upper limb rehabilitation. In: 2017 IEEE Life Sciences Conference (LSC), pp. 63–66. IEEE (2017)

[3] Halaki, M., Ginn, K.: Normalization of EMG signals: to normalize or not to normalize and what to normalize to? (2012)

[4] Atzori, M., et al.: Electromyography data for non-invasive naturally-controlled robotic hand prostheses. Sci. Data 1, 140053 (2014)

[5] Podrug, E., Subasi, A.: Surface EMG pattern recognition by using DWT feature extraction and SVM classifier. In: The 1st Conference of Medical and Biological Engineering in Bosnia and Herzegovina (CMBEBIH 2015), 13–15 March 2015 (2015)

[6] Vicario Vazquez, S.A., Oubram, O., Ali, B.: Intelligent recognition system of myoelectric signals of human hand movement. In: Brito-Loeza, C., Espinosa-Romero, A. (eds.) ISICS 2018. CCIS, vol. 820, pp. 97–112. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-76261-6_8

[7] Atzori, M., et al.: Characterization of a benchmark database for myoelectric movement classification. IEEE Trans. Neural Syst. Rehabil. Eng. 23(1), 73–83 (2015)

[8] Krishna, V.A., Thomas, P.: Classification of EMG signals using spectral features extracted from dominant motor unit action potential. Int. J. Eng. Adv. Technol. 4(5), 196–200 (2015)

[9] Kononenko, I.: Estimating attributes: analysis and extensions of RELIEF. In: Bergadano, F., De Raedt, L. (eds.) ECML 1994. LNCS, vol. 784, pp. 171–182. Springer, Heidelberg (1994). https://doi.org/10.1007/3-540-57868-4_57

[10] Kira, K., Rendell, L.A.: A practical approach to feature selection. In: Machine Learning Proceedings 1992, pp. 249–256. Elsevier (1992)

[11] Romo, H., Realpe, J., Jojoa, P., Cauca, U.: Surface EMG signals analysis and its applications in hand prosthesis control. Rev. Av. en Sistemas e Informática 4(1), 127–136 (2007)

[12] Shin, S., Tafreshi, R., Langari, R.: A performance comparison of hand motion EMG classification. In: 2014 Middle East Conference on Biomedical Engineering (MECBME), pp. 353–356. IEEE (2014)

[13] Kim, K.S., Choi, H.H., Moon, C.S., Mun, C.W.: Comparison of k-nearest neighbor, quadratic discriminant and linear discriminant analysis in classification of electromyogram signals based on the wrist-motion directions. Curr. Appl. Phys. 11(3), 740–745 (2011)

[14] Arozi, M., Putri, F.T., Ariyanto, M., Caesarendra, W., Widyotriatmo, A., Setiawan, J.D., et al.: Electromyography (EMG) signal recognition using combined discrete wavelet transform based on artificial neural network (ANN). In: International Conference of Industrial, Mechanical, Electrical, and Chemical Engineering (ICIMECE), pp. 95–99. IEEE (2016)

[15] Pan, Z.W., Xiang, D.H., Xiao, Q.W., Zhou, D.X.: Parzen windows for multi-class classification. J. Complex. 24(5), 606–618 (2008)

[16] Kurzynski, M., Wolczowski, A.: Hetero- and homogeneous multiclassifier systems based on competence measure applied to the recognition of hand grasping movements. In: Piętka, E., Kawa, J., Wieclawek, W. (eds.) Information Technologies in Biomedicine. AISC, vol. 4, pp. 163–174. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-06596-0_15

Acknowledgements

This work is supported by the "Smart Data Analysis Systems - SDAS" group (http://sdas-group.com), as well as the "Grupo de Investigación en Ingeniería Eléctrica y Electrónica - GIIEE" from Universidad de Nariño. The authors also acknowledge the research project supported by Agreement No. 095 of November 20th, 2014, by VIPRI from Universidad de Nariño.


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Viveros-Melo, A. et al. (2018). Exploration of Characterization and Classification Techniques for Movement Identification from EMG Signals: Preliminary Results. In: Serrano C., J., Martínez-Santos, J. (eds) Advances in Computing. CCC 2018. Communications in Computer and Information Science, vol 885. Springer, Cham. https://doi.org/10.1007/978-3-319-98998-3_11


DOI: https://doi.org/10.1007/978-3-319-98998-3_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-98997-6

Online ISBN: 978-3-319-98998-3

eBook Packages: Computer Science, Computer Science (R0)