Abstract

In this study, we present a hybrid model that combines the advantages of the identification, verification and triplet models for person re-identification. Specifically, the proposed model simultaneously uses Online Instance Matching (OIM), verification and triplet losses to train a carefully designed network. Given a triplet of images, the model outputs the identities of the three input images and a similarity score, while making the L2 distance between the mismatched pair larger than that between the matched pair. Experiments on two benchmark datasets (CUHK01 and Market-1501) show that the proposed method achieves favorable accuracy compared with other state-of-the-art methods.



Keywords

- Convolutional neural networks

- Person re-identification

- Identification model

- Verification model

- Triplet model

1 Introduction

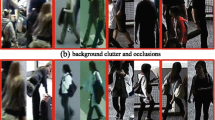

As a basic task of multi-camera surveillance systems, person re-identification aims to re-identify a query pedestrian observed by non-overlapping cameras, or by a single camera at different times [1]. Person re-identification is an important part of many computer vision tasks, including behavior understanding, threat detection [2,3,4,5,6] and video surveillance [7,8,9]. Recently, the task has drawn significant attention in the computer vision community. Despite great research efforts, it remains challenging because the appearance of the same pedestrian may change significantly across non-overlapping camera views.

Recently, owing to the great success of convolutional neural networks (CNNs) in the computer vision community [10,11,12,13,14], many CNN-based methods have been introduced to address person re-identification, and they have achieved promising performance. Unlike hand-crafted methods, CNN-based methods learn deep features automatically in an end-to-end manner [28]. These CNN-based methods for person re-identification can be roughly divided into three categories: identification models, verification models and triplet models. The three categories differ in input data form and loss function, and each has its own advantages and limitations. Our motivation is to combine the advantages of the three models to learn more discriminative deep pedestrian descriptors.

The identification model treats person re-identification as a multi-class classification task. It can make full use of the annotation information of datasets [1]. However, it does not explicitly consider the distance metric between image pairs. The verification model regards person re-identification as a binary classification task: it takes paired images as input and outputs whether they belong to the same person. Thus, the verification model considers the relationship between different images, but it does not make full use of the label information of datasets. As for the triplet model [15], it takes a triplet unit \( (x_{i}, x_{j}, x_{k}) \) as input, where \( x_{i} \) and \( x_{k} \) are the mismatched pair and \( x_{i} \) and \( x_{j} \) are the matched pair. Given a triplet of images, the model tries to make the distance between \( x_{i} \) and \( x_{k} \) larger than the distance between \( x_{i} \) and \( x_{j} \). Accordingly, the triplet model can learn a similarity measurement for image pairs, but it also cannot take full advantage of the label information of datasets, since it uses only weak labels [1].

We design a CNN-based architecture that combines the three popular types of deep models used for person re-identification, i.e., identification, verification and triplet models. The proposed architecture jointly outputs the IDs of the input triplet images and the similarity score, while forcing the L2 distance between the mismatched pair to be larger than that between the matched pair, thus enhancing the discriminative ability of the learned deep features.

2 Proposed Method

As shown in Fig. 1, the proposed method is a triplet-based CNN model that combines identification, verification and triplet losses.

2.1 Loss Function

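Identification Loss. In this work, we use the Online Instance Matching (OIM) loss [16] instead of the cross-entropy loss to supervise the identification submodule. For one image M, the probability that it belongs to ID j is written as:

\( p_{j} = \frac{\exp(v_{j}^{T} M/\partial)}{\sum_{i} \exp(v_{i}^{T} M/\partial) + \sum_{k} \exp(\mu_{k}^{T} M/\partial)} \)

where \( v_{j}^{T} \) is the transpose of the j-th column of the lookup table, \( \mu_{k}^{T} \) is the transpose of the k-th feature stored in the circular queue, the two sums run over all columns of the lookup table and all entries of the queue, and \( \partial \) is the temperature scalar. Following [16], the OIM loss for an image whose ground-truth ID is t is \( -\log p_{t} \).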
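The OIM probability and loss can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: features are plain lists of floats, the helper names are hypothetical, the temperature default of 0.1 and the momentum `gamma = 0.5` are assumed values, and the lookup-table refresh (blend the target column with the new feature, then L2-normalize) follows the description of OIM in [16] rather than anything stated in this paper. Queue entries appear only in the denominator, so they act as extra negatives.

```python
import math
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v] if norm > 0 else list(v)


def oim_probabilities(m: Sequence[float], lookup_table, queue, temperature: float = 0.1) -> List[float]:
    """Probability p_j that feature m belongs to each labeled ID j (hypothetical helper)."""
    # Scaled similarity to every labeled ID (lookup table) and every queued feature.
    logits = [dot(v, m) / temperature for v in lookup_table]
    logits += [dot(u, m) / temperature for u in queue]
    shift = max(logits)  # subtract the max score for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    denom = sum(exps)
    # Only lookup-table entries correspond to IDs; queue terms just enlarge the denominator.
    return [e / denom for e in exps[: len(lookup_table)]]


def oim_loss(m: Sequence[float], target: int, lookup_table, queue, temperature: float = 0.1) -> float:
    """Negative log-probability of the ground-truth ID."""
    return -math.log(oim_probabilities(m, lookup_table, queue, temperature)[target])


def update_lookup_table(lookup_table, target: int, m: Sequence[float], gamma: float = 0.5) -> None:
    """Assumed momentum refresh of the target column, followed by L2 normalization."""
    blended = [gamma * v + (1.0 - gamma) * x for v, x in zip(lookup_table[target], m)]
    lookup_table[target] = l2_normalize(blended)
```

For example, with a lookup table `[[1.0, 0.0], [0.0, 1.0]]`, a queue `[[-1.0, 0.0]]` and `m = [1.0, 0.0]`, the loss for target 0 is close to zero, while the loss for target 1 is about 10.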

Verification Loss. For the verification subnetwork, we treat person re-identification as a binary classification task. As in a standard classification model, we adopt the cross-entropy loss as the verification loss function:

\( L_{V} = -\sum_{i=1}^{2} n_{i} \log(\hat{p}_{i}) \)

in which \( \hat{p}_{i} \) is the predicted probability of class i and \( n_{i} \) is the label of the image pair: when the two images show the same pedestrian, \( n_{1} = 1 \) and \( n_{2} = 0 \); otherwise, \( n_{1} = 0 \) and \( n_{2} = 1 \).
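A minimal sketch of this two-class cross-entropy, assuming the verification head produces two raw scores (logits) per pair, ordered as [same, different] to match the n1/n2 label convention. The helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def pair_labels(same_person: bool) -> Tuple[int, int]:
    """(n1, n2) = (1, 0) for a matched pair, (0, 1) otherwise."""
    return (1, 0) if same_person else (0, 1)


def softmax(logits: Sequence[float]) -> List[float]:
    shift = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def verification_loss(logits: Sequence[float], same_person: bool) -> float:
    """Cross-entropy -sum_i n_i * log(p_hat_i) over the two classes."""
    n = pair_labels(same_person)
    p_hat = softmax(logits)
    # Terms with n_i = 0 vanish, so they are skipped rather than evaluated.
    return -sum(n_i * math.log(p_i) for n_i, p_i in zip(n, p_hat) if n_i)
```

With equal logits the loss is log 2 for either label; confident, correct scores drive it toward zero.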

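Triplet Loss. The triplet subnetwork is adopted to make the Euclidean distance between positive pairs smaller than that between negative pairs. For one triplet unit \( R_{i} = (x_{i}, x_{j}, x_{k}) \), where \( x_{i} \) and \( x_{j} \) form the matched pair and \( x_{i} \) and \( x_{k} \) form the mismatched pair, the triplet loss function can be written as:

\( L_{T} = \left[ d(x_{i}, x_{j}) - d(x_{i}, x_{k}) + thre \right]_{+} \)

where \( thre > 0 \) is a threshold (margin), \( [x]_{+} = \max(0, x) \), and \( d(\cdot,\cdot) \) is the Euclidean distance between the deep features of two images.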
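The hinge form of the triplet loss can be sketched as follows, assuming the deep features of the three images have already been computed as plain lists of floats. The margin default `thre=0.3` is an assumed value, not one reported in this paper.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(f_i: Sequence[float], f_j: Sequence[float], f_k: Sequence[float], thre: float = 0.3) -> float:
    """[d(x_i, x_j) - d(x_i, x_k) + thre]_+ on the features of one triplet.

    f_i: anchor feature, f_j: matched (positive) feature, f_k: mismatched (negative) feature.
    """
    return max(0.0, euclidean(f_i, f_j) - euclidean(f_i, f_k) + thre)
```

A loss of zero means the negative is already farther from the anchor than the positive by at least `thre`, so that triplet contributes no gradient.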

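Hybrid Loss. The deep architecture is jointly supervised by three OIM losses (one for each image of the triplet), two cross-entropy verification losses and one triplet loss. During the training phase, the hybrid loss function can be written as:

\( L = \alpha_{1} \sum_{m=1}^{3} L_{OIM}^{(m)} + \alpha_{2} \sum_{n=1}^{2} L_{V}^{(n)} + \alpha_{3} L_{T} \)

in which \( \alpha_{1} \), \( \alpha_{2} \) and \( \alpha_{3} \) are the balance parameters.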
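A sketch of how the six loss terms could be combined, assuming a plain weighted sum in which α1 scales the three OIM terms, α2 the two verification terms and α3 the triplet term. The weights default to 1.0 purely for illustration, since the values of the balance parameters are not given here; the function name is hypothetical.

```python
from typing import Sequence


def hybrid_loss(
    oim_losses: Sequence[float],
    verification_losses: Sequence[float],
    triplet_loss_value: float,
    alpha1: float = 1.0,
    alpha2: float = 1.0,
    alpha3: float = 1.0,
) -> float:
    """Weighted sum of three OIM, two verification and one triplet term (assumed form)."""
    if len(oim_losses) != 3 or len(verification_losses) != 2:
        raise ValueError("expected three OIM losses and two verification losses")
    return (
        alpha1 * sum(oim_losses)
        + alpha2 * sum(verification_losses)
        + alpha3 * triplet_loss_value
    )
```

In a deep learning framework the same expression would be built from tensors, so a single backward pass propagates all six terms into the shared network.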

2.2 Training Strategies

We use the PyTorch framework to implement our network, with Adaptive Moment Estimation (Adam) as the optimizer. We adopt the two training strategies proposed in [17]. Specifically, for a large-scale dataset such as Market-1501, we train the designed model directly on its training set. For small datasets (e.g., CUHK01), we first train the model on a large-scale person re-identification dataset (e.g., Market-1501) and then fine-tune it on the small dataset.

3 Experiments

We first resize the training images to 256 × 128 pixels and then subtract the mean image from the resized images. For our hybrid model, it is crucial to organize each mini-batch so that it serves the training of the identification, verification and triplet subnetworks at the same time. In this study, we use the protocol proposed by: we randomly sample Q identities and then select R images of each identity from the training set, so that QR images constitute one mini-batch. For each anchor among these QR images, we choose the hardest positive and the hardest negative sample to form the triplet units. In addition, we randomly select 100 image pairs for verification training. For the identification subnetwork, we use all QR images in the mini-batch.
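The mini-batch organization described above can be sketched as follows. Sampling Q identities with R images each resembles the P×K scheme used for batch-hard triplet training; the helper names, the rule of skipping identities with fewer than R images, and drawing verification pairs uniformly from all index pairs in the batch are assumptions for illustration. "Hardest" is taken here as the farthest positive and the closest negative in Euclidean feature distance.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def sample_minibatch(labels: Sequence[int], Q: int, R: int, rng: random.Random) -> List[int]:
    """Indices of Q*R training images: R images from each of Q random identities."""
    by_id: Dict[int, List[int]] = defaultdict(list)
    for idx, pid in enumerate(labels):
        by_id[pid].append(idx)
    # Assumption: identities with fewer than R images are skipped.
    eligible = [pid for pid, idxs in by_id.items() if len(idxs) >= R]
    batch: List[int] = []
    for pid in rng.sample(eligible, Q):
        batch.extend(rng.sample(by_id[pid], R))
    return batch


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplets(features, labels) -> List[Tuple[int, int, int]]:
    """For each anchor, pair its farthest positive with its closest negative."""
    triplets = []
    n = len(features)
    for a in range(n):
        pos = [p for p in range(n) if p != a and labels[p] == labels[a]]
        neg = [q for q in range(n) if labels[q] != labels[a]]
        if not pos or not neg:
            continue  # this anchor cannot form a triplet within the batch
        hardest_pos = max(pos, key=lambda p: euclidean(features[a], features[p]))
        hardest_neg = min(neg, key=lambda q: euclidean(features[a], features[q]))
        triplets.append((a, hardest_pos, hardest_neg))
    return triplets


def random_pairs(batch_size: int, num_pairs: int, rng: random.Random) -> List[Tuple[int, int]]:
    """Draw distinct index pairs within the batch for verification training."""
    all_pairs = [(i, j) for i in range(batch_size) for j in range(i + 1, batch_size)]
    return rng.sample(all_pairs, min(num_pairs, len(all_pairs)))
```

In a training loop, `sample_minibatch` would select the images, the network would embed them, and `batch_hard_triplets` and `random_pairs(len(batch), 100, rng)` would build the triplet and verification inputs from that same batch, while all QR images feed the identification loss.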

(1) Evaluation on CUHK01

For this dataset, 485 pedestrians are randomly selected to form the training set, and the remaining 486 identities are used for testing. From Table 1, we can observe that the proposed method outperforms most of the compared approaches, which demonstrates its effectiveness.
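A random identity-level split of this kind can be sketched as below, assuming CUHK01's 971 identities are indexed 0 to 970 and that a fixed seed is used for reproducibility (the seed value and the function name are assumptions). Splitting by identity rather than by image keeps training and test identities disjoint, as the re-identification setting requires.

```python
import random
from typing import List, Tuple


def split_identities(num_identities: int = 971, num_train: int = 485, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly split identity IDs into disjoint training and test sets."""
    ids = list(range(num_identities))
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    return sorted(ids[:num_train]), sorted(ids[num_train:])
```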

(2) Evaluation on Market-1501

We compare the proposed model with eight state-of-the-art methods on the Market-1501 dataset, reporting mean average precision (mAP), Rank-1 and Rank-5 accuracy. All results are based on single-query evaluation. As Table 2 shows, the proposed model achieves an mAP of 77.43%, a Rank-1 accuracy of 91.60% and a Rank-5 accuracy of 98.33%, outperforming all the other competing methods, which further demonstrates the effectiveness of the proposed method.
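The single-query metrics can be sketched as follows: for each query, the gallery is ranked by ascending distance, Rank-k records whether a correct match appears in the top k, and average precision averages the precision at each correct match; mAP and Rank-k are then averaged over queries. This is a simplified sketch with hypothetical function names; the official Market-1501 protocol additionally discards gallery images of the same identity captured by the same camera as the query, along with designated junk images, which this code does not do.

```python
from typing import Dict, List, Sequence, Tuple


def rank_k_hit(ranked_gallery_labels: Sequence[int], query_label: int, k: int) -> bool:
    return query_label in ranked_gallery_labels[:k]


def average_precision(ranked_gallery_labels: Sequence[int], query_label: int) -> float:
    hits = 0
    precision_sum = 0.0
    for rank, label in enumerate(ranked_gallery_labels, start=1):
        if label == query_label:
            hits += 1
            precision_sum += hits / rank  # precision at this correct match
    return precision_sum / hits if hits else 0.0


def evaluate(
    distances: Sequence[Sequence[float]],
    query_labels: Sequence[int],
    gallery_labels: Sequence[int],
    ks: Tuple[int, ...] = (1, 5),
) -> Tuple[float, Dict[int, float]]:
    """Return (mAP, {k: Rank-k accuracy}) from a query-by-gallery distance matrix."""
    cmc = {k: 0 for k in ks}
    ap_total = 0.0
    for q, row in enumerate(distances):
        order = sorted(range(len(row)), key=lambda g: row[g])  # nearest first
        ranked: List[int] = [gallery_labels[g] for g in order]
        for k in ks:
            cmc[k] += rank_k_hit(ranked, query_labels[q], k)
        ap_total += average_precision(ranked, query_labels[q])
    nq = len(distances)
    return ap_total / nq, {k: cmc[k] / nq for k in ks}
```

Because AP rewards every correct match in the ranking, mAP can be noticeably lower than Rank-1 when a query has several true matches and some of them rank low.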

Table 2. Results (mAP, Rank-1 and Rank-5 matching accuracy in %) on the Market-1501 dataset in the single-shot setting. ‘-’ means that no result is reported.

4 Conclusions

In this paper, we design a hybrid deep model for person re-identification. The proposed model combines the identification, verification and triplet losses to handle intra-class and inter-class distances. Through this hybrid strategy, the model learns a similarity measurement and discriminative features at the same time. The proposed model outperforms most state-of-the-art methods on the CUHK01 and Market-1501 datasets.

References

1. Zheng, L., et al.: Person Re-identification: Past, Present and Future (2016). arXiv preprint arXiv:1610.02984

2. Huang, D.S.: A constructive approach for finding arbitrary roots of polynomials by neural networks. IEEE Trans. Neural Netw. 15(2), 477–491 (2004)

3. Huang, D.S., et al.: A case study for constrained learning neural root finders. Appl. Math. Comput. 165, 699–718 (2005)

4. Huang, D.S., et al.: Zeroing polynomials using modified constrained neural network approach. IEEE Trans. Neural Netw. 16, 721–732 (2005)

5. Huang, D.S., et al.: A new partitioning neural network model for recursively finding arbitrary roots of higher order arbitrary polynomials. Appl. Math. Comput. 162, 1183–1200 (2005)

6. Huang, D.S., et al.: Dilation method for finding close roots of polynomials based on constrained learning neural networks. Phys. Lett. A 309, 443–451 (2003)

7. Huang, D.S.: The local minima-free condition of feedforward neural networks for outer-supervised learning. IEEE Trans. Syst. Man Cybern. Part B Cybern. 28, 477 (1998)

8. Huang, D.S.: The united adaptive learning algorithm for the link weights and shape parameter in RBFN for pattern recognition. Int. J. Pattern Recognit. Artif. Intell. 11, 873–888 (1997)

9. Huang, D.S., Ma, S.D.: Linear and nonlinear feedforward neural network classifiers: a comprehensive understanding. J. Intell. Syst. 9, 1–38 (1999)

10. Huang, D.S., Du, J.X.: A constructive hybrid structure optimization methodology for radial basis probabilistic neural networks. IEEE Trans. Neural Netw. 19, 2099 (2008)

11. Wang, X.F., Huang, D.S.: A novel density-based clustering framework by using level set method. IEEE Trans. Knowl. Data Eng. 21, 1515–1531 (2009)

12. Shang, L., et al.: Palmprint recognition using FastICA algorithm and radial basis probabilistic neural network. Neurocomputing 69, 1782–1786 (2006)

13. Zhao, Z.Q., et al.: Human face recognition based on multi-features using neural networks committee. Pattern Recogn. Lett. 25, 1351–1358 (2004)

14. Huang, D.S., et al.: A neural root finder of polynomials based on root moments. Neural Comput. 16, 1721–1762 (2004)

15. Ding, S., et al.: Deep feature learning with relative distance comparison for person re-identification. Pattern Recogn. 48, 2993–3003 (2015)

16. Xiao, T., et al.: Joint detection and identification feature learning for person search. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3376–3385. IEEE (2017)

17. Geng, M., et al.: Deep transfer learning for person re-identification (2016)

18. Chen, S.Z., et al.: Deep ranking for person re-identification via joint representation learning. IEEE Trans. Image Process. 25, 2353–2367 (2016)

19. Matsukawa, T., et al.: Hierarchical Gaussian descriptor for person re-identification. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1363–1372 (2016)

20. Xiao, T., et al.: Learning deep feature representations with domain guided dropout for person re-identification. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1249–1258 (2016)

21. Chen, W., et al.: Beyond triplet loss: a deep quadruplet network for person re-identification. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1320–1329 (2017)

22. Jin, H., et al.: Deep person re-identification with improved embedding and efficient training. In: IEEE International Joint Conference on Biometrics, pp. 261–267 (2017)

23. Liu, H., et al.: End-to-end comparative attention networks for person re-identification. IEEE Trans. Image Process. 26(7), 3492–3506 (2017)

24. Zheng, Z., et al.: A discriminatively learned CNN embedding for person re-identification. ACM Trans. Multimedia Comput. Commun. Appl. (TOMM) 14(1), 13 (2016)

25. Zheng, Z., et al.: Unlabeled samples generated by GAN improve the person re-identification baseline in vitro, pp. 3774–3782 (2017). arXiv preprint arXiv:1701.07717

26. Zhong, Z., et al.: Re-ranking person re-identification with k-reciprocal encoding. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3652–3661 (2017)

27. Hermans, A., et al.: In Defense of the Triplet Loss for Person Re-Identification (2017). arXiv preprint arXiv:1703.07737

28. Huang, D.S.: Systematic Theory of Neural Networks for Pattern Recognition. Publishing House of Electronic Industry of China (1996)

Acknowledgements

This work was supported by grants from the National Science Foundation of China (Nos. 61472280, 61672203, 61472173, 61572447, 61772357, 31571364, 61520106006, 61772370, 61702371 and 61672382), the China Postdoctoral Science Foundation (Grant Nos. 2016M601646 & 2017M611619), and the “BAGUI Scholar” Program of Guangxi Zhuang Autonomous Region of China. De-Shuang Huang is the corresponding author of this paper.


Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Wu, D. et al. (2018). A Hybrid Deep Model for Person Re-Identification. In: Huang, DS., Gromiha, M., Han, K., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2018. Lecture Notes in Computer Science(), vol 10956. Springer, Cham. https://doi.org/10.1007/978-3-319-95957-3_25


DOI: https://doi.org/10.1007/978-3-319-95957-3_25


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-95956-6

Online ISBN: 978-3-319-95957-3

eBook Packages: Computer Science, Computer Science (R0)