Abstract

The aim of this chapter is to (a) provide a broad didactical treatment of the first-order stochastic differential equation model—also known as the continuous-time (CT) first-order vector autoregressive (VAR(1)) model—and (b) argue for and illustrate the potential of this model for the study of psychological processes using intensive longitudinal data. We begin by describing what the CT-VAR(1) model is and how it relates to the more commonly used discrete-time VAR(1) model. Assuming no prior knowledge on the part of the reader, we introduce important concepts for the analysis of dynamic systems, such as stability and fixed points. In addition we examine why applied researchers should take a continuous-time approach to psychological phenomena, focusing on both the practical and conceptual benefits of this approach. Finally, we elucidate how researchers can interpret CT models, describing the direct interpretation of CT model parameters as well as tools such as impulse response functions, vector fields, and lagged parameter plots. To illustrate this methodology, we reanalyze a single-subject experience-sampling dataset with the R package ctsem; for didactical purposes, R code for this analysis is included, and the dataset itself is publicly available.

2.1 Introduction

The increased availability of intensive longitudinal data—such as obtained with ambulatory assessments, experience sampling, ecological momentary assessments, and electronic diaries—has opened up new opportunities for researchers to investigate the dynamics of psychological processes, that is, the way psychological variables evolve, vary, and relate to one another over time (cf. Bolger and Laurenceau 2013; Chow et al. 2011; Hamaker et al. 2005). A useful concept in this respect is that of people being dynamic systems whose current state depends on their preceding states. For instance, we may be interested in the relationship between momentary stress and anxiety. We can think of stress and anxiety as each defining an axis in a two-dimensional space, and let the values of stress and anxiety at each moment in time define a position in this space. Over time, the point that represents a person’s momentary stress and anxiety moves through this two-dimensional space, and our goal is to understand the lawfulness that underlies these movements.

There are two frameworks that can be used to describe such movements: (1) the discrete-time (DT) framework, in which the passage of time is treated in discrete steps, and (2) the continuous-time (CT) framework, in which time is viewed as a continuous variable. Most psychological researchers are at least somewhat familiar with the DT approach, as it is the basis of the vast majority of longitudinal models used in the social sciences. In contrast, CT models have gained relatively little attention in fields such as psychology: This is despite the fact that many psychological researchers have been advocating their use for a long time, claiming that the CT approach overcomes practical and conceptual problems associated with the DT approach (e.g., Boker 2002; Chow et al. 2005; Oud and Delsing 2010; Voelkle et al. 2012). We believe there are two major hurdles that hamper the adoption of the CT approach in psychological research. First, the estimation of CT models typically requires the use of specialized software (cf. Chow et al. 2007; Driver et al. 2017; Oravecz et al. 2016) or unconventional use of more common software (cf. Boker et al. 2010a, 2004; Steele and Ferrer 2011). Second, the results from CT models are not easily understood, and researchers may not know how to interpret and represent their findings.

Our goal in this chapter is twofold. First, we introduce readers to the perspective of psychological processes as CT processes; we focus on the conceptual reasons for which the CT perspective is extremely valuable in moving our understanding of processes in the right direction. Second, we provide a didactical description of how to interpret the results of a CT model, based on our analysis of an empirical dataset. We examine the direct interpretation of model parameters, examine different ways in which the dynamics described by the parameters can be understood and visualized, and explain how these are related to one another throughout. We will restrict our primary focus to the simplest DT and CT models, that is, first-order (vector) autoregressive models and first-order differential equations.

The organization of this chapter is as follows. First, we provide an overview of the DT and CT models under consideration. Second, we discuss the practical and conceptual reasons researchers should adopt a CT modeling approach. Third, we illustrate the use and interpretation of the CT model using a bivariate model estimated from empirical data. Fourth, we conclude with a brief discussion of more complex models which may be of interest to substantive researchers.

2.2 Two Frameworks

The relationship between the DT and CT frameworks has been discussed extensively by a variety of authors. Here, we briefly reiterate the main issues, as this is vital to the subsequent discussion. For a more thorough treatment of this topic, the reader is referred to Voelkle et al. (2012). We begin by presenting the first-order vector autoregressive model in DT, followed by the presentation of the first-order differential equation in CT. Subsequently, we show how these models are connected and discuss certain properties which can be inferred from the parameters of the model. For simplicity, and without loss of generality, we describe single-subject DT and CT models, in terms of observed variables. Extensions for multiple-subject data, and extensions for latent variables, in which the researchers can account for measurement error by additionally specifying a measurement model, are readily available (in the case of CT models, see, e.g., Boker et al. 2004; Driver et al. 2017; Oravecz and Tuerlinckx 2011).

2.2.1 The Discrete-Time Framework

DT models are those models for longitudinal data in which the passage of time is accounted for only with regard to the order of observations. If the true data-generating model for a process is a DT model, then the process only takes on values at discrete moments in time (e.g., hours of sleep per day or monthly salary). Such models are typically applied to data that consist of some set of variables measured repeatedly over time. These measurements typically show autocorrelation, that is, serial dependencies between the observed values of these variables at consecutive measurement occasions. We can model these serial dependencies using (discrete-time) autoregressive equations, which describe the relationship between the values of variables observed at consecutive measurement occasions.

The specific type of DT model that we will focus on in this chapter is the first-order vector autoregressive (VAR(1)) model (cf. Hamilton 1994). Given a set of V variables of interest measured at N different occasions, the VAR(1) describes the relationship between \(\boldsymbol{y}_{\tau}\), a V × 1 column vector of variables measured at occasion τ (for τ = 2, …, N), and the values those same variables took on at the preceding measurement occasion, the vector \(\boldsymbol{y}_{\tau-1}\). This model can be expressed as

\[
\boldsymbol{y}_{\tau} = \boldsymbol{c} + \boldsymbol{\Phi}\boldsymbol{y}_{\tau-1} + \boldsymbol{\epsilon}_{\tau} \tag{2.1}
\]

where Φ represents a V × V matrix with autoregressive and cross-lagged coefficients that regress \(\boldsymbol{y}_{\tau}\) on \(\boldsymbol{y}_{\tau-1}\). The V × 1 column vector \(\boldsymbol{\epsilon}_{\tau}\) represents the variable-specific random shocks or innovations at that occasion, which are normally distributed with mean zero and a V × V variance-covariance matrix Ψ. Finally, c represents a V × 1 column vector of intercepts.

In the case of a stationary process, the mean μ and the variance-covariance matrix of the variables \(\boldsymbol{y}_{\tau}\) (generally denoted Σ) do not change over time (see Footnote 1). Then, the vector μ represents the long-run expected values of the random variables, \(E(\boldsymbol{y}_{\tau})\), and is a function of the vector of intercepts and the matrix with lagged regression coefficients, that is, \(\boldsymbol{\mu} = (\boldsymbol{I} - \boldsymbol{\Phi})^{-1}\boldsymbol{c}\), where I is a V × V identity matrix (cf. Hamilton 1994). In terms of a V-dimensional dynamical system of interest, μ represents the equilibrium position of the system. By definition, τ is limited to positive integers; that is, there is no 0.1th or 1.5th measurement occasion.
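As a minimal sketch of the relation between the intercepts, the lagged coefficients, and the equilibrium, the following Python snippet computes the equilibrium as the inverse of (I − Φ) applied to c for a bivariate model and checks that a noise-free recursion settles there. The function name `var1_equilibrium` and all numerical values are hypothetical and chosen only for illustration.

```python
def var1_equilibrium(phi, c):
    """Return mu = (I - Phi)^{-1} c for a bivariate VAR(1) model."""
    (p11, p12), (p21, p22) = phi
    # Entries of M = I - Phi
    m11, m12 = 1.0 - p11, -p12
    m21, m22 = -p21, 1.0 - p22
    det = m11 * m22 - m12 * m21
    if abs(det) < 1e-12:
        raise ValueError("I - Phi is singular: no unique equilibrium")
    # Apply the closed-form 2x2 inverse to c
    mu1 = (m22 * c[0] - m12 * c[1]) / det
    mu2 = (-m21 * c[0] + m11 * c[1]) / det
    return [mu1, mu2]


# Hypothetical values, chosen only for illustration
phi = [[0.5, 0.1],
       [0.2, 0.4]]
c = [1.0, 2.0]
mu = var1_equilibrium(phi, c)

# Without shocks, the recursion y_tau = c + Phi y_{tau-1} settles at mu
y = [0.0, 0.0]
for _ in range(200):
    y = [c[i] + phi[i][0] * y[0] + phi[i][1] * y[1] for i in range(2)]

print("equilibrium mu:", mu)
print("noise-free recursion after 200 steps:", y)
```

The closed-form 2 × 2 inverse keeps the sketch dependency-free; for larger systems a linear-algebra library would be the natural choice.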

Both the single-subject and multilevel versions of the VAR(1) model have frequently been used to analyze intensive longitudinal data of psychological variables, including symptoms of psychopathology, such as mood- and affect-based measures (Bringmann et al. 2015, 2016; Browne and Nesselroade 2005; Moberly and Watkins 2008; Rovine and Walls 2006). In these cases, the autoregressive parameters \(\phi_{ii}\) are often interpreted as reflecting the stability, inertia, or carry-over of a particular affect or behavior (Koval et al. 2012; Kuppens et al. 2010, 2012). The cross-lagged effects (i.e., the off-diagonal elements \(\phi_{ij}\) for i ≠ j) quantify the lagged relationships, sometimes referred to as the spillover, between different variables in the model. These parameters are often interpreted in substantive terms, either as predictive or Granger-causal relationships between different aspects of affect or behavior (Bringmann et al. 2013; Gault-Sherman 2012; Granger 1969; Ichii 1991; Watkins et al. 2007). For example, if the standardized cross-lagged effect of \(y_{1,\tau-1}\) on \(y_{2,\tau}\) is larger than the cross-lagged effect of \(y_{2,\tau-1}\) on \(y_{1,\tau}\), researchers may draw the conclusion that \(y_{1}\) is the driving force or dominant variable of that pair (Schuurman et al. 2016). As such, substantive researchers are typically interested in the (relative) magnitudes and signs of these parameters.

2.2.2 The Continuous-Time Framework

In contrast to the DT framework, which treats values of processes indexed by observation τ, the CT framework treats processes as functions of the continuous variable time t: The processes being modeled are assumed to vary continuously with respect to time, meaning that these variables may take on values if observed at any imaginable moment. CT processes can be modeled using a broad class of differential equations, allowing for a wide degree of diversity in the types of dynamics that are being modeled. It is important to note that many DT models have a differential equation counterpart. For the VAR(1) model, the CT equivalent is the first-order stochastic differential equation (SDE), where stochastic refers to the presence of random innovations or shocks.

The first-order SDE describes how the position of the V-dimensional system at a certain point in time, y(t), relative to the equilibrium position μ, is related to the rate of change of the process with respect to time (i.e., \(\frac {d\boldsymbol {y}(t)}{dt}\)) in that same instant. The latter can also be thought of as a vector of velocities, describing in what direction and with what magnitude the system will move an instant later in time (i.e., the ratio of the change in position over some time interval, to the length of that time interval, as the length of the time interval approaches zero). The first-order SDE can be expressed as

\[
\frac{d\boldsymbol{y}(t)}{dt} = \boldsymbol{A}\left(\boldsymbol{y}(t) - \boldsymbol{\mu}\right) + \boldsymbol{G}\frac{d\boldsymbol{W}(t)}{dt} \tag{2.2}
\]

where y(t), \(\frac {d\boldsymbol {y}(t)}{dt}\), and μ are the V × 1 column vectors described above, y(t) − μ represents the position as a deviation from the equilibrium, and the V × V matrix A represents the drift matrix relating \(\frac {d\boldsymbol {y}(t)}{dt}\) to (y(t) − μ). The diagonal elements of A, relating the position in a certain dimension to the velocity in that same dimension, are referred to as auto-effects, while the off-diagonal elements are referred to as cross-effects. The second part on the right-hand side of Eq. (2.2) represents the stochastic part of the model: W(t) denotes the so-called Wiener process, broadly speaking a continuous-time analogue of a random walk. This stochastic element has a variance-covariance matrix GG′ = Q, which is often referred to as the diffusion matrix (for details see Voelkle et al. 2012).
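To make the differential form more tangible, the sketch below simulates a bivariate first-order SDE with the Euler-Maruyama scheme, a standard numerical approximation that is not the estimation method used in this chapter. The function name `simulate_sde`, the drift matrix, the matrix G, and the step size are all hypothetical choices made for illustration.

```python
import math
import random


def simulate_sde(A, mu, G, y0, dt, n_steps, rng):
    """Euler-Maruyama path of dy = A (y - mu) dt + G dW for two variables."""
    y = list(y0)
    path = [tuple(y)]
    for _ in range(n_steps):
        # Independent Wiener increments, each distributed N(0, dt)
        dw = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(2)]
        dev = [y[0] - mu[0], y[1] - mu[1]]
        y = [
            y[i]
            + (A[i][0] * dev[0] + A[i][1] * dev[1]) * dt   # drift part
            + G[i][0] * dw[0] + G[i][1] * dw[1]            # stochastic part
            for i in range(2)
        ]
        path.append(tuple(y))
    return path


# Hypothetical parameter values (not estimates from this chapter)
A = [[-1.0, 0.5], [0.3, -2.0]]
mu = [0.0, 0.0]
G = [[1.0, 0.0], [-0.2, 0.8]]

noisy = simulate_sde(A, mu, G, y0=[2.0, -1.0], dt=0.01, n_steps=2000,
                     rng=random.Random(1))

# Setting G to zero leaves only the drift, which pulls the system toward mu
zero_G = [[0.0, 0.0], [0.0, 0.0]]
drift_only = simulate_sde(A, mu, zero_G, y0=[2.0, -1.0], dt=0.01,
                          n_steps=2000, rng=random.Random(1))

print("final state with noise:", noisy[-1])
print("final state without noise:", drift_only[-1])
```

With the stochastic part switched off, the trajectory decays to the equilibrium; with it switched on, the process keeps fluctuating around the equilibrium.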

The model representation in Eq. (2.2) is referred to as the differential form as it includes the derivative \(\frac {d\boldsymbol {y}(t)}{dt}\). The same model can be represented in the integral form, in which the derivatives are integrated out, sometimes referred to as the solution of the derivative model. The integral form of this particular first-order differential equation is known as the CT-VAR(1) or Ornstein-Uhlenbeck model (Oravecz et al. 2011). In this form, we can describe the same system but now in terms of the positions of the system (i.e., the values the variables take on) at different points in time. For notational simplicity, we can represent y(t) − μ as \(\boldsymbol{y}^{c}(t)\), denoting the position of the process as a deviation from its equilibrium.

The CT-VAR(1) model can be written as

\[
\boldsymbol{y}^{c}(t) = e^{\boldsymbol{A}\Delta t}\,\boldsymbol{y}^{c}(t - \Delta t) + \boldsymbol{w}(\Delta t) \tag{2.3}
\]

where A has the same meaning as above, the V × 1 vector \(\boldsymbol{y}^{c}(t - \Delta t)\) represents the position as a deviation from equilibrium some time interval Δt earlier, e represents the matrix exponential function, and the V × 1 column vector w(Δt) represents the stochastic innovations, the integral form of the Wiener process in Eq. (2.2). These innovations are normally distributed with a variance-covariance matrix that is a function of the time interval between measurements Δt, the drift matrix A, and the diffusion matrix Q (cf. Voelkle et al. 2012; see Footnote 2). As the variables in the model have been centered around their equilibrium, we omit any intercept term. The relationship between lagged variables, that is, the relationships between the positions of the centered variables in the multivariate space, separated by some time interval Δt, is an (exponential) function of the drift matrix A and the length of that time interval.

2.2.3 Relating DT and CT Models

It is clear from the integral form of the first-order SDE given in Eq. (2.3) that the relationship between lagged values of variables is dependent on the length of the time interval between these lagged values. As such, if the DT-VAR(1) model in Eq. (2.1) is fitted to data generated by the CT model considered here, then the autoregressive and cross-lagged effects matrix Φ will be a function of the time interval Δt between the measurements. We denote this dependency by writing Φ(Δt). This characteristic of the DT model has been referred to as the lag problem (Gollob and Reichardt 1987; Reichardt 2011).

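The precise relationship between the CT-VAR(1) and DT-VAR(1) effect matrices is given by the well-known equality

\[
\boldsymbol{\Phi}(\Delta t) = e^{\boldsymbol{A}\Delta t} \tag{2.4}
\]

Despite this relatively simple relationship, it should be noted that taking the exponential of a matrix is not equivalent to taking the exponential of each of the elements of the matrix. That is, any lagged effect parameter \(\phi_{ij}(\Delta t)\), relating variable i and variable j across time points, is not only dependent on the corresponding CT cross-effect \(a_{ij}\) but is a nonlinear function of the interval and every other element of the matrix A. For example, in the bivariate case, the DT cross-lagged effect of \(y_{1}(t - \Delta t)\) on \(y_{2}(t)\), denoted \(\phi_{21}(\Delta t)\), is given by

\[
\phi_{21}(\Delta t) = \frac{a_{21}\left(e^{\frac{1}{2}\left(a_{11} + a_{22} + \sqrt{a_{11}^{2} + 4a_{12}a_{21} - 2a_{11}a_{22} + a_{22}^{2}}\right)\Delta t} - e^{\frac{1}{2}\left(a_{11} + a_{22} - \sqrt{a_{11}^{2} + 4a_{12}a_{21} - 2a_{11}a_{22} + a_{22}^{2}}\right)\Delta t}\right)}{\sqrt{a_{11}^{2} + 4a_{12}a_{21} - 2a_{11}a_{22} + a_{22}^{2}}} \tag{2.5}
\]

where e represents the scalar exponential. In higher-dimensional models, these relationships quickly become intractable. For a derivation of Eq. (2.5), we refer readers to the Appendix.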
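The dependence of the lagged effects on the interval can be sketched numerically. The snippet below evaluates the matrix exponential of a 2 × 2 drift matrix in closed form (valid only when the eigenvalues are real and distinct) and contrasts the resulting cross-lagged effect with the naive elementwise exponential. The helper `expm_2x2` and the drift values are hypothetical; a general-purpose matrix exponential from a numerical library would normally be preferred.

```python
import math


def expm_2x2(A, dt):
    """Phi(dt) = exp(A * dt) for a 2x2 A with real, distinct eigenvalues."""
    (a11, a12), (a21, a22) = A
    disc = (a11 - a22) ** 2 + 4.0 * a12 * a21
    if disc <= 0.0:
        raise ValueError("this sketch only covers real, distinct eigenvalues")
    root = math.sqrt(disc)
    lam1 = 0.5 * (a11 + a22 + root)
    lam2 = 0.5 * (a11 + a22 - root)
    e1, e2 = math.exp(lam1 * dt), math.exp(lam2 * dt)
    # exp(A dt) = [e1 (A - lam2 I) - e2 (A - lam1 I)] / (lam1 - lam2)
    return [
        [(e1 * (a11 - lam2) - e2 * (a11 - lam1)) / root,
         a12 * (e1 - e2) / root],
        [a21 * (e1 - e2) / root,
         (e1 * (a22 - lam2) - e2 * (a22 - lam1)) / root],
    ]


# Hypothetical drift matrix, chosen only for illustration
A = [[-1.0, 0.5],
     [0.3, -2.0]]

for dt in (0.25, 0.5, 1.0, 2.0, 4.0):
    Phi = expm_2x2(A, dt)
    naive = math.exp(A[1][0] * dt)  # elementwise exponential: NOT phi_21
    print(f"dt={dt:<5} phi_21={Phi[1][0]:.4f}  elementwise exp={naive:.4f}")
```

The printed values show the cross-lagged effect first growing and then shrinking with the interval, whereas the elementwise exponential grows without bound and has no interpretation as a lagged effect.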

This complicated nonlinear relationship between the elements of Φ and the time interval has major implications for applied researchers who wish to interpret the parameters of a DT-VAR(1) model in the substantive terms outlined above. In the general multivariate case, the size, sign, and relative strengths of both autoregressive and cross-lagged effects may differ depending on the value of the time interval used in data collection (Deboeck and Preacher 2016; Dormann and Griffin 2015; Oud 2007; Reichardt 2011). As such, conclusions that researchers draw regarding the stability of processes and the nature of how different processes relate to one another may differ greatly depending on the time interval used.

While the relationship in Eq. (2.4) describes the DT-VAR(1) effects matrix we would find given the data generated by a CT-VAR(1) model, the reader should note that not all DT-VAR(1) processes have a straightforward equivalent representation as a CT-VAR(1). For example, a univariate discrete-time AR(1) process with a negative autoregressive parameter cannot be represented as a CT-AR(1) process; as the exponential function is always positive, there is no A that satisfies Eq. (2.4) for Φ < 0. As such, we can refer to DT-VAR(1) models with a CT-VAR(1) equivalent as those which exhibit “positive autoregression.” We will focus throughout on the CT-VAR(1) as the data-generating model (see Footnote 3).
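In the univariate case, Eq. (2.4) reduces to a scalar exponential, so the CT auto-effect follows from the DT autoregressive parameter by taking a logarithm. The short sketch below, with the hypothetical helper `ct_auto_effect`, shows why a negative autoregressive parameter has no real-valued CT counterpart.

```python
import math


def ct_auto_effect(phi, dt):
    """Solve phi = exp(a * dt) for the univariate CT auto-effect a."""
    if phi <= 0.0:
        # exp(a * dt) is strictly positive for every real a
        raise ValueError("no real-valued CT-AR(1) equivalent for phi <= 0")
    return math.log(phi) / dt


# Hypothetical autoregressive parameter observed at an interval of 2 time units
a = ct_auto_effect(0.5, dt=2.0)
print("implied auto-effect:", a)
print("implied phi at interval 1:", math.exp(a * 1.0))

try:
    ct_auto_effect(-0.3, dt=2.0)
except ValueError as err:
    print("negative autoregression:", err)
```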

2.2.4 Types of Dynamics: Eigenvalues, Stability, and Equilibrium

Both the DT-VAR(1) model and the CT-VAR(1) model can be used to describe a variety of different types of dynamic behavior. As the dynamic behavior of a system is always understood with regard to how the variables in the system move in relation to the equilibrium position, often dynamic behaviors are described by differentiating the type of equilibrium position or fixed point in the system (Strogatz 2014). In the general multivariate case, we can understand these different types of dynamic behavior or fixed points with respect to the eigenvalues of the effects matrices A or Φ (see the Appendix for a more detailed explanation of the relationship between these two matrices and eigenvalues). In this chapter we will focus on stable processes, in which, given a perturbation, the system of interest will inevitably return to the equilibrium position. We limit our treatment to these types of processes, because we believe these are most common and most relevant for applied researchers. A brief description of other types of fixed points and how they relate to the eigenvalues of the effects matrix A is given in the discussion section; for a more complete taxonomy, we refer readers to Strogatz (2014, p. 136).

In DT settings, stable processes are those for which the absolute values of the eigenvalues of Φ are smaller than one. In DT applications researchers also typically discuss the need for stationarity, that is, time-invariant mean and variance, as introduced above. Stability of a process ensures that stationarity in relation to the mean and variance hold. For CT-VAR(1) processes, stability is ensured if the real parts of the eigenvalues of A are negative. It is interesting to note that the equilibrium position of stable processes can be related to our observed data in various ways: In some applications μ is constrained to be equal to the mean of the observed values (e.g., Hamaker et al. 2005; Hamaker and Grasman 2015), while in others the equilibrium can be specified a priori or estimated to be equal to some (asymptotic) value (e.g., Bisconti et al. 2004).
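The two stability conditions can be checked directly from the eigenvalues. The following sketch does so for bivariate systems using the closed-form eigenvalues of a 2 × 2 matrix; the function names and the example matrices are hypothetical.

```python
import cmath


def eigenvalues_2x2(M):
    """Eigenvalues (possibly complex) of a 2x2 matrix."""
    (m11, m12), (m21, m22) = M
    trace = m11 + m22
    det = m11 * m22 - m12 * m21
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def is_stable_ct(A):
    """CT stability: all eigenvalues of A have negative real parts."""
    return all(ev.real < 0.0 for ev in eigenvalues_2x2(A))


def is_stable_dt(Phi):
    """DT stability: all eigenvalues of Phi lie inside the unit circle."""
    return all(abs(ev) < 1.0 for ev in eigenvalues_2x2(Phi))


# Hypothetical matrices, chosen only for illustration
print(is_stable_ct([[-1.0, 0.5], [0.3, -2.0]]))   # real, negative eigenvalues
print(is_stable_ct([[0.0, 1.0], [-1.0, -0.5]]))   # complex, negative real part
print(is_stable_ct([[0.2, 0.0], [0.0, -1.0]]))    # one positive eigenvalue
print(is_stable_dt([[0.5, 0.1], [0.2, 0.4]]))     # eigenvalues 0.6 and 0.3
print(is_stable_dt([[1.1, 0.0], [0.0, 0.5]]))     # one eigenvalue above one
```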

We can further distinguish between dynamic processes that have real eigenvalues, complex eigenvalues, or, in the case of systems with more than two variables, a mix of both. In the section “Making Sense of CT Models,” we will focus on the interpretation of a CT-VAR(1) model with real, negative, non-equal eigenvalues. We can describe the equilibrium position of this system as a stable node. In the discussion section, we examine another type of system which has been the focus of psychological research, sometimes described as a damped linear oscillator (e.g., Boker et al. 2010b), in which the eigenvalues of A are complex, with a negative real part. The fixed point of such a system is described as a stable spiral. Further detail on the interpretation of these two types of systems is given in the corresponding sections.

2.3 Why Researchers Should Adopt a CT Process Perspective

There are both practical and theoretical benefits to CT model estimation over DT model estimation. Here we will discuss three of these practical advantages which have received notable attention in the literature. We then discuss the fundamental conceptual benefits of treating psychological processes as continuous-time systems.

The first practical benefit to CT model estimation is that the CT model deals well with observations taken at unequal intervals, often the case in experience sampling and ecological momentary assessment datasets (Oud and Jansen 2000; Voelkle and Oud 2013; Voelkle et al. 2012). Many studies use random intervals between measurements, for example, to avoid participant anticipation of measurement occasions, potentially resulting in unequal time intervals both within and between participants. The DT model, however, is based on the assumption of equally spaced measurements, and as such estimating the DT model from unequally spaced data will result in an estimated Φ matrix that is a blend of different Φ(Δt) matrices for a range of values of Δt.

The second practical benefit of CT modeling over DT modeling is that, when measurements are equally spaced, the lagged effects estimated by the DT models are not generalizable beyond the time interval used in data collection. Several different researchers have demonstrated that utilizing different time intervals of measurement can lead researchers to reach very different conclusions regarding the values of parameters in Φ (Oud and Jansen 2000; Reichardt 2011; Voelkle et al. 2012). The CT model has thus been promoted as facilitating better comparisons of results between studies, as the CT effects matrix A is independent of time interval (assuming a sufficient frequency of measurement to capture the relevant dynamics).

Third, the application of CT models allows us to explore how cross-lagged effects are expected to change depending on the time interval between measurements, using the relationship expressed in Eq. (2.4). Some authors have used this relationship to identify the time interval at which cross-lagged effects are expected to reach a maximum (Deboeck and Preacher 2016; Dormann and Griffin 2015). Such information could be used to decide upon the “optimal” time interval that should be used in gathering data in future research.
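For a bivariate system with real, distinct, negative eigenvalues, the interval at which the cross-lagged effects peak can be obtained in closed form by setting the derivative of the cross-lagged effect with respect to the interval to zero. The sketch below illustrates this under those assumptions; the function names and the drift matrix are hypothetical, and the cited authors may locate the maximum by other means.

```python
import math


def real_eigenvalues(A):
    """Real, distinct eigenvalues of a 2x2 matrix, largest first."""
    (a11, a12), (a21, a22) = A
    disc = (a11 - a22) ** 2 + 4.0 * a12 * a21
    if disc <= 0.0:
        raise ValueError("this sketch only covers real, distinct eigenvalues")
    root = math.sqrt(disc)
    return 0.5 * (a11 + a22 + root), 0.5 * (a11 + a22 - root)


def phi21(A, dt):
    """Cross-lagged effect of variable 1 on variable 2 at interval dt."""
    lam1, lam2 = real_eigenvalues(A)
    return A[1][0] * (math.exp(lam1 * dt) - math.exp(lam2 * dt)) / (lam1 - lam2)


def peak_interval(A):
    """Interval at which the cross-lagged effects are largest in magnitude."""
    lam1, lam2 = real_eigenvalues(A)
    if lam1 >= 0.0:
        raise ValueError("this sketch assumes a stable system")
    # Solve lam1 * exp(lam1 * t) = lam2 * exp(lam2 * t) for t
    return math.log(lam2 / lam1) / (lam1 - lam2)


# Hypothetical drift matrix, chosen only for illustration
A = [[-1.0, 0.5],
     [0.3, -2.0]]
t_star = peak_interval(A)
print("interval with the largest cross-lagged effect:", round(t_star, 3))
print("phi_21 at that interval:", round(phi21(A, t_star), 4))
```

Because both off-diagonal elements of the matrix exponential share the same time profile in the bivariate case, the two cross-lagged effects peak at the same interval.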

While these practical concerns regarding the use of DT models for CT processes are legitimate, there may be instances in which alternative practical solutions can be used, without necessitating the estimation of a CT model. For instance, the problem of unequally spaced measurements in DT modeling can be addressed by defining a time grid and adding missing data to your observations, to make the occasions approximately equally spaced in time. Some simulation studies indicate that this largely reduces the bias that results from using DT estimation of unequally spaced data (De Haan-Rietdijk et al. 2017).

Furthermore, the issue of comparability between studies that use different time intervals can be solved, in certain circumstances, by a simple transformation of the estimated Φ matrix, described in more detail by Kuiper and Ryan (2018). Given an estimate of Φ(Δt), we can solve for the underlying A using Eq. (2.4). This is known as the “indirect method” of CT model estimation (Oud et al. 1993). However this approach cannot be applied in all circumstances, as it involves using the matrix logarithm, the inverse of the matrix exponential function. As the matrix logarithm function in the general case does not give a unique solution, this method is only appropriate if both the estimated Φ(Δt) and true underlying A matrices have real eigenvalues only (for further discussion of this issue, see Hamerle et al. 1991).
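A minimal sketch of this transformation for the bivariate case is given below. It computes the principal matrix logarithm of an estimated lagged-effects matrix in closed form and divides by the interval. The function name `drift_from_phi` and the example values are hypothetical, and the sketch deliberately refuses inputs whose eigenvalues are not real, distinct, and positive, which is where the non-uniqueness discussed above arises.

```python
import math


def drift_from_phi(Phi, dt):
    """Recover A = log(Phi) / dt for a 2x2 Phi with real, distinct,
    positive eigenvalues (principal matrix logarithm)."""
    (p11, p12), (p21, p22) = Phi
    disc = (p11 - p22) ** 2 + 4.0 * p12 * p21
    if disc <= 0.0:
        raise ValueError("eigenvalues of Phi must be real and distinct")
    root = math.sqrt(disc)
    m1 = 0.5 * (p11 + p22 + root)
    m2 = 0.5 * (p11 + p22 - root)
    if m2 <= 0.0:
        raise ValueError("eigenvalues of Phi must be positive")
    l1, l2 = math.log(m1), math.log(m2)

    # log(Phi) = [l1 (Phi - m2 I) - l2 (Phi - m1 I)] / (m1 - m2)
    def entry(p, on_diagonal):
        d = 1.0 if on_diagonal else 0.0
        return (l1 * (p - m2 * d) - l2 * (p - m1 * d)) / (root * dt)

    return [[entry(p11, True), entry(p12, False)],
            [entry(p21, False), entry(p22, True)]]


# Hypothetical DT estimate obtained at an interval of 1 time unit
Phi_hat = [[0.6, 0.1],
           [0.15, 0.3]]
A_hat = drift_from_phi(Phi_hat, dt=1.0)
print("implied drift matrix:", A_hat)
```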

However, the CT perspective has added value above and beyond the potential practical benefits discussed above. Multiple authors have argued that psychological phenomena, such as stress, affect, and anxiety, do not vary in discrete steps over time but likely vary and evolve in a continuous and smooth manner (Boker 2002; Gollob and Reichardt 1987). Viewing psychological processes as CT dynamic systems has important implications for the way we conceptualize the influence of psychological variables on each other. Gollob and Reichardt (1987) give the example of a researcher who is interested in the effect of taking aspirin on headaches: This effect may be zero shortly after taking the painkiller, substantial an hour or so later, and near zero again after 24 h. All of these results may be considered as accurately portraying the effect of painkillers on headaches for a specific time interval, although each of these intervals considered separately represents only a snapshot of the process of interest.

It is only through examining the underlying dynamic trajectories, and exploring how the cross-lagged relationships evolve and vary as a function of the time interval, that we can come to a more complete picture of the dynamic system of study. We believe that—while the practical benefits of CT modeling are substantial—the conceptual framework of viewing psychological variables as CT processes has the potential to transform longitudinal research in this field.

2.4 Making Sense of CT Models

In this section, we illustrate how researchers can evaluate psychological variables as dynamic CT processes by describing the interpretation of the drift matrix parameters A. We describe multiple ways in which the dynamic behavior of the model in general, as well as specific model parameters, can be understood. In order to aid researchers who are unfamiliar with this type of analysis, we take a broad approach in which we incorporate the different ways in which researchers interested in dynamical systems and similar models interpret their results. For instance, Boker and colleagues (e.g., Boker et al. 2010b) typically interpret the differential form of the model directly; in the econometrics literature, it is typical to plot specific trajectories using impulse response functions (Johnston and DiNardo 1997); in the physics tradition, the dynamics of the system are inspected using vector fields (e.g., Boker and McArdle 1995); the work of Voelkle, Oud, and others (e.g., Deboeck and Preacher 2016; Voelkle et al. 2012) typically focuses on the integral form of the equation and visually inspecting the time interval dependency of lagged effects.

We will approach the interpretation of a single CT model from these four angles and show how they each represent complementary ways to understand the same system. For ease of interpretation, we focus here on a bivariate system; the analysis of larger systems is addressed in the discussion section.

2.4.1 Substantive Example from Empirical Data

To illustrate the diverse ways in which the dynamics described by the CT-VAR(1) model can be understood, we make use of a substantive example. This example is based on our analysis of a publicly available single-subject ESM dataset (Kossakowski et al. 2017). The subject in question is a 57-year-old male with a history of major depression. The data consist of momentary, daily, and weekly items relating to affective states. The assessment period includes a double-blind phase in which the dosage of the participant’s antidepressant medication was reduced. We select only those measurements made in the initial phases of the study, before medication reduction; it is only during this period that we would expect the system of interest to be stable. The selected measurements consist of 286 momentary assessments over a period of 42 consecutive days. The modal time interval between momentary assessments was 1.766 h (interquartile range of 1.250–3.323).

For our analysis we selected two momentary assessment items, “I feel down” and “I am tired,” which we will name Down (Do) and Tired (Ti), respectively. Feeling down is broadly related to assessments of negative affect (Meier and Robinson 2004), and numerous cross-sectional analyses have suggested a relationship between negative affect and feelings of physical tiredness or fatigue (e.g., Denollet and De Vries 2006). This dataset afforded us the opportunity to investigate the links between these two processes from a dynamic perspective. Each variable was standardized before the analysis to facilitate ease of interpretation of the parameter estimates. Positive values of Do indicate that the participant felt down more than average, negative values indicate below-average feelings of being down and likewise for positive and negative values of Ti.

The analysis was conducted using the ctsem package in R (Driver et al. 2017). Full details of the analysis, including R code, can be found in the online supplementary materials. Parameter estimates and standard errors are given in Table 2.1, including estimates of the stochastic part of the CT model, represented by the diffusion matrix Q. The negative value of \(\gamma_{21}\) indicates that there is a negative covariance between the stochastic input and the rates of change of Do and Ti; in terms of the CT-VAR(1) representation, there is a negative covariance between the residuals of Do and Ti in the same measurement occasion. Further interpretation of the diffusion matrix falls beyond the scope of the current chapter. As the analysis is meant as an illustrative example only, we will throughout interpret the estimated drift matrix parameters as though they are true population parameters.

2.4.2 Interpreting the Drift Parameters

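The drift matrix relating the processes Down (Do(t)) and Tired (Ti(t)) is given by

\[
\boldsymbol{A} = \begin{bmatrix} -0.995 & 0.573 \\ 0.375 & -2.416 \end{bmatrix}
\]

As the variables are standardized, the equilibrium position is μ = [0, 0] (i.e., E[Do(t)] = E[Ti(t)] = 0). The drift matrix A describes how the position of the system at any particular time t (i.e., Do(t) and Ti(t)) relates to the velocity or rate of change of the process, that is, how the position of the process is changing. The system of equations which describe the dynamic system made up of Down and Tired is given by

\[
E\left[\frac{d}{dt}\begin{bmatrix} Do(t) \\ Ti(t) \end{bmatrix}\right] = \begin{bmatrix} -0.995 & 0.573 \\ 0.375 & -2.416 \end{bmatrix}\begin{bmatrix} Do(t) \\ Ti(t) \end{bmatrix}
\]

such that

\[
\begin{aligned}
E\left[\frac{dDo(t)}{dt}\right] &= -0.995\,Do(t) + 0.573\,Ti(t) \\
E\left[\frac{dTi(t)}{dt}\right] &= 0.375\,Do(t) - 2.416\,Ti(t)
\end{aligned}
\]

where the rates of change of Down and Tired at any point in time are both dependent on the positions of both Down and Tired at that time.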

Before interpreting any particular parameter in the drift matrix, we can determine the type of dynamic process under consideration by inspecting the eigenvalues of A. The eigenvalues of A are \(\lambda_{1} = -2.554\) and \(\lambda_{2} = -0.857\); since both eigenvalues are negative, the process under consideration is stable. This means that if the system takes on a position away from equilibrium (e.g., due to a random shock from the stochastic part of the model on either Down or Tired), the system will inevitably return to its equilibrium position over time. It is for this reason that the equilibrium position or fixed point in stable systems is also described as the attractor point, and stable systems are described as equilibrium-reverting. As the eigenvalues of the system are real-valued as well as negative, the system returns to equilibrium with an exponential decay; when the process is far away from the equilibrium, it takes on a greater velocity, that is, moves faster toward equilibrium. We can refer to the type of fixed point in this system as a stable node (Strogatz 2014).

Typical of such an equilibrium-reverting process, we see negative CT auto-effects \(a_{11} = -0.995\) and \(a_{22} = -2.416\). This reflects that, if either variable in the system takes on a position away from the equilibrium, it will take on a velocity of opposite sign to this deviation, that is, a velocity which returns the process to equilibrium. For higher values of Do(t), the rate of change of Do(t) is of greater (negative) magnitude, that is, the velocity toward the equilibrium is higher. In addition, the auto-effect of Ti(t) is more than twice as strong (in an absolute sense) as the auto-effect of Do(t). If there were no cross-effects present, this would imply that Ti(t) returns to equilibrium faster than Do(t); however, as there are cross-effects present, such statements cannot be made in the general case from inspecting the auto-effects alone.

In this case the cross-effects of Do(t) and Ti(t) on each other’s rates of change are positive rather than negative. Moreover, the cross-effect of Ti(t) on the rate of change of Do(t) (\(a_{12} = 0.573\)) is slightly stronger than the corresponding cross-effect of Do(t) on the rate of change of Ti(t) (\(a_{21} = 0.375\)). These cross-effects quantify the force that each component of the system exerts on the other. However, depending on what values each variable takes on at a particular point in time t, that is, the position of the system in each of the Do(t) and Ti(t) dimensions, this may translate to Do(t) pushing Ti(t) to return faster to its equilibrium or to deviate away from its equilibrium position and vice versa. To better understand both the cross-effects and auto-effects described by A, it is helpful to visualize the possible trajectories of our two-dimensional system.
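The quantities discussed in this section can be reproduced with a few lines of code. The sketch below takes the four drift estimates quoted above, computes the eigenvalues of the drift matrix, and evaluates the drift-implied velocities at a few positions. The positions and the helper names are hypothetical and serve only to show how the same positive cross-effect can push a variable toward or away from its equilibrium.

```python
import math

# Drift estimates quoted in this section (rows and columns ordered Do, Ti)
A = [[-0.995, 0.573],
     [0.375, -2.416]]


def eigenvalues_2x2(A):
    """Real eigenvalues of a 2x2 matrix, largest first."""
    (a11, a12), (a21, a22) = A
    disc = (a11 - a22) ** 2 + 4.0 * a12 * a21
    if disc < 0.0:
        raise ValueError("complex eigenvalues are outside this sketch")
    root = math.sqrt(disc)
    return 0.5 * (a11 + a22 + root), 0.5 * (a11 + a22 - root)


def velocity(A, do, ti):
    """Drift-implied rates of change (dDo/dt, dTi/dt) at position (do, ti)."""
    return (A[0][0] * do + A[0][1] * ti,
            A[1][0] * do + A[1][1] * ti)


lam_slow, lam_fast = eigenvalues_2x2(A)
print("eigenvalues:", round(lam_fast, 3), round(lam_slow, 3))

# Hypothetical positions, in standard-deviation units
for do, ti in [(1.0, 0.0), (1.0, 1.0), (1.0, -1.0)]:
    print((do, ti), "->", velocity(A, do, ti))
```

At the position (1, 0), Tired sits at its equilibrium yet has a positive velocity, so the elevated value of Down pushes it away from equilibrium. At (1, −1), the same cross-effect adds to the velocity that returns Tired to its equilibrium.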

2.4.3 Visualizing Trajectories

We will now describe and apply two related tools which allow us to visualize the trajectories of the variables in our model over time: impulse response functions and vector fields. These tools can help us to understand the dynamic system we are studying, by exploring the dynamic behavior which results from the drift matrix parameters.

2.4.3.1 Impulse Response Functions

Impulse response functions (IRFs) are typically used in the econometrics literature to aid in making forecasts based on a DT-VAR model. The idea behind this is to allow us to explore how an impulse to one variable in the model at occasion τ will affect the values of both itself and the other variables in the model at occasions τ + 1, τ + 2, τ + 3, and so on. In the stochastic systems we focus on in this chapter, we can conceptualize these impulses as random perturbations or innovations or alternatively as external interventions in the system (see Note 4). IRFs thus represent the trajectories of the variables in the model over time, following a particular impulse, assuming no further stochastic innovations (see Johnston and DiNardo 1997, Chapter 9).

To specify impulses in an IRF, we generally assign a value to a single variable in the system at some initial occasion, \(y_{i,\tau}\). The corresponding values of the other variables at the initial occasion, \(y_{j,\tau}\) with \(j \neq i\), are usually calculated based on, for instance, the covariance in the stochastic innovations, Ψ, or the stable covariance between the processes, Σ. Such an approach is beneficial in at least two ways: first, it allows researchers to specify impulses which are more likely to occur in an observed dataset; second, it aids researchers in making more accurate future predictions or forecasts. For a further discussion of this issue in relation to DT-VAR models, we refer the reader to Johnston and DiNardo (1997, pp. 298–300). Below, we will take a simplified approach and specify bivariate impulses at substantively interesting values.

The IRF can easily be extended for use with the CT-VAR(1) model. We can calculate the impulse response of our system by taking the integral form of the CT-VAR(1) model in Eq. (2.3) and (a) plugging in the A matrix for our system, (b) choosing some substantively interesting set of impulses y(t = 0), and (c) calculating y(t) for increasing values of t > 0. To illustrate this procedure, we will specify four substantively interesting sets of impulses. The four sets of impulses shown here include y(0) = [1, 0], reflecting what happens when Do(0) takes on a positive value 1 standard deviation above the person’s mean, while Ti(0) is at equilibrium; y(0) = [0, 1], reflecting when Ti(0) takes on a positive value of corresponding size while Do(0) is at equilibrium; y(0) = [1, 1], reflecting what happens when Do(0) and Ti(0) both take on values 1 standard deviation above the mean; and y(0) = [1, −1], reflecting what happens when Do(0) and Ti(0) take on values of equal magnitude but opposite valence (1 SD more and 1 SD less than the mean, respectively). Figure 2.1a–d contains the IRFs for both processes in each of these four scenarios.

Fig. 2.1 Impulse response functions for the model in Eq. (2.7) for four different sets of impulses; red solid line = Do(t) and blue dashed line = Ti(t). (a) Do(0) = 1, Ti(0) = 0, (b) Do(0) = 0, Ti(0) = 1, (c) Do(0) = 1, Ti(0) = 1, (d) Do(0) = 1, Ti(0) = −1

Examining the IRFs shows us the equilibrium-reverting behavior of the system: Given any set of starting values, the process eventually returns, in an exponential fashion, to the bivariate equilibrium position where both processes take on a value of zero.
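
Steps (a) to (c) of the IRF procedure can be sketched in a few lines. The example below is a plain-Python illustration, not the code used to produce Fig. 2.1: it assumes the rounded drift values quoted in this section, computes the expected position as the matrix exponential of A·t applied to y(0), and approximates that matrix exponential with a generic scaling-and-squaring Taylor series. The helper names (`mat_mul`, `mat_exp`, `impulse_response`) and the chosen time points are hypothetical.

```python
def mat_mul(X, Y):
    """Multiply two square matrices given as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_exp(M, squarings=10, terms=20):
    """Approximate exp(M): Taylor series of M / 2**squarings, then repeated squaring."""
    n = len(M)
    scale = 2.0 ** squarings
    S = [[M[i][j] / scale for j in range(n)] for i in range(n)]
    result = [[float(i == j) for j in range(n)] for i in range(n)]  # identity matrix
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in mat_mul(term, S)]  # S**k / k!
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(squarings):
        result = mat_mul(result, result)
    return result

def impulse_response(A, y0, t):
    """Expected position exp(A t) y(0), assuming no further innovations."""
    Phi = mat_exp([[a * t for a in row] for row in A])
    return [sum(p * y for p, y in zip(row, y0)) for row in Phi]

# (a) drift matrix (order: Do, Ti), (b) the four impulses, (c) increasing t in hours.
A = [[-0.995, 0.573], [0.375, -2.416]]
impulses = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0], "d": [1.0, -1.0]}
for label, y0 in impulses.items():
    for t in (0.0, 0.5, 1.0, 1.5, 2.0, 4.0):
        do_t, ti_t = impulse_response(A, y0, t)
        print(f"({label}) t = {t:3.1f}: Do = {do_t:+.3f}, Ti = {ti_t:+.3f}")
```

Evaluating the function on a finer grid of t values and plotting both components against t would give curves of the kind shown in the four panels.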

In Fig. 2.1a, we can see that when Ti(t) is at equilibrium and Do(0) takes on a value of plus one, then Ti(t) is pushed away from equilibrium in the same (i.e., positive) direction. In substantive terms, when our participant is feeling down at a particular moment, he begins to feel a little tired. Eventually, both Do(t) and Ti(t) return to equilibrium due to their negative auto-effects. The feelings of being down and tired have returned to normal around t = 4, that is, 4 h after the initial impulse; stronger impulses (|Do(0)| > 1) would result in the system taking longer to return to equilibrium, and weaker impulses (|Do(0)| < 1) would dissipate more quickly.

Figure 2.1b shows the corresponding reaction of Do(t) at equilibrium to a positive value of Ti(0). We can further see that the deviation of Do(t) in Fig. 2.1b is greater than the deviation of Ti(t) in Fig. 2.1a: a positive value of Ti(t) exerts a greater push on Do(t) than vice versa, because of the greater cross-effect of Ti(t) on Do(t). In this case this strong cross-effect, combined with the relatively weaker auto-effect of Do(t), results in Do(t) taking on a higher value than Ti(t) at around t = 1, 1 h after the initial impulse. Substantively, when our participant is feeling physically tired at a particular moment (Fig. 2.1b), he begins to feel down over the next couple of hours, before eventually these feelings return to normal (again in this case, around 4 h later).

Figure 2.1c further demonstrates the role of the negative auto-effects and positive cross-effects in different scenarios. In Fig. 2.1c, both processes take on positive values at t = 0; the positive cross-effects result in both processes returning to equilibrium at a slower rate than in Fig. 2.1a, b. In substantive terms this means that, when the participant is feeling very down and very tired, it takes longer for the participant to return to feeling normal. Here also the stronger auto-effect of Ti(t) than Do(t) is evident: although both processes start at the same value, an hour later Ti(1) is much closer to zero than Do(1), that is, Ti(t) decays faster to equilibrium than Do(t). In substantive terms, this tells us that when the participant is feeling down and physically tired, he recovers much more quickly from the physical tiredness than he does from feeling down.

In Fig. 2.1d, we see that Do(0) and Ti(0) taking on values of opposite signs result in a speeding up of the rate at which each variable decays to equilibrium. The auto-effect of Do(t) is negative, which is added to by the positive cross-effect of Ti(t) multiplied by the negative value of Ti(0). This means that Do(0) in Fig. 2.1d takes on a stronger negative velocity, in comparison to Fig. 2.1a or c. A positive value for Do(0) has a corresponding effect of making Ti(0) take on an even stronger positive velocity. Substantively, this means that when the participant feels down, but feels less tired (i.e., more energetic) than usual, both of these feelings wear off and return to normal more quickly than in the other scenarios we examined. The stronger auto-effect of Ti(t), in combination with the positive cross-effect of Do(t) on Ti(t), actually results in Ti(t) shooting past the equilibrium position in the Ti(t) dimension (Ti(t) = 0) and taking on positive values around t = 1.5, before the system as a whole returns to equilibrium. Substantively, when the participant is feeling initially down but quite energetic, we expect that he feels a little bit more tired than usual about an hour and a half later, before both feelings return to normal.

2.4.3.2 Vector Fields

Vector fields are another technique which can be used to visualize the dynamic behavior of the system by showing potential trajectories through a bivariate space. In our case the two axes of this space are Do(t) and Ti(t). The advantage of vector fields over IRFs in this context is that a single plot shows how, for a range of possible starting positions, the process is expected to move in the (bivariate) space a moment later. For this reason, the vector field is particularly useful in bivariate models with complex dynamics, in which it may be difficult to obtain the full picture of the dynamic system from a few IRFs alone. Furthermore, by showing the dynamics for a grid of values, we can identify areas in which the movement of the process is similar or differs.

To create a vector field, \(E[\frac {d\boldsymbol {y}(t)}{dt}]\) is calculated for a grid of possible values for \(y_1(t)\) and \(y_2(t)\) covering the full range of the values both variables can take on. The vector field for Do(t) and Ti(t) is shown in Fig. 2.2. The base of each arrow represents a potential position of the process y(t). The head of the arrow represents where the process will be if we take one small step in time forward, that is, the value of y(t + Δt) as Δt approaches zero. In other words, the arrows in this vector field represent the information of two derivatives, dDo(t)∕dt and dTi(t)∕dt. Specifically, the direction the arrow is pointing is a function of the sign (positive or negative) of the derivatives, while the length of the arrow represents the magnitude of this movement and is a function of the absolute values of the derivative(s).

If an arrow in the vector field is completely vertical, this means that, for that position, taking one small step forward in time would result in a change in the system’s position along the Ti(t) axis (i.e., a change in the value of Tired), but not along the Do(t) axis (i.e., dDo(t)∕dt = 0 and dTi(t)∕dt ≠ 0). The converse is true for a horizontal arrow (i.e., dDo(t)∕dt ≠ 0 and dTi(t)∕dt = 0). The two lines in Fig. 2.2, blue and red, identify at which positions dDo(t)∕dt = 0 and dTi(t)∕dt = 0, respectively; these are often referred to as nullclines. If the nullclines are not perfectly perpendicular to one another, this is due to the presence of at least one cross-effect. The point at which these nullclines cross represents the equilibrium position in this two-dimensional space, here located at Do(t) = 0, Ti(t) = 0. The crossing of these nullclines splits the vector field in four quadrants, each of which is characterized by a different combination of negative and positive values for dDo(t)∕dt and dTi(t)∕dt. The top left and bottom right quadrants represent areas in which the derivatives are of opposite sign, dDo(t)∕dt > 0 & dTi(t)∕dt < 0 and dDo(t)∕dt < 0 & dTi(t)∕dt > 0, respectively. The top right and bottom left quadrants represent areas where the derivatives are of the same sign, dDo(t)∕dt < 0 & dTi(t)∕dt < 0 and dDo(t)∕dt > 0 & dTi(t)∕dt > 0, respectively.
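
The ingredients of such a vector field can be computed directly from the drift matrix. The sketch below, in plain Python and without any plotting, assumes the rounded drift values quoted in this section; the grid of arrow bases and the names `velocity`, `slope_do_null`, and `slope_ti_null` are illustrative choices. It prints the slope of each nullcline (both pass through the equilibrium) and the arrow attached to each grid position.

```python
# Drift matrix from the empirical example (row/column order: Do, Ti).
A = [[-0.995, 0.573], [0.375, -2.416]]

def velocity(A, do, ti):
    """Expected derivatives (dDo/dt, dTi/dt) at the position (do, ti)."""
    return (A[0][0] * do + A[0][1] * ti, A[1][0] * do + A[1][1] * ti)

# Nullclines written as Ti = slope * Do.
slope_do_null = -A[0][0] / A[0][1]   # positions where dDo/dt = 0
slope_ti_null = -A[1][0] / A[1][1]   # positions where dTi/dt = 0
print(f"dDo/dt = 0 along Ti = {slope_do_null:.3f} * Do")
print(f"dTi/dt = 0 along Ti = {slope_ti_null:.3f} * Do")

# Arrow bases on a coarse grid; the arrow components are the two derivatives
# and the arrow length is their Euclidean norm.
grid = [-2.0, -1.0, 0.0, 1.0, 2.0]
for ti in reversed(grid):
    for do in grid:
        d_do, d_ti = velocity(A, do, ti)
        length = (d_do ** 2 + d_ti ** 2) ** 0.5
        print(f"base=({do:+.0f},{ti:+.0f}) arrow=({d_do:+.2f},{d_ti:+.2f}) len={length:.2f}")
```

The signs of the two arrow components at any base identify which of the four regions between the nullclines that position lies in.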

By tracing a path through the arrows, we can see the trajectory of the system of interest from any point in the possible space of values. In Fig. 2.2, we include the same four bivariate trajectories as we examined with the IRFs. Instead of the IRF representation of two variables whose values are changing, the vector field represents this as the movement of one process in a two-dimensional space. For instance, the trajectory starting at Do(t) = 0 and Ti(t) = 1 begins in the top left quadrant, where dDo(t)∕dt is positive and dTi(t)∕dt is negative; this implies that the value of Down will increase, and the value of Tired will decrease (as can be seen in Fig. 2.1b). Instead of moving directly to the equilibrium along the Ti(t) dimension, the system moves away from equilibrium along the Do(t) dimension, due to the cross-effect of Ti(t) on Do(t), until it moves into the top right quadrant. In this quadrant, dDo(t)∕dt and dTi(t)∕dt are both negative; once in this quadrant, the process moves toward equilibrium, tangent to the dDo(t)∕dt nullcline. The other trajectories in Fig. 2.2 analogously describe the same trajectories as in Fig. 2.1a, c, d.

In general, the trajectories in this vector field first decay the quickest along the Ti(t) dimension and the slowest along the Do(t) dimension. This can be clearly seen in trajectories (b), (c), and (d). Each of these trajectories first changes steeply in the Ti(t) dimension, before moving to equilibrium at a tangent to the red (\(\frac {dDo(t)}{dt}\)) nullcline. This general property of the bidimensional system is again related to the much stronger auto-effect of Ti(t) and the relatively small cross-effects. In a technical sense, we can say that Do(t) represents the “slowest eigendirection” (Strogatz 2014, Chapter 5).

2.4.4 Inspecting the Lagged Parameters

Another way to gain insight into the processes of interest is by determining the relationships between lagged positions of the system, according to our drift matrix. To this end, we can use Eq. (2.4) to determine Φ(Δt) for some Δt. For instance, we can see that the autoregressive and cross-lagged relationships between values of Down and Tired given Δt = 1 are

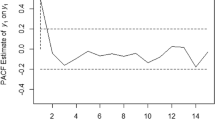

For this given time interval, the cross-lagged effect of Down on Tired (\(\phi_{21}(\Delta t = 1) = 0.077\)) is smaller than the cross-lagged effect of Tired on Down (\(\phi_{12}(\Delta t = 1) = 0.117\)). However, as shown in Eq. (2.5), the value of each of these lagged effects changes in a nonlinear way depending on the time interval chosen. To visualize this, we can calculate Φ(Δt) for a range of Δt and represent this information graphically in a lagged parameter plot, as in Fig. 2.3. From Fig. 2.3, we can see that both cross-lagged effects reach their maximum (and have their maximum difference) at a time interval of Δt = 0.65; furthermore, we can see that the greater cross-effect (\(a_{12}\)) results in a stronger cross-lagged effect \(\phi_{12}(\Delta t)\) for a range of Δt. Moreover, we can visually inspect how the size of each of the effects of interest, as well as the difference between these effects, varies according to the time interval. From a substantive viewpoint, we could say that feeling physically tired has its strongest effect on feelings of being down around 40 min later.
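
The dependence of the lagged effects on the interval can be reproduced numerically. The following plain-Python sketch assumes the rounded drift values quoted in this section and, instead of a general-purpose matrix exponential, uses Sylvester's formula, which applies here only because the two eigenvalues are real and distinct. The function names and the grid of intervals are illustrative assumptions.

```python
import math

# Drift matrix from the empirical example (row/column order: Do, Ti).
A = [[-0.995, 0.573], [0.375, -2.416]]

def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix, assuming they are real and distinct."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = math.sqrt(tr * tr - 4.0 * det)
    return (tr + root) / 2.0, (tr - root) / 2.0

def lagged_effects(A, dt):
    """Phi(dt) = exp(A dt) via Sylvester's formula (distinct real eigenvalues)."""
    l1, l2 = eigenvalues_2x2(A)
    e1, e2 = math.exp(l1 * dt), math.exp(l2 * dt)
    phi = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(2):
        for j in range(2):
            ident = 1.0 if i == j else 0.0
            numerator = e1 * (A[i][j] - l2 * ident) - e2 * (A[i][j] - l1 * ident)
            phi[i][j] = numerator / (l1 - l2)
    return phi

phi_1 = lagged_effects(A, 1.0)
print("phi_12(1) =", round(phi_1[0][1], 3), " phi_21(1) =", round(phi_1[1][0], 3))

# Scan intervals from 0.01 to 4 hours to locate the peak of the cross-lagged effect.
grid = [k / 100.0 for k in range(1, 401)]
peak_dt = max(grid, key=lambda dt: lagged_effects(A, dt)[0][1])
print("phi_12 is largest near dt =", peak_dt)
```

With rounded inputs the located peak can differ slightly from the value read off the lagged parameter plot. Plotting each element of the returned matrix against the grid of intervals gives a lagged parameter plot.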

While the shape of the lagged parameters may appear similar to the shapes of the trajectories plotted in the IRFs, lagged parameter plots and IRFs represent substantively different information. IRFs plot the positions of each variable in the system as they change over time, given some impulse (y(t) vs t given some y(0)). In contrast, lagged parameter plots show how the lagged relationships change depending on the length of the time interval between them, independent of impulse values (\(e^{\boldsymbol{A}\Delta t}\) vs Δt). The lagged relationships can be thought of as the components which go into determining any specific trajectories.

2.4.5 Caution with Interpreting Estimated Parameters

It is important to note that in the above interpretation of CT models, we have treated the matrix A as known. In practice of course, researchers should take account of the uncertainty in parameter estimates. For example, the ctsem package also provides lagged parameter plots with credible intervals to account for this uncertainty.

Furthermore, researchers should be cautious about extrapolating beyond the data. For instance, when we consider a vector field, we should be careful about interpreting regions in which there is little or no observed data (cf. Boker and McArdle 1995). The same logic applies for the interpretation of IRFs for impulses that do not match observed values. Moreover, we should also be aware that interpreting lagged parameter plots for time intervals much shorter than those we observe data at is a form of extrapolation: It relies on strong model-based assumptions, such as ruling out the possibility of a high-frequency higher-order process (Voelkle and Oud 2013; Voelkle et al. 2012).

2.5 Discussion

In this chapter we have set out to clarify the connection between DT- and CT-VAR(1) models and how we can interpret and represent the results from these models. So far we have focused on single-subject, two-dimensional, first-order systems with a stable node equilibrium. However, there are many ways in which these models can be extended, to match more complicated data and/or dynamic behavior. Below we consider three such extensions: (a) systems with more than two dimensions (i.e., variables), (b) different types of fixed points resulting from non-real eigenvalues of the drift matrix, and (c) moving from single-subject to multilevel datasets.

2.5.1 Beyond Two-Dimensional Systems

In the empirical illustration, we examined the interpretation of a drift matrix in the context of a bivariate CT-VAR(1) model. Notably, the current trend in applications of DT-VAR(1) models in psychology has been to focus more and more on the analysis of large systems of variables, as typified, for example, by the dynamic network approach of Bringmann et al. (2013, 2016). The complexity of these models grows rapidly as variables are added: To estimate a full drift matrix for a system of three variables, we must estimate nine unique parameters, in contrast to four drift matrix parameters for a bivariate system. In addition, we must estimate a three-by-three covariance matrix for the residuals, rather than a two-by-two matrix.

The relationship between the elements of A and Φ(Δt) becomes even less intuitive once the interest is in a system of three variables, because the lagged parameter values are dependent on the drift matrix as a whole, as explained earlier. This means that both the relative sizes and the signs of the cross-lagged effects may differ depending on the interval: The same lagged parameter may be negative for some time intervals and positive for others, and zero elements of A can result in corresponding non-zero elements of Φ (cf. Aalen et al. 2017, 2016, 2012; Deboeck and Preacher 2016). Therefore, although we saw in our bivariate example that, for instance, positive CT cross-effects resulted in positive DT cross-lagged effects, this does not necessarily hold in the general case (Kuiper and Ryan 2018).

Additionally, substantive interpretation of the lagged parameters in systems with more than two variables also becomes less straightforward. For example, Deboeck and Preacher (2016) and Aalen et al. (2012, 2016, 2017) argue that the interpretation of Φ(Δt) parameters in mediation models (with three variables and a triangular A matrix) as direct effects may be misleading: Deboeck and Preacher argue that instead they should be interpreted as total effects. This has major consequences for the practice of DT analyses and the interpretation of its results.
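
A small numerical illustration may help here. The sketch below uses a purely hypothetical three-variable drift matrix (the values are invented for illustration and are not estimates from any dataset) in which the first variable affects the second and the second affects the third, with no direct effect of the first on the third. It is written in plain Python, and the matrix exponential is approximated with a generic scaling-and-squaring Taylor series; the helper names are assumptions.

```python
def mat_mul(X, Y):
    """Multiply two square matrices given as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_exp(M, squarings=10, terms=20):
    """Approximate exp(M): Taylor series of M / 2**squarings, then repeated squaring."""
    n = len(M)
    scale = 2.0 ** squarings
    S = [[M[i][j] / scale for j in range(n)] for i in range(n)]
    result = [[float(i == j) for j in range(n)] for i in range(n)]  # identity matrix
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in mat_mul(term, S)]  # S**k / k!
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(squarings):
        result = mat_mul(result, result)
    return result

# Hypothetical triangular drift matrix: variable 1 -> variable 2 -> variable 3,
# with a_31 = 0 (no direct effect of variable 1 on variable 3).
A = [[-1.0, 0.0, 0.0],
     [0.8, -1.0, 0.0],
     [0.0, 0.8, -1.0]]

for dt in (0.5, 1.0, 2.0):
    Phi = mat_exp([[a * dt for a in row] for row in A])
    print(f"dt = {dt}: a_31 = {A[2][0]}, phi_31(dt) = {Phi[2][0]:.3f}")
```

Although the drift element linking the first variable to the third is exactly zero, the corresponding lagged parameter is non-zero for every positive interval, because it accumulates the indirect path through the second variable.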

2.5.2 Complex and Positive Eigenvalues

The empirical illustration is characterized by a system with negative, real, non-equal eigenvalues, which implies that the fixed point in the system is a stable node. In theory, however, there is no reason that psychological processes must adhere to this type of dynamic behavior. We can apply the tools we have defined already to understand the types of behavior that might be described by other types of drift matrices. Notably, some systems may have drift matrices with complex eigenvalues, that is, eigenvalues of the form α ± ωi, where \(i=\sqrt {-1}\) is the imaginary unit, ω ≠ 0, α is referred to as the real part, and ωi as the imaginary part of the eigenvalue. If the real component of these eigenvalues is negative (α < 0), then the system is still stable, and given a deviation it will return eventually to a resting state at equilibrium. However, unlike the systems we have described before, these types of systems spiral or oscillate around the equilibrium point, before eventually coming to rest. Such systems have been described as stable spirals, or alternatively as damped (linear or harmonic) oscillators (Boker et al. 2010b; Voelkle and Oud 2013).

A vector field for a process which exhibits this type of stable spiral behavior is shown in Fig. 2.4, with accompanying trajectories. The drift matrix corresponding to this vector field is

\[
\boldsymbol{A} = \begin{bmatrix} -0.995 & 0.573 \\ -2.000 & -2.416 \end{bmatrix}
\]

which is equivalent to our empirical example above but with the value of \(a_{21}\) altered from 0.375 to −2.000. The eigenvalues of this matrix are \(\lambda_1 = -1.706 + 0.800i\) and \(\lambda_2 = -1.706 - 0.800i\). In contrast to our empirical example, we can see that the trajectories follow a spiral pattern; the trajectory which starts at \(y_1(t) = 1\), \(y_2(t) = 1\) actually overshoots the equilibrium in the Ti(t) dimension before spiraling back once in the bottom quadrant. There are numerous examples of psychological systems that are modeled as damped linear oscillators using second-order differential equations, which include the first- and second-order derivatives (cf. Bisconti et al. 2004; Boker et al. 2010b; Boker and Nesselroade 2002; Horn et al. 2015). However, as shown here, such behavior may also result from a first-order model.

Stable nodes and spirals can be considered the two major types of stable fixed points, as they occur whenever the real part of the eigenvalues of A is negative, that is, α < 0. Many other types of stable fixed points can be considered as special cases: when we have real, negative eigenvalues that are exactly equal, the fixed point is called a stable star node (if the eigenvectors are distinct) or a stable degenerate node (if the eigenvectors are not distinct). In contrast, if the real part of the eigenvalues of A is positive, then the system is unstable, also referred to as non-stationary or a unit root in the time series literature (Hamilton 1994). This implies that, given a deviation, the system will not return to equilibrium; in contrast to stable systems, in which trajectories are attracted to the fixed point, the trajectories of unstable systems are repelled by the fixed point. As such we can also encounter unstable nodes, spirals, star nodes, and degenerate nodes. The estimation and interpretation of unstable systems in psychology may be a fruitful ground for further research.

Two further types of fixed points may be of interest to researchers; in the special case where the eigenvalues of A have an imaginary part and no real part (α = 0), the fixed point is called a center. In a system with a center fixed point, trajectories spiral around the fixed point without ever reaching it. Such systems exhibit oscillating behavior, but without any damping of oscillations; certain biological systems, such as the circadian rhythm, can be modeled as a dynamic system with a center fixed point. Such systems are on the borderline between stable and unstable systems, sometimes referred to as neutrally stable; trajectories are neither attracted to nor repelled by the fixed point. Finally, a saddle point occurs when the eigenvalues of A are real but of opposite sign (one negative, one positive). Saddle points have one stable and one unstable component; only trajectories which start exactly on the stable axis return to equilibrium, and all others do not. Together spirals, nodes, and saddle points cover the majority of the space of possible eigenvalues for A. Strogatz (2014) describes the different dynamic behavior generated by different combinations of eigenvalues of A in greater detail.
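
The taxonomy of fixed points described in this section can be summarized as a small decision rule on the eigenvalues of a 2 × 2 drift matrix. The plain-Python sketch below is one possible way to write that rule, not an established routine: the function name `classify_fixed_point` and the tolerance are assumptions, the first two matrices are the empirical example and its altered version from this section (rounded values), and the last two matrices are hypothetical examples chosen to produce a center and a saddle point. For simplicity it does not distinguish star or degenerate nodes from ordinary nodes.

```python
import cmath

def classify_fixed_point(A, tol=1e-9):
    """Classify the fixed point of dy/dt = A y for a 2x2 drift matrix A."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = cmath.sqrt(tr * tr - 4.0 * det)
    l1, l2 = (tr + root) / 2.0, (tr - root) / 2.0
    if abs(l1.imag) > tol:                     # complex pair alpha +/- omega*i
        if abs(l1.real) <= tol:
            kind = "center"
        else:
            kind = "stable spiral" if l1.real < 0 else "unstable spiral"
    elif l1.real * l2.real < 0:                # real eigenvalues of opposite sign
        kind = "saddle point"
    elif l1.real < 0 and l2.real < 0:          # equal eigenvalues also land here
        kind = "stable node"
    elif l1.real > 0 and l2.real > 0:
        kind = "unstable node"
    else:
        kind = "borderline case (zero eigenvalue)"
    return kind, (l1, l2)

examples = {
    "empirical example": [[-0.995, 0.573], [0.375, -2.416]],
    "a21 altered to -2.000": [[-0.995, 0.573], [-2.0, -2.416]],
    "hypothetical center": [[0.0, 1.0], [-1.0, 0.0]],
    "hypothetical saddle": [[1.0, 0.0], [0.0, -1.0]],
}
for name, A in examples.items():
    kind, (l1, l2) = classify_fixed_point(A)
    print(f"{name}: {kind}; eigenvalues {l1:.3f} and {l2:.3f}")
```

In practice, estimated eigenvalues carry sampling uncertainty, so a classification near one of the boundaries (for instance, a real part close to zero) should be read with caution.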

2.5.3 Multilevel Extensions

The time series literature (such as from the field of econometrics) as well as the dynamic systems literature (such as from the field of physics) tends to be concerned with a single dynamic system, either because there is only one case (N = 1) or because all cases are exact replicates (e.g., molecules). In psychology however, we typically have data from more than one person, and we also know that people tend to be highly different. Hence, when we are interested in modeling their longitudinal data, we should take their differences into account somehow. The degree to which this can be done depends on the number of time points we have per person. In traditional panel data, we typically have between two and six waves of data. In this case, we should allow for individual differences in means or intercepts, in order to separate the between-person, stable differences from the within-person dynamic process, while assuming the lagged relationships are the same across individuals (cf. Hamaker et al. 2015).

In contrast, experience sampling data and other forms of intensive longitudinal data consist of many repeated measurements per person, such that we can allow for individual differences in the lagged coefficients. This can be done by either analyzing the data of each person separately or by using a dynamic multilevel model in which the individuals are allowed to have different parameters (cf. Boker et al. 2016; Driver and Voelkle 2018). Many recent studies have shown that there are substantial individual differences in the dynamics of psychological phenomena and that these differences can be meaningfully related to other person characteristics, such as personality traits, gender, age, and depressive symptomatology, but also to later health outcomes and psychological well-being (e.g., Bringmann et al. 2013; Kuppens et al. 2010, 2012).

While the current chapter has focused on elucidating the interpretation of a single-subject CT-VAR(1) model, the substantive interpretations and visualization tools we describe here can be applied in a straightforward manner to, for example, the fixed effects estimated in a multilevel CT-VAR(1) model or to individual-specific parameters estimated in a multilevel framework. The latter would however lead to an overwhelming amount of visual information. The development of new ways of summarizing the individual differences in dynamics, based on the current tools, is a promising area.

2.5.4 Conclusion

There is no doubt that the development of dynamical systems modeling in the field of psychology has been hampered by the difficulty in obtaining suitable data to model such systems. However, this is a barrier that recent advances in technology will shatter in the coming years. Along with this new source of psychological data, new psychological theories are beginning to emerge, based on the notion of psychological processes as dynamic systems. Although the statistical models needed to investigate these theories may seem exotic or difficult to interpret at first, they reflect the simple intuitive and empirical notions we have about psychological processes: Human behavior, emotion, and cognition fluctuate continuously over time, and the models we use should reflect that. We hope that our treatment of CT-VAR(1) models and their interpretation will help researchers to overcome the knowledge barrier to this approach and can serve as a stepping stone toward a broader adoption of the CT dynamical system approach to psychology.

Notes

- 1. The variance-covariance matrix of the variables Σ is a function of both the lagged parameters and the variance-covariance matrix of the innovations, \(\mathrm{vec}(\boldsymbol{\Sigma}) = (\boldsymbol{I} - \boldsymbol{\Phi} \otimes \boldsymbol{\Phi})^{-1}\,\mathrm{vec}(\boldsymbol{\Psi})\), where vec(.) denotes the operation of putting the elements of an N × N matrix into an \(N^2 \times 1\) column matrix (Kim and Nelson 1999, p. 27).

- 2.

- 3. In general, there is no straightforward CT-VAR(1) representation of DT-VAR(1) models with real, negative eigenvalues. However, it may be possible to specify more complex continuous-time models which do not exhibit positive autoregression. Notably, Fisher (2001) demonstrates how a DT-AR(1) model with negative autoregressive parameter can be modeled with the use of two continuous-time (so-called) Itô processes.

- 4. Similar functions can be used for deterministic systems (those without a random innovation part); however, in these cases the term initial value is more typically used.

References

Aalen, O., Gran, J., Røysland, K., Stensrud, M., & Strohmaier, S. (2017). Feedback and mediation in causal inference illustrated by stochastic process models. Scandinavian Journal of Statistics, 45, 62–86. https://doi.org/10.1111/sjos.12286

Aalen, O., Røysland, K., Gran, J., Kouyos, R., & Lange, T. (2016). Can we believe the DAGs? A comment on the relationship between causal DAGs and mechanisms. Statistical Methods in Medical Research, 25(5), 2294–2314. https://doi.org/10.1177/0962280213520436

Aalen, O., Røysland, K., Gran, J., & Ledergerber, B. (2012). Causality, mediation and time: A dynamic viewpoint. Journal of the Royal Statistical Society: Series A (Statistics in Society), 175(4), 831–861.

Bisconti, T., Bergeman, C. S., & Boker, S. M. (2004). Emotional well-being in recently bereaved widows: A dynamical system approach. Journal of Gerontology, Series B: Psychological Sciences and Social Sciences, 59, 158–167. https://doi.org/10.1093/geronb/59.4.P158

Boker, S. M. (2002). Consequences of continuity: The hunt for intrinsic properties within parameters of dynamics in psychological processes. Multivariate Behavioral Research, 37(3), 405–422. https://doi.org/10.1207/S15327906MBR3703-5

Boker, S. M., Deboeck, P., Edler, C., & Keel, P. (2010a). Generalized local linear approximation of derivatives from time series. In S. Chow & E. Ferrer (Eds.), Statistical methods for modeling human dynamics: An interdisciplinary dialogue (pp. 179–212). Boca Raton, FL: Taylor & Francis.

Boker, S. M., & McArdle, J. J. (1995). Statistical vector field analysis applied to mixed cross-sectional and longitudinal data. Experimental Aging Research, 21, 77–93. https://doi.org/10.1080/03610739508254269

Boker, S. M., Montpetit, M. A., Hunter, M. D., & Bergeman, C. S. (2010b). Modeling resilience with differential equations. In P. Molenaar & K. Newell (Eds.), Learning and development: Individual pathways of change (pp. 183–206). Washington, DC: American Psychological Association. https://doi.org/10.1037/12140-011

Boker, S. M., Neale, M., & Rausch, J. (2004). Latent differential equation modeling with multivariate multi-occasion indicators. In K. van Montfort, J. H. L. Oud, & A. Satorra (Eds.), Recent developments on structural equation models (pp. 151–174). Dordrecht: Kluwer.

Boker, S. M., & Nesselroade, J. R. (2002). A method for modeling the intrinsic dynamics of intraindividual variability: Recovering parameters of simulated oscillators in multi-wave panel data. Multivariate Behavioral Research, 37, 127–160.

Boker, S. M., Staples, A. D., & Hu, Y. (2016). Dynamics of change and change in dynamics. Journal for Person-Oriented Research, 2(1–2), 34. https://doi.org/10.17505/jpor.2016.05

Bolger, N., & Laurenceau, J.-P. (2013). Intensive longitudinal methods: An introduction to diary and experience sampling research. New York, NY: The Guilford Press.

Bringmann, L., Lemmens, L., Huibers, M., Borsboom, D., & Tuerlinckx, F. (2015). Revealing the dynamic network structure of the Beck Depression Inventory-II. Psychological Medicine, 45(4), 747–757. https://doi.org/10.1017/S0033291714001809

Bringmann, L., Pe, M., Vissers, N., Ceulemans, E., Borsboom, D., Vanpaemel, W., …Kuppens, P. (2016). Assessing temporal emotion dynamics using networks. Assessment, 23(4), 425–435. https://doi.org/10.1177/1073191116645909

Bringmann, L., Vissers, N., Wichers, M., Geschwind, N., Kuppens, P., Peeters, …Tuerlinckx, F. (2013). A network approach to psychopathology: New insights into clinical longitudinal data. PLoS ONE, 8, e60188. https://doi.org/10.1371/journal.pone.0060188

Browne, M. W., & Nesselroade, J. R. (2005). Representing psychological processes with dynamic factor models: Some promising uses and extensions of ARMA time series models. In A. Maydeu-Olivares & J. J. McArdle (Eds.), Psychometrics: A festschrift to Roderick P. McDonald (pp. 415–452). Mahwah, NJ: Lawrence Erlbaum Associates.

Chow, S., Ferrer, E., & Hsieh, F. (2011). Statistical methods for modeling human dynamics: An interdisciplinary dialogue. New York, NY: Routledge.

Chow, S., Ferrer, E., & Nesselroade, J. R. (2007). An unscented Kalman filter approach to the estimation of nonlinear dynamical systems models. Multivariate Behavioral Research, 42(2), 283–321. https://doi.org/10.1080/00273170701360423

Chow, S., Ram, N., Boker, S., Fujita, F., Clore, G., & Nesselroade, J. (2005). Capturing weekly fluctuation in emotion using a latent differential structural approach. Emotion, 5(2), 208–225.

De Haan-Rietdijk, S., Voelkle, M. C., Keijsers, L., & Hamaker, E. (2017). Discrete- versus continuous-time modeling of unequally spaced ESM data. Frontiers in Psychology, 8, 1849. https://doi.org/10.3389/fpsyg.2017.01849

Deboeck, P. R., & Preacher, K. J. (2016). No need to be discrete: A method for continuous time mediation analysis. Structural Equation Modeling: A Multidisciplinary Journal, 23(1), 61–75.

Denollet, J., & De Vries, J. (2006). Positive and negative affect within the realm of depression, stress and fatigue: The two-factor distress model of the Global Mood Scale (GMS). Journal of Affective Disorders, 91(2), 171–180. https://doi.org/10.1016/j.jad.2005.12.044

Dormann, C., & Griffin, M. A. (2015). Optimal time lags in panel studies. Psychological Methods, 20(4), 489. https://doi.org/10.1037/met0000041

Driver, C., Oud, J. H. L., & Voelkle, M. (2017). Continuous time structural equation modelling with R package ctsem. Journal of Statistical Software, 77, 1–35. https://doi.org/10.18637/jss.v077.i05

Driver, C. C., & Voelkle, M. C. (2018). Hierarchical Bayesian continuous time dynamic modeling. Psychological Methods. Advance online publication. https://doi.org/10.1037/met0000168

Fisher, M. (2001). Modeling negative autoregression in continuous time. http://www.markfisher.net/mefisher/papers/continuous_ar.pdf

Gault-Sherman, M. (2012). It’s a two-way street: The bidirectional relationship between parenting and delinquency. Journal of Youth and Adolescence, 41, 121–145. https://doi.org/10.1007/s10964-011-9656-4

Gollob, H. F., & Reichardt, C. S. (1987). Taking account of time lags in causal models. Child Development, 58, 80–92. https://doi.org/10.2307/1130293

Granger, C. W. J. (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica, 37, 424–438. https://doi.org/10.2307/1912791

Hamaker, E. L., Dolan, C. V., & Molenaar, P. C. M. (2005). Statistical modeling of the individual: Rationale and application of multivariate time series analysis. Multivariate Behavioral Research, 40(2), 207–233. https://doi.org/10.1207/s15327906mbr4002_3

Hamaker, E. L., & Grasman, R. P. P. P. (2015). To center or not to center? Investigating inertia with a multilevel autoregressive model. Frontiers in Psychology, 5, 1492. https://doi.org/10.3389/fpsyg.2014.01492

Hamaker, E. L., Kuiper, R., & Grasman, R. P. P. P. (2015). A critique of the cross-lagged panel model. Psychological Methods, 20(1), 102–116. https://doi.org/10.1037/a0038889

Hamerle, A., Nagl, W., & Singer, H. (1991). Problems with the estimation of stochastic differential equations using structural equations models. Journal of Mathematical Sociology, 16(3), 201–220. https://doi.org/10.1080/0022250X.1991.9990088

Hamilton, J. D. (1994). Time series analysis. Princeton, NJ: Princeton University Press.

Horn, E. E., Strachan, E., & Turkheimer, E. (2015). Psychological distress and recurrent herpetic disease: A dynamic study of lesion recurrence and viral shedding episodes in adults. Multivariate Behavioral Research, 50(1), 134–135. https://doi.org/10.1080/00273171.2014.988994

Ichii, K. (1991). Measuring mutual causation: Effects of suicide news on suicides in Japan. Social Science Research, 20, 188–195. https://doi.org/10.1016/0049-089X(91)90016-V

Johnston, J., & DiNardo, J. (1997). Econometric methods (4th ed.). New York, NY: McGraw-Hill.

Kim, C.-J., & Nelson, C. R. (1999). State-space models with regime switching: Classical and Gibbs-sampling approaches with applications. Cambridge, MA: The MIT Press. https://doi.org/10.2307/2669796

Kossakowski, J., Groot, P., Haslbeck, J., Borsboom, D., & Wichers, M. (2017). Data from critical slowing down as a personalized early warning signal for depression. Journal of Open Psychology Data, 5(1), 1.

Koval, P., Kuppens, P., Allen, N. B., & Sheeber, L. (2012). Getting stuck in depression: The roles of rumination and emotional inertia. Cognition and Emotion, 26, 1412–1427.

Kuiper, R. M., & Ryan, O. (2018). Drawing conclusions from cross-lagged relationships: Re-considering the role of the time-interval. Structural Equation Modeling: A Multidisciplinary Journal. https://doi.org/10.1080/10705511.2018.1431046

Kuppens, P., Allen, N. B., & Sheeber, L. B. (2010). Emotional inertia and psychological maladjustment. Psychological Science, 21(7), 984–991. https://doi.org/10.1177/0956797610372634

Kuppens, P., Sheeber, L. B., Yap, M. B. H., Whittle, S., Simmons, J., & Allen, N. B. (2012). Emotional inertia prospectively predicts the onset of depression in adolescence. Emotion, 12, 283–289. https://doi.org/10.1037/a0025046

Meier, B. P., & Robinson, M. D. (2004). Why the sunny side is up: Associations between affect and vertical position. Psychological Science, 15(4), 243–247. https://doi.org/10.1111/j.0956-7976.2004.00659.x

Moberly, N. J., & Watkins, E. R. (2008). Ruminative self-focus and negative affect: An experience sampling study. Journal of Abnormal Psychology, 117, 314–323. https://doi.org/10.1037/0021-843X.117.2.314

Moler, C., & Van Loan, C. (2003). Nineteen dubious ways to compute the exponential of a matrix, twenty-five years later. SIAM Review, 45(1), 3–49.

Oravecz, Z., & Tuerlinckx, F. (2011). The linear mixed model and the hierarchical Ornstein–Uhlenbeck model: Some equivalences and differences. British Journal of Mathematical and Statistical Psychology, 64(1), 134–160. https://doi.org/10.1348/000711010X498621

Oravecz, Z., Tuerlinckx, F., & Vandekerckhove, J. (2011). A hierarchical latent stochastic differential equation model for affective dynamics. Psychological Methods, 16, 468–490. https://doi.org/10.1037/a0024375

Oravecz, Z., Tuerlinckx, F., & Vandekerckhove, J. (2016). Bayesian data analysis with the bivariate hierarchical Ornstein-Uhlenbeck process model. Multivariate Behavioral Research, 51(1), 106–119. https://doi.org/10.1080/00273171.2015.1110512

Oud, J. H. L. (2007). Continuous time modeling of reciprocal relationships in the cross-lagged panel design. In S. M. Boker & M. J. Wenger (Eds.), Data analytic techniques for dynamic systems in the social and behavioral sciences (pp. 87–129). Mahwah, NJ: Lawrence Erlbaum Associates.

Oud, J. H. L., & Delsing, M. J. M. H. (2010). Continuous time modeling of panel data by means of SEM. In K. van Montfort, J. H. L. Oud, & A. Satorra (Eds.), Longitudinal research with latent variables (pp. 201–244). New York, NY: Springer. https://doi.org/10.1007/978-3-642-11760-2_7

Oud, J. H. L., & Jansen, R. A. (2000). Continuous time state space modeling of panel data by means of SEM. Psychometrika, 65(2), 199–215. https://doi.org/10.1007/BF02294374

Oud, J. H. L., van Leeuwe, J., & Jansen, R. (1993). Kalman filtering in discrete and continuous time based on longitudinal LISREL models. In Advances in longitudinal and multivariate analysis in the behavioral sciences (pp. 3–26). Nijmegen: ITS.

Reichardt, C. S. (2011). Commentary: Are three waves of data sufficient for assessing mediation? Multivariate Behavioral Research, 46(5), 842–851.

Rovine, M. J., & Walls, T. A. (2006). Multilevel autoregressive modeling of interindividual differences in the stability of a process. In T. A. Walls & J. L. Schafer (Eds.), Models for intensive longitudinal data (pp. 124–147). New York, NY: Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195173444.003.0006

Schuurman, N. K., Ferrer, E., de Boer-Sonnenschein, M., & Hamaker, E. L. (2016). How to compare cross-lagged associations in a multilevel autoregressive model. Psychological Methods, 21(2), 206–221. https://doi.org/10.1037/met0000062

Steele, J. S., & Ferrer, E. (2011). Latent differential equation modeling of self-regulatory and coregulatory affective processes. Multivariate Behavioral Research, 46(6), 956–984. https://doi.org/10.1080/00273171.2011.625305

Strogatz, S. H. (2014). Nonlinear dynamics and chaos: With applications to physics, biology, chemistry, and engineering. Boulder, CO: Westview Press.

Voelkle, M., & Oud, J. H. L. (2013). Continuous time modelling with individually varying time intervals for oscillating and non-oscillating processes. British Journal of Mathematical and Statistical Psychology, 66(1), 103–126. https://doi.org/10.1111/j.2044-8317.2012.02043.x

Voelkle, M., Oud, J. H. L., Davidov, E., & Schmidt, P. (2012). An SEM approach to continuous time modeling of panel data: Relating authoritarianism and anomia. Psychological Methods, 17, 176–192. https://doi.org/10.1037/a0027543

Watkins, M. W., Lei, P.-W., & Canivez, G. L. (2007). Psychometric intelligence and achievement: A cross-lagged panel analysis. Intelligence, 35, 59–68. https://doi.org/10.1016/j.intell.2006.04.005

Acknowledgment

We thank an editor and an anonymous reviewer for helpful comments that led to improvements in this chapter. The work of the authors was supported by grants from the Netherlands Organization for Scientific Research (NWO Onderzoekstalent 406-15-128) to Oisín Ryan and Ellen Hamaker, and (NWO VENI 451-16-019) to Rebecca Kuiper.

Appendix: Matrix Exponential

Similar to the scalar exponential, the matrix exponential can be defined as an infinite sum

$$e^{A} = \sum_{k=0}^{\infty} \frac{1}{k!} A^{k}.$$

The exponential of a matrix is not equivalent to taking the scalar exponential of each element of the matrix, unless that matrix is diagonal. The exponential of a matrix can be found using an eigenvalue decomposition

$$A = V D V^{-1},$$

where V is a matrix of eigenvectors of A and D is a diagonal matrix of the eigenvalues of A (cf. Moler and Van Loan 2003). The matrix exponential of A is given by

$$e^{A} = V e^{D} V^{-1},$$

where $e^{D}$ is the diagonal matrix whose entries are the scalar exponentials of the eigenvalues of A. When we want to solve for the matrix exponential of a matrix multiplied by some constant Δt, we get

$$e^{A \Delta t} = V e^{D \Delta t} V^{-1}.$$

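Suppose we have a 2 × 2 square matrix given by

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$$

and we wish to solve for $e^{A \Delta t}$. The eigenvalues of A are given by

$$\lambda_{1} = \tfrac{1}{2}\left(a + d - \sqrt{a^{2} + 4bc - 2ad + d^{2}}\right), \qquad \lambda_{2} = \tfrac{1}{2}\left(a + d + \sqrt{a^{2} + 4bc - 2ad + d^{2}}\right),$$

where we will from here on denote

$$R = \sqrt{a^{2} + 4bc - 2ad + d^{2}}$$

for notational simplicity. The exponential of the diagonal matrix made up of eigenvalues multiplied by the constant Δt is given by

$$e^{D \Delta t} = \begin{bmatrix} e^{\frac{1}{2}(a + d - R)\Delta t} & 0 \\ 0 & e^{\frac{1}{2}(a + d + R)\Delta t} \end{bmatrix}.$$

The matrix of eigenvectors of A is given by

$$V = \begin{bmatrix} \dfrac{a - d - R}{2c} & \dfrac{a - d + R}{2c} \\ 1 & 1 \end{bmatrix},$$

assuming c ≠ 0, with inverse

$$V^{-1} = \begin{bmatrix} -\dfrac{c}{R} & \dfrac{a - d + R}{2R} \\ \dfrac{c}{R} & -\dfrac{a - d - R}{2R} \end{bmatrix}.$$

Multiplying $V e^{D \Delta t} V^{-1}$ gives us

$$e^{A \Delta t} = \frac{1}{2R} \begin{bmatrix} (R - a + d)\, e^{\frac{1}{2}(a + d - R)\Delta t} + (R + a - d)\, e^{\frac{1}{2}(a + d + R)\Delta t} & 2b \left( e^{\frac{1}{2}(a + d + R)\Delta t} - e^{\frac{1}{2}(a + d - R)\Delta t} \right) \\ 2c \left( e^{\frac{1}{2}(a + d + R)\Delta t} - e^{\frac{1}{2}(a + d - R)\Delta t} \right) & (R + a - d)\, e^{\frac{1}{2}(a + d - R)\Delta t} + (R - a + d)\, e^{\frac{1}{2}(a + d + R)\Delta t} \end{bmatrix}.$$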

Note that we present here only a worked-out example for a 2 × 2 square matrix. For larger square matrices (representing models with more variables), the eigenvalue decomposition proceeds in the same way, although the expressions for the eigenvalues, eigenvectors, and determinants become too unwieldy to present.
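The 2 × 2 case can also be checked numerically. The following is a minimal sketch in plain Python (standard library only), not the code used in the chapter's analysis. It assumes a matrix with entries named a, b, c, d, writes R for the square root of the discriminant of the eigenvalue equation, and uses hypothetical numerical values chosen purely for illustration. It computes the matrix exponential once with a truncated version of the infinite sum and once with the eigenvalue decomposition, and contrasts both with the element-wise scalar exponential.

```python
import math


def expm_series(A, dt=1.0, terms=60):
    """Approximate e^(A*dt) by the first `terms` terms of the power series."""
    n = len(A)
    M = [[A[i][j] * dt for j in range(n)] for i in range(n)]
    # k = 0 term of the series is the identity matrix.
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        # Update term to (A*dt)^k / k! by multiplying with M and dividing by k.
        term = [[sum(term[i][m] * M[m][j] for m in range(n)) / k
                 for j in range(n)] for i in range(n)]
        result = [[result[i][j] + term[i][j] for j in range(n)]
                  for i in range(n)]
    return result


def expm_2x2_eigen(A, dt=1.0):
    """e^(A*dt) via V e^(D*dt) V^-1 for a 2 x 2 matrix.

    Assumes c != 0 and real, distinct eigenvalues (positive discriminant).
    """
    (a, b), (c, d) = A
    disc = a * a + 4 * b * c - 2 * a * d + d * d
    if c == 0 or disc <= 0:
        raise ValueError("sketch requires c != 0 and real, distinct eigenvalues")
    R = math.sqrt(disc)
    e1 = math.exp(0.5 * (a + d - R) * dt)  # exp(lambda_1 * dt)
    e2 = math.exp(0.5 * (a + d + R) * dt)  # exp(lambda_2 * dt)
    # Eigenvectors scaled so that their second element equals 1.
    v1 = (a - d - R) / (2 * c)
    v2 = (a - d + R) / (2 * c)
    # Rows of the inverse eigenvector matrix.
    w11, w12 = -c / R, (a - d + R) / (2 * R)
    w21, w22 = c / R, -(a - d - R) / (2 * R)
    return [
        [v1 * e1 * w11 + v2 * e2 * w21, v1 * e1 * w12 + v2 * e2 * w22],
        [e1 * w11 + e2 * w21, e1 * w12 + e2 * w22],
    ]


# Hypothetical matrix and time interval, for illustration only.
A = [[-0.5, 0.2], [0.3, -0.4]]
dt = 0.5

by_series = expm_series(A, dt)
by_eigen = expm_2x2_eigen(A, dt)
elementwise = [[math.exp(x * dt) for x in row] for row in A]

print("series     :", by_series)
print("eigen      :", by_eigen)
print("elementwise:", elementwise)
```

The two matrix-exponential routes agree up to floating-point error, whereas the element-wise exponential gives a different matrix because A is not diagonal. For larger matrices, or for complex or repeated eigenvalues, a tested numerical routine is the safer choice (cf. Moler and Van Loan 2003).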

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Ryan, O., Kuiper, R.M., Hamaker, E.L. (2018). A Continuous-Time Approach to Intensive Longitudinal Data: What, Why, and How?. In: van Montfort, K., Oud, J.H.L., Voelkle, M.C. (eds) Continuous Time Modeling in the Behavioral and Related Sciences. Springer, Cham. https://doi.org/10.1007/978-3-319-77219-6_2

DOI: https://doi.org/10.1007/978-3-319-77219-6_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-77218-9

Online ISBN: 978-3-319-77219-6

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)