Abstract

In this chapter we review continuous time series modeling and estimation by extended structural equation models (SEM) for single subjects and N > 1. First-order as well as higher-order models will be dealt with. Both will be handled by the general state space approach which reformulates higher-order models as first-order models. In addition to the basic model, the extensions of exogenous variables and traits (random intercepts) will be introduced. The connection between continuous time and discrete time for estimating the model by SEM will be made by the exact discrete model (EDM). It is by the EDM that the exact estimation procedure in this chapter differentiates from many approximate procedures found in the literature. The proposed analysis procedure will be applied to the well-known Wolfer sunspot data, an N = 1 time series that has been analyzed by several continuous time analysts in the past. The analysis will be carried out by ctsem, an R-package for continuous time modeling that interfaces to OpenMx, and the results will be compared to those reported in the previous studies.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

1.1 Introduction

This chapter is closely based on an earlier article in Multivariate Behavioral Research (Oud et al. 2018). The chapter extends the previous work by the analysis of the well-known Wolfer sunspot data along with more detailed information on the relation between the discrete time and continuous time model in the so-called exact discrete model (EDM). The previously published MBR article provides additional information on the performance of CARMA(p, q) models by means of several simulations, an empirical example about the relationships between mood at work and mood at home along with a subject-group-reproducibility test. However, to improve readability, we repeat the basic introduction to CARMA(p, q) modeling in the present chapter.

Time series analysis has a long history. Although the roots can be traced back to the earlier work of statisticians such as Yule, Kendall, and Durbin (see the historical overview in Mills 2012), it was the landmark publication “Time Series Analysis, Forecasting and Control” by Box and Jenkins (1970) that made time series analysis popular in many fields of science. Examples are Harvey (1989) and Lütkepohl (1991) in economics or Gottman (1981) and McCleary and Hay (1980) in the social sciences.

Time series analysis has greatly benefited from the introduction of the state space approach. The state space approach stems from control engineering (Kalman 1960; Zadeh and Desoer 1963) and sharply distinguishes the state of a system, which is a vector of latent variables driven by the system dynamics in the state transition equation, from the observations. The measurement part of the state space model specifies the relation between the observed and the underlying latent variables. It turns out that any Box-Jenkins autoregressive and moving average (ARMA) model as well as any extended ARMAX model, in which exogenous variables are added to the model, can be represented as a state space model (Caines 1988; Deistler 1985; Harvey 1981; Ljung 1985). However, the state space representation is much more flexible, allows one to formulate many time series models that cannot easily be handled by the Box-Jenkins ARMA approach, and makes important state space modeling techniques such as the Kalman filter and smoother accessible for time series analysis (Durbin and Koopman 2001). Therefore, the ARMA model in continuous time presented in this chapter, called a CARMA model (Brockwell 2004; Tómasson 2011; Tsai and Chan 2000), will in this chapter be formulated as a state space model.

Structural equation modeling (SEM) was introduced by Jöreskog (1973, 1977) along with the first SEM software: LISREL (Jöreskog and Sörbom 1976). The strong relationships between the state space approach and SEM were highlighted by Oud (1978) and Oud et al. (1990). Both consist of a measurement part and an explanatory part, and in both the explanatory part specifies the relationships between latent variables. Whereas in the state space approach the latent explanatory part is a recursive dynamic model, in SEM it is a latent structural equation model. As explained in detail by Oud et al. (1990), SEM is sufficiently general to allow specification of the state space model as a special case and estimation of its parameters by maximum likelihood and other estimation procedures offered by SEM. By allowing arbitrary measurement error structures, spanning the entire time range of the model, SEM further enhances the flexibility of the state space approach (Voelkle et al. 2012b).

An important drawback of almost all longitudinal models in SEM and time series analysis, however, is their specification in discrete time. Oud and Jansen (2000), Oud and Delsing (2010), and Voelkle et al. (2012a) discussed a series of problems connected with discrete time models, which make their application in practice highly questionable. One main problem is the dependence of discrete time results on the chosen time interval. This leads, first, to incomparability of results over different observation intervals within and between studies. If unaccounted for, it can easily lead to contradictory conclusions. In a multivariate model, one researcher could find a positive effect between two variables x and y, while another researcher finds a negative effect between the same variables, just because of a different observation interval length in discrete time research. Second, because results depend on the specific length of the chosen observation interval, the use of equal intervals in discrete time studies does not solve the problem (Oud and Delsing 2010). Another interval might have given different results to both researchers. Continuous time analysis is needed to make the different and possibly contradictory effects in discrete time independent of the interval for equal as well as unequal intervals.

The CARMA time series analysis procedure to be presented in this chapter is based on SEM continuous-time state-space modeling, developed for panel data by Oud and Jansen (2000) and Voelkle and Oud (2013). The first of these publications used the nonlinear SEM software package Mx (Neale 1997) to estimate the continuous time parameters, the second the ctsem program (Driver et al. 2017). ctsem interfaces to OpenMx (Boker et al. 2011; Neale et al. 2016), which is a significantly improved and extended version of Mx. In both publications the kernel of the model is a multivariate stochastic differential equation, and in both maximum likelihood estimation is performed via the so-called exact discrete model EDM (Bergstrom 1984). The EDM uses the exact solution of the stochastic differential equation to link the underlying continuous time parameters exactly to the parameters of the discrete time model describing the data. The exact solution turns out to impose nonlinear constraints on the discrete time parameters. Several authors tried to avoid the implementation of nonlinear constraints by using approximate procedures, although acknowledging the advantages of the exact procedure (e.g., Gasimova et al. 2014; Steele and Ferrer 2011a,b). In an extensive simulation study, Oud (2007) compared, as an example, the multivariate latent differential equation procedure (MLDE; Boker et al. 2004), which is an extension of the local linear approximation (LLA; Boker 2001), to the exact procedure. The exact procedure was found to give considerably lower biases and root-mean-square error values for the continuous time parameter estimates.

Instead of SEM, most time series procedures, particularly those based on the state space approach (Durbin and Koopman 2001; Hannan and Deistler 1988; Harvey 1989), use filtering techniques for maximum likelihood estimation of the model. Singer (1990, 1991, 1998) adapted these techniques for continuous time modeling of panel data. In a series of simulations, Oud (2007) and Oud and Singer (2008) compared the results of SEM and filtering in maximum likelihood estimation of various continuous time models. It turned out that in case of identical models, being appropriate for both procedures, the parameter estimates as well as the associated standard errors are equal. Because both procedures maximize the likelihood, this ought to be the case. Considerable technical differences between the two procedures, however, make it nevertheless worthwhile to find that results indeed coincide. In view of its greater generality and its popularity outside of control theory, especially in social science, SEM can thus be considered a useful alternative to filtering. Making use of the extended SEM framework underlying OpenMx (Neale et al. 2016), both SEM and the Kalman filter procedure, originally developed in control theory, are implemented in ctsem.

An argument against SEM as an estimation procedure for time series analysis could be that it is known and implemented as a large N procedure, while time series analysis is defined for N = 1 or at least includes N = 1 as the prototypical case. In the past, different minimum numbers of sample units have been proposed for SEM, such as N ≥ 200, at least 5 units per estimated parameter, at least 10 per variable, and so on, with all recommendations being considerably larger than N = 1. Recently, Wolf et al. (2013), on the basis of simulations, settled on a range of minimum values from 30 to 460, depending on key model properties. For small N, in particular N = 1, leading to a nonpositive definite sample covariance matrix, some SEM programs simply refuse to analyze the data or they change the input data before the analysis. For example, LISREL gives a warning (“Matrix to be analyzed is not positive definite”) and then increases the values on the diagonal of the data covariance matrix S to get this matrix positive definite (“Ridge option taken with ridge constant = …”), before starting the analysis.

As made clear by Hamaker et al. (2003), Singer (2010), and Voelkle et al. (2012b), however, the long-standing suggestion that a SEM analysis cannot be performed because of a nonpositive definite covariance matrix S is wrong. Nothing in the likelihood function requires the sample covariance matrix S to be positive definite. It is the model implied covariance matrix Σ that should be positive definite, but this depends only on the chosen model and in no way on the sample size N. For the same reason, minimum requirements with regard to N do not make sense, and N = 1 is an option as well as any other N. The quality of the estimates as measured, for example, by their standard errors, depends indeed on the amount of data. The amount of data, however, can be increased both by the number of columns in the data matrix (i.e., the number of time points T in longitudinal research) and by the number of rows (i.e., the number of subjects N in the study). The incorrect requirement of a positive definite S for maximum likelihood estimation (Jöreskog and Sörbom 1996) may be explained by the modified likelihood function employed in LISREL and other SEM programs. This contains the quantity \(\log |S|\) (see Equation (3) in Voelkle et al. 2012b), and thus these programs cannot handle N = 1 and N smaller than the number of columns in S. By employing the basic “raw data” or “full-information” maximum likelihood function (RML or FIML), OpenMx avoids this problem and (a) allows any N, including N = 1, (b) permits the nonlinear constraints of the EDM, (c) allows any arbitrary missing data pattern under the missing at random (MAR) assumption, and (d) allows individually varying observation intervals in continuous time modeling by means of so-called definition variables (Voelkle and Oud 2013).

Until now, however, the SEM continuous time procedure has not yet been applied on empirical N = 1 data, although the possibility is discussed and proven to be statistically sound by Singer (2010), and the software to do so is now readily available (Driver et al. 2017). Thus, the aim of the present chapter is, first, to discuss the state space specification of CARMA models in a SEM context. Second, to show that the SEM continuous time procedure is appropriate for N = 1 as well as for N > 1. Third, the proposed analysis procedure using ctsem will be applied on the well-known Wolfer sunspot data. This N = 1 data set has been analyzed by several continuous time analysts before. The results from ctsem will be compared to those found previously.

1.2 Continuous Time Model

1.2.1 Basic Model

In discrete time, the multivariate autoregressive moving-average model ARMA (p, q) with p the maximum lag of the dependent variables vector y t and q the maximum lag of the error components vector e t reads for a model with, for example, p = 2 and q = 1

The autoregressive part with F-matrices specifies the lagged effects of the dependent variables, while the moving-average part with G-matrices handles the incoming errors and lagged errors. Assuming the errors in the vectors e t, e t−1 to be independently standard normally distributed (having covariance matrices I) and G t, G t,t−1 lower-triangular, the moving-average effects G t e t, G t,t−1 e t−1 get covariance matrices \({\mathbf {Q}}_t={\mathbf {G}}_t{\mathbf {G}}_t^{\prime },{\mathbf {Q}}_{t,t-1}={\mathbf {G}}_{t,t-1}{\mathbf {G}}_{t,t-1}^{\prime }\), which may be nondiagonal and with arbitrary variances on the diagonal. Specifying moving-average effects is no less general than the covariance matrices. Any covariance matrix Q can be written as Q = GG ′ in terms of a lower-triangular matrix (Cholesky factor) G. In addition, estimating G instead of directly Q has the advantage of avoiding possible negative variance estimates showing up in the direct estimate of Q.

The moving-average part G t e t + G t,t−1 e t−1 in Eq. (1.1) may also be written as G t,t−1 e t−1 + G t,t−2 e t−2 with the time indices shifted backward in time from t and t − 1 to t − 1 and t − 2. Replacing the instantaneous error component G t e t by the lagged one G t,t−1 e t−1 (and G t,t−1 e t−1 by G t,t−2 e t−2) could be considered more appropriate, if the errors are taken to stand for the unknown causal influences on the system, which need some time to operate and to affect the system. The fact that the two unobserved consecutive error components get other names but retain their previous values will result in an observationally equivalent (equally fitting) system. Although equivalent, the existence of different representations in discrete time (forward or instantaneous representation in terms of t and t − 1 and backward or lagging representation in terms of t − 1 and t − 2) is nevertheless unsatisfactory. The forward representation puts everything that happens in between t and t − 1 forward in time at t, the backward representation puts the same information backward in time at t − 1. From a causal standpoint, though, the backward representation is no less problematic than the forward representation, since it is anticipating effects that in true time will happen only later.

The ambiguous representation in discrete time of the behavior between t and t − 1 in an ARMA(p, q) model disappears in the analogous continuous time CARMA(p, q) model, which reads for p = 2 and q = 1

Writing y(t) instead of y t emphasizes the development of y across continuous time. The role of successive lags in discrete time is taken over by successive derivatives in continuous time. The causally unsatisfactory instantaneous and lagging representations meet, so to speak, in the derivatives, which instead of using a discrete time interval Δt = t − (t − 1) = 1, let the time interval go to zero: Δt → 0. Equation (1.2) is sometimes written as

with F 2 = −I and opposite signs for G 0, G 1, making it clear that the CARMA(2,1) model has F 2 as the highest degree matrix in the autoregressive part and G 1 as the highest degree in the moving-average part.

The next subsection will show in more detail how discrete time and continuous time equations such as (1.1) and (1.2) become connected as Δt → 0. The error process in continuous time is the famous Wiener processFootnote 1 W(t) or random walk through continuous time. Its main defining properties are the conditions of independently and normally distributed increments, ΔW(t) = W(t) −W(t − Δt), having mean 0 and covariance matrix Δt I. This means that the increments with arbitrary Δt are standard normally distributed for Δt = 1 as assumed for e t, e t−1 in discrete time. Likewise, the role of lower-triangular G 0, G 1 in (1.2) is analogous to the role of G t, G t,t−1 in discrete time. Derivative dW(t)∕dt (white noise) does not exist in the classical sense but can be defined in the generalized function sense, and also integral \(\int _{t_0}^t\mathbf {G}\text{d}\mathbf {W}(t)\) can be defined rigorously (Kuo 2006, pp. 104–105 and pp. 260–261). Integrals are needed to go back again from the continuous time specification in Eq. (1.2) to the observed values in discrete time.

We will now show how the CARMA(2,1) model in Eq. (1.2) and the general CARMA(p, q) model can be formulated as special cases of the continuous time state space model. The continuous time state space model consists of two equations: a latent dynamic equation (1.4) with so-called drift matrix A and diffusion matrix G and a measurement equation (1.5) with loading matrix C and measurement error vector v(t):

In general, the variables in state vector x(t) are assumed to be latent and only indirectly measured by the observed variables in y(t) with measurement errors in v(t). The measurement error vector v(t) is assumed independent of x(t) and normally distributed: v(t) ∼ N(0, R). For the initial state x(t 0), we assume \(\mathbf {x}(t_0)\sim N(\boldsymbol {\upmu }_{\mathbf {x}(t_0)},\boldsymbol {\Phi }_{\mathbf {x}(t_0)})\). Often, but not necessarily, it is assumed \(E[\mathbf {x}(t_0)] = \boldsymbol {\upmu }_{\mathbf {x}(t_0)} = \mathbf {0}\). The latter would imply that the model has an equilibrium state: E[x(t)] = E[x(t 0)] = 0, and in case all eigenvalues of A have negative real part, 0 is the stable equilibrium state in the model.

In state space form, the observed second-order model CARMA(2,1) in Eq. (1.2) gets a state vector x(t) = [x 1(t)′ x 2(t)′]′, which is two times the size of the observed vector y(t). The first part x 1(t) is not directly related to the observed variables and thus belongs to the latent part of the state space model. This special case of the state space model equates the second part to the observed vector: y(t) = x 2(t). Equation (1.2) then follows from state space model (1.4)–(1.5) by specification

Applying (1.6) we find first

Substituting (1.7) into the implication in (1.8) gives

which for y(t) = x 2(t) leads to the CARMA(2,1) model in (1.2).

The specification in the previous paragraph can be generalized to find the CARMA(p, q) model in state space form in (1.10), where \(r = \max \left ( {p,q + 1} \right )\). G leads to diffusion covariance matrix Q = GG ′, which has Cholesky factor-based covariance matrices G i G i ′ on the diagonal and in case q > 0 off-diagonal matrices G i G j ′ (i, j = 0, 1, …, r − 1). In the literature one often finds the alternative state space form (1.11) (see e.g., Tómasson 2011; Tsai and Chan 2000). Here the moving average matrices G 1, G 2, …, G r−1 are rewritten as G i = H i G 0 in terms of corresponding matrices H 1, H 2, …, H r−1, specified in the measurement part of the state space

model and G 0. For two reasons we prefer (1.10) in the case of CARMA(p, q) models with q > 0. The derivation of (1.11) requires the matrices H i and F j to commute, which in practice restricts the applicability to the univariate case. In addition, using the measurement part for the moving average specification would make it difficult to specify at the same time measurement parameters. For CARMA(p,0) models (all H i = 0), we prefer (1.11), because each state variable in state vector x(t) is easily interpretable as the derivative of the previous one. The interpretation of the state variables in (1.7)–(1.8) is less simple.

The fact that the general CARMA(p, q) model fits seamlessly into the state space model means that all continuous time time series problems in modeling and estimation can be handled by state space form (1.4)–(1.5). The state space approach in fact reformulates higher-order models as a first-order model, and this will be applied in the sequel.

1.2.2 Connecting Discrete and Continuous Time Model in the EDM

The EDM combines the discrete time and continuous time model and does so in an exact way. It is by the EDM that the exact procedure in this chapter differentiates from many approximate procedures found in the literature. We show how the exact connection looks like between the general continuous time state space model and its discrete time counterpart, derived from it. The first-order models ARMA(1,0) and CARMA(1,0) in state space form differ from the general discrete and continuous time state space model only in a simpler measurement equation. So, having made the exact connections between the general state space models and thus between ARMA(1,0) and CARMA(1,0) and knowing that each CARMA(p, q) model can be written as a special case of the general state space model, the exact connections between CARMA(p, q) and ARMA(p, q), where the latter is derived from the former, follow. Next we consider the question of making an exact connection between an arbitrary ARMA(p ∗, q ∗) model and a CARMA(p, q) model, where the degrees p ∗ and p as well as q ∗ and q need not be equal.

Comparing discrete time equation (1.1) to the general discrete time state space model in (1.12)–(1.13), one observes that the latter becomes immediately the ARMA(1,0) model for y t = x t but is more flexible in time handling.

Inserting arbitrary lag Δt instead of fixed lag Δt = 1 enables us to put discrete time models with different intervals (e.g., years and months) on the same time scale and to connect them to the common underlying continuous time model for Δt → 0.

State equation (1.12) can be put in the equivalent difference quotient form

So we have the discrete time state space model in two forms: difference quotient form (1.14) and solution form (1.12). Equation (1.12) is called the solution of (1.14), because it describes the actual state transition across time in accordance with (1.14) and is so said to satisfy the difference quotient equation. Note that analogously the general continuous time state space model (1.4)–(1.5) immediately accommodates the special CARMA(1,0) model for y(t) = x(t) and can be put in two forms: stochastic differential equation (1.4) and its solution (1.15) (Arnold 1974; Singer 1990):

In the exact discrete model EDM the connection between discrete and continuous time is made by means of the solutions, which in both cases describe the actual transition from the previous state at t − Δt to the next state at t. The EDM thus combines both models and connects them exactly by the equalities:

While discrete time autoregression matrix A Δt and continuous time drift matrix A are connected via the highly nonlinear matrix exponential, the errors are indirectly connected by their covariance matrices Q Δt = G Δt G ′ Δt and Q = GG ′. In estimating, after finding the drift matrix A on the basis of A Δt, next on the basis of G Δt the diffusion matrix G is found.

The connection between discrete and continuous time becomes further clarified by two definitions of the matrix exponential eA Δt. The standard definition (1.17)

shows the rather complicated presence of A in A Δt and the formidable task to extract continuous time A from discrete time A Δt in an exact fashion. However, it also shows that for Δt → 0, the quantity between parentheses becomes arbitrarily small, and the linear part I + A Δt could be taken as an approximation of eA Δt, leading precisely to A ∗ Δt in the difference quotient equation (1.14) as approximation of A in the differential equation (1.4). Depending on the length of the interval Δt, however, the quality of this approximation can be unduly bad in practice. An alternative definition is based on oversampling (Singer 2012), meaning that the total time interval between measurements is divided into arbitrary small subintervals: \(\delta = \frac {{{\Delta } t}}{D}\) for D →∞. The definition relies on the multiplication property of autoregression: x t = A 0.5 Δt x t−0.5 Δt and x t−0.5 Δt = A 0.5 Δt x t− Δt ⇒ x t = A 0.5 Δt A 0.5 Δt x t− Δt = A Δt x t− Δt which is equally valid in the continuous time case: x t = eA(0.5 Δt)eA(0.5 Δt) x t− Δt = eA Δt x t− Δt, leading to multiplicative definition:

I + A Δt in (1.17), becoming I + A δ in (1.18), is no longer an approximation of eAδ but becomes equal to eAδ for D →∞ and δ → 0, while then A ∗δ =(e Aδ −I)∕δ in difference equation (1.14) becomes equal to drift matrix A in differential equation (1.4). So, the formidable task to extract A from A Δt is performed here in the same simple way as done by just taking approximate A ∗ Δt from A Δt in (1.14), but instead of once over the whole interval Δt, it is repeated over many small subintervals, becoming for sufficiently large D equal to the exact procedure in terms of (1.17). We write A ∗ Δt ≈A, but one should keep in mind that by taking a sufficiently small interval in terms of the right-hand side of (1.18), one can get A ∗ Δt as close to A as wanted. An adapted versionFootnote 2 of the oversampling procedure is described in Voelkle and Oud (2013).

Let us illustrate the connection between CARMA(1,0) and ARMA(1,0) by an example. If  , the exact connection in the EDM is for Δt = 1 made by \({{\mathbf {A}}_{{\Delta } t = 1}} = {{\text{e}}^{{\mathbf {A}}{\Delta } t}} = {{\text{e}}^{\mathbf {A}}} = \left [ {\begin {array}{*{20}{c}} {0.377}&{0.058} \\ {{\text{0.088 }}}&{0.231} \end {array}} \right ]\). A in the differential equation may be compared to

, the exact connection in the EDM is for Δt = 1 made by \({{\mathbf {A}}_{{\Delta } t = 1}} = {{\text{e}}^{{\mathbf {A}}{\Delta } t}} = {{\text{e}}^{\mathbf {A}}} = \left [ {\begin {array}{*{20}{c}} {0.377}&{0.058} \\ {{\text{0.088 }}}&{0.231} \end {array}} \right ]\). A in the differential equation may be compared to  in the difference equation for Δt = 1. For Δt = 0.1 we get

in the difference equation for Δt = 1. For Δt = 0.1 we get  which is much closer to A and for Δt = 0.001,

which is much closer to A and for Δt = 0.001,  becomes virtually equal to A.

becomes virtually equal to A.

Making an exact connection between an ARMA(p ∗, q ∗) model and a model CARMA(p, q) such that the ARMA process {y t;t = 0, t = Δt, t = 2 Δt, …} generated by ARMA(p ∗, q ∗) is a subset of the CARMA process \(\{ {\mathbf {y}}(t); t \geqslant 0\} \) generated by CARMA(p, q) is called “embedding” in the literature. The degrees p ∗ and q ∗ of the embedded model and p and q of the embedding model need not be equal. Embeddability is a much debated issue. Embedding is not always possible and need not be unique. Embedding is clearly possible for the case of ARMA(1,0) model y t = A Δt y t− Δt + G Δt e t− Δt derived from CARMA(1,0) model \(\frac {{{\text{d}}{\mathbf {y}}(t)}}{{{\text{d}}t}} = {\mathbf {Ay}}(t) + {\mathbf {G}}\frac {{{\text{d}}{\mathbf {W}}(t)}}{{{\text{d}}t}}\) with A Δt = eA Δt,\({}{{\mathbf {Q}}_{{\Delta } t}} = \int _{t - {\Delta } t}^t {{{\text{e}}^{{\mathbf {A}}(t - s)}}} {\mathbf {Q}}{{\text{e}}^{{{\mathbf {A}}^{\prime }}(t - s)}}{\text{d}}s\), Q Δt = G Δt G ′ Δt, Q = GG ′ as shown above. The same is true for the higher-order ARMA(p, q) model, derived from CARMA(p, q). However, in general it is nontrivial to prove embeddability and to find the parameters of the CARMA(p, q) model embedding an ARMA(p ∗, q ∗) process. For example, not all ARMA(1,0) processes have a CARMA(1,0) process in which it can be embedded. A well-known example is the simple univariate process y t = a Δt y t− Δt + g Δt e t− Δt with − 1 < a Δt < 0, because there does not exist any a for which a Δt = ea Δt can be negative. However, Chan and Tong (1987) showed that for this ARMA(1,0) process with − 1 < a Δt < 0, a higher order CARMA(2,1) process can be found, in which it can be embedded.

Also embeddability need not be unique. Different CARMA models may embed one and the same ARMA model. A classic example is “aliasing” in the case of matrices A with complex conjugate eigenvalue pairs λ 1,2 = α ± βi with i the imaginary unit (Hamerle et al. 1991; Phillips 1973). Such complex eigenvalue pairs imply processes with oscillatory movements. Adding ± k2π∕ Δt to β leads for arbitrary integer k to a different A with a different oscillation frequency but does not change A Δt = eA Δt and so may lead to the same ARMA model. The consequence is that the CARMA model cannot uniquely be determined (identified) by the ARMA model and the process generated by it. Fortunately, the number of aliases in general is limited in the sense that there exists only a finite number of aliases that lead for the same ARMA model to a real G and so to a positive definite Q in the CARMA model (Hansen and Sargent 1983). The size of the finite set additionally depends on the observation interval Δt, a smaller Δt leading to less aliases. The number of aliases may also be limited by sampling the observations in the discrete time process at unequal intervals (Oud and Jansen 2000; Tómasson 2015; Voelkle and Oud 2013).

An important point with regard to the state space modeling technique of time series is the latent character of the state. Even in the case of an observed ARMA(p, q) or CARMA(p, q) model of such low dimension as p = 2, we have seen that part of the state is not directly connected to the data. This has especially consequences for the initial time point. Suppose for the ARMA(2,1) model in state space form (1.19)–(1.20),

the initial time point, where the initial data are located, is t 0 = t − Δt. It means that there are no data directly or indirectly connected to (the lagged) part of x t− Δt. The initial parameters related to this part can nevertheless be estimated but become highly dependent on the model structure, and the uncertainty will be reflected in high standard errors. It does not help to start the model at later time point t 0 + Δt. That would result in data loss, since the 0 in (1.20) simply eliminates the lagged part of x t− Δt without any connection to the data. Similar remarks apply to the initial derivative dx 1(t)∕dt

in (1.21)–(1.22), which is not directly connected to the data and cannot be computed at the initial time point. Again, the related initial parameters can be estimated in principle. One should realize, however, that dependent on the model structure, the number of time points analyzed and the length of the observation intervals, these initial parameter estimates can become extremely unreliable. In a simulation study of a CARMA(2,0) model with oscillating movements, Oud and Singer (2008) found in the case of long interval lengths extremely large standard errors for the estimates related to the badly measured initial dx 1(t)∕dt. This lack of data and relative unreliability of estimates are the price one has to pay for choosing higher-order ARMA(p, q) and CARMA(p, q) models.

1.2.3 Extended Continuous Time Model

The extended continuous time state space model reads

In comparison to the basic model in (1.4)–(1.5), the extended model exhibits one minor notational change and two major additions. The minor change is in the measurement equation and is only meant to emphasize the discrete time character of the data at the discrete time points t i (i = 0, …, T − 1) with x(t i) and u(t i) sampling the continuous time vectors x(t) and u(t) at the observation time points. One major addition are the effects Bu(t) and Du(t) of fixed exogenous variables in vector u(t). The other is the addition of random subject effect vectors γ and κ to the equations. While the (statistically) fixed variables in u(t) may change across time (time-varying exogenous variables), the subject-specific effects γ and κ with possibly a different value for each subject in the sample are assumed to be constant across time but normally distributed random variables: γ ∼ N(0, Φ γ), κ ∼ N(0, Φ κ). To distinguish them from the changing states, the constant random effects in γ are called traits. Because trait vector γ is modeled to influence x(t) continuously, before as well as after t 0, \({{{\boldsymbol {\Phi }}}_{{\mathbf {x}}({t_0}),{\boldsymbol {\upgamma }}}}\), the covariance matrix between initial state and traits cannot in general be assumed zero. The additions in state equation (1.23) lead to the following extended solution:

1.2.4 Exogenous Variables

We have seen that the basic model in the case of stability (all eigenvalues of A having negative real part) has 0 as stable equilibrium state. Exogenous effects Bu(t) and Du(t) accommodate nonzero constant as well as nonconstant mean trajectories E[x(t)] and E[y(t)] even in the case of stability. By far the most popular exogenous input function is the unit function, e(s) = 1 for all s over the interval, with the effect b e called intercept and integrating over interval [t 0, t) into \(\int _{{t_0}}^t {{{\text{e}}^{{\mathbf {A}}(t - s)}}} {{\mathbf {b}}_e}e(s){\text{d}}(s) = {{\mathbf {A}}^{ - 1}}[{{\text{e}}^{{\mathbf {A}}(t - {t_0})}} - {\mathbf {I}}]{{\mathbf {b}}_e}\). In the measurement equation, the effect of the unit variable in D is called measurement intercept or origin and allows measurement instruments to have scales with different starting points in addition to the different units specified in C.

Useful in describing sudden changes in the environment is the intervention function, a step function that takes on a certain value a until a specific time point t′ and changes to value b at that time point until the end of the interval: i(s) = a for all s < t′, i(s) = b for all \(s \geqslant t'\). An effective way of handling the step or piecewise constant function is a two-step procedure, in which Eq. (1.26) is applied twice: first with u(t 0) containing the step function value of the first step before t′ and next with u(t 0) containing the step function value of the second step.

In the second step, the result x(t) of the first step is inserted as x(t 0). The relatively simple solution equation (1.26) has much more general applicability, though, than just for step functions. It can be used to approximate any exogenous behavior function in steps and approximate its effect arbitrarily closely by oversampling (dividing the observation interval in smaller intervals) and choosing the oversampling intervals sufficiently small.Footnote 3

The handling of exogenous variables takes another twist, when it is decided to endogenize them. The problem with oversampling is that it is often not known, how the exogenous behavior function looks like in between observations. By endogenizing the exogenous variables, they are handled as random variables, added to the state vector, and in the same way as the other state variables related to their past values in an autoregressive fashion. Advantages of endogenizing are its nonapproximate nature and the fact that the new state variables may not only be modeled to influence the other state variables but also to be reciprocally influenced by them.

In addition to differentiating time points within subjects, exogenous variables also enable to differentiate subjects in case of an N > 1 sample. Suppose the first element of u(t) is the unit variable, corresponding in B with first column b e, and the second element is a dummy variable differentiating boys and girls (boys 0 at all-time points and girls 1 at all-time points) and corresponding to second column b d. Supposing all remaining variables have equal values, the mean or expectation E[x(t)] of girls over the interval [t 0, t) will then differ by the amount of \({{\mathbf {A}}^{ - 1}}[{{\text{e}}^{{\mathbf {A}}(t - {t_0})}} - {\mathbf {I}}]{{\mathbf {b}}_d}\) from the one of boys. This amount will be zero for t − t 0 = 0, but the regression-like analysis procedure applied at initial time point t 0 allows to distinguish different initial means E[x(t 0)] for boys and girls. Thus, the same dummy variable at t 0 may impact both the state variables at t 0 and according to the state space model over the interval t − t 0 the state variables at the next observation time point.

1.2.5 Traits

Although, as we have just seen, there is some flexibility in the mean or expected trajectory, because subjects in different groups can have different mean trajectories, it would nevertheless be a strange implication of the model, if a subject’s expected current and future behavior is totally dependent on the group of which he or she is modeled to be a member. It should be noted that the expected trajectories are not only interesting per se, but they also play a crucial role in the estimated latent sample trajectory of a subject, defined as the conditional mean E[x(t)|y], where y is the total data vector of the subject (Kalman smoother), or E[x(t)|y[t 0, t]], where y[t 0, t] is all data up to and including t (Kalman filter). In a model without traits, the subject regresses toward (in the case of a stable model) or egresses from (in an unstable model) the mean trajectory of its group. The consequences are particularly dramatic for predictions, because then after enough time is elapsed, the subject’s trajectory in a stable model will be coinciding with its group trajectory.

From solution equation (1.25), it becomes clear, however, that in the state-trait model, each subject gets its own mean trajectory that differs from the group’s mean. After moving the initial time point of a stable model sufficiently far into the past, t 0 →−∞, the subject’s expected trajectory is

which keeps a subject-specific distance −A −1 γ from the subject’s group mean trajectory \(E[{\mathbf {x}}(t)] = \int _{ - \infty }^t {{{\text{e}}^{{\mathbf {A}}(t - s)}}} {\mathbf {Bu}}(s){\text{d}}(s)\). As a result the subject’s sample trajectory regresses toward its own mean instead of its group mean. A related advantage of the state-trait model is that it clearly distinguishes trait variance (diagonals of Φ γ), also called unobserved heterogeneity between subjects, from stability. Because in a pure state model (γ = 0) all subject-specific mean trajectories coincide with the group mean trajectory, trait variance and stability are confounded in the sense that an actually nonzero trait variance leads to a less stable model (eigenvalues of A having less negative real part) as a surrogate for keeping the subject-specific mean trajectories apart. In a state-trait model, however, stability is not hampered by hidden heterogeneity.

It should be noted that the impact of the fixed and random effects Bu(t) and γ in the state equation (1.23) is quite different from that of Du(t i) and κ in the measurement equation (1.24). The latter is a one-time snapshot event with no consequences for the future. It just reads out in a specific way the current contents of the system’s state. However, the state equation is a dynamic equation where influences may have long-lasting and cumulative future effects that are spelled out by Eq. (1.25) or (1.26). In particular, the traits γ differ fundamentally from the nondynamic or “random measurement bias” κ, earlier proposed for panel data by Goodrich and Caines (1979), Jones (1993), and Shumway and Stoffer (2000).

1.3 Model Estimation by SEM

As emphasized above, if the data are collected in discrete time, we need the EDM to connect the continuous time parameter matrices to the discrete time parameter matrices describing the data. The continuous time model in state space form contains eight parameter matrices that are connected to the corresponding discrete time matrices as shown in (1.28). In (1.28) ⊗ is the Kronecker product and the row operator puts the elements of the Q matrix row-wise in a column vector, whereas irow stands for the inverse operation.

While there are only eight continuous time parameter matrices, there may be many more discrete time parameter matrices. This is typically the case for the dynamic matrices \({{\mathbf {A}}_{{\Delta } {t_{i,j}}}},{{\mathbf {B}}_{{\Delta } {t_{i,j}}}},{{\mathbf {Q}}_{{\Delta } {t_{i,j}}}}.\) The observation time points t i (i = 0, …, T − 1) may differ for different subjects j (j = 1, …, N) but also the observation intervals Δt i,j = t i,j − t i−1,j (i = 1, …, T − 1) between the observation time points. Different observation intervals can lead to many different discrete time matrices \({{\mathbf {A}}_{{\Delta } {t_{i,j}}}},{{\mathbf {B}}_{{\Delta } {t_{i,j}}}},{{\mathbf {Q}}_{{\Delta } {t_{i,j}}}}\) but all based on the same underlying continuous time matrices A, B, Q.

The most extreme case is that none of the intervals is equal to any other interval, a situation a traditional discrete time analysis would be unable to cope with but is unproblematic in continuous time analysis (Oud and Voelkle 2014).

The initial parameter matrices \({{\boldsymbol {{\upmu }}}_{{\mathbf {x}}({t_0})}}\) and \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0})}}\) deserve special attention. In a model with exogenous variables, the initial state mean may take different values in different groups defined by the exogenous variables. Since the mean trajectories E[x(t)] may be deviating from each other because of exogenous influences after t 0, it is natural to let them already differ at t 0 as a result of past influences. These differences are defined regression-wise by \(E[{\mathbf {x}}({t_0})] = {{\mathbf {B}}_{{t_0}}}{\mathbf {u}}({t_0})\) with \({{\mathbf {B}}_{{t_0}}}{\mathbf {u}}({t_0})\) absorbing all unknown past influences. For example, if u(t 0) consists of two variables, the unit variable (1 for all subjects) and a dummy variable defining gender (0 for boys and 1 for girls), there will be two means E[x(t 0)], one for the boys and one for the girls. If u(t 0) contains only the unit variable, the single remaining vector \({{\mathbf {b}}_{{t_0}}}\) in \({{\mathbf {b}}_{{t_0}}}\) will become equal to the initial mean: \(E[{\mathbf {x}}({t_0})] = {{\mathbf {b}}_{{t_0}}}\). Because the instantaneous regression matrix \({{\mathbf {b}}_{{t_0}}}\) just describes means and differences as a result of unknown effects from before t 0, it should not be confused with the dynamic B and as a so-called predetermined quantity in estimation not undergo any constraint from B. Similarly, \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0})}}\) should not undergo any constraint from the continuous time diffusion covariance matrix Q.

A totally new situation for the initial parameters arises, however, if we assume the system to be in equilibrium. Equilibrium means first \({{\boldsymbol {{\upmu }}}_{{\mathbf {x}}(t)}} = {{\boldsymbol {{\upmu }}}_{{\mathbf {x}}({t_0})}}\) as well as all exogenous variables u(t) = u(t 0) being constant. Evidently, the latter is the case, if the only exogenous variable is the unit variable, reducing B to a vector of intercepts, but also if it contains additional gender or any other additional exogenous variables, differentiating subjects from each other but constant in time. The assumption of equilibrium, \({{\boldsymbol {{\upmu }}}_{{\mathbf {x}}(t)}} = {{\boldsymbol {{\upmu }}}_{{\mathbf {x}}({t_0})}}\),—possibly but not necessarily a stable equilibrium—leads to equilibrium value

with u c the value of the constant exogenous variables u(t) = u(t 0) = u c. If we assume the system to be stationary, additionally \({{\boldsymbol {\Phi }}_{{\mathbf {x}}(t)}} = {{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0})}}\) is assumed to be in equilibrium, leading to equilibrium value

The novelty of the stationarity assumption is that the initial parameters are totally defined in terms of the dynamic parameters as is clearly seen from (1.29) and (1.30). It means, in fact, that the initial parameters disappear and the total number of parameters to be estimated is considerably reduced. Although attractive and present as an option in ctsem (Driver et al. 2017), the stationarity assumption is quite restrictive and can be unrealistic in practice.

To estimate the EDM as specified in (1.28) by SEM, we put all variables and matrices of the EDM into SEM model

The SEM model consists of two equations, structural equation (1.31) and measurement equation (1.32), in terms of four vectors, η, ζ, y, 𝜖, and four matrices,  From Eqs. (1.31)–(1.32), one easily derives the model implied mean μ and covariance matrix

From Eqs. (1.31)–(1.32), one easily derives the model implied mean μ and covariance matrix  and next the raw maximum likelihood equation (1.33) (see e.g., Bollen 1989)

and next the raw maximum likelihood equation (1.33) (see e.g., Bollen 1989)

The subscript j makes the SEM procedure extremely flexible by allowing any number of subjects, including N = 1, and any missing value pattern for each of the subjects j, as the number of variables m

j (m = pT), the data vector y

j, the mean vector μ

j, and the covariance matrix  may all be subject specific. In case of missing values, the corresponding rows and columns of the missing elements for that subject j are simply deleted.

may all be subject specific. In case of missing values, the corresponding rows and columns of the missing elements for that subject j are simply deleted.

For obtaining the maximum likelihood estimates of the EDM, it suffices to show how the SEM vectors η, y, ζ, 𝜖, and matrices,  include the variables and matrices of the EDM. This is done in (1.34). In the vector of exogenous variables u(t) = [u

c u

v(t)], we distinguish two parts: the part u

c, consisting of the unit variable and, for example, gender and other variables that differ between subjects but are constant across time, and the part u

v(t) that at least for one subject in the sample is varying across time. Exogenous variables like weight and income, for example, are to be put into u

v(t). We abbreviate the dynamic errors \(\int _{{t_{i,j}} - {\Delta } {t_{i,j}}}^{{t_{i,j}}} {{{\text{e}}^{{\mathbf {A}}({t_{i,j}} - s)}}} {\mathbf {G}}{\text{d}}{\mathbf {W}}(s)\) to w(t

i,j − Δt

i,j). The mean sum of squares and cross-products matrix of u

j over sample units is called Φ

u, and the mean sum of cross-products between \({\mathbf {x}}({t_{0,j}}) - {{{\boldsymbol {\upmu }}}_{{\mathbf {x}}({t_{0,j}})}}\) and u

j is called \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0}),{\mathbf {u}}}}\). The latter must be estimated, though, if the state is latent.

include the variables and matrices of the EDM. This is done in (1.34). In the vector of exogenous variables u(t) = [u

c u

v(t)], we distinguish two parts: the part u

c, consisting of the unit variable and, for example, gender and other variables that differ between subjects but are constant across time, and the part u

v(t) that at least for one subject in the sample is varying across time. Exogenous variables like weight and income, for example, are to be put into u

v(t). We abbreviate the dynamic errors \(\int _{{t_{i,j}} - {\Delta } {t_{i,j}}}^{{t_{i,j}}} {{{\text{e}}^{{\mathbf {A}}({t_{i,j}} - s)}}} {\mathbf {G}}{\text{d}}{\mathbf {W}}(s)\) to w(t

i,j − Δt

i,j). The mean sum of squares and cross-products matrix of u

j over sample units is called Φ

u, and the mean sum of cross-products between \({\mathbf {x}}({t_{0,j}}) - {{{\boldsymbol {\upmu }}}_{{\mathbf {x}}({t_{0,j}})}}\) and u

j is called \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0}),{\mathbf {u}}}}\). The latter must be estimated, though, if the state is latent.

The traits γ and κ are not explicitly displayed but can be viewed as a special kind of constant zero-mean exogenous variables u c,j in u j, whose covariance matrices Φ γ and Φ κ in Φ u as well as \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0}),{\boldsymbol {\upgamma }}}}\) and \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0}),{\boldsymbol {\upkappa }}}}\) in \({{\boldsymbol {\Phi }}_{{\mathbf {x}}({t_0}),{\mathbf {u}}}}\) are not fixed quantities but have to be estimated. These latent variables have no loadings in Λ and have \({{\mathbf {B}}_{c,{t_{0{}}}}} = {\mathbf {0}}\) in B. For γ the \({{\mathbf {B}}_{c,{\Delta } {t_{i,j}}}}\) in B are replaced by \({{\mathbf {A}}^\circ }_{{\Delta } {t_{i,j}}}\) (see (1.28)) and for κ the \({{\mathbf {D}}_{c,{t_{i,j}}}}\) in Λ by I.

For the crucial property of measurement invariance, we need to specify

Although measurement invariance is important for substantive reasons, statistically speaking, the assumption of strict measurement invariance may be relaxed if necessary. An additional advantage of the SEM approach is the possibility of specifying measurement error covariances across time in  . This can be done in ctsem by the MANIFESTTRAIT option. Measurement instruments often measure specific aspects, which they do not have in common with other instruments and can be taken care of by freeing corresponding elements in

. This can be done in ctsem by the MANIFESTTRAIT option. Measurement instruments often measure specific aspects, which they do not have in common with other instruments and can be taken care of by freeing corresponding elements in  . In view of identification, however, one should be very cautious in choosing elements of

. In view of identification, however, one should be very cautious in choosing elements of  to be freed.

to be freed.

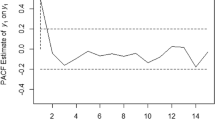

1.4 Analysis of Sunspot Data: CARMA(2,1) on N =1, T =167

The Wolfer sunspot data from 1749 to 1924 is a famous time series of the number of sunspots that has been analyzed by many authors. Several of them applied a CARMA(2,1) model to the series, the results of which are summarized in Table 1.1 together with the results of ctsem. The sunspot data, which are directly available in R (R Core Team 2015) by “sunspot.year,” are monthly data averaged over the years 1700–1988. The data from 1749 to 1924 are analyzed by the authors in Table 1.1 as a stationary series. Stationarity means that the initial means and (co)variances are in the stable equilibrium position of the model, which is defined in terms of the other model parameters. An option of ctsem lets the initial means and (co)variances be constrained in terms of these parameters. ctsem version 1.1.6 and R version 3.3.2 were used for our analyses.Footnote 4

As shown in Table 1.1, differences in parameter estimates between ctsem and previously reported analyses are small and likely caused by numerical imprecision. The most recent estimates by Tómasson (2011) and our results are closer to each other than to the older ones reported by Phadke and Wu (1974) and Singer (1991). The results in Table 1.1 imply an oscillatory movement of the sunspot numbers. Complex eigenvalues of the matrix A lead to oscillatory movements. The eigenvalues of \({\mathbf {A}} = \left [ {\begin {array}{*{20}{c}} 0&{{\text{ 1}}} \\ {{}{f_0}}&{{f_1}} \end {array}} \right ]\)are

where j is the imaginary number \(\sqrt { - 1} \). The eigenvalues are complex, because − f

1

2∕4 > f

0 for all 4 sets of parameter estimates in Table 1.1. The period of the oscillation  is computed by

is computed by

resulting in a period of 10.9 years for all 4 sets of parameter estimates, despite the small differences in parameter estimates.

1.5 Conclusion

As noted by Prado and West (2010), “In many statistical models the assumption that the observations are realizations of independent random variables is key. In contrast, time series analysis is concerned with describing the dependence among the elements of a sequence of random variables” (p. 1). Without doubt, SEM for a long period took position in the first group of models, which hampered the development of N = 1 modeling and time series analysis in an SEM context. The present chapter attempts to reconcile both perspectives by putting time series of independently drawn subjects in one and the same overall SEM model, while using continuous time state space modeling to simultaneously account for the dependence between observations in each time series over time. The present article explained in detail how this may be achieved for first- and higher-order CARMA(p, q) models in an extended SEM framework.

Attempts to combine time series of different subjects in a common model are rare in traditional time series analysis and state space modeling. A first, rather isolated proposal was done by Goodrich and Caines (1979). They call a data set consisting of N > 1 time series “cross-sectional,” thereby using this term in a somewhat different meaning from what is customary in social science. They give a consistency proof for state space model parameter estimates in this kind of data in which “the number T of observations on the transient behavior is fixed but the number N of independent cross-sectional samples tends to infinity” (p. 403). As a matter of fact, an important advantage of N > 1 models is to not be forced to T →∞ asymptotics, which at least in the social sciences is often unrealistic. Arguably, there are not many processes with, for example, exactly the same parameter values over the whole time range until infinity. As argued by Yu (2014, p. 738), an extra advantage offered by continuous time modeling in this respect is that asymptotics can be applied on the time dimension, even if T is taken as fixed. Supposing the discretely observed data to be recorded at 0, Δt, 2 Δt, n Δt(= T), this so-called “in-fill” asymptotics takes T as fixed but lets n →∞ in continuous time. By letting N as well as n go to infinity, a kind of double asymptotics results, which may be particularly useful for typical applications in the social sciences, where it is often hard to argue that T will approach infinity.

Notes

- 1.

In this chapter we follow the common practice to write the Wiener process by capital letter W, although it is here a vector whose size should be inferred from the context.

- 2.

The adapted version uses (1.18A)

$$\displaystyle \begin{aligned} {{\text{e}}^{{\mathbf{A}}{\Delta} t}} = \mathop {\lim }\limits_{D \to \infty } \prod_{d = 0}^{D - 1} {\Big[\Big({\mathbf{I}}} - \frac{1}{2}{\mathbf{A}}{\delta _d}{\Big)^{ - 1}}\Big({\mathbf{I}} + \frac{1}{2}{\mathbf{A}}{\delta _d}\Big)\Big]{\text{ for }}{\delta _d} = \frac{{{\Delta} t}}{D},{} \end{aligned} $$(1.18A)which converges much more rapidly than (1.18). It is based on the approximate discrete model (ADM), described by Oud and Delsing (2010), which just as the EDM goes back to Bergstrom (1984). Computation of the matrix exponential by (1.18) or (1.18A) has the advantage over the diagonalization method (Oud and Jansen 2000) that no assumptions with regard to the eigenvalues need to be made. Currently by most authors the Padé-approximation (Higham 2009) is considered the best computation method, which therefore is implemented in the most recent version of ctsem.

- 3.

A better approximation than a step function is given by a piecewise linear or polygonal approximation (Oud and Jansen 2000; Singer 1992). Then we write u(t) in (1.23) as \({\mathbf {u}}(t) = {\mathbf {u}}({t_0}) +(t - {t_0}){{\mathbf {b}}_{({t_0},t]}}\) and (1.26) becomes:

$$\displaystyle \begin{aligned} \begin{aligned} {\mathbf{x}}(t) &= {{\text{e}}^{{\mathbf{A}}(t - {t_0})}}{\mathbf{x}}({t_0}) + {{\mathbf{A}}^{ - 1}}\big[{{\text{e}}^{{\mathbf{A}}(t - {t_0})}} - {\mathbf{I}}\big]{\mathbf{Bu}}({t_0}) + \left\{ {{\mathbf{A}}^{ - 2}}\big[{{\text{e}}^{{\mathbf{A}}(t - {t_0})}} - {\mathbf{I}}\big] - {{\mathbf{A}}^{ - 1}}(t - {t_0}) \right\} {\mathbf{B}}{{\mathbf{b}}_{({t_0},t]}} \hfill \\ & \quad+ {{\mathbf{A}}^{ - 1}}\big[{{\text{e}}^{{\mathbf{A}}(t - {t_0})}} - {\mathbf{I}}\big]{\boldsymbol{\upgamma}} + \int_{{t_0}}^t {{{\text{e}}^{{\mathbf{A}}(t - s)}}} {\mathbf{G}}{\text{d}}{\mathbf{W}}(s). \hfill \\ \end{aligned}{} \end{aligned} $$(1.26A) - 4.

The programming code of the analysis is available as supplementary material at the book website http://www.springer.com/us/book/9783319772189.

References

Arnold, L. (1974). Stochastic differential equations. New York: Wiley.

Bergstrom, A. R. (1984). Continuous time stochastic models and issues of aggregation over time. In Z. Griliches & M. D. Intriligator (Eds.), Handbook of econometrics (Vol. 2, pp. 1145–1212). Amsterdam: North-Holland. https://doi.org/10.1016/S1573-4412(84)02012-2

Boker, S. M. (2001). Differential structural equation modeling of intraindividual variability. In L. M. Collins & A. G. Sayer (Eds.), New methods for the analysis of change (pp. 5–27). Washington, DC: American Psychological Association. https://doi.org/10.1007/s11336-010-9200-6

Boker, S. M., Neale, M., Maes, H., Wilde, M., Spiegel, M., Brick, T., …Fox, J. (2011). OpenMx: An open source extended structural equation modeling framework. Psychometrika, 76(2), 306–317. https://doi.org/10.1007/s11336-010-9200-6

Boker, S. M., Neale, M., & Rausch, J. (2004). Latent differential equation modeling with multivariate multi-occasion indicators. In K. van Montfort, J. H. L. Oud, & A. Satorra (Eds.), Recent developments on structural equation models (pp. 151–174). Amsterdam: Kluwer.

Bollen, K. A. (1989). Structural equations with latent variables. New York: Wiley. https://doi.org/10.1002/9781118619179

Box, G. E. P., & Jenkins, G. M. (1970). Time serie analysis: Forecasting and control. Oakland, CA: Holden-Day.

Brockwell, P. J. (2004). Representations of continuous-time ARMA processes. Journal of Applied Probability, 41(a), 375–382. https://doi.org/10.1017/s0021900200112422

Caines, P. E. (1988). Linear stochastic systems. New York: Wiley.

Chan, K., & Tong, H. (1987). A note on embedding a discrete parameter ARMA model in a continuous parameter ARMA model. Journal of Time Series Analysis, 8, 277–281. https://doi.org/10.1111/j.1467-9892.1987.tb00439.x

Deistler, M. (1985). General structure and parametrization of arma and state-space systems and its relation to statistical problems. In E. J. Hannan, P. R. Krishnaiah, & M. M. Rao (Eds.), Handbook of statistics: Volume 5. Time series in the time domain (pp. 257–277). Amsterdam: North Holland. https://doi.org/10.1016/s0169-7161(85)05011-8

Driver, C. C., Oud, J. H., & Voelkle, M. C. (2017). Continuous time structural equation modeling with R package ctsem. Journal of Statistical Software, 77, 1–35. https://doi.org/10.18637/jss.v077.i05

Durbin, J., & Koopman, S. J. (2001). Time series analysis by state space methods. Oxford: Oxford University Press. https://doi.org/10.1093/acprof:oSo/9780199641178.001.0001

Gasimova, F., Robitzsch, A., Wilhelm, O., Boker, S. M., Hu, Y., & Hülür, G. (2014). Dynamical systems analysis applied to working memory data. Frontiers in Psychology, 5, 687 (Advance online publication). https://doi.org/10.3389/fpsyg.2014.00687

Goodrich, R. L., & Caines, P. (1979). Linear system identification from nonstationary cross-sectional data. IEEE Transactions on Automatic Control, 24, 403–411. https://doi.org/10.1109/TAC.1979.1102037

Gottman, J. M. (1981). Time-series analysis: A comprehensive introduction for social scientists. Cambridge: Cambridge University Press.

Hamaker, E. L., Dolan, C. V., & Molenaar, C. M. (2003). ARMA-based SEM when the number of time points T exceeds the number of cases N: Raw data maximum likelihood. Structural Equation Modeling, 10, 352–379. https://doi.org/10.1207/s15328007sem1003-2

Hamerle, A., Nagl, W., & Singer, H. (1991). Problems with the estimation of stochastic differential equations using structural equations models. Journal of Mathematical Sociology, 16(3), 201–220. https://doi.org/10.1080/0022250X.1991.9990088

Hannan, E. J., & Deistler, M. (1988). The statistical theory of linear systems. New York: Wiley.

Hansen, L. P., & Sargent, T. J. (1983). The dimensionality of the aliasing problem. Econometrica, 51, 377–388. https://doi.org/10.2307/1911996

Harvey, A. C. (1981). Time series models. Oxford: Philip Allen.

Harvey, A. C. (1989). Forecasting, structural time series models and the Kalman filter. Cambridge: Cambridge University Press.

Higham, N. J. (2009). The scaling and squaring method for the matrix exponential revisited. SIAM Review, 51, 747–764. https://doi.org/10.1137/090768539

Jones, R. H. (1993). Longitudinal data with serial correlation; a state space approach. London: Chapman & Hall. https://doi.org/10.1007/978-1-4899-4489-4

Jöreskog, K. G. (1973). A general method for estimating a linear structural equation system. In A. S. Goldberger & O. D. Duncan (Eds.), Structural equation models in the social sciences (pp. 85–112). New York: Seminar Press.

Jöreskog, K. G. (1977). Structural equation models in the social sciences: Specification, estimation and testing. In P. R. Krishnaiah (Ed.), Applications of statistics (pp. 265–287). Amsterdam: North Holland.

Jöreskog, K. G., & Sörbom, D. (1976). LISREL III: Estimation of linear structural equation systems by maximum likelihood methods: A FORTRAN IV program. Chicago: National Educational Resources.

Jöreskog, K. G., & Sörbom, D. (1996). LISREL 8: User’s reference guide. Chicago: Scientific Software International.

Kalman, R. E. (1960). A new approach to linear filtering and prediction problems. Journal of Basic Engineering, 82, 35–45 (Trans. ASME, ser. D).

Kuo, H. H. (2006). Introduction to stochastic integration. New York: Springer.

Ljung, L. (1985). Estimation of parameters in dynamical systems. In E. J. Hannan, P. R. Krishnaiah, & M. M. Rao (Eds.), Handbook of statistics: Volume 5. Time series in the time domain (pp. 189–211). Amsterdam: North Holland. https://doi.org/10.1016/s0169-7161(85)05009-x

Lütkepohl, H. (1991). Introduction to multiple time series analysis. Berlin: Springer.

McCleary, R., & Hay, R. (1980). Applied time series analysis for the social sciences. Beverly Hills: Sage.

Mills, T. C. (2012). A very British affair: Six Britons and the development of time series analysis during the 20th century. Basingstoke: Palgrave Macmillan.

Neale, M. C. (1997). Mx: Statistical modeling (4th ed.). Richmond, VA: Department of Psychiatry.

Neale, M. C., Hunter, M. D., Pritikin, J. N., Zahery, M., Brick, T. R., Kirkpatrick, R. M., …Boker, S. M. (2016). OpenMx 2.0: Extended structural equation and statistical modeling. Psychometrika, 81, 535–549. https://doi.org/10.1007/s11336-014-9435-8

Oud, J. H. L. (1978). Systeem-methodologie in sociaal-wetenschappelijk onderzoek [Systems methodology in social science research]. Unpublished doctoral dissertation, Radboud University Nijmegen, Nijmegen.

Oud, J. H. L. (2007). Comparison of four procedures to estimate the damped linear differential oscillator for panel data. In K. van Montfort, J. H. L. Oud, & A. Satorra (Eds.), Longitudinal models in the behavioral and related sciences (pp. 19–39). Mahwah, NJ: Erlbaum.

Oud, J. H. L., & Delsing, M. J. M. H. (2010). Continuous time modeling of panel data by means of SEM. In K. van Montfort, J. H. L. Oud, & A. Satorra (Eds.), Longitudinal research with latent variables (pp. 201–244). New York: Springer. https://doi.org/10.1007/978-3-642-11760-2-7

Oud, J. H. L., & Jansen, R. A. R. G. (2000). Continuous time state space modeling of panel data by means of SEM. Psychometrika, 65, 199–215. https://doi.org/10.1007/BF02294374

Oud, J. H. L., & Singer, H. (2008). Continuous time modeling of panel data: SEM versus filter techniques. Statistica Neerlandica, 62, 4–28. https://doi.org/10.1111/j.1467-9574.2007.00376.x

Oud, J. H. L., van den Bercken J. H., & Essers, R. J. (1990). Longitudinal factor score estimation using the Kalman filter. Applied Psychological Measurement, 14, 395–418. https://doi.org/10.1177/014662169001400406

Oud, J. H. L., & Voelkle, M. C. (2014). Do missing values exist? Incomplete data handling in cross-national longitudinal studies by means of continuous time modeling. Quality & Quantity, 48, 3271–3288. https://doi.org/10.1007/s11135-013-9955-9

Oud, J. H. L., Voelkle, M. C., & Driver, C. C. (2018). SEM based CARMA time series modeling for arbitrary N. Multivariate Behavioral Research, 53(1), 36–56. https://doi.org/10.1080/00273171.1383224

Phadke, M., & Wu, S. (1974). Modeling of continuous stochastic processes from discrete observations with application to sunspots data. Journal of the American Statistical Association, 69, 325–329. https://doi.org/10.1111/j.1467-9574.2007.00376.x

Phillips, P. C. B. (1973). The problem of identification in finite parameter continuous time models. In A. R. Bergstrom (Ed.), Statistical inference in continuous time models (pp. 135–173). Amsterdam: North-Holland.

Prado, R., & West, M. (2010). Time series: Modeling, computation, and inference. Boca Raton: Chapman & Hall.

R Core Team. (2015). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from http://www.R-project.org/

Shumway, R. H., & Stoffer, D. (2000). Time series analysis and its applications. New York: Springer. https://doi.org/10.1007/978-1-4419-7865-3

Singer, H. (1990). Parameterschätzung in zeitkontinuierlichen dynamischen Systemen [Parameter estimation in continuous time dynamic systems]. Konstanz: Hartung-Gorre.

Singer, H. (1991). LSDE-A program package for the simulation, graphical display, optimal filtering and maximum likelihood estimation of linear stochastic differential equations: User’s guide. Meersburg: Author.

Singer, H. (1992). The aliasing-phenomenon in visual terms. Journal of Mathematical Sociology, 17, 39–49. https://doi.org/10.1080/0022250X.1992.9990097

Singer, H. (1998). Continuous panel models with time dependent parameters. Journal of Mathematical Sociology, 23, 77–98.

Singer, H. (2010). SEM modeling with singular moment part I: ML estimation of time series. Journal of Mathematical Sociology, 34, 301–320. https://doi.org/10.1080/0022250X.2010.509524

Singer, H. (2012). SEM modeling with singular moment part II: ML-estimation of sampled stochastic differential equations. Journal of Mathematical Sociology, 36, 22–43. https://doi.org/10.1080/0022250X.2010.532259

Steele, J. S., & Ferrer, E. (2011a). Latent differential equation modeling of self-regulatory and coregulatory affective processes. Multivariate Behavioral Research, 46, 956–984. https://doi.org/10.1080/00273171.2011.625305

Steele, J. S., & Ferrer, E. (2011b). Response to Oud & Folmer: Randomness and residuals. Multivariate Behavioral Research, 46, 994–1003. https://doi.org/10.1080/00273171.625308

Tómasson, H. (2011). Some computational aspects of Gaussian CARMA modelling. Vienna: Institute for Advanced Studies.

Tómasson, H. (2015). Some computational aspects of Gaussian CARMA modelling. Statistical Computation, 25, 375–387. https://doi.org/10.1007/s11222-013-9438-9

Tsai, H., & Chan, K.-S. (2000). A note on the covariance structure of a continuous-time ARMA process. Statistica Sinica, 10, 989–998.

Voelkle, M. C., & Oud, J. H. L. (2013). Continuous time modelling with individually varying time intervals for oscillating and non-oscillating processes. British Journal of Mathematical and Statistical Psychology, 66, 103–126. https://doi.org/10.1111/j.2044-8317.2012.02043.x

Voelkle, M. C., Oud, J. H. L., Davidov, E., & Schmidt, P. (2012a). An SEM approach to continuous time modeling of panel data: Relating authoritarianism and anomia. Psychological Methods, 17, 176–192. https://doi.org/10.1037/a0027543

Voelkle, M. C., Oud, J. H. L., Oertzen, T. von, & Lindenberger, U. (2012b). Maximum likelihood dynamic factor modeling for arbitrary N and T using SEM. Structural Equation Modeling: A Multidisciplinary Journal, 19, 329–350.https://doi.org/10.1080/10705511.2012.687656

Wolf, E. J., Harrington, K. M., Clark, S. L., & Miller, M. W. (2013). Sample size requirements for structural equation models: An evaluation of power, bias, and solution propriety. Educational and Psychological Measurement, 73, 913–934. https://doi.org/10.1177/0013164413495237

Yu, J. (2014). Econometric analysis of continuous time models: A survey of Peter Philips’s work and some new results. Econometric Theory, 30, 737–774. https://doi.org/10.1017/S0266466613000467

Zadeh, L. A., & Desoer, C. A. (1963). Linear system theory: The state space approach. New York: McGraw-Hill.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1.1 Electronic Supplementary Material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Oud, J.H.L., Voelkle, M.C., Driver, C.C. (2018). First- and Higher-Order Continuous Time Models for Arbitrary N Using SEM. In: van Montfort, K., Oud, J.H.L., Voelkle, M.C. (eds) Continuous Time Modeling in the Behavioral and Related Sciences. Springer, Cham. https://doi.org/10.1007/978-3-319-77219-6_1

Download citation

DOI: https://doi.org/10.1007/978-3-319-77219-6_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-77218-9

Online ISBN: 978-3-319-77219-6

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)